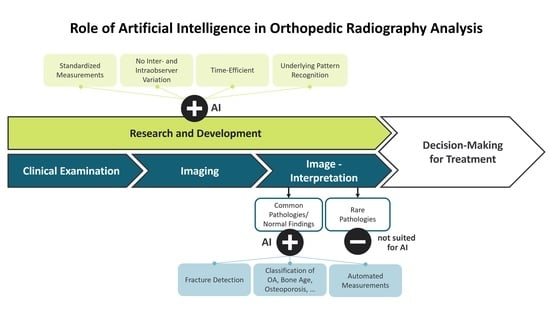

Artificial Intelligence in Orthopedic Radiography Analysis: A Narrative Review

Abstract

1. Introduction

1.1. Inter- and Intra-Observer Variability

1.2. Artificial Intelligence and Machine Learning

1.3. Applications of AI in Musculoskeletal Radiology

2. Material and Methods

2.1. AI in X-ray Imaging

2.1.1. Fracture Detection

2.1.2. Classification

2.2. Measurements

2.3. Computed Tomography and Magnetic Resonance Imaging

2.4. Internal and External Validation

3. Discussion

4. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cite

Chen, K.; Stotter, C.; Klestil, T.; Nehrer, S. Artificial Intelligence in Orthopedic Radiography Analysis: A Narrative Review. Diagnostics 2022, 12, 2235. https://doi.org/10.3390/diagnostics12092235

Chen K, Stotter C, Klestil T, Nehrer S. Artificial Intelligence in Orthopedic Radiography Analysis: A Narrative Review. Diagnostics. 2022; 12(9):2235. https://doi.org/10.3390/diagnostics12092235

Chicago/Turabian Style: Chen, Kenneth, Christoph Stotter, Thomas Klestil, and Stefan Nehrer. 2022. "Artificial Intelligence in Orthopedic Radiography Analysis: A Narrative Review" Diagnostics 12, no. 9: 2235. https://doi.org/10.3390/diagnostics12092235

APA Style: Chen, K., Stotter, C., Klestil, T., & Nehrer, S. (2022). Artificial Intelligence in Orthopedic Radiography Analysis: A Narrative Review. Diagnostics, 12(9), 2235. https://doi.org/10.3390/diagnostics12092235