1. Introduction

The COVID-19 pandemic has caused severe healthcare crises across the globe in a very short period. The outbreak began in early December 2019 in Wuhan, China, and was declared a global pandemic on 11 March 2020 by the World Health Organization (WHO) [1]. Researchers across the world have reported COVID-19 to be a highly infectious disease that severely affects the respiratory system and has common symptoms such as dry cough, myalgia, fever, headache, chest pain, and sore throat [2]. Current medical practice lacks proper drugs as well as hospital resources for the treatment of COVID-19 infection [3,4]. Reverse transcription-polymerase chain reaction (RT-PCR), the most frequently used diagnostic method, is manual, time-consuming, and costly, and it poses a risk to medical staff [5,6]. The pandemic is still ongoing and has produced several variants, resulting in high mortality rates in many countries. Hence, there is a strong need for a safe and effective methodology that can detect COVID-19 infection at an early stage. The significance of two imaging modalities, chest X-ray (CXR) and computed tomography (CT), has been studied for diagnosing COVID-19 [7,8,9]. However, manual visual inspection of CXR and CT images is time-consuming and tedious, and may sometimes result in an inaccurate diagnosis [7,10]. Recently, tremendous efforts have been made to develop artificial intelligence (AI) based automated models for accurate diagnosis of COVID-19 to lessen the workload of radiologists [11].

Deep learning (DL) algorithms, especially convolutional neural networks (CNNs), have offered efficient solutions for pneumonia detection in CXR images [12,13,14,15,16]. Subsequently, many DL-based approaches have been reported to diagnose COVID-19 using CXR images. Ozturk et al. [17] proposed the DarkCovidNet model for COVID-19 detection in CXR images, which obtained classification accuracies of 98.08% and 87.02% for the two-class and three-class scenarios, respectively. Hemdan et al. [18] proposed COVIDX-Net for the binary classification task, which was validated using only 50 CXR images. Narin et al. [19] proposed a model based on ResNet-50 and achieved a binary classification accuracy of 98% over 100 images. Ucar and Korkmaz [20] presented a multi-class classification system based on SqueezeNet and a Bayesian optimizer that yielded an accuracy of 98.3%. Rahimzadeh and Attar [21] designed a model that concatenates the Xception and ResNet50 networks and yields 91.4% accuracy for the multi-class case. Wang et al. [22] developed a tailored deep CNN model using CXR images that achieved an accuracy of 93.3%. Toğaçar et al. [23] achieved a classification accuracy of 97.06% using fuzzy color, a stacking approach, and two DL models, MobileNetV2 and SqueezeNet. Toraman et al. [24] proposed a convolutional CapsNet model to detect COVID-19 using CXR images and achieved an accuracy of 84.22% for multi-class classification. Han et al. [25] developed a deep 3D multiple instance learning methodology using chest CT images and obtained an accuracy of 94.3% on a three-class classification task. Zhang et al. [26] proposed a 7-layer CNN with stochastic pooling to detect COVID-19 from CT images. Wang et al. [27] developed a novel CCSHNet system for COVID-19 classification, in which the two best pre-trained CNN models were selected to learn features and a discriminant correlation analysis method was used to fuse those features. Chaudhary and Pachori [28] used pre-trained CNN models over the sub-band images generated from Fourier-Bessel series expansion-based decomposition (FBSE) for COVID-19 detection. Joshi et al. [29] designed a multi-scale CNN for effective COVID-19 diagnosis from CT images. Recently, Bhattacharyya et al. [30] employed the VGG-19 model with binary robust invariant scalable keypoints (BRISK) to detect COVID-19 cases from X-ray images. Jyoti et al. [31] proposed an automated COVID-19 detection method using memristive crossbar array-based tunable Q-wavelet transform (TQWT) and ResNet-50.

The literature shows that many existing DL-based methods for COVID-19 diagnosis have been validated using only a few annotated CXR/CT images. The most frequently used DL models require a large number of trainable parameters and a large amount of memory, so the design of a lightweight DL method is an urgent need. Moreover, the performance of DL models relies on several hyperparameters, such as the learning rate, batch size, type of optimizer, and number of epochs. However, only a limited number of studies have examined these hyperparameters. Hence, there is ample scope for an investigation that includes an in-depth analysis of different hyperparameters to obtain the best possible results for COVID-19 detection. In a recent study [32], the COVID-19 classification performance was evaluated using eight pre-trained CNN models over a small dataset. All those models required a huge number of parameters, and their performance was evaluated only for the binary classification scenario. Multi-class classification, although more challenging, is in high demand.

To address the aforementioned issues, a lightweight CNN is proposed for the automated detection of COVID-19 infection in the current study. The major contributions of this study are summarized as follows:

A lightweight CNN model is proposed to diagnose COVID-19 infection in CXR images; it requires low computational cost and memory, making it more suitable for real-time diagnosis.

The impact of several hyperparameters, such as the optimization technique, number of epochs, batch size, and learning rate, is analyzed.

The performances of both binary (Normal and COVID-19) and multi-class (Normal, COVID-19, and pneumonia) classification cases have been studied.

A performance evaluation is conducted employing two larger datasets. Finally, the proposed model is compared with a few contemporary pre-trained CNN architectures, including VGG-19 [33], ResNet-101 [34], DenseNet-121 [35], and Xception [36], in terms of the number of parameters and memory required. The performance of the model is also compared with a few state-of-the-art methods.

The remainder of this article is structured as follows. In Section 2, the datasets used and the suggested method for automated COVID-19 diagnosis are detailed. Section 3 presents the experimental setup and results. Finally, Section 4 draws the concluding remarks.

3. Experimental Setup and Results

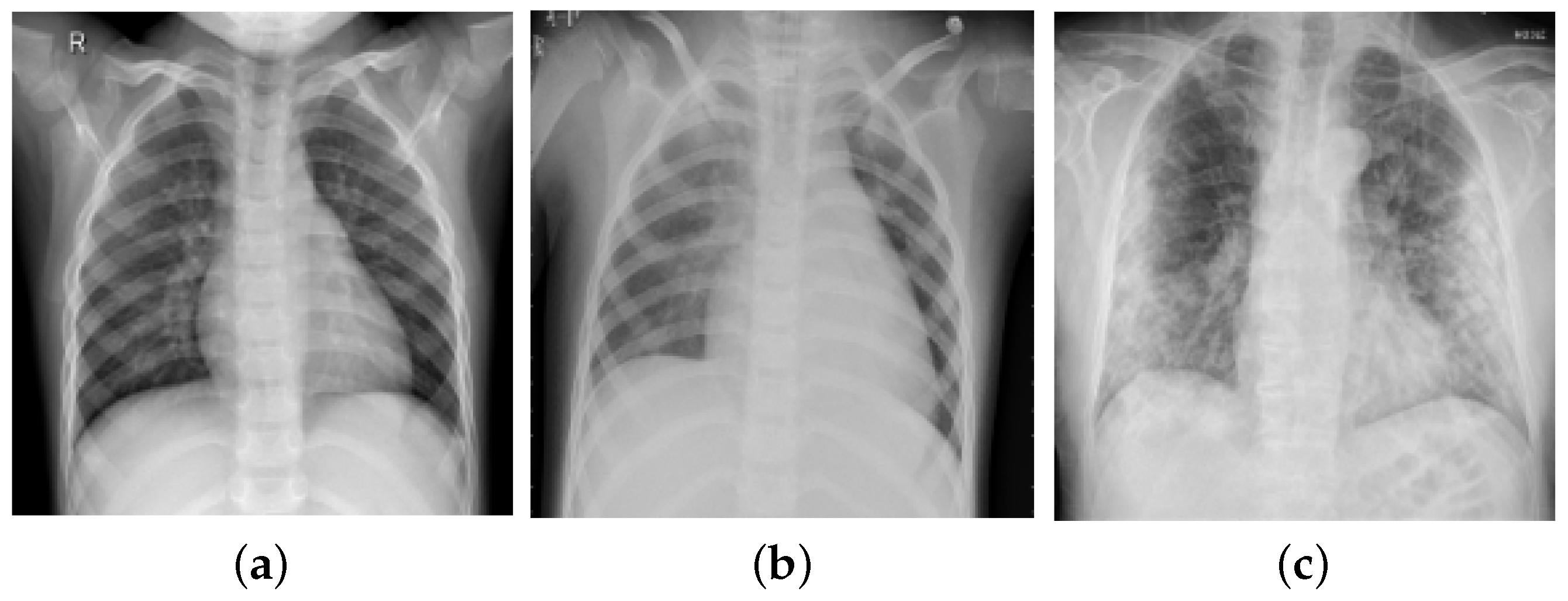

In this section, we present the experimental setup and results to verify the effectiveness of the proposed model. A set of experiments was performed using two datasets: Dataset-1 of 2250 frontal-view CXR images (pneumonia: 750, COVID-19: 750, and normal: 750) and Dataset-2 of 15,999 CXR images (pneumonia: 5575, COVID-19: 2358, and normal: 8066). These images were first rescaled to a common size. All CNN models were developed using the PyTorch toolbox, and all experiments were conducted on the Google Colab GPU platform with an NVIDIA Tesla T4 GPU with 16 GB of memory. The performance of both the suggested approach and the pre-trained architectures was evaluated using 10-fold cross-validation (CV) for Dataset-1, wherein in each trial one fold was used for testing and the remaining folds for training. From the training set, 20% of the samples were randomly chosen for validation. For Dataset-2, results were evaluated based on the train-test division strategy reported by Wang et al. [22], wherein the test set contained 200 CXR images from each class and the remaining samples were retained for training the model, of which 20% were used for validation.
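The Dataset-1 protocol described above (10 folds, one held out for testing, 20% of the remaining samples drawn at random for validation) can be sketched as follows. This is a minimal illustration in plain Python, not the authors' code: the function name `kfold_splits`, the fixed seed, and the unstratified shuffling are all assumptions, since the text does not state whether folds were stratified by class.

```python
import random


def kfold_splits(n_samples, n_folds=10, val_fraction=0.2, seed=0):
    """Yield (train, val, test) index lists, one triple per fold.

    Assumption: plain random (unstratified) folds; the paper does not
    say whether class balance was enforced within each fold.
    """
    rng = random.Random(seed)
    indices = list(range(n_samples))
    rng.shuffle(indices)
    # Deal the shuffled indices into n_folds groups of near-equal size.
    folds = [indices[i::n_folds] for i in range(n_folds)]
    for k in range(n_folds):
        test = folds[k]
        # Everything outside the held-out fold is available for training.
        rest = [i for j, fold in enumerate(folds) if j != k for i in fold]
        rng.shuffle(rest)
        # Carve the validation subset out of the training portion.
        n_val = int(round(val_fraction * len(rest)))
        val, train = rest[:n_val], rest[n_val:]
        yield train, val, test


splits = list(kfold_splits(2250))
train, val, test = splits[0]
print(len(train), len(val), len(test))  # prints: 1620 405 225
```

With the 2250 images of Dataset-1, each trial therefore trains on 1620 samples, validates on 405, and tests on 225.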

A set of performance metrics, namely sensitivity, specificity, precision, accuracy, and F1-score, was used to assess each model. Furthermore, heat-map results were computed using gradient-weighted class activation mapping (Grad-CAM) [51] to visually interpret the effectiveness of the model by highlighting the relevant regions. We evaluated the performance of the model in two different scenarios: the first deals with three-class classification (pneumonia, COVID-19, and normal) and the second deals with binary classification (normal and COVID-19). The hyperparameter settings used in our study, which were set empirically, are presented in Table 4. Furthermore, a comparative analysis with the pre-trained CNN architectures VGG-19 [33], ResNet-101 [34], DenseNet-121 [35], and Xception [36] was performed.
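For reference, the metrics listed above follow directly from a confusion matrix using their standard one-vs-rest definitions. The sketch below assumes rows are true classes and columns are predicted classes; the matrix `cm` is a made-up example for illustration only and does not reproduce any result from this study, and the helper names are hypothetical.

```python
def safe_div(num, den):
    """Return num / den, or 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def per_class_metrics(cm):
    """One-vs-rest metrics per class; cm[i][j] counts true class i predicted as j."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    results = []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                        # class c samples that were missed
        fp = sum(cm[r][c] for r in range(n)) - tp   # other classes predicted as c
        tn = total - tp - fn - fp
        sensitivity = safe_div(tp, tp + fn)
        precision = safe_div(tp, tp + fp)
        results.append({
            "sensitivity": sensitivity,
            "specificity": safe_div(tn, tn + fp),
            "precision": precision,
            "f1": safe_div(2 * precision * sensitivity, precision + sensitivity),
        })
    return results


def accuracy(cm):
    """Fraction of all samples that lie on the diagonal."""
    correct = sum(cm[i][i] for i in range(len(cm)))
    return safe_div(correct, sum(sum(row) for row in cm))


def macro_average(metrics, key):
    """Unweighted mean of one metric over all classes."""
    return sum(m[key] for m in metrics) / len(metrics)


# Illustrative 3-class matrix (hypothetical counts, not results from this study).
cm = [[70, 3, 2],
      [4, 69, 2],
      [1, 1, 73]]
metrics = per_class_metrics(cm)
print(round(accuracy(cm), 4), round(macro_average(metrics, "f1"), 4))
```

Averaging the per-class values, as `macro_average` does, is one common way to report a single figure per fold for the multi-class case.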

3.1. Results of the Proposed Model

The classification results obtained by the proposed model on both datasets are presented in this section.

3.1.1. Results on Dataset-1

The fold-wise results in terms of accuracy, precision, sensitivity, and F1-score for Dataset-1 are shown in Table 5. The proposed model was also tested on the binary classification task, and the results are shown in Table 6. Notably, the results reported for each fold are averages computed over all three classes. Average accuracies of 98.67% and 99.00% were obtained for the multi-class and binary classification tasks, respectively. The confusion matrices for each run of the 10-fold CV in the multi-class and binary scenarios are exhibited in Figure 5 and Figure 6, respectively. Figure 7 shows the training and validation loss curves for a single run of the 10-fold CV; it can be observed that the proposed model converges within 100 epochs. The Matthews correlation coefficient (MCC) and kappa score were computed as 0.9730 and 0.9860, respectively, indicating good prediction results for each class. The MCC and kappa score, along with the multi-class classification accuracy, are plotted in Figure 8. These values were recorded at different epochs and computed for a single fold. Figure 9 shows the receiver operating characteristic (ROC) curves obtained by the suggested and pre-trained models for the binary classification scenario.
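As a point of reference for the MCC and kappa scores mentioned above, both can be computed from a confusion matrix. The sketch below uses the standard multi-class generalization of MCC and Cohen's kappa; whether the study used exactly these formulations is an assumption, and the example matrix is hypothetical rather than taken from the reported results.

```python
import math


def matthews_corrcoef(cm):
    """Multi-class MCC; cm[i][j] counts true class i predicted as j."""
    n = len(cm)
    s = sum(sum(row) for row in cm)                              # total samples
    c = sum(cm[i][i] for i in range(n))                          # correct predictions
    t = [sum(cm[k]) for k in range(n)]                           # true count per class
    p = [sum(cm[r][k] for r in range(n)) for k in range(n)]      # predicted count per class
    num = c * s - sum(pk * tk for pk, tk in zip(p, t))
    den = math.sqrt((s * s - sum(pk * pk for pk in p)) *
                    (s * s - sum(tk * tk for tk in t)))
    return num / den if den else 0.0


def cohen_kappa(cm):
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    n = len(cm)
    s = sum(sum(row) for row in cm)
    t = [sum(cm[k]) for k in range(n)]
    p = [sum(cm[r][k] for r in range(n)) for k in range(n)]
    p_observed = sum(cm[i][i] for i in range(n)) / s
    p_expected = sum(pk * tk for pk, tk in zip(p, t)) / (s * s)
    return (p_observed - p_expected) / (1 - p_expected) if p_expected != 1 else 0.0


# Hypothetical 3-class confusion matrix for illustration only.
cm = [[70, 3, 2],
      [4, 69, 2],
      [1, 1, 73]]
print(round(matthews_corrcoef(cm), 4), round(cohen_kappa(cm), 4))
```

Both scores equal 1 for a perfect classifier and are close to 0 for chance-level predictions, which makes them less sensitive to class proportions than raw accuracy.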

Finally, heat maps were generated using Grad-CAM to verify the visual interpretability of the proposed model. Figure 10 illustrates the heat-map results for a few sample normal and COVID-19 CXR images. The proposed model could locate the suspicious regions, which supports the interpretability of the classification results. Hence, it can help radiologists in their diagnosis. Figure 10h depicts the heat map of a normal sample, where no suspicious regions are indicated.

The computational cost of the proposed model was evaluated in terms of time (in seconds). Training the proposed model to convergence is comparatively faster than training the state-of-the-art CNN architectures, while testing a single image takes approximately 0.035 s.

3.1.2. Results on Dataset-2

The performance of the proposed model was also evaluated on Dataset-2. Table 7 shows the classification results for both scenarios (multi-class and binary classification) on Dataset-2. Average accuracies of 95.67% and 96.25% were achieved for multi-class and binary classification, respectively. Figure 11 shows the confusion matrices for both scenarios.

3.2. Experiment on Different Hyperparameters

In this section, the impact of different hyperparameters, namely the number of epochs, batch size, learning rate, and optimizer, is evaluated to identify the best classification performance. All experiments in this section were conducted using Dataset-1.

3.2.1. Impact of Optimization Techniques

In this experiment, we explored several optimization techniques, namely SGD [52], Adam [53], RMSProp [54], and AdaGrad [55], to ascertain the best classification performance. Table 8 shows the detailed classification results using the different optimizers. It can be observed that the classification performance of LW-CORONet with the Adam optimizer is promising compared with the others. Therefore, all remaining experiments in this study were carried out using the Adam optimizer.

3.2.2. Impact of Learning Rate

In this experiment, we investigated the impact of different learning rates, and the learning rate with the minimum validation loss was chosen. Figure 12 plots the validation loss against the learning rate. The point marked with a red dot in Figure 12 specifies the optimal learning rate, from which the loss drops significantly, thereby facilitating better classification performance.
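The selection rule stated above (choose the learning rate with the minimum validation loss) can be sketched as a simple sweep. This is an assumption-laden illustration: the candidate grid, the helper `select_learning_rate`, and the toy loss function standing in for "train with this learning rate and measure the validation loss" are all hypothetical, and the study's actual sweep procedure is not described in the text.

```python
import math


def select_learning_rate(candidates, validation_loss):
    """Evaluate each candidate and return (best_lr, losses_by_lr)."""
    losses = {lr: validation_loss(lr) for lr in candidates}
    best_lr = min(losses, key=losses.get)
    return best_lr, losses


def toy_validation_loss(lr):
    """Hypothetical stand-in for a real training run.

    A bowl-shaped curve in log10(lr) with its minimum at 1e-3, chosen
    only so the example has a clear optimum.
    """
    return (math.log10(lr) + 3.0) ** 2 + 0.1


# Hypothetical log-spaced grid of learning rates.
candidates = [10 ** e for e in (-5, -4, -3, -2, -1)]
best_lr, losses = select_learning_rate(candidates, toy_validation_loss)
print(best_lr)
```

In practice `toy_validation_loss` would be replaced by a function that trains the model for a fixed budget at the given learning rate and returns the loss on the validation split.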

3.2.3. Impact of Different Batch Sizes

We evaluated the effect of different batch sizes in this experiment. The performance of LW-CORONet when trained with batch sizes of 32, 16, and 8 is tabulated in Table 9. These results show that the proposed model with a batch size of 32 yields stable and higher testing performance.

3.3. Misclassification Results Analysis

Figure 13 shows a few misclassification results yielded by LW-CORONet on Dataset-1. These errors possibly occurred because of the similar visual features among the CXR images of the three classes.

3.4. Comparative Analysis of Pre-Trained CNN Architectures and the Proposed Model

We performed a comparative analysis of the proposed model and the pre-trained classification architectures VGG-19 [33], ResNet-101 [34], DenseNet-121 [35], and Xception [36]. The detailed classification performance on Dataset-1 is tabulated in Table 10. These models were validated on the same images as the proposed model, and the experimental setup was also the same. The proposed model required fewer parameters and less memory but obtained comparable or better performance than effective architectures such as ResNet-101. All these pre-trained models were originally trained on the ImageNet [56] dataset and were later fine-tuned using the CXR images to perform multi-class classification. We fine-tuned only the last layer of these models and initialized the other layers with the pre-trained weights.
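To make the parameter and memory comparison concrete, the number of learnable parameters of a CNN can be tallied layer by layer, and its weight storage estimated from the parameter count. The sketch below uses the standard counting formulas for convolutional and fully connected layers; the five-layer configuration is purely hypothetical (it is not the actual LW-CORONet architecture), and 4 bytes per parameter assumes 32-bit floating-point weights.

```python
def conv2d_params(in_channels, out_channels, kernel_size, bias=True):
    """Weights (out * in * k * k) plus one bias per output channel."""
    return out_channels * (in_channels * kernel_size * kernel_size + (1 if bias else 0))


def linear_params(in_features, out_features, bias=True):
    """Weights (out * in) plus one bias per output unit."""
    return out_features * (in_features + (1 if bias else 0))


def memory_mb(n_params, bytes_per_param=4):
    """Approximate weight storage in MiB (assumes float32 parameters)."""
    return n_params * bytes_per_param / (1024 ** 2)


# Hypothetical five-layer network: three conv layers and two dense layers.
# These shapes are illustrative and NOT the configuration used in the study.
layers = [
    conv2d_params(3, 16, 3),
    conv2d_params(16, 32, 3),
    conv2d_params(32, 64, 3),
    linear_params(1024, 128),
    linear_params(128, 3),
]
total = sum(layers)
print(total, round(memory_mb(total), 3))
```

The same tally applied to a large pre-trained backbone yields counts that are orders of magnitude higher, which is the kind of gap the comparison in Table 10 quantifies.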

3.5. Comparison with Existing Approaches

The proposed model was compared against existing DL-based schemes for automated COVID-19 detection in CXR images. Table 11 summarizes the obtained performance. It can be observed that the suggested model obtained promising results compared with other approaches on Dataset-1 and achieved comparable or better performance even on the larger dataset (Dataset-2). The proposed model was validated using a relatively larger number of CXR samples than several recent studies [17,18,19,23,28,30,31,32]. Although the studies in [20,21] employed datasets of comparable sizes, they contain only 76 and 180 COVID-19 samples, respectively, which introduces a class imbalance problem. Further, several studies focused on solving either the binary or the multi-class classification task, whereas the current study investigated both and achieved accuracies of 99.00% and 98.67% for binary and multi-class classification, respectively, on Dataset-1. Similarly, for Dataset-2, accuracies of 96.25% and 95.67% were achieved for binary and multi-class classification, respectively.

3.6. Discussion

An automated DL-based model, LW-CORONet, is proposed for the effective detection of COVID-19 infection using CXR images. Many DL-based studies on COVID-19 detection have been performed recently; however, most of these studies were limited to smaller datasets and required huge memory space and higher computational costs. LW-CORONet was designed to handle these issues. It is capable of learning discriminant features directly from CXR images while demanding fewer learnable parameters and less memory.

To verify the effectiveness of the proposed scheme, extensive experiments were carried out using two larger datasets of 2250 and 15,999 CXR samples from three categories: normal, pneumonia, and COVID-19. This study also explored the impact of various hyperparameters: batch size, learning rate, and optimizer. A comparative analysis was performed with four contemporary pre-trained classification networks and state-of-the-art approaches. The proposed scheme yielded classification accuracies of 98.67% and 99.00% for the multi-class and binary cases, respectively, on Dataset-1, and 95.67% and 96.25% on the larger Dataset-2. The proposed model is thus effective and lightweight, and hence can be used by radiologists for the early diagnosis of COVID-19 infection and pneumonia. The main advantages of the proposed model are as follows:

The proposed LW-CORONet has only five learnable layers which promote the learning of high-level features automatically from the CXR samples.

The proposed model is well-suited for both binary and multi-class classification scenarios and does not involve a hand-crafted feature extraction process.

The proposed architecture demands very few parameters compared to other CNN models and thus incurs low computational cost.

The proposed architecture is lightweight and uses less memory space.

The major drawback of this investigation is that the proposed model was trained with limited COVID-19 data due to the unavailability of a large-scale COVID-19 dataset.

4. Conclusions

This paper proposed LW-CORONet, a novel model for the early and accurate detection of COVID-19 infection from CXR images. LW-CORONet is composed of five learnable layers and extracts detailed features with low computational cost and memory usage. To validate the proposed scheme, an extensive set of experiments was carried out using two publicly available CXR datasets with larger numbers of COVID-19 samples. The effect of notable hyperparameters on the proposed model was examined to identify the best detection performance. Comparisons with pre-trained CNN models as well as existing approaches revealed the superiority of the proposed approach in both multi-class and binary classification scenarios. Furthermore, the LW-CORONet model is better in terms of memory and computational cost. Overall, the suggested model is effective and lightweight, and hence can be suitable for clinicians for real-time COVID-19 diagnosis. In the future, the performance of LW-CORONet should be verified on a large and diverse dataset with more COVID-19 samples. In addition, the affected lung regions for both pneumonia and COVID-19 cases could be identified simultaneously with the classification of CXR images.