Automatic Refractive Error Estimation Using Deep Learning-Based Analysis of Red Reflex Images

Abstract

1. Introduction

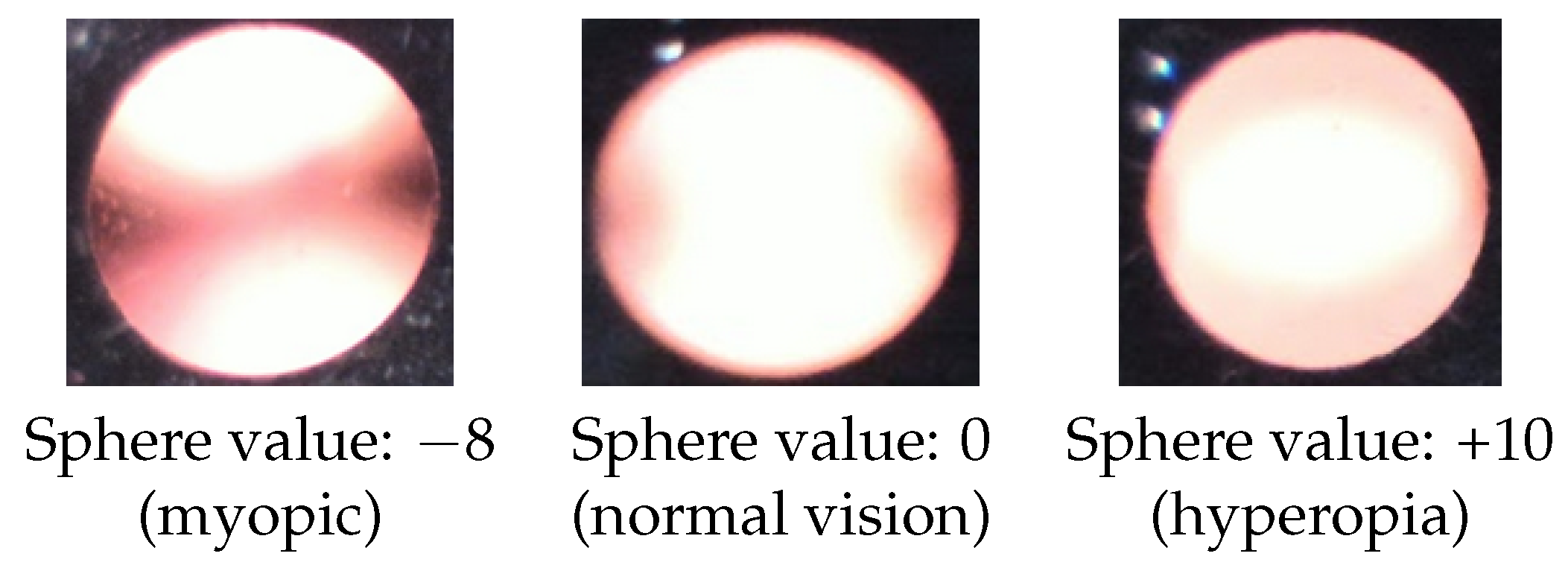

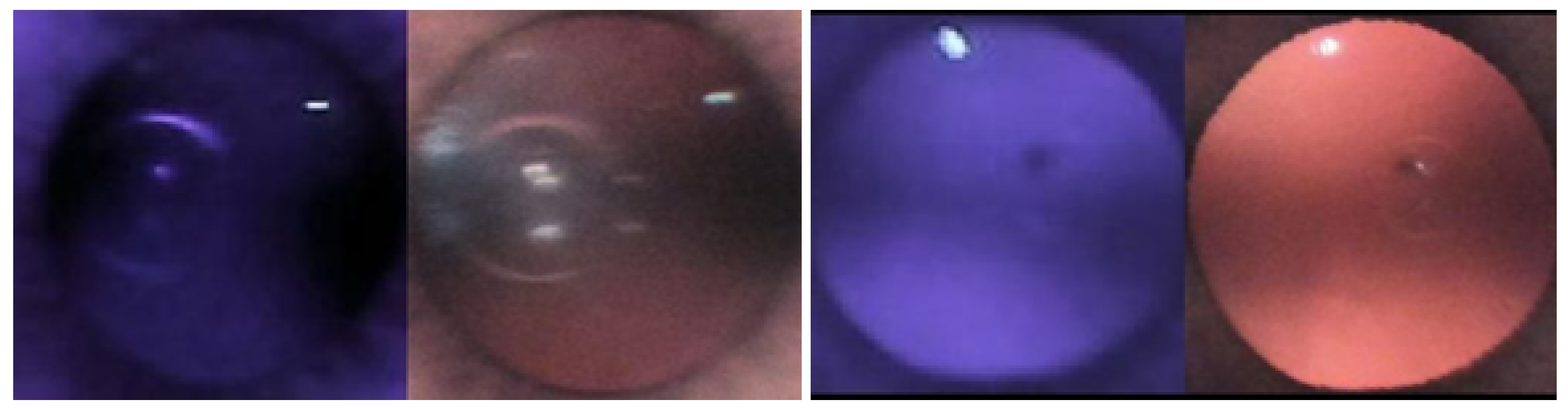

1.1. Red Reflex Test

1.2. Photoscreeners

1.3. Artificial Intelligence in Ophthalmology

2. Methods

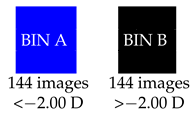

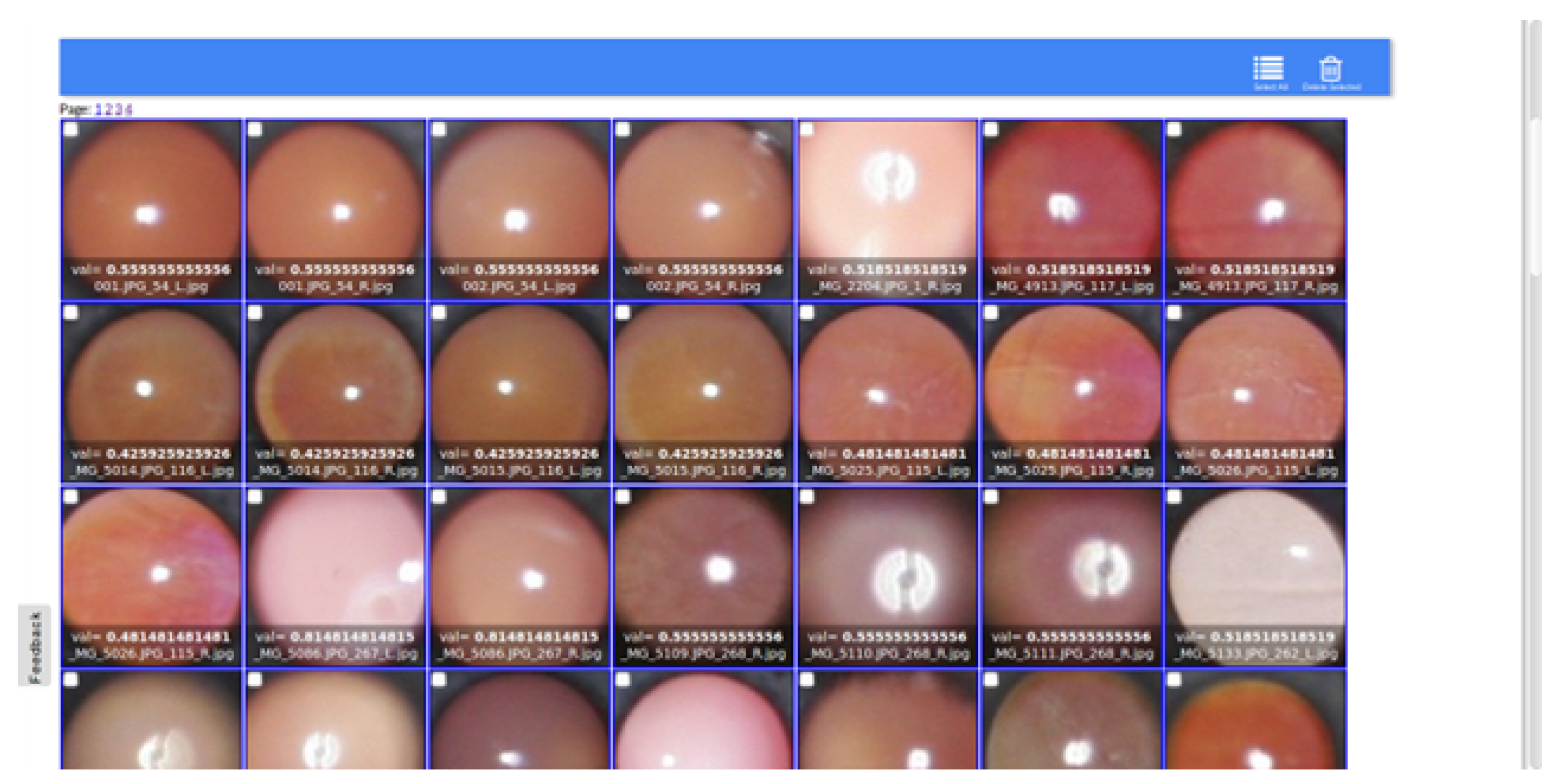

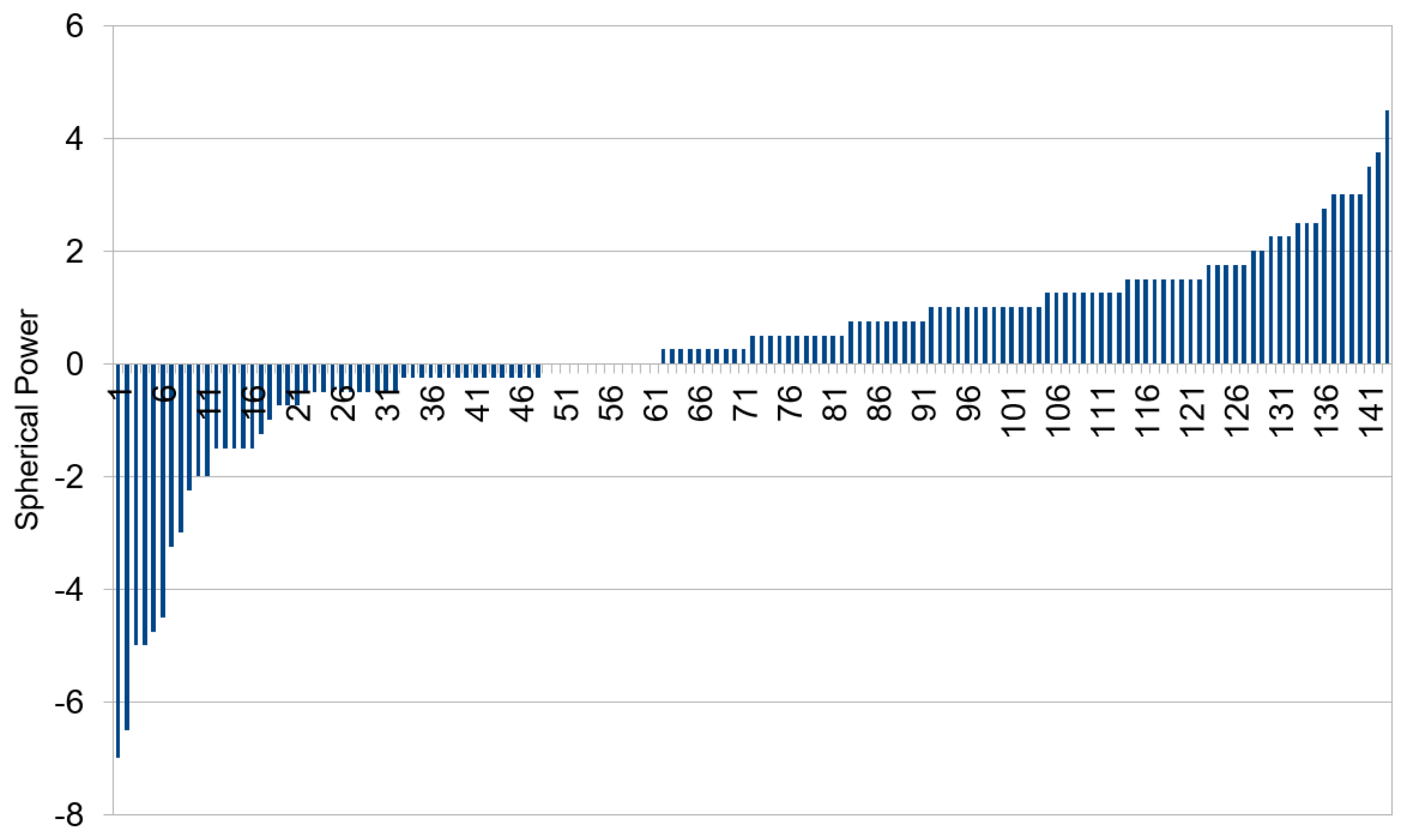

2.1. Data Preparation

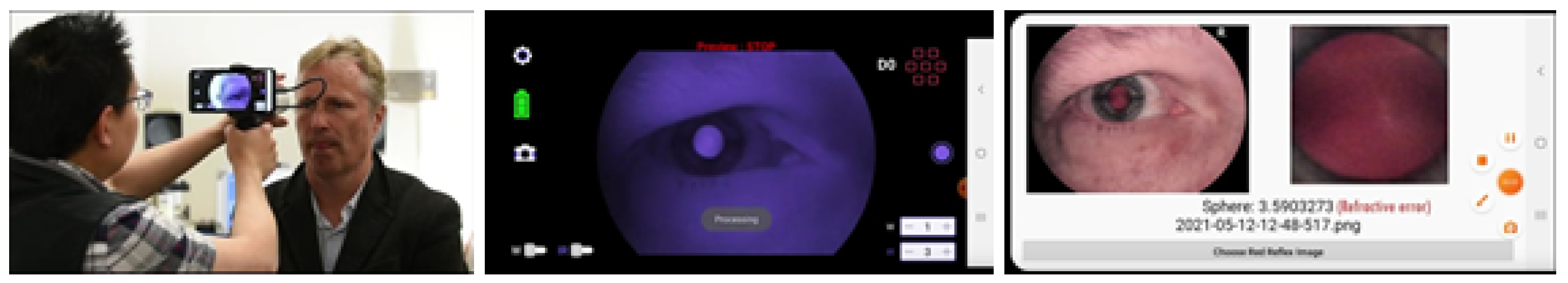

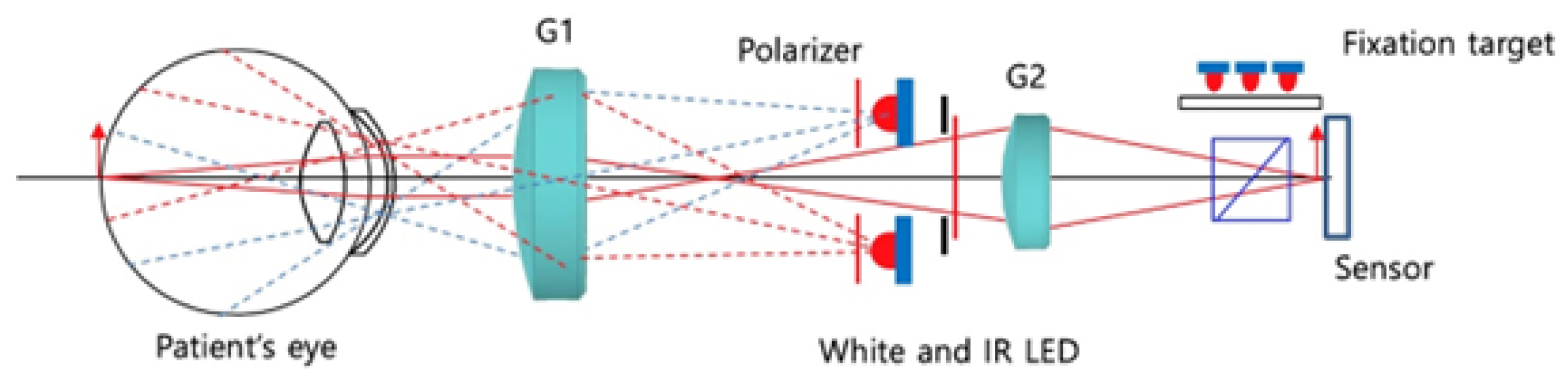

2.2. IR-Based and Non-IR Imaging

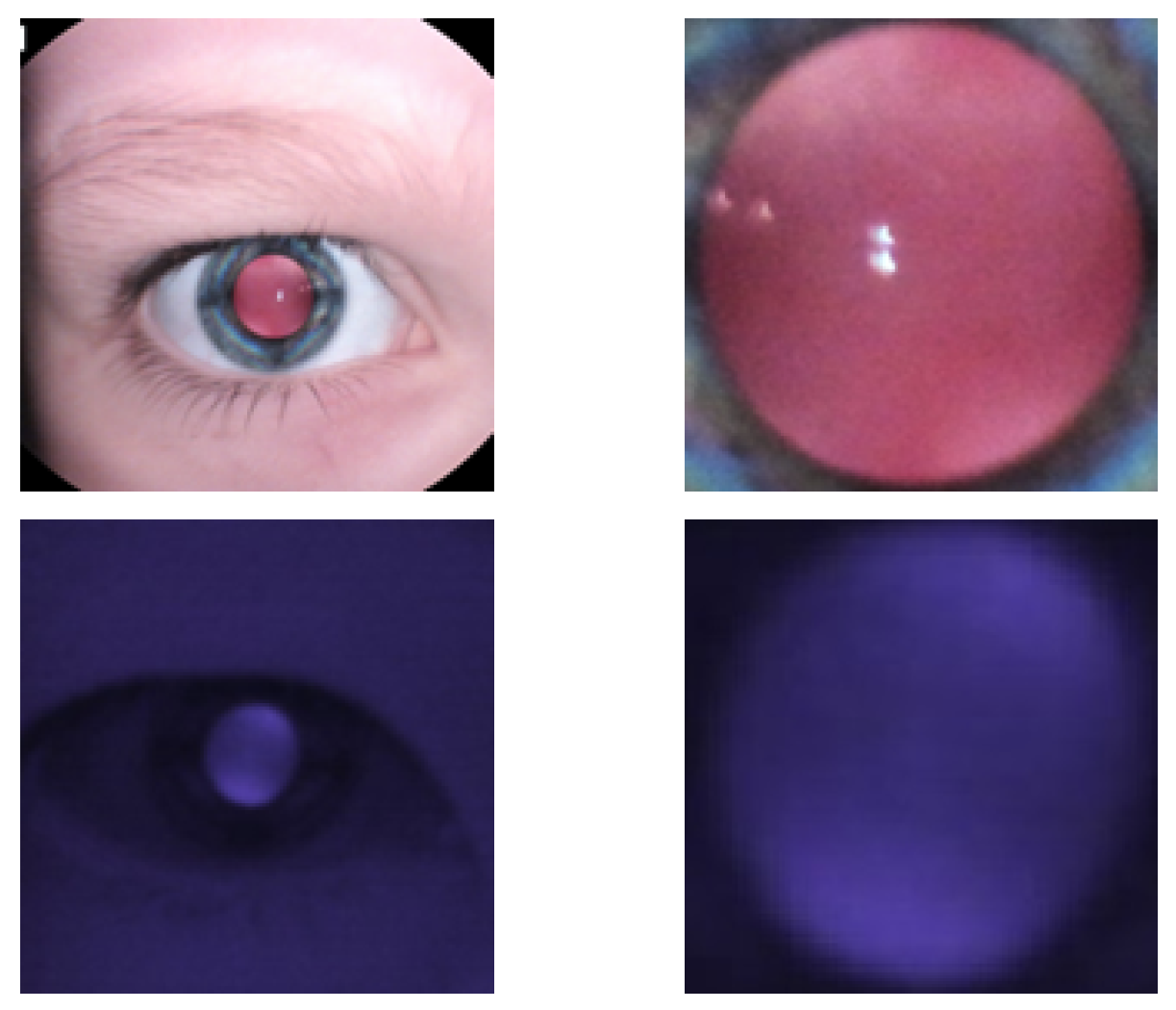

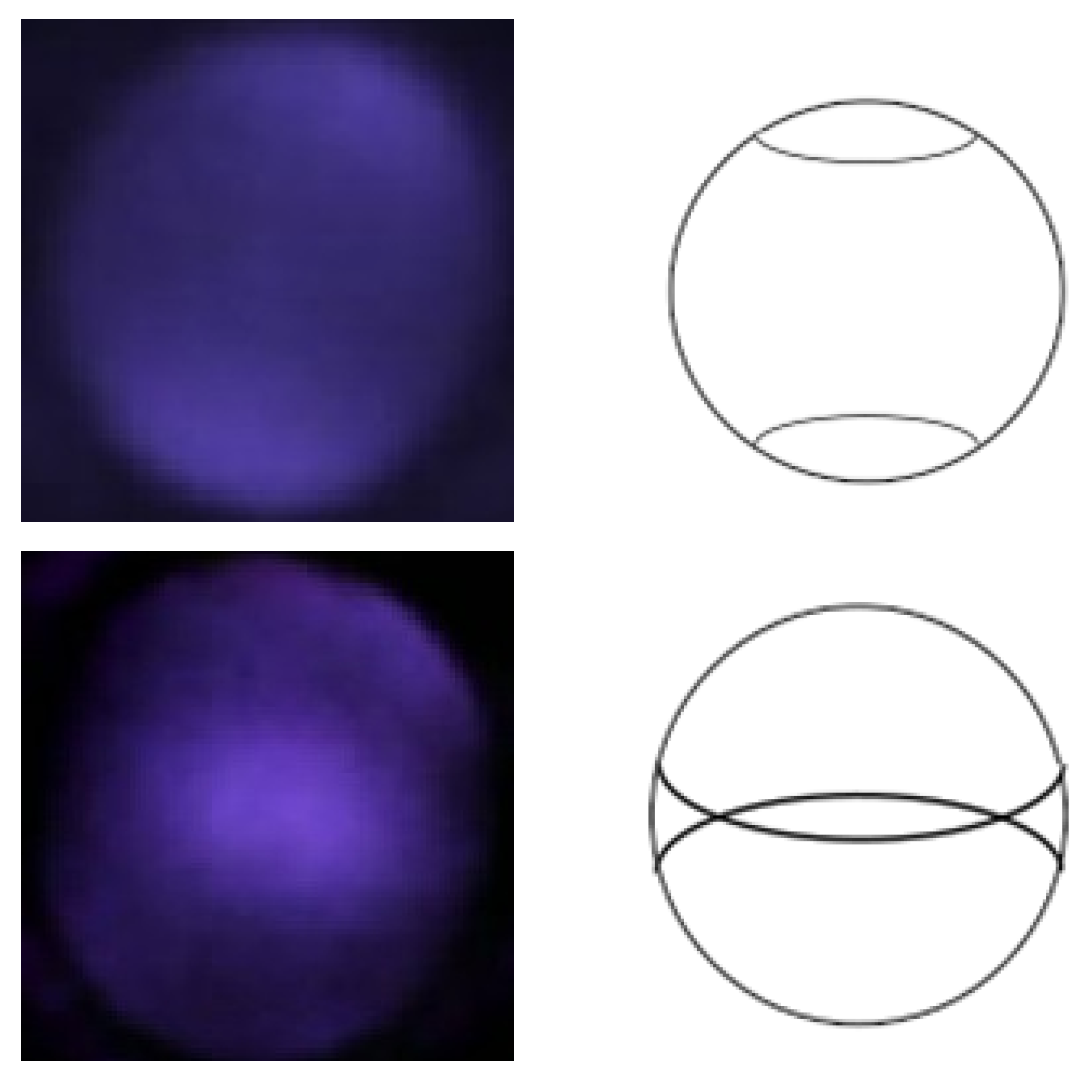

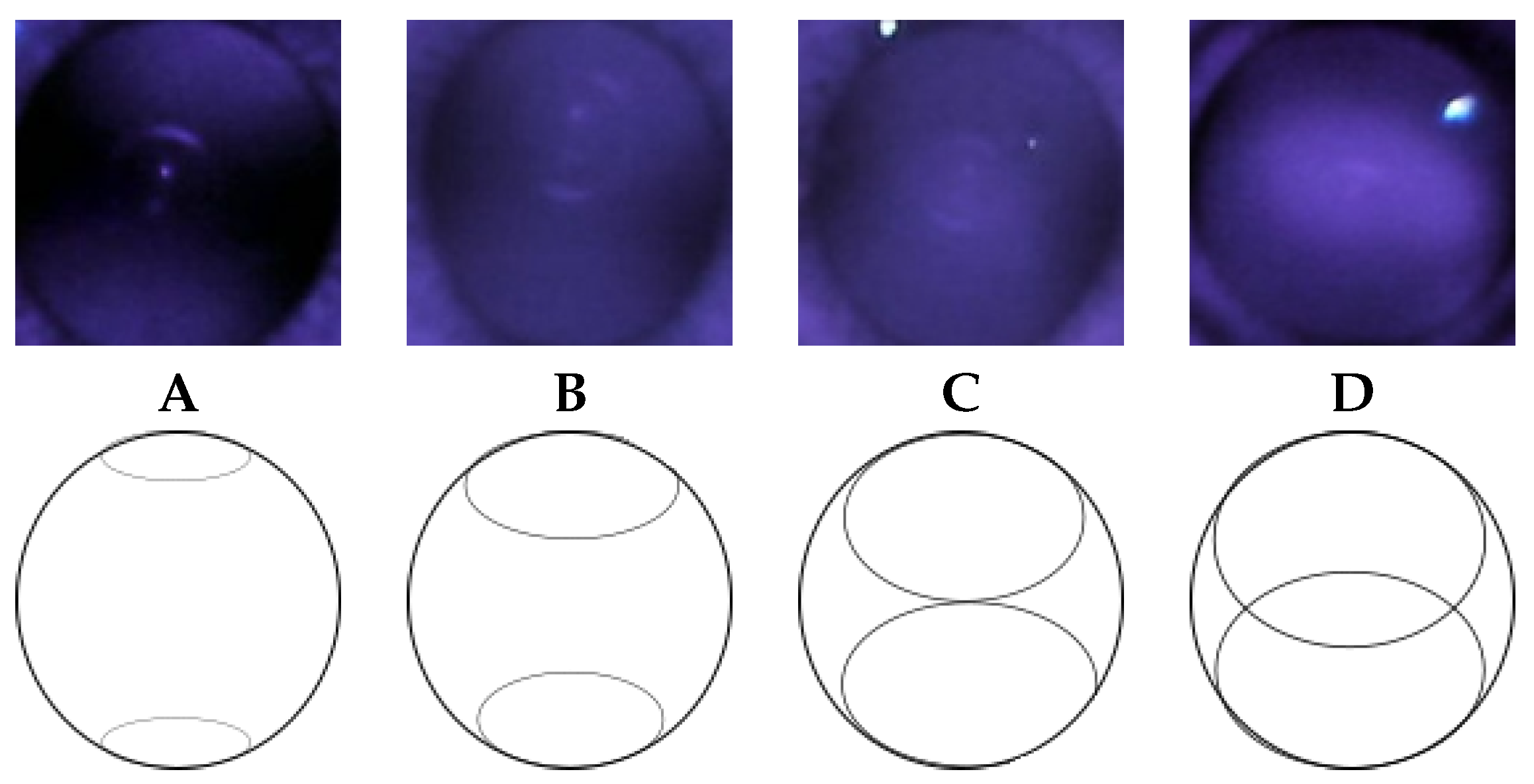

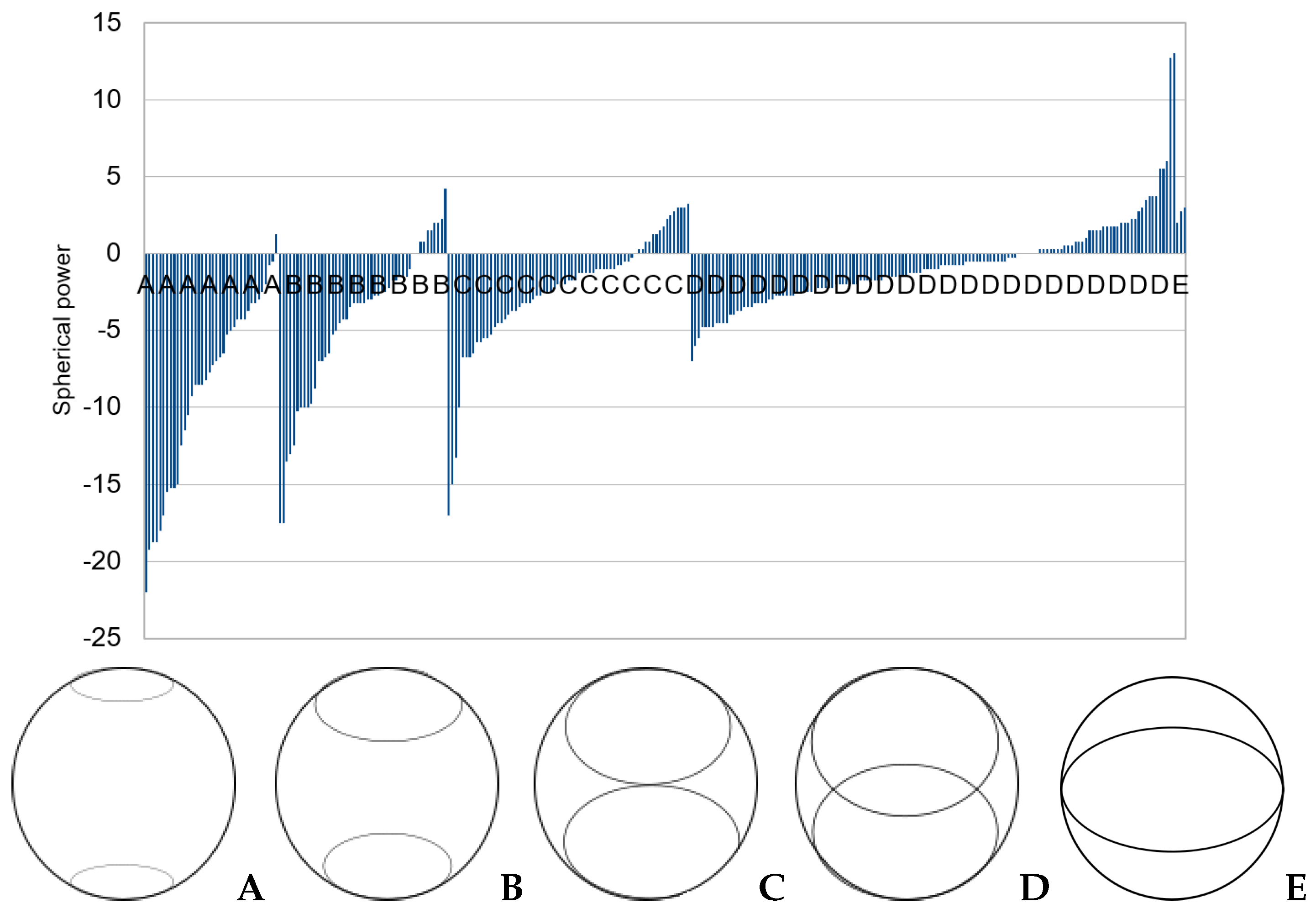

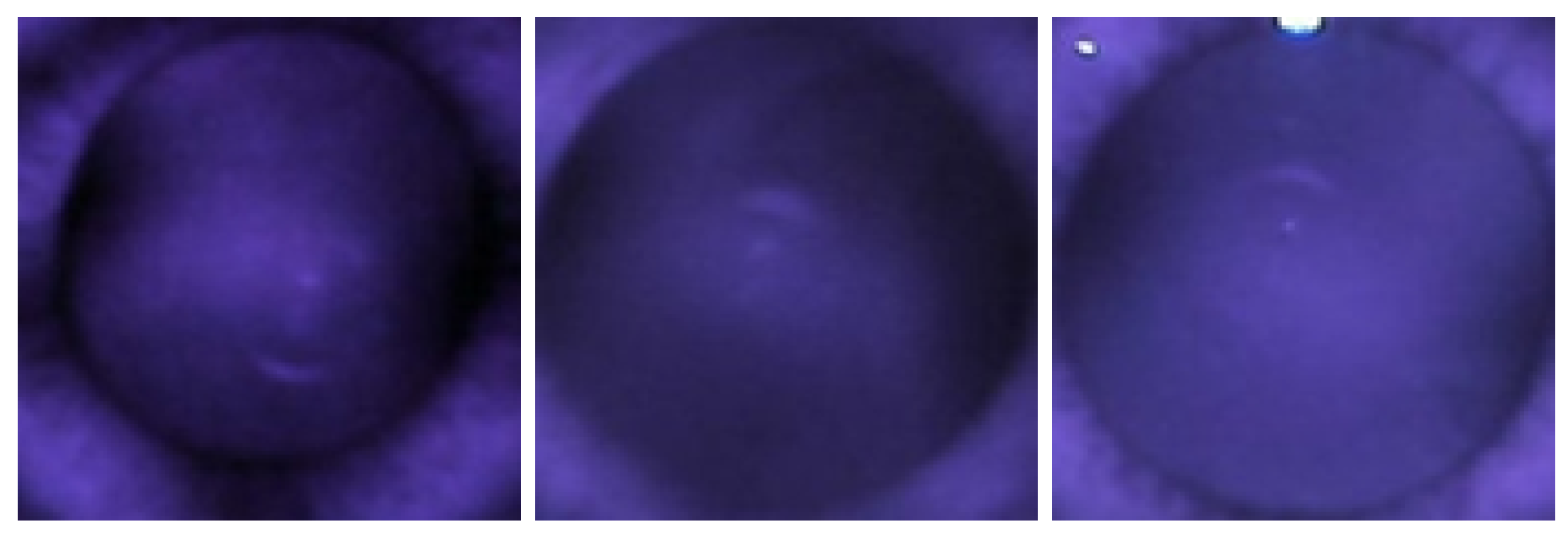

2.3. Observation of Crescents

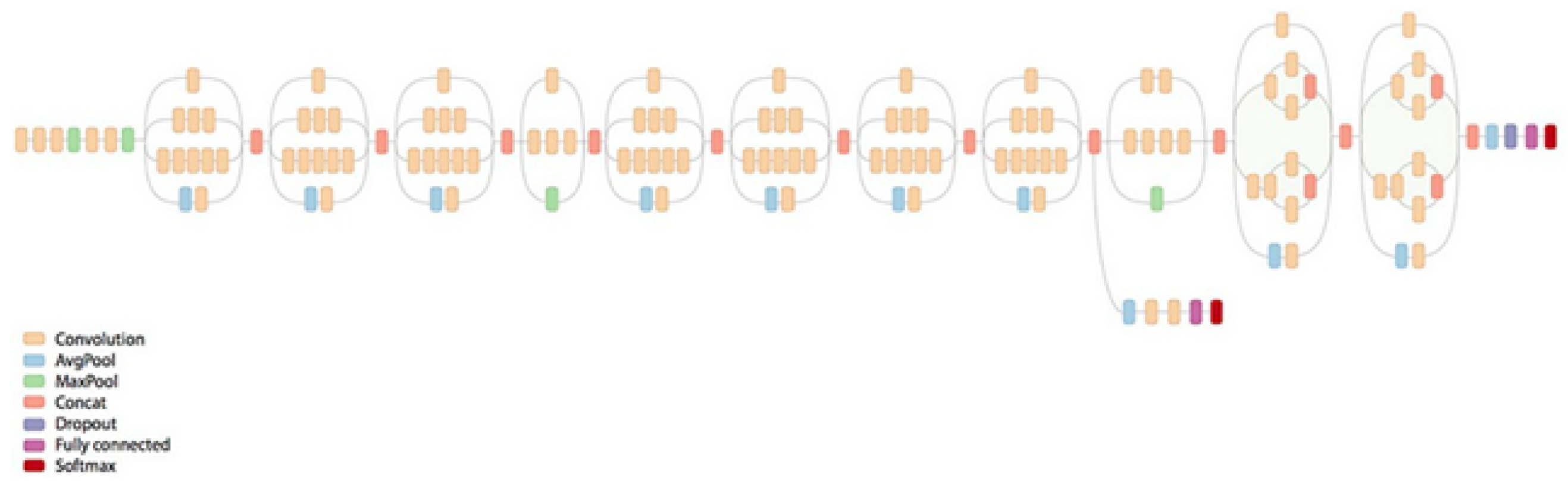

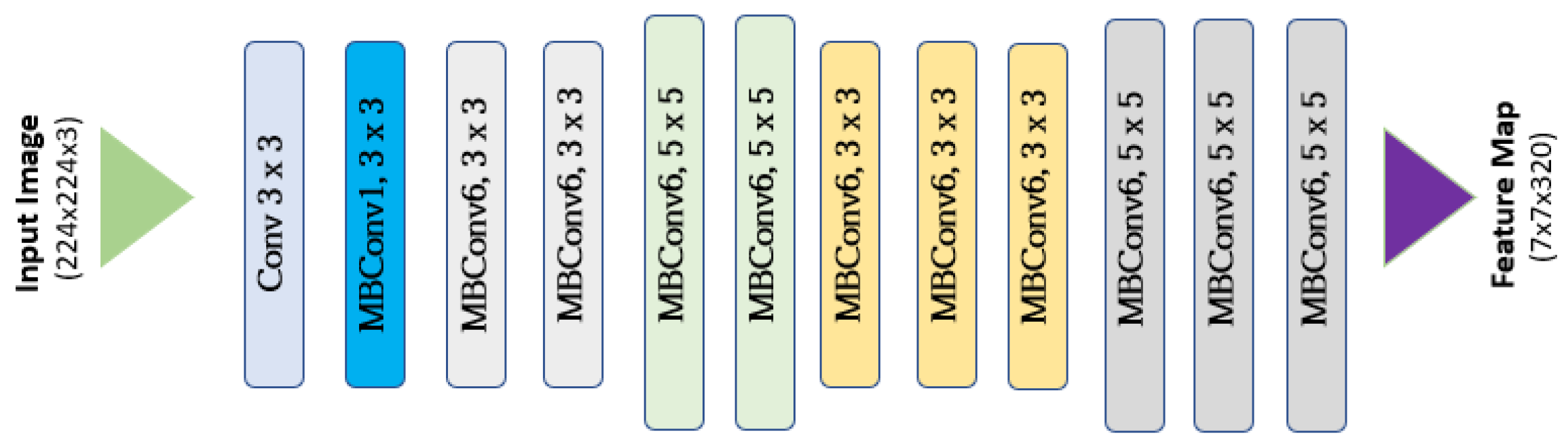

2.4. Grading Classification with CNN

2.5. Training, Validation, and Evaluation

3. Results

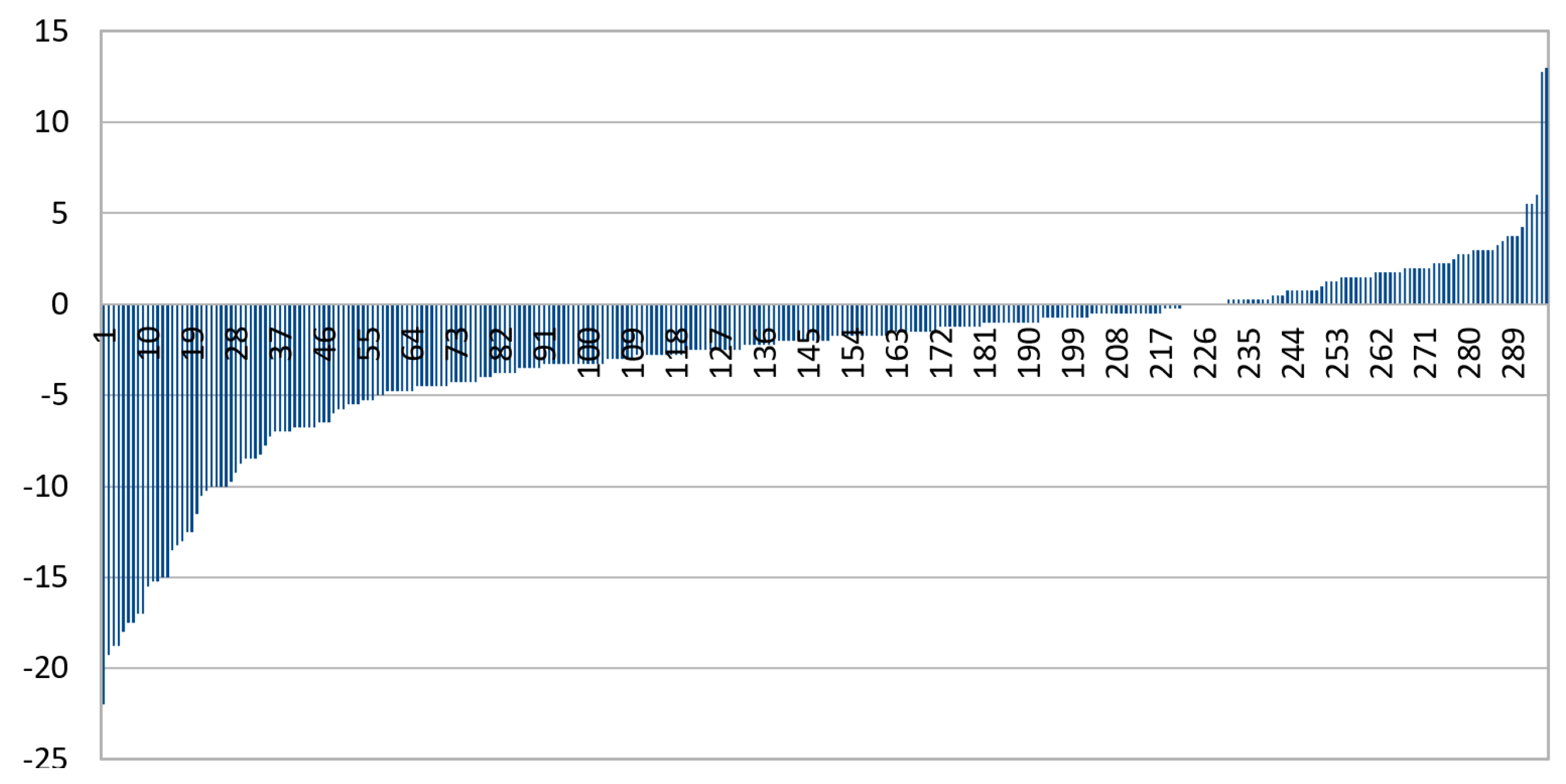

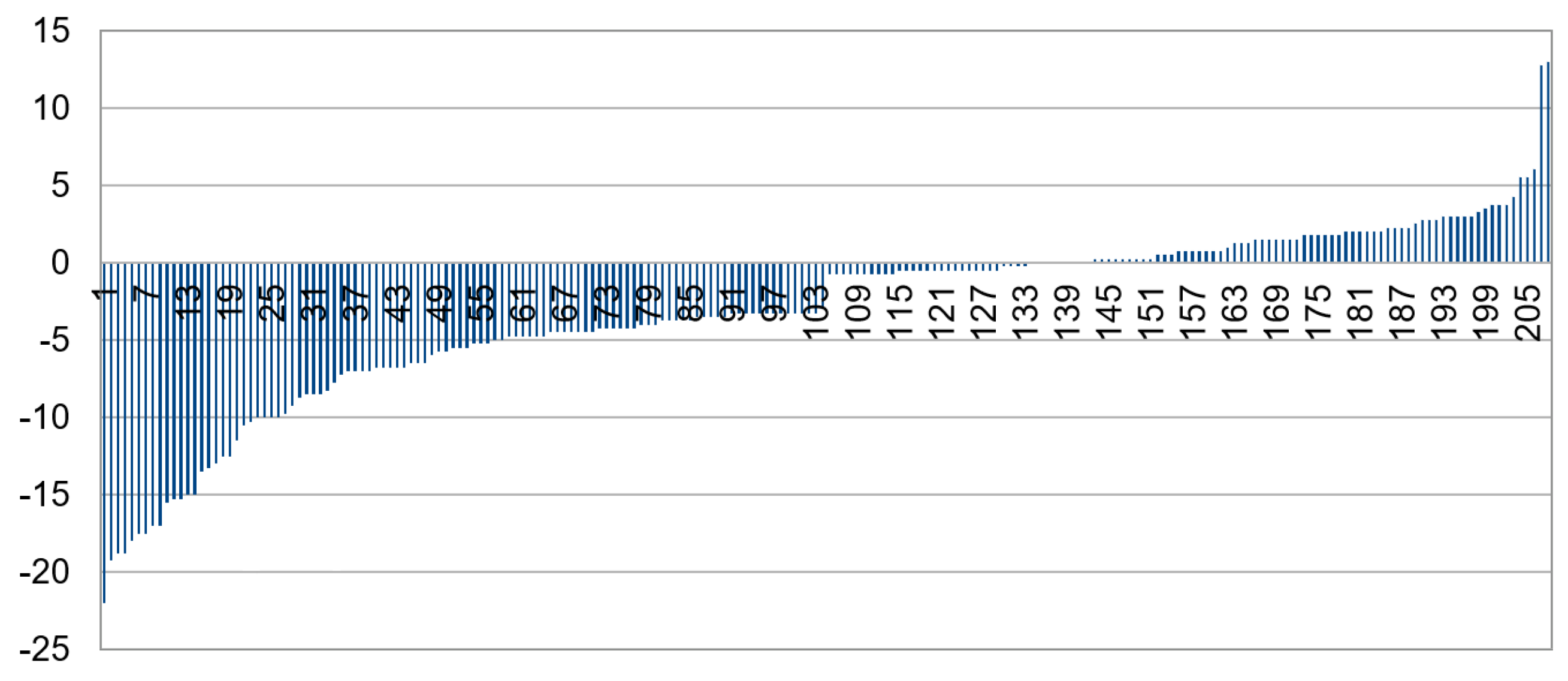

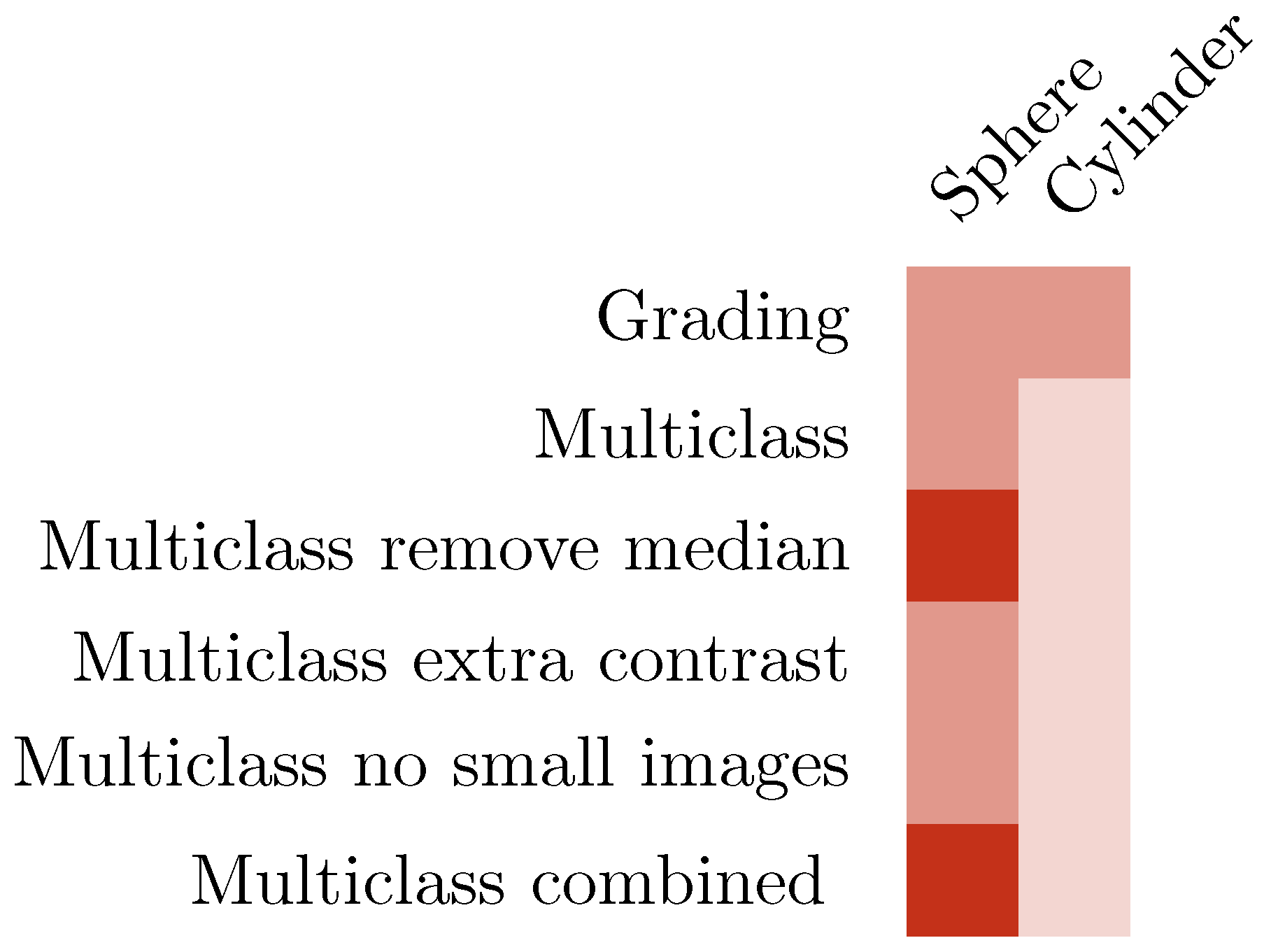

3.1. CNN Grading Results

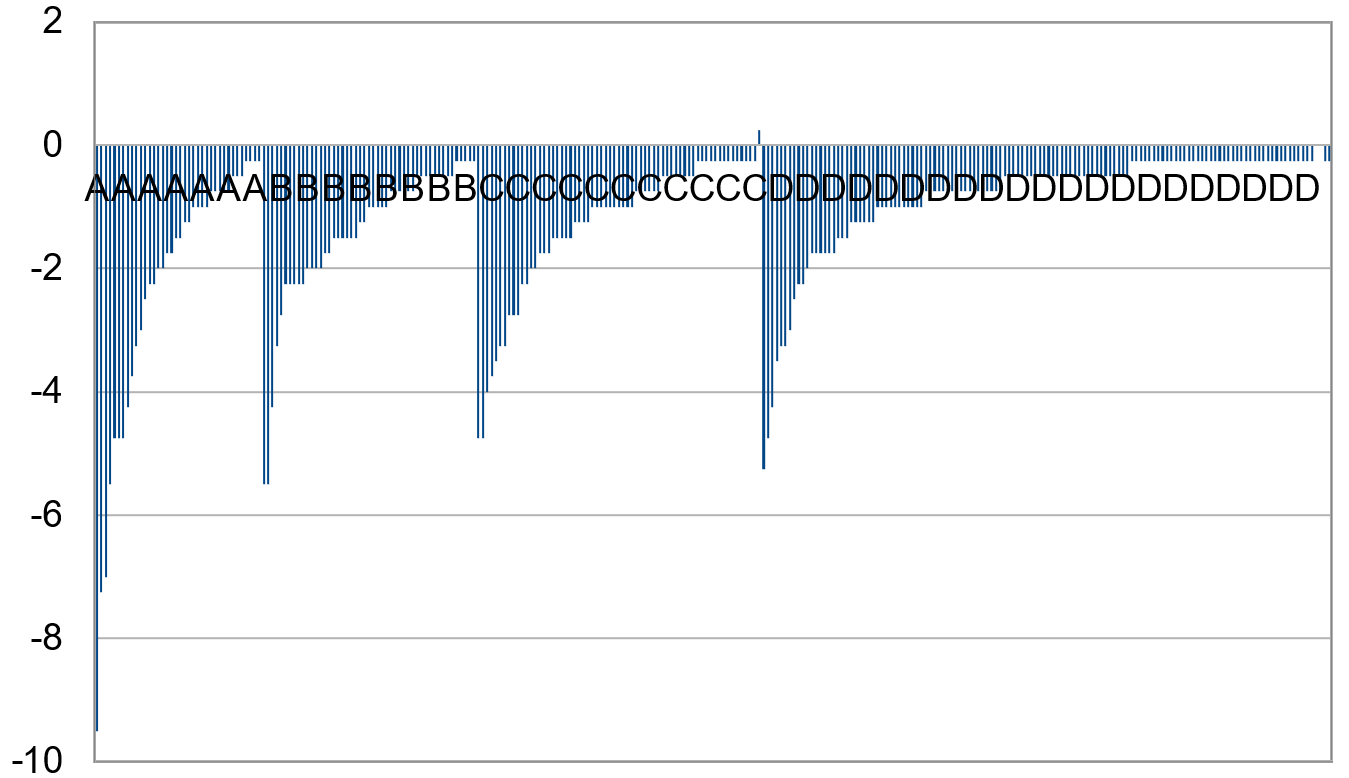

3.2. Categorizing into Crescent Types

3.3. Multiclass Classification with CNN

3.4. Increasing Contrast

3.5. Exclusion of Small Images

3.6. Combining IR and Color

3.7. Summary of Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | IR Inception-v3 | IR EfficientNet | Colour Inception-v3 | Colour EfficientNet |
|---|---|---|---|---|
| Sphere | 55% | 63% | 54% | 64% |
| Cylinder | 49% | 70% | 50% | 57% |

| | IR Inception-v3 | IR EfficientNet | Colour Inception-v3 | Colour EfficientNet |
|---|---|---|---|---|
| Sphere | 55% | 70% | 50% | 64% |
| Cylinder | 48% | 55% | 56% | 60% |

| | IR Inception-v3 | IR EfficientNet | Colour Inception-v3 | Colour EfficientNet |
|---|---|---|---|---|
| Sphere | 75% | 74% | 63% | 68% |
| Cylinder | 49% | 49% | 49% | 60% |

| | IR EfficientNet | Colour EfficientNet |
|---|---|---|
| 20% spherical power median removed | 74% | 68% |
| Small-sized images and 20% spherical power median removed | 64% | 60% |
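The rows above refer to removing 20% of the images around the spherical-power median. One plausible reading, assumed here rather than taken from the paper, is that each eye is labelled as below or at/above the median sphere, and the 20% of eyes whose sphere lies nearest the median are dropped so that the two remaining classes are better separated. The following is a minimal NumPy sketch of that filtering step; the helper name `split_at_median_with_margin` and its `drop_fraction` parameter are hypothetical.

```python
import numpy as np


def split_at_median_with_margin(sphere, drop_fraction=0.2):
    """Hypothetical helper (assumed reading of the filtering step).

    Labels each eye as 0 (sphere below the median) or 1 (at/above the median)
    after discarding the `drop_fraction` of eyes whose spherical power is
    closest to the median. Returns (kept_indices, labels).
    """
    sphere = np.asarray(sphere, dtype=float)
    median = np.median(sphere)

    # Distance of each eye's spherical power from the median.
    distance = np.abs(sphere - median)
    n_drop = int(round(drop_fraction * sphere.size))

    # Stable sort by distance: the first n_drop indices are nearest the median.
    order = np.argsort(distance, kind="stable")
    kept = np.sort(order[n_drop:])

    # Binary label relative to the median of the full set.
    labels = (sphere[kept] >= median).astype(int)
    return kept, labels


kept, labels = split_at_median_with_margin([-3, -2, -1, -0.5, 0, 0.25, 0.5, 1, 2, 3])
print(kept.tolist(), labels.tolist())
```

With ten eyes and `drop_fraction=0.2`, the two eyes closest to the median (spheres 0 and 0.25) are discarded before labelling, leaving four eyes in each class.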

| | Inception-v3 | EfficientNet |
|---|---|---|
| Sphere | 74% | 75% |
| Cylinder | 60% | 56% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Linde, G.; Chalakkal, R.; Zhou, L.; Huang, J.L.; O’Keeffe, B.; Shah, D.; Davidson, S.; Hong, S.C. Automatic Refractive Error Estimation Using Deep Learning-Based Analysis of Red Reflex Images. Diagnostics 2023, 13, 2810. https://doi.org/10.3390/diagnostics13172810