Artificial Intelligence in Endoscopic Ultrasonography-Guided Fine-Needle Aspiration/Biopsy (EUS-FNA/B) for Solid Pancreatic Lesions: Opportunities and Challenges

Abstract

1. Introduction

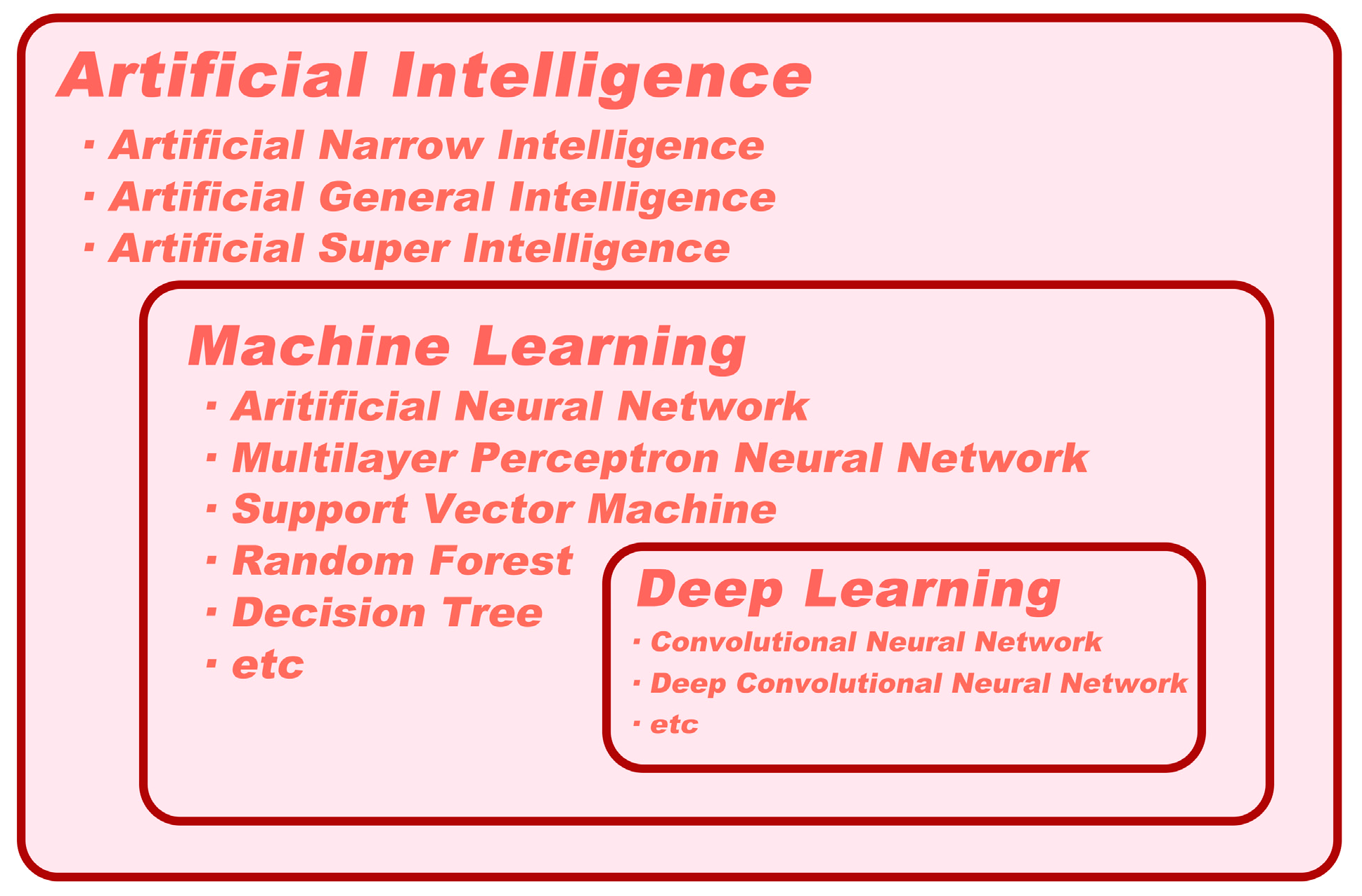

2. Definitions of Artificial Intelligence, Machine Learning, and Deep Learning

3. Use of Artificial Intelligence in EUS-FNA/B

3.1. AI and Digital Pathology

3.2. AI in Assisting with Pathological Diagnosis

3.3. AI in Guiding Targeted EUS-FNA/B

4. The Limitations and Shortcomings of Artificial Intelligence in EUS-FNA/B

5. Conclusions and Prospects

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Iglesias-Garcia, J.; de la Iglesia-Garcia, D.; Olmos-Martinez, J.M.; Larino-Noia, J.; Dominguez-Munoz, J.E. Differential diagnosis of solid pancreatic masses. Minerva Gastroenterol. Dietol. 2020, 66, 70–81.

- Strasberg, S.M.; Gao, F.; Sanford, D.; Linehan, D.C.; Hawkins, W.G.; Fields, R.; Carpenter, D.H.; Brunt, E.M.; Phillips, C. Jaundice: An important, poorly recognized risk factor for diminished survival in patients with adenocarcinoma of the head of the pancreas. HPB 2014, 16, 150–156.

- Guarneri, G.; Gasparini, G.; Crippa, S.; Andreasi, V.; Falconi, M. Diagnostic strategy with a solid pancreatic mass. Presse Med. 2019, 48, e125–e145.

- McGuigan, A.; Kelly, P.; Turkington, R.C.; Jones, C.; Coleman, H.G.; McCain, R.S. Pancreatic cancer: A review of clinical diagnosis, epidemiology, treatment and outcomes. World J. Gastroenterol. 2018, 24, 4846–4861.

- Lucas, A.L.; Kastrinos, F. Screening for Pancreatic Cancer. JAMA 2019, 322, 407–408.

- Ma, Z.Y.; Gong, Y.F.; Zhuang, H.K.; Zhou, Z.X.; Huang, S.Z.; Zou, Y.P.; Huang, B.W.; Sun, Z.H.; Zhang, C.Z.; Tang, Y.Q.; et al. Pancreatic neuroendocrine tumors: A review of serum biomarkers, staging, and management. World J. Gastroenterol. 2020, 26, 2305–2322.

- Iglesias-Garcia, J.; Larino-Noia, J.; Dominguez-Munoz, J.E. When to puncture, when not to puncture: Pancreatic masses. Endosc. Ultrasound 2014, 3, 91–97.

- Kitano, M.; Yoshida, T.; Itonaga, M.; Tamura, T.; Hatamaru, K.; Yamashita, Y. Impact of endoscopic ultrasonography on diagnosis of pancreatic cancer. J. Gastroenterol. 2019, 54, 19–32.

- Iglesias-Garcia, J.; Lindkvist, B.; Larino-Noia, J.; Abdulkader-Nallib, I.; Dominguez-Munoz, J.E. Differential diagnosis of solid pancreatic masses: Contrast-enhanced harmonic (CEH-EUS), quantitative-elastography (QE-EUS), or both? United Eur. Gastroenterol. J. 2017, 5, 236–246.

- Gong, T.T.; Hu, D.M.; Zhu, Q. Contrast-enhanced EUS for differential diagnosis of pancreatic mass lesions: A meta-analysis. Gastrointest. Endosc. 2012, 76, 301–309.

- Facciorusso, A.; Mohan, B.P.; Crino, S.F.; Ofosu, A.; Ramai, D.; Lisotti, A.; Chandan, S.; Fusaroli, P. Contrast-enhanced harmonic endoscopic ultrasound-guided fine-needle aspiration versus standard fine-needle aspiration in pancreatic masses: A meta-analysis. Expert Rev. Gastroenterol. Hepatol. 2021, 15, 821–828.

- Iglesias-Garcia, J.; de la Iglesia-Garcia, D.; Larino-Noia, J.; Dominguez-Munoz, J.E. Endoscopic Ultrasound (EUS) Guided Elastography. Diagnostics 2023, 13, 1686.

- Dietrich, C.F.; Burmeister, S.; Hollerbach, S.; Arcidiacono, P.G.; Braden, B.; Fusaroli, P.; Hocke, M.; Iglesias-Garcia, J.; Kitano, M.; Larghi, A.; et al. Do we need elastography for EUS? Endosc. Ultrasound 2020, 9, 284–290.

- Ignee, A.; Jenssen, C.; Arcidiacono, P.G.; Hocke, M.; Moller, K.; Saftoiu, A.; Will, U.; Fusaroli, P.; Iglesias-Garcia, J.; Ponnudurai, R.; et al. Endoscopic ultrasound elastography of small solid pancreatic lesions: A multicenter study. Endoscopy 2018, 50, 1071–1079.

- Vilmann, P.; Jacobsen, G.K.; Henriksen, F.W.; Hancke, S. Endoscopic ultrasonography with guided fine needle aspiration biopsy in pancreatic disease. Gastrointest. Endosc. 1992, 38, 172–173.

- Pouw, R.E.; Barret, M.; Biermann, K.; Bisschops, R.; Czako, L.; Gecse, K.B.; de Hertogh, G.; Hucl, T.; Iacucci, M.; Jansen, M.; et al. Endoscopic tissue sampling–Part 1: Upper gastrointestinal and hepatopancreatobiliary tracts. European Society of Gastrointestinal Endoscopy (ESGE) Guideline. Endoscopy 2021, 53, 1174–1188.

- Tempero, M.A.; Malafa, M.P.; Al-Hawary, M.; Asbun, H.; Bain, A.; Behrman, S.W.; Benson, A.B., 3rd; Binder, E.; Cardin, D.B.; Cha, C.; et al. Pancreatic Adenocarcinoma, Version 2.2017, NCCN Clinical Practice Guidelines in Oncology. J. Natl. Compr. Canc. Netw. 2017, 15, 1028–1061.

- Hewitt, M.J.; McPhail, M.J.; Possamai, L.; Dhar, A.; Vlavianos, P.; Monahan, K.J. EUS-guided FNA for diagnosis of solid pancreatic neoplasms: A meta-analysis. Gastrointest. Endosc. 2012, 75, 319–331.

- Chen, G.; Liu, S.; Zhao, Y.; Dai, M.; Zhang, T. Diagnostic accuracy of endoscopic ultrasound-guided fine-needle aspiration for pancreatic cancer: A meta-analysis. Pancreatology 2013, 13, 298–304.

- de Moura, D.T.H.; McCarty, T.R.; Jirapinyo, P.; Ribeiro, I.B.; Hathorn, K.E.; Madruga-Neto, A.C.; Lee, L.S.; Thompson, C.C. Evaluation of endoscopic ultrasound fine-needle aspiration versus fine-needle biopsy and impact of rapid on-site evaluation for pancreatic masses. Endosc. Int. Open 2020, 8, E738–E747.

- Hassan, G.M.; Laporte, L.; Paquin, S.C.; Menard, C.; Sahai, A.V.; Masse, B.; Trottier, H. Endoscopic Ultrasound Guided Fine Needle Aspiration versus Endoscopic Ultrasound Guided Fine Needle Biopsy for Pancreatic Cancer Diagnosis: A Systematic Review and Meta-Analysis. Diagnostics 2022, 12, 2951.

- Facciorusso, A.; Bajwa, H.S.; Menon, K.; Buccino, V.R.; Muscatiello, N. Comparison between 22G aspiration and 22G biopsy needles for EUS-guided sampling of pancreatic lesions: A meta-analysis. Endosc. Ultrasound 2020, 9, 167–174.

- Gkolfakis, P.; Crino, S.F.; Tziatzios, G.; Ramai, D.; Papaefthymiou, A.; Papanikolaou, I.S.; Triantafyllou, K.; Arvanitakis, M.; Lisotti, A.; Fusaroli, P.; et al. Comparative diagnostic performance of end-cutting fine-needle biopsy needles for EUS tissue sampling of solid pancreatic masses: A network meta-analysis. Gastrointest. Endosc. 2022, 95, 1067–1077.

- Cho, J.H.; Kim, J.; Lee, H.S.; Ryu, S.J.; Jang, S.I.; Kim, E.J.; Kang, H.; Lee, S.S.; Song, T.J.; Bang, S. Factors Influencing the Diagnostic Performance of Repeat Endoscopic Ultrasound-Guided Fine-Needle Aspiration/Biopsy after the First Inconclusive Diagnosis of Pancreatic Solid Lesions. Gut Liver 2023, 17.

- Iglesias-Garcia, J.; Dominguez-Munoz, J.E.; Abdulkader, I.; Larino-Noia, J.; Eugenyeva, E.; Lozano-Leon, A.; Forteza-Vila, J. Influence of on-site cytopathology evaluation on the diagnostic accuracy of endoscopic ultrasound-guided fine needle aspiration (EUS-FNA) of solid pancreatic masses. Am. J. Gastroenterol. 2011, 106, 1705–1710.

- Yang, F.; Liu, E.; Sun, S. Rapid on-site evaluation (ROSE) with EUS-FNA: The ROSE looks beautiful. Endosc. Ultrasound 2019, 8, 283–287.

- Spadaccini, M.; Koleth, G.; Emmanuel, J.; Khalaf, K.; Facciorusso, A.; Grizzi, F.; Hassan, C.; Colombo, M.; Mangiavillano, B.; Fugazza, A.; et al. Enhanced endoscopic ultrasound imaging for pancreatic lesions: The road to artificial intelligence. World J. Gastroenterol. 2022, 28, 3814–3824.

- Tonozuka, R.; Mukai, S.; Itoi, T. The Role of Artificial Intelligence in Endoscopic Ultrasound for Pancreatic Disorders. Diagnostics 2020, 11, 18.

- Wang, L.M.; Ang, T.L. Optimizing endoscopic ultrasound guided fine needle aspiration through artificial intelligence. J. Gastroenterol. Hepatol. 2023, 38, 839–840.

- Hamet, P.; Tremblay, J. Artificial intelligence in medicine. Metabolism 2017, 69, S36–S40.

- Pohl, J. Artificial Superintelligence: Extinction or Nirvana? In Proceedings of the InterSymp-2015, IIAS, 27th International Conference on Systems Research, Informatics, and Cybernetics, Baden-Baden, Germany, 3 August 2015.

- Abonamah, A.A.; Tariq, M.U.; Shilbayeh, S. On the Commoditization of Artificial Intelligence. Front. Psychol. 2021, 12, 696346.

- Jeste, D.V.; Graham, S.A.; Nguyen, T.T.; Depp, C.A.; Lee, E.E.; Kim, H.C. Beyond artificial intelligence: Exploring artificial wisdom. Int. Psychogeriatr. 2020, 32, 993–1001.

- Bostrom, N. Superintelligence; Dunod: Malakoff, France, 2017.

- Robert, C.J.C. Machine Learning, a Probabilistic Perspective; The MIT Press: Cambridge, MA, USA, 2014; Volume 27, pp. 62–63.

- Choi, R.Y.; Coyner, A.S.; Kalpathy-Cramer, J.; Chiang, M.F.; Campbell, J.P. Introduction to Machine Learning, Neural Networks, and Deep Learning. Transl. Vis. Sci. Technol. 2020, 9, 14.

- Yamashita, R.; Nishio, M.; Do, R.K.G.; Togashi, K. Convolutional neural networks: An overview and application in radiology. Insights Imaging 2018, 9, 611–629.

- Yin, M.; Liu, L.; Gao, J.; Lin, J.; Qu, S.; Xu, W.; Liu, X.; Xu, C.; Zhu, J. Deep learning for pancreatic diseases based on endoscopic ultrasound: A systematic review. Int. J. Med. Inform. 2023, 174, 105044.

- Zhang, S.; Zhou, Y.; Tang, D.; Ni, M.; Zheng, J.; Xu, G.; Peng, C.; Shen, S.; Zhan, Q.; Wang, X.; et al. A deep learning-based segmentation system for rapid onsite cytologic pathology evaluation of pancreatic masses: A retrospective, multicenter, diagnostic study. EBioMedicine 2022, 80, 104022.

- Mahmoudi, T.; Kouzahkanan, Z.M.; Radmard, A.R.; Kafieh, R.; Salehnia, A.; Davarpanah, A.H.; Arabalibeik, H.; Ahmadian, A. Segmentation of pancreatic ductal adenocarcinoma (PDAC) and surrounding vessels in CT images using deep convolutional neural networks and texture descriptors. Sci. Rep. 2022, 12, 3092.

- Beltrami, E.J.; Brown, A.C.; Salmon, P.J.M.; Leffell, D.J.; Ko, J.M.; Grant-Kels, J.M. Artificial intelligence in the detection of skin cancer. J. Am. Acad. Dermatol. 2022, 87, 1336–1342.

- Khened, M.; Kori, A.; Rajkumar, H.; Krishnamurthi, G.; Srinivasan, B. A generalized deep learning framework for whole-slide image segmentation and analysis. Sci. Rep. 2021, 11, 11579.

- Bera, K.; Schalper, K.A.; Rimm, D.L.; Velcheti, V.; Madabhushi, A. Artificial intelligence in digital pathology—New tools for diagnosis and precision oncology. Nat. Rev. Clin. Oncol. 2019, 16, 703–715.

- Mubarak, M. Move from Traditional Histopathology to Digital and Computational Pathology: Are we Ready? Indian J. Nephrol. 2022, 32, 414–415.

- Nam, S.; Chong, Y.; Jung, C.K.; Kwak, T.Y.; Lee, J.Y.; Park, J.; Rho, M.J.; Go, H. Introduction to digital pathology and computer-aided pathology. J. Pathol. Transl. Med. 2020, 54, 125–134.

- Jahn, S.W.; Plass, M.; Moinfar, F. Digital Pathology: Advantages, Limitations and Emerging Perspectives. J. Clin. Med. 2020, 9, 3697.

- Van Es, S.L. Digital pathology: Semper ad meliora. Pathology 2019, 51, 1–10.

- Pallua, J.D.; Brunner, A.; Zelger, B.; Schirmer, M.; Haybaeck, J. The future of pathology is digital. Pathol. Res. Pract. 2020, 216, 153040.

- Forsch, S.; Klauschen, F.; Hufnagl, P.; Roth, W. Artificial Intelligence in Pathology. Dtsch. Arztebl. Int. 2021, 118, 194–204.

- Loewenstein, Y.; Raviv, O.; Ahissar, M. Dissecting the Roles of Supervised and Unsupervised Learning in Perceptual Discrimination Judgments. J. Neurosci. 2021, 41, 757–765.

- Linardatos, P.; Papastefanopoulos, V.; Kotsiantis, S. Explainable AI: A Review of Machine Learning Interpretability Methods. Entropy 2020, 23, 18.

- Gur, S.; Ali, A.; Wolf, L. Visualization of Supervised and Self-Supervised Neural Networks via Attribution Guided Factorization. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtually Held, 2–9 February 2021; AAAI: Washington, DC, USA, 2021.

- Benjamens, S.; Dhunnoo, P.; Mesko, B. The state of artificial intelligence-based FDA-approved medical devices and algorithms: An online database. NPJ Digit. Med. 2020, 3, 118.

- Hashimoto, Y. 44 Prospective Comparison Study of EUS-FNA Onsite Cytology Diagnosis by Pathologist versus Our Designed Deep Learning Algorhythm in Suspected Pancreatic Cancer. Gastroenterology 2020, 158, S17.

- Patel, J.; Bhakta, D.; Elzamly, S.; Moreno, V.; Joldoshova, A.; Ghosh, A.; Shitawi, M.; Lin, M.; Aakash, N.; Guha, S. ID: 3526830 Artificial Intelligence Based Rapid Onsite Cytopathology Evaluation (ROSE-AID™) vs. Physician Interpretation of Cytopathology Images of Endoscopic Ultrasound-Guided Fine-Needle Aspiration (EUS-FNA) of Pancreatic Solid Lesions. Gastrointest. Endosc. 2021, 93, AB193–AB194.

- Yeaton, P.; Sears, R.J.; Ledent, T.; Salmon, I.; Kiss, R.; Decaestecker, C. Discrimination between chronic pancreatitis and pancreatic adenocarcinoma using artificial intelligence-related algorithms based on image cytometry-generated variables. Cytometry 1998, 32, 309–316.

- Biesterfeld, S.; Deacu, L. DNA image cytometry in the differential diagnosis of benign and malignant lesions of the bile duct, the pancreatic duct and the papilla of Vater. Anticancer Res. 2009, 29, 1579–1584.

- Okon, K.; Tomaszewska, R.; Nowak, K.; Stachura, J. Application of neural networks to the classification of pancreatic intraductal proliferative lesions. Anal. Cell. Pathol. 2001, 23, 129–136.

- Bloom, G.; Yang, I.V.; Boulware, D.; Kwong, K.Y.; Coppola, D.; Eschrich, S.; Quackenbush, J.; Yeatman, T.J. Multi-platform, multi-site, microarray-based human tumor classification. Am. J. Pathol. 2004, 164, 9–16.

- Song, J.W.; Lee, J.H. New morphological features for grading pancreatic ductal adenocarcinomas. Biomed. Res. Int. 2013, 2013, 175271.

- Momeni-Boroujeni, A.; Yousefi, E.; Somma, J. Computer-assisted cytologic diagnosis in pancreatic FNA: An application of neural networks to image analysis. Cancer Cytopathol. 2017, 125, 926–933.

- Hashimoto, Y.; Ohno, I.; Imaoka, H.; Takahashi, H.; Ikeda, M. Mo1296 Preliminary Result of Computer Aided Diagnosis (CAD) Performance Using Deep Learning in EUS-FNA Cytology of Pancreatic Cancer. Gastrointest. Endosc. 2018, 87, AB434.

- Halicek, M.; Fabelo, H.; Ortega, S.; Callico, G.M.; Fei, B. In-Vivo and Ex-Vivo Tissue Analysis through Hyperspectral Imaging Techniques: Revealing the Invisible Features of Cancer. Cancers 2019, 11, 756.

- Qin, X.; Zhang, M.; Zhou, C.; Ran, T.; Pan, Y.; Deng, Y.; Xie, X.; Zhang, Y.; Gong, T.; Zhang, B.; et al. A deep learning model using hyperspectral image for EUS-FNA cytology diagnosis in pancreatic ductal adenocarcinoma. Cancer Med. 2023.

- Lin, R.; Sheng, L.P.; Han, C.Q.; Guo, X.W.; Wei, R.G.; Ling, X.; Ding, Z. Application of artificial intelligence to digital-rapid on-site cytopathology evaluation during endoscopic ultrasound-guided fine needle aspiration: A proof-of-concept study. J. Gastroenterol. Hepatol. 2023, 38, 883–887.

- Kong, F.; Kong, X.; Zhu, J.; Sun, T.; Du, Y.; Wang, K.; Jin, Z.; Li, Z.; Wang, D. A prospective comparison of conventional cytology and digital image analysis for the identification of pancreatic malignancy in patients undergoing EUS-FNA. Endosc. Ultrasound 2019, 8, 269–276.

- Thosani, N.; Patel, J.; Moreno, V.; Bhakta, D.; Patil, P.; Guha, S.; Zhang, S. Development and validation of artificial intelligence based rapid onsite cytopathology evaluation (ROSE-AID™) for endoscopic ultrasound-guided fine-needle aspiration (EUS-FNA) of pancreatic solid lesions. Gastroenterology 2021, 160, S-17.

- Naito, Y.; Tsuneki, M.; Fukushima, N.; Koga, Y.; Higashi, M.; Notohara, K.; Aishima, S.; Ohike, N.; Tajiri, T.; Yamaguchi, H.; et al. A deep learning model to detect pancreatic ductal adenocarcinoma on endoscopic ultrasound-guided fine-needle biopsy. Sci. Rep. 2021, 11, 8454.

- Yamada, R.; Nakane, K.; Kadoya, N.; Matsuda, C.; Imai, H.; Tsuboi, J.; Hamada, Y.; Tanaka, K.; Tawara, I.; Nakagawa, H. Development of “Mathematical Technology for Cytopathology”, an Image Analysis Algorithm for Pancreatic Cancer. Diagnostics 2022, 12, 1149.

- Ishikawa, T.; Hayakawa, M.; Suzuki, H.; Ohno, E.; Mizutani, Y.; Iida, T.; Fujishiro, M.; Kawashima, H.; Hotta, K. Development of a Novel Evaluation Method for Endoscopic Ultrasound-Guided Fine-Needle Biopsy in Pancreatic Diseases Using Artificial Intelligence. Diagnostics 2022, 12, 434.

- Mohamadnejad, M.; Mullady, D.; Early, D.S.; Collins, B.; Marshall, C.; Sams, S.; Yen, R.; Rizeq, M.; Romanas, M.; Nawaz, S.; et al. Increasing Number of Passes Beyond 4 Does Not Increase Sensitivity of Detection of Pancreatic Malignancy by Endoscopic Ultrasound-Guided Fine-Needle Aspiration. Clin. Gastroenterol. Hepatol. 2017, 15, 1071–1078.

- Cheng, B.; Zhang, Y.; Chen, Q.; Sun, B.; Deng, Z.; Shan, H.; Dou, L.; Wang, J.; Li, Y.; Yang, X.; et al. Analysis of Fine-Needle Biopsy vs Fine-Needle Aspiration in Diagnosis of Pancreatic and Abdominal Masses: A Prospective, Multicenter, Randomized Controlled Trial. Clin. Gastroenterol. Hepatol. 2018, 16, 1314–1321.

- van Riet, P.A.; Cahen, D.L.; Poley, J.W.; Bruno, M.J. Mapping international practice patterns in EUS-guided tissue sampling: Outcome of a global survey. Endosc. Int. Open 2016, 4, E360–E370.

- Iwashita, T.; Yasuda, I.; Mukai, T.; Doi, S.; Nakashima, M.; Uemura, S.; Mabuchi, M.; Shimizu, M.; Hatano, Y.; Hara, A.; et al. Macroscopic on-site quality evaluation of biopsy specimens to improve the diagnostic accuracy during EUS-guided FNA using a 19-gauge needle for solid lesions: A single-center prospective pilot study (MOSE study). Gastrointest. Endosc. 2015, 81, 177–185.

- Kitano, M.; Sakamoto, H.; Kudo, M. Contrast-enhanced endoscopic ultrasound. Dig. Endosc. 2014, 26 (Suppl. S1), 79–85.

- Otsuka, Y.; Kamata, K.; Kudo, M. Contrast-Enhanced Harmonic Endoscopic Ultrasound-Guided Puncture for the Patients with Pancreatic Masses. Diagnostics 2023, 13, 1039.

- Sugimoto, M.; Takagi, T.; Suzuki, R.; Konno, N.; Asama, H.; Watanabe, K.; Nakamura, J.; Kikuchi, H.; Waragai, Y.; Takasumi, M.; et al. Contrast-enhanced harmonic endoscopic ultrasonography in gallbladder cancer and pancreatic cancer. Fukushima J. Med. Sci. 2017, 63, 39–45.

- Hou, X.; Jin, Z.; Xu, C.; Zhang, M.; Zhu, J.; Jiang, F.; Li, Z. Contrast-enhanced harmonic endoscopic ultrasound-guided fine-needle aspiration in the diagnosis of solid pancreatic lesions: A retrospective study. PLoS ONE 2015, 10, e0121236.

- Kitano, M.; Kamata, K.; Imai, H.; Miyata, T.; Yasukawa, S.; Yanagisawa, A.; Kudo, M. Contrast-enhanced harmonic endoscopic ultrasonography for pancreatobiliary diseases. Dig. Endosc. 2015, 27 (Suppl. S1), 60–67.

- Imazu, H.; Kanazawa, K.; Mori, N.; Ikeda, K.; Kakutani, H.; Sumiyama, K.; Hino, S.; Ang, T.L.; Omar, S.; Tajiri, H. Novel quantitative perfusion analysis with contrast-enhanced harmonic EUS for differentiation of autoimmune pancreatitis from pancreatic carcinoma. Scand. J. Gastroenterol. 2012, 47, 853–860.

- Saftoiu, A.; Vilmann, P.; Dietrich, C.F.; Iglesias-Garcia, J.; Hocke, M.; Seicean, A.; Ignee, A.; Hassan, H.; Streba, C.T.; Ioncica, A.M.; et al. Quantitative contrast-enhanced harmonic EUS in differential diagnosis of focal pancreatic masses (with videos). Gastrointest. Endosc. 2015, 82, 59–69.

- Tang, A.; Tian, L.; Gao, K.; Liu, R.; Hu, S.; Liu, J.; Xu, J.; Fu, T.; Zhang, Z.; Wang, W.; et al. Contrast-enhanced harmonic endoscopic ultrasound (CH-EUS) MASTER: A novel deep learning-based system in pancreatic mass diagnosis. Cancer Med. 2023, 12, 7962–7973.

- Wadden, J.J. Defining the undefinable: The black box problem in healthcare artificial intelligence. J. Med. Ethics 2021, 48, 764–768.

- van der Velden, B.H.M.; Kuijf, H.J.; Gilhuijs, K.G.A.; Viergever, M.A. Explainable artificial intelligence (XAI) in deep learning-based medical image analysis. Med. Image Anal. 2022, 79, 102470.

- Pierce, R.L.; Van Biesen, W.; Van Cauwenberge, D.; Decruyenaere, J.; Sterckx, S. Explainability in medicine in an era of AI-based clinical decision support systems. Front. Genet. 2022, 13, 903600.

- Dahiya, D.S.; Al-Haddad, M.; Chandan, S.; Gangwani, M.K.; Aziz, M.; Mohan, B.P.; Ramai, D.; Canakis, A.; Bapaye, J.; Sharma, N. Artificial Intelligence in Endoscopic Ultrasound for Pancreatic Cancer: Where Are We Now and What Does the Future Entail? J. Clin. Med. 2022, 11, 7476.

- Smistad, E.; Falch, T.L.; Bozorgi, M.; Elster, A.C.; Lindseth, F. Medical image segmentation on GPUs—A comprehensive review. Med. Image Anal. 2015, 20, 1–18.

- Hu, X.; Yang, W.; Wen, H.; Liu, Y.; Peng, Y. A Lightweight 1-D Convolution Augmented Transformer with Metric Learning for Hyperspectral Image Classification. Sensors 2021, 21, 1751.

- Qu, L.; Liu, S.; Liu, X.; Wang, M.; Song, Z. Towards label-efficient automatic diagnosis and analysis: A comprehensive survey of advanced deep learning-based weakly-supervised, semi-supervised and self-supervised techniques in histopathological image analysis. Phys. Med. Biol. 2022, 67, 20TR01.

- Sarker, I.H. Deep Learning: A Comprehensive Overview on Techniques, Taxonomy, Applications and Research Directions. SN Comput. Sci. 2021, 2, 420.

- Lee, I.; Kim, D.; Wee, D.; Lee, S. An Efficient Human Instance-Guided Framework for Video Action Recognition. Sensors 2021, 21, 8309.

- Sinz, F.H.; Pitkow, X.; Reimer, J.; Bethge, M.; Tolias, A.S. Engineering a Less Artificial Intelligence. Neuron 2019, 103, 967–979.

| Year/Journal | Author | Ref. | Purpose | Data Source | Sample Size | Algorithm | Diagnostic Performance |
|---|---|---|---|---|---|---|---|
| 2017/Cancer Cytopathology | Momeni-Boroujeni et al. | [61] | Distinguish benign from malignant pancreatic cytology | EUS-FNA | 277 images from 75 pancreatic FNA cases | MNN | Benign and malignant categories: accuracy 100%; atypical cases: accuracy 77% |
| 2018/Gastrointestinal Endoscopy | Hashimoto et al. | [62] | PDAC identification | EUS-FNA | 450 images | CNN | Accuracy 80% |
| 2019/Endoscopic Ultrasound | Kong et al. | [66] | PC detection | EUS-FNA | 142 cases | DIA | Accuracy 83%, comparable to conventional cytology (78%) |
| 2020/Gastroenterology | Hashimoto et al. | [54] | Distinguish benign from malignant in ROSE | EUS-FNA | Retrospectively collected: 1440 cytology specimens; retrospectively validated: 400 cytology specimens | CNN | Accuracy 93–94%, comparable to an onsite pathologist (98–99%) |
| 2021/Gastroenterology | Thosani et al. | [67] | Assessment of specimen adequacy and identification of SPLs in ROSE | EUS-FNA | 400 cases for training; 77 images for validation | ML | Onsite adequacy: accuracy 87.25%; cytopathological diagnosis: accuracy 81.8% |
| 2021/Gastrointestinal Endoscopy | Patel et al. | [55] | Comparison of AI with subspecialty physicians for identification of SPLs | EUS-FNA | 77 images | ML | Accuracy 87%, on par with or superior to most physicians (36–96%) |
| 2021/Scientific Reports | Naito et al. | [68] | PDAC detection in WSIs | EUS-FNB | 532 WSIs | CNN | Accuracy 94.17%; AUC 0.9836 |
| 2022/Diagnostics (Basel) | Yamada et al. | [69] | Distinguish PDAC from benign pancreatic cytology | EUS-FNA/B | 246 specimens | DL | Accuracy 74% |
| 2022/Diagnostics (Basel) | Ishikawa et al. | [70] | Evaluation of EUS-FNB specimen diagnosability in MOSE | EUS-FNB | 271 specimens from 159 patients | CNN | Accuracy 84.4%, comparable to endoscopists (82.1–83.2%) |
| 2022/EBioMedicine | Zhang et al. | [39] | Identification of PDAC in ROSE | EUS-FNA | 6667 images from 194 cases | DCNN | Accuracy 94.4% (AUC 0.958), comparable to cytopathologists (91.7%) |
| 2022/Journal of Gastroenterology and Hepatology | Lin et al. | [65] | Detection of cancer cells in pancreatic or other celiac lesions in ROSE | EUS-FNA | 1160 images from 51 cases | CNN | Internal validation set: accuracy 83.4%; external validation set: accuracy 88.7% |
| 2023/Cancer Medicine | Qin et al. | [64] | Distinguish benign from malignant masses via pancreatic cytology | EUS-FNA | 1913 images from 72 cases | CNN | Internal test set: accuracy 92.04%; external test set: accuracy 92.27% |
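
Every study in the table above reports accuracy, and two (Naito et al. and Zhang et al.) also report the area under the ROC curve (AUC). As a minimal sketch of what these two figures measure, the Python example below computes both from per-case reference labels (1 = malignant, 0 = benign) and model scores. The labels, scores, 0.5 decision threshold, and the function names `accuracy` and `auc` are hypothetical illustrations, not data or code from any cited study; AUC is computed here with the Mann–Whitney pairwise formulation rather than by integrating an ROC curve.

```python
from typing import Sequence


def accuracy(labels: Sequence[int], preds: Sequence[int]) -> float:
    """Fraction of cases whose predicted class matches the reference label."""
    if len(labels) != len(preds) or not labels:
        raise ValueError("labels and preds must be non-empty and equal in length")
    correct = sum(1 for y, p in zip(labels, preds) if y == p)
    return correct / len(labels)


def auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Area under the ROC curve via the Mann-Whitney U statistic.

    Equals the probability that a randomly chosen malignant case (label 1)
    receives a higher score than a randomly chosen benign case (label 0);
    tied scores count as one half.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one malignant and one benign case")
    wins = 0.0
    for sp in pos:
        for sn in neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical toy data (not from any cited study): 1 = malignant, 0 = benign.
labels = [1, 1, 1, 0, 0, 0, 1, 0]
scores = [0.92, 0.81, 0.40, 0.30, 0.55, 0.10, 0.77, 0.20]
threshold = 0.5  # hypothetical operating point for turning scores into classes
preds = [1 if s >= threshold else 0 for s in scores]

print(f"accuracy = {accuracy(labels, preds):.3f}")
print(f"AUC = {auc(labels, scores):.3f}")
```

With these toy values, 6 of 8 cases are classified correctly at the 0.5 threshold (accuracy 0.75), and 15 of the 16 malignant/benign pairs are ranked in the right order (AUC 0.9375). Accuracy depends on the chosen threshold, whereas AUC summarizes how well the scores rank cases across all thresholds.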

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Qin, X.; Ran, T.; Chen, Y.; Zhang, Y.; Wang, D.; Zhou, C.; Zou, D. Artificial Intelligence in Endoscopic Ultrasonography-Guided Fine-Needle Aspiration/Biopsy (EUS-FNA/B) for Solid Pancreatic Lesions: Opportunities and Challenges. Diagnostics 2023, 13, 3054. https://doi.org/10.3390/diagnostics13193054
