Early Detection of Heart Failure with Autonomous AI-Based Model Using Chest Radiographs: A Multicenter Study

Abstract

1. Introduction

2. Materials and Methods

2.1. Patients

2.2. Chest Radiographs

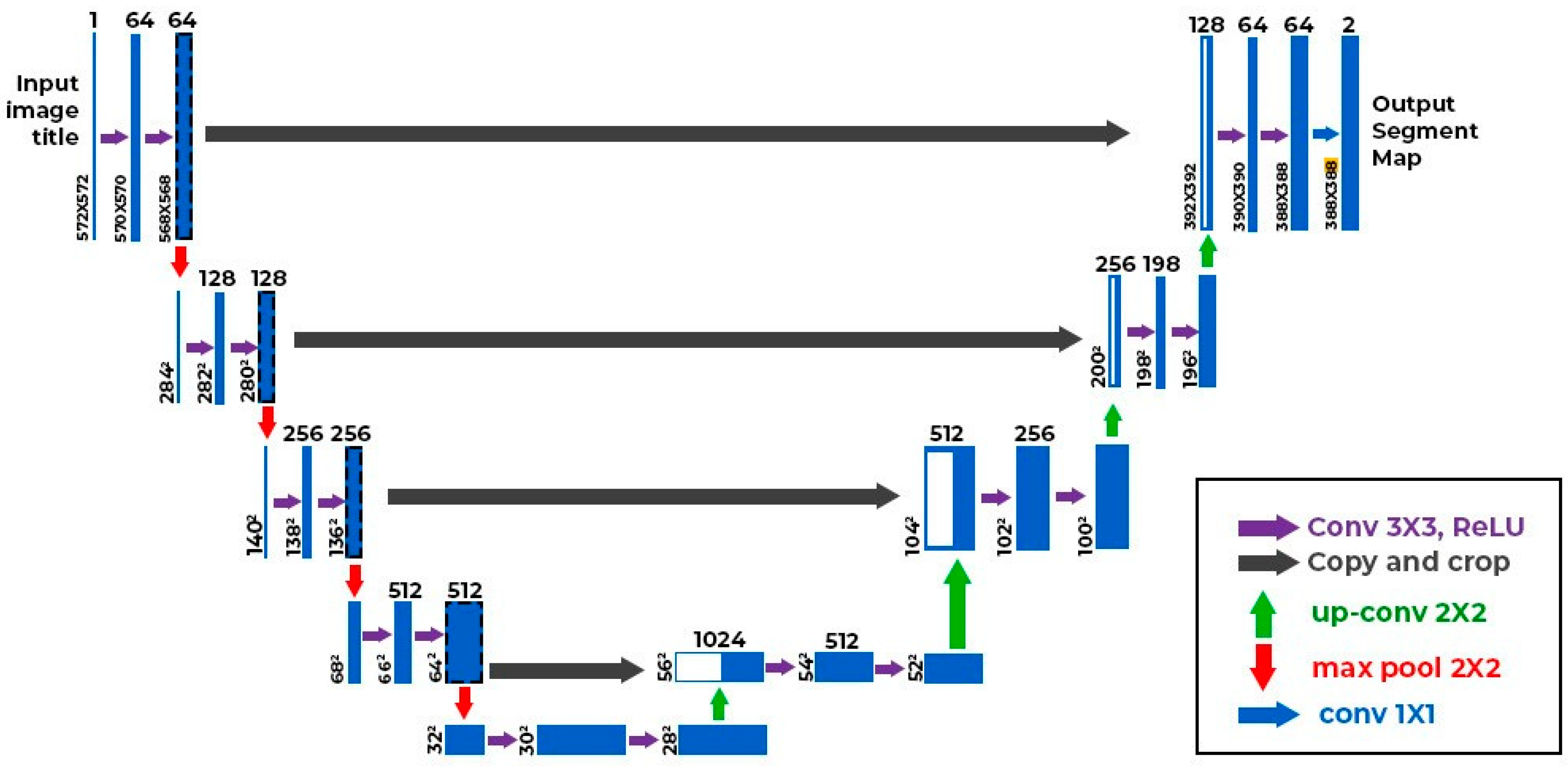

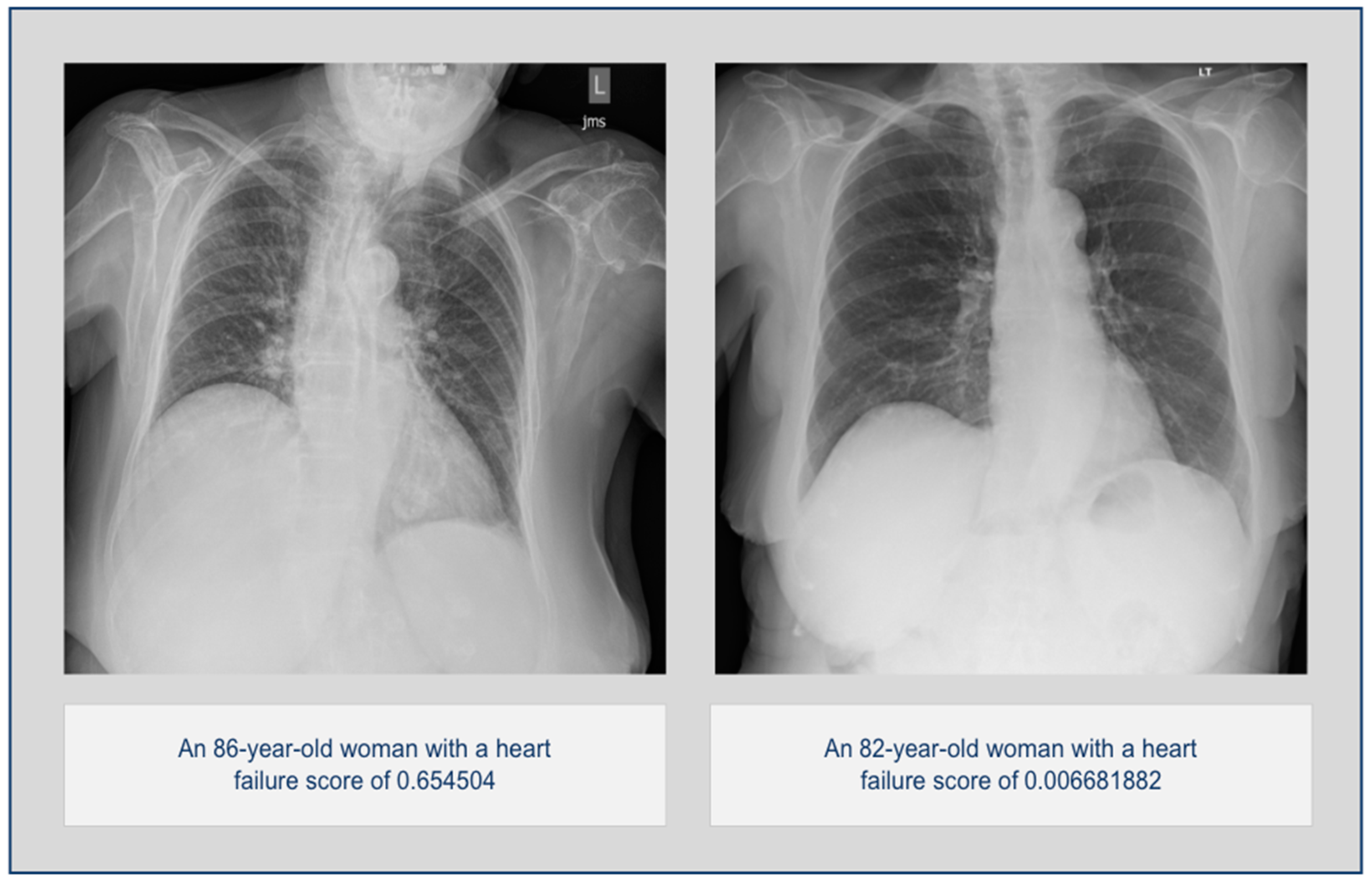

2.3. AI Algorithm

2.4. Statistical Analysis

3. Results

3.1. Overall Statistics

3.2. Stratified Analysis

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

<3 months group (n = 831; negatives:positives, 704:127)

| Model Inference | Disease Positive (Ground Truth) | Disease Negative (Ground Truth) |
|---|---|---|
| Positive | 114 | 222 |
| Negative | 13 | 482 |

4–6 months group (n = 805; negatives:positives, 704:101)

| Model Inference | Disease Positive (Ground Truth) | Disease Negative (Ground Truth) |
|---|---|---|
| Positive | 86 | 222 |
| Negative | 15 | 482 |

7–9 months group (n = 812; negatives:positives, 704:108)

| Model Inference | Disease Positive (Ground Truth) | Disease Negative (Ground Truth) |
|---|---|---|
| Positive | 83 | 222 |
| Negative | 25 | 482 |

10–12 months group (n = 781; negatives:positives, 704:77)

| Model Inference | Disease Positive (Ground Truth) | Disease Negative (Ground Truth) |
|---|---|---|
| Positive | 53 | 222 |
| Negative | 24 | 482 |

Low ejection fraction group (n = 753; negatives:positives, 704:49)

| Model Inference | Disease Positive (Ground Truth) | Disease Negative (Ground Truth) |
|---|---|---|
| Positive | 42 | 222 |
| Negative | 7 | 482 |

Mildly reduced ejection fraction group (n = 740; negatives:positives, 704:36)

| Model Inference | Disease Positive (Ground Truth) | Disease Negative (Ground Truth) |
|---|---|---|
| Positive | 29 | 222 |
| Negative | 7 | 482 |

Normal ejection fraction group (n = 1019; negatives:positives, 704:315)

| Model Inference | Disease Positive (Ground Truth) | Disease Negative (Ground Truth) |
|---|---|---|
| Positive | 257 | 222 |
| Negative | 58 | 482 |
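The confusion matrices above contain everything needed to derive the usual diagnostic accuracy measures. The following is a minimal sketch, not the authors' analysis code: the names `ConfusionMatrix`, `GROUPS`, and `summarize` are hypothetical, the group labels are shortened for readability, and the metric definitions are the standard textbook ones. Only the cell counts are taken from the tables.

```python
from typing import Dict, NamedTuple


class ConfusionMatrix(NamedTuple):
    """Cell counts of a 2x2 table (model inference vs. ground truth)."""
    tp: int  # model positive, disease positive
    fp: int  # model positive, disease negative
    fn: int  # model negative, disease positive
    tn: int  # model negative, disease negative

    @property
    def sensitivity(self) -> float:
        return self.tp / (self.tp + self.fn)

    @property
    def specificity(self) -> float:
        return self.tn / (self.tn + self.fp)

    @property
    def ppv(self) -> float:
        # Positive predictive value; depends on the case mix of each group.
        return self.tp / (self.tp + self.fp)

    @property
    def npv(self) -> float:
        return self.tn / (self.tn + self.fn)


# Counts copied from the tables; the same 704 negatives appear in every group.
GROUPS: Dict[str, ConfusionMatrix] = {
    "<3 months": ConfusionMatrix(tp=114, fp=222, fn=13, tn=482),
    "4-6 months": ConfusionMatrix(tp=86, fp=222, fn=15, tn=482),
    "7-9 months": ConfusionMatrix(tp=83, fp=222, fn=25, tn=482),
    "10-12 months": ConfusionMatrix(tp=53, fp=222, fn=24, tn=482),
    "Low EF": ConfusionMatrix(tp=42, fp=222, fn=7, tn=482),
    "Mildly reduced EF": ConfusionMatrix(tp=29, fp=222, fn=7, tn=482),
    "Normal EF": ConfusionMatrix(tp=257, fp=222, fn=58, tn=482),
}


def summarize(groups: Dict[str, ConfusionMatrix]) -> Dict[str, Dict[str, float]]:
    """Return sensitivity, specificity, PPV, and NPV per group."""
    return {
        name: {
            "sensitivity": cm.sensitivity,
            "specificity": cm.specificity,
            "ppv": cm.ppv,
            "npv": cm.npv,
        }
        for name, cm in groups.items()
    }


if __name__ == "__main__":
    for name, metrics in summarize(GROUPS).items():
        row = ", ".join(f"{key}={value:.3f}" for key, value in metrics.items())
        print(f"{name}: {row}")
```

Because every group is compared against the same 704 negative cases, specificity is identical across groups in this sketch; only sensitivity and the predictive values vary with the positive counts.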

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Garza-Frias, E.; Kaviani, P.; Karout, L.; Fahimi, R.; Hosseini, S.; Putha, P.; Tadepalli, M.; Kiran, S.; Arora, C.; Robert, D.; et al. Early Detection of Heart Failure with Autonomous AI-Based Model Using Chest Radiographs: A Multicenter Study. Diagnostics 2024, 14, 1635. https://doi.org/10.3390/diagnostics14151635