Robot-Assisted 2D Fluoroscopic Needle Placement—A Phantom Study

Abstract

1. Introduction

2. Materials and Methods

2.1. Phantom

2.2. Robotic Navigation System

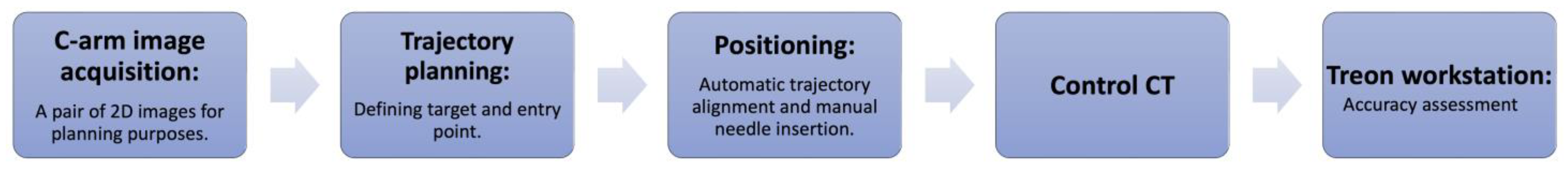

2.3. Experimental Workflow

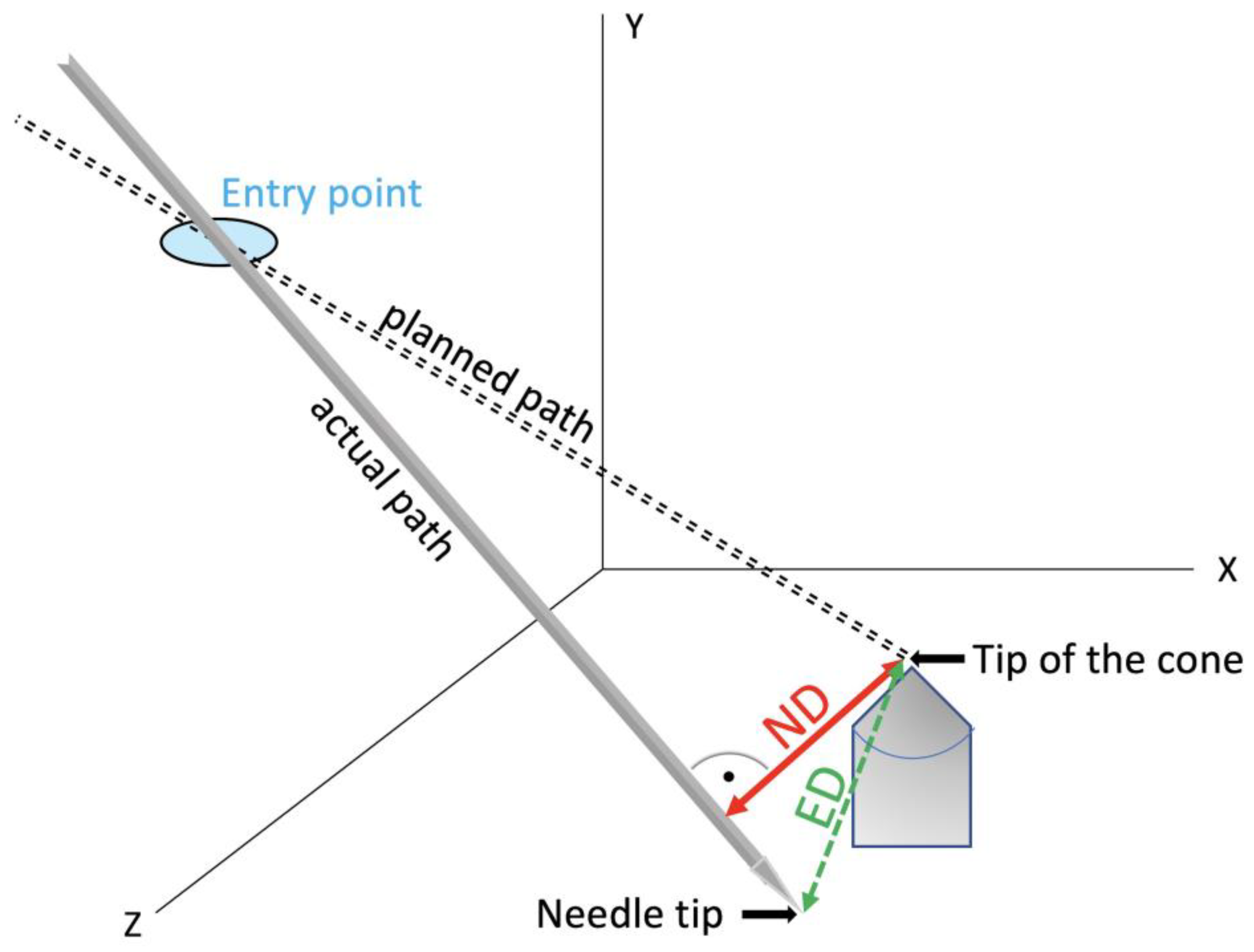

2.4. Evaluation

3. Results

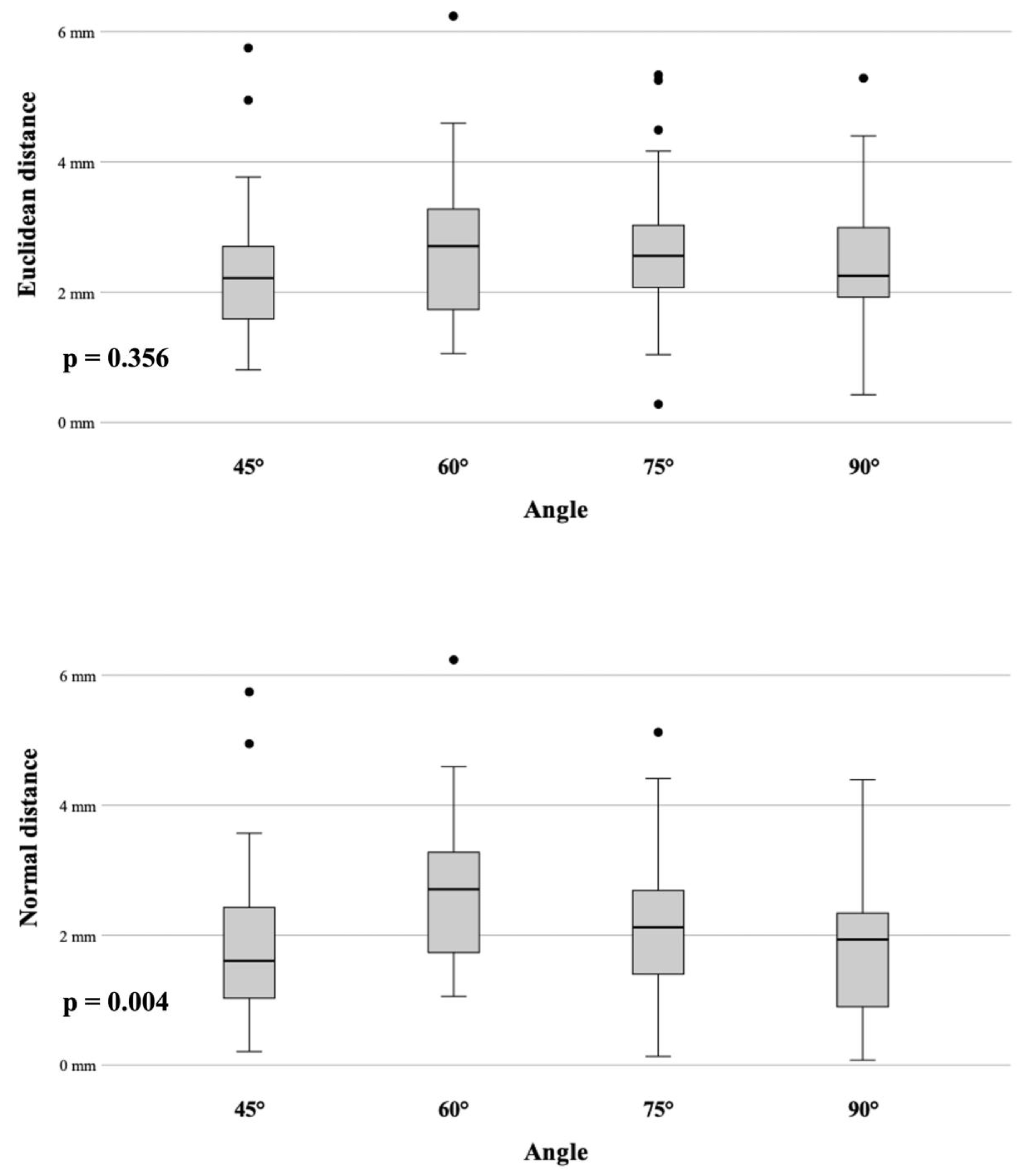

3.1. Angle Accuracy Comparison

3.2. Accuracy and Target Depth

3.3. Procedural Time

4. Discussion

4.1. Imaging Modalities

4.2. Robotic Systems

4.3. Combination of Robotic Device with C-Arm

4.4. Accuracy

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Angle | Euclidean distance, ED (mm, mean ± SD) | Normal distance, ND (mm, mean ± SD) |
|---|---|---|
| 45° | 2.32 ± 0.16 | 1.86 ± 0.19 |
| 60° | 2.68 ± 0.18 | 2.68 ± 0.18 |
| 75° | 2.65 ± 0.16 | 2.19 ± 0.18 |
| 90° | 2.44 ± 0.15 | 1.86 ± 0.18 |
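The accuracy table reports two error measures. The following is a minimal sketch that assumes the common definitions: ED is the straight-line distance from the needle tip to the target, and ND is the perpendicular distance from the target to the needle axis. It computes both from three 3D points. The point names `entry`, `tip` and `target` and the coordinates are hypothetical and are not taken from the study data.

```python
import math

Vec = tuple[float, float, float]


def _sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a: Vec, b: Vec) -> Vec:
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def _norm(a: Vec) -> float:
    return math.sqrt(a[0] ** 2 + a[1] ** 2 + a[2] ** 2)


def euclidean_distance(tip: Vec, target: Vec) -> float:
    """ED (assumed definition): straight-line distance between needle tip and target."""
    return _norm(_sub(tip, target))


def normal_distance(entry: Vec, tip: Vec, target: Vec) -> float:
    """ND (assumed definition): distance from the target to the needle axis through entry and tip."""
    axis = _sub(tip, entry)
    to_target = _sub(target, entry)
    # Point-to-line distance: |to_target x axis| / |axis|
    return _norm(_cross(to_target, axis)) / _norm(axis)


# Hypothetical coordinates in mm: needle along z, target 2 mm lateral and 1 mm beyond the tip.
entry, tip, target = (0.0, 0.0, 0.0), (0.0, 0.0, 100.0), (2.0, 0.0, 101.0)
print(f"ED = {euclidean_distance(tip, target):.2f} mm")  # ED = 2.24 mm
print(f"ND = {normal_distance(entry, tip, target):.2f} mm")  # ND = 2.00 mm
```

The tip lies on the needle axis, so under these definitions ND can never exceed ED. The two values are equal only when the tip sits exactly at target depth, with no error along the axis.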

| Angle | Image Acquisition | Trajectory Planning | Positioning | Total |
|---|---|---|---|---|
| 45° | 5.2 (2.9–7.5) | 3.5 (2.7–5.0) | 2.7 (2.3–3) | 11.4 (10.1–13.5) |
| 60° | 9.2 (4.7–16.6) | 3.1 (2.8–3.5) | 2.7 (2.4–3.1) | 15.1 (10.2–22.6) |
| 75° | 6.9 (3.1–16.9) | 3.1 (2.2–3.5) | 3.0 (2.3–3.2) | 13.0 (9.3–22.9) |
| 90° | 5.7 (2.5–10.4) | 2.7 (1.1–4.0) | 2.9 (2.4–3.1) | 11.4 (8.0–16.8) |
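The table does not say whether the central values in the procedural-time table are means or medians. If they are means, the three phases should add up to the total apart from rounding, because the mean of a sum equals the sum of the means. The sketch below checks this under that assumption. The names `PHASES` and `rounding_gap` are illustrative only. The ranges are left out because the extremes of the individual phases need not come from the same run.

```python
# Central values copied from the procedural-time table (ranges omitted; units as in the table).
PHASES = {
    # angle: (image acquisition, trajectory planning, positioning, total)
    45: (5.2, 3.5, 2.7, 11.4),
    60: (9.2, 3.1, 2.7, 15.1),
    75: (6.9, 3.1, 3.0, 13.0),
    90: (5.7, 2.7, 2.9, 11.4),
}

# Four values, each rounded to one decimal, can disagree by at most 4 x 0.05 = 0.2.
MAX_ROUNDING_GAP = 0.2


def rounding_gap(values: tuple[float, float, float, float]) -> float:
    """Reported total minus the sum of the three reported phases."""
    acquisition, planning, positioning, total = values
    return total - (acquisition + planning + positioning)


for angle, values in PHASES.items():
    gap = rounding_gap(values)
    status = "consistent" if abs(gap) <= MAX_ROUNDING_GAP + 1e-9 else "check"
    print(f"{angle} deg: gap {abs(gap):.1f} ({status})")
```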

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Scharll, Y.; Radojicic, N.; Laimer, G.; Schullian, P.; Bale, R. Robot-Assisted 2D Fluoroscopic Needle Placement—A Phantom Study. Diagnostics 2024, 14, 1723. https://doi.org/10.3390/diagnostics14161723
