Technological Frontiers in Brain Cancer: A Systematic Review and Meta-Analysis of Hyperspectral Imaging in Computer-Aided Diagnosis Systems

Abstract

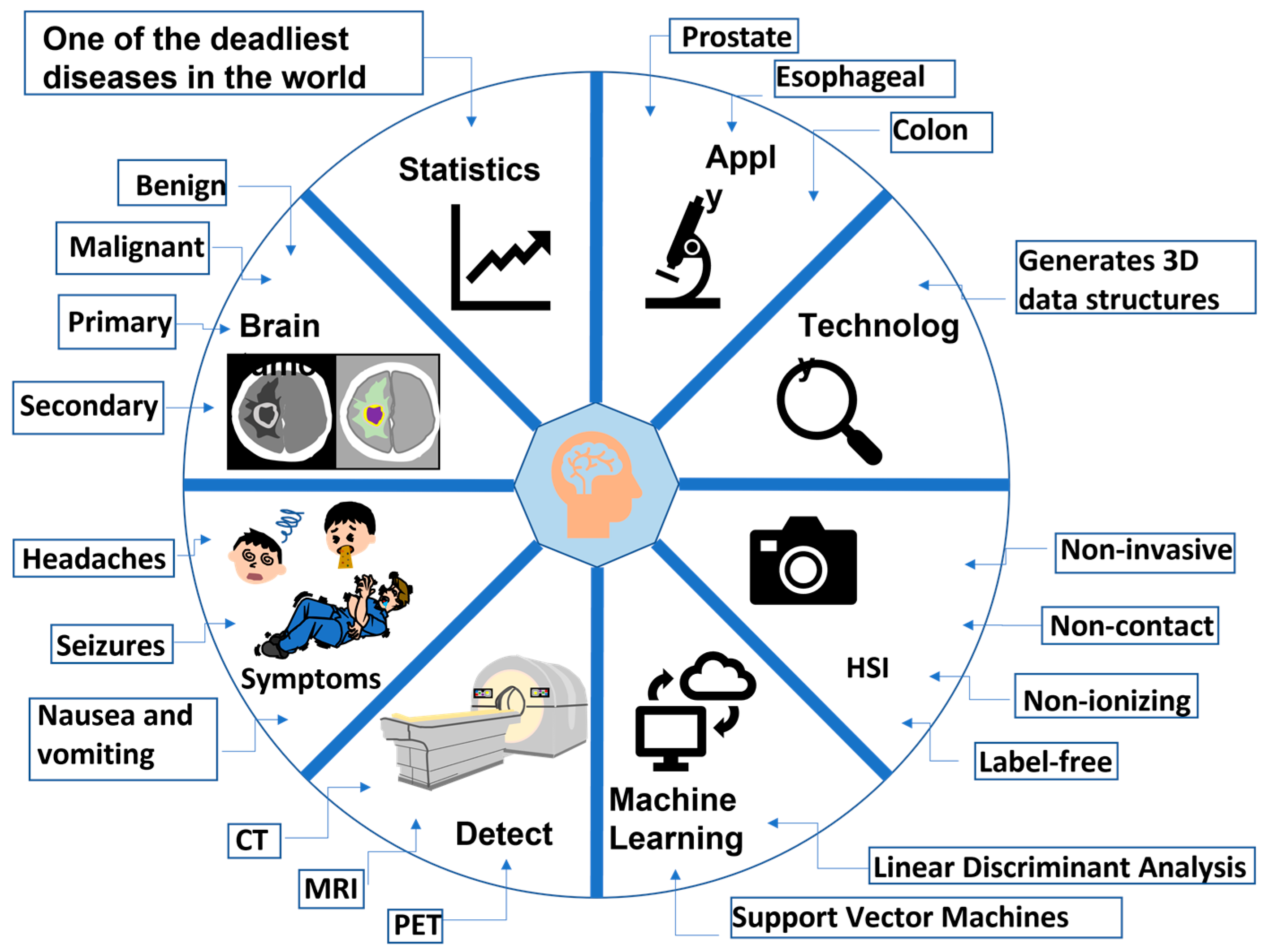

1. Introduction

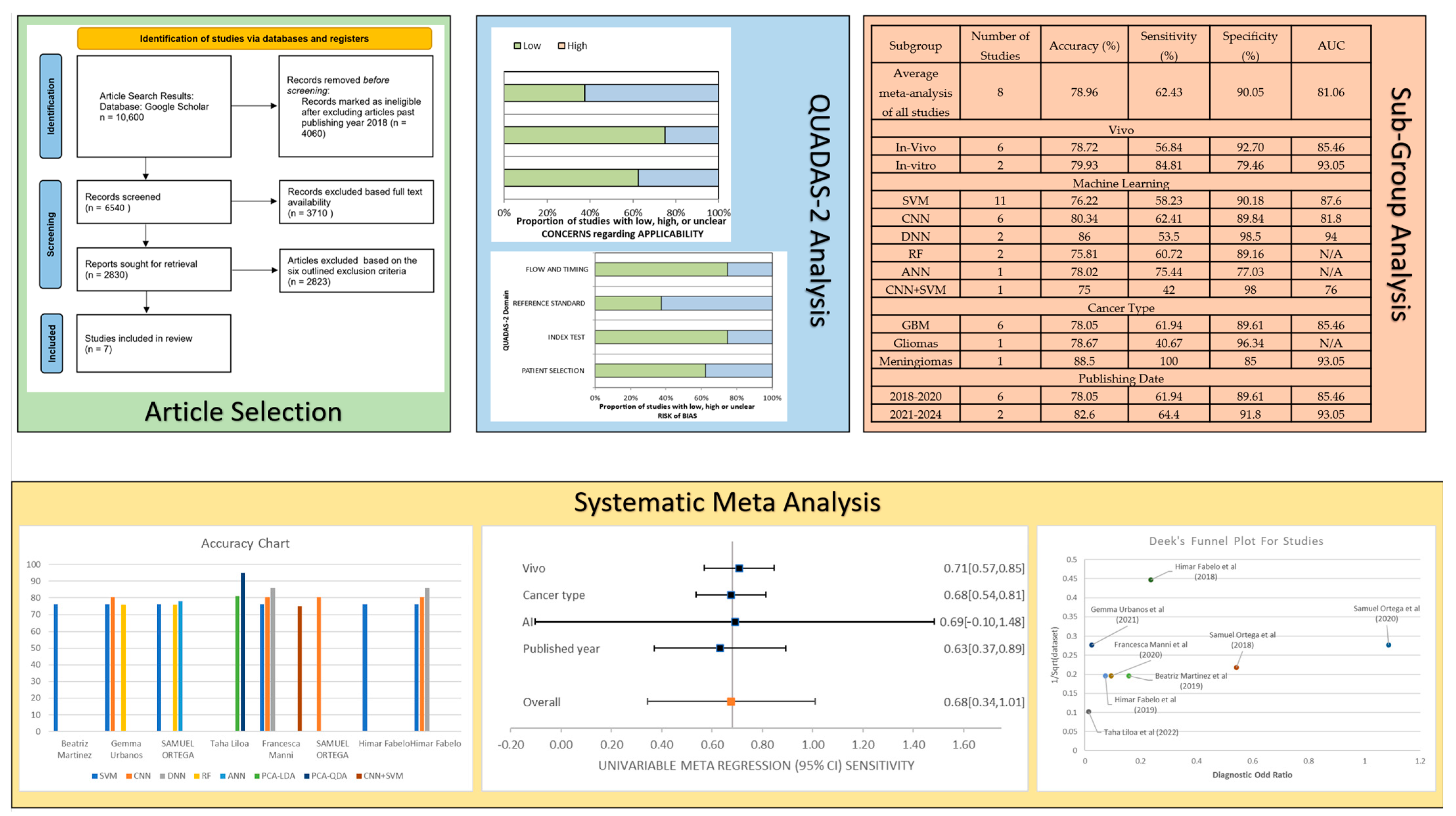

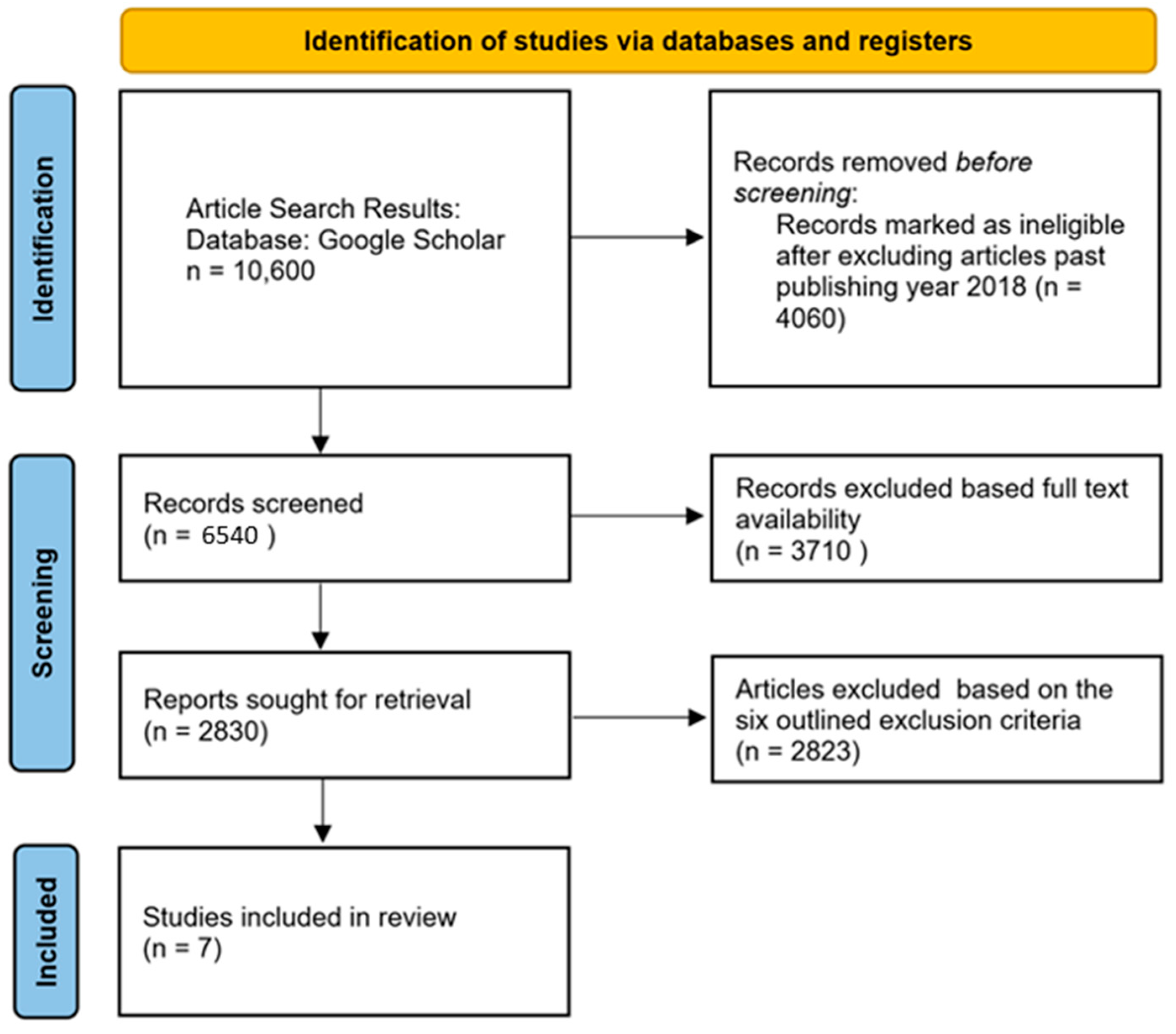

2. Materials and Methods

2.1. Study Selection Criteria

2.2. QUADAS-2 Results

3. Results

3.1. Clinical Features Observed in the Studies

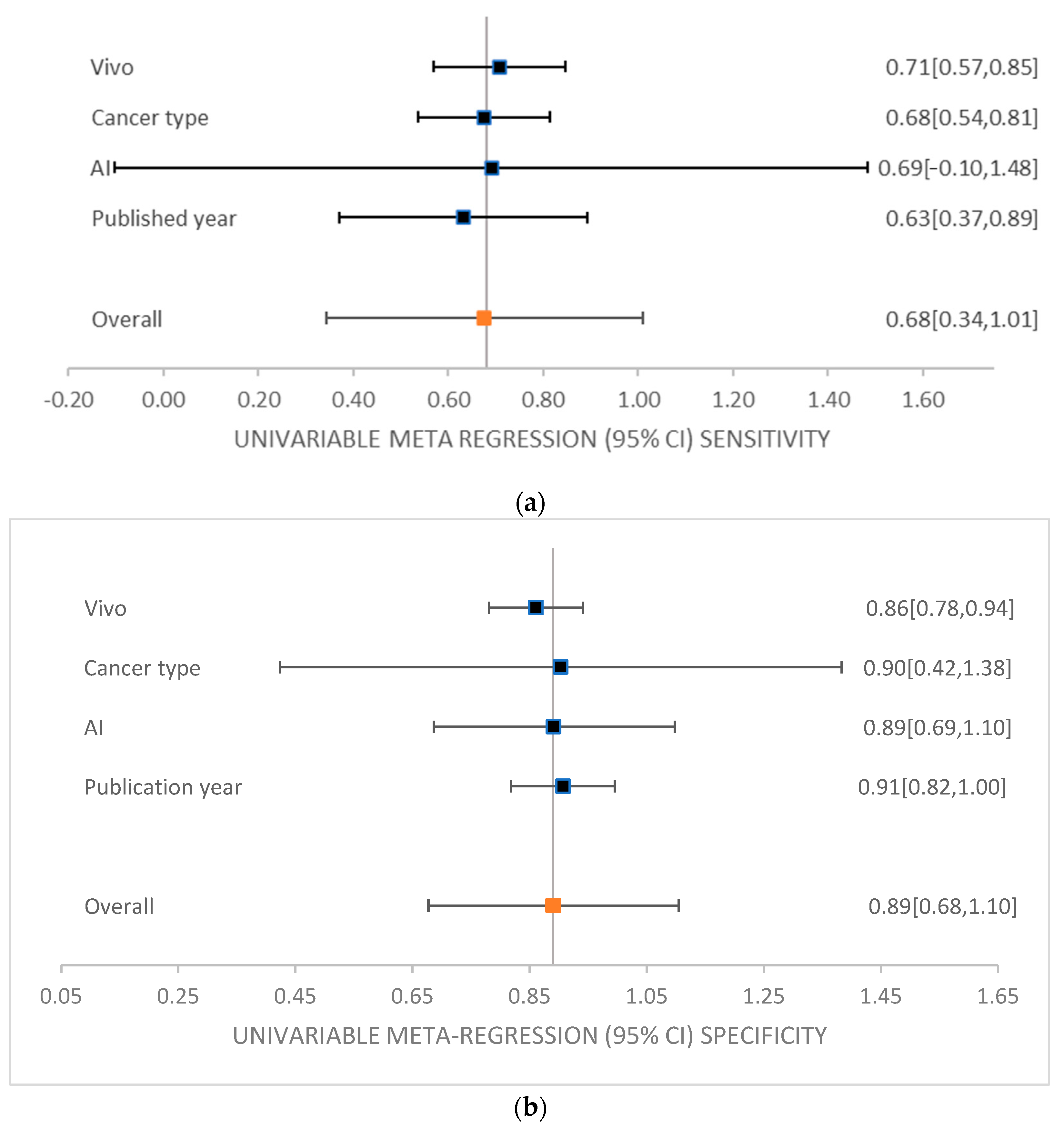

3.2. Meta-Analysis of the Studies

3.3. Subgroup Meta-Analysis

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Siegel, R.L.; Miller, K.D.; Jemal, A. Cancer Statistics, 2017. CA Cancer J. Clin. 2017, 67, 7–30.

2. Tempany, C.M.C.; Jayender, J.; Kapur, T.; Bueno, R.; Golby, A.; Agar, N.; Jolesz, F.A. Multimodal imaging for improved diagnosis and treatment of cancer. Cancer 2015, 121, 817–825.

3. Gull, S.; Akbar, S. Artificial Intelligence in Brain Tumor Detection through MRI Scans. In Artificial Intelligence and Internet of Things; CRC Press: Boca Raton, FL, USA, 2021; Volume 1, 36p.

4. Tandel, G.S.; Biswas, M.; Kakde, O.G.; Tiwari, A.; Suri, H.S.; Turk, M.; Laird, J.R.; Asare, C.K.; Ankrah, A.A.; Khanna, N.N.; et al. A review on a deep learning perspective in brain cancer classification. Cancers 2019, 11, 111.

5. Agarwal, A.K.; Sharma, N.; Jain, M.K. Brain tumor classification using CNN. Adv. Appl. Math. Sci. 2021, 20, 397–407.

6. Cho, K.-T.; Wang, K.-C.; Kim, S.-K.; Shin, S.-H.; Chi, J.G.; Cho, B.-K. Pediatric brain tumors: Statistics of SNUH, Korea. Child’s Nerv. Syst. 2002, 18, 30–37.

7. Sinha, T. Tumors: Benign and Malignant. Cancer Ther. Oncol. Int. J. 2018, 10, 52–54.

8. Strowd, R.E.; Blakeley, J.O. Common Histologically Benign Tumors of the Brain. Contin. Lifelong Learn. Neurol. 2017, 23, 1680–1708.

9. Handa, H.; Bucy, P.C. Benign cysts of the Brain Simulating Brain Tumor. J. Neurosurg. 1956, 13, 489–499.

10. Mitchell, D.A.; Fecci, P.E.; Sampson, J.H. Immunotherapy of malignant brain tumors. Immunol. Rev. 2008, 222, 70–100.

11. Smith, M.A.; Freidlin, B.; Ries, L.A.G.; Simon, R. Trends in Reported Incidence of Primary Malignant Brain Tumors in Children in the United States. J. Natl. Cancer Inst. 1998, 90, 1269–1277.

12. Patel, A. Benign vs Malignant tumors. JAMA Oncol. 2020, 6, 1488.

13. Devkota, B.; Alsadoon, A.; Prasad, P.W.; Singh, A.K.; Elchouemi, A. Image Segmentation for Early Stage Brain Tumor Detection using Mathematical Morphological Reconstruction. Procedia Comput. Sci. 2018, 125, 115–123.

14. Alentorn, A.; Hoang-Xuan, K.; Mikkelsen, T. Presenting signs and symptoms in brain tumors. Handb. Clin. Neurol. 2016, 134, 19–26.

15. Bandyopadhyay, S.K. Detection of Brain Tumor-A Proposed Method. J. Glob. Res. Comput. Sci. 2011, 2, 56–64.

16. Ari, A.; Hanbay, D. Deep learning based brain tumor classification and detection system. Turk. J. Electr. Eng. Comput. Sci. 2018, 26, 2275–2286.

17. Işın, A.; Direkoğlu, C.; Şah, M. Review of MRI-based brain tumor image segmentation using deep learning methods. Procedia Comput. Sci. 2016, 102, 317–324.

18. Shankar, G.M.; Balaj, L.; Stott, S.L.; Nahed, B.; Carter, B.S. Liquid biopsy for brain tumors. Expert Rev. Mol. Diagn. 2017, 17, 943–947.

19. Ostertag, C.; Mennel, H.D.; Kiessling, M. Stereotactic biopsy of brain tumors. Surg. Neurol. 1980, 14, 275–283.

20. Rincon-Torroella, J.; Khela, H.; Bettegowda, A.; Bettegowda, C. Biomarkers and focused ultrasound: The future of liquid biopsy for brain tumor patients. J. Neuro Oncol. 2022, 156, 33–48.

21. van den Brekel, M.W.; Lodder, W.L.; Stel, H.V.; Bloemena, E.; Leemans, C.R.; van der Waal, I. Observer variation in the histopathologic assessment of extranodal tumor spread in lymph node metastases in the neck. Head Neck 2012, 34, 840–845.

22. Coleman, R.E.; Hoffman, J.M.; Hanson, M.W.; Sostman, H.D.; Schold, S.C. Clinical application of PET for the evaluation of brain tumors. J. Nucl. Med. 1991, 32, 616–622.

23. Buzug, T.M. Computed Tomography. In Springer Handbook of Medical Technology; Springer: Berlin/Heidelberg, Germany, 2011; pp. 311–342.

24. Gordillo, N.; Montseny, E.; Sobrevilla, P. State of the art survey on MRI brain tumor segmentation. Magn. Reson. Imaging 2013, 31, 1426–1438.

25. Katti, G.; Ara, S.A.; Shireen, A. Magnetic Resonance Imaging (MRI)—A Review. Int. J. Dent. Clin. 2011, 3, 65–70.

26. Chen, W. Clinical Applications of PET in Brain Tumors. J. Nucl. Med. 2007, 48, 1468–1481.

27. Basu, S.; Kwee, T.C.; Surti, S.; Akin, E.A.; Yoo, D.; Alavi, A. Fundamentals of PET and PET/CT imaging. Ann. N. Y. Acad. Sci. 2011, 1228, 1–174.

28. Brooks, W.H.; Mortara, R.H.; Preston, D. The Clinical Limitations of Brain Scanning in Metastatic Disease. J. Nucl. Med. 1974, 15, 620–621.

29. Siegel, R.L.; Wagle, N.S.; Cercek, A.; Smith, R.A.; Jemal, A. Colorectal cancer statistics, 2023. CA Cancer J. Clin. 2023, 73, 233–254.

30. Siegel, R.L.; Miller, K.D.; Fedewa, S.A.; Ahnen, D.J.; Meester, R.G.; Barzi, A.; Jemal, A. Colorectal cancer statistics, 2017. CA Cancer J. Clin. 2017, 67, 177–193.

31. Fei, B.; Lu, G.; Halicek, M.T.; Wang, X.; Zhang, H.; Little, J.V.; Magliocca, K.R.; Patel, M.; Griffith, C.C.; El-Deiry, M.W.; et al. Label-free hyperspectral imaging and quantification methods for surgical margin assessment of tissue specimens of cancer patients. In Proceedings of the 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Seogwipo, Republic of Korea, 11–15 July 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 4041–4045.

32. Lu, G.; Little, J.V.; Wang, X.; Zhang, H.; Patel, M.R.; Griffith, C.C.; El-Deiry, M.W.; Chen, A.Y.; Fei, B. Detection of head and neck cancer in surgical specimens using quantitative hyperspectral imaging. Clin. Cancer Res. 2017, 23, 5426–5436.

33. Kho, E.; de Boer, L.L.; Van de Vijver, K.K.; Sterenborg, H.J.; Ruers, T.J. Hyperspectral imaging for detection of breast cancer in resection margins using spectral-spatial classification. In Proceedings of the SPIE 10472, Diagnosis and Treatment of Diseases in the Breast and Reproductive System IV, San Francisco, CA, USA, 27–28 January 2018; SPIE: Bellingham, WA, USA, 2018; p. 104720F.

34. Akbari, H.; Halig, L.V.; Schuster, D.M.; Osunkoya, A.; Master, V.; Nieh, P.; Chen, G.Z.; Fei, B. Hyperspectral imaging and quantitative analysis for prostate cancer detection. J. Biomed. Opt. 2012, 17, 076005.

35. Lu, G.; Qin, X.; Wang, D.; Muller, S.; Zhang, H.; Chen, A.; Chen, Z.G.; Fei, B. Hyperspectral imaging of neoplastic progression in a mouse model of oral carcinogenesis. In Proceedings of the SPIE 9788, Medical Imaging 2016: Biomedical Applications in Molecular, Structural, and Functional Imaging, San Diego, CA, USA, 1–3 March 2016; SPIE: Bellingham, WA, USA, 2016; p. 978812.

36. Florimbi, G.; Fabelo, H.; Torti, E.; Lazcano, R.; Madroñal, D.; Ortega, S.; Salvador, R.; Leporati, F.; Danese, G.; Báez-Quevedo, A.; et al. Accelerating the K-nearest neighbors filtering algorithm to optimize the real-time classification of human brain tumor in hyperspectral images. Sensors 2018, 18, 2314.

37. Fabelo, H.; Ortega, S.; Ravi, D.; Kiran, B.R.; Sosa, C.; Bulters, D.; Callicó, G.M.; Bulstrode, H.; Szolna, A.; Piñeiro, J.F.; et al. Spatio-spectral classification of hyperspectral images for brain cancer detection during surgical operations. PLoS ONE 2018, 13, e0193721.

38. Zarei, N.; Bakhtiari, A.; Gallagher, P.; Keys, M.; MacAulay, C. Automated prostate glandular and nuclei detection using hyperspectral imaging. In Proceedings of the IEEE 14th International Symposium on Biomedical Imaging, Melbourne, VIC, Australia, 18–21 April 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1028–1031.

39. Ortega, S.; Fabelo, H.; Camacho, R.; Plaza, M.L.; Callicó, G.M.; Lazcano, R.; Madroñal, D.; Salvador, R.; Juárez, E.; Sarmiento, R. P03.18 detection of human brain cancer in pathological slides using hyperspectral images. Neuro-Oncology 2017, 19, 37.

40. Rich, T.C.; Leavesley, S. Classification of normal and lesional colon tissue using fluorescence excitation-scanning hyperspectral imaging as a method for early diagnosis of colon cancer. In Proceedings of the National Conference on Undergraduate Research, University of Memphis, Memphis, TN, USA, 6–8 April 2017; pp. 1063–1073.

41. Pike, R.; Lu, G.; Wang, D.; Chen, Z.G.; Fei, B. A minimum spanning forest-based method for noninvasive cancer detection with hyperspectral imaging. IEEE Trans. Biomed. Eng. 2016, 63, 653–663.

42. Regeling, B.; Thies, B.; Gerstner, A.O.; Westermann, S.; Müller, N.A.; Bendix, J.; Laffers, W. Hyperspectral imaging using flexible endoscopy for laryngeal cancer detection. Sensors 2016, 16, 1288.

43. Ravi, D.; Fabelo, H.; Callicó, G.M.; Yang, G.Z. Manifold embedding and semantic segmentation for intraoperative guidance with hyperspectral brain imaging. IEEE Trans. Med. Imaging 2017, 36, 1845–1857.

44. Nathan, M.; Kabatznik, A.S.; Mahmood, A. Hyperspectral imaging for cancer detection and classification. In Proceedings of the 3rd Biennial South African Biomedical Engineering Conference, Stellenbosch, South Africa, 4–6 April 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–4.

45. Ortega, S.; Callicó, G.M.; Plaza, M.L.; Camacho, R.; Fabelo, H.; Sarmiento, R. Hyperspectral database of pathological in-vitro human brain samples to detect carcinogenic tissues. In Proceedings of the IEEE 13th International Symposium on Biomedical Imaging, Prague, Czech Republic, 13–16 April 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 369–372.

46. Gao, X.W.; Hui, R.; Tian, Z. Classification of CT brain images based on deep learning networks. Comput. Methods Programs Biomed. 2017, 138, 49–56.

47. Özyurt, F.; Toğaçar, M.; Avcı, E.; Avcı, D. Classification of breast cancer images by using of convolutional attribute of ANN. In Proceedings of the 2018 3rd International Conference on Computer Science and Engineering (UBMK), Sarajevo, Bosnia and Herzegovina, 20–23 September 2018; pp. 420–423.

48. Vijayakumar, D.T. Classification of Brain Cancer Type Using Machine Learning. J. Artif. Intell. Capsul. Netw. 2018, 1, 105–113.

49. Koo, Y.E.L.; Reddy, G.R.; Bhojani, M.; Schneider, R.; Philbert, M.A.; Rehemtulla, A.; Ross, B.D.; Kopelman, R. Brain cancer diagnosis and therapy with nanoplatforms. Adv. Drug Deliv. Rev. 2006, 58, 1556–1577.

50. Zhou, Y.; Liu, C.H.; Sun, Y.; Pu, Y.; Boydston-White, S.; Liu, Y.; Alfano, R.R. Human brain cancer studied by resonance Raman spectroscopy. J. Biomed. Opt. 2012, 17, 116021.

51. El-Dahshan, E.S.A.; Mohsen, H.M.; Revett, K.; Salem, A.B.M. Computer-aided diagnosis of human brain tumor through MRI: A survey and a new algorithm. Expert Syst. Appl. 2014, 41, 5526–5545.

52. Starr, C.; Evers, C.; Starr, L. Biology: Concepts and Applications without Physiology; Cengage Learning: Boston, MA, USA, 2010.

53. Lu, G.; Fei, B. Medical hyperspectral imaging: A review. J. Biomed. Opt. 2014, 19, 010901.

54. Goetz, A.F.; Vane, G.; Solomon, J.E.; Rock, B.N. Imaging spectrometry for earth remote sensing. Science 1985, 228, 1147–1153.

55. Calin, M.A.; Parasca, S.V.; Savastru, D.; Manea, D. Hyperspectral imaging in the medical field: Present and future. Appl. Spectrosc. Rev. 2013, 49, 435–447.

56. Fabelo, H.; Ortega, S.; Szolna, A.; Bulters, D.; Piñeiro, J.F.; Kabwama, S.; J-O’Shanahan, A.; Bulstrode, H.; Bisshopp, S.; Ravi Kiran, B.; et al. In-Vivo Hyperspectral Human Brain Image Database for Brain Cancer Detection. IEEE Access 2019, 7, 39098–39116.

57. Kamruzzaman, M.; Sun, D.W. Introduction to Hyperspectral Imaging Technology. In Computer Vision Technology for Food Quality Evaluation; Academic Press: Cambridge, MA, USA, 2016; pp. 111–139.

58. Halicek, M.; Fabelo, H.; Ortega, S.; Callico, G.M.; Fei, B. In-Vivo and Ex-Vivo Tissue Analysis through Hyperspectral Imaging Techniques: Revealing the Invisible Features of Cancer. Cancers 2019, 11, 756.

59. Wu, D.; Sun, D.W. Advanced applications of hyperspectral imaging technology for food quality and safety analysis and assessment. A review—Part I: Fundamentals. Innov. Food Sci. Emerg. Technol. 2013, 19, 1–14.

60. Pan, Z.; Healey, G.; Prasad, M.; Tromberg, B. Face recognition in hyperspectral images. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 1552–1560.

61. Bioucas-Dias, J.M.; Plaza, A.; Camps-Valls, G.; Scheunders, P.; Nasrabadi, N.; Chanussot, J. Hyperspectral remote sensing data analysis and future challenges. IEEE Geosci. Remote Sens. Mag. 2013, 1, 6–36.

62. Akbari, H.; Kosugi, Y.; Kojima, K.; Tanaka, N. Detection and analysis of the intestinal ischemia using visible and invisible hyperspectral imaging. IEEE Trans. Biomed. Eng. 2010, 57, 2011–2017.

63. Fischer, C.; Kakoulli, I. Multispectral and hyperspectral imaging technologies in conservation: Current research and potential applications. Stud. Conserv. 2006, 51, 3–16.

64. Edelman, G.J.; Gaston, E.; Van Leeuwen, T.G.; Cullen, P.J.; Aalders, M.C. Hyperspectral imaging for non-contact analysis of forensic traces. Forensic Sci. Int. 2012, 223, 28–39.

65. Malkoff, D.B.; Oliver, W.R. Hyperspectral imaging applied to forensic medicine. Proc. SPIE 2000, 3920, 108–116.

66. Kuula, J.; Pölönen, I.; Puupponen, H.H.; Selander, T.; Reinikainen, T.; Kalenius, T.; Saari, H. Using VIS/NIR and IR spectral cameras for detecting and separating crime scene details. In Proceedings of the SPIE 8359, Sensors, and Command, Control, Communications, and Intelligence (C3I) Technologies for Homeland Security and Homeland Defense, Baltimore, MD, USA, 23–25 April 2012; Volume XI, p. 83590.

67. Schuler, R.L.; Kish, P.E.; Plese, C.A. Preliminary observations on the ability of hyperspectral imaging to provide detection and visualization of bloodstain patterns on black fabrics. J. Forensic Sci. 2012, 57, 1562–1569.

68. Rodrigues, E.M.; Hemmer, E. Trends in hyperspectral imaging: From environmental and health sensing to structure-property and nano-bio interaction studies. Anal. Bioanal. Chem. 2022, 414, 4269–4279.

69. Mukundan, A.; Tsao, Y.M.; Wang, H.C. Detection of Counterfeit Holograms Using Hyperspectral Imaging; SPIE: Bellingham, WA, USA, 2013; Volume 12768.

70. Huang, S.-Y.; Karmakar, R.; Chen, Y.-Y.; Hung, W.-C.; Mukundan, A.; Wang, H.-C. Large-Area Film Thickness Identification of Transparent Glass by Hyperspectral Imaging. Sensors 2024, 24, 5094.

71. Fang, Y.J.; Huang, C.W.; Karmakar, R.; Mukundan, A.; Tsao, Y.M.; Yang, K.Y.; Wang, H.C. Assessment of Narrow-Band Imaging Algorithm for Video Capsule Endoscopy Based on Decorrelated Color Space for Esophageal Cancer: Part II, Detection and Classification of Esophageal Cancer. Cancers 2024, 16, 572.

72. Nasir, F.A.; Liaquat, S.; Khurshid, K.; Mahyuddin, N.M. A hyperspectral unmixing approach for ink mismatch detection in unbalanced clusters. J. Inf. Intell. 2024, 2, 2949–7159.

73. Mukundan, A.; Patel, A.; Saraswat, K.D.; Tomar, A.; Wang, H. Novel Design of a Sweeping 6-Degree of Freedom Lunar Penetrating Radar. In Proceedings of the AIAA AVIATION 2023 Forum, San Diego, CA, USA, 12–16 June 2023.

74. Mukundan, A.; Wang, H.-C.; Tsao, Y.-M. A Novel Multipurpose Snapshot Hyperspectral Imager used to Verify Security Hologram. In Proceedings of the 2022 International Conference on Engineering and Emerging Technologies (ICEET), Kuala Lumpur, Malaysia, 27–28 October 2022; pp. 1–3.

75. Mukundan, A.; Huang, C.C.; Men, T.C.; Lin, F.C.; Wang, H.C. Air Pollution Detection Using a Novel Snap-Shot Hyperspectral Imaging Technique. Sensors 2022, 22, 6231.

76. Wang, C.Y.; Mukundan, A.; Liu, Y.S.; Tsao, Y.M.; Lin, F.C.; Fan, W.S.; Wang, H.C. Optical Identification of Diabetic Retinopathy Using Hyperspectral Imaging. J. Pers. Med. 2023, 13, 939.

77. Mukundan, A.; Tsao, Y.-M.; Lin, F.-C.; Wang, H.-C. Portable and low-cost hologram verification module using a snapshot-based hyperspectral imaging algorithm. Sci. Rep. 2022, 12, 18475.

78. Mukundan, A.; Tsao, Y.M.; Cheng, W.M.; Lin, F.C.; Wang, H.C. Automatic Counterfeit Currency Detection Using a Novel Snapshot Hyperspectral Imaging Algorithm. Sensors 2023, 23, 2026.

79. Afromowitz, M.; Callis, J.; Heimbach, D.; DeSoto, L.; Norton, M. Multispectral imaging of burn wounds: A new clinical instrument for evaluating burn depth. IEEE Trans. Biomed. Eng. 1988, 35, 842–850.

80. Carrasco, O.; Gomez, R.B.; Chainani, A.; Roper, W.E. Hyperspectral imaging applied to medical diagnoses and food safety. Geo-Spat. Temporal Image Data Exploit. 2003, III, 215–221.

81. Zuzak, K.J.; Naik, S.C.; Alexandrakis, G.; Hawkins, D.; Behbehani, K.; Livingston, E.H. Characterization of a near-infrared laparoscopic hyperspectral imaging system for minimally invasive surgery. Anal. Chem. 2007, 79, 4709–4715.

82. Benavides, J.M.; Chang, S.; Park, S.Y.; Richards-Kortum, R.; Mackinnon, N.; MacAulay, C.; Milbourne, A.; Malpica, A.; Follen, M. Multispectral digital colposcopy for in vivo detection of cervical cancer. Opt. Express 2003, 11, 1223–1236.

83. Hirohara, Y.; Okawa, Y.; Mihashi, T.; Yamaguchi, T.; Nakazawa, N.; Tsuruga, Y.; Aoki, H.; Maeda, N.; Uchida, I.; Fujikado, T. Validity of retinal oxygen saturation analysis: Hyperspectral imaging in visible wavelength with fundus camera and liquid crystal wavelength tunable filter. Opt. Rev. 2007, 14, 151–158.

84. Fawzi, A.A.; Lee, N.; Acton, J.H.; Laine, A.F.; Smith, R.T. Recovery of macular pigment spectrum in vivo using hyperspectral image analysis. J. Biomed. Opt. 2011, 16, 106008.

85. Bégin, S.; Burgoyne, B.; Mercier, V.; Villeneuve, A.; Vallée, R.; Côté, D. Coherent anti-stokes Raman scattering hyperspectral tissue imaging with a wavelength-swept system. Biomed. Opt. Express 2011, 2, 1296–1306.

86. Schweizer, J.; Hollmach, J.; Steiner, G.; Knels, L.; Funk, R.H.W.; Koch, E. Hyperspectral imaging—A new modality for eye diagnostics. Biomed. Tech. 2012, 57, 293–296.

87. Palmer, G.M.; Fontanella, A.N.; Zhang, G.; Hanna, G.; Fraser, C.L.; Dewhirst, M.W. Optical imaging of tumor hypoxia dynamics. J. Biomed. Opt. 2010, 15, 066021.

88. Maeder, U.; Marquardt, K.; Beer, S.; Bergmann, T.; Schmidts, T.; Heverhagen, J.; Zink, K.; Runkel, F.; Fiebich, M. Evaluation and quantification of spectral information in tissue by confocal microscopy. J. Biomed. Opt. 2012, 17, 106011.

89. Manni, F.; Fonolla, R.; van der Sommen, F.; Zinger, S.; Shan, C.; Kho, E.; de Koning, S.B.; Ruers, T.; de With, P.H. Hyperspectral imaging for colon cancer classification in surgical specimens: Towards optical biopsy during image-guided surgery. In Proceedings of the 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Montreal, QC, Canada, 20–24 July 2020; pp. 1169–1173.

90. Tsai, T.-J.; Mukundan, A.; Chi, Y.-S.; Tsao, Y.-M.; Wang, Y.-K.; Chen, T.-H.; Wu, I.-C.; Huang, C.-W.; Wang, H.-C. Intelligent Identification of Early Esophageal Cancer by Band-Selective Hyperspectral Imaging. Cancers 2022, 14, 4292.

91. Goto, A.; Nishikawa, J.; Kiyotoki, S.; Nakamura, M.; Nishimura, J.; Okamoto, T.; Ogihara, H.; Fujita, Y.; Hamamoto, Y.; Sakaida, I. Use of hyperspectral imaging technology to develop a diagnostic support system for gastric cancer. J. Biomed. Opt. 2015, 20, 016017.

92. Wang, X.; Wang, T.; Zheng, Y.; Yin, X. Hyperspectral-attention mechanism-based improvement of radiomics prediction method for primary liver cancer. Photodiagnosis Photodyn. Ther. 2021, 36, 102486.

93. Aboughaleb, I.H.; Aref, M.H.; El-Sharkawy, Y.H. Hyperspectral imaging for diagnosis and detection of ex-vivo breast cancer. Photodiagnosis Photodyn. Ther. 2020, 31, 101922.

94. Chen, R.; Luo, T.; Nie, J.; Chu, Y. Blood cancer diagnosis using hyperspectral imaging combined with the forward searching method and machine learning. Anal. Methods 2023, 15, 3885–3892.

95. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

96. Whiting, P.F.; Rutjes, A.W.S.; Westwood, M.E.; Mallett, S.; Deeks, J.J.; Reitsma, J.B.; Leeflang, M.M.G.; Sterne, J.A.C.; Bossuyt, P.M.M.; QUADAS-2 Group. QUADAS-2: A Revised Tool for the Quality Assessment of Diagnostic Accuracy Studies. Ann. Intern. Med. 2011, 155, 529–536.

97. Martinez, B.; Leon, R.; Fabelo, H.; Ortega, S.; Piñeiro, J.F.; Szolna, A.; Hernandez, M.; Espino, C.; O’Shanahan, A.J.; Carrera, D.; et al. Most Relevant Spectral Bands Identification for Brain Cancer Detection Using Hyperspectral Imaging. Sensors 2019, 19, 5481.

98. Urbanos, G.; Martín, A.; Vázquez, G.; Villanueva, M.; Villa, M.; Jimenez-Roldan, L.; Chavarrías, M.; Lagares, A.; Juárez, E.; Sanz, C. Supervised Machine Learning Methods and Hyperspectral Imaging Techniques Jointly Applied for Brain Cancer Classification. Sensors 2021, 21, 3827.

99. Ortega, S.; Fabelo, H.; Camacho, R.; de la Luz Plaza, M.; Callicó, G.M.; Sarmiento, R. Detecting brain tumor in pathological slides using hyperspectral imaging. Biomed. Opt. Express 2018, 9, 818–831.

100. Manni, F.; van der Sommen, F.; Fabelo, H.; Zinger, S.; Shan, C.; Edström, E.; Elmi-Terander, A.; Ortega, S.; Marrero Callicó, G.; de With, P.H.N. Hyperspectral Imaging for Glioblastoma Surgery: Improving Tumor Identification Using a Deep Spectral-Spatial Approach. Sensors 2020, 20, 6955.

101. Ortega, S.; Halicek, M.; Fabelo, H.; Camacho, R.; Plaza, M.d.l.L.; Godtliebsen, F.; Callicó, G.M.; Fei, B. Hyperspectral Imaging for the Detection of Glioblastoma Tumor Cells in H&E Slides Using Convolutional Neural Networks. Sensors 2020, 20, 1911.

102. Fabelo, H.; Halicek, M.; Ortega, S.; Shahedi, M.; Szolna, A.; Piñeiro, J.F.; Sosa, C.; O’Shanahan, A.J.; Bisshopp, S.; Espino, C.; et al. Deep Learning-Based Framework for In Vivo Identification of Glioblastoma Tumor using Hyperspectral Images of Human Brain. Sensors 2019, 19, 920.

103. Yin, J.; Tian, L. Joint inference about sensitivity and specificity at the optimal cut-off point associated with Youden index. Comput. Stat. Data Anal. 2014, 77, 1–13.

104. Khan, S.I.; Rahman, A.; Debnath, T.; Karim, R.; Nasir, M.K.; Band, S.S.; Mosavi, A.; Dehzangi, I. Accurate brain tumor detection using deep convolutional neural network. Comput. Struct. Biotechnol. J. 2022, 20, 4733–4745.

105. Writing Committee Members; Brott, T.G.; Halperin, J.L.; Abbara, S.; Bacharach, J.M.; Barr, J.D.; Bush, R.L.; Cates, C.U.; Creager, M.A.; Fowler, S.B.; et al. 2011 ASA/ACCF/AHA/AANN/AANS/ACR/ASNR/CNS/SAIP/SCAI/SIR/SNIS/SVM/SVS Guideline on the Management of Patients with Extracranial Carotid and Vertebral Artery Disease. Stroke 2011, 42, e464–e540.

106. McNeill, K.A. Epidemiology of Brain Tumors. Neurol. Clin. 2016, 34, 981–998.

107. Nazir, M.; Shakil, S.; Khurshid, K. Role of deep learning in brain tumor detection and classification (2015 to 2020): A review. Comput. Med. Imaging Graph. 2021, 91, 101940.

| Year | Author | Application | Spectral Range (nm) |
|---|---|---|---|
| 2012 [34] | Hamed Akbari et al. | Prostate cancer | 500–950 |
| 2017 [32] | Guolan Lu et al. | Head and neck cancer | 450–900 |
| 2020 [89] | Francesca Manni et al. | Colon cancer | Up to 1700 |
| 2022 [90] | Tsung-Jung Tsai et al. | Esophageal cancer | 415 and 540 |
| 2015 [91] | Atsushi Goto et al. | Gastric cancer | 1000–2500 |
| 2021 [92] | Xuehu Wang et al. | Liver cancer | 400–1000 |
| 2020 [93] | Ibrahim H. Aboughaleb et al. | Breast cancer | 400–700 |
| 2023 [94] | Riheng Chen et al. | Blood cancer | 400–1000 |

| Study | Risk of Bias: Patient Selection | Risk of Bias: Index Test | Risk of Bias: Reference Standard | Risk of Bias: Flow and Timing | Applicability Concerns: Patient Selection | Applicability Concerns: Index Test | Applicability Concerns: Reference Standard |
|---|---|---|---|---|---|---|---|
| Beatriz Martinez et al., 2019 [97] |  |  | ? |  | ? |  | ? |
| Gemma Urbanos et al., 2021 [98] |  |  | ? |  |  |  | ? |
| Samuel Ortega et al., 2018 [99] | ? |  |  |  |  |  |  |
| Francesca Manni et al., 2020 [100] |  |  | ? |  | ? |  | ? |
| Samuel Ortega et al., 2020 [101] | ? |  |  |  | ? | ? |  |
| Himar Fabelo et al., 2018 [32] |  | ? | ? |  |  | ? | ? |
| Himar Fabelo et al., 2019 [102] |  | ? | ? | ? |  | ? | ? |


Low Risk, ? Unclear Risk,  High Risk.
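The QUADAS-2 table marks unclear judgments with "?". A minimal sketch that tallies those marks per domain is shown below; the one-character-per-column encoding ("?" for unclear, "." for any other rating) and the shortened study labels are hypothetical choices made for illustration. Counted this way, the reference-standard domain is rated unclear for 5 of the 7 studies under both risk of bias and applicability, more than any other domain.

```python
# Hypothetical encoding of the QUADAS-2 table: one 7-character string per study,
# one character per domain column ("?" = unclear risk, "." = any other rating).
DOMAINS = (
    "RoB: patient selection",
    "RoB: index test",
    "RoB: reference standard",
    "RoB: flow and timing",
    "Applicability: patient selection",
    "Applicability: index test",
    "Applicability: reference standard",
)

JUDGMENTS = {
    "Martinez et al., 2019": "..?.?.?",
    "Urbanos et al., 2021": "..?...?",
    "Ortega et al., 2018": "?......",
    "Manni et al., 2020": "..?.?.?",
    "Ortega et al., 2020": "?...??.",
    "Fabelo et al., 2018": ".??..??",
    "Fabelo et al., 2019": ".???.??",
}


def unclear_per_domain(judgments: dict[str, str]) -> dict[str, int]:
    """Count how many studies received an unclear ("?") rating in each domain."""
    counts = {domain: 0 for domain in DOMAINS}
    for study, marks in judgments.items():
        if len(marks) != len(DOMAINS):
            raise ValueError(f"{study}: expected {len(DOMAINS)} marks, got {len(marks)}")
        for domain, mark in zip(DOMAINS, marks):
            if mark == "?":
                counts[domain] += 1
    return counts


if __name__ == "__main__":
    for domain, n in unclear_per_domain(JUDGMENTS).items():
        print(f"{domain}: {n}/{len(JUDGMENTS)} unclear")
```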

| Author, Year | Nationality | Method | Band | In Vivo/In Vitro | Index Number | Dataset | Accuracy (%) | Sensitivity (%) | Specificity (%) | AUC (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| Beatriz Martinez et al., 2019 [97] | Western | SVM band L1 | VNIR | In Vivo | 1 | 26 | 77.9 | 52.7 | 94.6 | N/A |
| | | SVM band L2 | | | 2 | | 77 | 57 | 91.2 | N/A |
| | | SVM band L3 | | | 3 | | 53.8 | 57.6 | 70.3 | N/A |
| Gemma Urbanos et al., 2021 [98] | Western | SVM | VNIR | In Vitro | 4 | 13 | 76.5 | 26 | 91 | N/A |
| | | RF | | | 5 | | 82.5 | 48.5 | 99 | N/A |
| | | CNN | | | 6 | | 77 | 47.5 | 99 | N/A |
| Samuel Ortega et al., 2018 [99] | Western | SVM | VNIR | In Vivo | 7 | 21 biopsies | 75.53 | 75.69 | 70.97 | N/A |
| | | ANN | | | 8 | | 78.02 | 75.44 | 77.03 | N/A |
| | | RF | | | 9 | | 69.13 | 72.94 | 79.33 | N/A |
| Francesca Manni et al., 2020 [100] | Western | 3D–2D CNN | VNIR | In Vivo | 10 | 26 | 80 | 68 | 98 | 70 |
| | | 3D–2D CNN + SVM | | | 11 | | 75 | 42 | 98 | 76 |
| | | SVM | | | 12 | | 76 | 43 | 98 | 70 |
| | | 2D-CNN | | | 13 | | 72 | 14 | 97 | 71 |
| | | 1D-DNN | | | 14 | | 78 | 19 | 97 | 89 |
| Samuel Ortega et al., 2020 [101] | Western | CNN HSI | VNIR | In Vivo | 15 | 13 biopsies | 85 | 88 | 77 | 87 |
| | | CNN RGB | | | 16 | | 80 | 81 | 68 | 84 |
| Himar Fabelo et al., 2018 [32] | Western | SVM | VNIR | In Vivo | 17 | 5 | 99.72 | 99.62 | 99.91 | N/A |
| Himar Fabelo et al., 2019 [102] | Western | 1D-DNN | VNIR | In Vivo | 18 | 26 | 94 | 88 | 100 | 99 |
| | | 2D-CNN | | | 19 | | 88 | 76 | 100 | 97 |
| | | SVM RBF Opt. | | | 20 | | 84 | 68 | 100 | 97 |
| | | SVM RBF Def. | | | 21 | | 73 | 58 | 88 | 86 |
| | | SVM Linear Opt. | | | 22 | | 77 | 54 | 100 | 99 |
| | | SVM Linear Def. | | | 23 | | 68 | 49 | 88 | 86 |
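The study-level table reports accuracy, sensitivity, specificity and AUC side by side. For a binary tumor vs. non-tumor evaluation these all follow from the confusion matrix, and accuracy equals the prevalence-weighted average of sensitivity and specificity. That identity is one way an entry such as index 13 (sensitivity 14%, specificity 97%) can still report 72% accuracy when tumor samples are the minority. The sketch below is minimal and uses hypothetical counts, not data from any included study; several included studies used multiclass labels, so the binary formulation is an assumption. Youden's J (sensitivity + specificity − 1) is included because the reference list cites work on it [103], and AUC is computed by the rank (Mann–Whitney) interpretation rather than by tracing an ROC curve.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    """Binary confusion counts with tumor as the positive class (hypothetical values)."""
    tp: int  # tumor samples classified as tumor
    fn: int  # tumor samples missed
    tn: int  # non-tumor samples classified as non-tumor
    fp: int  # non-tumor samples flagged as tumor


def diagnostic_metrics(c: Confusion) -> dict[str, float]:
    positives = c.tp + c.fn
    negatives = c.tn + c.fp
    sensitivity = c.tp / positives
    specificity = c.tn / negatives
    return {
        "accuracy": (c.tp + c.tn) / (positives + negatives),
        "sensitivity": sensitivity,
        "specificity": specificity,
        "prevalence": positives / (positives + negatives),
        "youden_j": sensitivity + specificity - 1.0,
    }


def auc_by_ranking(pos_scores: list[float], neg_scores: list[float]) -> float:
    """AUC as P(tumor score > non-tumor score) over all pairs; ties count as 1/2."""
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))


if __name__ == "__main__":
    # Hypothetical: 300 tumor and 700 non-tumor samples, low sensitivity, high specificity.
    m = diagnostic_metrics(Confusion(tp=60, fn=240, tn=679, fp=21))
    p = m["prevalence"]
    # Binary identity: accuracy = p * sensitivity + (1 - p) * specificity.
    assert abs(m["accuracy"] - (p * m["sensitivity"] + (1 - p) * m["specificity"])) < 1e-12
    print({k: round(v, 3) for k, v in m.items()})
    print(round(auc_by_ranking([0.9, 0.8, 0.4], [0.7, 0.3, 0.4]), 3))
```

With these counts the classifier misses 80% of tumor samples yet still scores about 74% accuracy, because 70% of the samples are non-tumor and specificity is high; Youden's J (0.17) exposes the weakness that accuracy hides.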

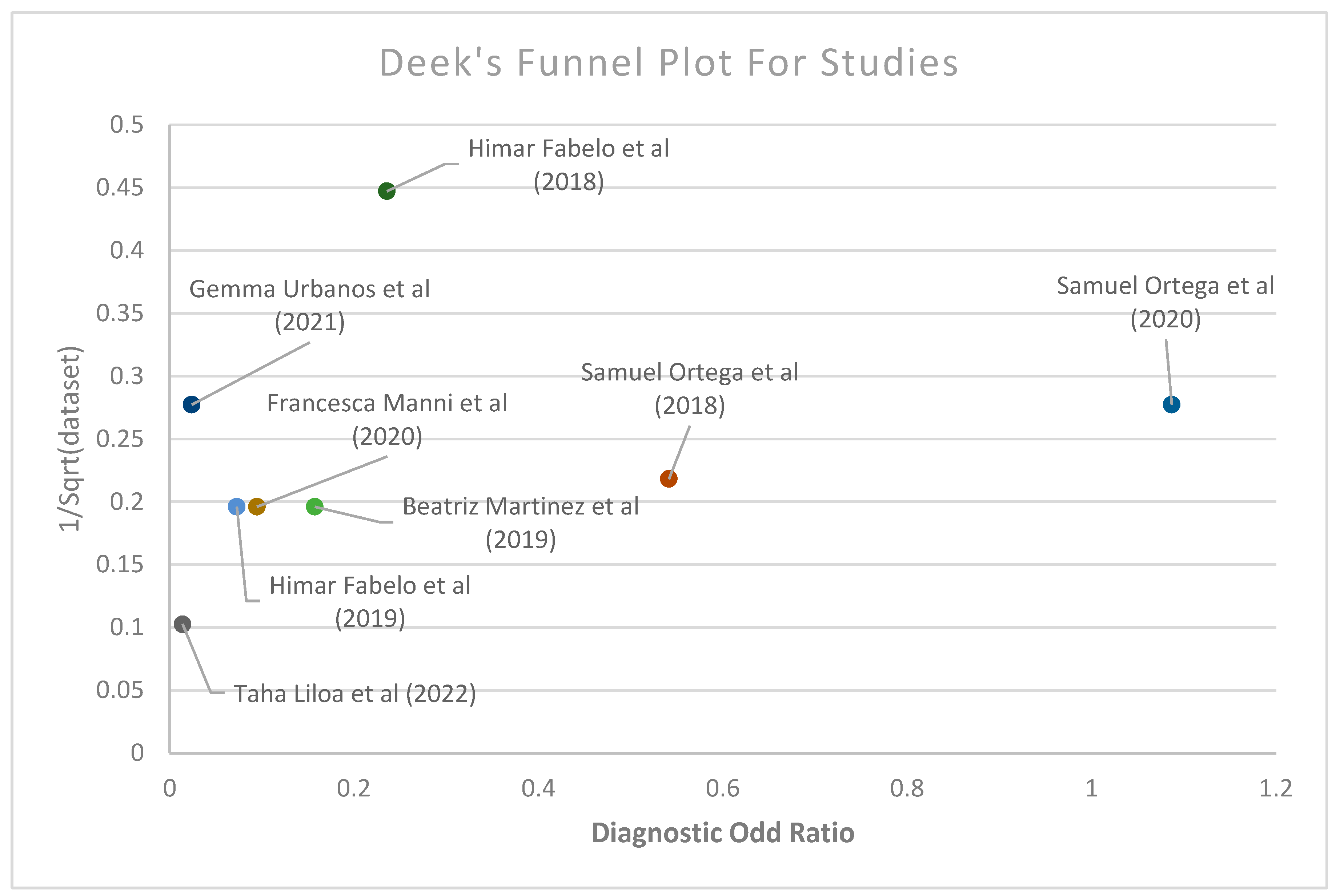

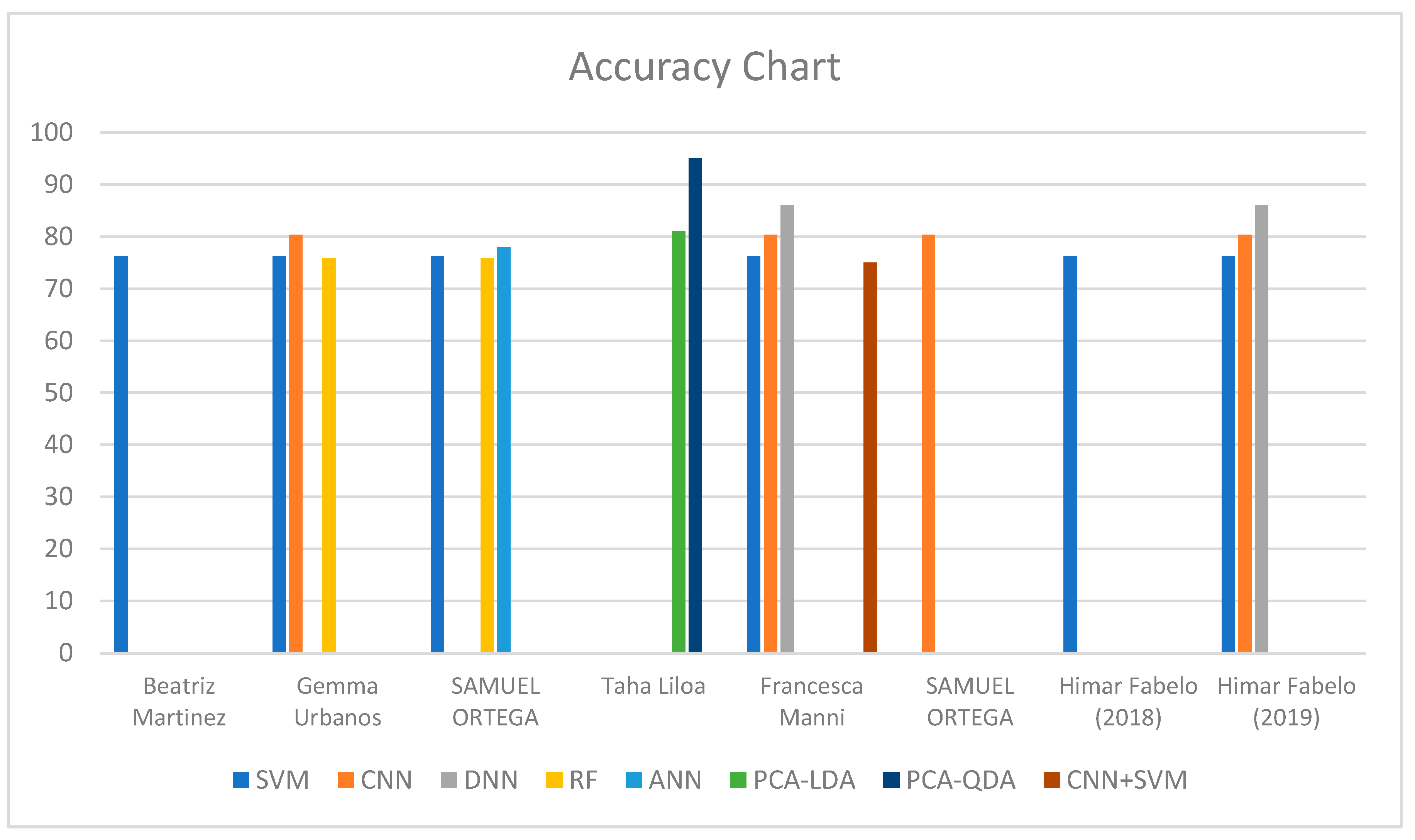

| Subgroup | Number of Studies/Models | Accuracy (%) | Sensitivity (%) | Specificity (%) | AUC (%) |
|---|---|---|---|---|---|
| All studies (average) | 8 | 78.96 | 62.43 | 90.05 | 81.06 |
| In Vivo/In Vitro | | | | | |
| In vivo | 6 | 78.72 | 56.84 | 92.70 | 85.46 |
| In vitro | 2 | 79.93 | 84.81 | 79.46 | 93.05 |
| Machine Learning Method | | | | | |
| SVM | 11 | 76.22 | 58.23 | 90.18 | 87.6 |
| CNN | 6 | 80.34 | 62.41 | 89.84 | 81.8 |
| DNN | 2 | 86 | 53.5 | 98.5 | 94 |
| RF | 2 | 75.81 | 60.72 | 89.16 | N/A |
| ANN | 1 | 78.02 | 75.44 | 77.03 | N/A |
| CNN + SVM | 1 | 75 | 42 | 98 | 76 |
| Cancer Type | | | | | |
| GBM | 6 | 78.05 | 61.94 | 89.61 | 85.46 |
| Gliomas | 1 | 78.67 | 40.67 | 96.34 | N/A |
| Meningiomas | 1 | 88.5 | 100 | 85 | 93.05 |
| Publication Year | | | | | |
| 2018–2020 | 6 | 78.05 | 61.94 | 89.61 | 85.46 |
| 2021–2024 | 2 | 82.6 | 64.4 | 91.8 | 93.05 |
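The machine-learning rows of the subgroup table appear consistent with a simple unweighted mean of the per-index values in the study-level table; for example, the DNN row (86, 53.5, 98.5, 94) is exactly the average of indexes 14 and 18. The sketch below reproduces the SVM, CNN, DNN, RF, ANN and CNN + SVM rows to within 0.01. The assignment of each index to a subgroup is inferred from the method names and the subgroup counts, not stated in the source, and this kind of averaging gives every model equal weight regardless of dataset size.

```python
from statistics import mean

# Per-index results transcribed from the study-level table:
# index -> (subgroup, accuracy, sensitivity, specificity, AUC); None = AUC reported as N/A.
# The subgroup label for each index is an assumption inferred from the method name.
RESULTS = {
    1: ("SVM", 77.9, 52.7, 94.6, None),
    2: ("SVM", 77.0, 57.0, 91.2, None),
    3: ("SVM", 53.8, 57.6, 70.3, None),
    4: ("SVM", 76.5, 26.0, 91.0, None),
    5: ("RF", 82.5, 48.5, 99.0, None),
    6: ("CNN", 77.0, 47.5, 99.0, None),
    7: ("SVM", 75.53, 75.69, 70.97, None),
    8: ("ANN", 78.02, 75.44, 77.03, None),
    9: ("RF", 69.13, 72.94, 79.33, None),
    10: ("CNN", 80.0, 68.0, 98.0, 70.0),
    11: ("CNN + SVM", 75.0, 42.0, 98.0, 76.0),
    12: ("SVM", 76.0, 43.0, 98.0, 70.0),
    13: ("CNN", 72.0, 14.0, 97.0, 71.0),
    14: ("DNN", 78.0, 19.0, 97.0, 89.0),
    15: ("CNN", 85.0, 88.0, 77.0, 87.0),
    16: ("CNN", 80.0, 81.0, 68.0, 84.0),
    17: ("SVM", 99.72, 99.62, 99.91, None),
    18: ("DNN", 94.0, 88.0, 100.0, 99.0),
    19: ("CNN", 88.0, 76.0, 100.0, 97.0),
    20: ("SVM", 84.0, 68.0, 100.0, 97.0),
    21: ("SVM", 73.0, 58.0, 88.0, 86.0),
    22: ("SVM", 77.0, 54.0, 100.0, 99.0),
    23: ("SVM", 68.0, 49.0, 88.0, 86.0),
}

METRICS = ("accuracy", "sensitivity", "specificity", "auc")


def subgroup_summary(group: str) -> dict:
    """Unweighted mean of each metric over the indexes in `group`, skipping N/A values."""
    rows = [r[1:] for r in RESULTS.values() if r[0] == group]
    summary = {"n": len(rows)}
    for i, name in enumerate(METRICS):
        values = [row[i] for row in rows if row[i] is not None]
        summary[name] = mean(values) if values else None
    return summary


if __name__ == "__main__":
    for group in ("SVM", "CNN", "DNN", "RF", "ANN", "CNN + SVM"):
        s = subgroup_summary(group)
        print(group, {k: (round(v, 2) if isinstance(v, float) else v) for k, v in s.items()})
```

The small residual differences (for example, CNN accuracy 80.33 here versus 80.34 in the table) are within rounding of the two-decimal values shown. The same averaging does not reproduce the "All studies" row, which therefore seems to have been computed differently.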

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Leung, J.-H.; Karmakar, R.; Mukundan, A.; Lin, W.-S.; Anwar, F.; Wang, H.-C. Technological Frontiers in Brain Cancer: A Systematic Review and Meta-Analysis of Hyperspectral Imaging in Computer-Aided Diagnosis Systems. Diagnostics 2024, 14, 1888. https://doi.org/10.3390/diagnostics14171888


Chicago/Turabian Style: Leung, Joseph-Hang, Riya Karmakar, Arvind Mukundan, Wen-Shou Lin, Fathima Anwar, and Hsiang-Chen Wang. 2024. "Technological Frontiers in Brain Cancer: A Systematic Review and Meta-Analysis of Hyperspectral Imaging in Computer-Aided Diagnosis Systems" Diagnostics 14, no. 17: 1888. https://doi.org/10.3390/diagnostics14171888