Profile Photograph Classification Performance of Deep Learning Algorithms Trained Using Cephalometric Measurements: A Preliminary Study

Abstract

1. Introduction

2. Materials and Methods

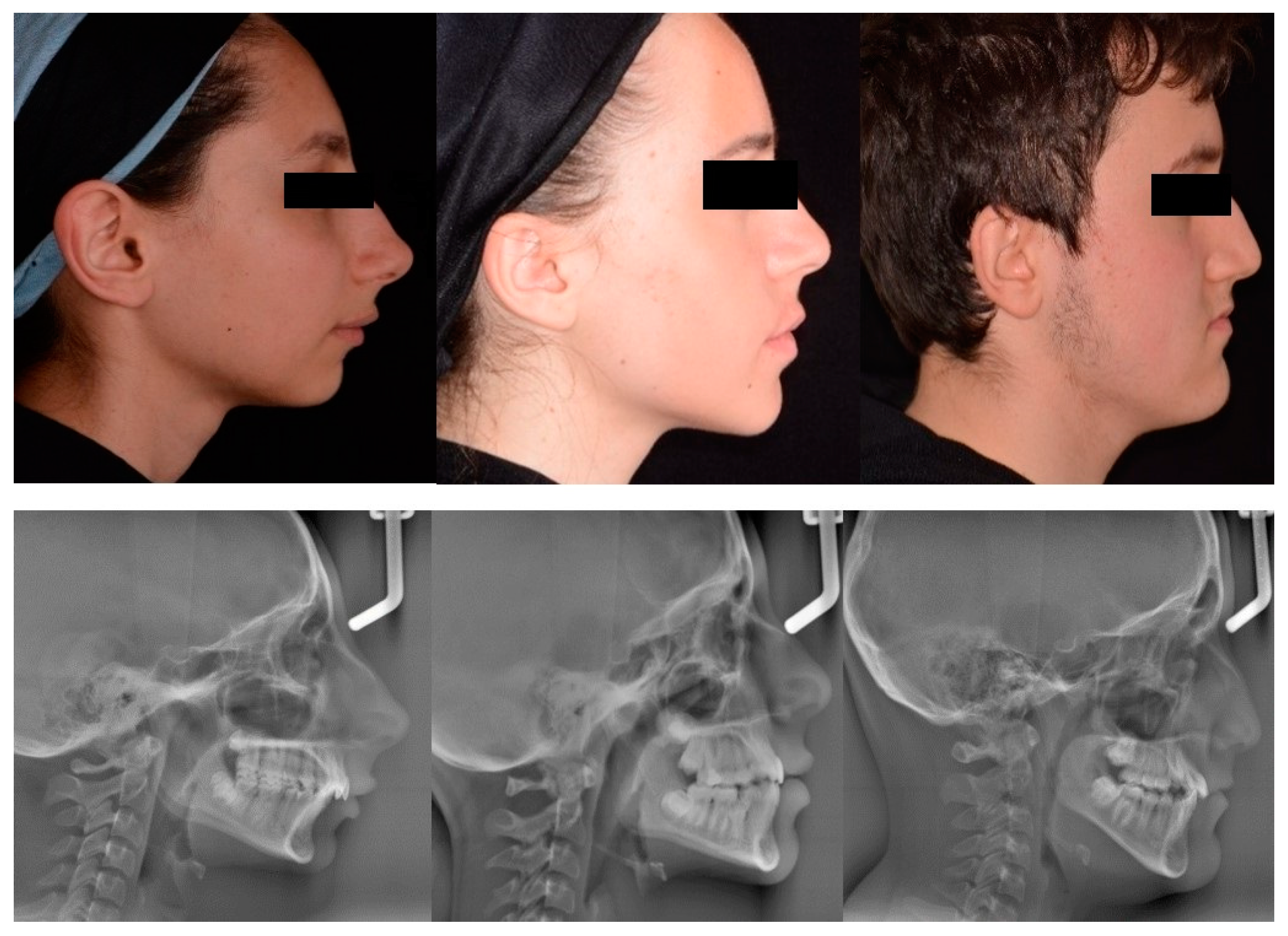

2.1. Study Group Selection

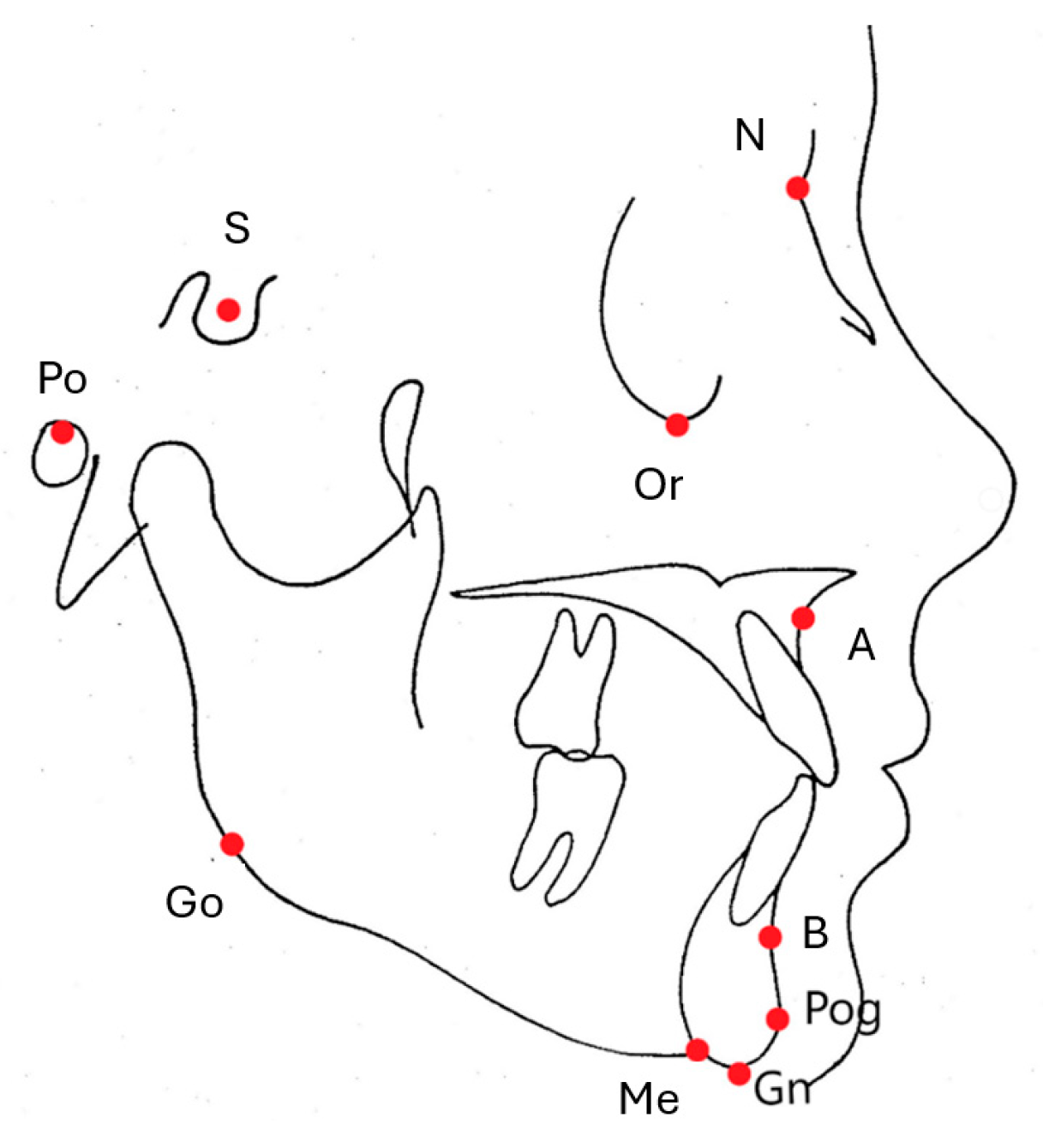

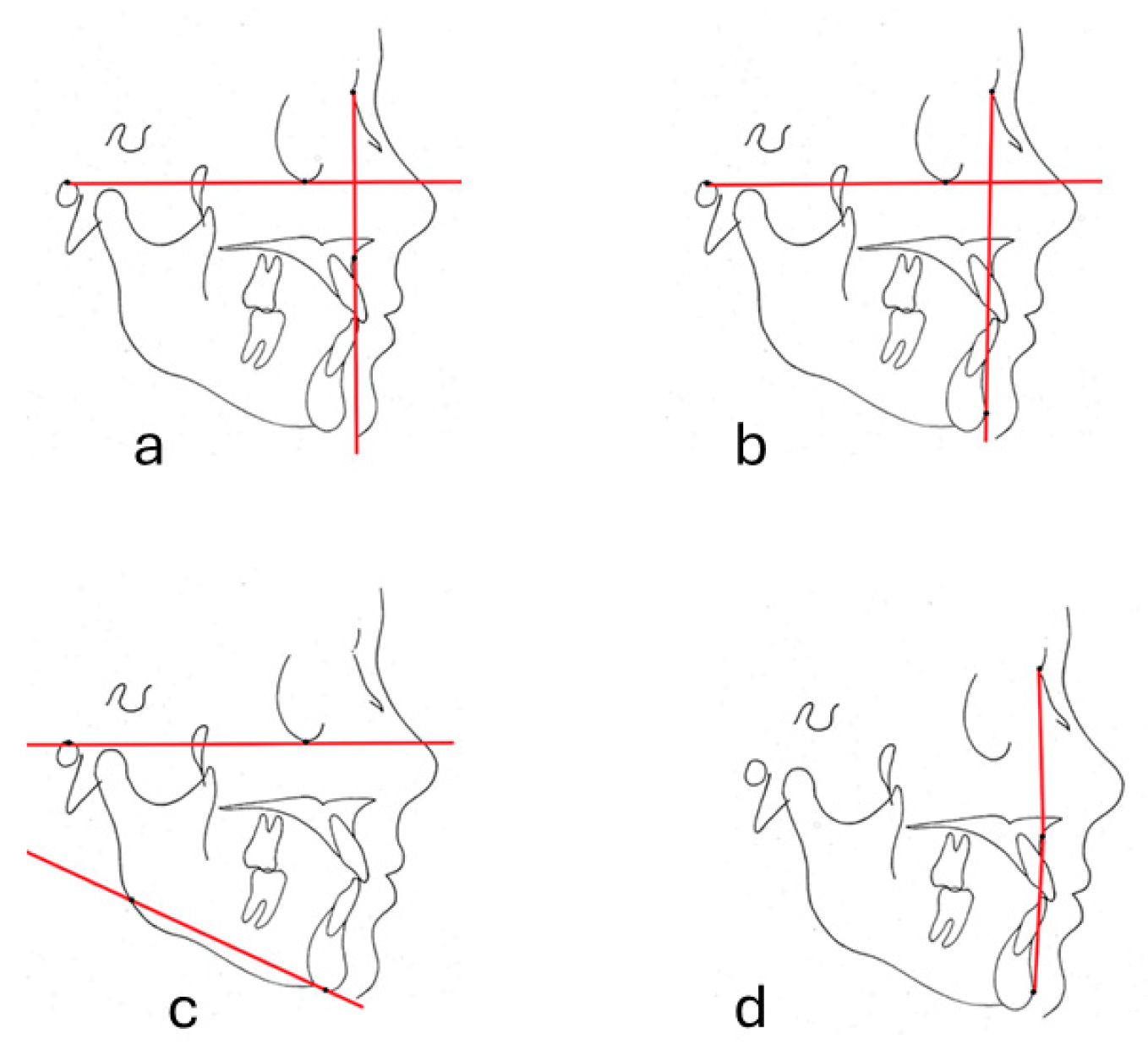

2.2. Cephalometric Measurements
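The measurements used as classification targets (FH-NA, FH-NPog, FMA and N-A-Pog, the column headers of the results table) are angles formed either by two cephalometric lines or by three landmarks. The sketch below shows one way to compute such angles. It assumes 2D landmark coordinates have already been located on the cephalogram. The function names and example coordinates are hypothetical. The line choices follow common textbook definitions, not definitions confirmed by this study: Frankfort horizontal (FH) as porion-orbitale, and a mandibular plane such as gonion-menton. The sketch also returns unsigned angles, whereas some analyses report facial convexity as a signed deviation from 180°.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # hypothetical (x, y) landmark coordinates, e.g. in pixels


def line_orientation(p: Point, q: Point) -> float:
    """Orientation of the line through p and q, folded into [0, 180) degrees."""
    return math.degrees(math.atan2(q[1] - p[1], q[0] - p[0])) % 180.0


def angle_between_lines(p1: Point, p2: Point, q1: Point, q2: Point) -> float:
    """Acute angle (0-90 degrees) between line p1-p2 and line q1-q2.

    Hypothetical use: FH (Po-Or) against N-A, N-Pog, or a mandibular plane (Go-Me).
    """
    diff = abs(line_orientation(p1, p2) - line_orientation(q1, q2))
    return min(diff, 180.0 - diff)


def angle_at_vertex(a: Point, vertex: Point, b: Point) -> float:
    """Unsigned interior angle a-vertex-b in degrees (0-180), e.g. N-A-Pog at point A."""
    v1 = (a[0] - vertex[0], a[1] - vertex[1])
    v2 = (b[0] - vertex[0], b[1] - vertex[1])
    cos_theta = (v1[0] * v2[0] + v1[1] * v2[1]) / (math.hypot(*v1) * math.hypot(*v2))
    # Clamp to [-1, 1] to absorb floating-point rounding before acos
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_theta))))


if __name__ == "__main__":
    # Hypothetical landmark positions, for illustration only
    po, orb = (0.0, 0.0), (10.0, 0.0)
    n, a_pt, pog = (12.0, -2.0), (12.5, 6.0), (11.0, 14.0)
    print("FH-NA:", round(angle_between_lines(po, orb, n, a_pt), 2))
    print("N-A-Pog:", round(angle_at_vertex(n, a_pt, pog), 2))
```

Both functions return unsigned angles. For that reason, switching between y-up and y-down (image) coordinates does not change the result.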

2.3. Profile Photograph Group Formation for DL

2.4. Preparation of Deep Learning Models

- MobileNet V2

- Inception V3

- DenseNet 121

- DenseNet 169

- DenseNet 201

- EfficientNet B0

- Xception

- VGG16

- VGG19

- NASNetMobile

- ResNet 101

- ResNet 152

- ResNet 50

- EfficientNet V2
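All fourteen architectures above are available as ImageNet-pretrained backbones in `tf.keras.applications`. The following is a minimal transfer-learning sketch, not the authors' training pipeline. It assumes TensorFlow 2.x, a 224 × 224 RGB input, and a frozen backbone topped by a new softmax head. The number of classes is left as a parameter because this section does not state it. Several other details are hypothetical choices: the `EfficientNetV2B0` variant (the article says only "EfficientNet V2"), the helper name `build_classifier`, and all hyperparameters. Each backbone also expects its own `preprocess_input` step, which is omitted here.

```python
# Maps the model names used in this article to tf.keras.applications constructor names.
# "EfficientNetV2B0" is an assumed variant; the article does not state which EfficientNet V2 size was used.
KERAS_CONSTRUCTORS = {
    "MobileNet V2": "MobileNetV2",
    "Inception V3": "InceptionV3",
    "DenseNet 121": "DenseNet121",
    "DenseNet 169": "DenseNet169",
    "DenseNet 201": "DenseNet201",
    "EfficientNet B0": "EfficientNetB0",
    "Xception": "Xception",
    "VGG16": "VGG16",
    "VGG19": "VGG19",
    "NASNetMobile": "NASNetMobile",
    "ResNet 101": "ResNet101",
    "ResNet 152": "ResNet152",
    "ResNet 50": "ResNet50",
    "EfficientNet V2": "EfficientNetV2B0",
}


def build_classifier(model_name: str, num_classes: int, input_shape=(224, 224, 3)):
    """Hypothetical helper: frozen ImageNet backbone plus a new softmax head."""
    import tensorflow as tf  # imported here so the name table above works without TensorFlow

    constructor = getattr(tf.keras.applications, KERAS_CONSTRUCTORS[model_name])
    backbone = constructor(
        include_top=False,        # drop the 1000-class ImageNet head
        weights="imagenet",       # pretrained weights, downloaded on first use
        input_shape=input_shape,
        pooling="avg",            # global average pooling -> one feature vector per image
    )
    backbone.trainable = False    # feature extraction only; fine-tuning is not shown
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(backbone.output)
    model = tf.keras.Model(inputs=backbone.input, outputs=outputs)
    model.compile(
        optimizer="adam",
        loss="sparse_categorical_crossentropy",  # assumes integer class labels
        metrics=["accuracy"],
    )
    return model
```

For example, `build_classifier("DenseNet 201", num_classes=3)` would build one candidate model; the value 3 here is only a placeholder. Looking constructors up by name keeps every backbone behind an identical head and compile step, so the architecture is the only variable in a side-by-side comparison.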

3. Results

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Sandler, J.; Murray, A. Digital photography in orthodontics. J. Orthod. 2001, 28, 197–202.

- Sandler, J.; Dwyer, J.; Kokich, V.; McKeown, F.; Murray, A.; McLaughlin, R.; O’Brien, C.; O’Malley, P. Quality of clinical photographs taken by orthodontists, professional photographers, and orthodontic auxiliaries. Am. J. Orthod. Dentofac. Orthop. 2009, 135, 657–662.

- Alam, M.K.; Abutayyem, H.; Alotha, S.N.; Alsiyat, B.M.H.; Alanazi, S.H.K.; Alrayes, M.H.H.; Alrayes, R.H.; Khalaf Alanazi, D.F.; Alswairki, H.J.; Ali Alfawzan, A.; et al. Impact of Portraiture Photography on Orthodontic Treatment: A Systematic Review and Meta-Analysis. Cureus 2023, 15, e48054.

- Monill-González, A.; Rovira-Calatayud, L.; d’Oliveira, N.G.; Ustrell-Torrent, J.M. Artificial intelligence in orthodontics: Where are we now? A scoping review. Orthod. Craniofac. Res. 2021, 24 (Suppl. S2), 6–15.

- Akdeniz, S.; Tosun, M.E. A review of the use of artificial intelligence in orthodontics. J. Exp. Clin. Med. 2021, 38, 157–162.

- Katne, T.; Kanaparthi, A.; Srikanth Gotoor, S.; Muppirala, S.; Devaraju, R.; Gantala, R. Artificial intelligence: Demystifying dentistry—The future and beyond. Int. J. Contemp. Med. Surg. Radiol. 2019, 4, D6–D9.

- Redelmeier, D.A.; Shafir, E. Medical decision making in situations that offer multiple alternatives. J. Am. Med. Assoc. 1995, 273, 302.

- Ryu, J.; Lee, Y.S.; Mo, S.P.; Lim, K.; Jung, S.K.; Kim, T.W. Application of deep learning artificial intelligence technique to the classification of clinical orthodontic photos. BMC Oral Health 2022, 22, 454.

- McCarthy, J. What Is Artificial Intelligence? Available online: https://www-formal.stanford.edu/jmc/whatisai.pdf (accessed on 14 June 2024).

- Lee, J.G.; Jun, S.; Cho, Y.W.; Lee, H.; Kim, G.B.; Seo, J.B.; Kim, N. Deep Learning in Medical Imaging: General Overview. Korean J. Radiol. 2017, 18, 570–584.

- Wan, J.; Wang, D.; Hoi, S.C.H.; Wu, P.; Zhu, J.; Zhang, Y.; Li, J. Deep Learning for Content-Based Image Retrieval: A Comprehensive Study. In Proceedings of the 22nd ACM International Conference on Multimedia, Orlando, FL, USA, 3–7 November 2014; Association for Computing Machinery: New York, NY, USA, 2014; pp. 157–166.

- Chartrand, G.; Cheng, P.M.; Vorontsov, E.; Drozdzal, M.; Turcotte, S.; Pal, C.J.; Kadoury, S.; Tang, A. Deep Learning: A Primer for Radiologists. Radiographics 2017, 37, 2113–2131.

- Schwendicke, F.; Golla, T.; Dreher, M.; Krois, J. Convolutional neural networks for dental image diagnostics: A scoping review. J. Dent. 2019, 91, 103226.

- Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; van der Laak, J.; van Ginneken, B.; Sanchez, C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017, 42, 60–88.

- Hurst, C.A.; Eppley, B.L.; Havlik, R.J.; Sadove, A.M. Surgical cephalometrics: Applications and developments. Plast. Reconstr. Surg. 2007, 120, 92e–104e.

- Durão, A.R.; Pittayapat, P.; Rockenbach, M.I.; Olszewski, R.; Ng, S.; Ferreira, A.P.; Jacobs, R. Validity of 2D lateral cephalometry in orthodontics: A systematic review. Prog. Orthod. 2013, 14, 31.

- Tanriver, G.; Soluk Tekkesin, M.; Ergen, O. Automated Detection and Classification of Oral Lesions Using Deep Learning to Detect Oral Potentially Malignant Disorders. Cancers 2021, 13, 2766.

- Warin, K.; Limprasert, W.; Suebnukarn, S.; Jinaporntham, S.; Jantana, P. Automatic classification and detection of oral cancer in photographic images using deep learning algorithms. J. Oral Pathol. Med. 2021, 50, 911–918.

- Warin, K.; Limprasert, W.; Suebnukarn, S.; Jinaporntham, S.; Jantana, P. Performance of deep convolutional neural network for classification and detection of oral potentially malignant disorders in photographic images. Int. J. Oral Maxillofac. Surg. 2022, 51, 699–704.

- Benyahia, S.; Meftah, B.; Lézoray, O. Multi-features extraction based on deep learning for skin lesion classification. Tissue Cell 2022, 74, 101701.

- Thalakottor, L.A.; Shirwaikar, R.D.; Pothamsetti, P.T.; Mathews, L.M. Classification of Histopathological Images from Breast Cancer Patients Using Deep Learning: A Comparative Analysis. Crit. Rev. Biomed. Eng. 2023, 51, 41–62.

- Meng, M.; Zhang, M.; Shen, D.; He, G. Differentiation of breast lesions on dynamic contrast-enhanced magnetic resonance imaging (DCE-MRI) using deep transfer learning based on DenseNet201. Medicine 2022, 101, e31214.

- Limprasert, W.; Suebnukarn, S.; Jinaporntham, S.; Jantana, P. Orientation-sella-nasion or Frankfort horizontal. Am. J. Orthod. 1976, 69, 648–654.

- Manosudprasit, A.; Haghi, A.; Allareddy, V.; Masoud, M.I. Diagnosis and treatment planning of orthodontic patients with 3-dimensional dentofacial records. Am. J. Orthod. Dentofac. Orthop. 2017, 151, 1083–1091.

- Patel, D.P.; Trivedi, R. Photography versus lateral cephalogram: Role in facial diagnosis. Indian J. Dent. Res. 2013, 24, 587–592.

- Jaiswal, P.; Gandhi, A.; Gupta, A.R.; Malik, N.; Singh, S.K.; Ramesh, K. Reliability of Photogrammetric Landmarks to the Conventional Cephalogram for Analyzing Soft-Tissue Landmarks in Orthodontics. J. Pharm. Bioallied Sci. 2021, 13 (Suppl. S1), S171–S175.

| DL Model | FH-NA (%) | FH-NPog (%) | FMA (%) | N-A-Pog (%) |
|---|---|---|---|---|
| MobileNet V2 | 90.33 | 88.33 | 92.67 | 89.33 |
| Inception V3 | 36.37 | 31.33 | 89.00 | 79.00 |
| DenseNet 121 | 93.00 | 93.67 | 95.00 | 93.00 |
| DenseNet 169 | 91.67 | 93.67 | 92.67 | 95.00 |
| DenseNet 201 | 94.67 | 97.33 * | 97.67 * | 96.00 |
| EfficientNet B0 | 96.67 * | 96.33 | 93.33 | 96.33 |
| Xception | 94.00 | 33.3 | 33.67 | 93.00 |
| VGG16 | 30.67 | 32.33 | 37 | 35.33 |
| VGG19 | 34.33 | 31.33 | 31.33 | 34.67 |
| NASNetMobile | 77.00 | 80.33 | 84.00 | 81.67 |
| ResNet 101 | 83.00 | 34.67 | 34.33 | 64.00 |
| ResNet 152 | 67.67 | 35.33 | 33.37 | 64.33 |
| ResNet 50 | 84.33 | 84.67 | 88.67 | 81.67 |
| EfficientNet V2 | 95.67 | 96.00 | 97.00 | 97.00 * |

\* Highest value in each column.
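The percentage values in the table can be summarized with a few lines of Python. The sketch below copies the table values verbatim, including 36.37 and 33.37 as printed. It then finds the highest-scoring model for each measurement and computes each model's mean over the four measurements. The helper names are hypothetical. The result reproduces the asterisked cells: EfficientNet B0 for FH-NA, DenseNet 201 for FH-NPog and FMA, and EfficientNet V2 for N-A-Pog. Averaged over the four columns, DenseNet 201 and EfficientNet V2 tie at about 96.42%.

```python
from statistics import mean

MEASUREMENTS = ("FH-NA", "FH-NPog", "FMA", "N-A-Pog")

# Percentages copied from the results table, in MEASUREMENTS order
RESULTS = {
    "MobileNet V2": (90.33, 88.33, 92.67, 89.33),
    "Inception V3": (36.37, 31.33, 89.00, 79.00),
    "DenseNet 121": (93.00, 93.67, 95.00, 93.00),
    "DenseNet 169": (91.67, 93.67, 92.67, 95.00),
    "DenseNet 201": (94.67, 97.33, 97.67, 96.00),
    "EfficientNet B0": (96.67, 96.33, 93.33, 96.33),
    "Xception": (94.00, 33.3, 33.67, 93.00),
    "VGG16": (30.67, 32.33, 37.0, 35.33),
    "VGG19": (34.33, 31.33, 31.33, 34.67),
    "NASNetMobile": (77.00, 80.33, 84.00, 81.67),
    "ResNet 101": (83.00, 34.67, 34.33, 64.00),
    "ResNet 152": (67.67, 35.33, 33.37, 64.33),
    "ResNet 50": (84.33, 84.67, 88.67, 81.67),
    "EfficientNet V2": (95.67, 96.00, 97.00, 97.00),
}


def best_per_measurement(results):
    """Return the highest-scoring model name for each measurement column."""
    return {
        m: max(results, key=lambda name: results[name][i])
        for i, m in enumerate(MEASUREMENTS)
    }


def mean_per_model(results):
    """Average percentage across the four measurements, per model."""
    return {name: mean(scores) for name, scores in results.items()}


if __name__ == "__main__":
    for measurement, model in best_per_measurement(RESULTS).items():
        print(f"{measurement}: {model}")
    ranked = sorted(mean_per_model(RESULTS).items(), key=lambda kv: kv[1], reverse=True)
    for name, avg in ranked[:3]:
        print(f"{name}: {avg:.2f}")
```

A mean over the four measurements is only one way to compare the models. It weights every measurement equally and hides the large per-measurement drops seen for Xception and the ResNet 101/152 models.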

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kocakaya, D.N.C.; Özel, M.B.; Kartbak, S.B.A.; Çakmak, M.; Sinanoğlu, E.A. Profile Photograph Classification Performance of Deep Learning Algorithms Trained Using Cephalometric Measurements: A Preliminary Study. Diagnostics 2024, 14, 1916. https://doi.org/10.3390/diagnostics14171916

Kocakaya DNC, Özel MB, Kartbak SBA, Çakmak M, Sinanoğlu EA. Profile Photograph Classification Performance of Deep Learning Algorithms Trained Using Cephalometric Measurements: A Preliminary Study. Diagnostics. 2024; 14(17):1916. https://doi.org/10.3390/diagnostics14171916

Chicago/Turabian Style: Kocakaya, Duygu Nur Cesur, Mehmet Birol Özel, Sultan Büşra Ay Kartbak, Muhammet Çakmak, and Enver Alper Sinanoğlu. 2024. "Profile Photograph Classification Performance of Deep Learning Algorithms Trained Using Cephalometric Measurements: A Preliminary Study" Diagnostics 14, no. 17: 1916. https://doi.org/10.3390/diagnostics14171916

APA Style: Kocakaya, D. N. C., Özel, M. B., Kartbak, S. B. A., Çakmak, M., & Sinanoğlu, E. A. (2024). Profile Photograph Classification Performance of Deep Learning Algorithms Trained Using Cephalometric Measurements: A Preliminary Study. Diagnostics, 14(17), 1916. https://doi.org/10.3390/diagnostics14171916