Automatic Pest Counting from Pheromone Trap Images Using Deep Learning Object Detectors for Matsucoccus thunbergianae Monitoring

Simple Summary

Abstract

1. Introduction

2. Materials and Methods

2.1. Data Collection

2.1.1. Chemicals

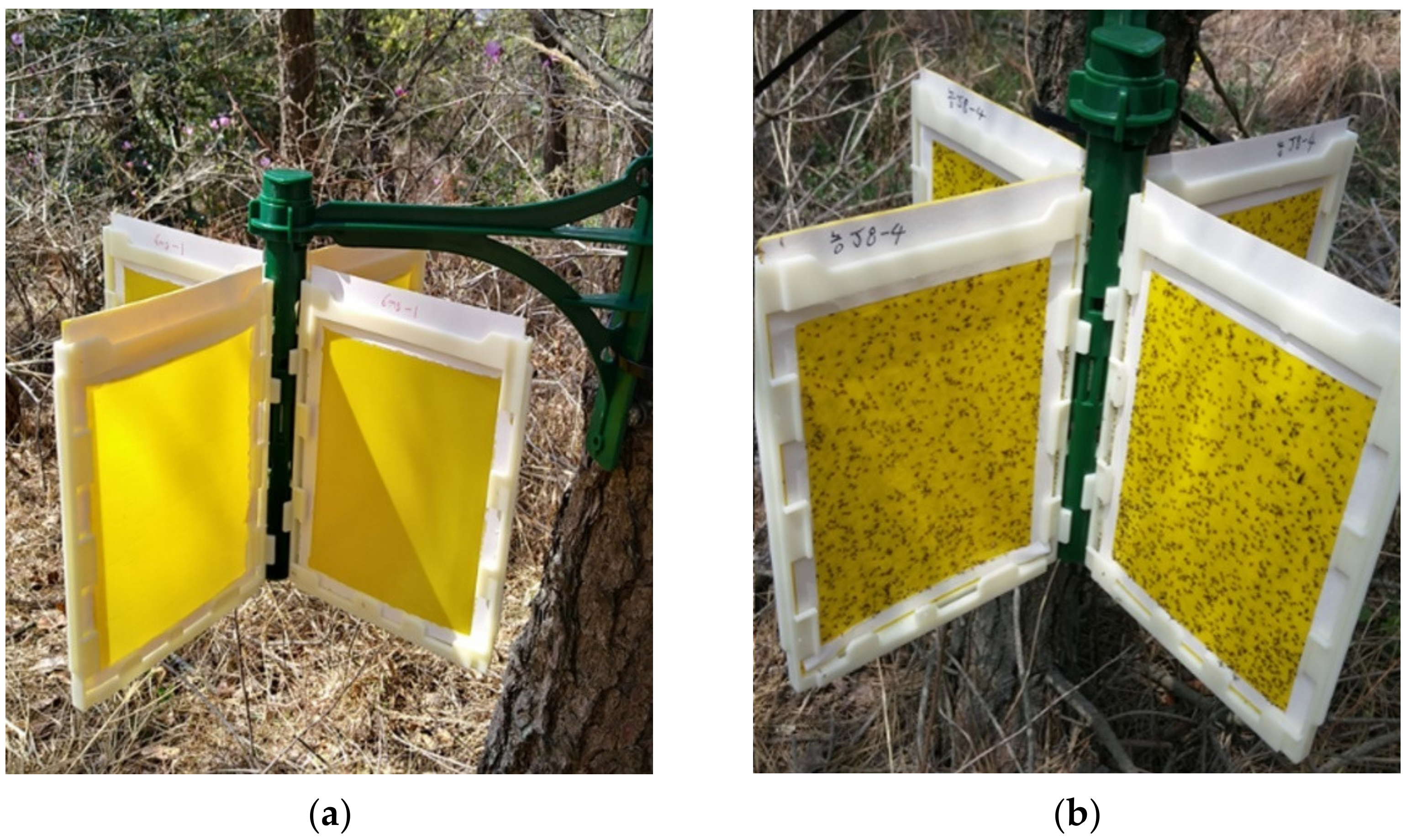

2.1.2. Trap Collection

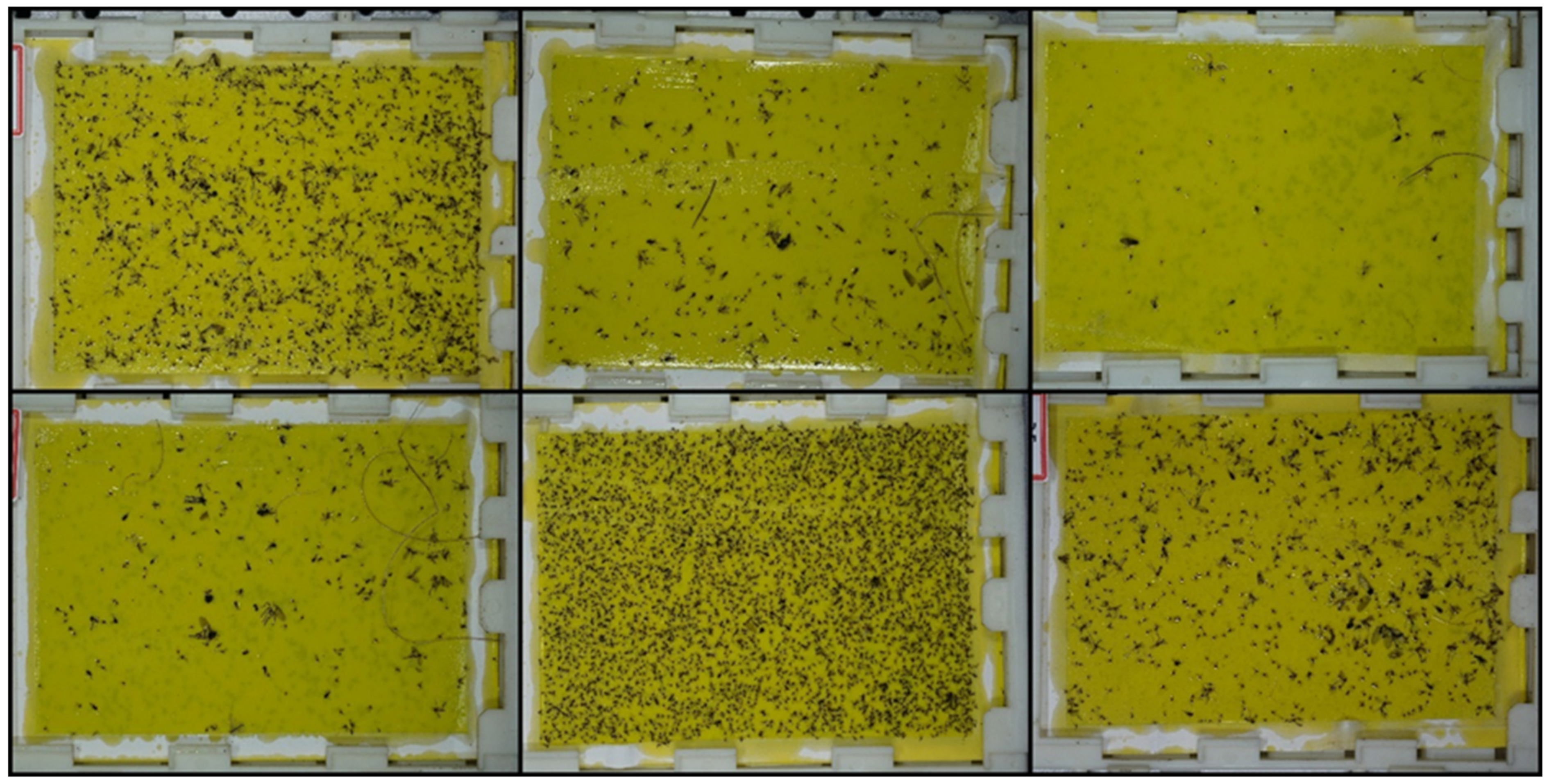

2.1.3. Image Acquisition

2.2. Data Preparation

2.3. Detector Training and Evaluation

2.4. Counting Accuracy Evaluation

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Conflicts of Interest

| | Train Set | Validation Set | Test Set |
|---|---|---|---|
| Images | 30 | 10 | 10 |
| M. thunbergianae | 13,419 | 5071 | 4566 |
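
As a quick arithmetic check on the split above, the following minimal sketch computes the average number of annotated M. thunbergianae per image in each subset. The dictionary layout and the function name `mean_per_image` are illustrative assumptions; only the counts come from the table.

```python
# Counts copied from the dataset table; the structure itself is hypothetical.
SPLITS = {
    "train": {"images": 30, "insects": 13419},
    "validation": {"images": 10, "insects": 5071},
    "test": {"images": 10, "insects": 4566},
}


def mean_per_image(split):
    """Average number of annotated insects per trap image in one subset."""
    return split["insects"] / split["images"]


def image_share(name):
    """Fraction of all images that belong to the named subset."""
    total_images = sum(s["images"] for s in SPLITS.values())
    return SPLITS[name]["images"] / total_images


if __name__ == "__main__":
    for name, split in SPLITS.items():
        print(f"{name}: {mean_per_image(split):.1f} insects per image, "
              f"{image_share(name):.0%} of images")
```

Each image carries several hundred individuals on average, which is why detection of small, densely packed objects matters for this dataset.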

| Model | Input Size | Inference Time (ms) | AP (%), IoU 0.3 | AP (%), IoU 0.5 |
|---|---|---|---|---|
| Faster R-CNN Resnet 101 | 1024 | 78.26 | 89.78 | 85.63 |
| Faster R-CNN Resnet 101 | 512 | 39.64 | 89.58 | 84.32 |
| EfficientDet D4 | 1024 | 86.74 | 89.26 | 84.79 |
| EfficientDet D0 | 512 | 25.58 | 88.36 | 83.79 |
| Retinanet 50 | 1024 | 30.97 | 89.35 | 84.40 |
| Retinanet 50 | 640 | 20.56 | 89.86 | 86.40 |
| SSD Mobilenet v.2 | 640 | 15.28 | 89.02 | 84.76 |
| SSD Mobilenet v.2 | 320 | 11.82 | 89.46 | 84.54 |
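
The AP columns above are reported at two IoU (intersection over union) thresholds. As a minimal sketch of what that threshold controls, the code below computes IoU for two axis-aligned boxes and checks whether a prediction would count as a match. The box format `(x_min, y_min, x_max, y_max)` and the function names are assumptions for illustration, not taken from the paper.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x_min, y_min, x_max, y_max)."""
    # Corners of the overlapping rectangle, if any.
    ix_min = max(a[0], b[0])
    iy_min = max(a[1], b[1])
    ix_max = min(a[2], b[2])
    iy_max = min(a[3], b[3])

    # Clamp to zero when the boxes do not overlap.
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)

    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_match(pred_box, truth_box, iou_threshold):
    """True when the prediction overlaps the ground truth enough to count."""
    return box_iou(pred_box, truth_box) >= iou_threshold


if __name__ == "__main__":
    truth = (0, 0, 10, 10)
    pred = (5, 0, 15, 10)  # same size, shifted by half a box width
    print(box_iou(truth, pred))
    print(is_match(pred, truth, 0.3), is_match(pred, truth, 0.5))
```

In this example a prediction shifted by half a box width has an IoU of 1/3, so it is accepted at the 0.3 threshold and rejected at 0.5. For very small objects a shift of a few pixels has the same effect, which is consistent with the lower AP values in the IoU 0.5 column.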

| Model | Input Size | Inference Time (ms) | AP (%), IoU 0.3 | AP (%), IoU 0.5 |
|---|---|---|---|---|
| Faster R-CNN Resnet 101 | 1024 | 79.58 | 87.13 | 82.92 |
| Faster R-CNN Resnet 101 | 512 | 41.48 | 85.04 | 80.18 |
| EfficientDet D4 | 1024 | 90.33 | 84.87 | 81.22 |
| EfficientDet D0 | 512 | 26.12 | 85.30 | 80.21 |
| Retinanet 50 | 1024 | 33.52 | 86.58 | 82.62 |
| Retinanet 50 | 640 | 21.85 | 85.33 | 81.71 |
| SSD Mobilenet v.2 | 640 | 16.83 | 85.75 | 81.35 |
| SSD Mobilenet v.2 | 320 | 12.22 | 79.87 | 72.05 |

| Model | Input Size | Counting Time (s) | Counting Error (%) |
|---|---|---|---|
| Faster R-CNN Resnet 101 | 1024 | 14.14 | 2.11 |
| Faster R-CNN Resnet 101 | 512 | 9.17 | 3.69 |
| EfficientDet | 1024 | 14.44 | 3.37 |
| EfficientDet | 512 | 5.29 | 3.42 |
| Retinanet 50 | 1024 | 6.58 | 3.30 |
| Retinanet 50 | 640 | 4.78 | 2.95 |
| SSD Mobilenet v.2 | 640 | 3.81 | 2.32 |
| SSD Mobilenet v.2 | 320 | 3.63 | 3.32 |
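
The counting error column can be read with the following minimal sketch. It assumes that a count is obtained by keeping detections above a confidence threshold and that the error is the absolute difference between predicted and manual counts, relative to the manual count. Both the definition and the names `count_detections`, `counting_error_percent`, and `score_threshold` are assumptions for illustration; this section does not state the formula the authors used.

```python
def count_detections(scores, score_threshold=0.5):
    """Number of detections whose confidence reaches the threshold.

    The 0.5 default is an arbitrary placeholder, not a value from the paper.
    """
    return sum(1 for s in scores if s >= score_threshold)


def counting_error_percent(predicted_count, manual_count):
    """Absolute counting error relative to the manual count, in percent.

    Assumed definition: |predicted - manual| / manual * 100.
    """
    if manual_count <= 0:
        raise ValueError("manual_count must be positive")
    return abs(predicted_count - manual_count) / manual_count * 100.0


if __name__ == "__main__":
    # Hypothetical image: 456 insects counted by hand, 447 detected.
    print(f"{counting_error_percent(447, 456):.2f} % counting error")
```

Under this definition, over-counting and under-counting are penalised equally, so a low error does not by itself show that every individual detection is correct.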

| Model | Input Size | Counting Time (s) | Counting Error (%) |
|---|---|---|---|
| Faster R-CNN Resnet 101 | 1024 | 3.90 | 3.95 |
| Faster R-CNN Resnet 101 | 512 | 2.50 | 4.02 |
| EfficientDet | 1024 | 3.92 | 4.70 |
| EfficientDet | 512 | 1.68 | 4.21 |
| Retinanet 50 | 1024 | 1.88 | 3.83 |
| Retinanet 50 | 640 | 1.45 | 3.74 |
| SSD Mobilenet v.2 | 640 | 1.40 | 3.65 |
| SSD Mobilenet v.2 | 320 | 1.19 | 6.69 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hong, S.-J.; Nam, I.; Kim, S.-Y.; Kim, E.; Lee, C.-H.; Ahn, S.; Park, I.-K.; Kim, G. Automatic Pest Counting from Pheromone Trap Images Using Deep Learning Object Detectors for Matsucoccus thunbergianae Monitoring. Insects 2021, 12, 342. https://doi.org/10.3390/insects12040342
