Ultraviolet Radiation Transmission in Building’s Fenestration: Part II, Exploring Digital Imaging, UV Photography, Image Processing, and Computer Vision Techniques

Abstract

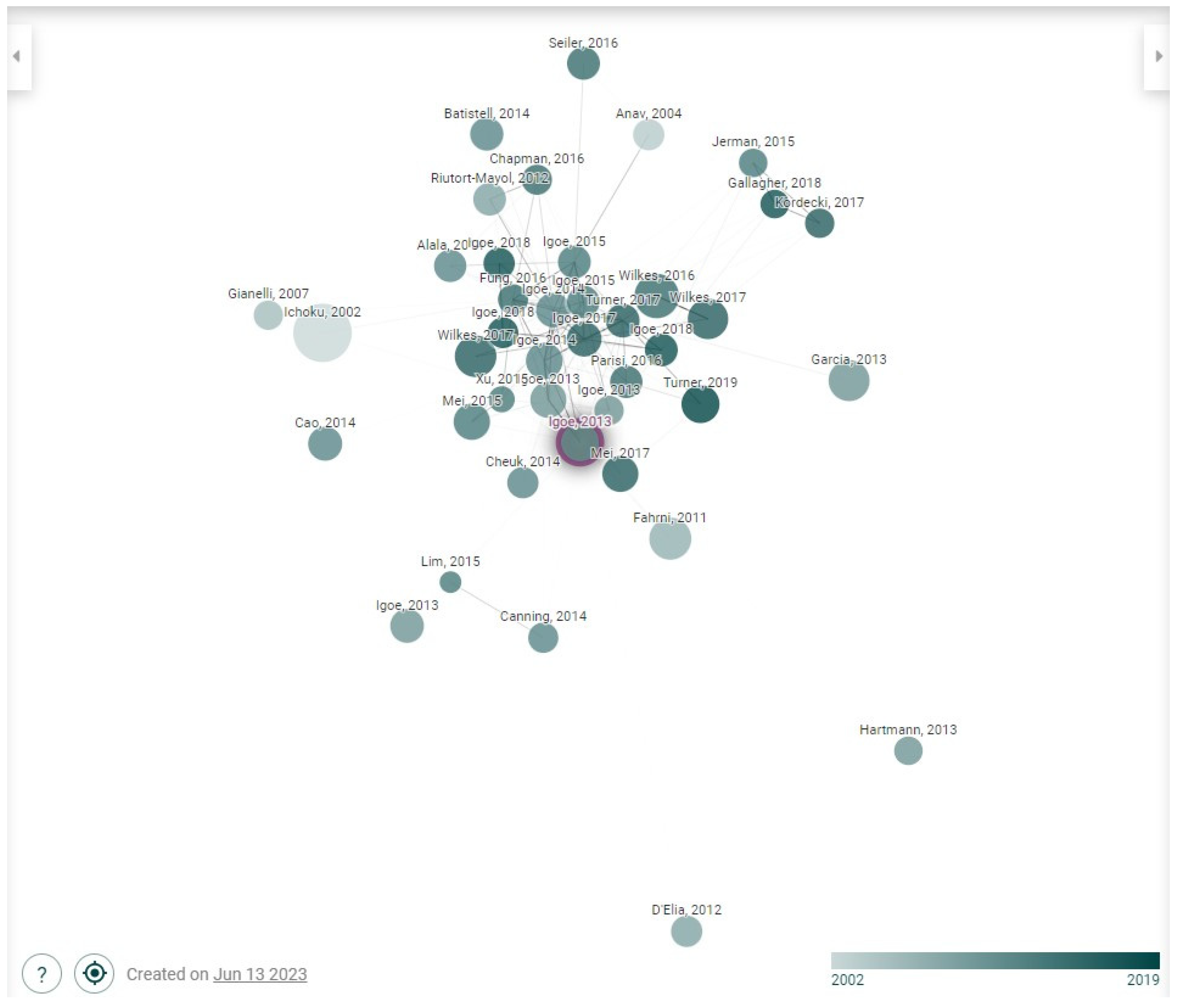

1. Introduction

- Explore digital imaging, the ultraviolet (UV) image capture process, and its enabling technologies.

- Identify current applications of UV radiation detection using digital cameras.

- Discuss the computer vision process and how image analysis can be integrated to improve raw data, enhance visual inspection, and enable more reliable measurements and assessments.

- Review existing pixel transformation equations and the mathematical relationships used to convert image data.

2. Methodology

3. Digital Imaging and UV Photography

3.1. Digital Image Acquisition

3.2. UV Imaging

4. Status of UV Radiation Detection Using Cameras

5. Image Analysis

5.1. Image Preprocessing and Digital Processing: A Comprehensive Examination

Image De-Noising
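Median filtering is one widely used de-noising technique for impulsive noise such as hot pixels; median filters have, for example, been used to set dark-noise thresholds for high-resolution smartphone image sensors [24]. The sketch below is a minimal, pure-Python illustration of a 3 × 3 median filter, not a procedure taken from the cited studies. The function name `median_filter`, the truncated-window border policy, and the sample dark-frame patch are hypothetical; optimised library routines (for example in OpenCV or SciPy) apply their own border handling.

```python
from statistics import median


def median_filter(image, radius=1):
    """Return a median-filtered copy of a 2D grayscale image (list of rows).

    Each output pixel is the median of the (2*radius+1) x (2*radius+1)
    window centred on it; windows are truncated at the image border,
    so edge pixels use fewer neighbours.
    """
    rows, cols = len(image), len(image[0])
    out = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            window = [
                image[rr][cc]
                for rr in range(max(0, r - radius), min(rows, r + radius + 1))
                for cc in range(max(0, c - radius), min(cols, c + radius + 1))
            ]
            out[r][c] = median(window)
    return out


# Hypothetical 3 x 3 patch of a dark frame containing one hot pixel (255).
dark_patch = [
    [10, 11, 10],
    [12, 255, 11],
    [10, 10, 12],
]
filtered = median_filter(dark_patch)
print(filtered[1][1])   # prints 11
```

The isolated value of 255 is replaced by the neighbourhood median, whereas a mean filter would spread part of it into the surrounding pixels.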

5.2. Analyzing UV Images: Computational Tools, Adaptive Thresholding, and Programming Libraries
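Adaptive (local) thresholding compares each pixel with statistics of its own neighbourhood rather than with a single global value, which can help when illumination varies across a UV image. The sketch below assumes a local-mean rule: a pixel is marked as foreground when it exceeds the mean of its surrounding window by more than a hypothetical `margin`. The function name, its parameters, and the synthetic image (a left-to-right brightness ramp with two small bright spots) are illustrative assumptions. OpenCV's `cv2.adaptiveThreshold` with `ADAPTIVE_THRESH_MEAN_C` follows a similar mean-based idea, with its own border handling and sign convention for the offset.

```python
def adaptive_mean_threshold(image, radius=1, margin=10.0):
    """Binarise a 2D grayscale image (list of rows) with a local-mean rule.

    A pixel is set to 1 when its value exceeds the mean of the
    (2*radius+1) x (2*radius+1) window centred on it by more than
    `margin`; otherwise it is set to 0. Windows are truncated at the
    image border.
    """
    rows, cols = len(image), len(image[0])
    mask = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            window = [
                image[rr][cc]
                for rr in range(max(0, r - radius), min(rows, r + radius + 1))
                for cc in range(max(0, c - radius), min(cols, c + radius + 1))
            ]
            local_mean = sum(window) / len(window)
            mask[r][c] = 1 if image[r][c] > local_mean + margin else 0
    return mask


# Synthetic image: brightness ramps from left to right (uneven illumination),
# with one small bright spot on the dim side and one on the bright side.
image = [
    [20, 40, 60, 80, 100, 120],
    [20, 70, 60, 80, 130, 120],
    [20, 40, 60, 80, 100, 120],
]
mask = adaptive_mean_threshold(image)
for row in mask:
    print(row)   # only the spots at (1, 1) and (1, 4) are set to 1
```

By contrast, a single global threshold of 65 would flag the left spot but also every pixel in the three brightest columns, so the right spot could not be separated from its background.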

5.3. Expanding the Boundaries of Image Analysis: An Exploration of Computer Vision Techniques

5.4. Image Segmentation Overview

| Technique | Advantages | Disadvantages | References | New Proposed Technique |
|---|---|---|---|---|
| Histogram-based thresholding | Simple and fast | Sensitive to noise and illumination changes | [127,128] | Otsu thresholding [129] |
| K-means segmentation | Easy to implement and interpret; produces tighter clusters than hierarchical methods | Requires prior knowledge of the number of clusters and the initial centroids | [130] | Fuzzy C-means (FCM) with advanced optimization techniques [129] |
| Watershed segmentation | Effective for separating touching objects | Prone to over-segmentation and sensitive to noise | [128] | Marker-controlled watershed segmentation [129] |
| Neural network approaches | High accuracy and flexibility | Require large amounts of training data and computational resources | [128,130] | Deep learning techniques [129] |
| Region-based segmentation | Robust to noise and intensity variations | May fail to detect boundaries or may merge regions incorrectly | [130] | Hemitropic region-growing algorithm [129] |
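Otsu thresholding, listed in the table as the proposed refinement of histogram-based thresholding, chooses the grey level that maximises the between-class variance of the two pixel groups it creates. A minimal pure-Python sketch for 8-bit values follows; the function name and the synthetic pixel sample (a dark cluster standing in for UV-blocked regions and a bright cluster for unobstructed regions) are hypothetical. Library implementations such as scikit-image's `threshold_otsu` are optimised and may report a different value when several thresholds tie.

```python
def otsu_threshold(pixels, levels=256):
    """Return the Otsu threshold for integer pixel values in [0, levels).

    Pixels <= threshold form one class and pixels > threshold the other.
    The threshold maximises the between-class variance, computed here
    (up to a constant factor) as w0 * w1 * (mu0 - mu1) ** 2, where w are
    class pixel counts and mu are class mean intensities.
    """
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0     # running pixel count of the lower class
    sum0 = 0   # running intensity sum of the lower class
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue                 # one class is empty: no valid split
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        between = w0 * w1 * (mu0 - mu1) ** 2
        if between > best_var:       # strict '>' keeps the first maximum
            best_t, best_var = t, between
    return best_t


# Hypothetical 8-bit sample: a dark cluster and a bright cluster.
pixels = [30] * 50 + [31] * 50 + [200] * 40 + [210] * 60
t = otsu_threshold(pixels)
foreground = [p > t for p in pixels]
print(t, sum(foreground))   # prints 31 100
```

Every threshold between the two clusters yields the same split, so the between-class variance is flat across that gap; this version keeps the first such value.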

6. Existing Pixel Transformation Equations
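One widely documented pixel transformation for 8-bit JPEG images is the inverse of the sRGB transfer function (IEC 61966-2-1), which converts gamma-encoded values into linear-light values before they are compared with radiometric data; linearisation of RGB camera responses for quantitative visible and UV photography is examined in [59]. The sketch below assumes that the camera applied the standard sRGB curve, which consumer cameras often follow only approximately, so a measured response curve may be needed in practice. The function names are hypothetical, and the Rec. 709 luminance weights are included only to show how linearised channels can be combined.

```python
def srgb_to_linear(value_8bit):
    """Invert the sRGB transfer function for one 8-bit channel value (0-255).

    Returns a linear-light value in [0, 1].
    """
    c = value_8bit / 255.0
    if c <= 0.04045:
        return c / 12.92                     # linear segment near black
    return ((c + 0.055) / 1.055) ** 2.4      # power-law segment


def relative_luminance(r, g, b):
    """Combine linearised channels with Rec. 709 / sRGB luminance weights."""
    return (0.2126 * srgb_to_linear(r)
            + 0.7152 * srgb_to_linear(g)
            + 0.0722 * srgb_to_linear(b))


for v in (0, 64, 128, 255):
    print(v, round(srgb_to_linear(v), 4))
print(round(relative_luminance(255, 255, 255), 4))
```

The mapping is strongly non-linear: a mid-scale value of 128 corresponds to only about 22% of full-scale linear light, which is why averaging or regressing raw gamma-encoded values can bias quantitative results.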

7. Discussion

- Cost-effective and accessible UV detection: Digital imaging methods, particularly camera-based ones, have made UV detection more cost-effective and accessible [22,28]. High-resolution cameras can measure UV radiation and show potential for assessing fenestration glazing, reducing the reliance on expensive spectrophotometers or radiometers.

- Non-invasive and real-time analysis: Digital imaging techniques offer a non-invasive means of analysis and allow images to be assessed in real time [36,108]. Because the approach is non-destructive, it supports more efficient monitoring and decision-making without damaging the glazing materials, and it makes larger measurement sample sizes practical [37].

- Advanced image processing techniques: Further development of image processing algorithms is expected to improve the accuracy and precision of UV radiation detection [121,133,142]. Techniques such as segmentation and pixel transformation equations offer better insight into the complex relationship between pixel values and UV radiation [2,139].
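Relating pixel values to UV radiation typically involves calibration against a reference instrument. The sketch below assumes the simplest case: a linear relationship between dark-frame-corrected mean pixel values and irradiance recorded by a reference radiometer, fitted by ordinary least squares and then applied to a new image. All numbers, including the dark-frame mean, are hypothetical, and the linear form is itself an assumption; camera UV responses can be non-linear, in which case a different functional form would need to be fitted.

```python
def fit_linear_calibration(pixels, irradiance):
    """Ordinary least-squares fit of irradiance = slope * pixel + intercept."""
    n = len(pixels)
    mean_x = sum(pixels) / n
    mean_y = sum(irradiance) / n
    sxx = sum((x - mean_x) ** 2 for x in pixels)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(pixels, irradiance))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def r_squared(pixels, irradiance, slope, intercept):
    """Coefficient of determination of the fitted line."""
    mean_y = sum(irradiance) / len(irradiance)
    ss_res = sum((y - (slope * x + intercept)) ** 2
                 for x, y in zip(pixels, irradiance))
    ss_tot = sum((y - mean_y) ** 2 for y in irradiance)
    return 1.0 - ss_res / ss_tot


# Hypothetical calibration data: raw mean pixel values of UV images and the
# irradiance (W/m^2) recorded by a reference radiometer at the same times.
DARK_MEAN = 5.0                                  # hypothetical dark-frame mean
raw_means = [25.0, 45.0, 66.0, 84.0]
reference_irradiance = [1.0, 2.0, 3.0, 4.0]

corrected = [p - DARK_MEAN for p in raw_means]   # remove the dark offset
slope, intercept = fit_linear_calibration(corrected, reference_irradiance)
fit_quality = r_squared(corrected, reference_irradiance, slope, intercept)

# Apply the calibration to a new (hypothetical) image with raw mean 55.
estimate = slope * (55.0 - DARK_MEAN) + intercept
print(f"slope={slope:.4f}, intercept={intercept:.4f}, R^2={fit_quality:.4f}")
print(f"estimated irradiance: {estimate:.2f} W/m^2")
```

Reporting R² alongside the fitted coefficients gives a quick check on whether the assumed linear form is adequate for a given camera and filter combination.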

8. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Tuchinda, C.; Srivannaboon, S.; Lim, H.W. Photoprotection by Window Glass, Automobile Glass, and Sunglasses. J. Am. Acad. Dermatol. 2006, 54, 845–854.

- Dawes, A.J.; Igoe, D.P.; Rummenie, K.J.; Parisi, A.V. Glass Transmitted Solar Irradiances on Horizontal and Sun-Normal Planes Evaluated with a Smartphone Camera. Measurement 2020, 153, 107410.

- Parisi, A.V.; Sabburg, J.; Kimlin, M.G. Scattered and Filtered Solar UV Measurements; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2004; Volume 17.

- Starzyńska-Grześ, M.B.; Roussel, R.; Jacoby, S.; Asadipour, A. Computer Vision-Based Analysis of Buildings and Built Environments: A Systematic Review of Current Approaches. ACM Comput. Surv. 2022, 55, 284.

- Almutawa, F.; Buabbas, H. Photoprotection: Clothing and Glass. Dermatol. Clin. 2014, 32, 439–448.

- Duarte, I.; Rotter, A.; Malvestiti, A.; Silva, M. The Role of Glass as a Barrier against the Transmission of Ultraviolet Radiation: An Experimental Study. Photodermatol. Photoimmunol. Photomed. 2009, 25, 181–184.

- Reule, A.G. Errors in Spectrophotometry and Calibration Procedures to Avoid Them. J. Res. Natl. Bur. Stand A Phys. Chem. 1976, 80A, 609.

- Heo, S.; Hwang, H.S.; Jeong, Y.; Na, K. Skin Protection Efficacy from UV Irradiation and Skin Penetration Property of Polysaccharide-Benzophenone Conjugates as a Sunscreen Agent. Carbohydr. Polym. 2018, 195, 534–541.

- Diffey, B.L. Ultraviolet Radiation Physics and the Skin. Phys. Med. Biol. 1980, 25, 405–426.

- Inanici, M.N. Evaluation of High Dynamic Range Photography as a Luminance Data Acquisition System. Light. Res. Technol. 2006, 38, 123–136.

- Prabaharan, T.; Periasamy, P.; Mugendiran, V. Studies on Application of Image Processing in Various Fields: An Overview. IOP Conf. Ser. Mater. Sci. Eng. 2020, 961, 012006.

- Valença, J.; Puente, I.; Júlio, E.; González-Jorge, H.; Arias-Sánchez, P. Assessment of Cracks on Concrete Bridges Using Image Processing Supported by Laser Scanning Survey. Constr. Build. Mater. 2017, 146, 668–678.

- Ren, Z.; Fang, F.; Yan, N.; Wu, Y. State of the Art in Defect Detection Based on Machine Vision. Int. J. Precis. Eng. Manuf.-Green Technol. 2022, 9, 661–691.

- Giger, M.L.; Karssemeijer, N.; Armato, S.G. Computer-Aided Diagnosis in Medical Imaging. IEEE Trans. Med. Imaging 2001, 20, 1205–1207.

- Farhang, S.H.; Rezaifar, O.; Sharbatdar, M.K.; Ahmadyfard, A. Evaluation of Different Methods of Machine Vision in Health Monitoring and Damage Detection of Structures. J. Rehabil. Civ. Eng. 2021, 9, 93–132.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep Learning. Nature 2015, 521, 436–444.

- Liu, H.G.; Chen, Y.P.; Peng, X.Q.; Xie, J.M. A Classification Method of Glass Defect Based on Multiresolution and Information Fusion. Int. J. Adv. Manuf. Technol. 2011, 56, 1079–1090.

- Peng, X.; Chen, Y.; Yu, W.; Zhou, Z.; Sun, G. An Online Defects Inspection Method for Float Glass Fabrication Based on Machine Vision. Int. J. Adv. Manuf. Technol. 2008, 39, 1180–1189.

- Yang, J.; Wang, W.; Lin, G.; Li, Q.; Sun, Y.; Sun, Y. Infrared Thermal Imaging-Based Crack Detection Using Deep Learning. IEEE Access 2019, 7, 182060–182077.

- Igoe, D.; Parisi, A.V. Evaluation of a Smartphone Sensor to Broadband and Narrowband Ultraviolet A Radiation. Instrum. Sci. Technol. 2015, 43, 283–289.

- Igoe, D.; Parisi, A.; Carter, B. Characterization of a Smartphone Camera’s Response to Ultraviolet A Radiation. Photochem. Photobiol. 2013, 89, 215–218.

- Turner, J.; Parisi, A.V.; Igoe, D.P.; Amar, A. Detection of Ultraviolet B Radiation with Internal Smartphone Sensors. Instrum. Sci. Technol. 2017, 45, 618–638.

- Igoe, D.P.; Parisi, A.; Carter, B. Smartphone Based Android App for Determining UVA Aerosol Optical Depth and Direct Solar Irradiances. Photochem. Photobiol. 2014, 90, 233–237.

- Igoe, D.P.; Parisi, A.V.; Amar, A.; Rummenie, K.J. Median Filters as a Tool to Determine Dark Noise Thresholds in High Resolution Smartphone Image Sensors for Scientific Imaging. Rev. Sci. Instrum. 2018, 89, 015003.

- Fung, C.H.; Wong, M.S. Improved Mobile Application for Measuring Aerosol Optical Thickness in the Ultraviolet-A Wavelength. IEEE Sens. J. 2016, 16, 2055–2059.

- Tetley, C.; Young, S. Digital Infrared and Ultraviolet Imaging Part 2: Ultraviolet. J. Vis. Commun. Med. 2008, 31, 51–60.

- Davies, A. Digital Ultraviolet and Infrared Photography; Taylor & Francis: Abingdon, UK, 2017.

- Mei, B.; Li, R.; Cheng, W.; Yu, J.; Cheng, X. Ultraviolet Radiation Measurement via Smart Devices. IEEE Internet Things J. 2017, 4, 934–944.

- Prutchi, D. Exploring Ultraviolet Photography: Bee Vision, Forensic Imaging, and Other Near-Ultraviolet Adventures with Your DSLR; Amherst Media: Amherst, MA, USA, 2016; p. 127.

- Cucci, C.; Pillay, R.; Herkommer, A.; Crowther, J. Ultraviolet Fluorescence Photography—Choosing the Correct Filters for Imaging. J. Imaging 2022, 8, 162.

- Chapman, G.H.; Thomas, R.; Thomas, R.; Koren, I.; Koren, Z. Improved Correction for Hot Pixels in Digital Imagers. In Proceedings of the 2014 IEEE International Symposium on Defect and Fault Tolerance in VLSI and Nanotechnology Systems (DFT), Amsterdam, The Netherlands, 1–3 October 2014; pp. 116–121.

- Cheng, Y.; Fang, C.; Yuan, J.; Zhu, L. Design and Application of a Smart Lighting System Based on Distributed Wireless Sensor Networks. Appl. Sci. 2020, 10, 8545.

- Bigas, M.; Cabruja, E.; Forest, J.; Salvi, J. Review of CMOS Image Sensors. Microelectron. J. 2006, 37, 433–451.

- Al-Mallahi, A.; Kataoka, T.; Okamoto, H.; Shibata, Y. Detection of Potato Tubers Using an Ultraviolet Imaging-Based Machine Vision System. Biosyst. Eng. 2010, 105, 257–265.

- Maharlooei, M.; Sivarajan, S.; Bajwa, S.G.; Harmon, J.P.; Nowatzki, J. Detection of Soybean Aphids in a Greenhouse Using an Image Processing Technique. Comput. Electron. Agric. 2017, 132, 63–70.

- Ramil, A.; López, A.J.; Pozo-Antonio, J.S.; Rivas, T. A Computer Vision System for Identification of Granite-Forming Minerals Based on RGB Data and Artificial Neural Networks. Measurement 2018, 117, 90–95.

- Pedreschi, F.; León, J.; Mery, D.; Moyano, P. Development of a Computer Vision System to Measure the Color of Potato Chips. Food Res. Int. 2006, 39, 1092–1098.

- Sena, D.G.; Pinto, F.A.C.; Queiroz, D.M.; Viana, P.A. Fall Armyworm Damaged Maize Plant Identification Using Digital Images. Biosyst. Eng. 2003, 85, 449–454.

- Dyer, J.; Verri, G.; Cupitt, J. Multispectral Imaging in Reflectance and Photo-Induced Luminescence Modes: A User Manual; Academia: San Francisco, CA, USA, 2013.

- Zhang, L.; Zhang, L.; Wang, Y. Shape Optimization of Free-Form Buildings Based on Solar Radiation Gain and Space Efficiency Using a Multi-Objective Genetic Algorithm in the Severe Cold Zones of China. Sol. Energy 2016, 132, 38–50.

- Girolami, A.; Napolitano, F.; Faraone, D.; Braghieri, A. Measurement of Meat Color Using a Computer Vision System. Meat Sci. 2013, 93, 111–118.

- Mathai, A.; Guo, N.; Liu, D.; Wang, X. 3D Transparent Object Detection and Reconstruction Based on Passive Mode Single-Pixel Imaging. Sensors 2020, 20, 4211.

- Turner, J.; Igoe, D.; Parisi, A.V.; McGonigle, A.J.; Amar, A.; Wainwright, L. A Review on the Ability of Smartphones to Detect Ultraviolet (UV) Radiation and Their Potential to Be Used in UV Research and for Public Education Purposes. Sci. Total Environ. 2020, 706, 135873.

- De Oliveira, H.J.S.; de Almeida, P.L.; Sampaio, B.A.; Fernandes, J.P.A.; Pessoa-Neto, O.D.; de Lima, E.A.; de Almeida, L.F. A Handheld Smartphone-Controlled Spectrophotometer Based on Hue to Wavelength Conversion for Molecular Absorption and Emission Measurements. Sens. Actuators B Chem. 2017, 238, 1084–1091.

- Azzazy, H.M.E.; Elbehery, A.H.A. Clinical Laboratory Data: Acquire, Analyze, Communicate, Liberate. Clin. Chim. Acta 2014, 438, 186–194.

- Hiscocks, P.D. Measuring Camera Shutter Speed; Toronto Centre: Toronto, ON, Canada, 2010.

- Chapman, G.H.; Thomas, R.; Thomas, R.; Coelho, K.J.; Meneses, S.; Yang, T.Q.; Koren, I.; Koren, Z. Increases in Hot Pixel Development Rates for Small Digital Pixel Sizes. Electron. Imaging 2016, art00013.

- Zhang, L.; Li, J.; Lin, L.; Du, Y.; Jin, Y. The Key Technology and Research Progress of CMOS Image Sensor. In Proceedings of the 2008 International Conference on Optical Instruments and Technology: Advanced Sensor Technologies and Applications, Beijing, China, 16–19 November 2008; Volume 7157, p. 71571B.

- Nehir, M.; Frank, C.; Aßmann, S.; Achterberg, E.P. Improving Optical Measurements: Non-Linearity Compensation of Compact Charge-Coupled Device (CCD) Spectrometers. Sensors 2019, 19, 2833.

- Jurkovic, J.; Korosec, M.; Kopac, J. New Approach in Tool Wear Measuring Technique Using CCD Vision System. Int. J. Mach. Tools Manuf. 2005, 45, 1023–1030.

- Grimes, D.R. Ultraviolet Radiation Therapy and UVR Dose Models. Med. Phys. 2015, 42, 440–455.

- Alala, B.; Mwangi, W.; Okeyo, G. Image Representation Using RGB Color Space. Int. J. Innov. Res. Dev. 2014, 3.

- Igoe, D.; Parisi, A.V. Broadband Direct UVA Irradiance Measurement for Clear Skies Evaluated Using a Smartphone. Radiat. Prot. Dosim. 2015, 167, 485–489.

- Parisi, A.V.; Turnbull, D.J.; Kimlin, M.G. Dosimetric and Spectroradiometric Investigations of Glass-Filtered Solar UV. Photochem. Photobiol. 2007, 83, 777–781.

- Wilkes, T.C.; McGonigle, A.J.S.; Pering, T.D.; Taggart, A.J.; White, B.S.; Bryant, R.G.; Willmott, J.R. Ultraviolet Imaging with Low Cost Smartphone Sensors: Development and Application of a Raspberry Pi-Based UV Camera. Sensors 2016, 16, 1649.

- Tetley, C. The Photography of Bruises. J. Vis. Commun. Med. 2005, 28, 72–77.

- Salman, J.; Gangishetty, M.K.; Rubio-Perez, B.E.; Feng, D.; Yu, Z.; Yang, Z.; Wan, C.; Frising, M.; Shahsafi, A.; Congreve, D.N.; et al. Passive Frequency Conversion of Ultraviolet Images into the Visible Using Perovskite Nanocrystals. J. Opt. 2021, 23, 054001.

- Igoe, D.P.; Amar, A.; Parisi, A.V.; Turner, J. Characterisation of a Smartphone Image Sensor Response to Direct Solar 305 nm Irradiation at High Air Masses. Sci. Total Environ. 2017, 587–588, 407–413.

- Garcia, J.E.; Dyer, A.G.; Greentree, A.D.; Spring, G.; Wilksch, P.A. Linearisation of RGB Camera Responses for Quantitative Image Analysis of Visible and UV Photography: A Comparison of Two Techniques. PLoS ONE 2013, 8, e79534.

- Turner, J.; Igoe, D.P.; Parisi, A.V.; Downs, N.J.; Amar, A. Beyond the Current Smartphone Application: Using Smartphone Hardware to Measure UV Radiation. In Proceedings of the UV Radiation: Effects on Human Health and the Environment, Wellington, New Zealand, 4–6 April 2018.

- Pratt, H.; Hassanin, K.; Troughton, L.D.; Czanner, G.; Zheng, Y.; McCormick, A.G.; Hamill, K.J. UV Imaging Reveals Facial Areas That Are Prone to Skin Cancer Are Disproportionately Missed during Sunscreen Application. PLoS ONE 2017, 12, e0185297.

- Tamburello, G.; Aiuppa, A.; Kantzas, E.P.; McGonigle, A.J.S.; Ripepe, M. Passive vs. Active Degassing Modes at an Open-Vent Volcano (Stromboli, Italy). Earth Planet Sci. Lett. 2012, 359–360, 106–116.

- Gibbons, F.X.; Gerrard, M.; Lane, D.J.; Mahler, H.I.M.; Kulik, J.A. Using UV Photography to Reduce Use of Tanning Booths: A Test of Cognitive Mediation. Health Psychol. 2005, 24, 358–363.

- Wilkes, T.C.; Pering, T.D.; McGonigle, A.J.S. Semantic Segmentation of Explosive Volcanic Plumes through Deep Learning. Comput. Geosci. 2022, 168, 105216.

- Salunkhe, A.A.; Gobinath, R.; Vinay, S.; Joseph, L. Progress and Trends in Image Processing Applications in Civil Engineering: Opportunities and Challenges. Adv. Civ. Eng. 2022, 2022, 6400254.

- Sai, V.; Kothala, K. Use of Image Analysis as a Tool for Evaluating Various Construction Materials. Doctoral dissertation, Clemson University, Clemson, SC, USA, 2018.

- Masad, E.; Sivakumar, K. Advances in the Characterization and Modeling of Civil Engineering Materials Using Imaging Techniques. J. Comput. Civ. Eng. 2004, 18, 1.

- Zhang, B.; Huang, W.; Li, J.; Zhao, C.; Fan, S.; Wu, J.; Liu, C. Principles, Developments and Applications of Computer Vision for External Quality Inspection of Fruits and Vegetables: A Review. Food Res. Int. 2014, 62, 326–343.

- Burger, W.; Burge, M.J. Digital Image Processing; Springer: Berlin/Heidelberg, Germany, 2022.

- Lukinac, J.; Jukić, M.; Mastanjević, K.; Lučan, M. Application of Computer Vision and Image Analysis Method in Cheese-Quality Evaluation: A Review. Ukr. Food J. 2018, 7, 192–214.

- Kheradmand, A.; Milanfar, P. Non-Linear Structure-Aware Image Sharpening with Difference of Smoothing Operators. Front. ICT 2015, 2, 22.

- Polesel, A.; Ramponi, G.; Mathews, V.J. Image Enhancement via Adaptive Unsharp Masking. IEEE Trans. Image Process. 2000, 9, 505–510.

- Atherton, T.J.; Kerbyson, D.J. Size Invariant Circle Detection. Image Vis. Comput. 1999, 17, 795–803.

- Nakagomi, K.; Shimizu, A.; Kobatake, H.; Yakami, M.; Fujimoto, K.; Togashi, K. Multi-Shape Graph Cuts with Neighbor Prior Constraints and Its Application to Lung Segmentation from a Chest CT Volume. Med. Image Anal. 2013, 17, 62–77.

- Bai, J.; Feng, X.C. Fractional-Order Anisotropic Diffusion for Image Denoising. IEEE Trans. Image Process. 2007, 16, 2492–2502.

- Sonka, M.; Hlavac, V.; Boyle, R. Image Processing, Analysis, and Machine Vision; Cengage Learning: Boston, MA, USA, 2008; p. 829.

- Crognale, M.; De Iuliis, M.; Rinaldi, C.; Gattulli, V. Damage Detection with Image Processing: A Comparative Study. Earthq. Eng. Eng. Vib. 2023, 22, 333–345.

- Gijsenij, A.; Lu, R.; Gevers, T. Color Constancy for Multiple Light Sources. IEEE Trans. Image Process. 2012, 21, 697–707.

- Zhang, Z.; Wang, Y.; Wang, K. Fault Diagnosis and Prognosis Using Wavelet Packet Decomposition, Fourier Transform and Artificial Neural Network. J. Intell. Manuf. 2013, 24, 1213–1227.

- Luo, C.; Hao, Y.; Tong, Z. Research on Digital Image Processing Technology and Its Application. In Proceedings of the 2018 8th International Conference on Management, Education and Information (MEICI 2018), Shenyang, China, 21–23 September 2018; pp. 587–592.

- Chang, L.-M.; Abdelrazig, Y.; Chen, P.-H. Optical Imaging Method for Bridge Painting Maintenance and Inspection; U.S. Department of Transportation: Washington, DC, USA, 2000.

- Kamboj, A.; Grewal, K.; Mittal, R. Color Edge Detection in RGB Color Space Using Automatic Threshold Detection. Int. J. Innov. Technol. Explor. Eng. (IJITEE) 2012, 1.

- Eftekhar, P. Comparative Study of Edge Detection Algorithm. Doctoral dissertation, California State University, Northridge, Los Angeles, CA, USA, 2020.

- Ai, J.; Zhu, X. Analysis and Detection of Ceramic-Glass Surface Defects Based on Computer Vision. In Proceedings of the World Congress on Intelligent Control and Automation (WCICA), Shanghai, China, 10–14 June 2002; Volume 4, pp. 3014–3018.

- Agrawal, S. Glass Defect Detection Techniques Using Digital Image Processing—A Review. Spec. Issues IP Multimed. Commun. 2011, 1, 65–67.

- Adamo, F.; Attivissimo, F.; Di Nisio, A.; Savino, M. A Low-Cost Inspection System for Online Defects Assessment in Satin Glass. Measurement 2009, 42, 1304–1311.

- Awang, N.; Fauadi, M.H.F.M.; Rosli, N.S. Image Processing of Product Surface Defect Using Scilab. Appl. Mech. Mater. 2015, 789–790, 1223–1226.

- Rosli, N.S.; Fauadi, M.H.F.M.; Awang, N. Some Technique for an Image of Defect in Inspection Process Based on Image Processing. J. Image Graph. 2016, 4, 55–58.

- Kmec, J.; Pavlíček, P.; Šmíd, P. Optical Noncontact Method to Detect Amplitude Defects of Polymeric Objects. Polym. Test. 2022, 116, 107802.

- Bandyopadhyay, Y. Glass Defect Detection and Sorting Using Computational Image Processing. Int. J. Emerg. Technol. Innov. Res. 2015, 2, 73–75.

- Zhu, Y.; Huang, C. An Improved Median Filtering Algorithm for Image Noise Reduction. Phys. Procedia 2012, 25, 609–616.

- Shanmugavadivu, P.; Eliahim Jeevaraj, P.S. Laplace Equation Based Adaptive Median Filter for Highly Corrupted Images. In Proceedings of the 2012 International Conference on Computer Communication and Informatics, Coimbatore, India, 10–12 January 2012.

- George, J. Automatic Inspection of Potential Flaws in Glass Based on Image Segmentation. IOSR J. Eng. 2013, 3, 20–24.

- Baer, R.L. A Model for Dark Current Characterization and Simulation. In Proceedings of the Sensors, Cameras, and Systems for Scientific/Industrial Applications VII, San Jose, CA, USA, 15–19 January 2006; Volume 6068, pp. 37–48.

- Pereira, E. dos S. Determining the Fixed Pattern Noise of a CMOS Sensor: Improving the Sensibility of Autonomous Star Trackers. J. Aerosp. Technol. Manag. 2013, 5, 217–222.

- Chapman, G.H.; Thomas, R.; Koren, I.; Koren, Z. Hot Pixel Behavior as Pixel Size Reduces to 1 Micron. In Proceedings of the IS&T International Symposium on Electronic Imaging Science and Technology, Burlingame, CA, USA, 29 January–2 February 2017; pp. 39–45.

- Guo, Z.Y.; Le, Z. Improved Adaptive Median Filter. In Proceedings of the 2014 10th International Conference on Computational Intelligence and Security, Kunming, China, 15–16 November 2014; pp. 44–46.

- Patidar, P.; Srivastava, S. Image De-Noising by Various Filters for Different Noise. Int. J. Comput. Appl. 2010, 9.

- Gomez-Perez, S.L.; Haus, J.M.; Sheean, P.; Patel, B.; Mar, W.; Chaudhry, V.; McKeever, L.; Braunschweig, C. Measuring Abdominal Circumference and Skeletal Muscle from a Single Cross-Sectional Computed Tomography Image: A Step-by-Step Guide for Clinicians Using National Institutes of Health ImageJ. J. Parenter. Enter. Nutr. 2016, 40, 308–318.

- Limpert, E.; Stahel, W.A.; Abbt, M. Log-Normal Distributions across the Sciences: Keys and Clues. Bioscience 2001, 51, 341–352.

- Andersson, A. Mechanisms for Log Normal Concentration Distributions in the Environment. Sci. Rep. 2021, 11, 16418.

- Agam, G. Introduction to Programming with OpenCV. 2006. Available online: https://www.cs.cornell.edu/courses/cs4670/2010fa/projects/Introduction%20to%20Programming%20With%20OpenCV.pdf (accessed on 13 July 2023).

- Oliphant, T.E. Python for Scientific Computing. Comput. Sci. Eng. 2007, 9, 10–20.

- Abraham, A.; Pedregosa, F.; Eickenberg, M.; Gervais, P.; Mueller, A.; Kossaifi, J.; Gramfort, A.; Thirion, B.; Varoquaux, G. Machine Learning for Neuroimaging with Scikit-Learn. Front. Neuroinform. 2014, 8, 14.

- Géron, A. Hands-on Machine Learning with Scikit-Learn, Keras, and TensorFlow; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2017; p. 510.

- ATOMS: Image Processing and Computer Vision Toolbox Details. Available online: https://atoms.scilab.org/toolboxes/IPCV (accessed on 15 June 2023).

- Image Processing & Computer Vision | Scilab. Available online: https://www.scilab.org/software/atoms/image-processing-computer-vision (accessed on 15 June 2023).

- Wu, D.; Sun, D.W. Colour Measurements by Computer Vision for Food Quality Control—A Review. Trends Food Sci. Technol. 2013, 29, 5–20.

- Cossio, M.; Cossio, M. The New Landscape of Diagnostic Imaging with the Incorporation of Computer Vision; IntechOpen: London, UK, 2023.

- Mendoza, F.; Aguilera, J.M. Application of Image Analysis for Classification of Ripening Bananas. J. Food Sci. 2004, 69, E471–E477.

- Brosnan, T.; Sun, D.W. Improving Quality Inspection of Food Products by Computer Vision—A Review. J. Food Eng. 2004, 61, 3–16.

- Huo, Y.; Deng, R.; Liu, Q.; Fogo, A.B.; Yang, H. AI Applications in Renal Pathology. Kidney Int. 2021, 99, 1309–1320.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016; ISBN 9780262035613.

- Ramachandran, S.S.; George, J.; Skaria, S.; Varun, V.V. Using YOLO Based Deep Learning Network for Real Time Detection and Localization of Lung Nodules from Low Dose CT Scans. SPIE 2018, 10575, 105751I.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Computer Vision–ECCV 2016: Proceedings of the 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2016; pp. 21–37.

- Dikbayir, H.S.; Ibrahim Bulbul, H. Deep Learning Based Vehicle Detection from Aerial Images. In Proceedings of the 2020 19th IEEE International Conference on Machine Learning and Applications (ICMLA), Miami, FL, USA, 14–17 December 2020; pp. 956–960.

- Chen, C.; Lu, J.; Zhou, M.; Yi, J.; Liao, M.; Gao, Z. A YOLOv3-Based Computer Vision System for Identification of Tea Buds and the Picking Point. Comput. Electron. Agric. 2022, 198, 107116.

- Tian, D.; Han, Y.; Wang, B.; Guan, T.; Gu, H.; Wei, W. Review of Object Instance Segmentation Based on Deep Learning. J. Electron. Imaging 2021, 31, 041205.

- Hafiz, A.M.; Bhat, G.M. A Survey on Instance Segmentation: State of the Art. Int. J. Multimed. Inf. Retr. 2020, 9, 171–189.

- Xu, Y.; Nagahara, H.; Shimada, A.; Taniguchi, R.-I. TransCut: Transparent Object Segmentation from a Light-Field Image. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 13–16 December 2015.

- Mollazade, K.; Omid, M.; Tab, F.A.; Mohtasebi, S.S. Principles and Applications of Light Backscattering Imaging in Quality Evaluation of Agro-Food Products: A Review. Food Bioprocess Technol. 2012, 5, 1465–1485.

- Hornberg, A. Handbook of Machine Vision; Wiley: Hoboken, NJ, USA, 2007; pp. 1–798.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8759–8768.

- Zhu, J.; Wu, A.; Wang, X.; Zhang, H. Identification of Grape Diseases Using Image Analysis and BP Neural Networks. Multimed. Tools Appl. 2020, 79, 14539–14551.

- Sakshi; Kukreja, V. Image Segmentation Techniques: Statistical, Comprehensive, Semi-Automated Analysis and an Application Perspective Analysis of Mathematical Expressions. Arch. Comput. Methods Eng. 2022, 30, 457–495.

- Qing, Z.; Ji, B.; Zude, M. Predicting Soluble Solid Content and Firmness in Apple Fruit by Means of Laser Light Backscattering Image Analysis. J. Food Eng. 2007, 82, 58–67.

- Venkata Ravi Kumar, D.; Naga Satish, G.; Raghavendran, C.V. A Literature Study of Image Segmentation Techniques for Images. Int. J. Eng. Res. Technol. 2018, 4, 1–3.

- Dhingra, G.; Kumar, V.; Joshi, H.D. Study of Digital Image Processing Techniques for Leaf Disease Detection and Classification. Multimed. Tools Appl. 2018, 77, 19951–20000.

- Sarma, R.; Gupta, Y.K. A Comparative Study of New and Existing Segmentation Techniques. IOP Conf. Ser. Mater. Sci. Eng. 2021, 1022, 012027.

- Song, Y.; Yan, H. Image Segmentation Techniques Overview. In Proceedings of the AMS 2017—Asia Modelling Symposium 2017 and 11th International Conference on Mathematical Modelling and Computer Simulation, Kota Kinabalu, Malaysia, 4–6 December 2018; pp. 103–107.

- Mohammad, N.; Yusof, M.Y.P.M.; Ahmad, R.; Muad, A.M. Region-Based Segmentation and Classification of Mandibular First Molar Tooth Based on Demirjian’s Method. J. Phys. Conf. Ser. 2020, 1502, 012046.

- Fan, Y.Y.; Li, W.J.; Wang, F. A Survey on Solar Image Segmentation Techniques. Adv. Mater. Res. 2014, 945–949, 1899–1902.

- Stawiaski, J.; Decencière, E.; Bidault, F. Interactive Liver Tumor Segmentation Using Graph-Cuts and Watershed; Springer: Berlin/Heidelberg, Germany, 2008.

- Kapoor, L.; Thakur, S. A Survey on Brain Tumor Detection Using Image Processing Techniques. In Proceedings of the 2017 7th International Conference Confluence on Cloud Computing, Data Science and Engineering, Noida, India, 12–13 January 2017; pp. 582–585.

- Wei, Y.; Zhang, K.; Ji, S. Simultaneous Road Surface and Centerline Extraction from Large-Scale Remote Sensing Images Using CNN-Based Segmentation and Tracing. IEEE Trans. Geosci. Remote Sens. 2020, 58, 8919–8931.

- Perng, D.B.; Liu, H.W.; Chang, C.C. Automated SMD LED Inspection Using Machine Vision. Int. J. Adv. Manuf. Technol. 2011, 57, 1065–1077.

- Li, D.; Liang, L.Q.; Zhang, W.J. Defect Inspection and Extraction of the Mobile Phone Cover Glass Based on the Principal Components Analysis. Int. J. Adv. Manuf. Technol. 2014, 73, 1605–1614.

- Gayeski, N.; Stokes, E.; Andersen, M. Using Digital Cameras as Quasi-Spectral Radiometers to Study Complex Fenestration Systems. Light. Res. Technol. 2009, 41, 7–23.

- Asada, N.; Amano, A.; Baba, M. Photometric Calibration of Zoom Lens Systems. In Proceedings of the 13th International Conference on Pattern Recognition, Vienna, Austria, 25–29 August 1996; Volume 1, pp. 186–190.

- Holst, G. CCD Arrays, Cameras, and Displays, 2nd ed.; JCD Publishing: Winter Park, FL, USA, 1998.

- Debevec, P.E.; Malik, J. Recovering High Dynamic Range Radiance Maps from Photographs; Association for Computing Machinery: New York, NY, USA, 1997.

- Porter, J.N.; Miller, M.; Pietras, C.; Motell, C. Ship-Based Sun Photometer Measurements Using Microtops Sun Photometers. J. Atmos. Ocean. Technol. 2001, 18, 765–774.

- Cabral, J.D.D.; de Araújo, S.A. An Intelligent Vision System for Detecting Defects in Glass Products for Packaging and Domestic Use. Int. J. Adv. Manuf. Technol. 2015, 77, 485–494.

| Camera Type | Sensitivity | Lens | Saved Format | External Source | UV | Processing Software | Analysis File Format | Domain | Author |
|---|---|---|---|---|---|---|---|---|---|
| Nikon Coolpix 5400 | - | Fisheye lens | Radiance RGBE and LogLuv TIFF | Natural sunlight, warm yellow light, and MH and HPS lamps | N | Photosphere | Radiance RGBE | HDR imaging | [10] |
| Canon EOS 6D | ISO 6400 | Rayfact 105 mm f/4.5 UV lens and Micro Nikkor 105 mm f/4 | RAW and JPEG | Y (xenon lamp) | Y | RawDigger x64 and Excel 2010 | RAW | - | [30] |
| Ultraviolet (UV) 1-CCD camera (Sony XC-EU50) | - | Pentax B2528-UV | RAW | N and National PRF-500WB | Y | Pixel-segmented algorithms | RAW | UV imaging-based machine vision system | [34] |
| Canon PowerShot A80 | - | - | RAW | N and National PRF-500WB | N | Pixel-segmented algorithms | RAW | Imaging-based machine vision system | [34] |
| Canon EOS Rebel T2i DSLR | - | - | - | 1000 W tungsten halogen lamp | N | MATLAB R2014a (MathWorks, Natick, MA, USA) | - | Agricultural | [35] |
| Nikon D100 DSLR | - | F Micro-Nikkor 60 mm f/2.8D lens | - | Two tungsten lamps | N | Customized MATLAB® software | - | Granite-forming minerals | [36] |
| Canon PowerShot G3 (Canon, Japan) | - | - | TIFF | Four fluorescent lamps (Philips, Natural Daylight) | N | Canon Remote Capture software (version 2.7.0) | TIFF | Agricultural | [37] |
| MS3100 (Duncan Technologies, Inc., CA, USA) | - | 7 mm focal length lens, f/3.5 | - | Halogen lamp | - | MATLAB toolbox (MathWorks Inc., MA, USA) | - | Agricultural | [38] |
| Canon EOS 450D | ISO 100 | EF-S 18–55 mm f/3.5–5.6 IS | RAW | Four fluorescent lamps (Philips Master Graphica TLD 965) | N | Adobe Photoshop CS3 (image analysis) | RAW | Agricultural | [41] |
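
The table lists Radiance RGBE as the saved and analysis format for the HDR imaging study [10]. In that format each pixel occupies four bytes: three 8-bit mantissas for red, green, and blue plus one shared exponent byte. The Python sketch below illustrates the per-pixel conversion, assuming the shared-exponent convention of Greg Ward's reference RGBE code; the function names are hypothetical, and it handles single pixels only, not the file header or the run-length-encoded scanlines of a complete .hdr file.

```python
import math


def rgbe_to_float(r: int, g: int, b: int, e: int) -> tuple[float, float, float]:
    """Decode one RGBE pixel (four bytes) into linear RGB floats.

    The exponent byte e is offset by 128, and the 8-bit mantissas are
    scaled by 2 ** (e - 128 - 8).
    """
    if e == 0:
        # An exponent byte of 0 is reserved for black (zero radiance).
        return (0.0, 0.0, 0.0)
    scale = math.ldexp(1.0, e - (128 + 8))  # equals 2 ** (e - 136)
    return (r * scale, g * scale, b * scale)


def float_to_rgbe(r: float, g: float, b: float) -> tuple[int, int, int, int]:
    """Encode linear RGB floats as RGBE bytes (inverse of rgbe_to_float)."""
    v = max(r, g, b)
    if v < 1e-32:
        return (0, 0, 0, 0)
    # frexp gives v = mantissa * 2 ** exponent with 0.5 <= mantissa < 1.
    mantissa, exponent = math.frexp(v)
    scale = mantissa * 256.0 / v  # maps the brightest channel into [128, 256)
    return (int(r * scale), int(g * scale), int(b * scale), exponent + 128)


# Round trip for a pixel whose channel values are exact powers of two.
print(float_to_rgbe(1.0, 0.5, 0.25))
print(rgbe_to_float(*float_to_rgbe(1.0, 0.5, 0.25)))
```

Because the three channels share one exponent, the dimmer channels lose precision when one channel is much brighter than the others. Some decoders also add 0.5 to each mantissa before scaling, so decoded values can differ slightly between implementations.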

| Device | Special Band-Pass Filter | Band-Pass Filter Type | Camera Settings | Application | Reference | Wavelength | Price ($) |
|---|---|---|---|---|---|---|---|
| Sony Xperia Z1 | Y | UG11 broadband transmission filter with KG05 infrared-blocking filter | 5248 × 3936 pixels (20.7 MP); exposure time 0.125 s | Determined how thick optical materials affect the camera’s ability to measure and monitor UVA light where direct illumination is blocked | [2] | - | 530 |
| LG L3 smartphone | Y | CVI Melles Griot | - | Evaluated direct-sun clear-sky irradiances from narrowband direct-sun smartphone images | [20] | 340 and 380 nm | 123.58 |
| Samsung Galaxy S II (camera model GT-I9100), iPhone 5, and Nokia Lumia 800 | N | - | Samsung: f/2.6, exposure time 1/17 s; iPhone: f/2.4, exposure time 1/15 or 1/16 s; Nokia: f/2.2, exposure time 1/14 s | Described how smartphone cameras respond to ultraviolet B radiation and showed that they can sense it without extra equipment | [22] | 280–320 nm | - |
| Canon EOS 6D | Y | LaLaU UV-pass filter | Rayfact 105 mm f/4.5 UV lens | Imaged a vase under UV light | [30] | 320–400 nm | - |
| Samsung Galaxy 5 | Y | CVI Melles Griot | - | Characterized the ultraviolet A (UVA; 320–400 nm) response of a consumer complementary metal oxide semiconductor (CMOS) smartphone image sensor in a controlled laboratory environment | [21] | 340 and 380 nm | 70 |
| Sony Xperia Z1 | Y | Solar Light Inc. | 7.487 mm lens, 21 MP | Characterized photobiologically important direct UVB solar irradiances at 305 nm under clear skies at high air masses | [58] | 305 nm | 530 |
| Canon EOS Rebel XTi (400D) DSLR | Y | Lifepixel | f/2.8, ISO 1800, shutter speed 1.2 s | Determined whether skin cancer-prone facial regions are ineffectively covered | [61] | - | 899 |
| Alta U260 cameras | Y | Asahi Spectra XBPA310 and XBPA330 (10 nm FWHM) | f25 mm; 16-bit, 512 × 512 pixels | Imaged the sulphur dioxide flux distribution of the fumarolic field of La Fossa crater | [62] | - | 5800 |
| Polaroid CU5, Faraghan Medical Camera Systems | Y | N/A | 35 mm single-lens | Used UV photography to highlight facial skin damage caused by prior UV exposure | [63] | - | 39 |
| Raspberry Pi camera module | Y | UV-transmissive AR-coated plano-convex lens | F9–12 mm; 10-bit images at an initial resolution of 1392 × 1040 | Measured volcanic sulphur dioxide emissions using low-cost UV cameras | [64] | 320 and 330 nm | 500 |
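
The camera settings reported for the smartphones in [22] combine different apertures and exposure times (f/2.6 at 1/17 s, f/2.4 at 1/15 or 1/16 s, and f/2.2 at 1/14 s). One rough way to put them on a common scale is the exposure value, EV = log2(N²/t), where N is the f-number and t the exposure time in seconds; the light reaching each unit of sensor area is proportional to t/N². The Python sketch below is illustrative only. Its function names are hypothetical, it ignores ISO gain, pixel size, and the sensor's spectral (UV) response, and it takes 1/15 s for the iPhone, one of the two reported times.

```python
import math


def exposure_value(f_number: float, exposure_time_s: float) -> float:
    """Exposure value EV = log2(N^2 / t); each +1 EV halves the light admitted."""
    return math.log2(f_number ** 2 / exposure_time_s)


def relative_exposure(f1: float, t1: float, f2: float, t2: float) -> float:
    """Sensor-plane exposure of setting 1 relative to setting 2 (proportional to t / N^2)."""
    return (t1 / f1 ** 2) / (t2 / f2 ** 2)


# Aperture and exposure time reported for the three smartphones in [22].
settings = {
    "Samsung Galaxy S II": (2.6, 1 / 17),
    "iPhone 5": (2.4, 1 / 15),  # 1/16 s was also reported
    "Nokia Lumia 800": (2.2, 1 / 14),
}

for device, (n, t) in settings.items():
    print(f"{device}: EV = {exposure_value(n, t):.2f}")

# How much more light per unit sensor area the Nokia setting admits than the Samsung setting.
ratio = relative_exposure(*settings["Nokia Lumia 800"], *settings["Samsung Galaxy S II"])
print(f"Nokia / Samsung exposure ratio: {ratio:.2f}")
```

The three settings fall within about 0.8 EV of one another (roughly 6.84, 6.43, and 6.08), so the Nokia setting admits about 1.7 times as much light per unit sensor area as the Samsung setting. Differences in UV sensitivity between the sensors are not captured by this comparison.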

| Wavelengths | Camera Instrument | Irradiance Transformation Function | Illumination | Illuminance Transformation Function | Scene Radiation Function | Author |
|---|---|---|---|---|---|---|
| - | Nikon camera | - | Sun | $L = k\,(0.2127R + 0.7151G + 0.0721B)$ (cd/m²) | - | [10] |
| - | Smartphone | - | Sun | - | - | [28] |
| 305, 312, and 320 nm | Smartphone | - | Sun | - | - | [60] |
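
The luminance transformation listed for the Nikon camera in [10] weights the linear R, G, and B channels of an HDR pixel by 0.2127, 0.7151, and 0.0721 (weights that sum to approximately 1) and multiplies the result by a constant k to obtain luminance in cd/m². The Python sketch below assumes linear, not gamma-encoded, RGB input. As an assumption not stated in the table, it defaults k to 179 lm/W, the luminous efficacy constant used by the Radiance system; in practice k is typically replaced by a calibration factor fitted against spot luminance-meter readings. The function names are hypothetical.

```python
def pixel_luminance(r: float, g: float, b: float, k: float = 179.0) -> float:
    """Luminance in cd/m^2 from linear RGB: L = k * (0.2127 R + 0.7151 G + 0.0721 B).

    The default k = 179 lm/W is an assumption (Radiance's white luminous
    efficacy); replace it with a camera-specific calibration factor.
    """
    return k * (0.2127 * r + 0.7151 * g + 0.0721 * b)


def mean_luminance(pixels: list[tuple[float, float, float]], k: float = 179.0) -> float:
    """Average luminance over a region of interest given as (R, G, B) tuples."""
    if not pixels:
        raise ValueError("region of interest is empty")
    return sum(pixel_luminance(r, g, b, k) for r, g, b in pixels) / len(pixels)


# A neutral grey pixel (R = G = B = v) gives a luminance close to k * v.
print(pixel_luminance(0.5, 0.5, 0.5))
print(mean_luminance([(0.2, 0.3, 0.1), (0.4, 0.5, 0.2)]))
```

Because the green weight dominates, the result tracks the green channel most closely, which mirrors the photopic sensitivity curve the weights are derived from.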

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Onatayo, D.A.; Srinivasan, R.S.; Shah, B. Ultraviolet Radiation Transmission in Building’s Fenestration: Part II, Exploring Digital Imaging, UV Photography, Image Processing, and Computer Vision Techniques. Buildings 2023, 13, 1922. https://doi.org/10.3390/buildings13081922

Onatayo DA, Srinivasan RS, Shah B. Ultraviolet Radiation Transmission in Building’s Fenestration: Part II, Exploring Digital Imaging, UV Photography, Image Processing, and Computer Vision Techniques. Buildings. 2023; 13(8):1922. https://doi.org/10.3390/buildings13081922

Chicago/Turabian Style: Onatayo, Damilola Adeniyi, Ravi Shankar Srinivasan, and Bipin Shah. 2023. "Ultraviolet Radiation Transmission in Building’s Fenestration: Part II, Exploring Digital Imaging, UV Photography, Image Processing, and Computer Vision Techniques" Buildings 13, no. 8: 1922. https://doi.org/10.3390/buildings13081922