Exploring the Impact of Different Registration Methods and Noise Removal on the Registration Quality of Point Cloud Models in the Built Environment: A Case Study on Dickabrma Bridge

Abstract

1. Introduction

2. Related Work

2.1. Registration Method

2.2. Noise and Outlier Removal

2.3. The Importance of High-Quality Point Cloud Models

3. Methodology and Experiment

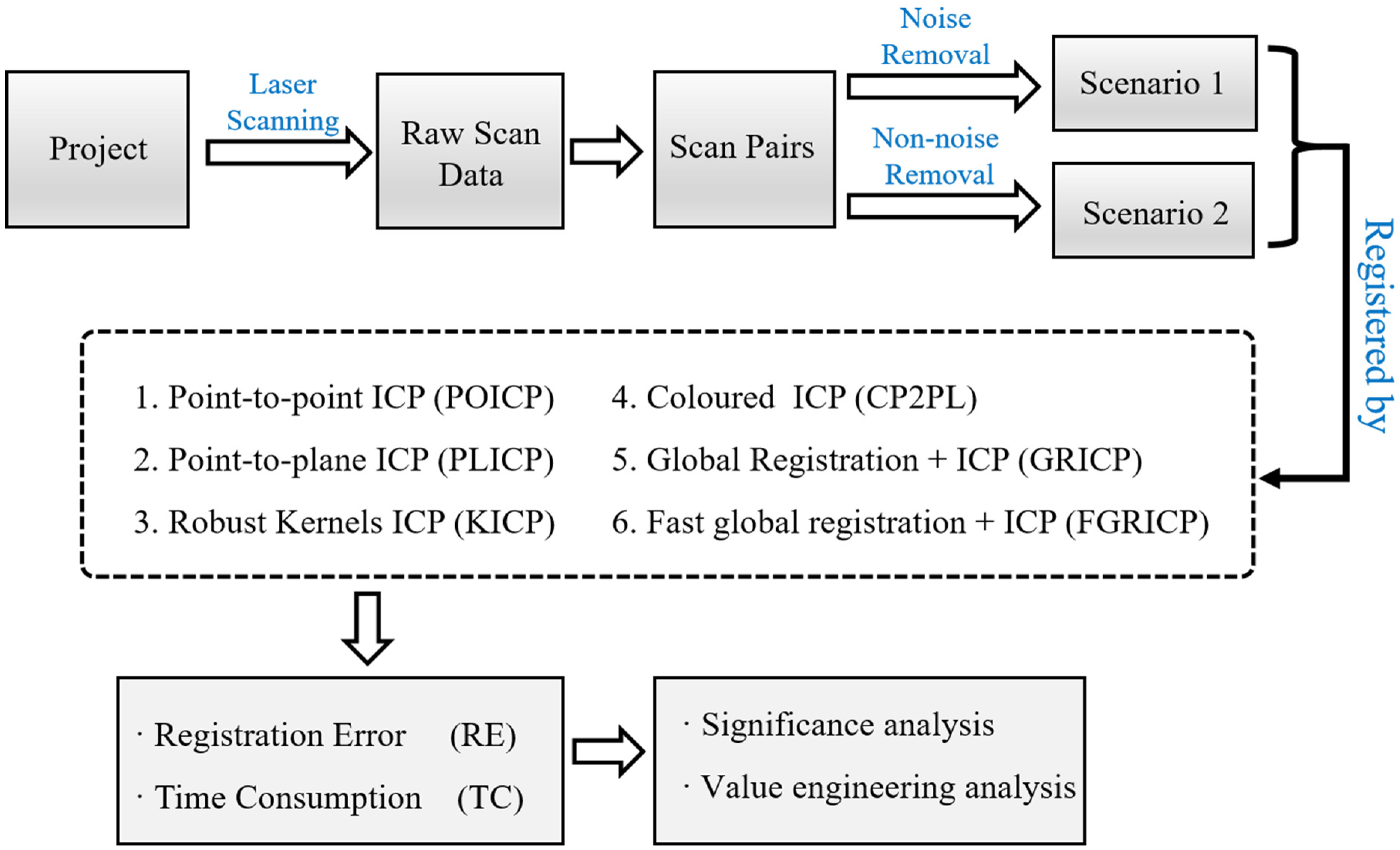

3.1. Framework

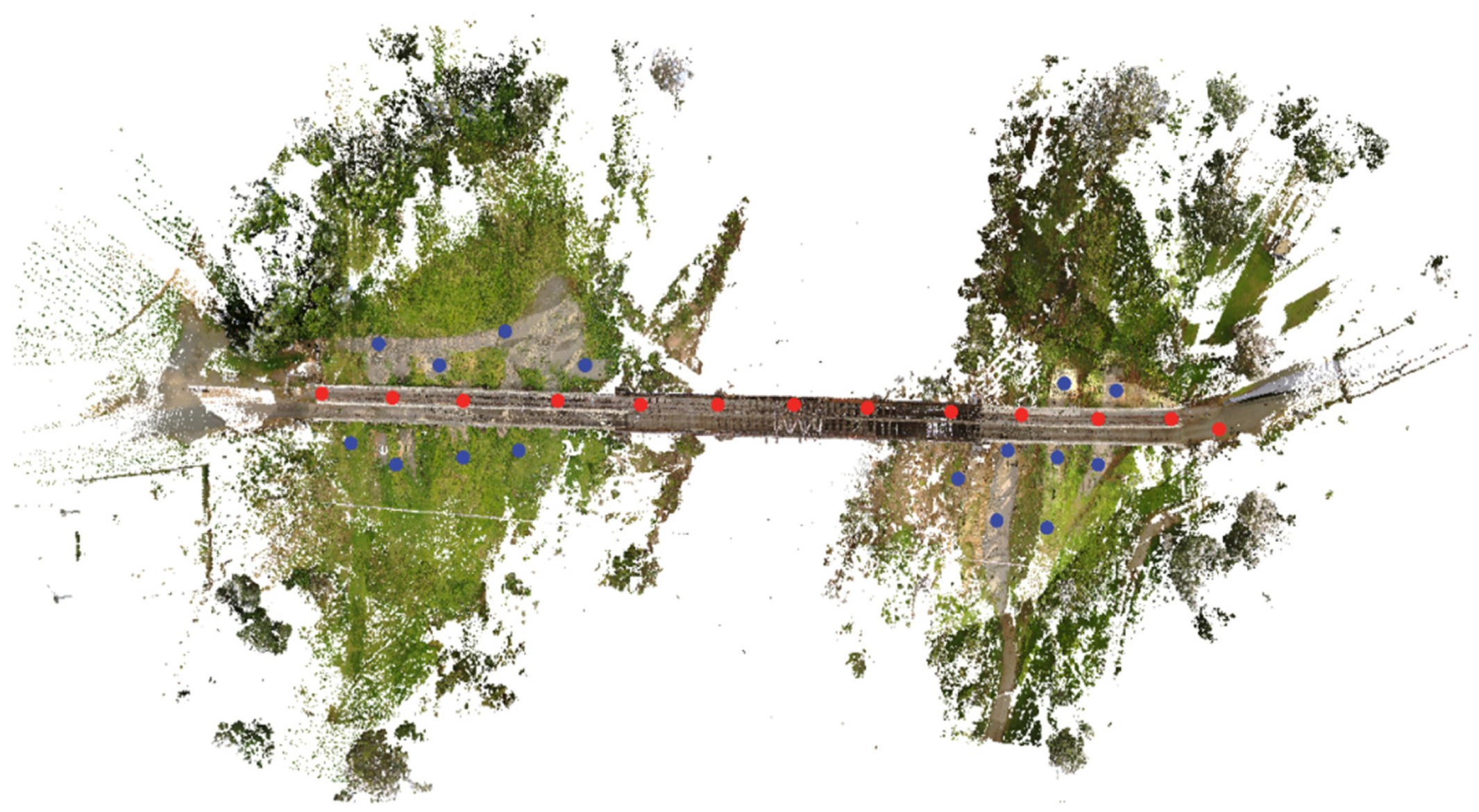

3.2. Project and Equipment

3.3. Point Cloud Data Collection and Noise Removal

3.4. Registration Strategies Used in Experiment

3.4.1. Point-to-Point ICP (POICP)

3.4.2. Point-to-Plane ICP (PLICP)

3.4.3. Colored ICP (CICP)

3.4.4. Robust Kernels ICP (KICP)

3.4.5. Global Registration + ICP (GRICP)

- ‘CorrespondenceCheckerBasedOnDistance’ confirms whether the distances between aligned point clouds are within a specific threshold.

- ‘CorrespondenceCheckerBasedOnEdgeLength’ assesses whether the lengths of two arbitrary edges (lines formed by two points), derived individually from source and target correspondences, exhibit similarity. This check verifies the conditions ||source edge length|| > 0.9 * ||target edge length|| and ||target edge length|| > 0.9 * ||source edge length||.

- ‘CorrespondenceCheckerBasedOnNormal’ evaluates how closely the vertex normals of each correspondence agree. It computes the dot product of the two normal vectors and compares it against a threshold that is specified as an angle in radians.
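The three checks described above can be sketched in plain Python. This is a minimal illustration of the stated conditions, not the library's implementation: the function names, the argument layout, and the use of `cos(angle)` as the dot-product cutoff are assumptions made for this example.

```python
import math


def distance_ok(aligned_src_pt, tgt_pt, max_dist):
    """Distance check: the aligned source point lies within max_dist of its target."""
    return math.dist(aligned_src_pt, tgt_pt) <= max_dist


def edge_length_ok(src_a, src_b, tgt_a, tgt_b, similarity=0.9):
    """Edge-length check: the two edges must be within a 0.9 ratio of each other."""
    src_len = math.dist(src_a, src_b)
    tgt_len = math.dist(tgt_a, tgt_b)
    return src_len > similarity * tgt_len and tgt_len > similarity * src_len


def normal_ok(src_normal, tgt_normal, max_angle_rad):
    """Normal check: the angle between the normals must not exceed max_angle_rad."""
    dot = sum(a * b for a, b in zip(src_normal, tgt_normal))
    norm = math.hypot(*src_normal) * math.hypot(*tgt_normal)
    # Assumption: the angular threshold is converted to a dot-product cutoff.
    return dot / norm >= math.cos(max_angle_rad)
```

A candidate correspondence set would be kept only if every check passes, which prunes implausible alignments before the more expensive validation step.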

3.4.6. Fast Global Registration + ICP (FGRICP)

- Feature Extraction and Matching: Features are first extracted from the two adjacent point clouds that need to be registered, and these features are then matched to identify candidate point pairs. In this experiment, the feature descriptor is FPFH, the same descriptor used for global registration.

- Graph Model Construction: Using the matched point pairs, a graph model is constructed where each edge represents a point pair.

- Line Process Weight Optimization: The next step optimizes the line process weights, which determine the significance of each point pair. This step is central to the Fast Global Registration algorithm and is the reason for its efficient performance. The aim of the optimization is to make the registered model as consistent as possible.

- Iterative Optimization: Iterative optimization continues until the model converges or a predetermined number of iterations is reached.

- Final Registration Result: Finally, the optimized line process weights are used to obtain the final registration result.
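As a rough illustration of the weighting step, the sketch below uses the closed-form weight from the Fast Global Registration formulation of Zhou, Park, and Koltun, l = (μ / (μ + r²))², where r is the residual distance of a matched pair after alignment. The function name, the sample residuals, and the μ schedule are illustrative assumptions, and the pose update that would run between weight updates is omitted.

```python
def line_process_weight(residual, mu):
    """Weight of one matched pair: close to 1 for inliers, close to 0 for outliers."""
    return (mu / (mu + residual ** 2)) ** 2


# Hypothetical residuals (in metres) of four matched pairs under the current pose.
residuals = [0.002, 0.01, 0.15, 1.2]

# Assumed schedule: mu starts large, so all pairs count, and is then reduced,
# so that pairs with large residuals are progressively down-weighted.
for mu in (1.0, 0.1, 0.01):
    weights = [line_process_weight(r, mu) for r in residuals]
    print(mu, [round(w, 3) for w in weights])
```

Because the weights have a closed form, each iteration only alternates between this cheap update and a weighted least-squares pose estimate, with no nearest-neighbour search inside the loop.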

3.5. Registration Error and Time Consumption

Algorithm 1. The pseudocode for the computational procedure of RE and TC

Input: source point cloud Q, target point cloud P, distance threshold d

Output: registration error RE, time consumption TC

- 1: Initialize start time as now()
- 2: For each registration operation in the specific registration algorithm:
  - 3: Calculate transformation matrix T
  - 4: Transform source point cloud Q using T to get Q′
- 5: Set end time as now()
- 6: Calculate TC as the difference between end time and start time
- 7: Initialize kd-tree based on target point cloud P
- 8: Initialize pairs as an empty list
- 9: For each point qi in Q′:
  - 10: Find the closest point pi in P using kd-tree within range d
  - 11: Add the pair (pi, qi) to pairs
- 12: Initialize distances as an empty list
- 13: For each pair (pi, qi) in pairs:
  - 14: Calculate the Euclidean distance between pi and qi
  - 15: Add the distance to distances
- 16: Calculate RE as the median of distances
- 17: Return TC, RE
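A minimal Python sketch of Algorithm 1 follows. It is not the authors' code: it swaps the kd-tree for a brute-force nearest-neighbour search so that it runs with the standard library only, and `register` is a hypothetical callable standing in for any of the registration methods. On clouds of realistic size, a kd-tree would be needed for the search to finish in reasonable time.

```python
import math
import statistics
import time


def registration_error(transformed_source, target, d):
    """Median distance from each transformed source point to its nearest
    target point, keeping only pairs closer than the threshold d."""
    distances = []
    for q in transformed_source:
        # Brute-force stand-in for the kd-tree range search in Algorithm 1.
        nearest = min(math.dist(q, p) for p in target)
        if nearest <= d:
            distances.append(nearest)
    return statistics.median(distances) if distances else float("nan")


def measure_re_tc(source, target, d, register):
    """Return (RE, TC). TC covers only the registration and transformation,
    not the RE calculation that follows."""
    start = time.perf_counter()
    transformed = register(source, target)  # hypothetical: returns Q'
    tc = time.perf_counter() - start
    re = registration_error(transformed, target, d)
    return re, tc
```

Using the median rather than the mean keeps a few badly matched pairs from dominating RE, and the threshold d prevents points outside the overlap region from being paired at all.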

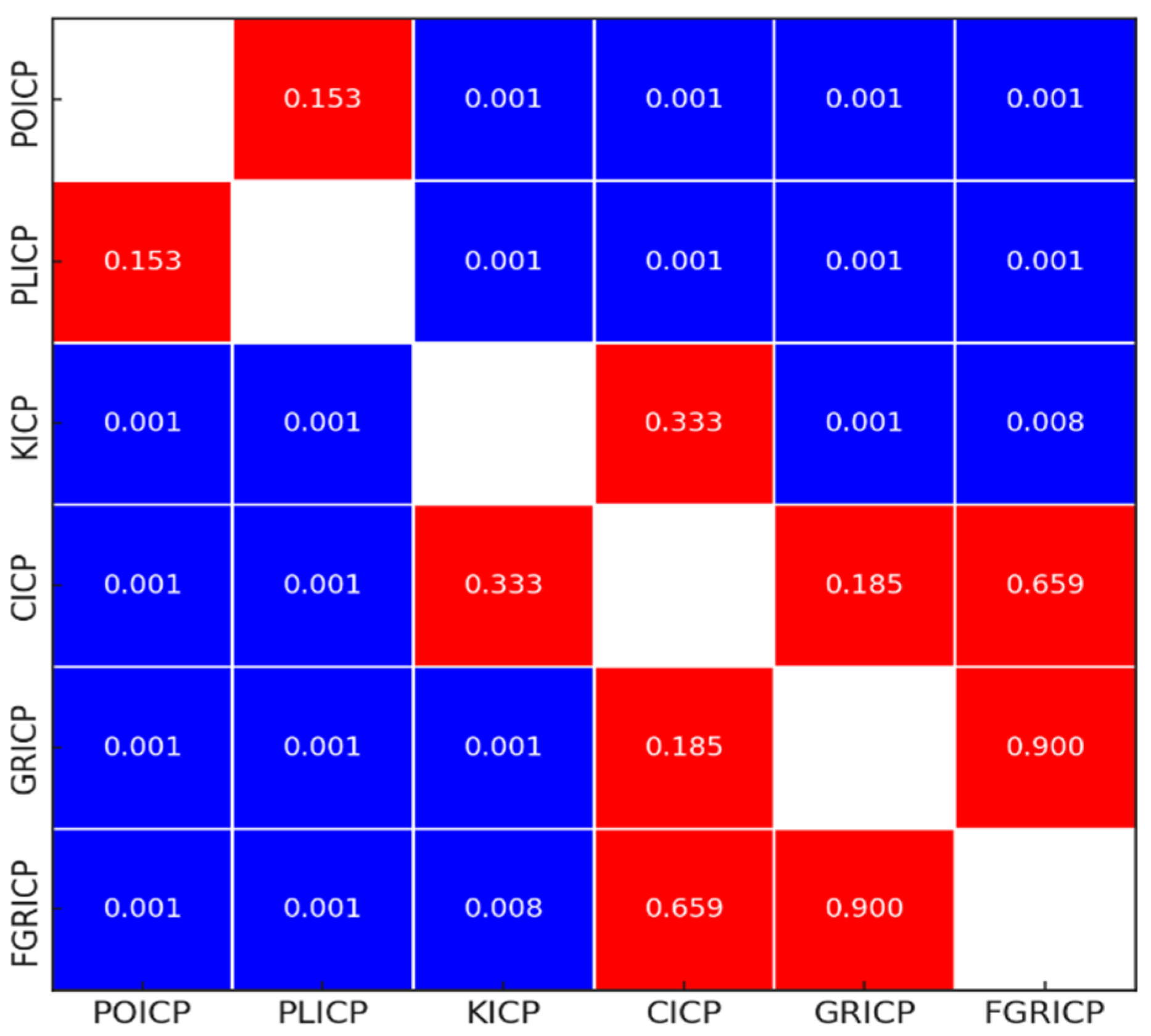

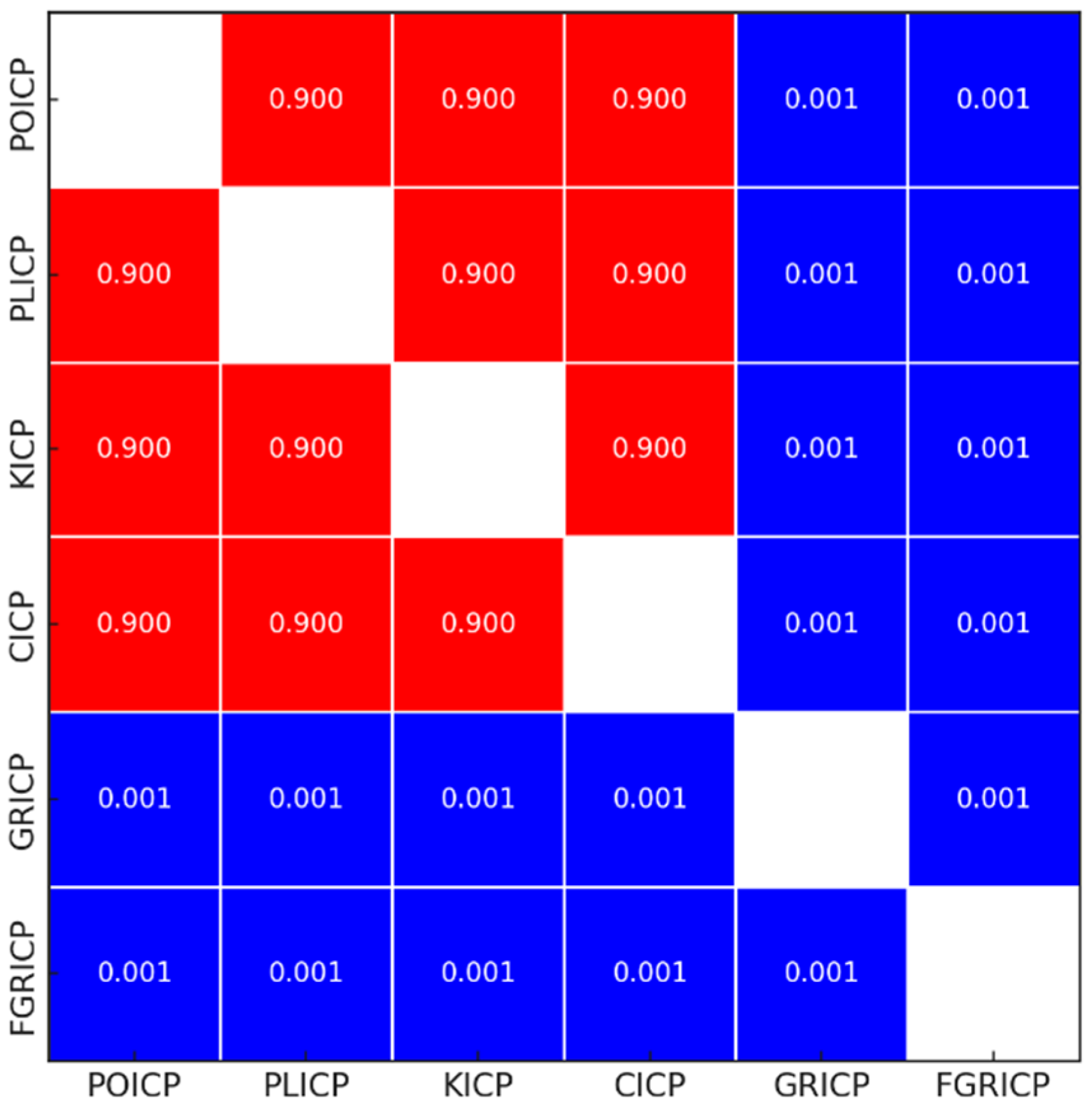

3.6. Significance and Value Analysis

4. Result

4.1. Scenario 1 (Non-Noise Removal)

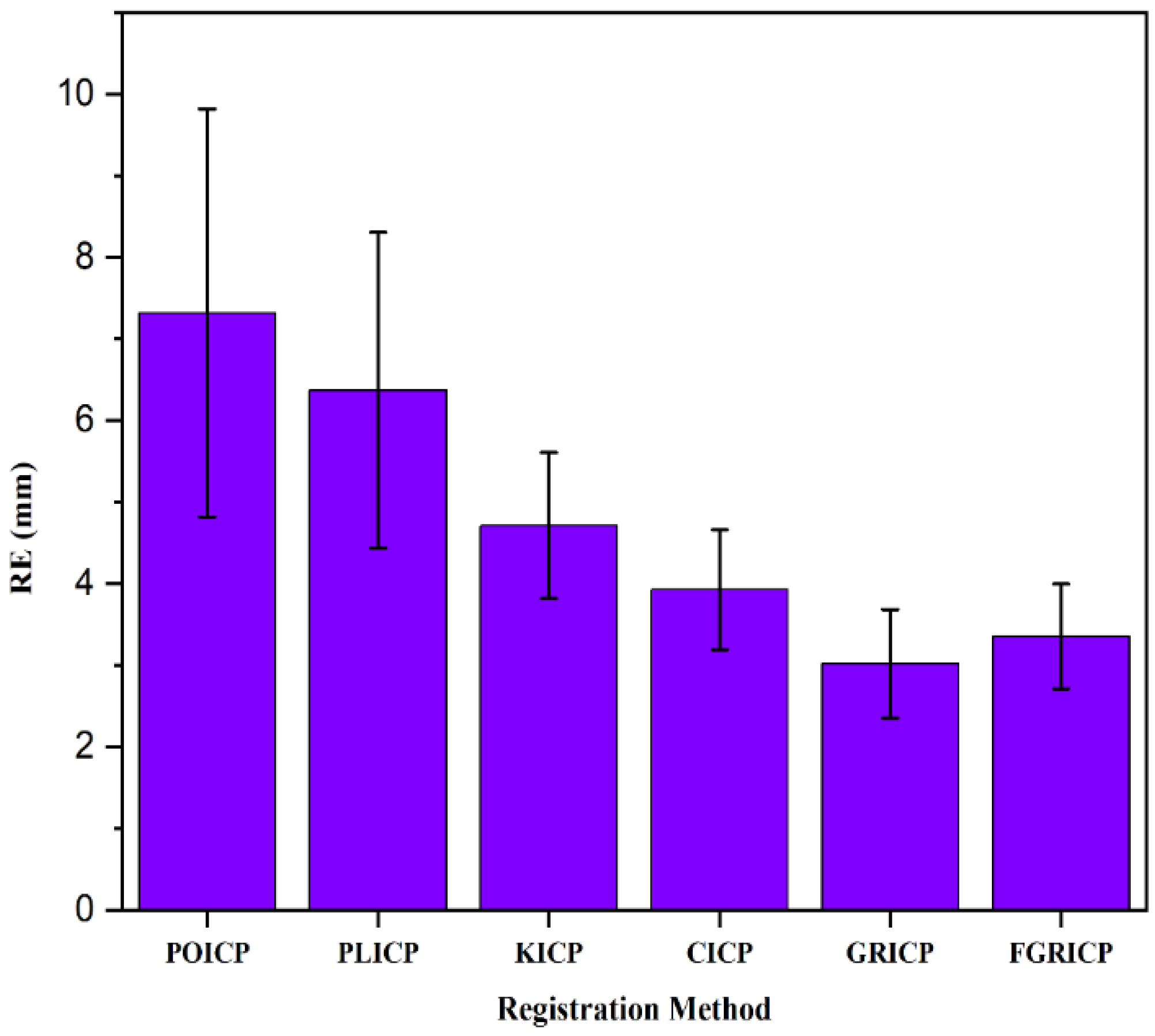

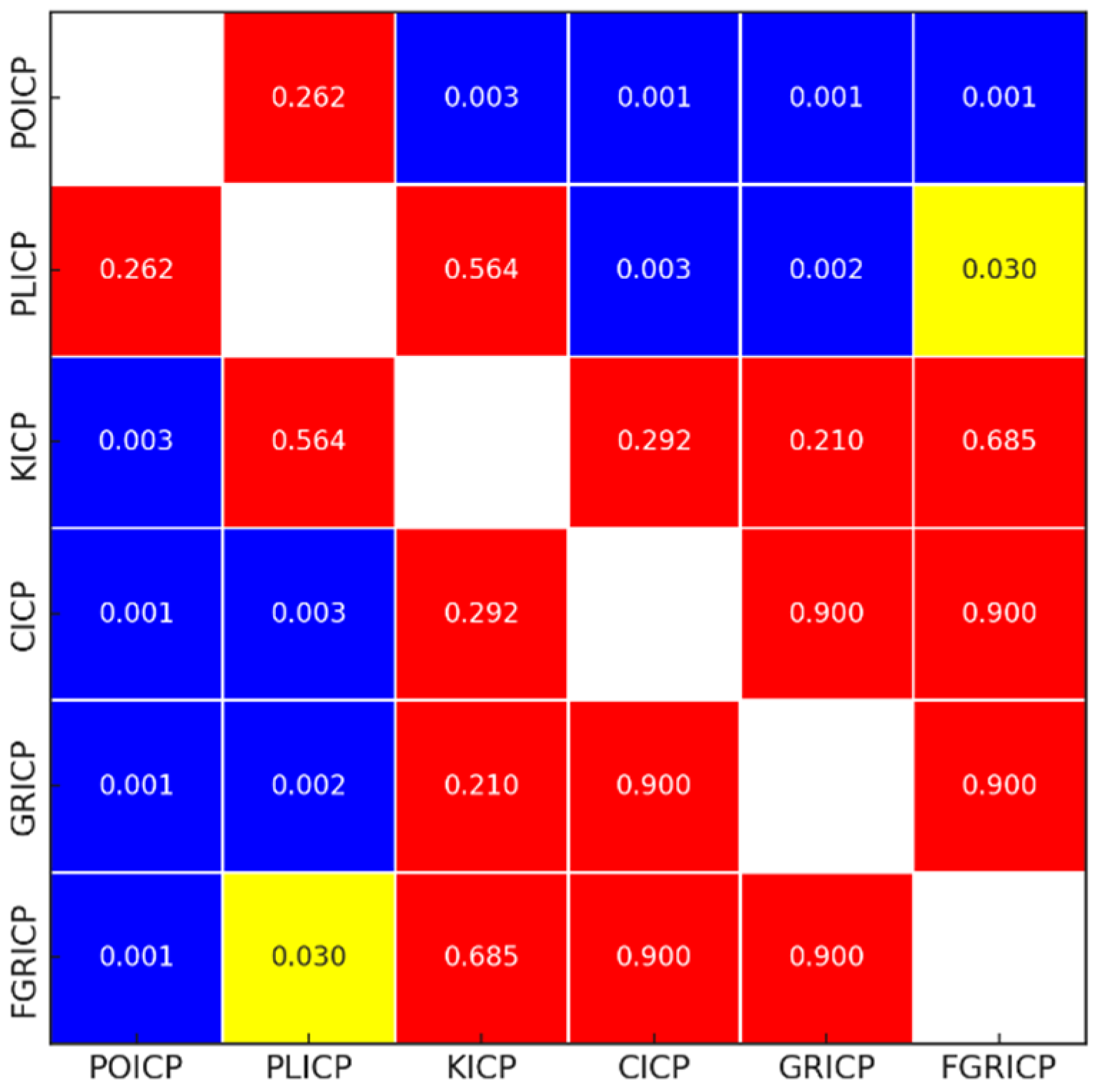

4.1.1. Registration Error

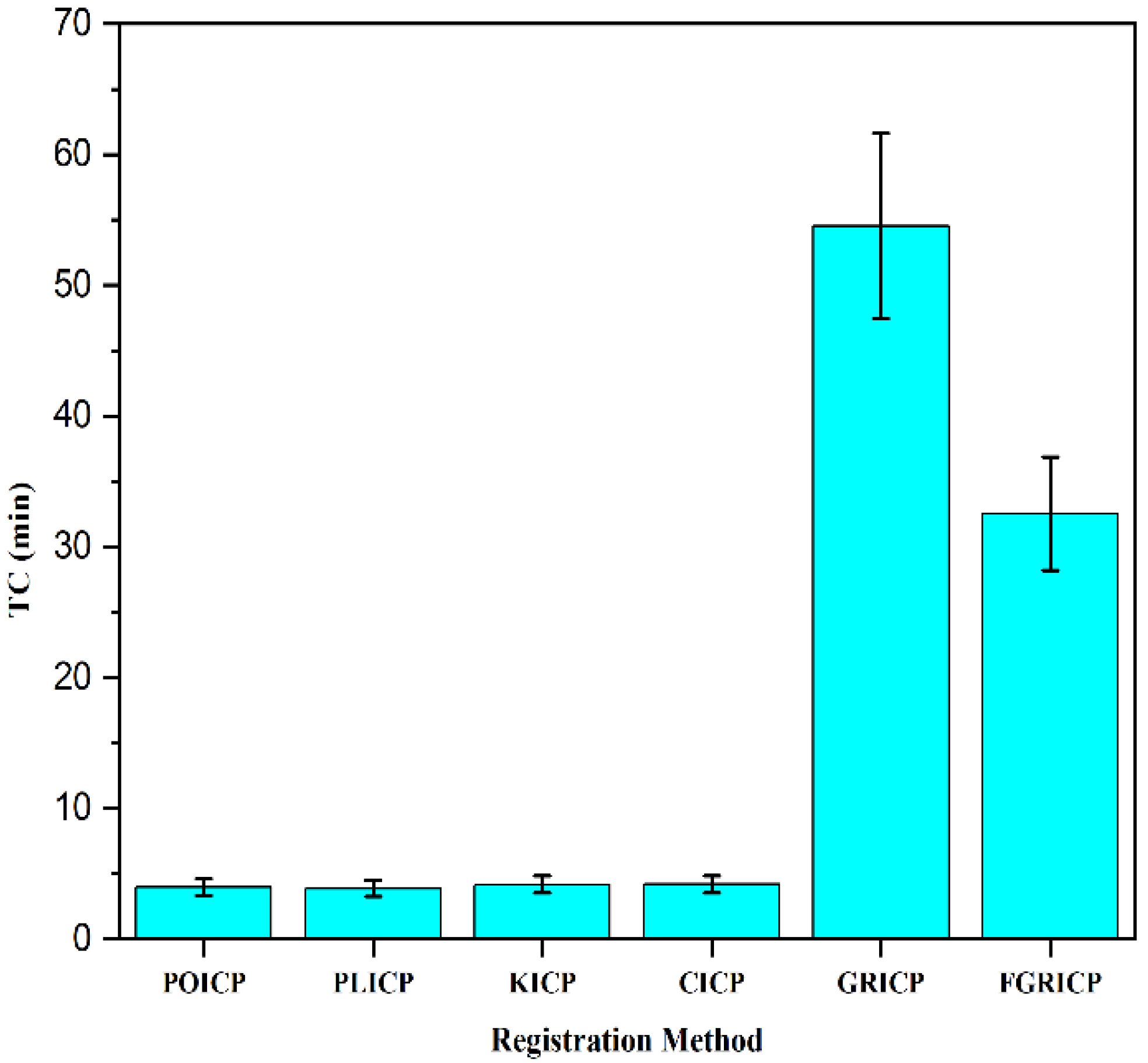

4.1.2. Time Consumption

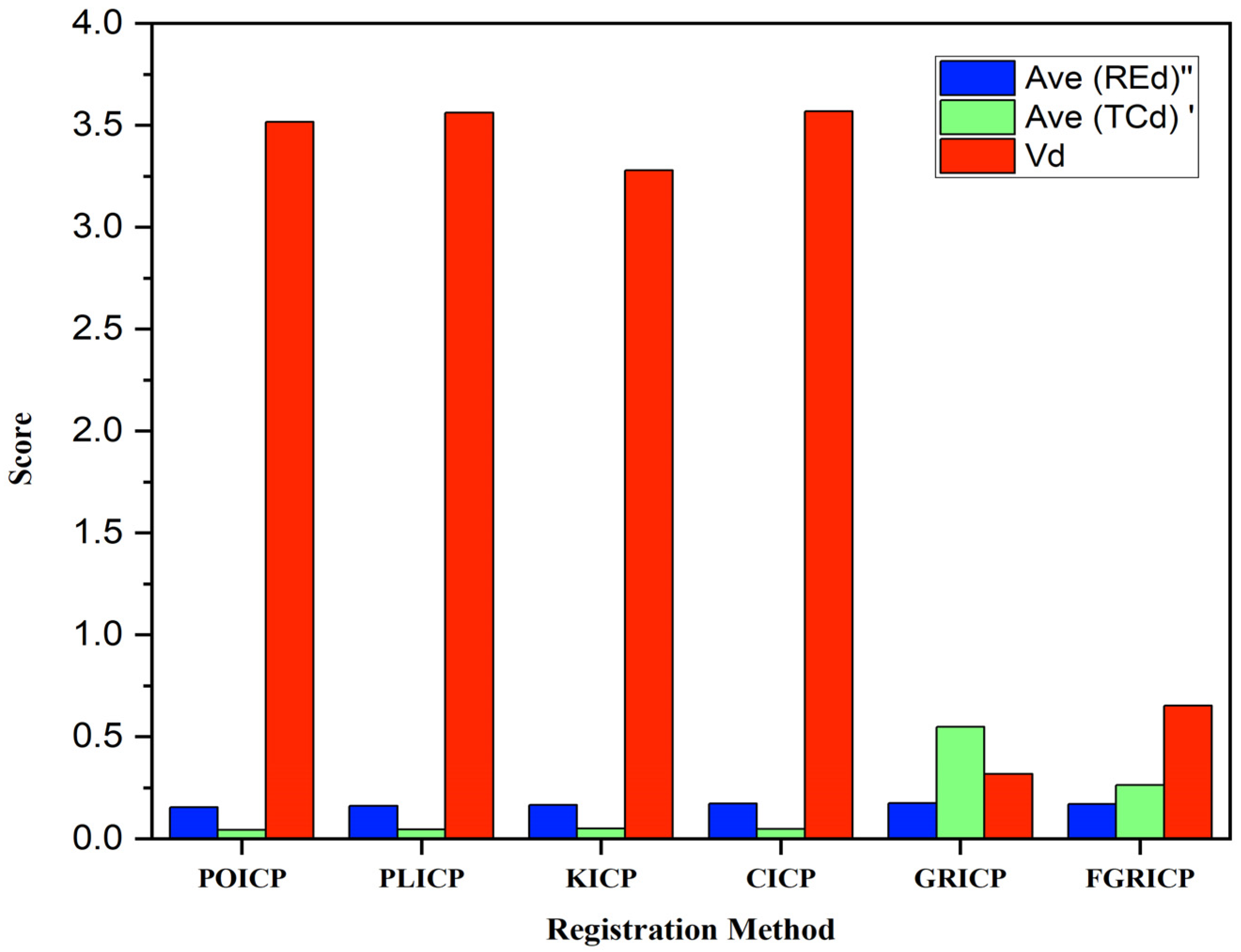

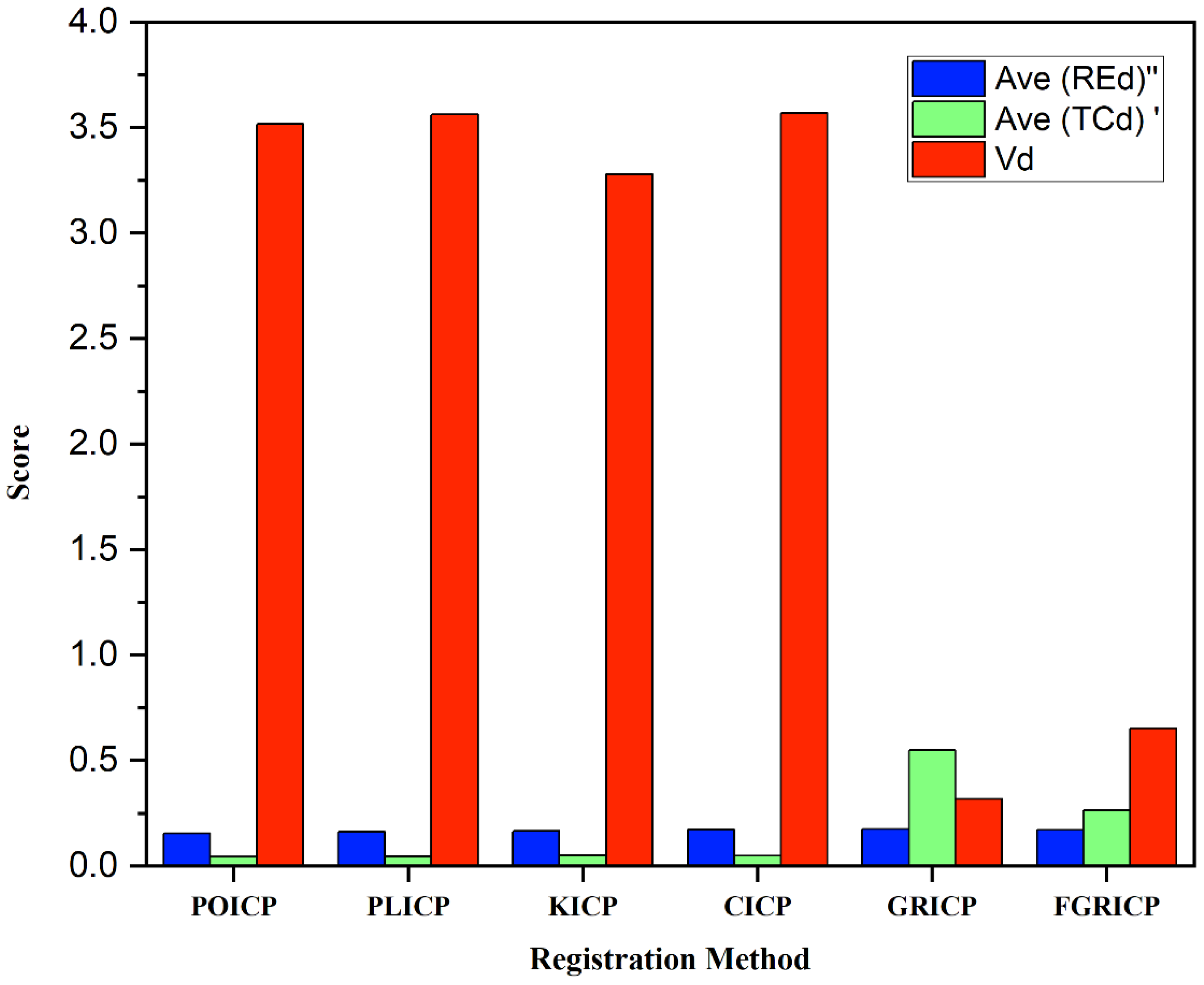

4.1.3. Value Comparison

4.2. Scenario 2 (Noise Removal)

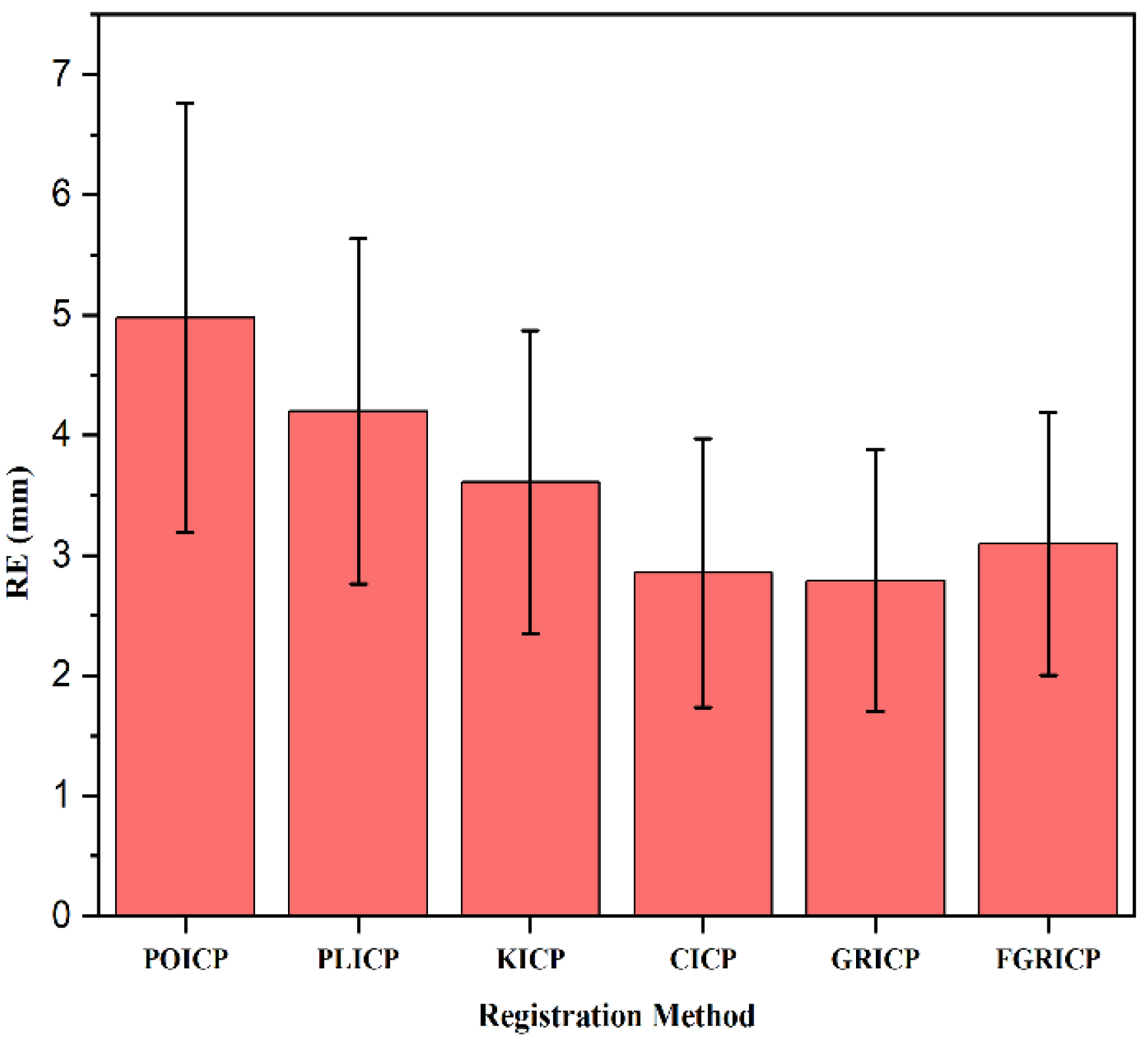

4.2.1. Registration Error

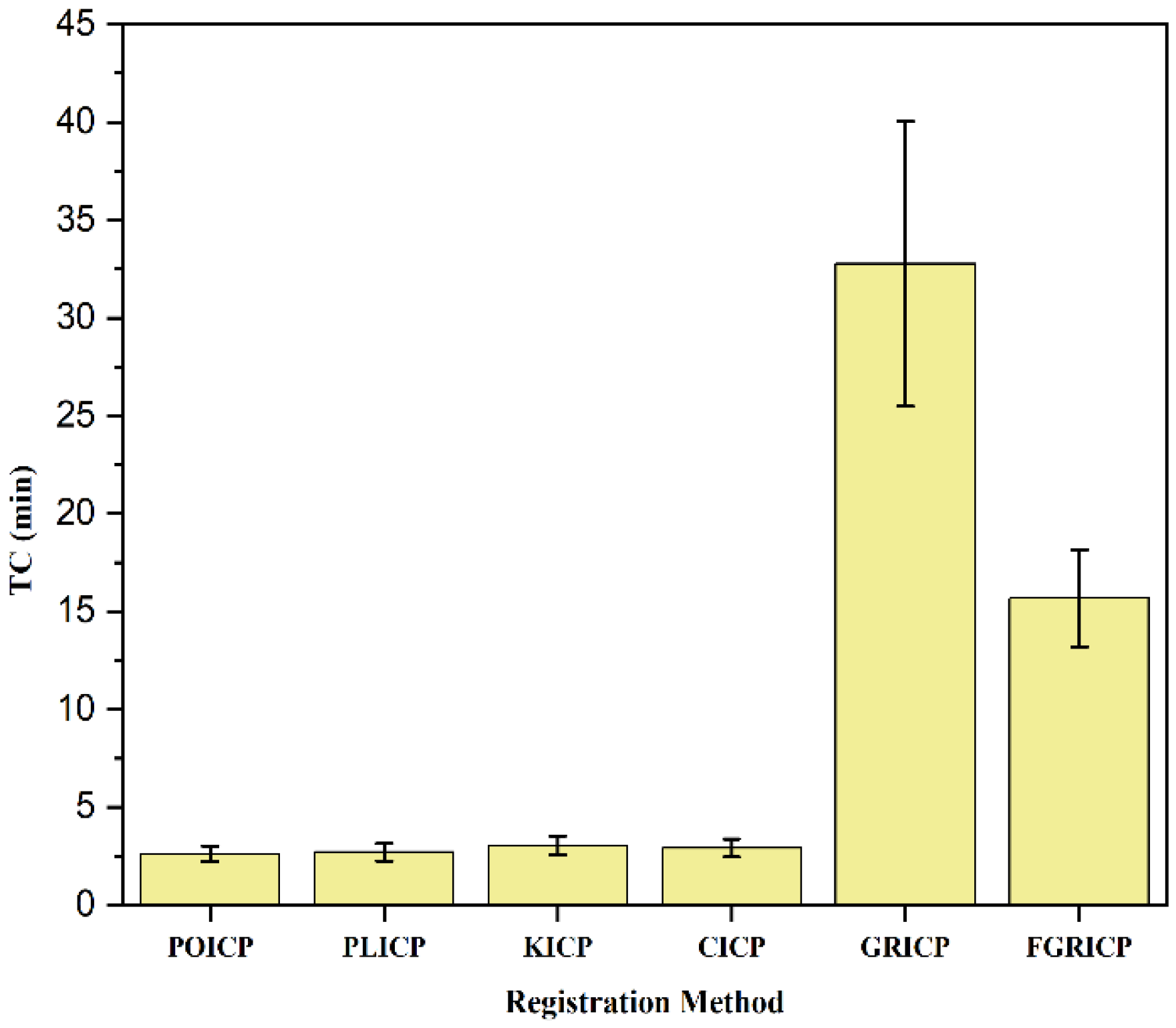

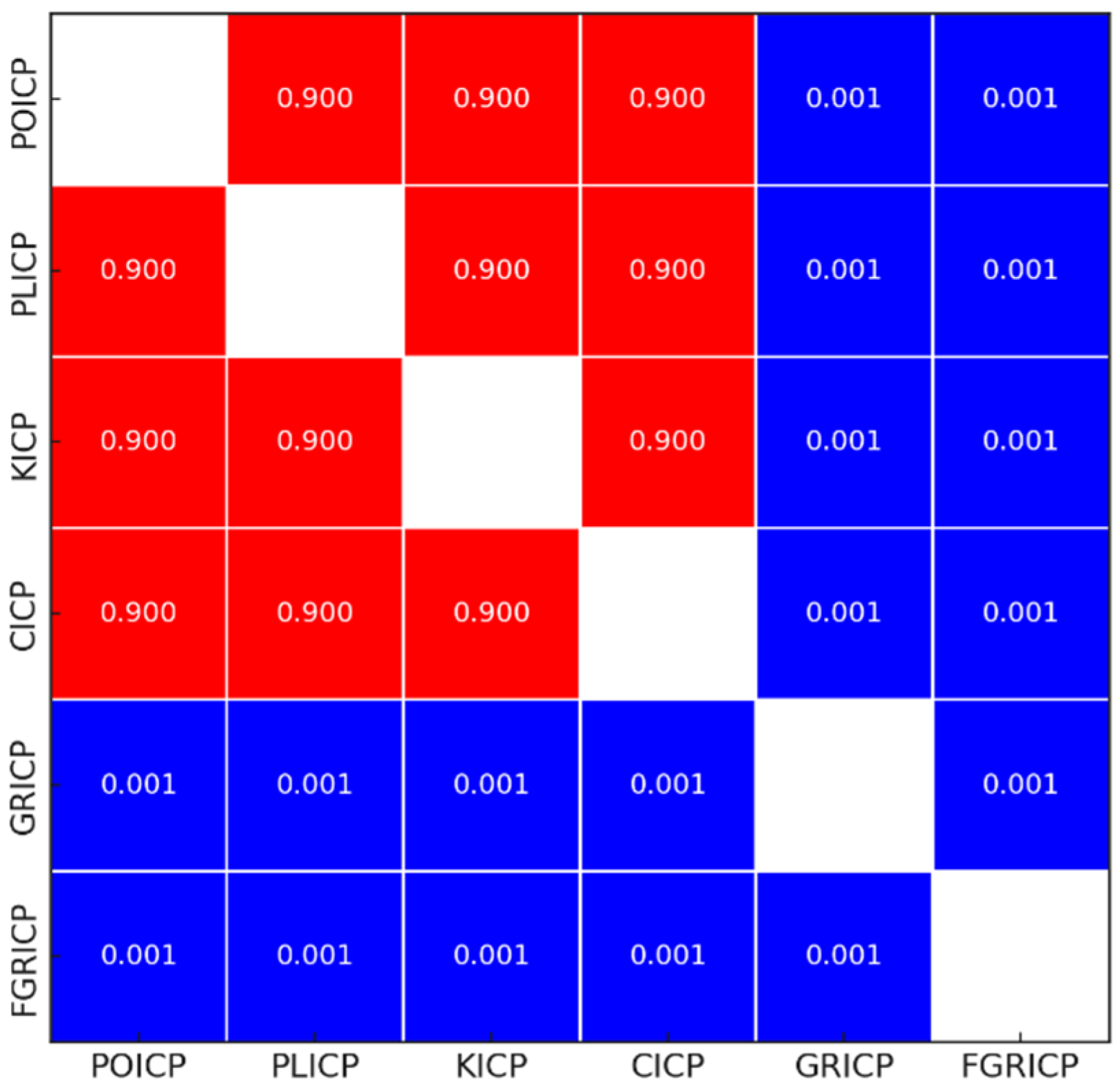

4.2.2. Time Consumption

4.2.3. Value Comparison

4.3. Scenario 1 vs. Scenario 2

5. Discussion

5.1. Key Findings

- Without noise removal, the average registration error (RE) of the six point cloud registration methods, from highest to lowest, was: POICP (7.32 mm), PLICP (6.38 mm), KICP (4.71 mm), CICP (3.93 mm), FGRICP (3.36 mm), and GRICP (3.02 mm). With noise removal, the average RE values, from highest to lowest, were: POICP (4.98 mm), PLICP (4.20 mm), KICP (3.61 mm), FGRICP (3.10 mm), CICP (2.86 mm), and GRICP (2.79 mm).

- Without noise removal, the average time consumption (TC) of the six point cloud registration methods, from highest to lowest, was: GRICP (54.53 min), FGRICP (32.53 min), CICP (4.19 min), KICP (4.18 min), POICP (3.98 min), and PLICP (3.86 min). With noise removal, the average TC values, from highest to lowest, were: GRICP (32.76 min), FGRICP (15.68 min), KICP (3.03 min), CICP (2.90 min), PLICP (2.70 min), and POICP (2.61 min).

- CICP's value engineering score was 4.26 without noise removal and 3.57 with noise removal, the highest score in both conditions. This suggests that CICP is the most cost-effective point cloud registration algorithm in this experiment: its average registration errors were 3.93 mm (non-noise removal) and 2.86 mm (noise removal), and its average time consumption was 4.19 min (non-noise removal) and 2.90 min (noise removal).

- In the value engineering analysis, GRICP scored 0.34 without noise removal and 0.32 with noise removal. These are the lowest scores in both conditions, suggesting relatively low cost-effectiveness. Its average registration errors of 3.02 mm (non-noise removal) and 2.79 mm (noise removal) are the lowest in their respective groups, but its time consumption is by far the longest, at 54.53 min (non-noise removal) and 32.76 min (noise removal).

- Noise removal can significantly improve registration accuracy and reduce computation time for the majority of point cloud registration algorithms. Averaged over the six algorithms in this study, RE fell by 1.20 mm and TC fell by 7.27 min with noise removal compared with no noise removal. For POICP, RE and TC fell by 32% and 34%, respectively. For PLICP, the reductions were 34% in RE and 30% in TC; for KICP, 23% and 28%; for CICP, 27% and 31%; for GRICP, 8% and 40%; and for FGRICP, 8% and 52%. The RE reductions for GRICP and FGRICP are small, and the t-test results do not show them to be statistically significant (p = 0.359 and p = 0.295).
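The reductions quoted above can be rechecked from the reported averages. The short sketch below does only that arithmetic; the dictionary and function names are illustrative, and the input values are the averages listed in this section.

```python
# Reported averages: RE in mm, TC in min.
RE_BEFORE = {"POICP": 7.32, "PLICP": 6.38, "KICP": 4.71,
             "CICP": 3.93, "GRICP": 3.02, "FGRICP": 3.36}
RE_AFTER = {"POICP": 4.98, "PLICP": 4.20, "KICP": 3.61,
            "CICP": 2.86, "GRICP": 2.79, "FGRICP": 3.10}
TC_BEFORE = {"POICP": 3.98, "PLICP": 3.86, "KICP": 4.18,
             "CICP": 4.19, "GRICP": 54.53, "FGRICP": 32.53}
TC_AFTER = {"POICP": 2.61, "PLICP": 2.70, "KICP": 3.03,
            "CICP": 2.90, "GRICP": 32.76, "FGRICP": 15.68}


def mean_reduction(before, after):
    """Average absolute reduction across all methods."""
    return sum(before[m] - after[m] for m in before) / len(before)


def percent_reduction(before, after):
    """Per-method reduction as a percentage of the value before noise removal."""
    return {m: 100 * (before[m] - after[m]) / before[m] for m in before}


for name, pct in percent_reduction(RE_BEFORE, RE_AFTER).items():
    print(f"{name}: RE reduced by {pct:.0f}%")
```

Expressing the change as a percentage of the pre-removal value makes clear that the global methods gain mostly in time, while the local ICP variants gain in both accuracy and time.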

5.2. Future Development Trend

5.3. Experimental Statement and Limitation

- In this study, none of the point cloud data were subsampled during the calculation of RE and TC. The only exception was the calculation of FPFH for GRICP and FGRICP, where subsampling was applied because of the long duration of the global registration process in these methods.

- The measure of TC in this study only encompasses the period from the commencement of the algorithm to the determination and transformation of the source point cloud via the transformation matrix T. It does not include the subsequent time for Registration Error (RE) calculation. The calculation of RE involves traversing all newly formed point pairs within the RE algorithm and computing the Euclidean distance, a process that is notably time-consuming.

- The primary purpose of the RE and TC metrics in this paper is to help evaluate the significance of the registration method and noise removal as influential factors, and to help assess the value of each registration method. The RE and TC results should only be used as a reference under environmental conditions similar to those detailed in this paper, and where no point cloud subsampling is performed. This is because the RE calculation in this study is highly sensitive to environmental factors and to point cloud subsampling. In particular, subsampling that increases the spacing between points can substantially change the results.

- The RE calculation method used in this study has certain limitations, including high sensitivity to subsampling, low robustness, and a tendency toward locally optimal pairings caused by the range search for the nearest points. To address these limitations, the authors propose an alternative approach for future researchers: using markers during scanning. Markers placed in both the source and target point clouds provide known reference points, and the Euclidean distance between corresponding markers after registration can be computed as the RE, giving a more accurate reflection of registration accuracy.
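The marker-based alternative could look like the sketch below. The marker identifiers, the dictionary layout, and the choice to average the per-marker distances are assumptions made for illustration; the text only states that the Euclidean distance between markers would serve as the RE.

```python
import math


def marker_registration_error(source_markers, target_markers):
    """Mean Euclidean distance between markers present in both clouds.

    Each argument maps a marker id to its (x, y, z) position; the source
    positions are taken after the registration transform has been applied.
    """
    shared = source_markers.keys() & target_markers.keys()
    if not shared:
        raise ValueError("no marker appears in both point clouds")
    distances = [math.dist(source_markers[m], target_markers[m]) for m in shared]
    return sum(distances) / len(distances)
```

Because marker correspondences are known in advance, this measure involves no nearest-neighbour search and is therefore unaffected by point spacing or subsampling.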

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Method | Count | Sum | Average | Variance |
|---|---|---|---|---|
| POICP | 28 | 204.87 | 7.32 | 6.49 |
| PLICP | 28 | 178.51 | 6.38 | 3.88 |
| KICP | 28 | 131.95 | 4.71 | 0.82 |
| CICP | 28 | 108.96 | 3.93 | 0.56 |
| GRICP | 28 | 107.97 | 3.02 | 0.42 |
| FGRICP | 28 | 117.39 | 3.36 | 0.46 |

| Source of Variation | SS | df | MS | F | p-Value | F Crit |
|---|---|---|---|---|---|---|
| Between Groups | 415.51 | 5 | 83.10 | 39.43 | 0.000 | 2.27 |
| Within Groups | 341.45 | 162 | 2.11 | | | |
| Total | 756.96 | 167 | | | | |

| Method | Count | Sum | Average | Variance |
|---|---|---|---|---|
| POICP | 28 | 111.48 | 3.98 | 0.42 |
| PLICP | 28 | 108.21 | 3.86 | 0.37 |
| KICP | 28 | 116.95 | 4.18 | 0.43 |
| CICP | 28 | 117.31 | 4.19 | 0.45 |
| GRICP | 28 | 1526.87 | 54.53 | 51.86 |
| FGRICP | 28 | 910.94 | 32.53 | 19.34 |

| Source of Variation | SS | df | MS | F | p-Value | F Crit |
|---|---|---|---|---|---|---|
| Between Groups | 64,964.54 | 5 | 12,992.91 | 1069.61 | 0.000 | 2.27 |
| Within Groups | 1967.87 | 162 | 12.15 | | | |
| Total | 66,932.41 | 167 | | | | |

| Registration Method | Ave (REd)′′ | Ave (TCd)′ | Vd |
|---|---|---|---|
| POICP | 0.15 | 0.04 | 3.87 |
| PLICP | 0.16 | 0.04 | 4.16 |
| KICP | 0.17 | 0.04 | 4.13 |
| CICP | 0.17 | 0.04 | 4.26 |
| GRICP | 0.18 | 0.53 | 0.34 |
| FGRICP | 0.18 | 0.32 | 0.56 |
| Total | 1.00 | 1.00 | 17.31 |
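The value scores in the table above can be approximately reproduced from the average RE and TC values. The formula in this sketch is inferred from the tabulated numbers and is an assumption, not a definition given in this text: the function score is taken as (1 − RE share) / (n − 1), the cost score as the TC share, and the value as function divided by cost.

```python
# Average RE (mm) and TC (min) without noise removal.
RE = {"POICP": 7.32, "PLICP": 6.38, "KICP": 4.71,
      "CICP": 3.93, "GRICP": 3.02, "FGRICP": 3.36}
TC = {"POICP": 3.98, "PLICP": 3.86, "KICP": 4.18,
      "CICP": 4.19, "GRICP": 54.53, "FGRICP": 32.53}


def value_scores(re, tc):
    """Value = function score / cost score for each method (assumed formula)."""
    n = len(re)
    re_total = sum(re.values())
    tc_total = sum(tc.values())
    scores = {}
    for method in re:
        # Lower error gives a higher function score; the scores sum to 1.
        function = (1 - re[method] / re_total) / (n - 1)
        # Cost is the method's share of the total time; the shares sum to 1.
        cost = tc[method] / tc_total
        scores[method] = function / cost
    return scores


for method, v in value_scores(RE, TC).items():
    print(f"{method}: {v:.2f}")
```

Small differences in the last digit against the table are expected, because the inputs here are the rounded averages rather than the underlying measurements.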

| Method | Count | Sum | Average | Variance |
|---|---|---|---|---|
| POICP | 28 | 139.34 | 4.98 | 3.30 |
| PLICP | 28 | 117.59 | 4.20 | 2.14 |
| KICP | 28 | 101.09 | 3.61 | 1.64 |
| CICP | 28 | 79.94 | 2.86 | 1.30 |
| GRICP | 28 | 78.18 | 2.79 | 1.23 |
| FGRICP | 28 | 86.73 | 3.10 | 1.24 |

| Source of Variation | SS | df | MS | F | p-Value | F Crit |
|---|---|---|---|---|---|---|
| Between Groups | 103.98 | 5 | 20.80 | 11.49 | 0.000 | 2.27 |
| Within Groups | 293.16 | 162 | 1.81 | | | |
| Total | 397.14 | 167 | | | | |

| Method | Count | Sum | Average | Variance |
|---|---|---|---|---|
| POICP | 28 | 73.06 | 2.61 | 0.16 |
| PLICP | 28 | 75.51 | 2.70 | 0.20 |
| KICP | 28 | 84.84 | 3.03 | 0.24 |
| CICP | 28 | 81.23 | 2.90 | 0.22 |
| GRICP | 28 | 917.27 | 32.76 | 54.91 |
| FGRICP | 28 | 439.09 | 15.68 | 6.40 |

| Source of Variation | SS | df | MS | F | p-Value | F Crit |
|---|---|---|---|---|---|---|
| Between Groups | 21,201.66 | 5 | 4240.33 | 409.46 | 0.000 | 2.27 |
| Within Groups | 1677.65 | 162 | 10.36 | | | |
| Total | 22,879.31 | 167 | | | | |

| Registration Method | Ave (REd)′′ | Ave (TCd)′ | Vd |
|---|---|---|---|
| POICP | 0.15 | 0.04 | 3.52 |
| PLICP | 0.16 | 0.05 | 3.56 |
| KICP | 0.17 | 0.05 | 3.28 |
| CICP | 0.17 | 0.05 | 3.57 |
| GRICP | 0.17 | 0.55 | 0.32 |
| FGRICP | 0.17 | 0.26 | 0.65 |
| Total | 1.00 | 1.00 | 14.90 |

| Method | t-Value | p-Value | Average RE, Non-Noise Removal (mm) | Average RE, Noise Removal (mm) |
|---|---|---|---|---|
| POICP | 3.96 | 0.000 | 7.32 | 4.98 |
| PLICP | 4.69 | 0.000 | 6.38 | 4.20 |
| KICP | 3.71 | 0.001 | 4.71 | 3.61 |
| CICP | 4.16 | 0.000 | 3.93 | 2.86 |
| GRICP | 0.93 | 0.359 | 3.02 | 2.79 |
| FGRICP | 1.06 | 0.295 | 3.36 | 3.10 |

| Method | t-Value | p-Value | Average TC, Non-Noise Removal (min) | Average TC, Noise Removal (min) |
|---|---|---|---|---|
| POICP | 9.46 | 0.000 | 3.98 | 2.61 |
| PLICP | 8.15 | 0.000 | 3.86 | 2.70 |
| KICP | 7.39 | 0.000 | 4.18 | 3.03 |
| CICP | 8.32 | 0.000 | 4.19 | 2.90 |
| GRICP | 11.15 | 0.000 | 54.53 | 32.76 |
| FGRICP | 17.58 | 0.000 | 32.53 | 15.68 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhu, Z.; Rowlinson, S.; Chen, T.; Patching, A. Exploring the Impact of Different Registration Methods and Noise Removal on the Registration Quality of Point Cloud Models in the Built Environment: A Case Study on Dickabrma Bridge. Buildings 2023, 13, 2365. https://doi.org/10.3390/buildings13092365
