Application of Deep Reinforcement Learning for Proportional–Integral–Derivative Controller Tuning on Air Handling Unit System in Existing Commercial Building

Abstract

1. Introduction

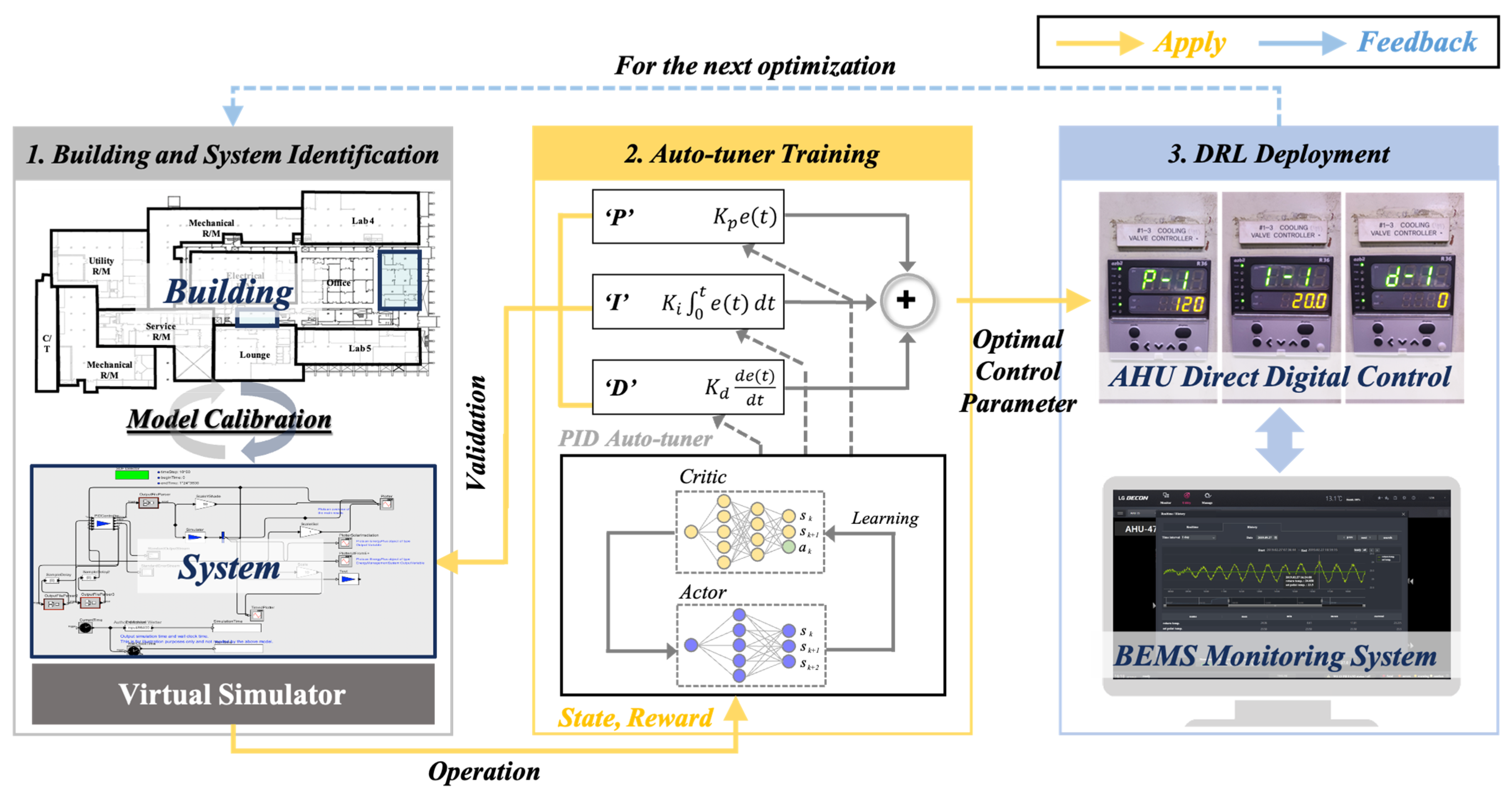

2. Methodology

2.1. Description of Building and HVAC System

2.2. Existing HVAC Control System

2.3. Virtual Simulator

2.4. Deep Deterministic Policy Gradient (DDPG) Algorithm

- (1) State space: the state contains the information relevant to choosing control actions, namely the room temperature Tz(t) of zone z and the user-defined indoor set-point temperature Tset-point(t). The set-point temperature is included because it is the reference for valve control: at each time step (t to t + 1), the PID controller opens or closes the valve according to the difference between the indoor and set-point temperatures.

- (2) Action space: HVAC control allows several parameters to be tuned, for example the cold-water supply governed by the valve that regulates the indoor temperature toward its set point. The valve is driven by a PID controller whose behavior is determined by the gains Kp, Ki, and Kd. The action space in this study therefore consists of Kp, Ki, and Kd.

- (3) State transition: the state transition probability is not modeled explicitly in this MDP, because the thermal dynamics of the HVAC system depend on numerous uncertain factors, such as the supply airflow rate, which in turn depends on the mass flow rate of cold water passing through the valve.

- (4) Reward function: reinforcement learning seeks to maximize a cumulative reward or, equivalently, to minimize a cumulative cost. In this study, the reward is formulated as a cost and minimized. The control objectives, namely accurate temperature control and energy savings (represented by the valve opening position), are encoded by assigning a reward to every state–action pair. The terms are combined using weighting coefficients, which are determined during the training of the algorithm.

- (5) Building control plan: an optimal HVAC controller maximizes the reward accumulated during building operation. It can also be expressed as a (stochastic) policy, that is, a probability distribution over actions conditioned on the current building state.
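
The formulation above can be sketched in code. This is a minimal illustration, not the authors' implementation: the gain bounds (`GAIN_LOW`, `GAIN_HIGH`), the time step `dt`, the reward weights `w_temp` and `w_valve`, and the sign convention for the cooling valve are all assumptions introduced here. The sketch shows how a normalized action in (−1, 1) might be mapped to Kp, Ki, and Kd, how a discrete PID controller turns the temperature error into a valve position, and how a cost combining temperature deviation and valve opening could be formed.

```python
from dataclasses import dataclass

# Hypothetical gain bounds for (Kp, Ki, Kd); not taken from the paper.
GAIN_LOW = (0.0, 0.0, 0.0)
GAIN_HIGH = (400.0, 10.0, 2.0)


def action_to_gains(action):
    """Map a normalized action in [-1, 1]^3 to (Kp, Ki, Kd)."""
    gains = []
    for a, lo, hi in zip(action, GAIN_LOW, GAIN_HIGH):
        a = max(-1.0, min(1.0, a))  # clip to the action range
        gains.append(lo + (a + 1.0) * 0.5 * (hi - lo))
    return tuple(gains)


@dataclass
class PID:
    kp: float
    ki: float
    kd: float
    dt: float = 60.0  # assumed control time step in seconds
    integral: float = 0.0
    prev_error: float = 0.0

    def step(self, t_zone, t_setpoint):
        """Return a cooling valve position in [0, 100] %."""
        # Cooling case (assumed): positive error means the room is too warm.
        error = t_zone - t_setpoint
        self.integral += error * self.dt
        derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        u = self.kp * error + self.ki * self.integral + self.kd * derivative
        # Saturate at the physical valve limits (no anti-windup in this sketch).
        return max(0.0, min(100.0, u))


def cost(t_zone, t_setpoint, valve_pct, w_temp=1.0, w_valve=0.01):
    """Weighted cost: temperature deviation plus valve opening (energy proxy)."""
    return w_temp * abs(t_zone - t_setpoint) + w_valve * valve_pct


kp, ki, kd = action_to_gains((-1.0, 0.0, 1.0))  # (0.0, 5.0, 2.0)
pid = PID(kp=2.0, ki=0.0, kd=0.0)
valve = pid.step(t_zone=25.0, t_setpoint=23.5)  # 2.0 * 1.5 = 3.0 %
```

In a DDPG setting, the actor network would output the normalized action and the agent would be trained to minimize the accumulated cost (or maximize its negative); the PID loop itself stays unchanged, which is what makes the approach applicable to an existing controller.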

3. Results

3.1. System Identification by Building Simulator

3.2. Validation of Low-Level Control Condition

3.3. Convergence of DDPG Algorithm with Control Parameter Estimation

3.4. Performance Evaluation Based on Actual Implementation

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Element | Sub-element | Parameters |
|---|---|---|
| Location | | Seoul (Republic of Korea) |
| Latitude/Longitude | | 37.46991061154597/127.02686081776729 |
| Building type | | Office building |
| Building scale | | B1F/3F |
| Operation schedule | | 5:30~19:00 |
| Area | Total (conditioned) | 32,278 m² (22,594 m²) |
| Occupancy density | | 0.11 person/m² |
| Target section | | 594 m² |
| Composition of envelope (nominal U-value, SHGC) | Wall | Brick [100 mm] + Concrete [200 mm] + Insulation [50 mm] (0.341 W/m²·K) |
| | Floor | Concrete [200 mm] + Insulation [50 mm] (0.384 W/m²·K) |
| | Roof | Concrete [100 mm] (0.855 W/m²·K) |
| | Window | Clear [3 mm] + Air [13 mm] + Clear [3 mm] (2.720 W/m²·K, 0.764); Wall-Window-Ratio = 30% (strip window) |

| Element | Parameters |
|---|---|
| Capacity | 489.02 kW |
| Air delivery | CAV (Constant Air Volume) |
| Air flow rate | 2.5 kg/s |
| Fan pressure | 112 mmAq |
| Coil type | Cooling and heating water coil |
| Set-point temperature | Heating: 20 °C, Cooling: 23.5 °C |
| Plant | Heating: Hot boiler, Cooling: Centrifugal compressor chiller |

| Category | Parameter | Model 1 (Initial) | Model 2 (Iter.-32) | Model 3 (Iter.-165) | Model 4 (Iter.-540) |
|---|---|---|---|---|---|
| Building parameter | Insulation thickness (mm) | 90 | 43 | 50 | 62 |
| | SHGC (-) | 0.70 | 0.32 | 0.65 | 0.54 |
| | Infiltration (ACH) | 1.50 | 5.70 | 3.00 | 3.50 |
| | Thermal Mass (J/kg·m) | 5 | 35 | 15 | 10 |
| Calibration performance | CV(RMSE) | 0.569 | 0.422 | 0.393 | 0.231 |
| | MBE | −0.905 | +0.901 | +0.244 | +0.087 |
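
The two calibration metrics in the table can be computed as sketched below. The definitions use the common normalized form (deviation divided by the mean of the measured data) with n in the denominator; whether the authors used n or n − p, and which sign convention they adopted for MBE, is not stated here, so both are assumptions. The sample values are made up for illustration and are not data from the study.

```python
import math


def cv_rmse(measured, simulated):
    """Coefficient of variation of the RMSE, normalized by the measured mean."""
    n = len(measured)
    mean_m = sum(measured) / n
    rmse = math.sqrt(sum((m - s) ** 2 for m, s in zip(measured, simulated)) / n)
    return rmse / mean_m


def mbe(measured, simulated):
    """Normalized mean bias error; positive when the model under-predicts
    (assumed sign convention)."""
    n = len(measured)
    mean_m = sum(measured) / n
    return sum(m - s for m, s in zip(measured, simulated)) / (n * mean_m)


# Made-up hourly cooling loads (kW), for illustration only.
measured = [10.0, 12.0, 14.0, 12.0]
simulated = [9.0, 13.0, 13.0, 12.0]
print(round(cv_rmse(measured, simulated), 4))  # 0.0722
print(round(mbe(measured, simulated), 4))      # 0.0208
```

A calibration loop of the kind summarized in the table would re-evaluate these two numbers after each parameter update and stop once both fall within the chosen acceptance thresholds.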

| Hyperparameter | DDPG |
|---|---|
| Observation range | (−100, 100) |
| Action range | (−1, 1) |
| Optimizer | Adam |
| Actor learning rate | 0.0004 |
| Critic learning rate | 0.003 |
| Batch size | 128 |
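
For reference, the settings in the table can be collected into a small configuration object. The class name `DDPGConfig`, its field names, and the `clip` helper are hypothetical; treating the observation and action ranges as hard bounds applied before values reach the networks is only one plausible reading of the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DDPGConfig:
    """Hyperparameters from the table; names are illustrative."""
    obs_low: float = -100.0
    obs_high: float = 100.0
    act_low: float = -1.0
    act_high: float = 1.0
    optimizer: str = "Adam"
    actor_lr: float = 4e-4
    critic_lr: float = 3e-3
    batch_size: int = 128


def clip(values, low, high):
    """Clamp every element of `values` to the interval [low, high]."""
    return [max(low, min(high, v)) for v in values]


cfg = DDPGConfig()
obs = clip([23.4, 150.0], cfg.obs_low, cfg.obs_high)     # [23.4, 100.0]
act = clip([-1.7, 0.2, 0.9], cfg.act_low, cfg.act_high)  # [-1.0, 0.2, 0.9]
```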

| | Epoch | Kp | Ki | Kd | Q-Value |
|---|---|---|---|---|---|
| Actual | - | 20.2 | 120 | 0 | - |
| Model (DDPG) | 0 | 0 | 0 | 0 | 1 |
| | 3000 | 0 | 9.9 | 1.3 | 3200 |
| | 6000 | 379.7 | 0.1 | 1.2 | 6600 |
| | 9000 | 168.5 | 8.5 | 0.8 | 7800 |
| | 12,000 | 59.4 | 9.2 | 0.1 | 7850 |
| | 15,000 | 18.7 | 10 | 0.01 | 7955 |

| Metric | Statistic | Existing PID | Auto-Tuned PID |
|---|---|---|---|
| Cooling coil valve position | Min/Max | 0%/80.42% | 10.20%/43.40% |
| | Mean (Standard deviation) | 19.15% (18.94) | 17.41% (12.08) |
| Daily averaged cooling energy consumption (saving rate) | | 131 kWh (-) | 113 kWh (13.71%) |
| Daily averaged room temperature (percentage of satisfied room temperature) | | 23.36 °C (49%) | 23.54 °C (97%) |
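
As a quick check on the table, the saving rate can be recomputed from the two daily averages. Using the rounded values shown (131 kWh and 113 kWh) gives about 13.74%, slightly different from the reported 13.71%; the gap is presumably because the reported figure was computed from unrounded data, although that is an assumption.

```python
def saving_rate(baseline_kwh, tuned_kwh):
    """Relative energy saving in percent with respect to the baseline."""
    return (baseline_kwh - tuned_kwh) / baseline_kwh * 100.0


print(round(saving_rate(131.0, 113.0), 2))  # 13.74
```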


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lee, D.; Jeong, J.; Chae, Y.T. Application of Deep Reinforcement Learning for Proportional–Integral–Derivative Controller Tuning on Air Handling Unit System in Existing Commercial Building. Buildings 2024, 14, 66. https://doi.org/10.3390/buildings14010066
