Integrating Drone Imagery and AI for Improved Construction Site Management through Building Information Modeling

Abstract

1. Introduction

Research Questions and Objectives

2. Literature Review

2.1. Construction Site Management

2.2. Advancements in PCM to BIM Conversion, Photorealistic Rendering, and AI-Driven Object Recognition

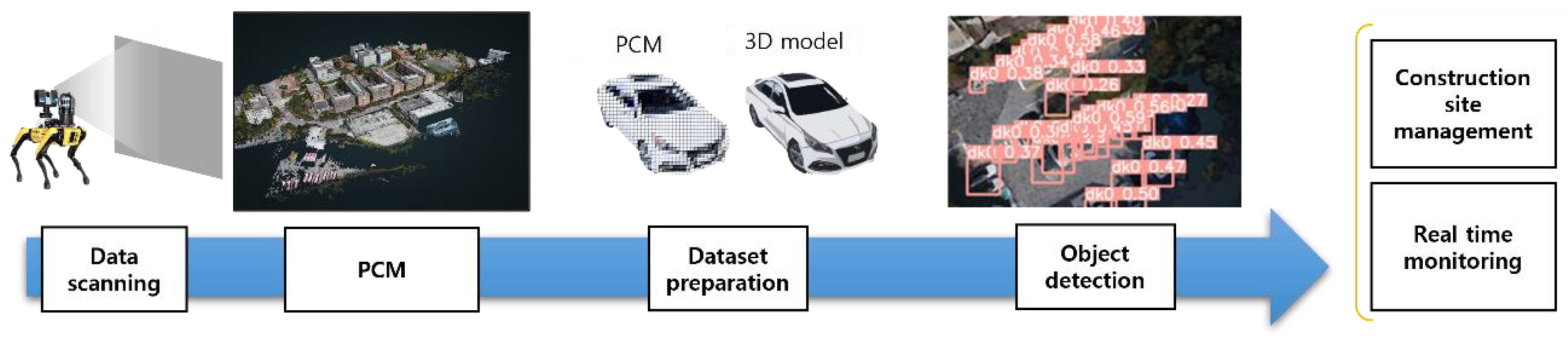

3. Research Method

3.1. Process

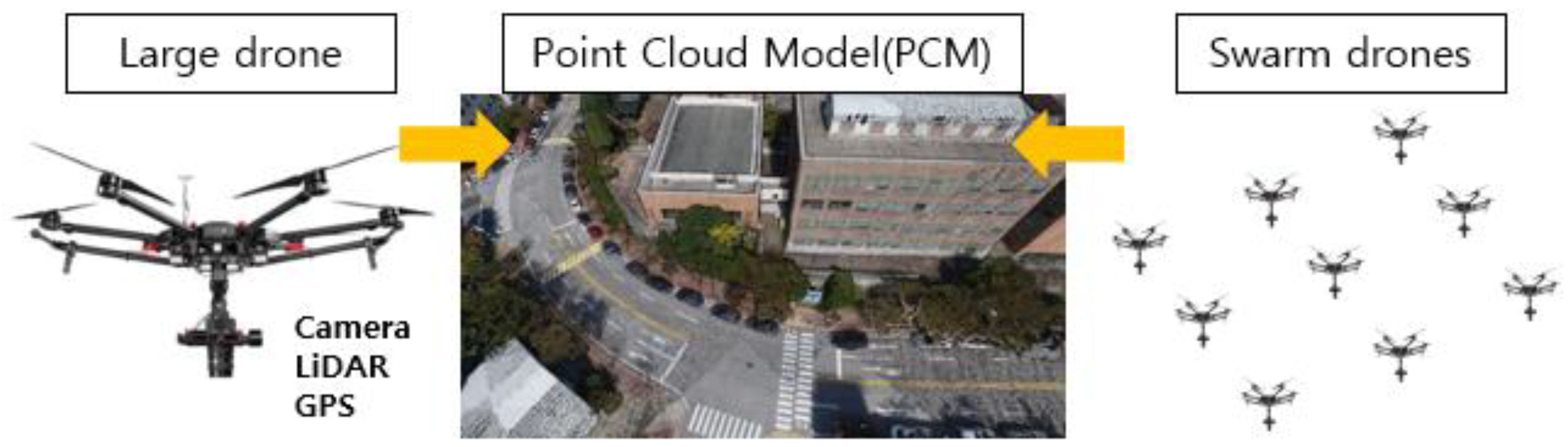

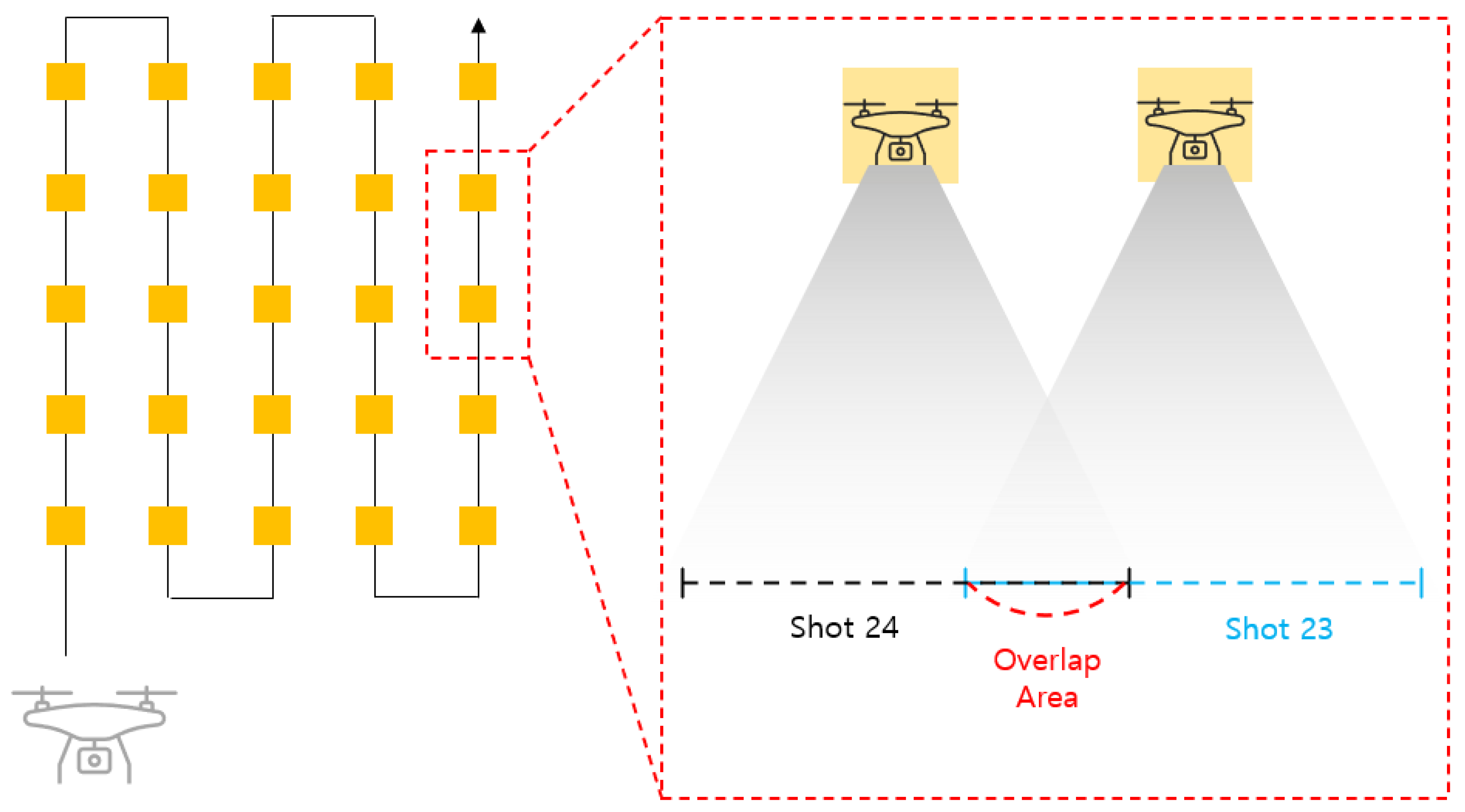

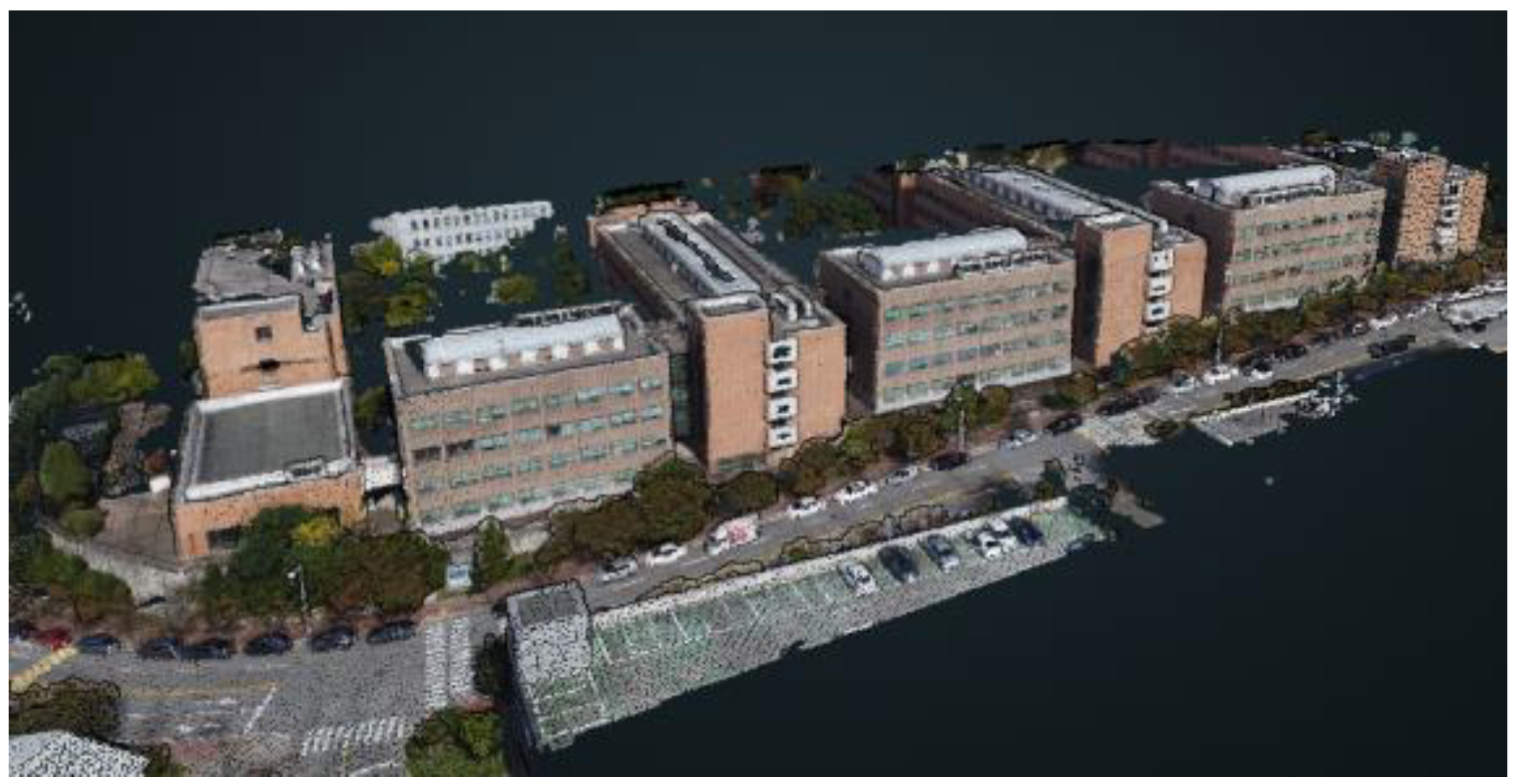

3.2. PCM Generation Using Drones

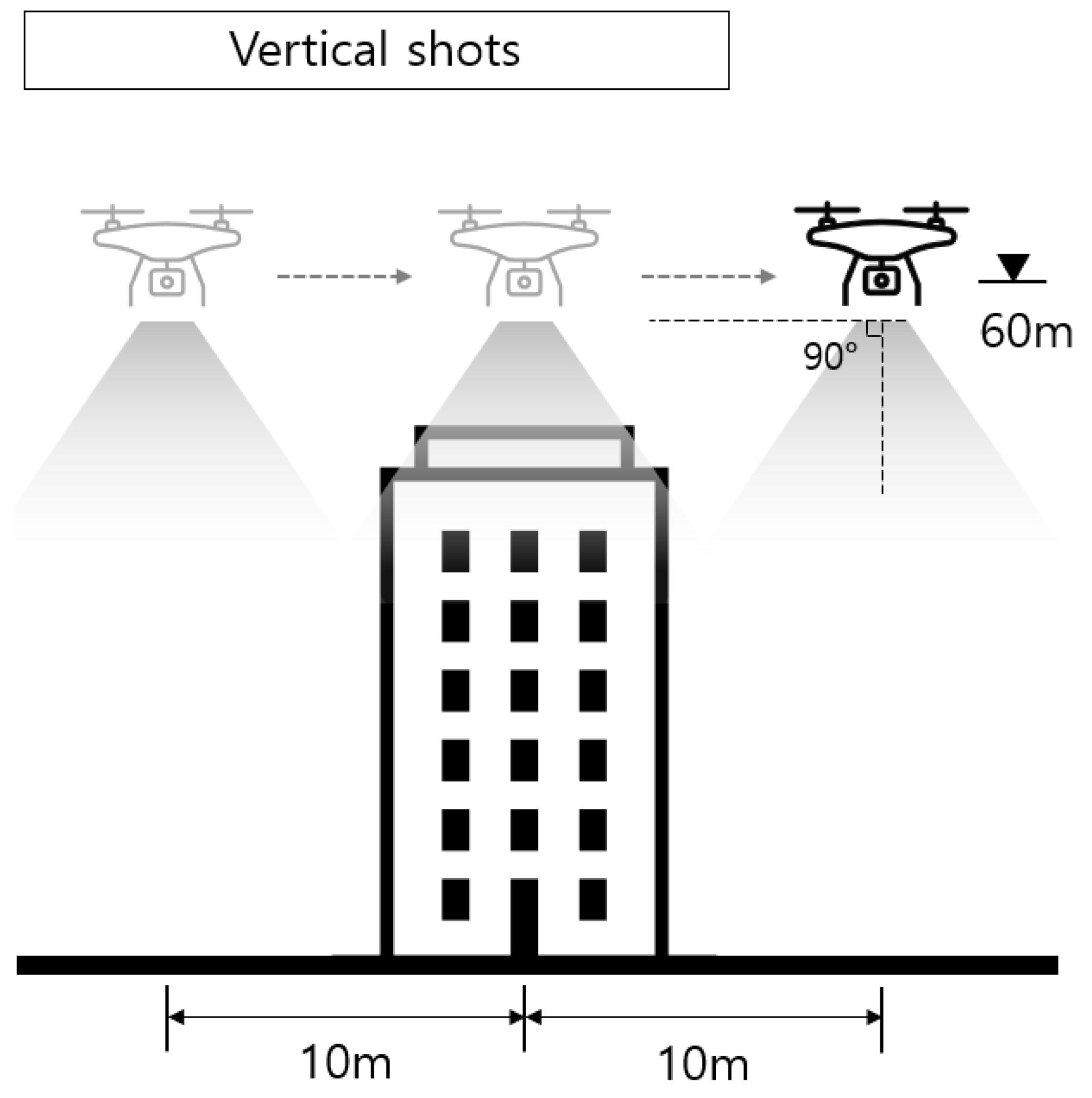

3.3. Vertical Shooting Dataset

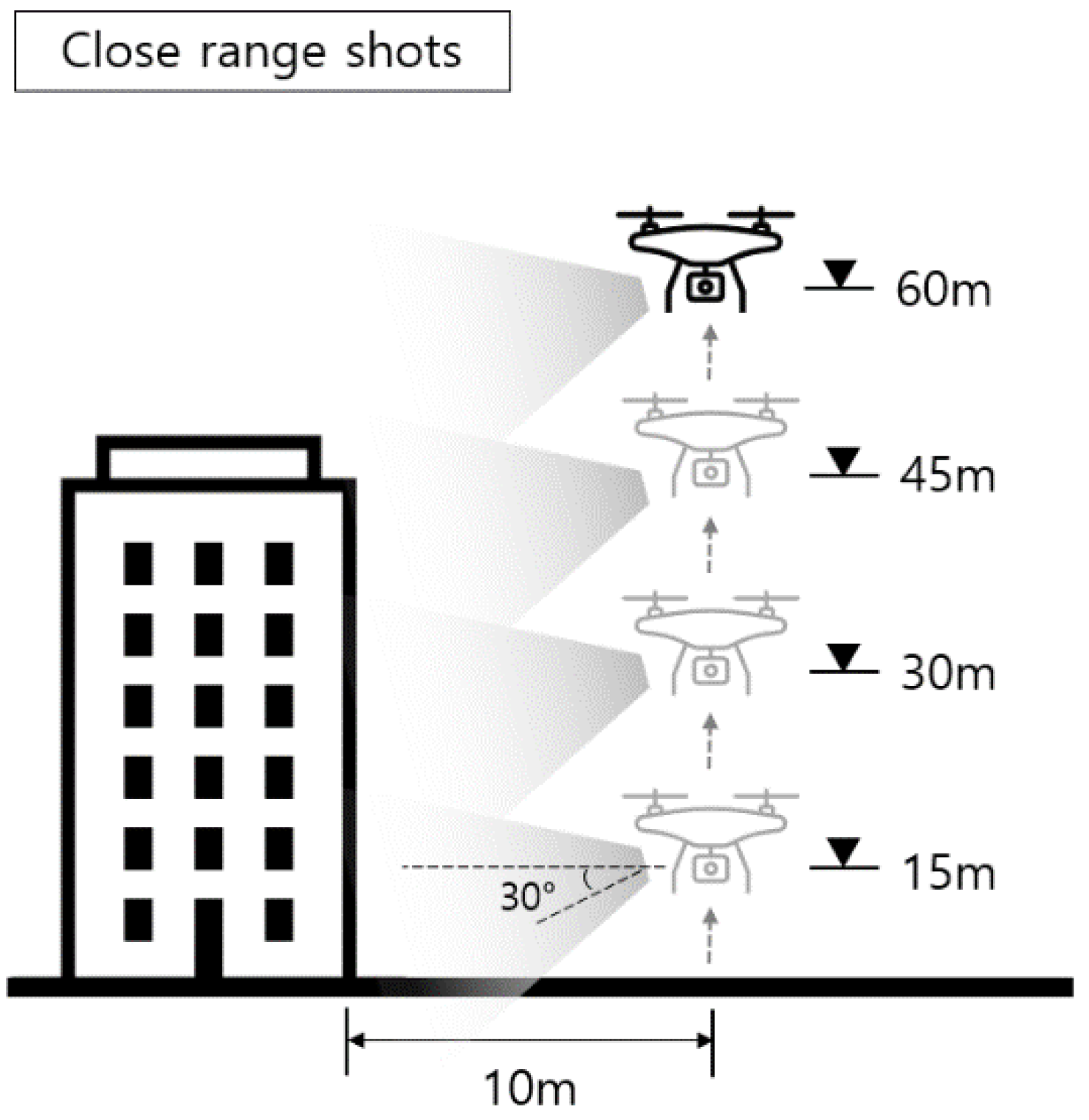

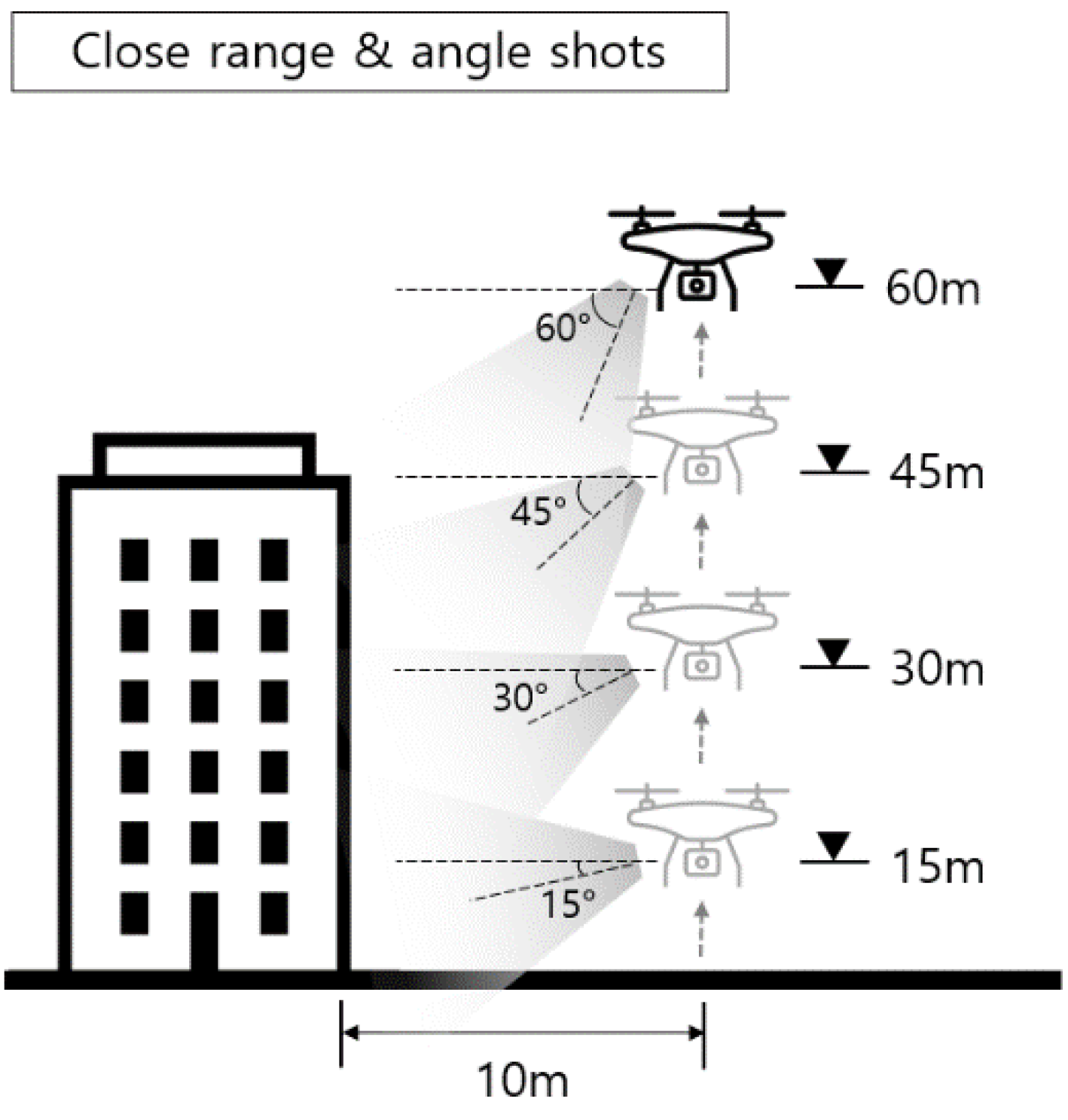

3.4. Close-Range Shooting Dataset

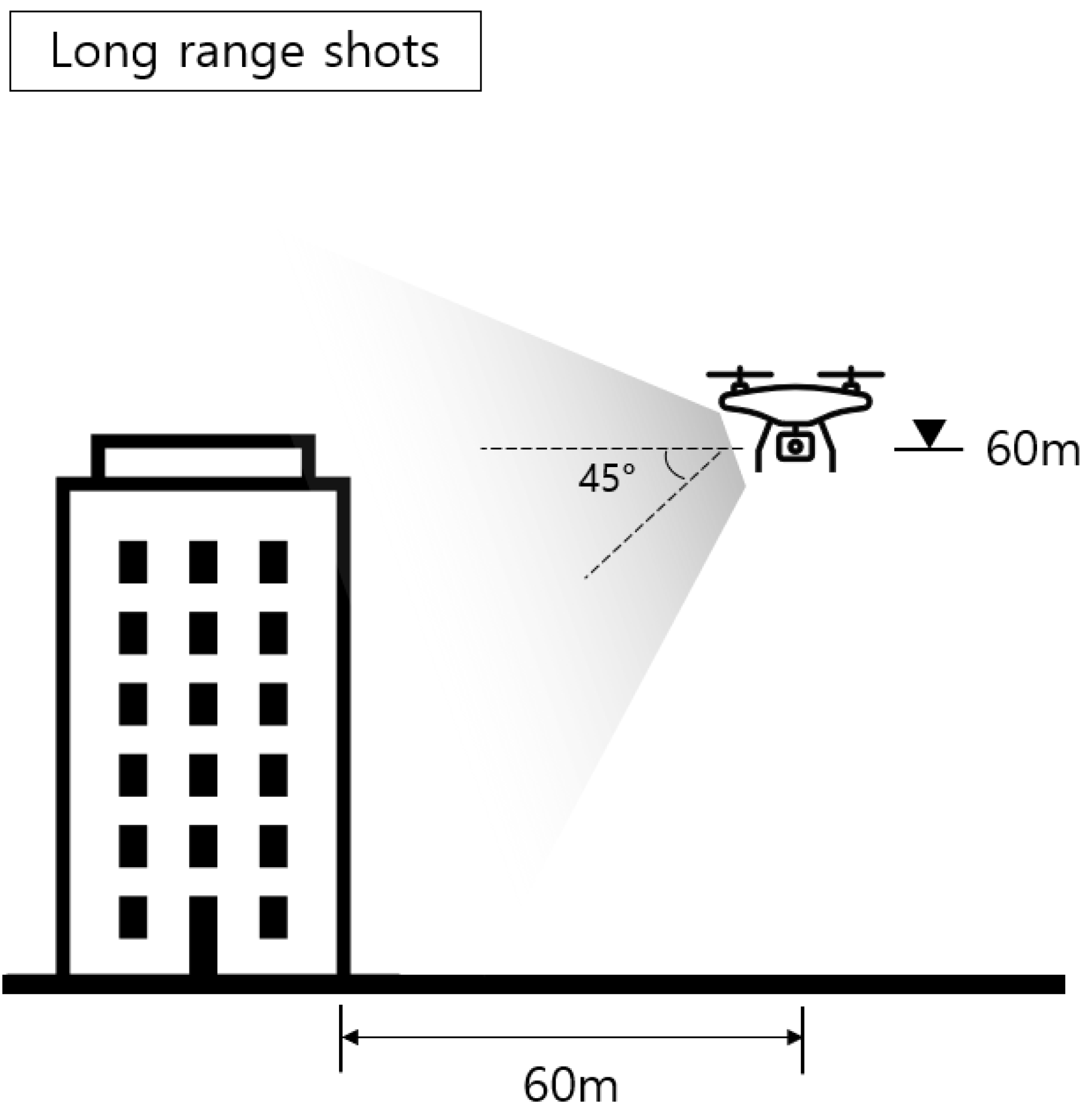

3.5. Long-Range Shooting Dataset

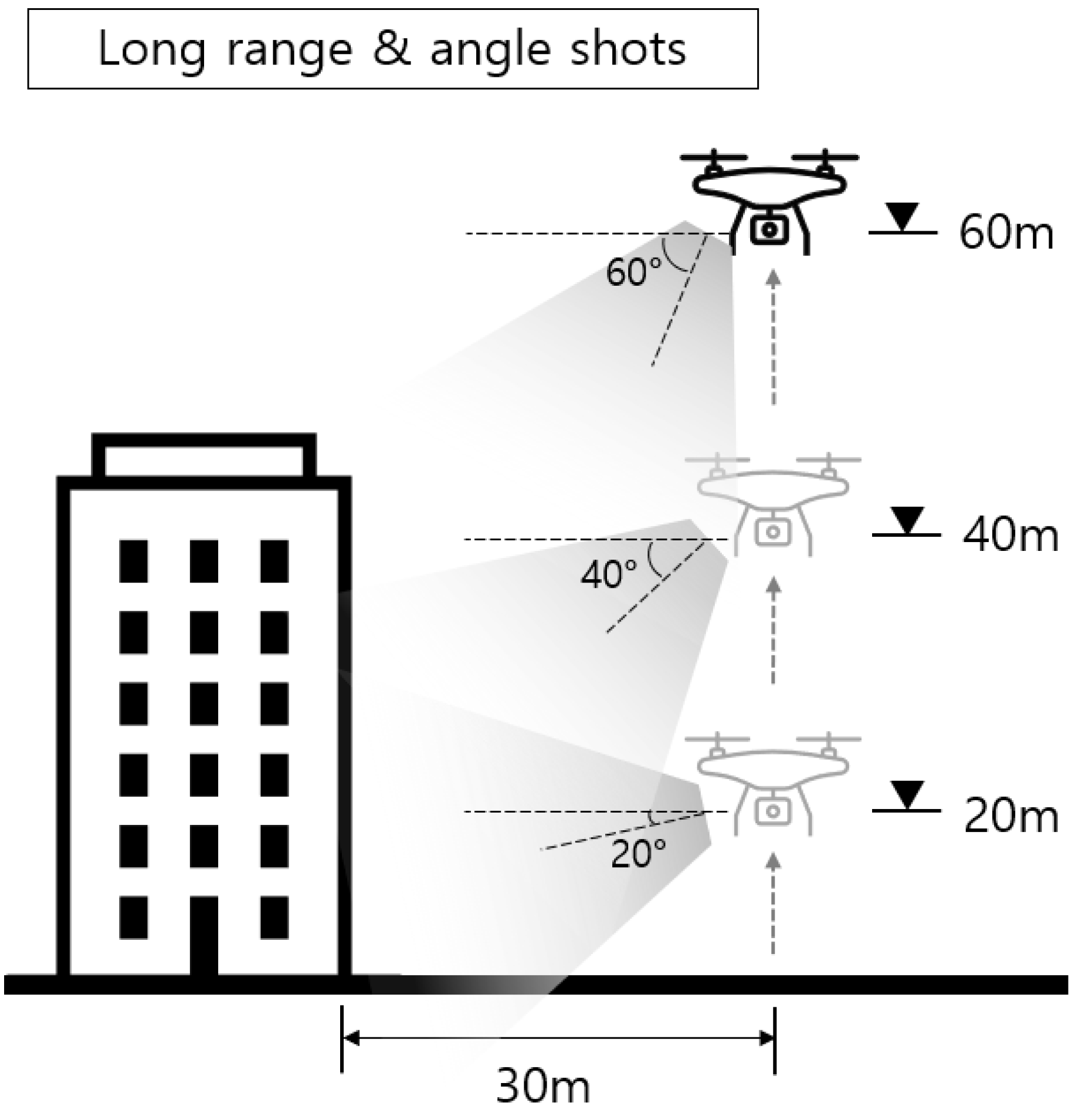

3.6. Very Long-Range Shooting Dataset

4. Discussion

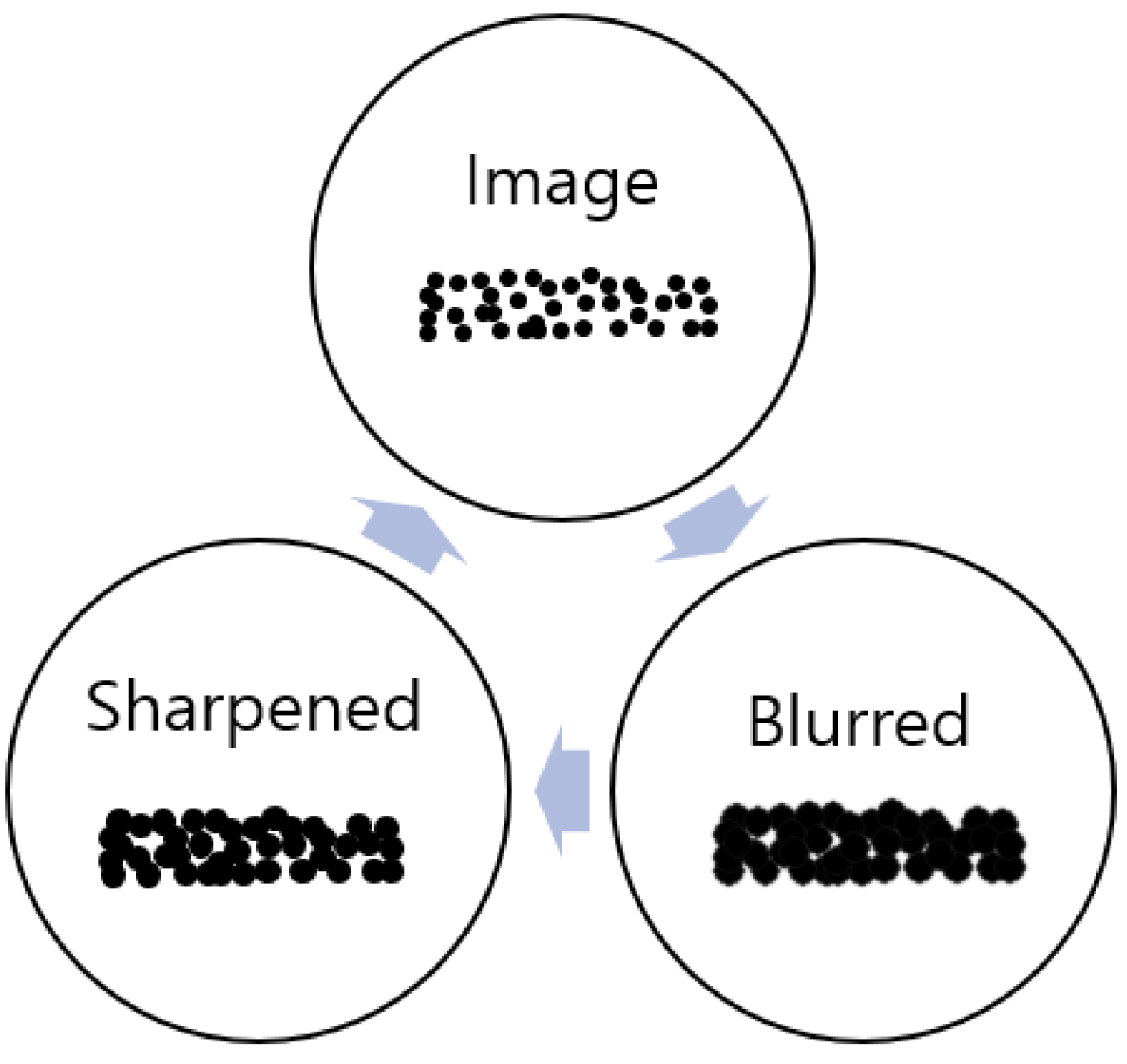

4.1. Data Preprocessing and Noise Reduction

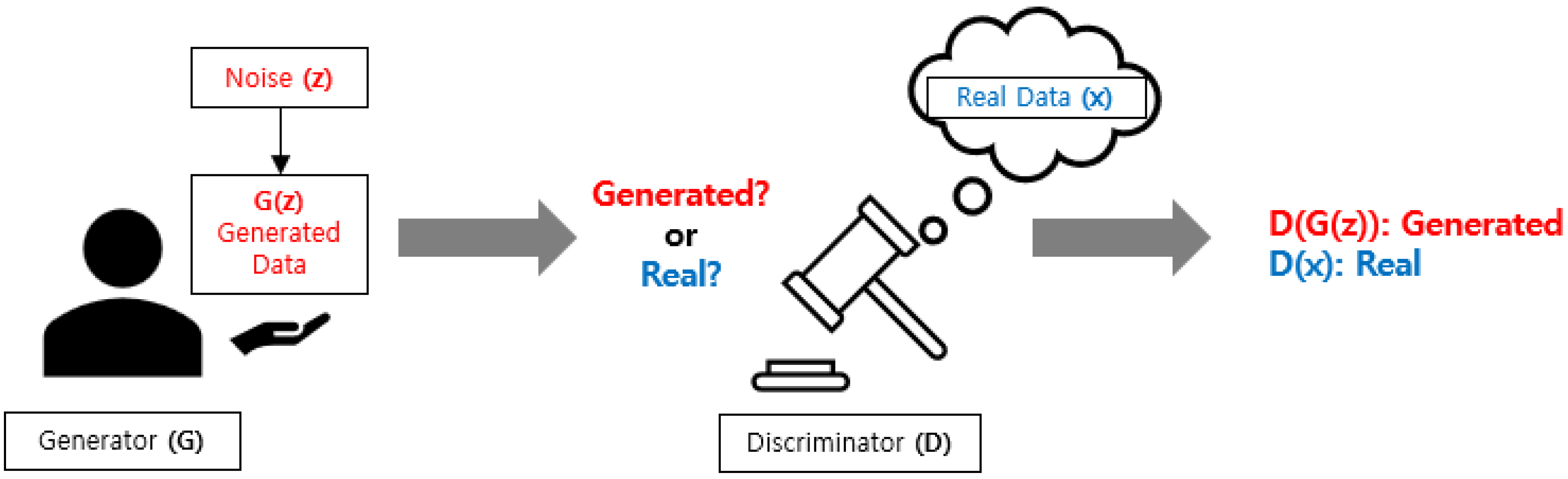

4.2. Generative Adversarial Networks (GAN)

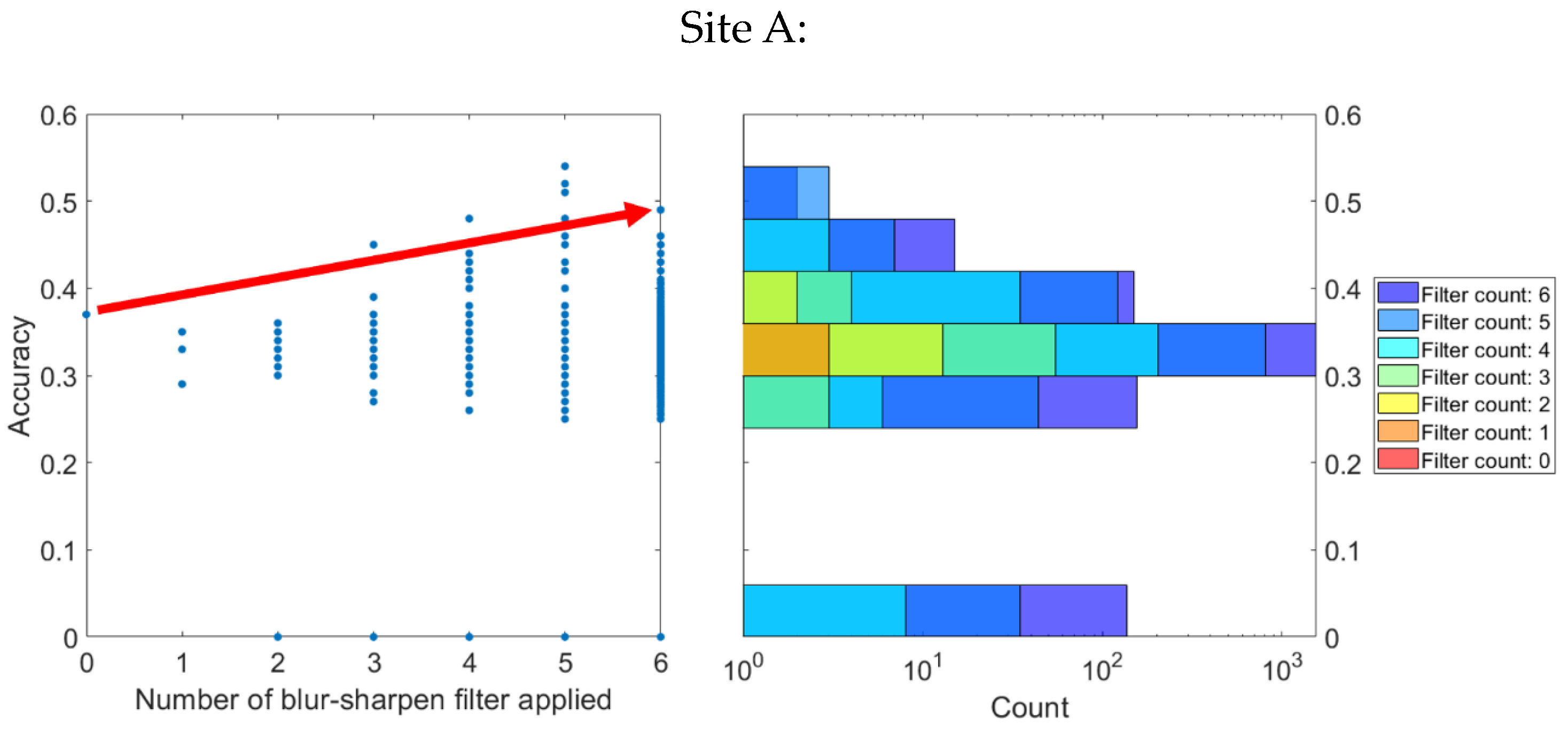

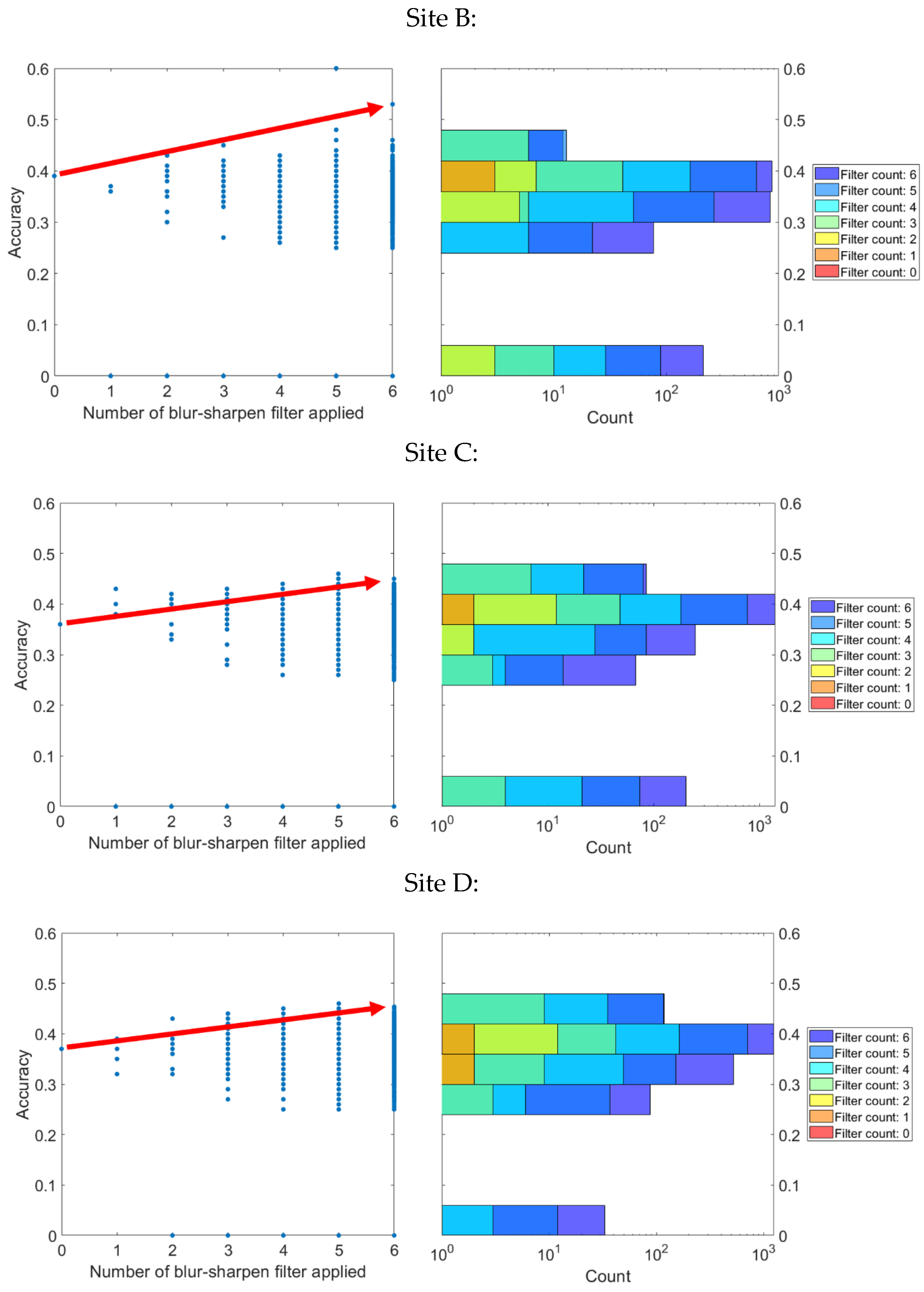

4.3. Fine-Tuning of YOLO v5

4.4. Comparative Analysis of Results

4.5. Limitations

5. Conclusions and Future Research

- (1) Enhanced precision in project planning and monitoring: Accurate PCM models allow for detailed site analysis and monitoring, supporting informed decision-making based on precise, real-time data.

- (2) Improved safety and risk management: Advanced object recognition capabilities can identify potential safety hazards and ensure compliance with safety protocols, thereby mitigating risks and enhancing onsite safety.

- (3) Optimized resource allocation: Detailed insights into site conditions and progress from accurate digital models facilitate better resource allocation, reducing waste and increasing efficiency.

- (4) Streamlined collaboration and communication: Digital models that accurately reflect the construction site condition improve communication among stakeholders, facilitating effective collaboration and coordination.

- (a) The aim of achieving photorealistic rendering for object detection using blur/sharpen filters and GAN models was not fully met, indicating a need for alternative or refined methods.

- (b) The effectiveness of object recognition varied with distance, suggesting further research is needed to optimize recognition at varying distances.

- (c) Practical and scalability challenges emerged when attempting to implement high-quality rendering using advanced AI models on construction sites, indicating the methods may not be directly applicable or scalable to diverse environments.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Version | Characteristics | Note |
|---|---|---|
| YOLOv1 | | |
| YOLOv2 | | |
| YOLOv3 | | |
| YOLOv4 | | |
| YOLOv5 | | |
| YOLOv6~8 | | |

| Case | Shooting Distance (m) | Shooting Altitude (m) | Shooting Angle from Drone (°) | Number of Images | Time (s) | Resolution | Visual Judgment Result |
|---|---|---|---|---|---|---|---|
| Vertical shooting | 0~20 | 70 | 90 | 110 | 420 | 2.5 cm/px | Building well recognized; windows on the side and the walls are not well modeled |
| Close-range shooting | 10 | 15~60 | 30 | 110 | 510 | 0.4 cm/px | Building not recognized |
| Close-range and angled shooting | 10 | 15~60 | 15~60 | 108 | 630 | ~1.6 cm/px | Building not recognized |
| Long-range shooting | 60 | 60 | 45 | 102 | 300 | 2.2 cm/px | Building well recognized |
| Long-range and angled shooting | 30 | 20~60 | 20~60 | 120 | 580 | ~2.5 cm/px | Building well recognized with less empty space |
| Very long-range shooting | 100 | 30~120 | 30~60 | 196 | 460 | ~5 cm/px | Building well recognized except for hard-to-identify small objects |
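To make the capture cadence of each case in the table above easier to compare, the following minimal sketch divides the Time column by the Number of Images column. It assumes that the Time column is the total capture time in seconds for the whole image set, which the table does not state explicitly; the function and variable names are illustrative only.

```python
# (number of images, time in seconds), transcribed from the shooting-case table.
# Assumption: "Time" is the total capture time for the full image set.
CASES = {
    "Vertical shooting": (110, 420),
    "Close-range shooting": (110, 510),
    "Close-range and angled shooting": (108, 630),
    "Long-range shooting": (102, 300),
    "Long-range and angled shooting": (120, 580),
    "Very long-range shooting": (196, 460),
}


def seconds_per_image(num_images: int, total_seconds: float) -> float:
    """Average time spent per captured image."""
    if num_images <= 0:
        raise ValueError("num_images must be positive")
    return total_seconds / num_images


# Average cadence for every case, then the case with the shortest interval.
rates = {name: seconds_per_image(n, t) for name, (n, t) in CASES.items()}
fastest_case = min(rates, key=rates.get)

if __name__ == "__main__":
    for name, rate in sorted(rates.items(), key=lambda item: item[1]):
        print(f"{name}: {rate:.2f} s/image")
```

Under that assumption, the two close-range cases and the long-range angled case spend the most time per image, while the very long-range case spends the least.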

| Category | Flat Area | Area with Elevation Difference | Insufficient Matching Points | Area with High-Rise Buildings |
|---|---|---|---|---|
| Longitudinal overlap rate | 65% | 75% | 75% | 85% |
| Latitudinal overlap rate | 60% | 70% | 70% | 80% |
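The overlap rates above translate into a distance between consecutive exposures once the ground footprint of a single image is known. The sketch below applies the standard relation spacing = footprint × (1 − overlap). It rests on several assumptions that are not in the source: a hypothetical 4000 × 3000 px sensor, the short image side aligned with the flight direction, the 2.5 cm/px resolution reported for vertical shooting, and a reading of "latitudinal" as the overlap between adjacent flight lines.

```python
# Overlap rates (longitudinal, latitudinal) transcribed from the table above.
OVERLAP = {
    "Flat area": (0.65, 0.60),
    "Area with elevation difference": (0.75, 0.70),
    "Insufficient matching points": (0.75, 0.70),
    "Area with high-rise buildings": (0.85, 0.80),
}

# Hypothetical camera values, NOT taken from the source.
IMAGE_WIDTH_PX = 4000   # assumed across-track image size
IMAGE_HEIGHT_PX = 3000  # assumed along-track image size
GSD_CM_PER_PX = 2.5     # resolution reported for the vertical shooting case


def footprint_m(pixels: int, gsd_cm_per_px: float) -> float:
    """Ground length covered by one image side, in metres."""
    return pixels * gsd_cm_per_px / 100.0


def spacing_m(footprint: float, overlap: float) -> float:
    """Distance between neighbouring exposures for a given overlap rate."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    return footprint * (1.0 - overlap)


def plan(category: str) -> tuple[float, float]:
    """Return (along-track spacing, flight-line spacing) in metres."""
    longitudinal, latitudinal = OVERLAP[category]
    along = spacing_m(footprint_m(IMAGE_HEIGHT_PX, GSD_CM_PER_PX), longitudinal)
    across = spacing_m(footprint_m(IMAGE_WIDTH_PX, GSD_CM_PER_PX), latitudinal)
    return along, across


if __name__ == "__main__":
    for category in OVERLAP:
        along, across = plan(category)
        print(f"{category}: {along:.2f} m along track, {across:.2f} m between lines")
```

With these assumed camera values, moving from a flat area to an area with high-rise buildings more than halves the along-track spacing, which is consistent with the larger image counts such sites require.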

| Phase | Explanation | Results |
|---|---|---|
| Blur/sharpen filter | | |
| GAN | | |
| Fine-tuning | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Choi, W.; Na, S.; Heo, S. Integrating Drone Imagery and AI for Improved Construction Site Management through Building Information Modeling. Buildings 2024, 14, 1106. https://doi.org/10.3390/buildings14041106