A Framework for Auditing Robot-Inclusivity of Indoor Environments Based on Lighting Condition

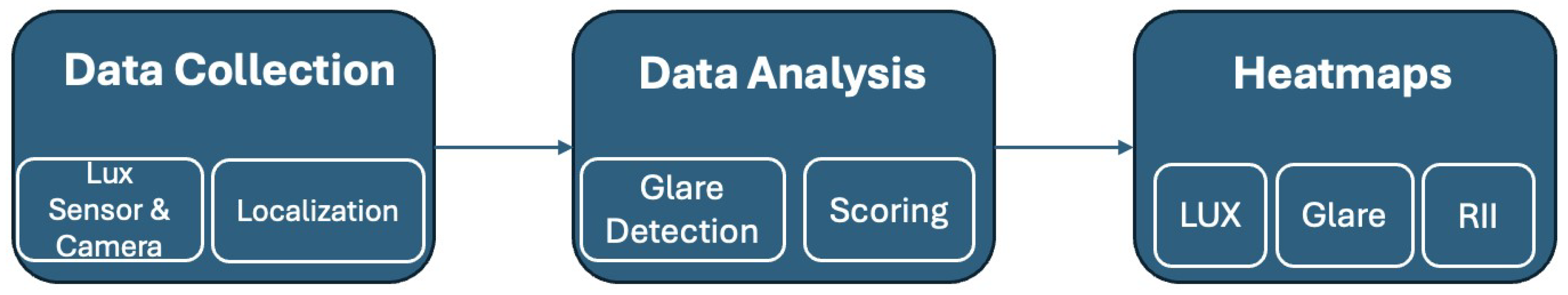

Abstract

1. Introduction

2. Evaluating Robot-Inclusivity

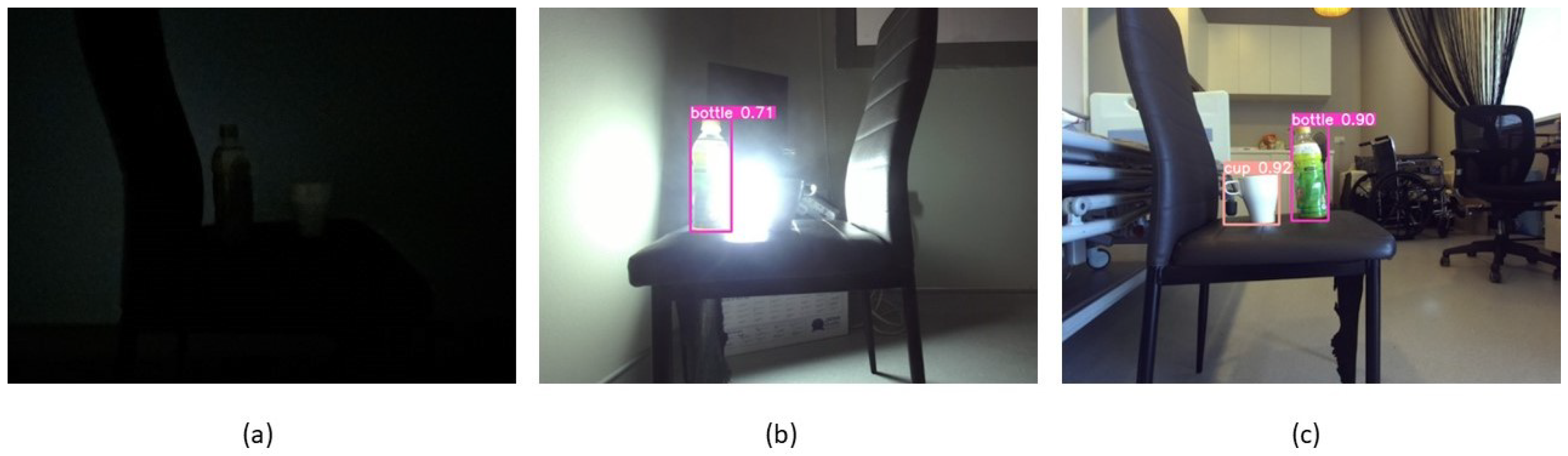

2.1. Effect of Lighting Condition on Object Detection

2.2. Measuring Light Intensity

2.3. Identifying Glare

Algorithm 1: Calculate Glare Percentage
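As an illustration only, a glare percentage can be sketched as the share of image pixels that look like glare. This minimal sketch assumes that a glare pixel is one that is very bright and weakly saturated in HSV space, converted with Python's standard-library `colorsys.rgb_to_hsv`. The threshold values `V_MIN` and `S_MAX` and the helper names are hypothetical and are not taken from Algorithm 1.

```python
import colorsys
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # 8-bit (R, G, B)

# Hypothetical thresholds for illustration, NOT the values used in Algorithm 1.
V_MIN = 0.95  # near-maximal brightness (HSV value)
S_MAX = 0.20  # nearly unsaturated, i.e. white-ish (HSV saturation)


def is_glare(pixel: Pixel, v_min: float = V_MIN, s_max: float = S_MAX) -> bool:
    """Assumed glare test: very bright and weakly saturated in HSV space."""
    r, g, b = (c / 255.0 for c in pixel)  # colorsys expects values in [0, 1]
    _, s, v = colorsys.rgb_to_hsv(r, g, b)
    return v >= v_min and s <= s_max


def glare_percentage(image: List[List[Pixel]]) -> float:
    """Percentage of pixels in an RGB image (list of rows) flagged as glare."""
    total = 0
    glare = 0
    for row in image:
        for pixel in row:
            total += 1
            if is_glare(pixel):
                glare += 1
    if total == 0:
        raise ValueError("image has no pixels")
    return 100.0 * glare / total


if __name__ == "__main__":
    # 2x2 toy image: pure white, near-white, dark grey, saturated red.
    img = [
        [(255, 255, 255), (250, 248, 245)],
        [(30, 30, 30), (255, 0, 0)],
    ]
    print(glare_percentage(img))  # 50.0
```

The saturation check is what separates glare from bright but strongly coloured surfaces: the red pixel has maximal value yet is not counted.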

2.4. Quantifying the Robot-Inclusivity

Algorithm 2: Calculate RII-Lux

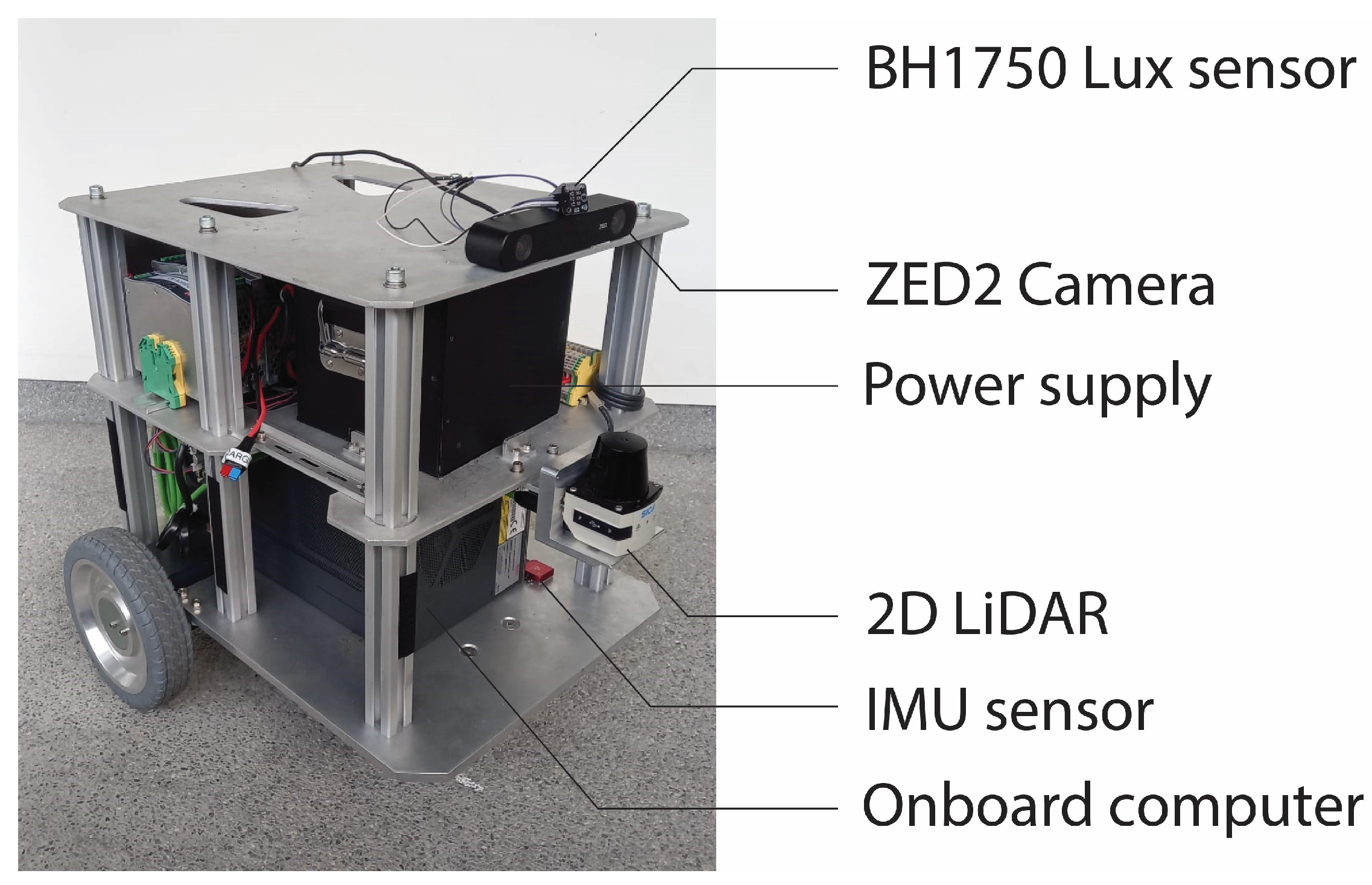

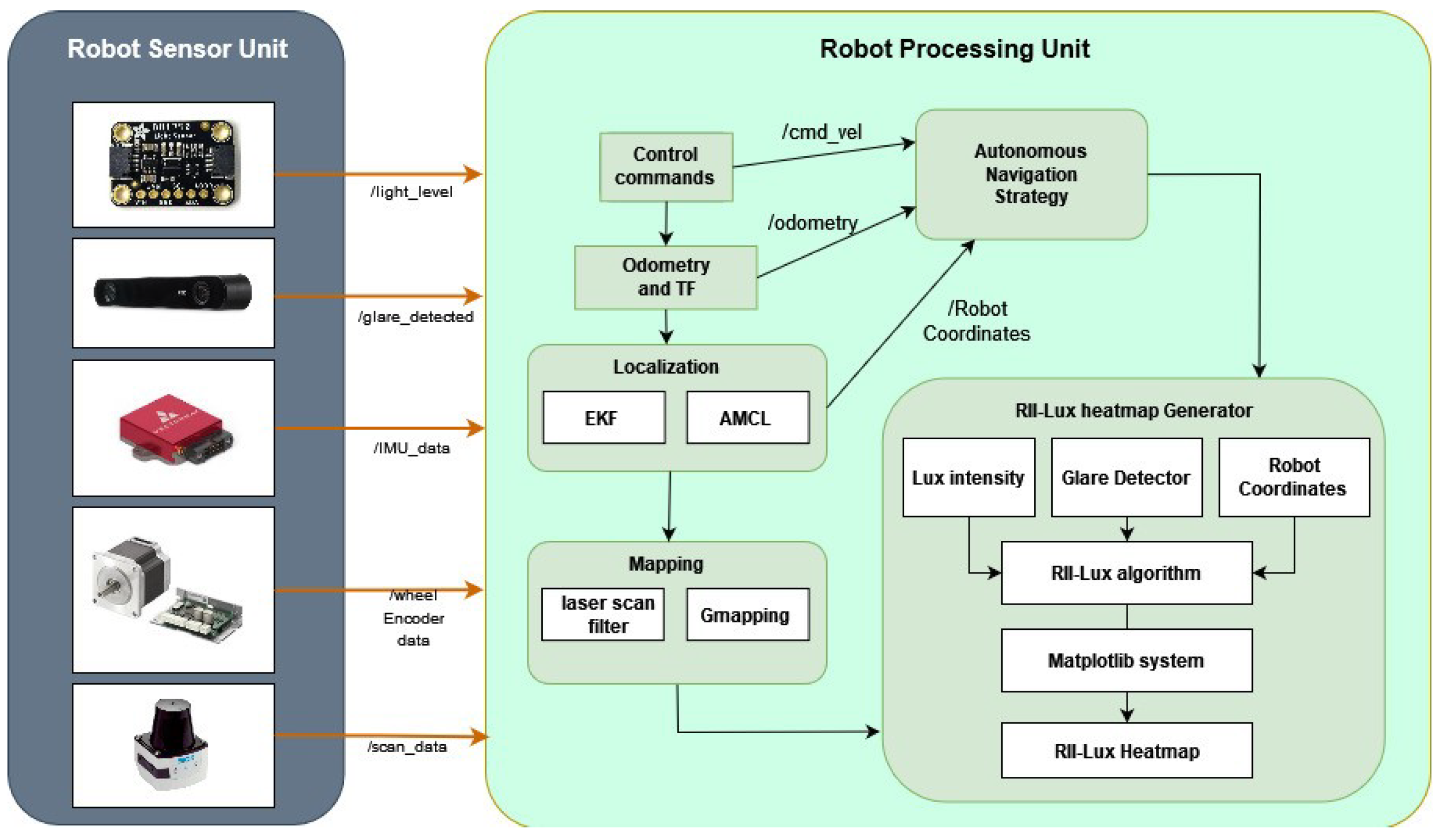

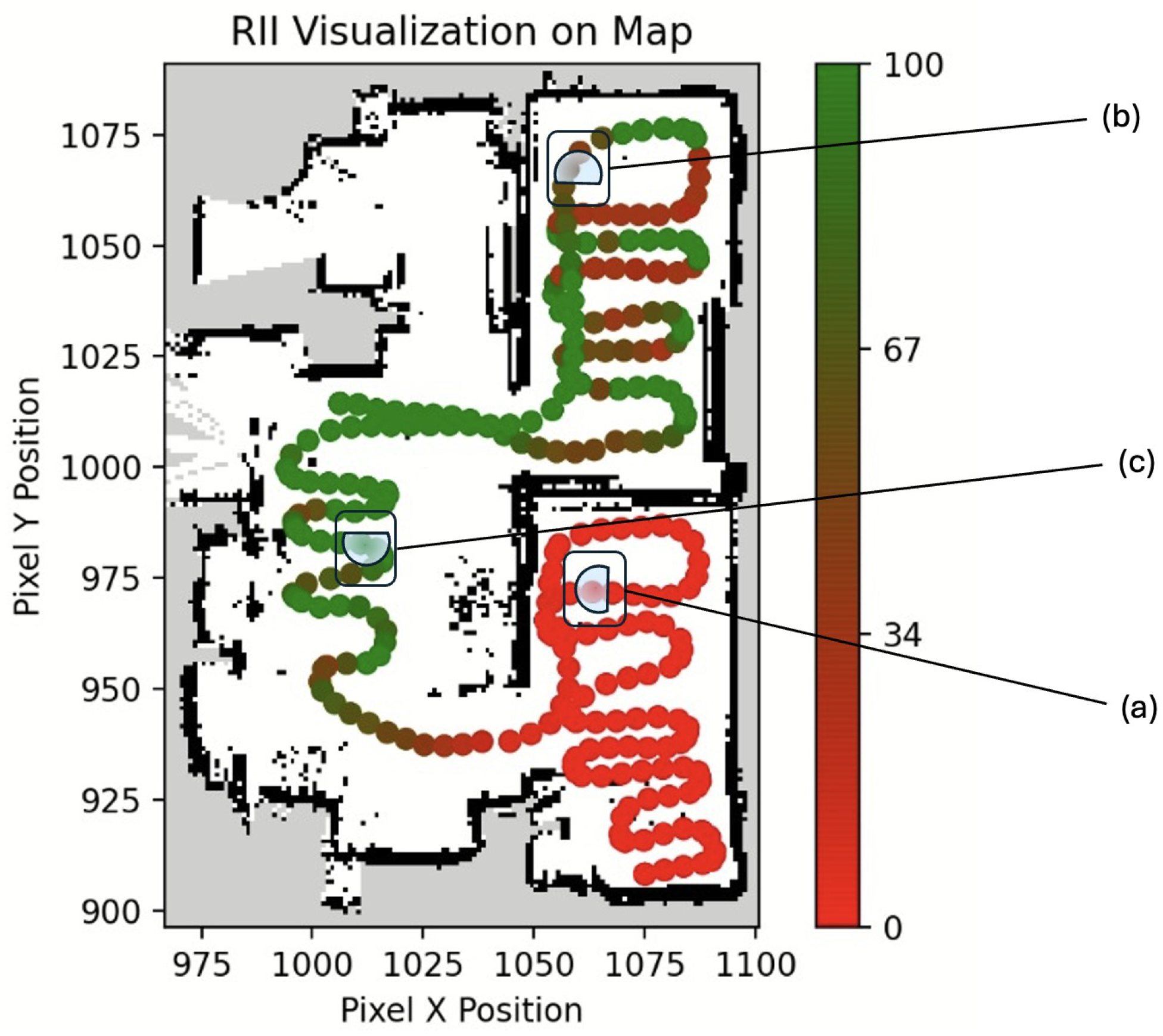

3. Automated RII Map Generation
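One plausible building block for a spatial index map, sketched here under assumptions, is to average position-tagged index readings into square grid cells. The sample format `(x, y, value)`, the cell size, the function name `build_grid_map`, and averaging as the aggregation rule are illustrative assumptions rather than the procedure used in this framework.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Assumed sample format: (x in metres, y in metres, index value at that pose).
Sample = Tuple[float, float, float]
Cell = Tuple[int, int]


def build_grid_map(samples: Iterable[Sample], cell_size: float) -> Dict[Cell, float]:
    """Average position-tagged index values into square cells of side `cell_size`.

    Cells that received no samples are absent from the returned dict.
    """
    if cell_size <= 0:
        raise ValueError("cell_size must be positive")
    sums: Dict[Cell, float] = defaultdict(float)
    counts: Dict[Cell, int] = defaultdict(int)
    for x, y, value in samples:
        # floor() keeps negative coordinates in the correct cell (int() would truncate toward zero).
        cell = (math.floor(x / cell_size), math.floor(y / cell_size))
        sums[cell] += value
        counts[cell] += 1
    return {cell: sums[cell] / counts[cell] for cell in sums}


if __name__ == "__main__":
    readings = [(0.1, 0.2, 0.5), (0.4, 0.3, 0.75), (1.2, 0.1, 0.25)]
    print(build_grid_map(readings, cell_size=1.0))
    # {(0, 0): 0.625, (1, 0): 0.25}
```

Returning a sparse dictionary rather than a dense array avoids having to know the room's extent in advance; unvisited cells can then be shown as "no data" when the map is rendered.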

4. Experimental Validation

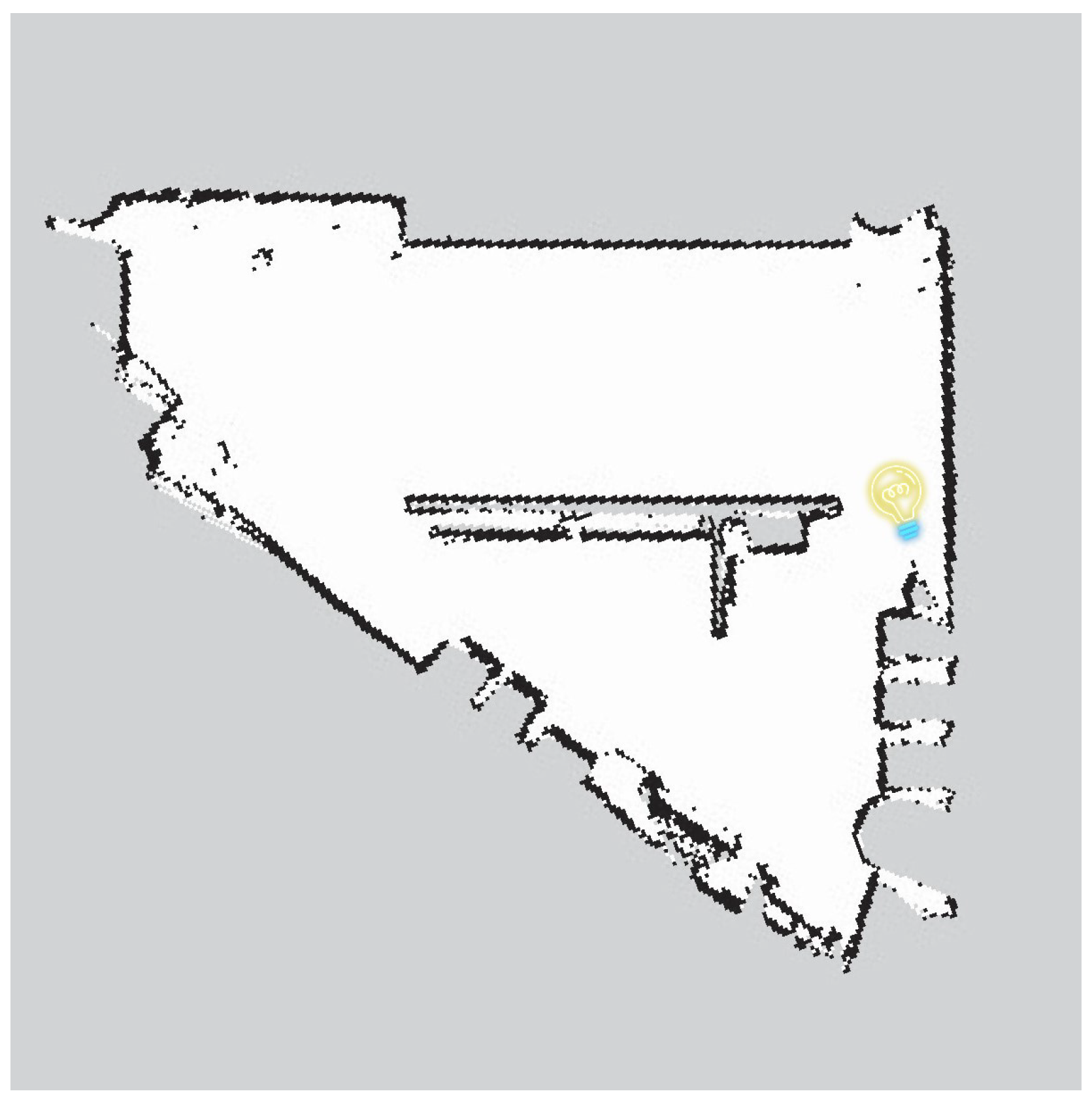

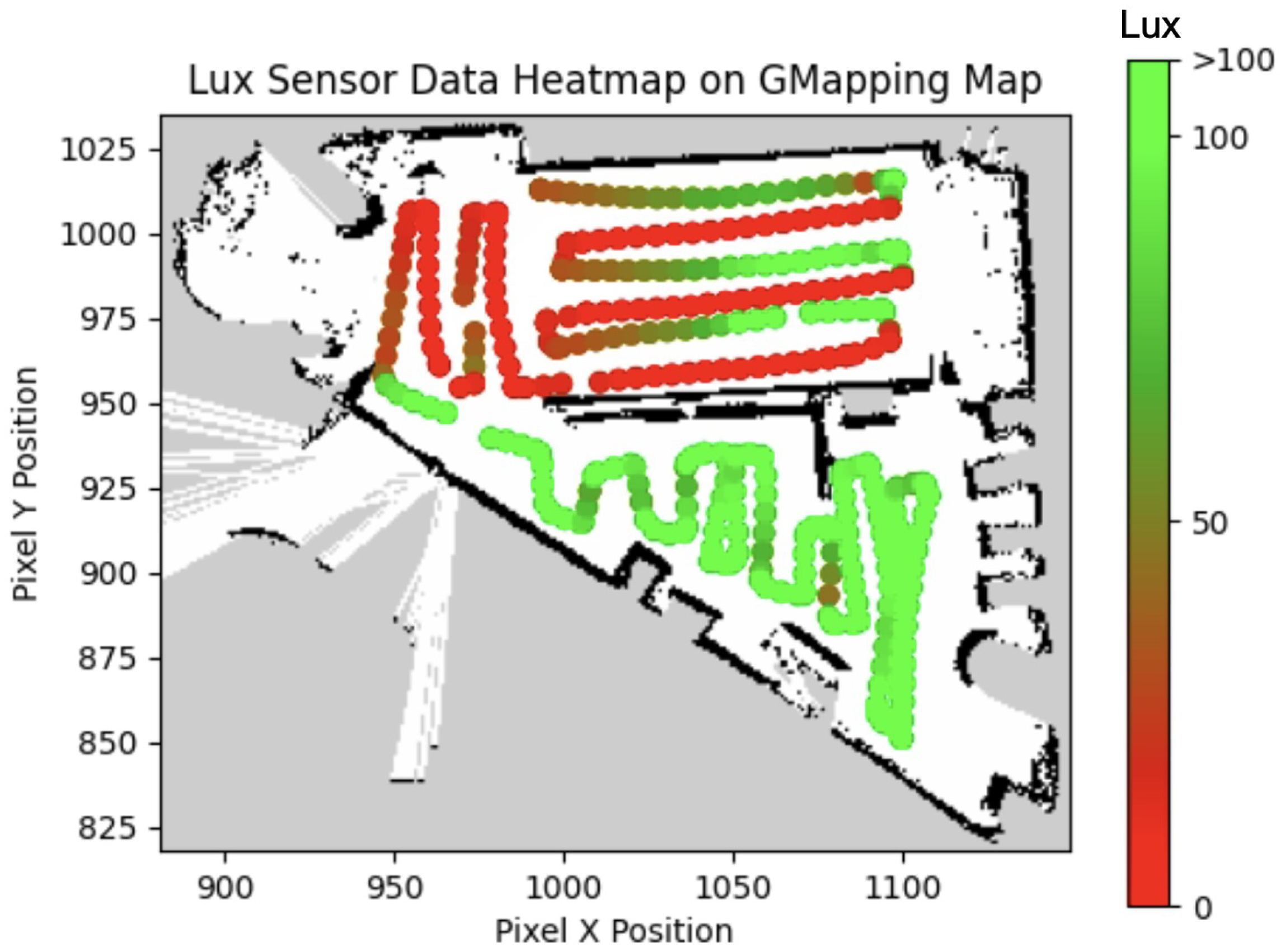

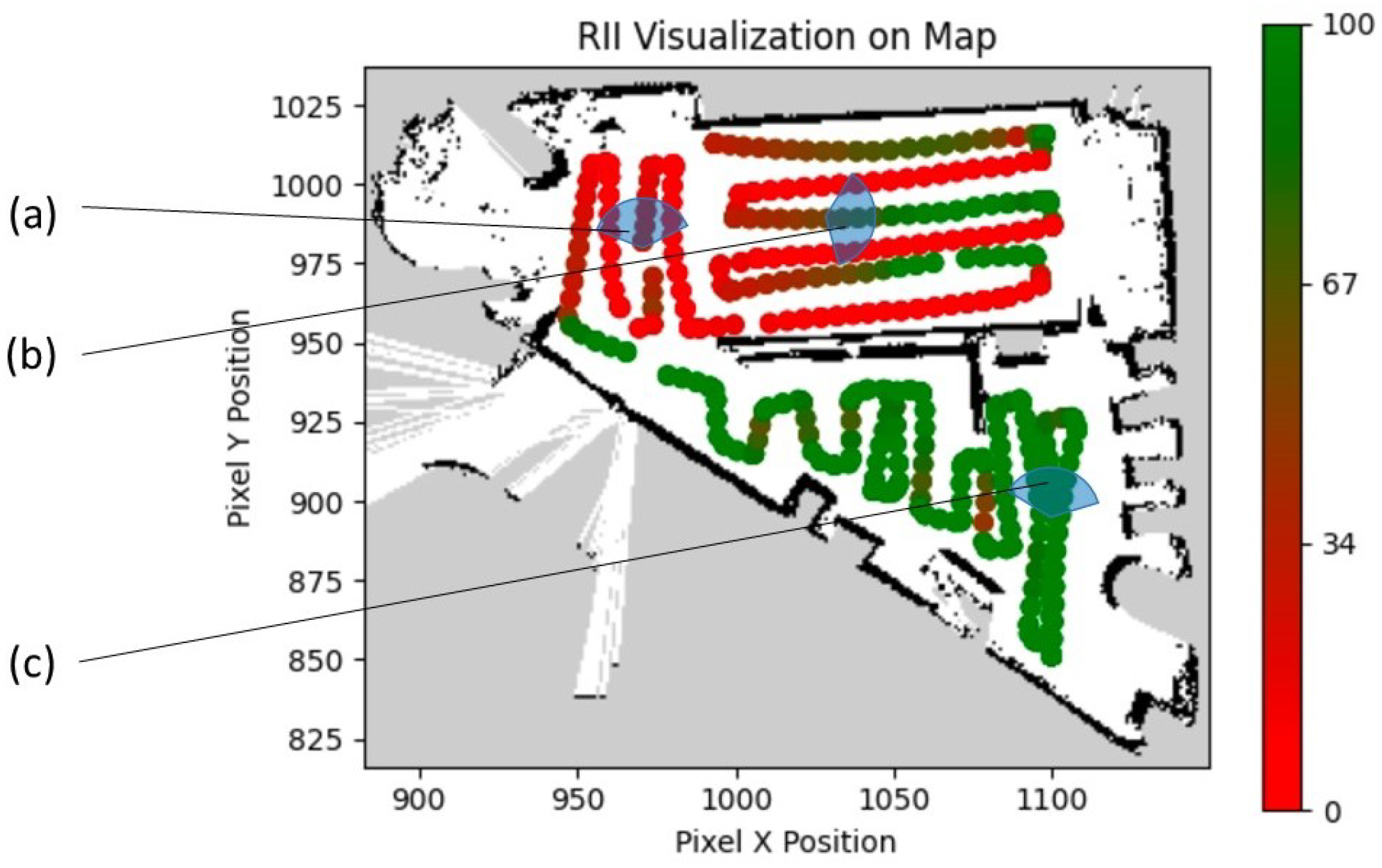

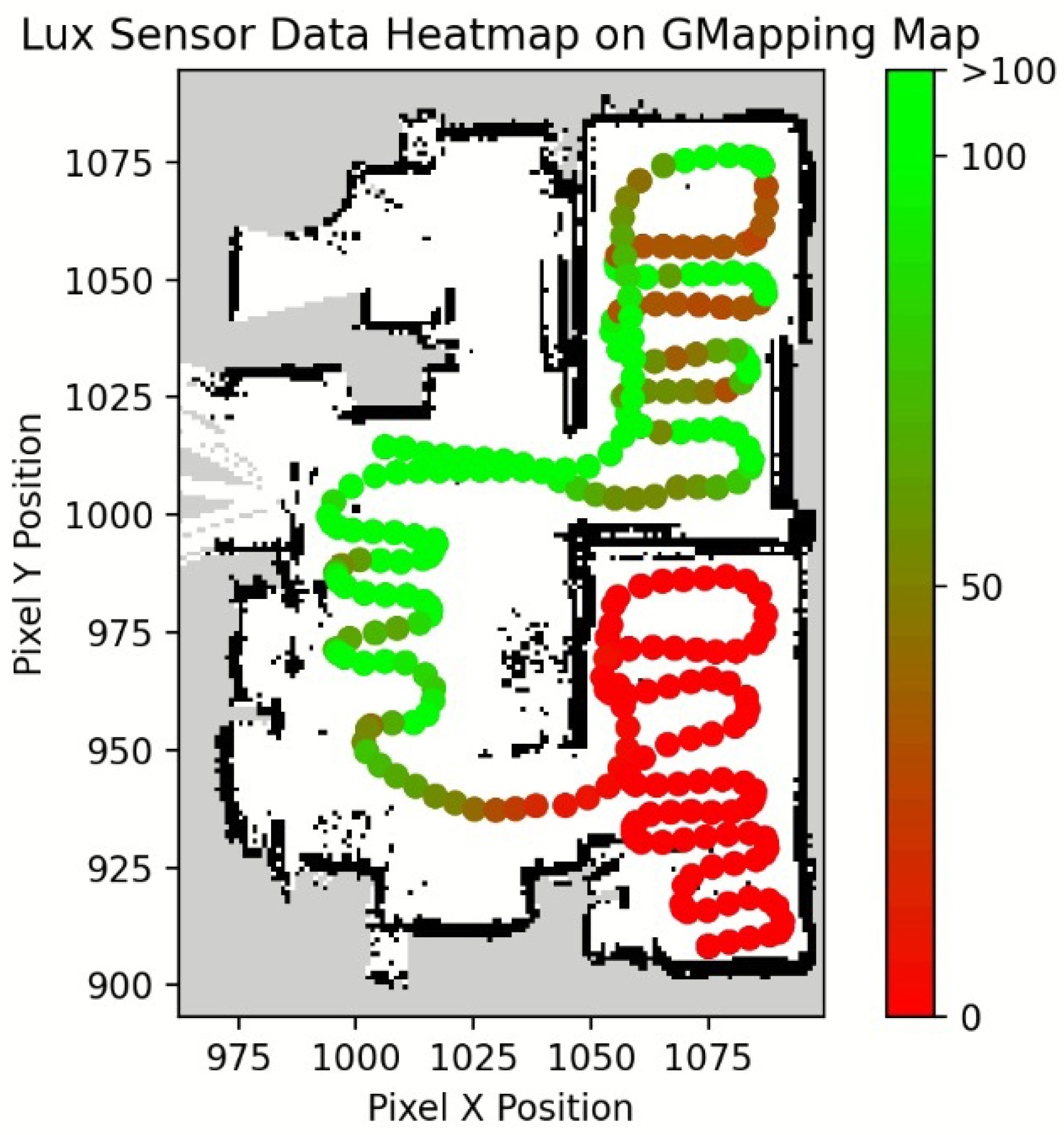

4.1. Site 1: Printing Room

4.2. Site 2: Mock Living Space

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DfR | Design for Robot |
| RII-Lux | Robot-Inclusivity Index based on Lighting |
| RGB | Red-Green-Blue |
| HSV | Hue, Saturation, Value |
| ROS | Robot Operating System |


| Lighting Environment | Lux Level Range (lux) | Conditions |
|---|---|---|
| Outdoors | Morning/evening: 10–1000; noon: 10,000; overcast/cloudy: 1000 | Outdoors, varying levels/colours due to the position of the sun in the sky |
| Office | 100–300 | Indoors, batch/area control |
| Retail | 200–500 | Indoors, area control, may have uneven lighting |
| Residential | 200–300 | Indoors, individual control, point/linear/cove lighting |
| Industrial | 300–700 | Indoors, batch control, spotlight lighting, evenly distributed lighting |
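The indoor rows of the table can serve as a simple reference when checking a single measured lux reading. The sketch below only compares a reading against the tabulated range for an environment type; the dictionary, the function name `classify_reading`, and the three labels are hypothetical, and the comparison is not the RII-Lux calculation itself.

```python
from typing import Dict, Tuple

# Indoor lux ranges transcribed from the table above: (lower, upper) in lux.
INDOOR_LUX_RANGES: Dict[str, Tuple[float, float]] = {
    "office": (100.0, 300.0),
    "retail": (200.0, 500.0),
    "residential": (200.0, 300.0),
    "industrial": (300.0, 700.0),
}


def classify_reading(environment: str, lux: float) -> str:
    """Return 'below', 'within' or 'above' relative to the tabulated range (inclusive bounds)."""
    try:
        low, high = INDOOR_LUX_RANGES[environment.lower()]
    except KeyError:
        raise ValueError(f"unknown indoor environment: {environment!r}") from None
    if lux < low:
        return "below"
    if lux > high:
        return "above"
    return "within"


if __name__ == "__main__":
    print(classify_reading("Office", 250))      # within
    print(classify_reading("Industrial", 150))  # below
    print(classify_reading("Retail", 800))      # above
```

The outdoor row is left out because it lists several time-of-day conditions rather than a single range.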

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zeng, Z.; Yeo, M.S.K.; Borusu, C.S.C.S.; Muthugala, M.A.V.J.; Budig, M.; Elara, M.R.; Wang, Y. A Framework for Auditing Robot-Inclusivity of Indoor Environments Based on Lighting Condition. Buildings 2024, 14, 1110. https://doi.org/10.3390/buildings14041110
