Assessing the Impact of Street Visual Environment on the Emotional Well-Being of Young Adults through Physiological Feedback and Deep Learning Technologies

Abstract

1. Introduction

1.1. Research Background

1.2. Technology Status

2. Literature Review

2.1. Measurement of Emotion

2.2. Exploring the Impact of Street Visual Environment on Emotions

2.3. Research Goals

- To reveal how specific aspects of the street visual environment affect emotions, providing new theoretical insights into the interaction between street design and emotions.

- To develop and validate a technical framework that combines physiological feedback technology with deep learning methods and uses large-scale street sample data to improve the accuracy and reliability of research on how street environments affect emotions.

- To propose, on the basis of the data analysis, street design improvements that promote residents’ emotional well-being and support the development of psychologically friendly environments.

3. Data and Methodology

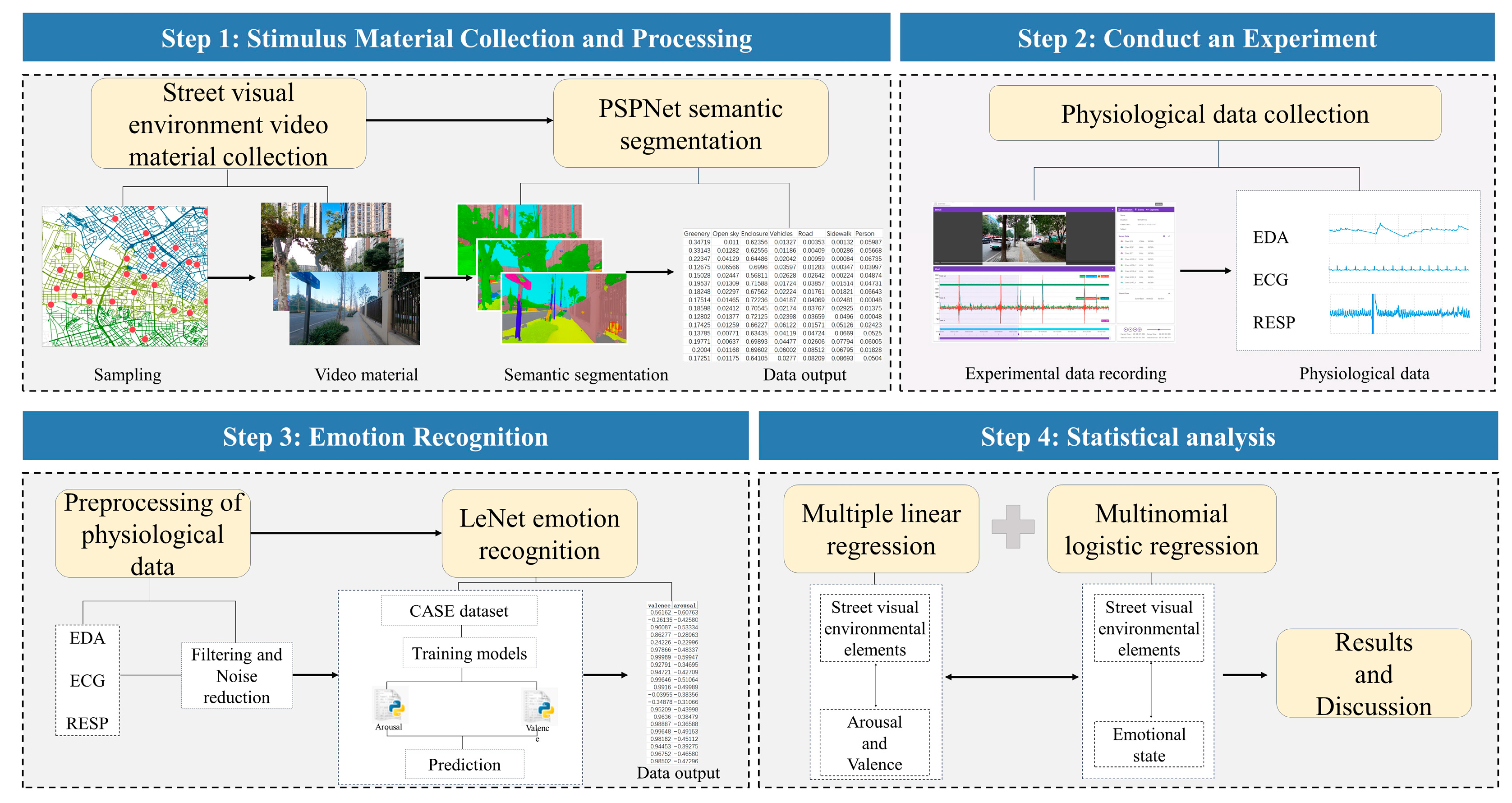

3.1. Research Design

3.2. Study Area and Sample Selection

3.2.1. Study Area

3.2.2. Stimulus Materials

3.3. Experimental Design

3.3.1. Volunteer Recruitment

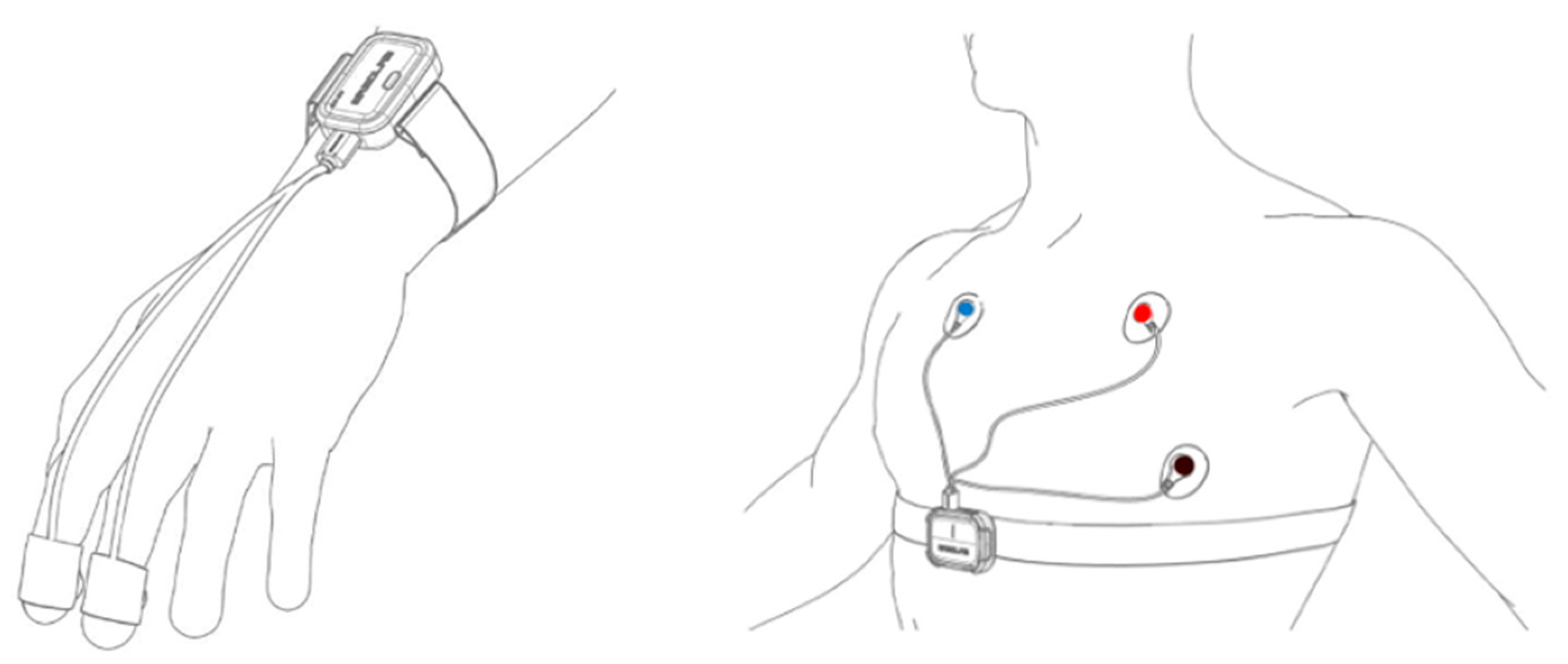

3.3.2. Equipment

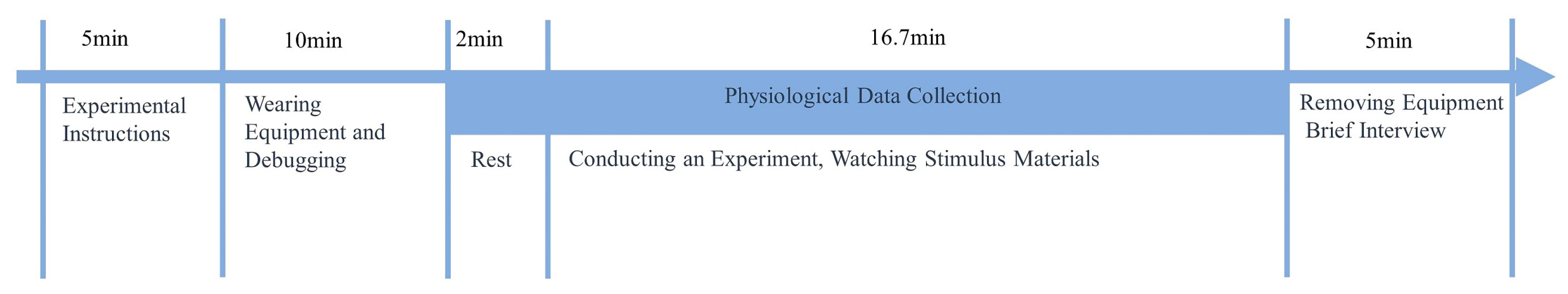

3.3.3. Experimental Process and Data Acquisition

3.4. Data Processing

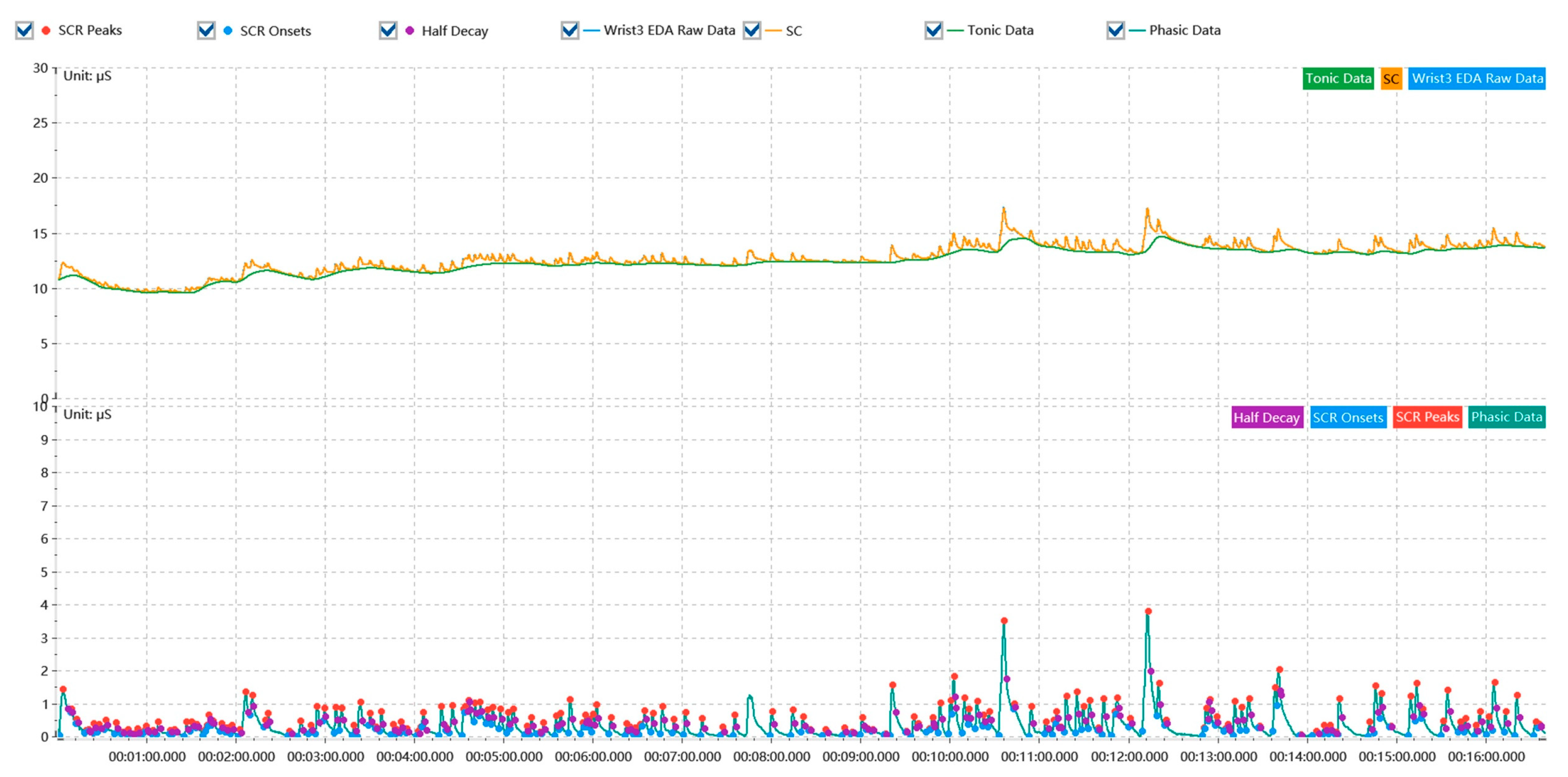

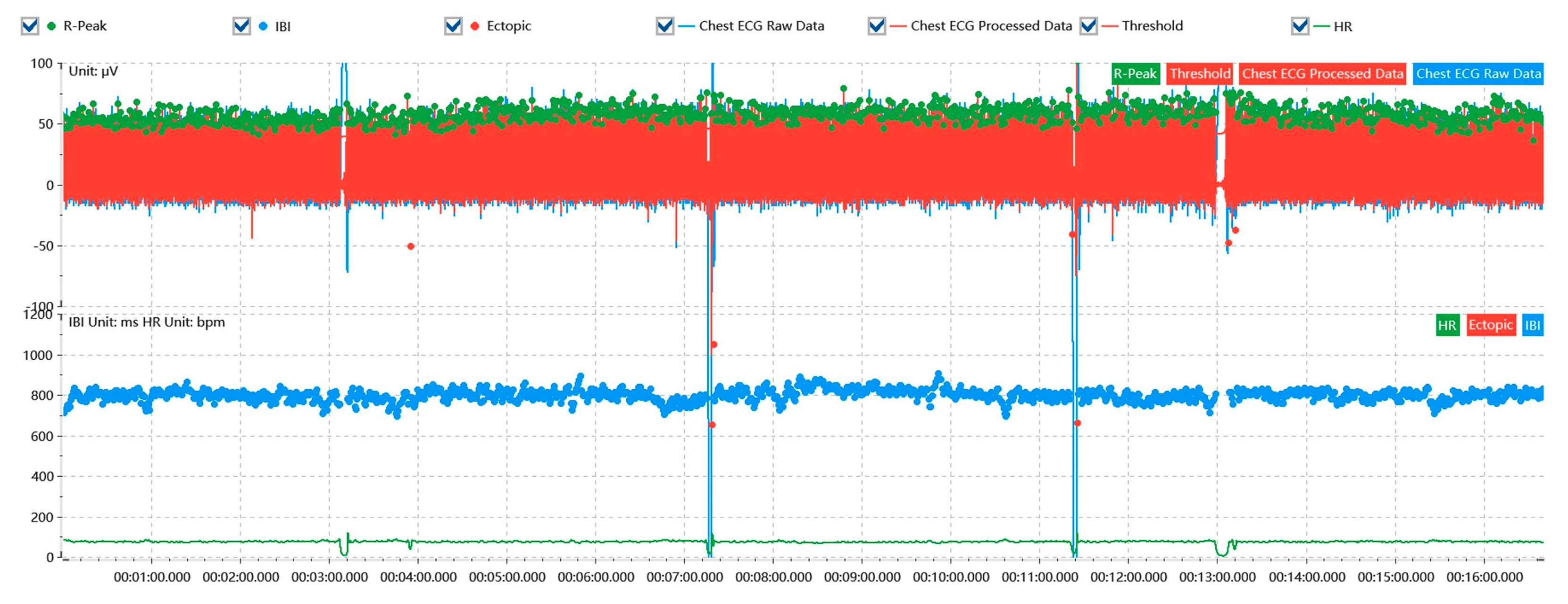

3.4.1. Physiological Data Processing

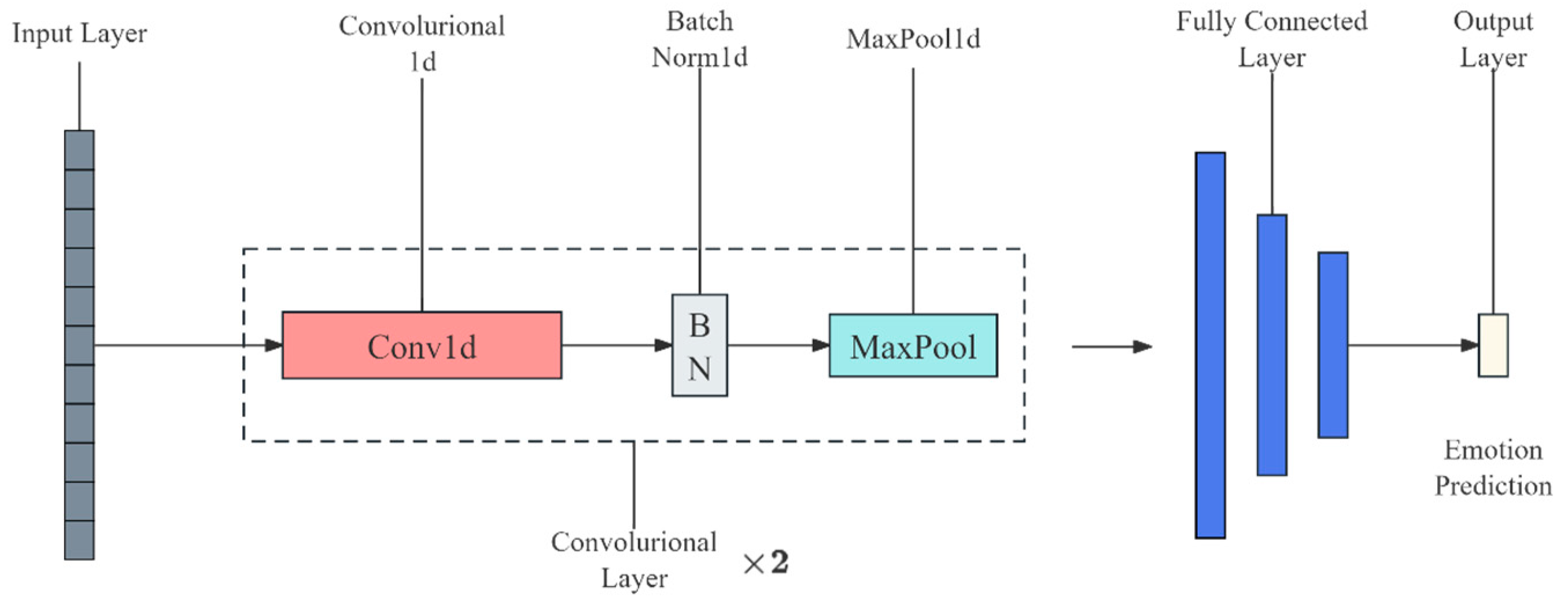

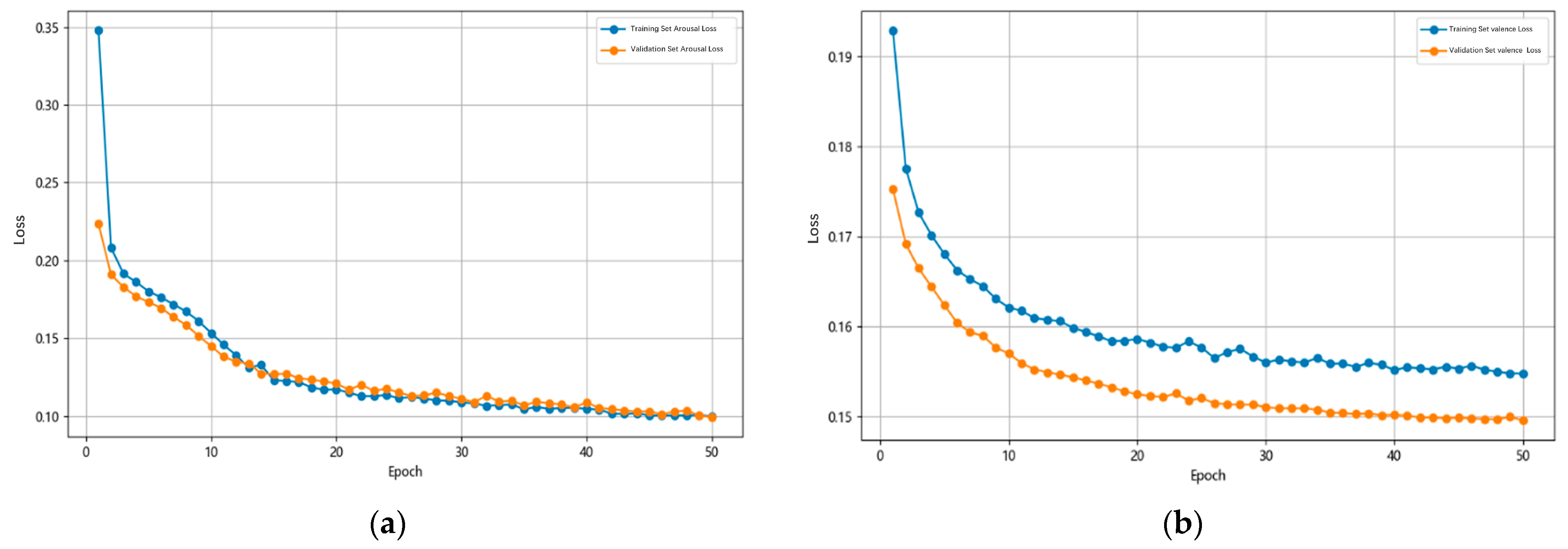

3.4.2. Emotion Signal Recognition

3.4.3. Semantic Segmentation of Visual Images

3.5. Statistical Analysis

3.5.1. Multiple Linear Regression Model

3.5.2. Multinomial Logistic Regression Model

4. Results

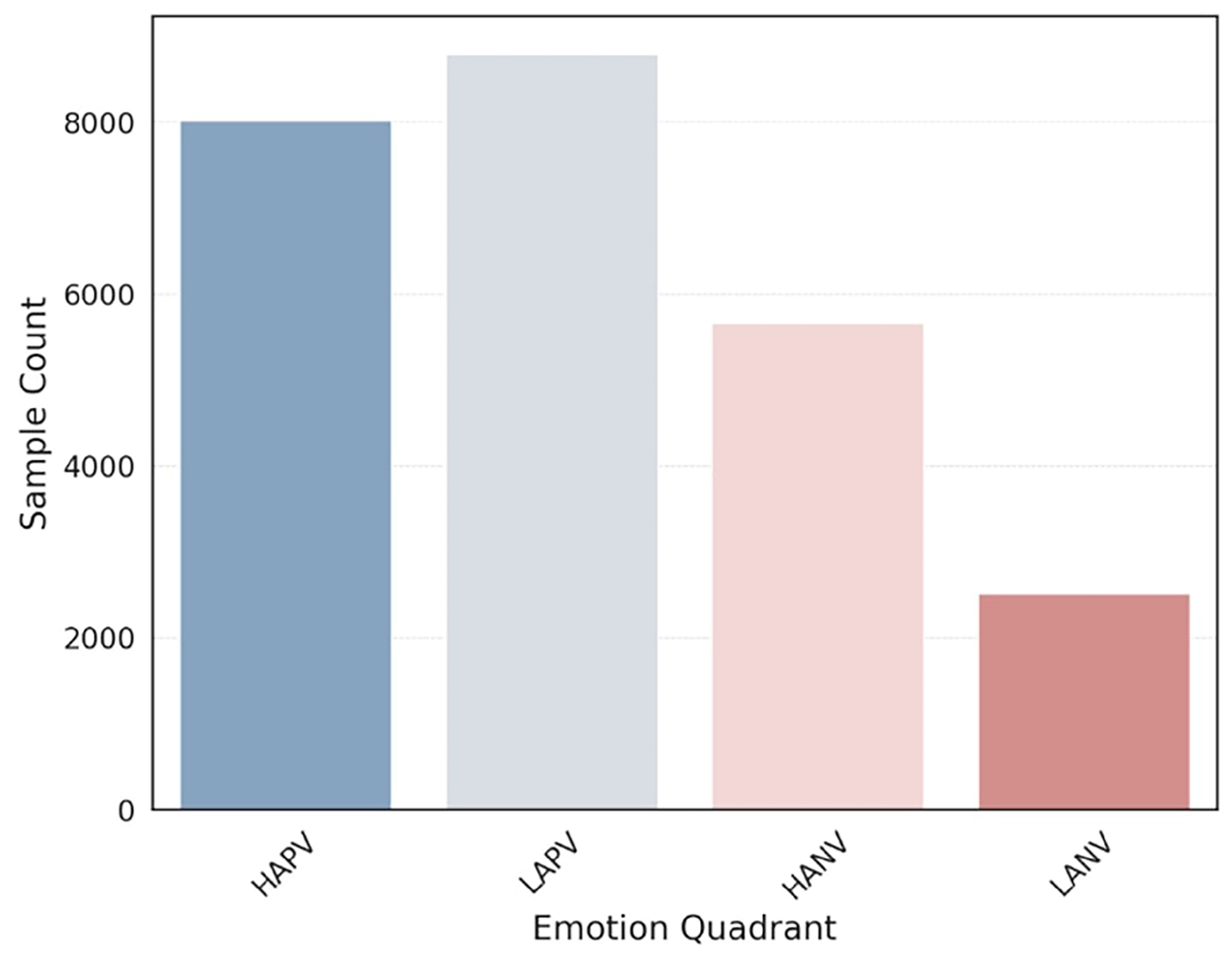

4.1. Descriptive Analysis

4.2. Multiple Linear Regression Model Results

4.3. Multinomial Logistic Regression Model Results

5. Discussion

5.1. The Impact of Street Visual Environment on Emotions

5.2. Advantages and Challenges of the Research Method

5.3. Implications for Street Space Design

5.4. Limitations and Future Directions

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


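| Variable | Features | Formula |
|---|---|---|
| Green view factor (GVF) | Proportion of green-vegetation pixels in the street view image | $GVF = N_{\text{green}} / N_{\text{total}}$ |
| Sky view factor (Sky VF) | Proportion of sky pixels in the street view image | $Sky\,VF = N_{\text{sky}} / N_{\text{total}}$ |
| Visual enclosure (VE) | Proportion of vertical-feature pixels in the street view image | $VE = N_{\text{vertical}} / N_{\text{total}}$ |
| Vehicles view factor (VVF) | Proportion of motorized and non-motorized vehicle pixels in the street view image | $VVF = (N_{\text{motorized}} + N_{\text{non-motorized}}) / N_{\text{total}}$ |
| Road view factor (RVF) | Proportion of road pixels in the street view image | $RVF = N_{\text{road}} / N_{\text{total}}$ |
| Sidewalk view factor (SVF) | Proportion of sidewalk pixels in the street view image | $SVF = N_{\text{sidewalk}} / N_{\text{total}}$ |
| Person view factor (PVF) | Proportion of person pixels in the street view image | $PVF = N_{\text{person}} / N_{\text{total}}$ |
| Building view factor (BVF) | Proportion of building pixels in the street view image | $BVF = N_{\text{building}} / N_{\text{total}}$ |

In each formula, $N_{\text{total}}$ is the total number of pixels in the image and the numerator counts the pixels assigned to the corresponding class or classes by semantic segmentation.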
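Each view factor above is a pixel share computed from the semantic segmentation of a street view image. The plain-Python sketch below shows that computation for one label map (a 2-D grid of integer class IDs, as a segmentation step would produce). The class IDs in `CLASS_IDS`, the split of vegetation into trees and low plants, and the set of classes counted as "vertical" for VE are hypothetical placeholders, not the study's actual label mapping.

```python
from collections import Counter

# Hypothetical class IDs for illustration only; a real pipeline would take
# them from the label set of the segmentation model it uses.
CLASS_IDS = {
    "tree": {1},
    "grass_plant": {2},
    "sky": {3},
    "building": {4},
    "wall_fence": {5},
    "road": {6},
    "sidewalk": {7},
    "person": {8},
    "motorized_vehicle": {9},
    "non_motorized_vehicle": {10},
}

# Assumption: the class groups counted as vertical features for visual enclosure (VE).
VERTICAL_GROUPS = ("tree", "building", "wall_fence")


def view_factors(label_map):
    """Return each indicator as a share of all pixels in one segmented image."""
    counts = Counter(pixel for row in label_map for pixel in row)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("label map contains no pixels")

    def share(*groups):
        # Union of the class IDs in the requested groups, then their pixel share.
        ids = set().union(*(CLASS_IDS[g] for g in groups))
        return sum(counts[i] for i in ids) / total

    return {
        "GVF": share("tree", "grass_plant"),
        "Sky VF": share("sky"),
        "VE": share(*VERTICAL_GROUPS),
        "VVF": share("motorized_vehicle", "non_motorized_vehicle"),
        "RVF": share("road"),
        "SVF": share("sidewalk"),
        "PVF": share("person"),
        "BVF": share("building"),
    }


# Usage: a toy 2 x 5 label map (10 pixels), not study data.
toy = [[3, 3, 1, 2, 4],
       [4, 6, 7, 8, 9]]
print(view_factors(toy))
```

Because every factor is a ratio to the same pixel total, the values fall between 0 and 1, matching the scale of the descriptive statistics reported for these variables.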

| Characteristics of Participants | Category | N (%) |
|---|---|---|
| Gender | Male | 27 (54%) |
| | Female | 23 (46%) |
| Age | 18–22 years | 4 (8%) |
| | 23–25 years | 20 (40%) |
| | Over 25 years | 26 (52%) |
| Educational Background | Bachelor's student | 20 (40%) |
| | Master's student | 28 (56%) |
| | Doctoral student | 2 (4%) |

| Variables | Mean | SD | Min | Max |
|---|---|---|---|---|
| *Street visual environmental characteristics* | | | | |
| GVF | 0.326 | 0.167 | 0.00005 | 0.759 |
| Sky VF | 0.08 | 0.07 | 0.00006 | 0.364 |
| VE | 0.516 | 0.105 | 0.19 | 0.825 |
| VVF | 0.04 | 0.045 | 0 | 0.257 |
| RVF | 0.086 | 0.062 | 0 | 0.35 |
| SVF | 0.129 | 0.068 | 0 | 0.379 |
| PVF | 0.011 | 0.02 | 0 | 0.179 |
| BVF | 0.214 | 0.129 | 0 | 0.582 |
| *Participants’ emotional characteristics* | | | | |
| Arousal | 0.077 | 0.6 | −1 | 1 |
| Adjusted Arousal | 0.016 | 0.616 | −1 | 1 |
| Valence | 0.163 | 0.432 | −0.999 | 0.996 |
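Arousal and valence above both range from −1 to 1, and the multinomial logistic regression results compare emotional states labelled LAPV, HAPV and LANV, read here as low/high arousal combined with positive/negative valence. The sketch below shows one way such quadrant labels could be derived from paired readings. The split at 0 on both axes, the tie rule at the threshold, and the label HANV for the fourth quadrant are assumptions; the study's actual cut-points are not given in this section.

```python
from collections import Counter


def emotion_quadrant(arousal: float, valence: float, threshold: float = 0.0) -> str:
    """Label an (arousal, valence) pair with a circumplex quadrant.

    Assumption: both axes are split at `threshold` (0 by default); values
    exactly at the threshold count as high arousal / positive valence.
    """
    if not (-1.0 <= arousal <= 1.0 and -1.0 <= valence <= 1.0):
        raise ValueError("arousal and valence are expected to lie in [-1, 1]")
    arousal_part = "HA" if arousal >= threshold else "LA"
    valence_part = "PV" if valence >= threshold else "NV"
    return arousal_part + valence_part


def tally_states(samples):
    """Count quadrant labels over an iterable of (arousal, valence) pairs."""
    return Counter(emotion_quadrant(a, v) for a, v in samples)


# Usage with made-up readings (not study data):
print(tally_states([(-0.3, 0.5), (0.6, 0.2), (-0.2, -0.4), (0.9, -0.7), (-0.1, 0.8)]))
```

Keeping the threshold as a parameter makes it easy to test how sensitive the state counts are to the chosen cut-point.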

| Variables | Model 1 (Arousal) | Model 2 (Adjusted Arousal) | Model 3 (Valence) | VIF |
|---|---|---|---|---|
| | Standardized coefficients | Standardized coefficients | Standardized coefficients | |
| GVF | −0.069 *** | 0.248 *** | 0.295 *** | 2.123 |
| Sky VF | −0.065 *** | 0.195 *** | 0.274 *** | 3.339 |
| VE | −0.003 | −0.059 *** | −0.109 *** | 3.184 |
| VVF | 0.008 | −0.065 *** | −0.156 *** | 1.461 |
| RVF | 0.011 | −0.048 *** | −0.119 *** | 1.448 |
| SVF | −0.047 *** | 0.090 *** | 0.210 *** | 1.284 |
| PVF | 0.019 * | −0.074 *** | −0.141 *** | 1.418 |
| BVF | −0.003 | −0.066 *** | −0.007 | 1.796 |
| R-squared | 0.012 | 0.144 | 0.269 | |
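Standardized coefficients and VIF values like those above can be obtained with ordinary least squares on z-scored variables: regressing a standardized outcome on standardized predictors yields the standardized coefficients directly, and the VIF of predictor $j$ is $1/(1 - R_j^2)$, where $R_j^2$ comes from regressing that predictor on all the others. The plain-Python sketch below illustrates both ideas; it is not the authors' code, and the function names are hypothetical.

```python
from statistics import mean, pstdev


def zscore(values):
    """Standardize a sequence to mean 0 and (population) SD 1."""
    m, s = mean(values), pstdev(values)
    return [(v - m) / s for v in values]


def solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - tail) / aug[r][r]
    return x


def standardized_ols(predictors, outcome):
    """Regress z-scored outcome on z-scored predictors; return (betas, R^2).

    No intercept is needed because every standardized column has mean 0.
    """
    zx = [zscore(col) for col in predictors]
    zy = zscore(outcome)
    k, n = len(zx), len(zy)
    xtx = [[sum(zx[i][t] * zx[j][t] for t in range(n)) for j in range(k)]
           for i in range(k)]
    xty = [sum(zx[i][t] * zy[t] for t in range(n)) for i in range(k)]
    beta = solve(xtx, xty)
    fitted = [sum(beta[i] * zx[i][t] for i in range(k)) for t in range(n)]
    ss_res = sum((zy[t] - fitted[t]) ** 2 for t in range(n))
    ss_tot = sum(v * v for v in zy)  # total sum of squares of the centered outcome
    return beta, 1.0 - ss_res / ss_tot


def vif(predictors):
    """VIF_j = 1 / (1 - R_j^2), with R_j^2 from regressing predictor j on the rest."""
    result = []
    for j, col in enumerate(predictors):
        others = predictors[:j] + predictors[j + 1:]
        _, r2 = standardized_ols(others, col)
        result.append(1.0 / (1.0 - r2))
    return result
```

Read in reverse, the VIF of 3.339 reported for Sky VF corresponds to $R_j^2 = 1 - 1/3.339 \approx 0.70$. All VIF values in the table stay below 5, a commonly cited rule-of-thumb cut-off for problematic multicollinearity.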

| Emotional State | Variables | β | SE | Wald | Exp(β) |
|---|---|---|---|---|---|
| LAPV | GVF *** | 0.668 | 0.027 | 615.566 | 1.950 |
| | Sky VF *** | 0.682 | 0.036 | 362.300 | 1.977 |
| | VE *** | −0.163 | 0.032 | 25.584 | 0.850 |
| | VVF *** | −0.297 | 0.021 | 192.398 | 0.743 |
| | RVF *** | −0.233 | 0.021 | 117.830 | 0.792 |
| | SVF *** | 0.431 | 0.021 | 421.793 | 1.539 |
| | PVF *** | −0.331 | 0.023 | 215.981 | 0.718 |
| | BVF | 0.010 | 0.023 | 0.190 | 1.010 |
| HAPV | GVF *** | 0.692 | 0.028 | 623.493 | 1.997 |
| | Sky VF *** | 0.685 | 0.036 | 352.755 | 1.983 |
| | VE *** | −0.212 | 0.033 | 41.223 | 0.809 |
| | VVF *** | −0.370 | 0.022 | 271.004 | 0.691 |
| | RVF *** | −0.263 | 0.022 | 140.296 | 0.769 |
| | SVF *** | 0.452 | 0.022 | 442.022 | 1.572 |
| | PVF *** | −0.319 | 0.023 | 193.756 | 0.727 |
| | BVF | −0.007 | 0.024 | 0.085 | 0.993 |
| LANV | GVF | 0.001 | 0.035 | 0.001 | 1.001 |
| | Sky VF | −0.005 | 0.048 | 0.011 | 0.995 |
| | VE | 0.028 | 0.041 | 0.480 | 1.029 |
| | VVF | 0.040 | 0.026 | 2.396 | 1.041 |
| | RVF | 0.016 | 0.026 | 0.377 | 1.016 |
| | SVF * | −0.063 | 0.027 | 5.298 | 0.939 |
| | PVF * | 0.045 | 0.022 | 4.313 | 1.046 |
| | BVF | −0.026 | 0.029 | 0.819 | 0.974 |
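In a multinomial logistic regression table like the one above, each Wald statistic is the squared ratio of β to its standard error, referred to a chi-square distribution with 1 degree of freedom, and Exp(β) is the odds ratio of being in the given emotional state rather than the model's reference category per one-unit increase in the predictor as entered. The sketch below recomputes these quantities from β and SE; `wald_summary` is a hypothetical helper, not part of any statistics package.

```python
import math


def wald_summary(beta: float, se: float) -> dict:
    """Wald chi-square (1 df), its p-value, and the odds ratio Exp(beta)."""
    wald = (beta / se) ** 2
    # For 1 degree of freedom, P(chi2 > w) = P(|Z| > sqrt(w)) = erfc(sqrt(w / 2)).
    p_value = math.erfc(math.sqrt(wald / 2))
    return {"wald": wald, "p": p_value, "exp_beta": math.exp(beta)}


# Usage with values reported in the table (GVF under LAPV, SVF under LANV):
print(wald_summary(0.668, 0.027))
print(wald_summary(-0.063, 0.027))
```

With the rounded values for GVF under LAPV (β = 0.668, SE = 0.027), the Wald statistic comes out at about 612 rather than the reported 615.566, because β and SE are printed to only three decimals; Exp(β) ≈ 1.950 matches the table. For SVF under LANV (β = −0.063, SE = 0.027) the p-value is about 0.02, which is below 0.05.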

| Street Type | Emotional Goals | Emotional Needs | Optional Design Strategies |
|---|---|---|---|
| Residential streets | LAPV | Promote positive emotions and well-being | 1. Enhance natural connections and increase street greenery; 2. Improve the walking experience and prioritize pedestrian traffic; 3. Optimize street scale to create comfortable spaces. |
| Commercial streets | HAPV | | |
| Landscape streets | LAPV/HAPV | | |
| Specialized streets | LAPV/HAPV | | |
| Industrial streets | LAPV | Alleviate negative emotions and stress | 1. Reduce traffic interference and meet pedestrian needs; 2. Improve spatial design to reduce visual distractions; 3. Support green travel and maintain smooth pedestrian flow. |
| Traffic streets | LAPV | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhao, W.; Tan, L.; Niu, S.; Qing, L. Assessing the Impact of Street Visual Environment on the Emotional Well-Being of Young Adults through Physiological Feedback and Deep Learning Technologies. Buildings 2024, 14, 1730. https://doi.org/10.3390/buildings14061730


Chicago/Turabian Style: Zhao, Wei, Liang Tan, Shaofei Niu, and Linbo Qing. 2024. "Assessing the Impact of Street Visual Environment on the Emotional Well-Being of Young Adults through Physiological Feedback and Deep Learning Technologies." Buildings 14, no. 6: 1730. https://doi.org/10.3390/buildings14061730