Five Machine Learning Models Predicting the Global Shear Capacity of Composite Cellular Beams with Hollow-Core Units

Abstract

1. Introduction

2. Background

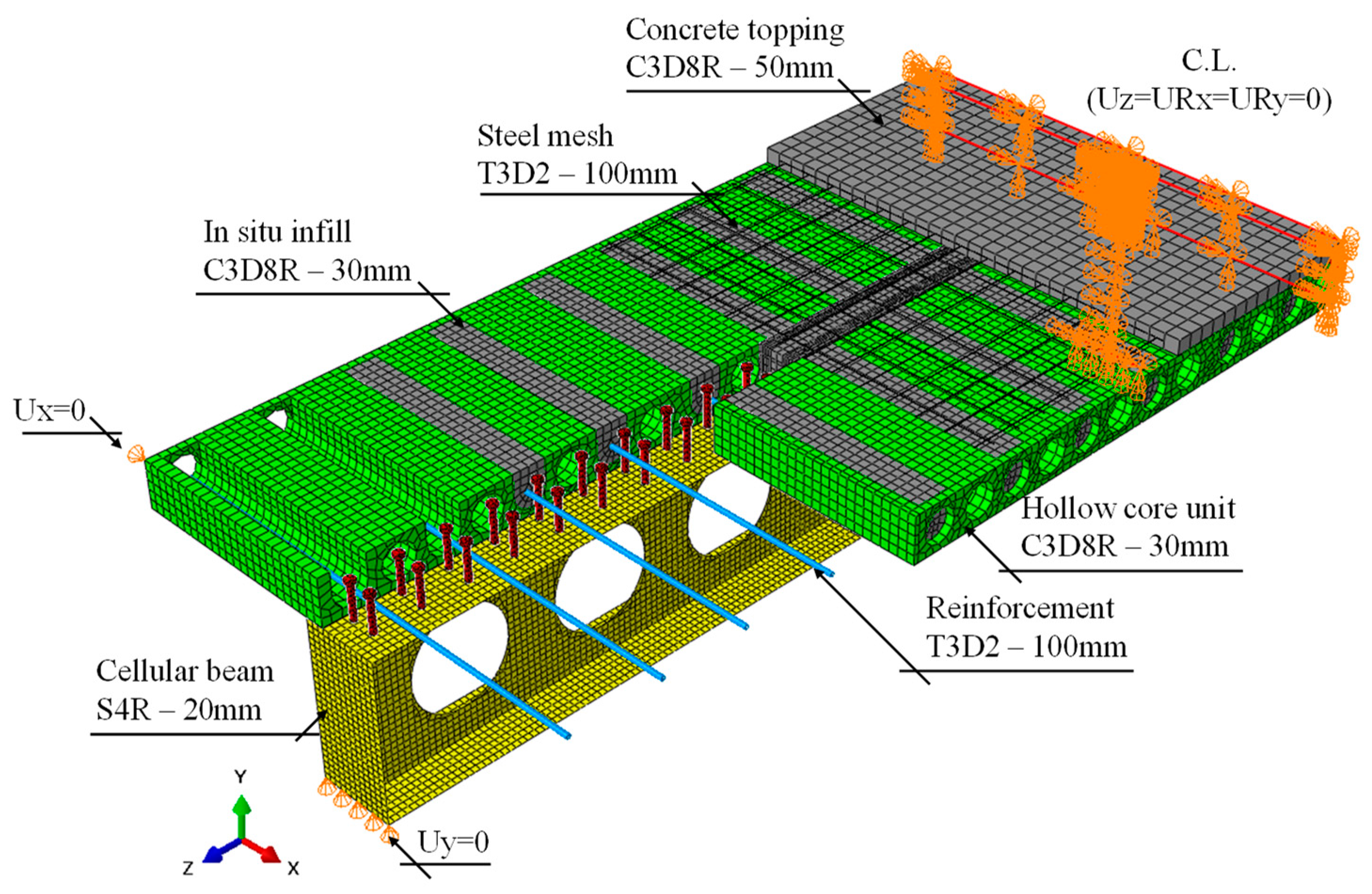

3. Finite Element Method

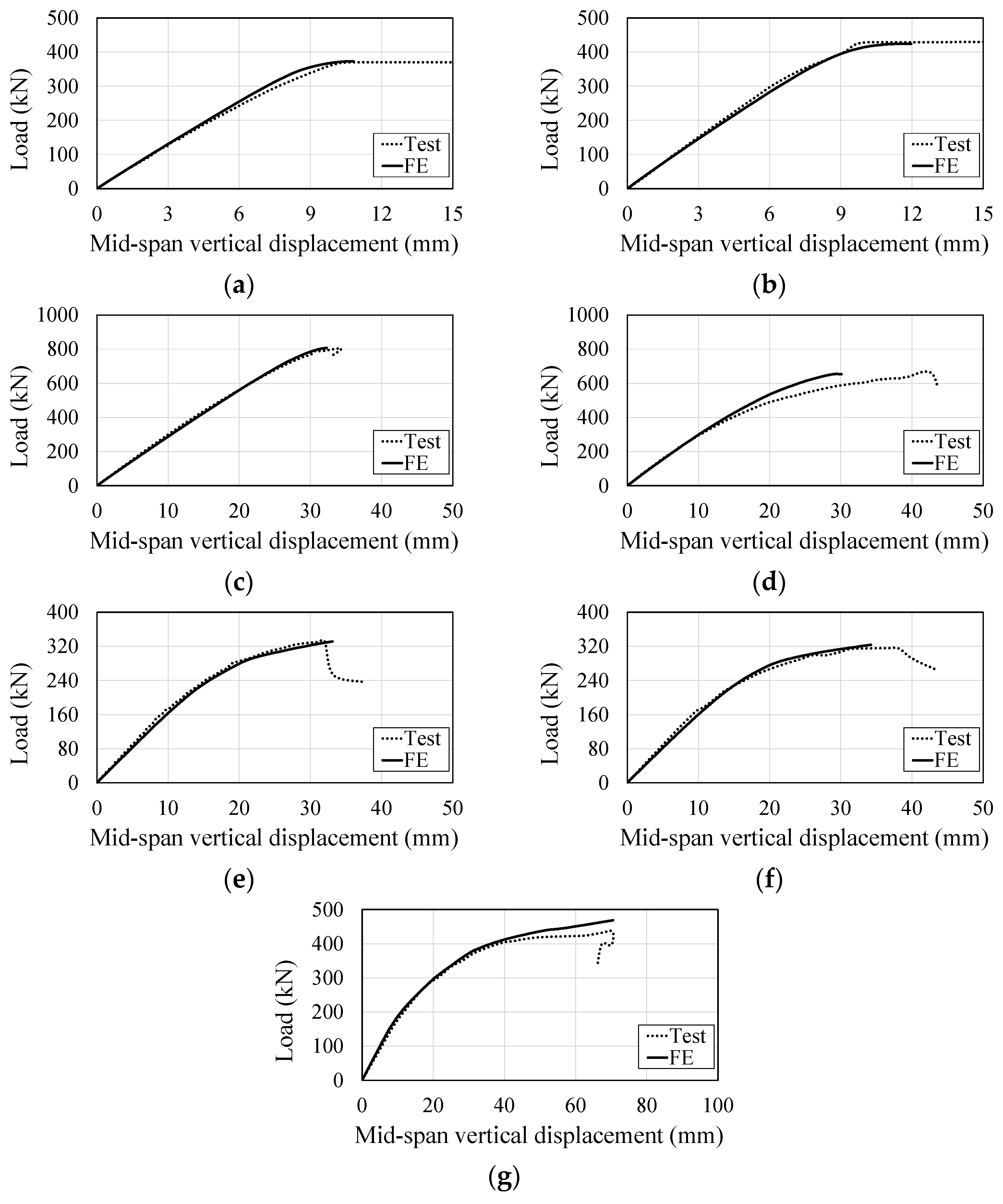

3.1. Validation Results

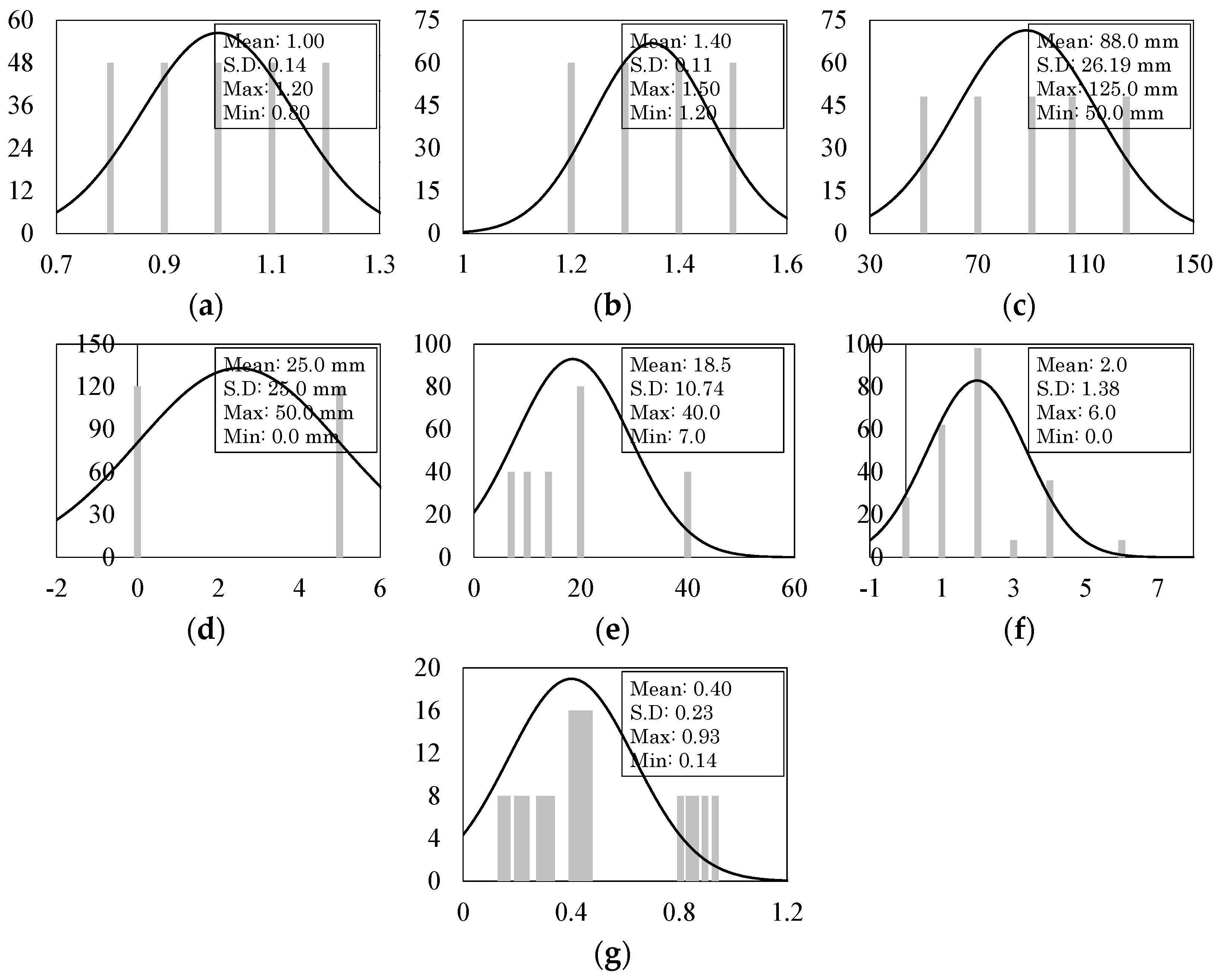

3.2. Parametric Study

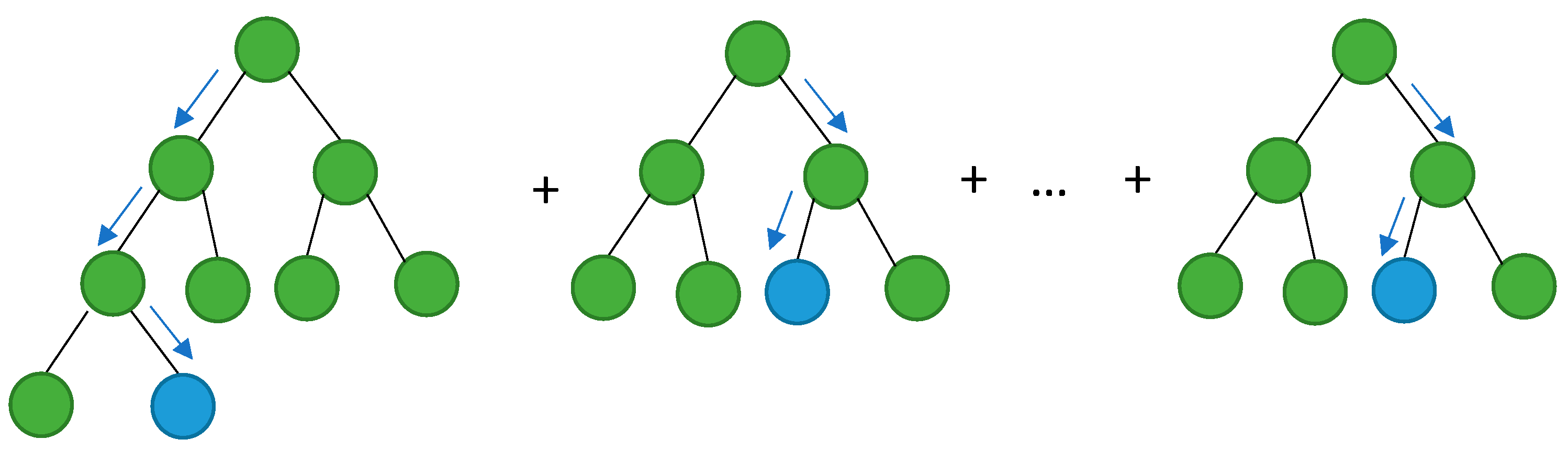

4. Machine Learning Models

4.1. CatBoost

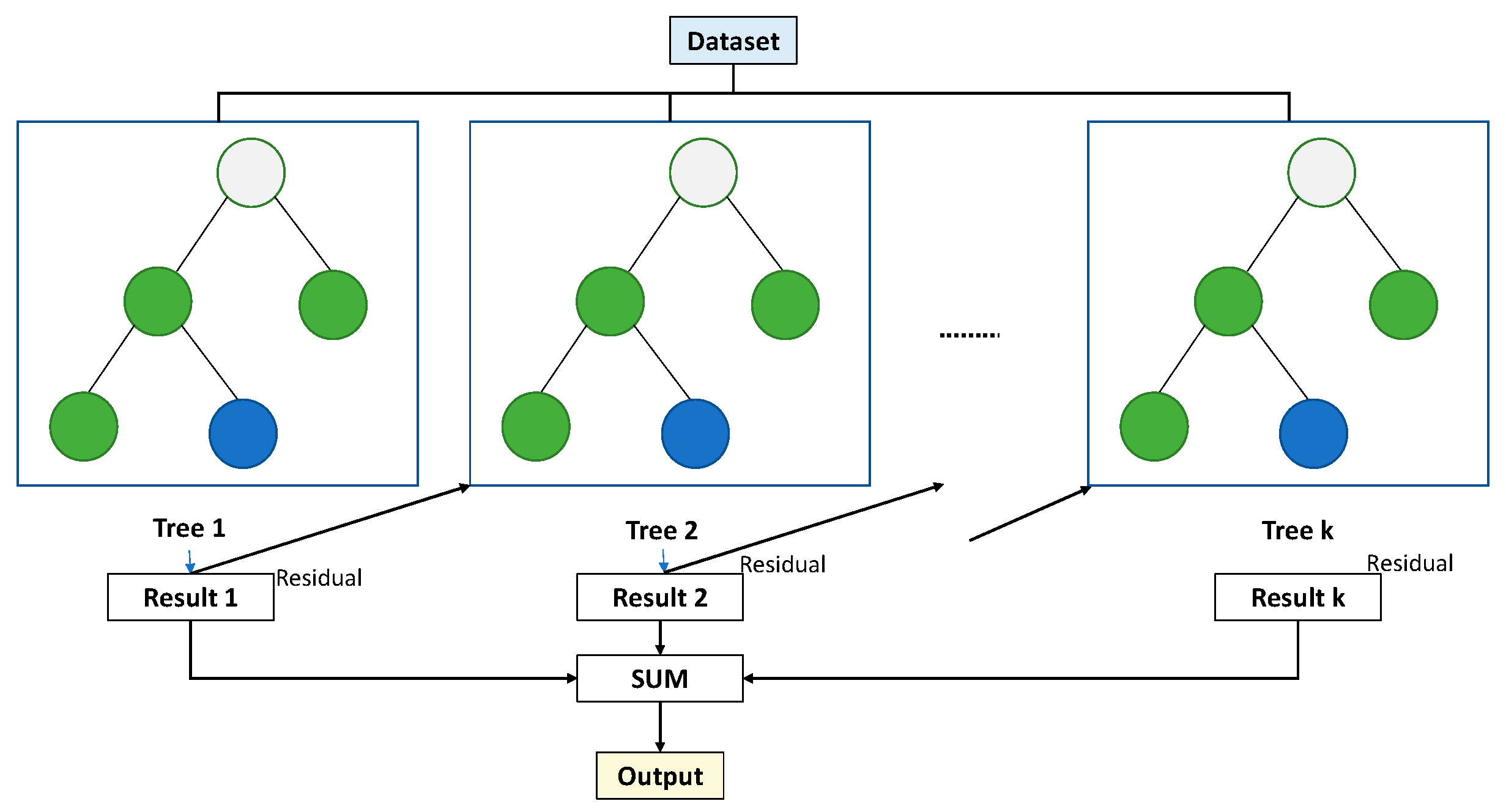

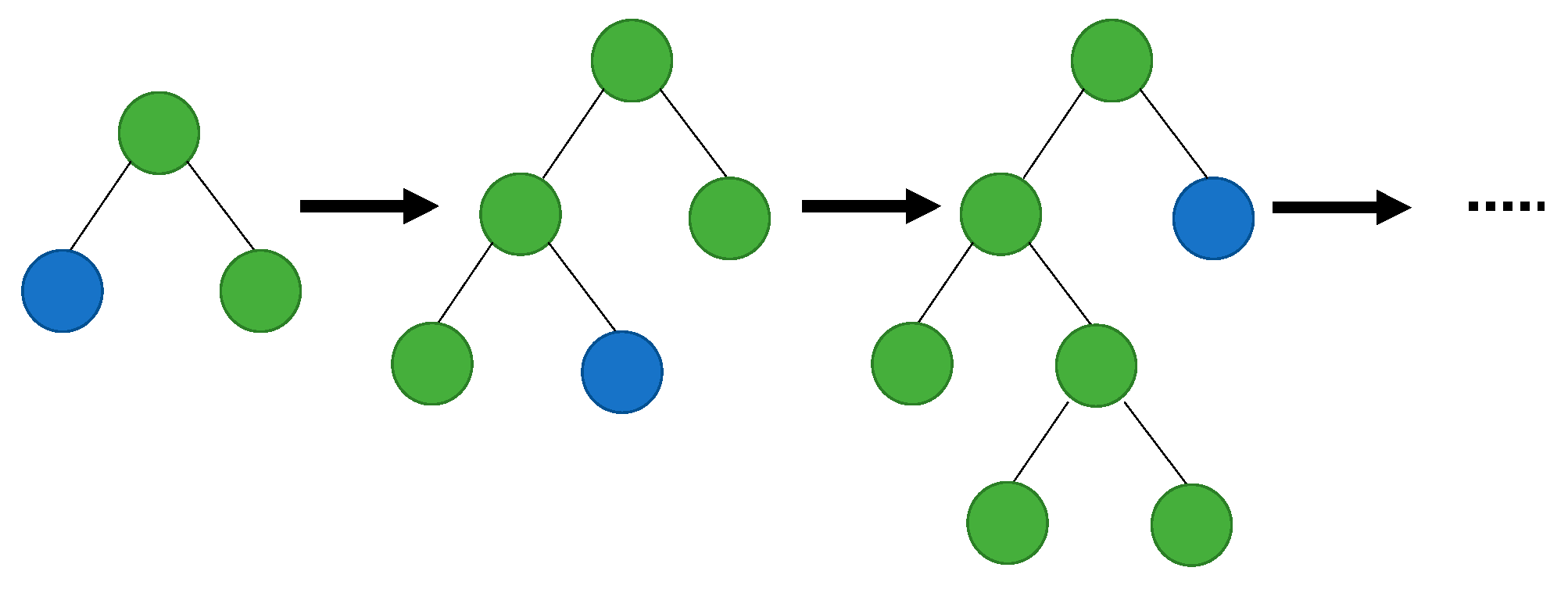

4.2. Gradient Boosting

4.3. Extreme Gradient Boosting

4.4. Light Gradient Boosting Machine

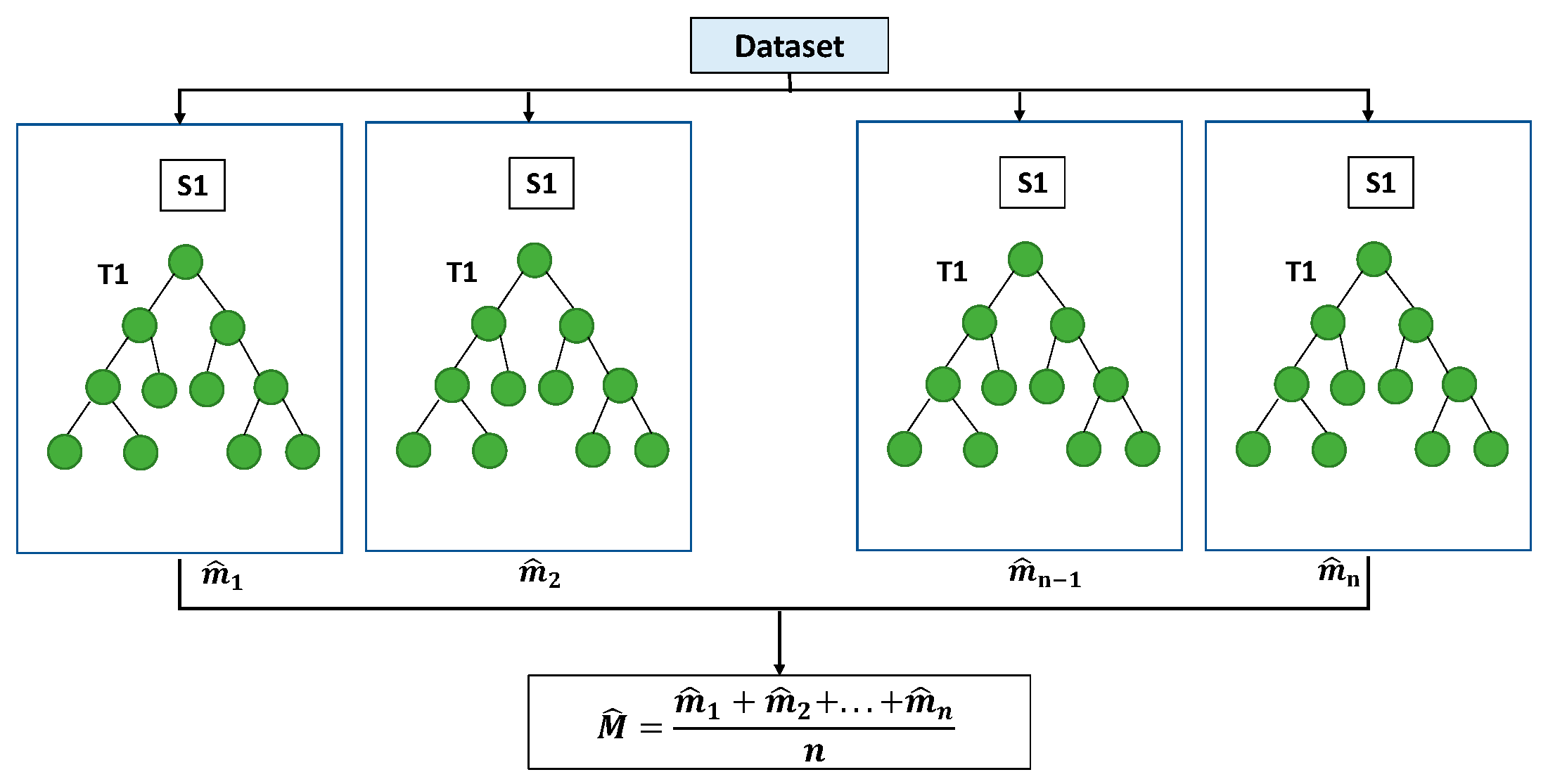

4.5. Random Forest

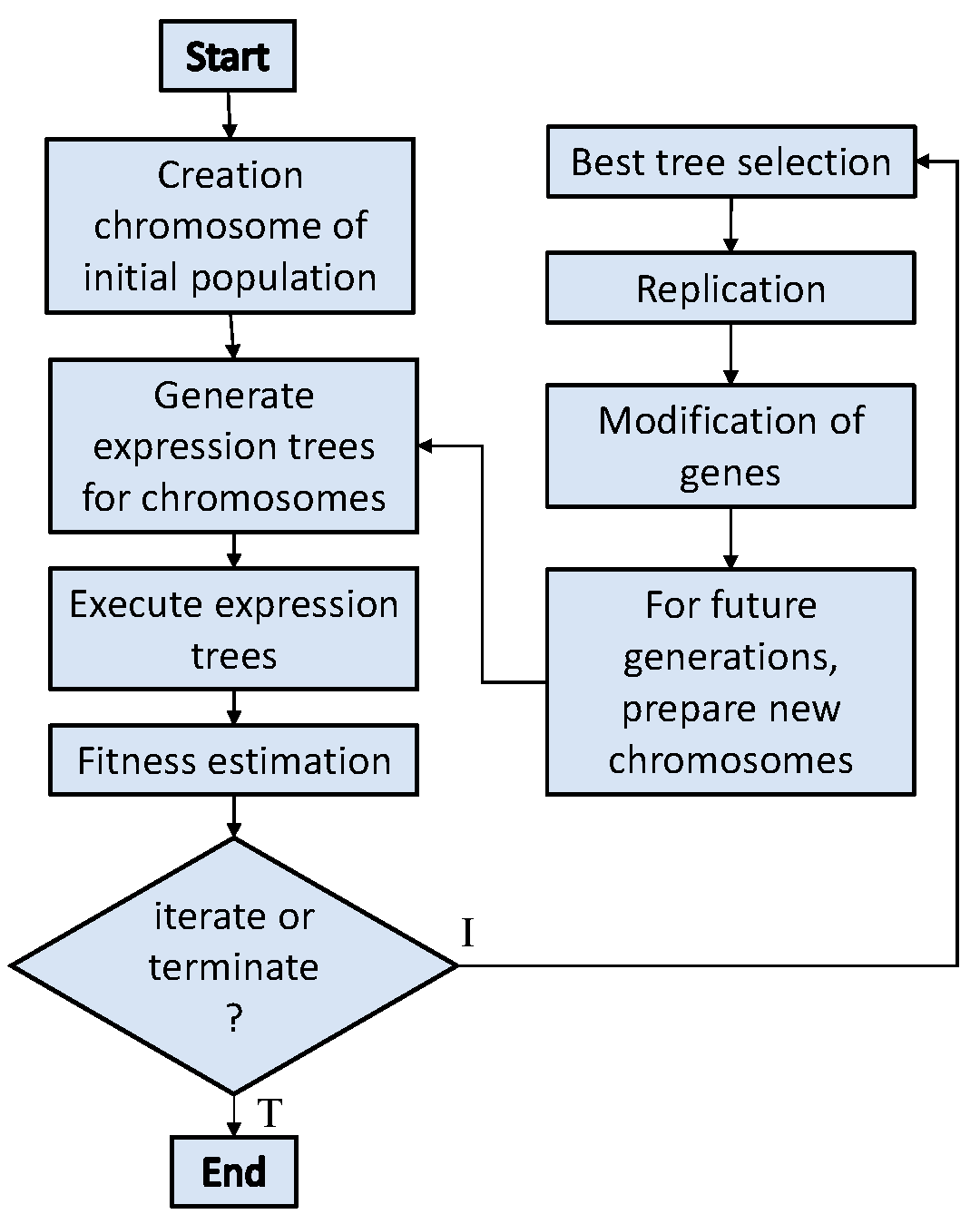

4.6. Gene Expression Programming

5. Assessing the Accuracy of Machine Learning Models

6. Results and Discussion

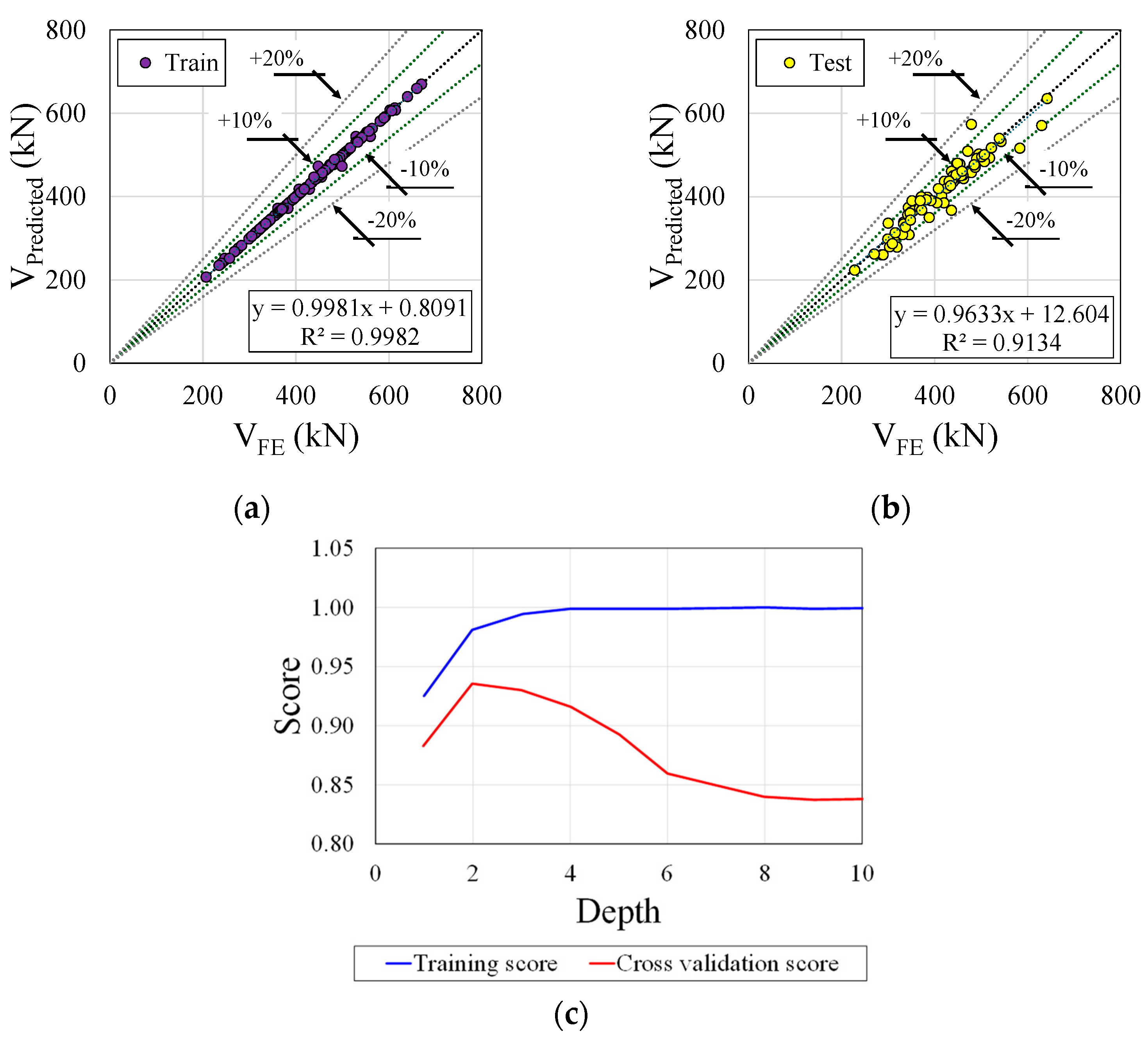

6.1. CatBoost

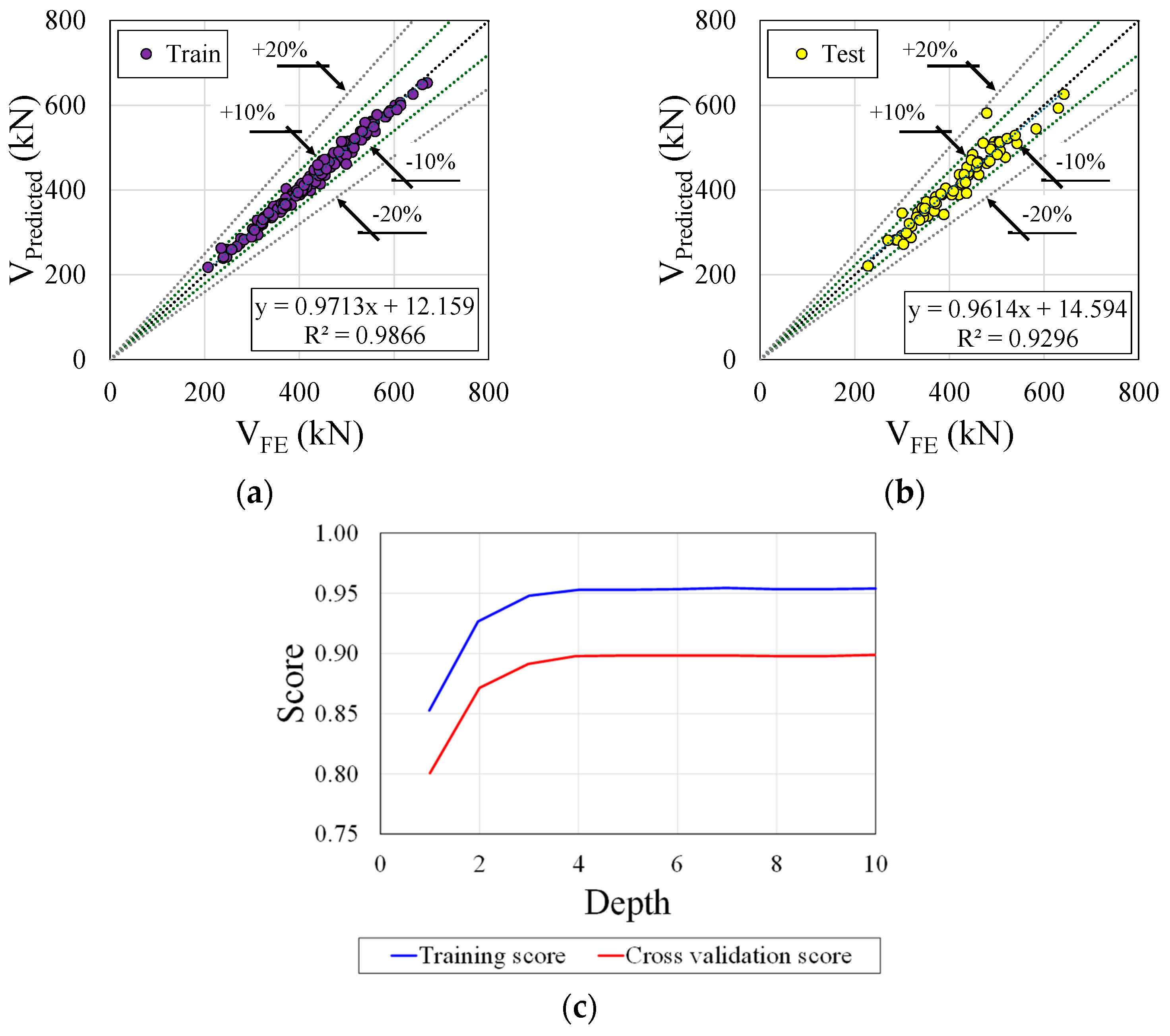

6.2. Gradient Boosting

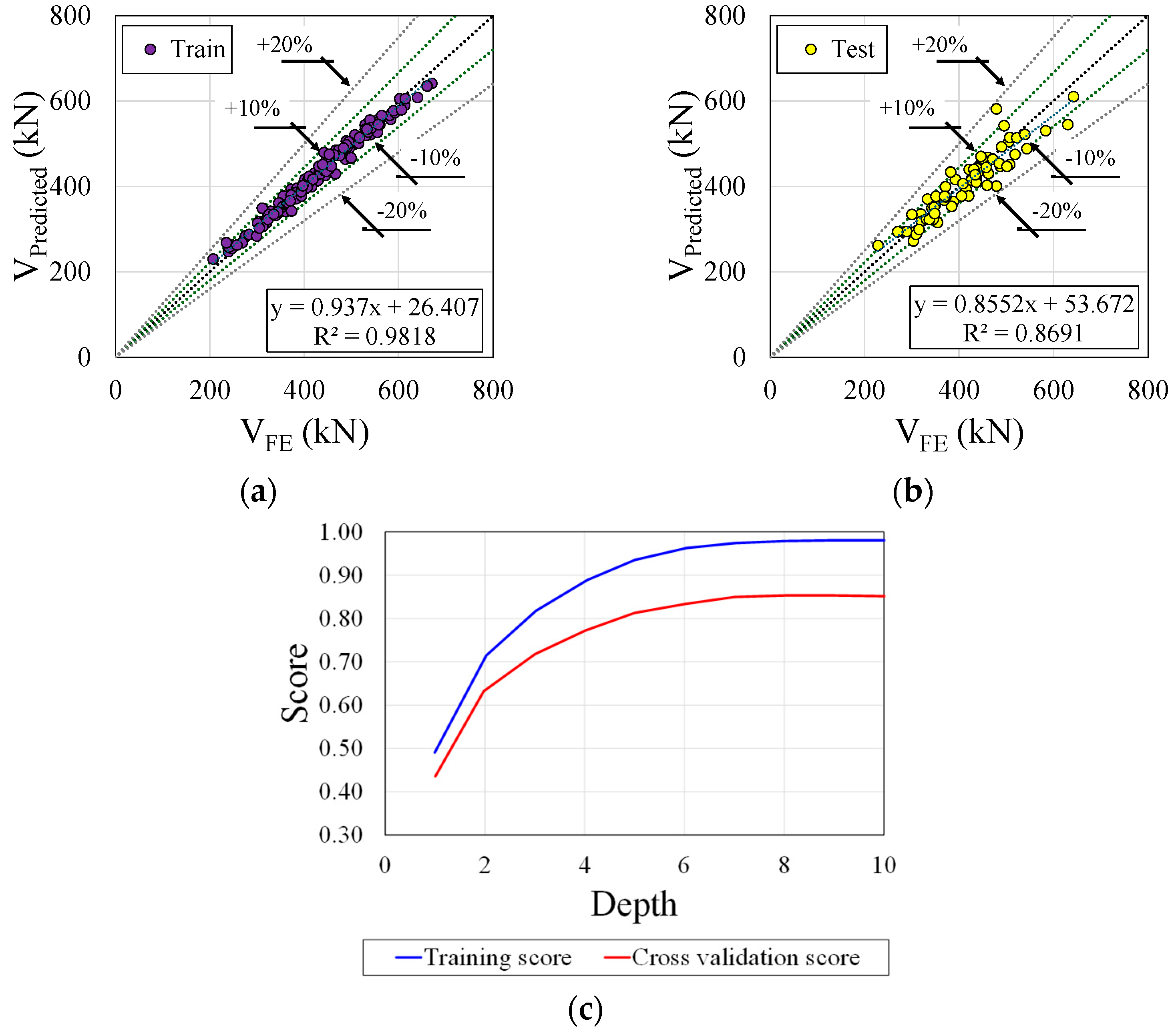

6.3. Extreme Gradient Boosting

6.4. Light Gradient Boosting Machine

6.5. Random Forest

6.6. Feature Importance

7. Proposed Equation by GEP

8. Comparison Analysis

9. Reliability Analysis

10. Conclusions

- i. The CatBoost regressor achieved an MAE of 6.7814 kN and an R² of 0.9821, explaining about 98.21% of the variance in the shear capacity. These were the lowest MAE and the highest R² among the models assessed, which makes CatBoost the most accurate predictor in this study for the design and assessment of steel–concrete composite cellular beams.

- ii. The gene expression programming (GEP) model achieved a coefficient of determination (R²) of 0.9531, explaining approximately 95.31% of the variance in the shear capacity and indicating a strong correlation between predicted and actual values. GEP is therefore a promising alternative that merits further research.

- iii. A GEP-based equation was proposed to predict the global shear resistance of composite cellular beams with PCHCS. The proposed equation highlights areas that need revision and offers insight into how those improvements can be achieved. It can contribute to both the safety and the cost-effectiveness of steel–concrete composite construction, particularly with regard to sustainability.

- iv. A reliability analysis was performed, and the partial safety factor for resistance varied between 1.25 and 1.26.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Description | Value |
|---|---|
| Session ID | 1991 |
| Original data shape | (240, 11) |
| Transformed train set shape | (168, 11) |
| Transformed test set shape | (72, 11) |
| Categorical imputation | mode |
| Normalize method | robust |
| Fold generator | KFold |
| Fold number | 10 |
| Transform target method | yeo-johnson |
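
The shapes in the setup table imply a 70/30 split of the 240 samples (168 train, 72 test) followed by 10-fold cross-validation on the training set. The sketch below reproduces only those counts using the Python standard library. The helper names are hypothetical, the 0.7 fraction is inferred from the table, and reusing 1991 as a shuffle seed does not reproduce the actual partition used in the study.

```python
import random

def train_test_indices(n_samples, train_fraction, seed):
    """Shuffle sample indices and split them into train and test parts."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_train = round(n_samples * train_fraction)
    return indices[:n_train], indices[n_train:]

def k_fold(indices, n_folds):
    """Yield (fit, validate) index lists for each of n_folds folds."""
    base, extra = divmod(len(indices), n_folds)
    start = 0
    for fold in range(n_folds):
        # The first `extra` folds take one additional sample.
        size = base + (1 if fold < extra else 0)
        validate = indices[start:start + size]
        fit = indices[:start] + indices[start + size:]
        yield fit, validate
        start += size

# 240 samples, 70% for training (fraction inferred from 168 / 240).
train_idx, test_idx = train_test_indices(240, 0.7, seed=1991)
fold_sizes = [len(validate) for _, validate in k_fold(train_idx, 10)]

print(len(train_idx), len(test_idx))
print(fold_sizes)
```

With 168 training samples and 10 folds, each validation fold holds 16 or 17 samples, so every cross-validated score rests on a fairly small hold-out set.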

| Parameter | Setting |
|---|---|
| Function set | +, −, *, /, Exp, Ln |
| Number of generations | 365,000 |
| Chromosomes | 200 |
| Head size | 14 |
| Linking function | Addition |
| Number of genes | 3 |
| Mutation rate | 0.044 |
| Inversion rate | 0.1 |
| One-point recombination rate | 0.3 |
| Two-point recombination rate | 0.3 |
| Gene recombination rate | 0.1 |
| Gene transposition rate | 0.1 |
| Constants per gene | 2 |
| Lower/upper bound of constants | −10/10 |
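
In standard gene expression programming, the head size and the largest function arity fix the tail size through t = h(n_max − 1) + 1, which guarantees that every gene decodes to a valid expression tree. The sketch below applies that relation to the listed settings. It counts only the head and tail of each gene, it ignores any extra domain used to store numerical constants, and the variable names are illustrative.

```python
# Arity (number of arguments) of each function in the listed function set.
FUNCTION_ARITY = {"+": 2, "-": 2, "*": 2, "/": 2, "Exp": 1, "Ln": 1}

def tail_size(head_size, max_arity):
    """Tail length that keeps every head/tail gene syntactically valid."""
    return head_size * (max_arity - 1) + 1

head = 14    # head size from the settings table
genes = 3    # number of genes, linked by addition
max_arity = max(FUNCTION_ARITY.values())

tail = tail_size(head, max_arity)
gene_length = head + tail
chromosome_length = genes * gene_length

print(tail, gene_length, chromosome_length)
```

Under these assumptions each gene has a 15-symbol tail and 29 symbols in total, giving 87 head and tail symbols per chromosome.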

| Metric | CatBoost | Gradient Boosting | Extreme Gradient Boosting | Light Gradient Boosting Machine | Random Forest | GEP |
|---|---|---|---|---|---|---|
| R² | 0.9821 | 0.9694 | 0.9762 | 0.9442 | 0.9186 | 0.9531 |
| RMSE (kN) | 12.1504 | 15.3435 | 16.5446 | 21.5878 | 20.3665 | 30.1683 |
| MAE (kN) | 6.7814 | 10.9457 | 7.4057 | 16.5853 | 14.1428 | 24.8799 |
| Minimum relative error | −13.90% | −11.76% | −18.12% | −11.77% | −16.50% | −12.84% |
| Maximum relative error | 16.54% | 21.41% | 22.99% | 24.00% | 21.48% | 2.69% |
| Mean | 1.000 | 1.000 | 1.000 | 1.000 | 0.998 | 0.945 |
| SD | 2.86% | 3.66% | 3.88% | 5.28% | 4.79% | 4.51% |
| CoV | 2.86% | 3.66% | 3.88% | 5.28% | 4.80% | 4.77% |
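
The metrics in this table follow standard definitions, which can be sketched with the Python standard library as below. Two points are assumptions rather than statements from the study: the Mean, SD and CoV rows are taken to describe the ratio of predicted to reference capacity, and the relative error is taken as (predicted − actual) / actual. The input values are hypothetical and are not data from the study.

```python
import math

def regression_metrics(actual, predicted):
    """Return R2, RMSE, MAE and ratio statistics for paired values."""
    n = len(actual)
    errors = [p - a for a, p in zip(actual, predicted)]
    ss_res = sum(e * e for e in errors)
    mean_actual = sum(actual) / n
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    # Assumption: the ratio statistics use predicted / actual.
    ratios = [p / a for a, p in zip(actual, predicted)]
    mean_ratio = sum(ratios) / n
    sd_ratio = math.sqrt(sum((r - mean_ratio) ** 2 for r in ratios) / (n - 1))
    return {
        "r2": 1.0 - ss_res / ss_tot,
        "rmse": math.sqrt(ss_res / n),
        "mae": sum(abs(e) for e in errors) / n,
        "min_rel_error": min(e / a for e, a in zip(errors, actual)),
        "max_rel_error": max(e / a for e, a in zip(errors, actual)),
        "mean_ratio": mean_ratio,
        "cov_ratio": sd_ratio / mean_ratio,
    }

# Hypothetical shear capacities in kN, not data from the study.
actual_kn = [100.0, 200.0, 300.0, 400.0]
predicted_kn = [110.0, 190.0, 310.0, 390.0]

metrics = regression_metrics(actual_kn, predicted_kn)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

RMSE penalizes large individual errors more heavily than MAE, which is one reason two models can rank differently on the two rows.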

| Machine Learning Model | n | b | k_d,n | k_n | V_r | γ_M |
|---|---|---|---|---|---|---|
| CatBoost | 240 | 1.00 | 3.04 | 1.64 | 0.163 | 1.255 |
| Gradient boosting | 240 | 1.002 | 3.04 | 1.64 | 0.163 | 1.257 |
| Extreme gradient boosting | 240 | 0.999 | 3.04 | 1.64 | 0.163 | 1.258 |
| Light gradient boosting machine | 240 | 1.004 | 3.04 | 1.64 | 0.161 | 1.265 |
| Random forest | 240 | 1.009 | 3.04 | 1.64 | 0.165 | 1.263 |
| GEP | 240 | 1.058 | 3.04 | 1.64 | 0.161 | 1.263 |
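
The constants 3.04 and 1.64 in this table coincide with the EN 1990 Annex D fractile factors for the design and characteristic values when the number of results is large. Reading them that way, and reading the column with values near 0.16 as the coefficient of variation V_r, is an assumption. Under it, the large-sample form of Annex D gives the ratio of characteristic to design resistance as exp((k_d − k_k) Q) with Q = sqrt(ln(V_r² + 1)). The sketch below evaluates that ratio. It is a simplified check and not necessarily the exact procedure used in the study, so the tabulated values may differ slightly.

```python
import math

def partial_factor_large_sample(v_r, k_char=1.64, k_design=3.04):
    """Ratio r_k / r_d from the large-sample EN 1990 Annex D expressions.

    Assumes r_k = b * g * exp(-k_char * Q - 0.5 * Q**2) and
    r_d = b * g * exp(-k_design * Q - 0.5 * Q**2), so b and g cancel.
    """
    q = math.sqrt(math.log(v_r ** 2 + 1.0))
    return math.exp((k_design - k_char) * q)

# V_r taken from the CatBoost row of the table.
gamma_m = partial_factor_large_sample(0.163)
print(f"{gamma_m:.4f}")
```

For V_r = 0.163 the result falls between 1.25 and 1.26, in line with the range reported in the conclusions.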

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ferreira, F.P.V.; Jeong, S.-H.; Mansouri, E.; Shamass, R.; Tsavdaridis, K.D.; Martins, C.H.; De Nardin, S. Five Machine Learning Models Predicting the Global Shear Capacity of Composite Cellular Beams with Hollow-Core Units. Buildings 2024, 14, 2256. https://doi.org/10.3390/buildings14072256
