A Comparative Study of Energy Performance Certificates across Europe

Abstract

1. Introduction

2. Methodologies across Europe

- Inputs gathered during assessment;

- The protocols of the assessment itself (influencing the role and requirements of the assessor);

- A calculation engine used for generating energy/carbon metrics;

- The specific output metrics generated.

2.1. Software and Calculations

2.2. Site Visits and Building Description

2.3. Categories of Energy Consumption

2.4. Specifying Building Systems

2.5. Internal Activity and Occupancy

2.6. Air Exchanges and Temperature Setpoints

3. Categorising and Comparing EPC Methodologies

3.1. Grouping EPC Approaches

3.2. External Differences

- Climate, and how this impacts the balance between heating and cooling;

- Building stock definition, and how country-specific vernacular architecture defines the baseline from which improvements have to be made;

- Cultural approaches to energy use and how (if at all) that can be accommodated into an assessment that generally views occupants in a simplistic way;

- Maturity of markets around heating and cooling technologies, in relation to recommended improvements in EPCs;

- Economies and the building owner’s ability to pay (and financial support for doing this), again related to the tailoring of any energy efficiency recommendations;

- Policy targets around the energy efficiency of buildings (and use of EPCs in those policies).

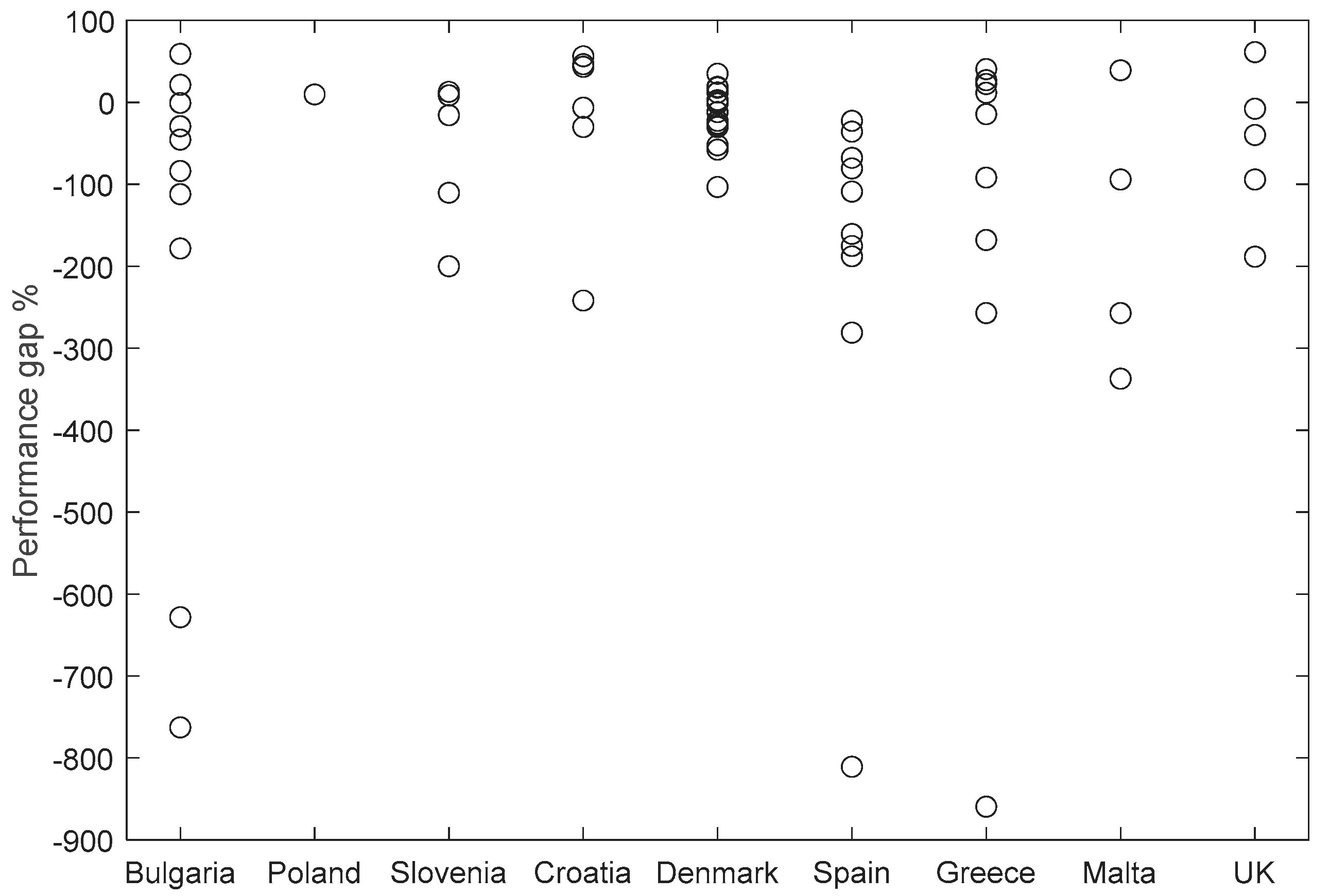

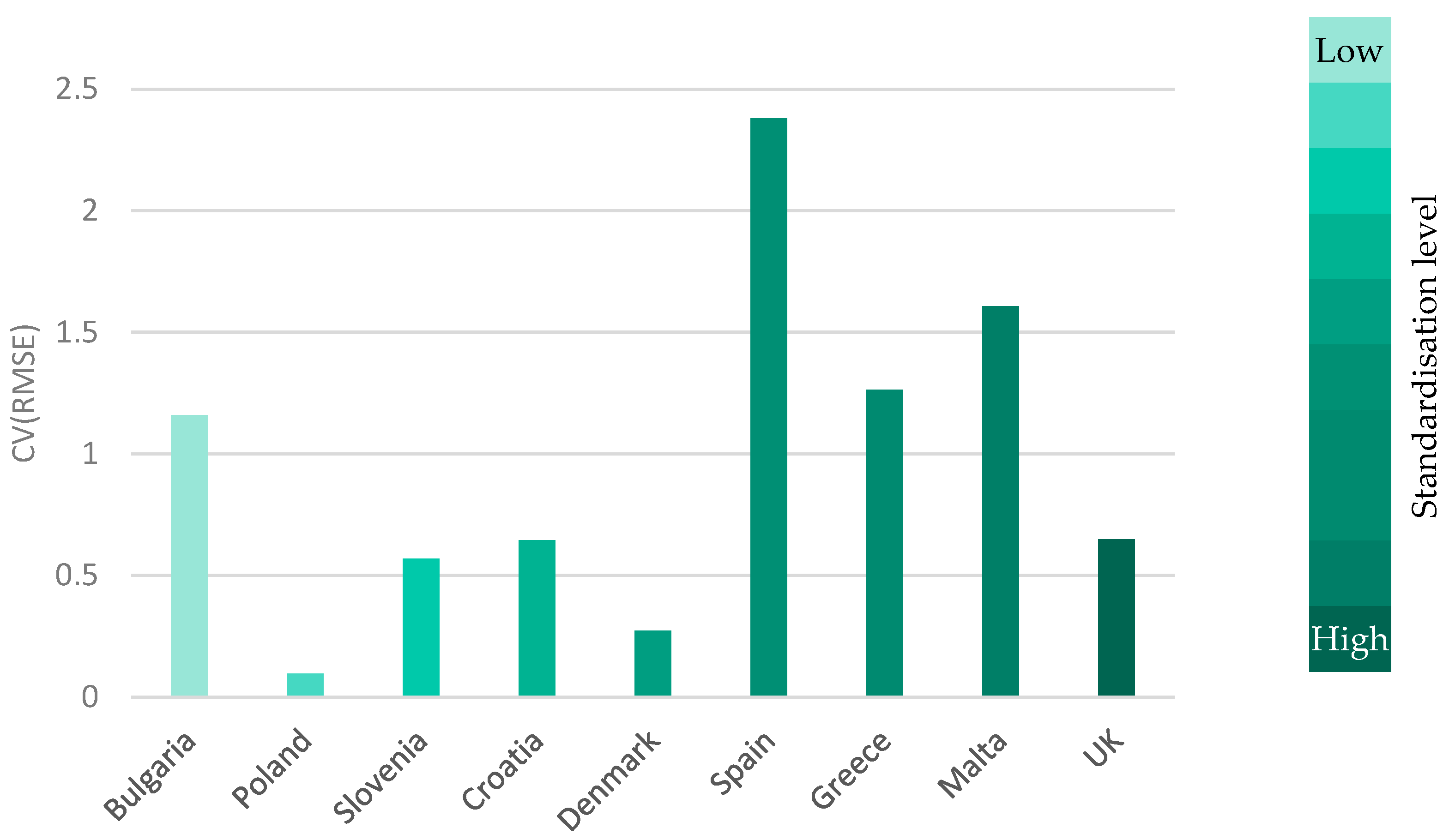

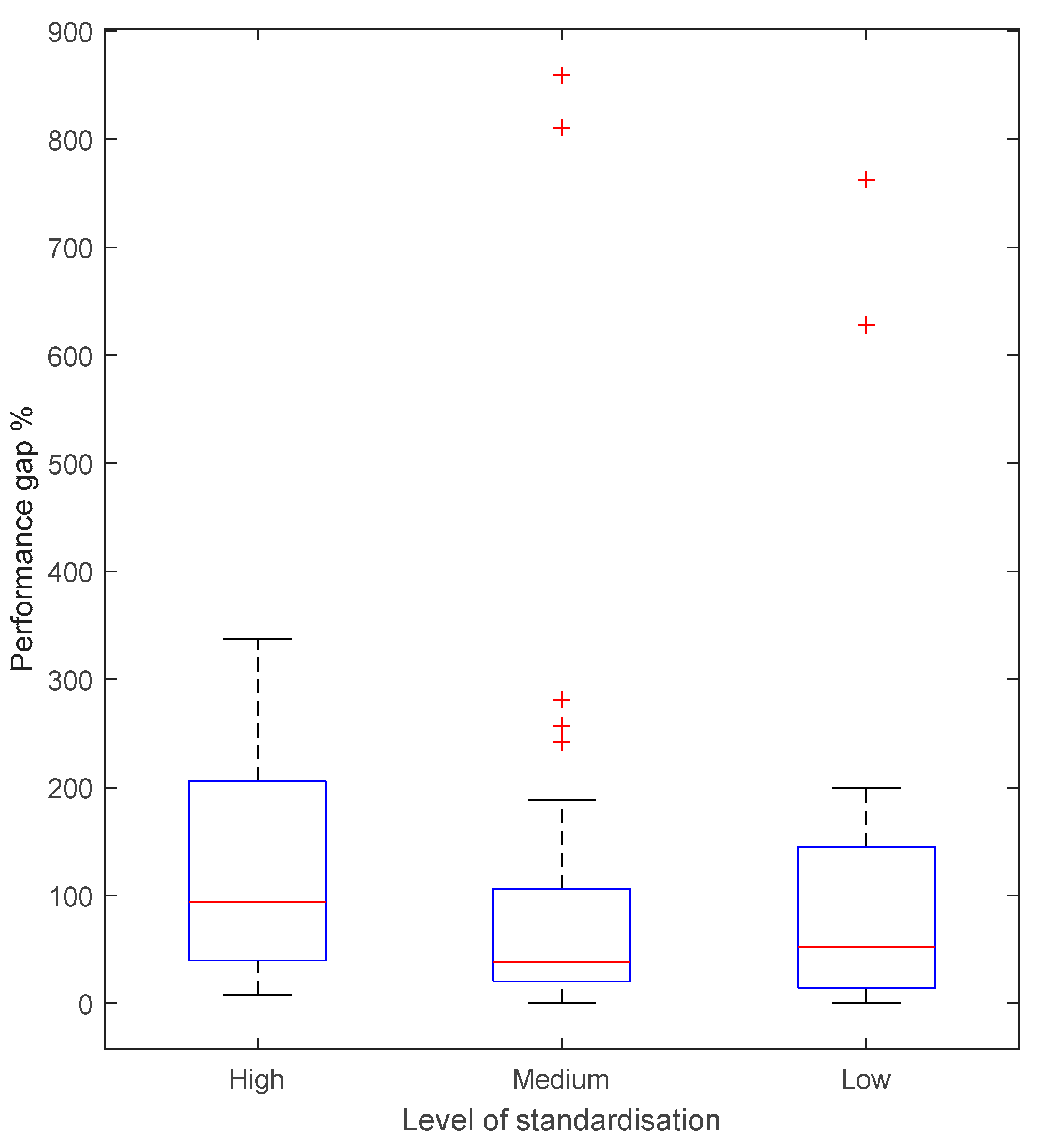

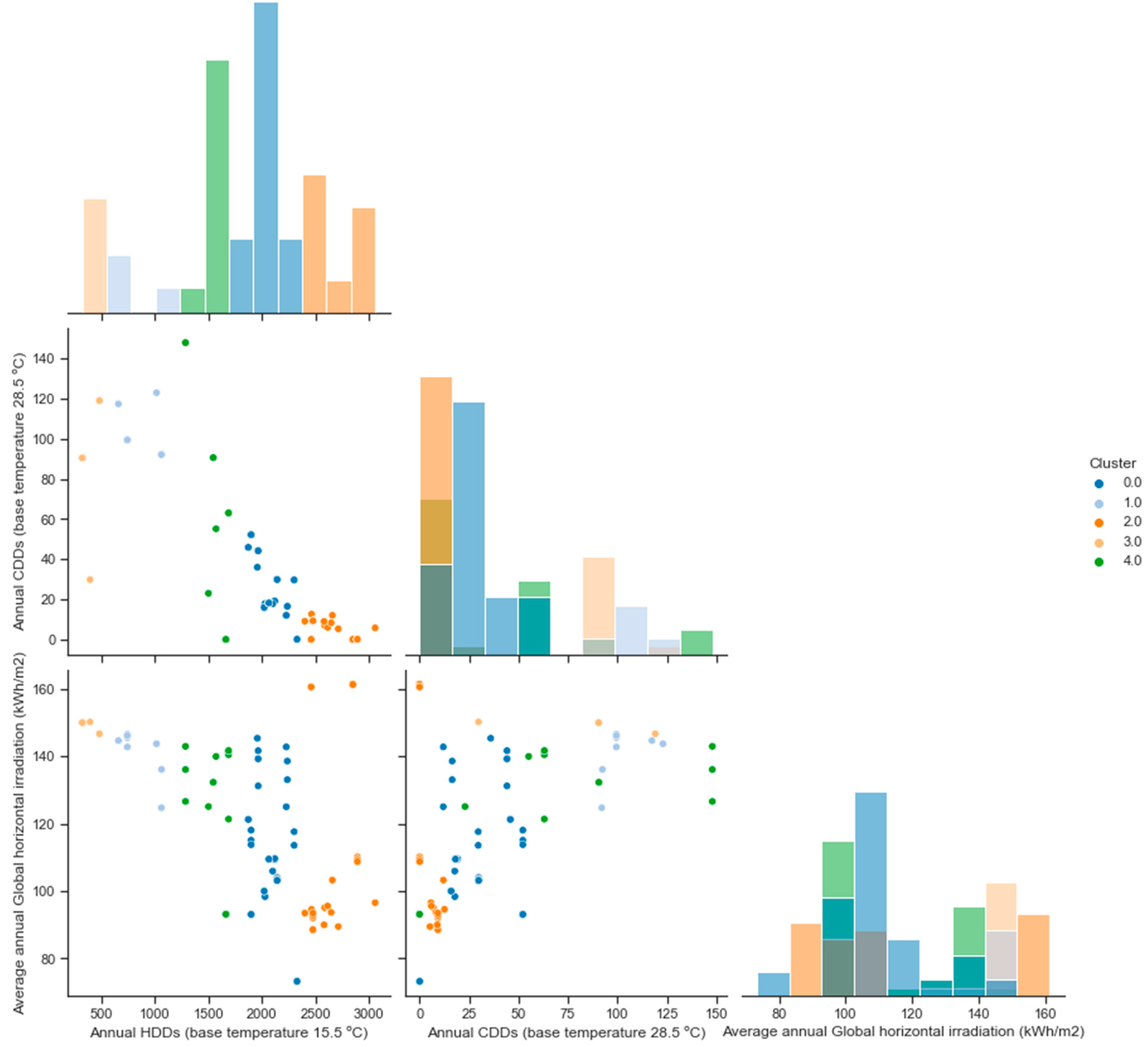

4. Quantifying Implications of Difference

5. Challenges of Direct Comparison of Methods

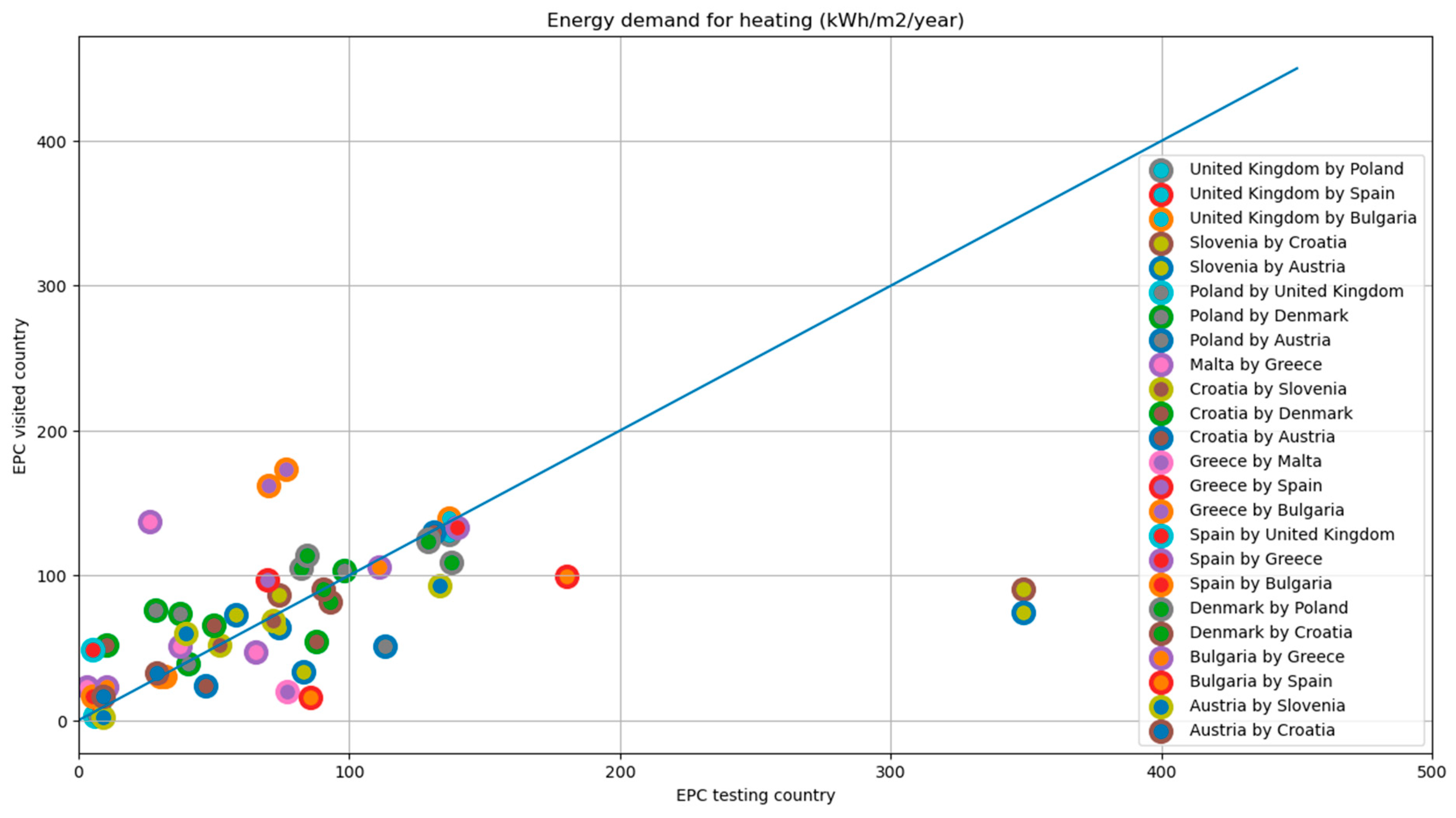

5.1. Cross-Testing Buildings

5.2. Cross-Testing Results

6. Discussion

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Country | HVAC Schedules | HVAC Spec. | Lighting and Equipment Schedules | Occupancy | Construction Thermal Parameters | Ventilation Rates | Infiltration Rate | Setpoints |

|---|---|---|---|---|---|---|---|---|

| Austria | Default based on building type | Default values available | Default values | Default values | Database available | Database available | Default values available | Fixed |

| Bulgaria | Assessor | Actual values | Assessor | Assessor | Database available | Measurement/assessor’s knowledge | Measurement/assessor’s knowledge | Assessor |

| Denmark | Assessor | Default values available | Default values | Default values | Database available | Database available | Default values available | Depends on use type/ activity level/ control |

| Greece | Default based on zone activity type | Default values available | Default values | Default values | Database available | Database available | Default values available | Depends on use type/ activity level/ control |

| Malta | Default based on zone activity type | Default values available for some building categories | Default values | Default values | Database available/ Inference based | Database available | Default values available | Depends on use type/ activity level/ control |

| Poland | Assessor | Default values available | Default values or assessor | Assessor | Database available but outdated | Database available | Default values available | Depends on use type/ activity level/ control |

| Slovenia | Default based on zone activity type | Actual values | Default values | Default values or assessor | Database available | Database available | Measurement/assessor’s knowledge | Depends on use type/ activity level/ control |

| Spain | Default based on building type | Actual values | Default values | Default values | Database available/ Inference based | Database available | Default values available | Fixed |

| UK | Default based on zone activity type | Default values available for some building categories | Default values | Default values | Database available/ Inference based | Database available | Default values available for some buildings | Depends on use type/ activity level/ control |

| Standardisation Level | Basis of EPC Metric | Rating Type |

|---|---|---|

| Low | Total primary energy | Calibrated asset rating |

| Medium | Heating energy | Operational rating and asset rating |

| High | Cost | Only asset rating |

| Country | Standardisation Level | Basis Of EPC Metric | Rating Type |

|---|---|---|---|

| Bulgaria | Low | Total primary energy | Calibrated asset rating |

| Poland | Low | Total primary energy | Operational rating and asset rating |

| Slovenia | Low | Heating energy | Operational rating and asset rating |

| Croatia | Medium | Heating energy | Only asset rating |

| Denmark | Medium | Heating energy | Operational rating and asset rating |

| Spain | Medium | Total primary energy | Only asset rating |

| Greece | Medium | Total primary energy | Only asset rating |

| Malta | High | Total primary energy | Only asset rating |

| Austria | High | Heating energy | Only asset rating |

| UK | High | Cost | Only asset rating |
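
The three-attribute classification in the table above can be handled as a small data structure. The following is a minimal sketch, not the authors' tooling: the names `EpcMethod`, `METHODS`, `group_countries` and `same_category` are hypothetical, and the rows are transcribed directly from the table.

```python
from collections import defaultdict
from typing import NamedTuple


class EpcMethod(NamedTuple):
    """One row of the classification table (hypothetical container)."""
    country: str
    standardisation: str
    metric_basis: str
    rating_type: str


# Rows transcribed from the table above, in the same order.
METHODS = [
    EpcMethod("Bulgaria", "Low", "Total primary energy", "Calibrated asset rating"),
    EpcMethod("Poland", "Low", "Total primary energy", "Operational rating and asset rating"),
    EpcMethod("Slovenia", "Low", "Heating energy", "Operational rating and asset rating"),
    EpcMethod("Croatia", "Medium", "Heating energy", "Only asset rating"),
    EpcMethod("Denmark", "Medium", "Heating energy", "Operational rating and asset rating"),
    EpcMethod("Spain", "Medium", "Total primary energy", "Only asset rating"),
    EpcMethod("Greece", "Medium", "Total primary energy", "Only asset rating"),
    EpcMethod("Malta", "High", "Total primary energy", "Only asset rating"),
    EpcMethod("Austria", "High", "Heating energy", "Only asset rating"),
    EpcMethod("UK", "High", "Cost", "Only asset rating"),
]


def group_countries(methods, attribute):
    """Group country names by one attribute, preserving table order."""
    groups = defaultdict(list)
    for method in methods:
        groups[getattr(method, attribute)].append(method.country)
    return dict(groups)


def same_category(a, b):
    """True when two methods agree on all three classification attributes."""
    return a[1:] == b[1:]


if __name__ == "__main__":
    for level, countries in group_countries(METHODS, "standardisation").items():
        print(level, countries)
```

Grouping by a single attribute shows how coarse each axis is on its own, while `same_category` identifies countries that share all three attributes. In this table, Spain and Greece are the only pair that does.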

| Code | Measured Total Final Energy Consumption (kWh/m²/year) | EPC Final Energy Consumption (kWh/m²/year) | Country | Code | Measured Total Final Energy Consumption (kWh/m²/year) | EPC Final Energy Consumption (kWh/m²/year) | Country |

|---|---|---|---|---|---|---|---|

| GR100 | 207.8 | 183.8 | Greece | ESR5 | 189.6 | 395.9 | Spain |

| GR101 | 170.4 | 124.5 | Greece | HR-3 | 103.8 | 55.5 | Croatia |

| GR102 | 194.2 | 222.4 | Greece | HR-6 | 72.6 | 77.3 | Croatia |

| GR103 | 232 | 621.9 | Greece | HR-10 | 45.3 | 154.9 | Croatia |

| GR104 | 45.2 | 86.7 | Greece | HR-11 | 86.8 | 49.2 | Croatia |

| GR105 | 67.4 | 40 | Greece | HR-12 | 148 | 192.4 | Croatia |

| GR106 | 87 | 310.7 | Greece | HR-20 | 183.1 | 80.3 | Croatia |

| GR107 | 28.3 | 271.5 | Greece | PL-2 | 31 | 28 | Poland |

| GR108 | 198.3 | 154.1 | Greece | MT-01 | 55 | 33.5 | Malta |

| DK3 | 160.2 | 162.5 | Denmark | MT-10 | 30.4 | 108.5 | Malta |

| DK5 | 68.9 | 88.6 | Denmark | MT-03 | 26.3 | 114.9 | Malta |

| DK11 | 104.8 | 128.6 | Denmark | MT-12 | 47.8 | 92.8 | Malta |

| DK12 | 97.4 | 122.8 | Denmark | SI-1 | 37 | 111 | Slovenia |

| DK13 | 57.9 | 75.3 | Denmark | SI-2 | 145 | 305 | Slovenia |

| DK14 | 114.1 | 74.4 | Denmark | SI-3 | 133 | 116 | Slovenia |

| DK15 | 120.4 | 119.5 | Denmark | SI-4 | 313 | 362 | Slovenia |

| DK16 | 85.5 | 134.9 | Denmark | SI-7 | 95 | 87 | Slovenia |

| DK17 | 125.4 | 102.8 | Denmark | UK1 | 137 | 265.9 | UK |

| DK18 | 59.9 | 58.3 | Denmark | UK2 | 32 | 92.3 | UK |

| DK201 | 142.4 | 115.6 | Denmark | UK4 | 51.4 | 19.9 | UK |

| DK21 | 124.2 | 109.5 | Denmark | UK22 | 140 | 151 | UK |

| DK22 | 114.6 | 128.3 | Denmark | UK23 | 247.44 | 346 | UK |

| DK23 | 92.3 | 140.8 | Denmark | BG08 | 187.01 | 147.2 | Bulgaria |

| DK24 | 34.9 | 71.1 | Denmark | BG1 | 57.41 | 159.8 | Bulgaria |

| ES01 | 70.8 | 645.2 | Spain | BG2 | 60.39 | 87.8 | Bulgaria |

| ES02 | 31.17 | 42.3 | Spain | BG3 | 86.99 | 159.9 | Bulgaria |

| ES03 | 132.4 | 162.3 | Spain | BG4 | 321.95 | 131.7 | Bulgaria |

| ES13 | 114.69 | 315.7 | Spain | BG5 | 14.77 | 127.4 | Bulgaria |

| ES15 | 65.5 | 109.8 | Spain | BG6 | 98.36 | 208.5 | Bulgaria |

| ES17 | 8.03 | 30.6 | Spain | BG7 | 33.94 | 34.2 | Bulgaria |

| ESR2 | 74.22 | 134.1 | Spain | BG9 | 64.85 | 83.9 | Bulgaria |

| ESR3 | 152.57 | 439.2 | Spain | BG10 | 28.88 | 210.3 | Bulgaria |

| ESR4 | 170.29 | 443.7 | Spain | | | | |
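
One way to read the table above is as a relative gap between the EPC figure and the measured figure for each building. The sketch below is illustrative only: the definition `(EPC - measured) / measured` is an assumption chosen for this example rather than a metric stated in the source, the names `Building`, `SAMPLE`, `relative_gap` and `largest_gap` are hypothetical, and only four rows of the table are transcribed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Building:
    code: str
    country: str
    measured: float  # measured total final energy, kWh/m²/year
    epc: float       # EPC final energy, kWh/m²/year


# Four rows copied from the table above; the remaining rows follow the same shape.
SAMPLE = [
    Building("GR100", "Greece", 207.8, 183.8),
    Building("DK3", "Denmark", 160.2, 162.5),
    Building("ES01", "Spain", 70.8, 645.2),
    Building("PL-2", "Poland", 31.0, 28.0),
]


def relative_gap(building: Building) -> float:
    """Signed gap as a fraction of measured consumption (assumed definition).

    Positive values mean the EPC figure exceeds the measured figure.
    """
    return (building.epc - building.measured) / building.measured


def largest_gap(buildings: list[Building]) -> Building:
    """Return the building with the largest absolute relative gap."""
    return max(buildings, key=lambda b: abs(relative_gap(b)))


if __name__ == "__main__":
    for b in SAMPLE:
        print(f"{b.code:6s} {b.country:8s} gap = {relative_gap(b):+.1%}")
    print("Largest gap:", largest_gap(SAMPLE).code)
```

A signed ratio keeps over- and under-estimates distinguishable, which a plain absolute difference in kWh/m²/year would not. Normalising by the measured value also makes buildings of very different energy intensity comparable.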

| Country | Cluster 0 | Cluster 1 | Cluster 2 | Cluster 3 | Cluster 4 |

|---|---|---|---|---|---|

| AT - Austria | AT1-AT7, AT10 | AT8, AT9 | | | |

| BG - Bulgaria | BG1, BG3-BG10 | BG2 | | | |

| DK - Denmark | DK1-DK10 | | | | |

| ES - Spain | ES3, ES4, ES7, ES8, ES9, ES10, ES12, ES14, ES16 | ES1, ES2, ES5, ES6, ES11, ES13, ES15, ES17 | | | |

| GR - Greece | GR11, GR15 | GR3, GR4, GR7, GR9, GR10, GR12, GR14, GR17, GR18, GR20 | GR6, GR16 | GR1, GR2, GR5, GR8, GR13, GR19 | |

| HR - Croatia | HR2, HR4, HR5, HR11-HR22 | HR1, HR3, HR6, HR7, HR8, HR9, HR10 | | | |

| MT - Malta | MT1-MT12 | | | | |

| PL - Poland | PL1-PL15 | | | | |

| SI - Slovenia | SL1-SL10 | | | | |

| UK - United Kingdom | UK1-UK3 | UK4-UK22 | | | |
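
The cluster table above lists building codes in a compact range notation such as `AT1-AT7, AT10`. To count or cross-reference buildings per cluster, a cell can be expanded into individual codes. The helper below is a hypothetical sketch (the name `expand_codes` and the assumed code format, uppercase letters followed by digits, are not from the source).

```python
import re

# Matches a range such as "AT1-AT7": prefix + number, hyphen, prefix + number.
_RANGE = re.compile(r"^([A-Z]+)(\d+)-([A-Z]+)(\d+)$")


def expand_codes(cell: str) -> list[str]:
    """Expand a table cell like "AT1-AT7, AT10" into individual building codes."""
    codes: list[str] = []
    for part in (piece.strip() for piece in cell.split(",")):
        if not part:
            continue  # empty cell or stray comma
        match = _RANGE.match(part)
        if match is None:
            codes.append(part)  # a single code, kept as written
            continue
        prefix, start, end_prefix, end = match.groups()
        if prefix != end_prefix or int(end) < int(start):
            raise ValueError(f"malformed range: {part!r}")
        codes.extend(f"{prefix}{n}" for n in range(int(start), int(end) + 1))
    return codes


if __name__ == "__main__":
    print(expand_codes("AT1-AT7, AT10"))
    print(len(expand_codes("UK4-UK22")))
```

Expanding the ranges makes cluster sizes directly countable. For example, the Austrian row splits into eight buildings in Cluster 0 and two in Cluster 1.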

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jenkins, D.P.; Sayfikar, M.; Gomez, A.; Fueyo, N. A Comparative Study of Energy Performance Certificates across Europe. Buildings 2024, 14, 2906. https://doi.org/10.3390/buildings14092906
