As stated before, in the current computer interaction paradigm, articulatory feedback is given visually, while in the real world many movements and manipulative actions are guided by touch, vision, and audition. The rationale behind the work described in this paper is the expectation that adding auditory and vibrotactile feedback to the visual articulatory feedback improves the articulation, in either speed or accuracy, and that of the two, vibrotactile feedback will give the greater benefit, as it is the most natural form of feedback in this case.

The work reported here focusses on the physical level of the layered interaction as described before, where the articulation of the gesture takes place. At this stage, it is limited to a one-degree-of-freedom movement of a mouse on a flat surface. An essential part of our approach is to leave the participants in our experiments some freedom to explore.

The research method is described in Section 2.1. Section 2.2 describes the experiment, which is set up in five phases. Phase 1 (Section 2.2.1) is an exploratory phase, where the participants’ task is to examine an area on the screen with varying levels of vibrotactile feedback, as if there were invisible palpable pixels. This phase is structured to gain information about the participants’ sensitivity to the tactual cues, which is then used in the following phases of the experiment. In the second phase (Section 2.2.2), a menu consisting of a number of items has been enhanced with tactual feedback, and performance of the participants is measured in various conditions. In the third and fourth phase (Section 2.2.3 and Section 2.2.4), an on-screen widget, a horizontal slider, has to be manipulated. Feedback conditions are varied in a controlled way, applying different modalities, and performance (reaction times and accuracy) is measured. The last phase (Section 2.2.5) of the experiment consisted of a questionnaire.

2.1. Method

In the experiments, the participants are given simple tasks, and by measuring the response times and error rates in a controlled situation, differences between feedback conditions can be detected. It must be noted that, in the current desktop metaphor paradigm, a translation takes place between hand movement with the mouse (input) and the visual feedback of the pointer (output) on the screen. This translation is convincing due to the way our perceptual systems work. The tactual feedback in the experiments is presented on the hand, where the input takes place, rather than where the visual feedback is presented. To display the tactual feedback, a custom-built vibrotactile element is used. In some of the experiments, auditory articulatory feedback was generated as well, firstly because this often happens in the real world, and secondly to investigate whether sound can substitute for tactual feedback (mobile phones and touch screens often have such an audible ‘key click’).

A gesture can be defined as a multiple degree-of-freedom meaningful movement. In order to investigate the effect of the feedback, a restricted gesture was chosen. In its simplest form, the gesture has one degree-of-freedom and a certain development over time. As mentioned earlier, there are in fact several forms of tactual feedback that can occur through the tactual modes as described above, discriminating between cutaneous and proprioceptive, active and passive. When interacting with a computer, cues can be generated by the system (active feedback) while other cues can be the result of real-world elements (passive feedback). When moving the mouse with the vibrotactile element, all tactual modes are addressed, actively or passively; it is a haptic experience drawing information from the cutaneous, proprioceptive, and efference copy (because the participant moves actively), while our system only actively addresses the cutaneous sense. All other feedback is passive, i.e., not generated by the system, but it can play some role as articulatory information.

It was a conscious decision not to incorporate additional feedback on the reaching of the goal. This kind of ‘confirmation feedback’ has often been researched in haptic feedback systems, and has proven to be an effective demonstration of positive effects, as discussed in Section 1.1. However, we are interested in situations where the system does not know where the user is aiming for, and are primarily interested in the feedback that supports the articulation of the gesture, not the goal to be reached, as it is not known. For the same reason, we chose not to guide (pull) the users towards the goal, although this could be beneficial. Following Fitts’s Law, which relates movement time to distance (‘amplitude’) and target size (Fitts and Posner 1967, p. 112), what happens in such cases is that the target size is effectively increased (Akamatsu and MacKenzie 1996). Another example is the work of Koert van Mensvoort, who simulated force-feedback and feedforward visually, which is also very effective (van Mensvoort 2002, 2009).
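For reference, Fitts’s Law in its original formulation can be written as follows. The symbols are not used elsewhere in this paper and are introduced here only for illustration: $MT$ is the movement time, $A$ the distance (amplitude) to the target, $W$ the target width, and $a$ and $b$ are empirically fitted constants.

$$
MT = a + b \log_2\!\left(\frac{2A}{W}\right)
$$

Under this formulation, pulling the pointer towards the goal acts like an increase of $W$, which lowers the logarithmic term and hence the predicted movement time.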

2.2. Experimental Set-Up

The graphical, object-based programming language MaxMSP was used. MaxMSP is a programme originally developed for musical purposes and therefore has a suitable real-time precision (the internal scheduler works at 1 ms) and many built-in features for the generation of sound, images, video clips, and interface widgets. It has been used, and has proved useful and valid, as a tool in several psychometric and HCI-related experiments (Vertegaal 1998, p. 41; Bongers 2002; de Jong and Van der Veer 2022). Experiments can be set up and modified very quickly.

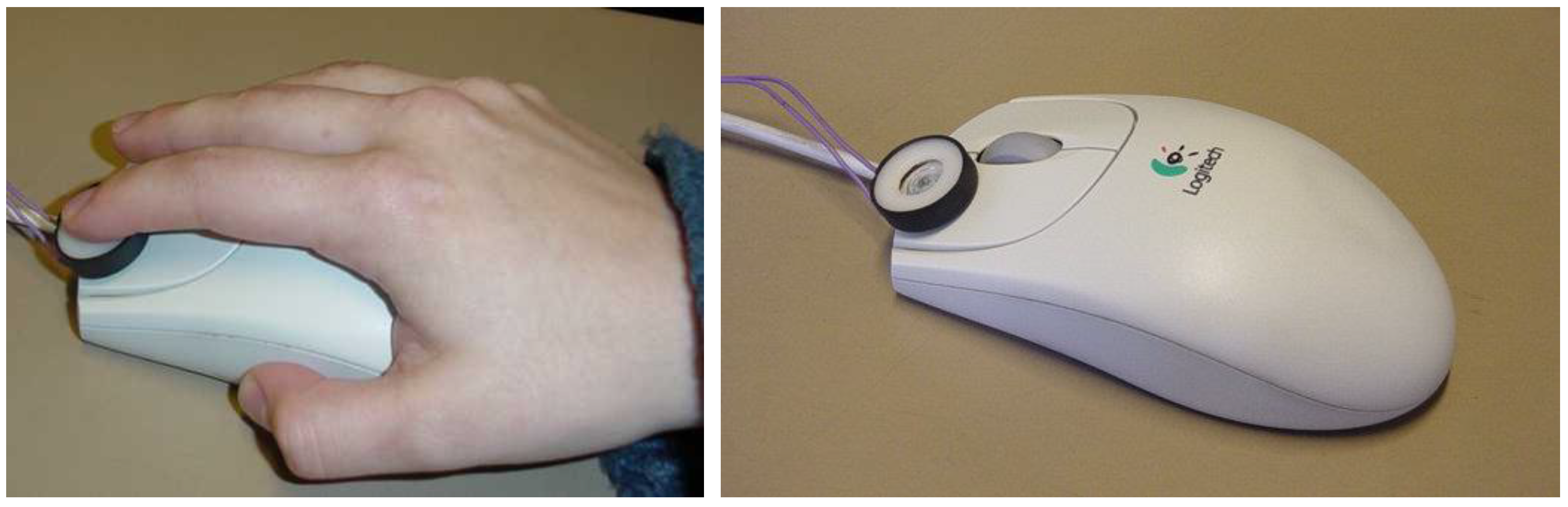

The custom-developed MaxMSP software (‘patches’) for this experiment ran on an Apple PowerBook G3 with OS 8.6 (but also runs under OSX on newer Macs), used in dual-screen mode; the experimenter used the internal screen for monitoring and controlling the experiment, and the participant used an external screen (Philips 19” TFT). The participant carried out the tasks using a standard Logitech mouse (First Pilot Wheel Mouse M-BE58, connected through USB), with the vibrotactile element positioned under the index finger of the participant where the feedback would be expected. For the right-handed participants, this was on the left mouse button; for the left-handed participants, the element could be attached to the right mouse button. The vibrotactile element is a small loudspeaker (Ø 20 mm, 0.1 W, 8 Ω) with a mylar cone, partially covered and protected by a nylon ring, as shown in Figure 2. The ring allows the user to avoid pressing on the loudspeaker cone which would make the stimulus disappear.

The vibrotactile element is covered by the user’s finger so that frequencies in the auditory domain are further dampened. The signals sent to the vibrotactile element are low frequency sounds, making use of the sound generating capabilities of MaxMSP.

Generally, the tactual stimuli, addressing the Pacinian system which is sensitive between 40 and 1000 Hz, are chosen with a low frequency to avoid interference with the audio range. The periods of the (triangular) tactual pulses were 40 and 12 milliseconds, resulting in base frequencies of 25 and 83.3 Hz, respectively. The repeat frequency of the pulses depends on the speed of the movement made with the mouse, and in practice is limited by the tracking speed. To investigate whether the tactual display really would be inaudible, measurements were performed with a Brüel and Kjær 2203 Sound Level Meter. The base frequency of the tactual display was set to 83.3 Hz and the amplitude levels to 100% and 60%, as used in the experiment. At a distance of 50 cm and an angle of 45 degrees, the level at an amplitude of 100% was 36 dB(A) (decibel adjusted for the human ear) when the device was not covered, and 35 dB(A) when the device was covered by the human finger as in the case of the experiments. At an amplitude of 60%, these values were 35 dB(A) and 33 dB(A), respectively. Therefore, in the most realistic situation, i.e., not the highest amplitude and covered by the finger, the level is 33 dB(A), which is close to the general background noise level in the test environment (30 dB(A)) and furthermore covered by the sound of the mouse moving on the table-top surface (38 dB(A)).
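The relation between pulse duration and base frequency can be checked with a short sketch. This is an illustration in Python, not the MaxMSP patch used in the experiment; the function names, the 44.1 kHz sample rate, and the bipolar shape of the triangle (0, +A, 0, −A, 0) are assumptions.

```python
def base_frequency_hz(period_ms: float) -> float:
    """Base frequency of a pulse whose single cycle lasts period_ms."""
    return 1000.0 / period_ms


def triangular_pulse(period_ms: float, amplitude: float = 1.0,
                     sample_rate: int = 44100) -> list[float]:
    """One cycle of a triangle wave (assumed shape: 0 -> +A -> 0 -> -A -> 0)."""
    n = round(sample_rate * period_ms / 1000.0)  # samples in one cycle
    samples = []
    for i in range(n):
        phase = i / n  # position within the cycle, 0 <= phase < 1
        if phase < 0.25:
            value = 4 * phase          # rising from 0 to +1
        elif phase < 0.75:
            value = 2 - 4 * phase      # falling from +1 to -1
        else:
            value = 4 * phase - 4      # rising from -1 back to 0
        samples.append(amplitude * value)
    return samples


for period in (40, 12):
    print(f"{period} ms -> {base_frequency_hz(period):.1f} Hz")
```

A 40 ms cycle corresponds to 25 Hz and a 12 ms cycle to about 83.3 Hz, matching the values reported above.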

Auditory feedback was presented through a small (mono) loudspeaker next to the participant’s screen (at significantly higher sound pressure levels than the tactile element).

In total, 35 subjects participated in the trials and pilot studies, mainly first and second year students of Computer Science of the VU Amsterdam university. They carried out a combination of experiments and trials, as described below, using their preferred hand to move the mouse and explore or carry out tasks, and were given a form with open questions for qualitative feedback. In all phases of the experiment, the participants could work at their own pace, self-timing when to click the “next” button to generate the next cue (and the software would log their responses).

Not all subjects participated in all experiments. Of the 35 subjects, 4 took part in pilot studies. The 31 main participants all took part in the most important phases of the experiment (2, 3, and 5), and 11 of them participated in the 4th phase as well (which was an extension).

Special attention was given to the participants’ posture and movements, as the experiment involved many repetitive movements, which could contribute to the development of RSI (repetitive strain injury) or WRULD (work-related upper limb disorders), in this case particularly carpal tunnel syndrome, as the task can involve wrist movements. The mouse ratio was set on purpose to encourage larger movements with the whole arm instead of just wrist rotations. The overall goal of this research is to come up with paradigms and interaction styles that are more varied, precisely to avoid such complaints. At the beginning of each session, therefore, participants were instructed and advised on strategies to avoid such problems, and they were monitored throughout the experiment.

2.2.1. Phase 1: Threshold Levels

The first phase of the experiment was designed to investigate the threshold levels of tactile sensitivity of the participants, in relationship to the vibrotactile element under the circumstances of the test set-up. The program generated in random order a virtual texture, varying the parameters base frequency of the stimulus (25 or 83.3 Hertz), amplitude (30%, 60%, and 100%), and spatial distribution (1, 2, or 4 pixels between stimuli), resulting in 18 different combinations. A case was included with an amplitude of 0, i.e., no stimulus, for control purposes. In total, therefore, there were 24 combinations. The software and experimental set-up were developed by the first author at Cambridge University in 2000 and are described in more detail elsewhere (Bongers 2002).
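The count of stimulus combinations can be reproduced with a short enumeration. This is a sketch in Python rather than the original MaxMSP patch; the variable names and the random seed are assumptions made for illustration.

```python
import random
from itertools import product

FREQUENCIES_HZ = (25.0, 83.3)
AMPLITUDES_PCT = (30, 60, 100)
SPACINGS_PX = (1, 2, 4)

# Every combination of frequency, non-zero amplitude, and spacing.
textures = list(product(FREQUENCIES_HZ, AMPLITUDES_PCT, SPACINGS_PX))

# Control cases: amplitude 0 (no stimulus) for each frequency and spacing.
controls = list(product(FREQUENCIES_HZ, (0,), SPACINGS_PX))

all_conditions = textures + controls

# Present the conditions in random order (the seed value is arbitrary).
presentation_order = all_conditions.copy()
random.Random(1).shuffle(presentation_order)

print(len(textures), len(controls), len(all_conditions))
```

The enumeration yields 18 textures and 6 control cases without a stimulus, 24 conditions in total.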

The participant had to actively explore an area on the screen, a white square of 400 × 400 pixels, and report if anything could be felt or not. This could be done by selecting the appropriate button on the screen, upon which the next texture was set. Their responses, together with a code corresponding with the specific combination of values of the parameters of the stimuli, were logged by the programme into a file for later analysis. In this phase of the experiment, 26 subjects participated.

This phase also helped to make the participants more familiar with the use of tactual feedback.

2.2.2. Phase 2: Menu Selection

In this part of the experiment, participants had to select an item from a list of numbers (a familiar pop-up menu), matching the random number displayed next to it. There were two conditions: one visual only, and the other supported by tactual feedback, where every transition between menu items generated a tangible ‘bump’ in addition to the normal visual feedback. The ‘bump’ was a pulse of 12 ms (83.3 Hz, triangular waveform), generated at each menu item.

The menu contained 20 items, a list of numbers from 100 to 119, as shown in Figure 3. All 20 values (the cues) would occur twice, drawn from a randomly generated table in a fixed order so that the data across conditions could be easily compared; all distances travelled with the mouse were the same in each condition.
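A fixed-order cue table of this kind can be sketched as follows. The example is in Python, not MaxMSP, and the function name and seed are hypothetical; the point is only that the table is shuffled once and then reused unchanged in every condition.

```python
import random
from collections import Counter


def make_cue_table(values, repeats=2, seed=2000):
    """Return each value `repeats` times, shuffled once with a fixed seed.

    Reusing the same seed reproduces the same order, so every condition
    presents identical cues (and hence identical mouse distances).
    """
    rng = random.Random(seed)
    table = list(values) * repeats
    rng.shuffle(table)
    return table


# Menu items 100..119, each cued twice: 40 cues in a fixed order.
MENU_CUES = make_cue_table(range(100, 120))

print(len(MENU_CUES), Counter(MENU_CUES).most_common(1))
```

Because the shuffle is seeded, calling `make_cue_table` again with the same arguments returns the same sequence.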

Response times and error rates were logged into a file for further analysis. An error would occur if the participant selected the wrong number; in that case, the experiment carried on. In total, 30 subjects participated in this phase of the experiment, balanced in order (15–15) for both conditions.

2.2.3. Phase 3: Slider Manipulation

In this experiment, a visual cue was generated by the system, which moved a horizontal slider on the screen to a certain position (the system slider). The participants were instructed to place their slider at the same position as the system slider, as quickly and as accurately as they could. The participant’s slider was shown just below the system slider; each had 120 steps and was about 15 cm wide on the screen.

Articulatory feedback was given in four combinations of three modalities: visual only (V), visual + tactual (VT), visual + auditory (VA), and visual + auditory + tactual (VAT). The sliders were colour coded to represent the combinations of feedback modalities: green (V), brown (VT), violet (VA), and purple (VAT).

The tactual feedback consisted of a pulse for every step of the slider, a triangular wave form with a base frequency of 83.3 Hz. The auditory feedback was generated with the same frequency, making a clicking sound due to the overtones of the triangular wave shape.

These combinations could be presented in 24 different orders, but the most important effect in the context of user interface applications is from Visual Only to Visual and Tactual and vice versa. It was decided to choose the two that would reveal enough about potential order effects: V-VT-VA-VAT and VAT-VA-VT-V.
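The number of possible presentation orders follows from the permutations of the four conditions, as this short Python sketch shows (the variable names are chosen for illustration only).

```python
from itertools import permutations

CONDITIONS = ("V", "VT", "VA", "VAT")

# All possible presentation orders of the four feedback conditions.
all_orders = list(permutations(CONDITIONS))

# The two orders used in the experiment; the second reverses the first.
chosen_orders = [("V", "VT", "VA", "VAT"), ("VAT", "VA", "VT", "V")]

print(len(all_orders))
```

The four conditions give 4! = 24 orders, of which the two chosen ones are each other's reverse.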

Figure 4 shows the experimenter’s screen, with several buttons to control the set-up of the experiment, sliders and numbers that monitor system and participant actions, and numeric data indicating the number of trials, and the participant’s screen with the cue slider and response slider. All the data was automatically logged into files. The participant’s screen had only a system slider (giving the cues), their response slider, and some buttons for advancing the experiment.

All 40 cues were presented from a randomly generated, fixed-order table of 20 values, every value occurring twice. Values near or at the extreme ends of the slider were avoided, as it was noted during pre-pilot studies that participants developed a strategy of moving to the particular end very fast, knowing the system would ignore overshoot. The sliders were 600 pixels wide, and the mouse ratio was set to slow in order to encourage the participants to really move. Through the use of the fixed values in the table across conditions, it was ensured that in all conditions the same distances would be travelled in the same order, so that the effects of Fitts’s law and of the mouse-pointer ratio did not have to be accounted for (this ratio is proportional to the movement speed, a feature which cannot be turned off in the Apple Macintosh operating systems).
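With a slider of 600 pixels and 120 steps, one step spans 5 pixels, and one feedback pulse is generated per step crossed. The following Python sketch illustrates that relation; the function names, and the assumption that steps are evenly spaced and that positions are clamped to the slider, are ours and not taken from the original patch.

```python
SLIDER_WIDTH_PX = 600
SLIDER_STEPS = 120
PX_PER_STEP = SLIDER_WIDTH_PX / SLIDER_STEPS  # 5 pixels per step


def step_at(x_px: float) -> int:
    """Slider step for a horizontal position, clamped to the slider range."""
    x = min(max(x_px, 0), SLIDER_WIDTH_PX)
    return int(x // PX_PER_STEP)


def pulses_between(x_from: float, x_to: float) -> int:
    """Number of step transitions (one pulse each) between two positions."""
    return abs(step_at(x_to) - step_at(x_from))


print(PX_PER_STEP, pulses_between(100, 200))
```

Moving the slider over 100 pixels therefore crosses 20 steps, regardless of direction.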

The cues were presented in blocks for each condition; had the conditions been mixed, participants would have developed a visual-only strategy in order to be independent of the secondary (and tertiary) feedback. Their slider was also colour-coded, with each condition having its own colour. A total of 31 subjects participated in this phase, and the orders were balanced as well as possible: 15 participants followed the V-VAT order and 16 the VAT-V order.

2.2.4. Phase 4: Step Counting

In Phase 4, the participants had to count the steps cued by the system (Figure 5). It was a variation on the Phase 3 experiment, but made more difficult and challenging for the participants to see if a clearer advantage of the added feedback could be shown. In this experiment, the participants were prompted with a certain number of steps to be taken with a horizontal slider (the slider was similar to the one in the previous experiment). The range of the cue points on the slider was limited to a maximum of 20 steps (from the centre) rather than the full 120 steps in this case, to keep it manageable. The number of steps to be taken with the slider was cued by a message, not by a system slider as in Phase 3, as shown in Figure 5.

The conditions were visual modality only (V) and visual combined with touch modalities (VT). No confirmative feedback was given; the participant would press a button when he or she thought that the goal was reached, and then the next cue was generated.

In this phase of the experiment, 11 subjects participated, balanced between the orders: 5 in the V-VT order and 6 in the VT-V order.

2.2.5. Phase 5: Questionnaire

The last part of the session consisted of a form with questions to obtain qualitative feedback on the chosen modalities, and some personal data such as gender, handedness, and experience with computers and the mouse. This data was acquired for 31 of the participants.

2.3. Results and Analysis

The data from the files compiled by the Max patches logging was assembled in a spreadsheet. The data was then analysed in SPSS.

All phases of the experiment showed a learning effect. This was expected, so the trials were balanced in order (as well as possible) to compensate for this effect.

The errors logged were divided into two types: misalignments and mistakes. A mistake is a mishit, for instance when the participant ‘drops’ the slider too early. A mistake can also appear in the measured response times. Some values were below the physically possible minimum response time, as a result of the participant moving the slider by just clicking briefly in the desired position so that the slider jumps to that position (participants were instructed not to use this ‘cheating’, but occasionally it occurred). Other values were unrealistically high, probably due to some distraction or other error. All these cases contained no real information and were therefore omitted from the data as mistakes. The misalignment errors were retained for analysis, as they might reveal information about the level of performance.
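The response-time part of this screening can be sketched as a simple classification. The sketch is in Python, and the two thresholds and the record layout are hypothetical: the paper does not report the cut-off values that were used, nor how early ‘drops’ of the slider were identified, so only the response-time criterion is shown.

```python
from dataclasses import dataclass

# Hypothetical cut-offs, for illustration only (not reported in the paper).
MIN_RT_MS = 200.0     # below this, a response is not physically plausible
MAX_RT_MS = 10000.0   # above this, the participant was probably distracted


@dataclass
class Trial:
    response_time_ms: float
    cue: int        # target position cued by the system
    response: int   # position chosen by the participant


def classify(trial: Trial) -> str:
    """Label a trial as 'mistake', 'misalignment', or 'correct'."""
    if not (MIN_RT_MS <= trial.response_time_ms <= MAX_RT_MS):
        return "mistake"        # omitted from the data
    if trial.response != trial.cue:
        return "misalignment"   # kept and analysed as an error
    return "correct"


trials = [Trial(50, 10, 10), Trial(1500, 10, 12), Trial(1500, 10, 10)]
print([classify(t) for t in trials])
```

Trials labelled as mistakes would be dropped before analysis, while misalignments remain in the data set as errors.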

In the sections below, the results of each phase are summarised, and the significant results of the analysis are reported.

2.3.1. Phase 1: Threshold Levels

Of the 24 presented textures (averaged over two runs), 12 were recognised correctly by all the participants (including four of the six non-textures), and a further 10 with more than 90% accuracy (including two non-textures). The two remaining, lower scores (88% and 75%) corresponded with the two conditions that were most difficult to perceive: the combination of the lowest amplitude (30%), the low frequency (25 Hz), and the lowest spatial distributions of two and four pixels, respectively.

2.3.2. Phase 2: Menu Selection

Table 1 below shows the total mean response times. All trials were balanced to compensate for learning effects, and the number of trials was such that individual differences were spread out, so that all trials and participants (N in the table) could be grouped. The response times for the Visual + Tactual condition were slightly higher than for the Visual Only condition, but statistical analysis showed that this difference was not significant. The error rates were not statistically analysed; in both conditions, they were too low to draw any conclusions from.

2.3.3. Phase 3: Slider Manipulation

The table below (Table 2) gives an overview of the means and the standard deviations of all response times (for all distances) per condition.

The response times were normally distributed and not symmetrical in the extremes, so two-tailed t-tests were carried out in order to investigate whether these differences are significant. Of the three possible degrees-of-freedom, the interaction between V and VT as well as VA and VAT was analysed, in order to test the hypothesis of the influence of T (tactual feedback added) on the performance. The results are shown in Table 3.

This table shows that response times for the Visual only condition were significantly faster than the Visual + Tactual condition, and that the Visual + Auditory condition was significantly faster than the similar condition with tactual feedback added.

The differences in response times between conditions were further analysed for each of the 20 distances, but no effects were found. The differences in error rates were not significant.

2.3.4. Phase 4: Step Counting

It was observed that this phase of the experiment was the most challenging for the participants, as it indeed was designed to be. It relied more on the participants’ cognitive skills than on the sensori-motor loop alone. The total processing time and the standard deviation are reported in Table 4.

In order to find out if the difference is significant, an analysis of variance (ANOVA) was performed, to discriminate between the learning effect and a possible order effect in the data. The ANOVA systematically controlled for the following variables:

The results of this analysis are summarised in the table below (Table 5). It follows from this analysis that the mean response times for the Visual + Tactile condition are significantly faster than for the Visual Only condition.

The error rates were lower in the VT condition, but not significantly.

2.3.5. Phase 5: Questionnaire

The average age of the 31 participants who filled in the questionnaire was 20 years; the majority were male (26 out of 31) and right-handed (27 out of 31). The left-handed participants used the right hand to operate the mouse, which was their own preference (the set-up was designed so that it could be modified for left-hand use). Participants rated their computer and mouse experience on a Likert scale from 0 (no experience) to 5 (a lot of experience). The average rating was 4.4, meaning their computer and mousing skills were high.

The qualitative information obtained from the open questions was, as expected, quite varied; the common statements are nevertheless categorised and presented in Table 6.

There was a question about whether it was thought that the added feedback was useful. For added sound, 16 (out of 31) participants answered “yes”, 4 answered “no”, and 11 thought it would depend on the context (issues were mentioned such as privacy, blind people, precision). For the added touch feedback, 27 participants thought it was useful, and 4 thought it would depend on the context. In Table 7, the results are summarised in percentages.
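The conversion from answer counts to percentages is straightforward and can be sketched as below. The Python function name and the rounding to one decimal are assumptions, so the figures may differ slightly from the rounding used in the table.

```python
def to_percentages(counts: dict[str, int]) -> dict[str, float]:
    """Convert answer counts to percentages of all answers, one decimal."""
    total = sum(counts.values())
    return {answer: round(100 * n / total, 1) for answer, n in counts.items()}


# Counts as reported in the text (31 participants per question).
sound = {"yes": 16, "no": 4, "depends on context": 11}
touch = {"yes": 27, "depends on context": 4}

print(to_percentages(sound))
print(to_percentages(touch))
```

For added sound this gives roughly 52% “yes”, 13% “no”, and 35% context-dependent; for added touch roughly 87% “yes” and 13% context-dependent.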

When asked which combination they preferred in general, 3 (out of 31) answered Visual Only, 13 answered Visual+Tactual, 3 answered Visual+Auditory, 7 Visual+Auditory+Tactual, and 4 thought it would depend on the context. One participant did not answer. The results are shown in percentages in Table 8.