Interactive Dairy Goat Image Segmentation for Precision Livestock Farming

Abstract

:Simple Summary

Abstract

1. Introduction

- A novel interactive segmentation network for interactive dairy goat image segmentation is proposed, which can significantly lower the time for pixel-level image annotation.

- A layer-by-layer multi-head feature-fusion method and an upsampling attention mechanism are proposed to enhance the segmentation power of the interactive segmentation model.

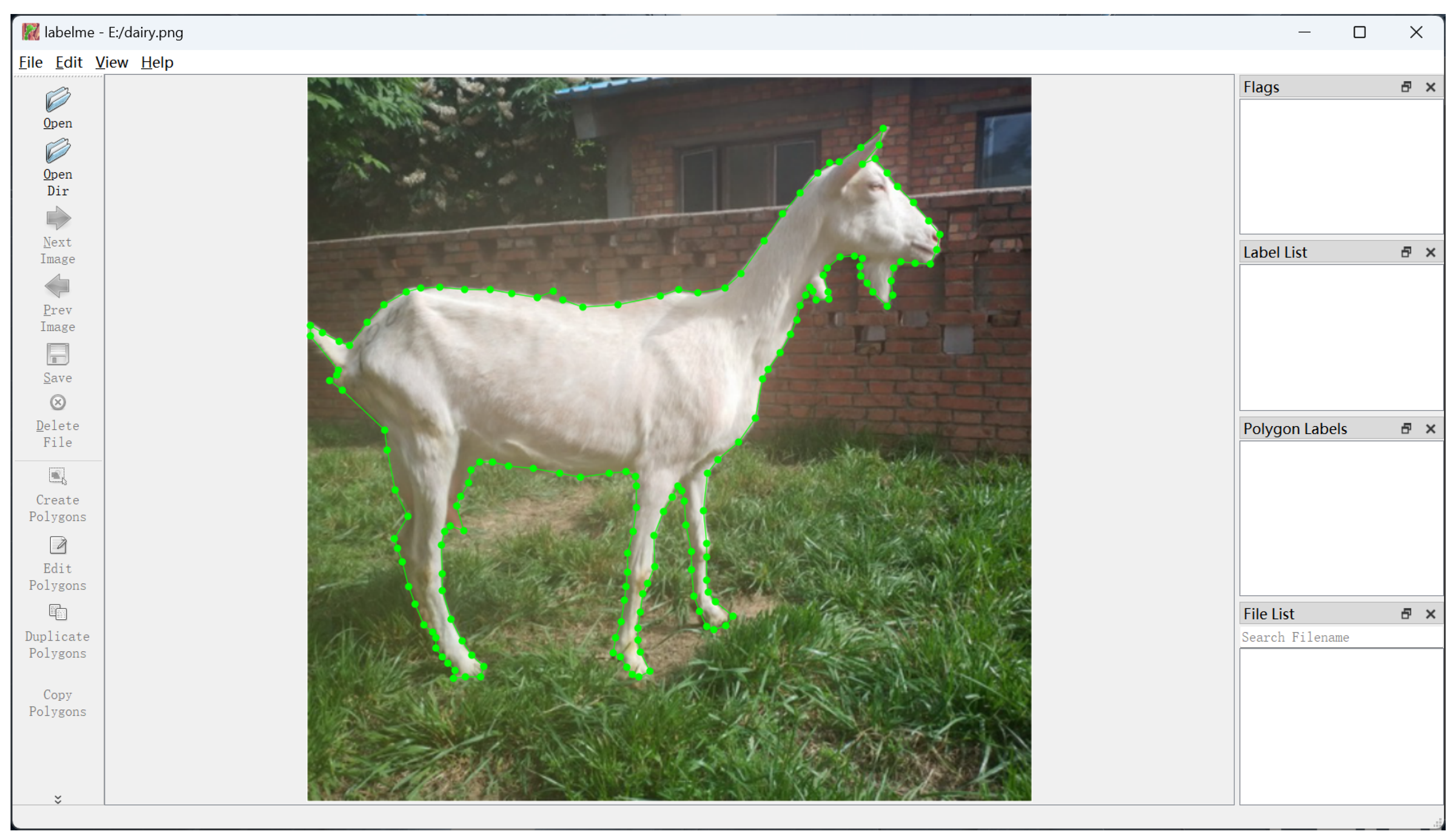

- An interactive dairy goat image-annotation system based on our proposed interactive segmentation model is designed and developed, which only takes 7.12 s on average to annotate a dairy goat instance, five times faster than Labelme.

2. Related Work

3. Material and Methods

3.1. Introduction of Dairy Goat Farm Environment

3.2. Data Collection and Cleaning

3.3. Dataset Annotation and Division

3.4. Proposed Method

3.4.1. Interactive Image-Annotation Process

- (1)

- The user needs to provide some clicks as priors to guide the interactive segmentation network for image segmentation. Specifically, in an image, the pixels in the object to be annotated are the foreground, otherwise the background. Positive and negative clicks are the clicks in the foreground and background, and are used to indicate to the interactive segmentation model that the current pixel belongs to the foreground or background, respectively. As shown in Figure 2, the red dots and green dots in the input image represent the positive clicks and negative clicks provided by the user, respectively.

- (2)

- The positive and negative clicks provided by users are encoded into distance maps of positive clicks and negative clicks, respectively. Specifically, the clicks will be translated into distance maps of clicks that have the same width and height as the input image. We use D to represent the distance map of positive (or negative) clicks. H and W represent the height and width of the input image. Then, the distance map can be calculated according to Equation (1)and , is the value of distance map D at the index of , S represents the positive (or negative) clicks set, represents the Euclidean distance between the point and the point p.

- (3)

- The input image is first concatenated with distance maps of positive and negative clicks, then input into the interactive segmentation network.

- (4)

- If the segmentation accuracy is qualified, the annotation process is over; otherwise, the user needs to provide extra clicks to correct the incorrectly segmented area until the accuracy is qualified or the number of clicks reaches the maximum limit of 20.

3.4.2. Network Architecture

3.4.3. Layer-by-Layer Multi-Head Feature Fusion

| Algorithm 1 The application process of the multi-head feature fusion |

Input: The high-level feature maps of shape , the low-level feature maps of shape Output: The fused feature map of shape

|

3.4.4. Upsampling Attention

- (1)

- Squeeze: Perform global average pooling and global max pooling on the high-level feature maps to obtain two feature vectors of shape , respectively.

- (2)

- Excitation: Perform nonlinear transformations on the two resulting feature vectors with two sequential fully connected layers to obtain two intermediate weight vectors of shape , then perform matrix addition and sigmoid operation on the two intermediate weight vectors to obtain the final weight vector of shape , of which each value represents the weight of low-level feature maps.

- (3)

- Scale: Use the weight vector to weight each channel of the low-level feature map.

4. Results

4.1. Training Settings

4.2. Evaluation Metrics

4.3. User Clicks Generation

- (1)

- The number of positive clicks needs to be between .

- (2)

- The positive clicks should be the pixels in the foreground, which are pixels far away from the background pixels and pixels far away from other positive clicks.

- (3)

- A new positive click comes from a candidate point set , as formulated in Equation (4).

- (1)

- The number of negative clicks needs to be between .

- (2)

- The negative clicks should be the pixels in the background, which are pixels far away from the foreground pixels and pixels far away from other negative clicks.

- (3)

- A new negative click comes from a candidate point set , as formulated in Equation (5).

4.4. Comparisons with Different Interactive Segmentation Models

4.5. Ablation Study

4.6. Visualization of Interactive Dairy Goat Image Segmentation

4.7. Design and Development of Dairy Goat Image-Annotation System

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Wang, D.; Tang, J.; Zhu, W.; Li, H.; Xin, J.; He, D. Dairy goat detection based on Faster R-CNN from surveillance video. Comput. Electron. Agric. 2018, 154, 443–449. [Google Scholar] [CrossRef]

- Su, Q.; Tang, J.; Zhai, J.; Sun, Y.; He, D. Automatic tracking of the dairy goat in the surveillance video. Comput. Electron. Agric. 2021, 187, 106254. [Google Scholar] [CrossRef]

- Su, Q.; Tang, J.; Zhai, M.; He, D. An intelligent method for dairy goat tracking based on Siamese network. Comput. Electron. Agric. 2022, 193, 106636. [Google Scholar] [CrossRef]

- Billah, M.; Wang, X.; Yu, J.; Jiang, Y. Real-time goat face recognition using convolutional neural network. Comput. Electron. Agric. 2022, 194, 106730. [Google Scholar] [CrossRef]

- Qiao, Y.; Truman, M.; Sukkarieh, S. Cattle segmentation and contour extraction based on Mask R-CNN for precision livestock farming. Comput. Electron. Agric. 2019, 165, 104958. [Google Scholar] [CrossRef]

- Qiao, Y.; Kong, H.; Clark, C.; Lomax, S.; Su, D.; Eiffert, S.; Sukkarieh, S. Intelligent perception for cattle monitoring: A review for cattle identification, body condition score evaluation, and weight estimation. Comput. Electron. Agric. 2021, 185, 106143. [Google Scholar] [CrossRef]

- Cominotte, A.; Fernandes, A.; Dorea, J.; Rosa, G.; Ladeira, M.; Van Cleef, E.; Pereira, G.; Baldassini, W.; Neto, O.M. Automated computer vision system to predict body weight and average daily gain in beef cattle during growing and finishing phases. Livest. Sci. 2020, 232, 103904. [Google Scholar] [CrossRef]

- Papadopoulos, D.P.; Uijlings, J.R.; Keller, F.; Ferrari, V. Extreme clicking for efficient object annotation. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4930–4939. [Google Scholar] [CrossRef]

- Maninis, K.K.; Caelles, S.; Pont-Tuset, J.; Van Gool, L. Deep extreme cut: From extreme points to object segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 616–625. [Google Scholar] [CrossRef]

- Xu, N.; Price, B.; Cohen, S.; Yang, J.; Huang, T. Deep grabcut for object selection. arXiv 2017, arXiv:1707.00243. [Google Scholar] [CrossRef]

- Liew, J.; Wei, Y.; Xiong, W.; Ong, S.H.; Feng, J. Regional interactive image segmentation networks. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; IEEE: Piscataway, NJ, USA; pp. 2746–2754. [Google Scholar] [CrossRef]

- Jiang, M.; Rao, Y.; Zhang, J.; Shen, Y. Automatic behavior recognition of group-housed goats using deep learning. Comput. Electron. Agric. 2020, 177, 105706. [Google Scholar] [CrossRef]

- Zhang, S.; Liew, J.H.; Wei, Y.; Wei, S.; Zhao, Y. Interactive object segmentation with inside-outside guidance. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 12234–12244. [Google Scholar] [CrossRef]

- Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818. [Google Scholar] [CrossRef]

- Lin, Z.; Zhang, Z.; Chen, L.Z.; Cheng, M.M.; Lu, S.P. Interactive image segmentation with first click attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 13339–13348. [Google Scholar] [CrossRef]

- Boykov, Y.Y.; Jolly, M.P. Interactive graph cuts for optimal boundary & region segmentation of objects in ND images. In Proceedings of the Proceedings Eighth IEEE International Conference on Computer Vision, ICCV 2001, Vancouver, BC, Canada, 7–14 July 2001; IEEE: Piscataway, NJ, USA, 2001; Volume 1, pp. 105–112. [Google Scholar] [CrossRef]

- Boykov, Y.; Funka-Lea, G. Graph cuts and efficient nd image segmentation. Int. J. Comput. Vis. 2006, 70, 109–131. [Google Scholar] [CrossRef]

- Rother, C.; Kolmogorov, V.; Blake, A. “GrabCut” interactive foreground extraction using iterated graph cuts. ACM Trans. Graph. (TOG) 2004, 23, 309–314. [Google Scholar] [CrossRef]

- Grady, L. Random walks for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 1768–1783. [Google Scholar] [CrossRef] [PubMed]

- Kim, T.H.; Lee, K.M.; Lee, S.U. Generative image segmentation using random walks with restart. In Proceedings of the Computer Vision–ECCV 2008: 10th European Conference on Computer Vision, Marseille, France, 12–18 October 2008; Proceedings, Part III 10. Springer: Berlin/Heidelberg, Germany, 2008; pp. 264–275. [Google Scholar] [CrossRef]

- Adams, R.; Bischof, L. Seeded region growing. IEEE Trans. Pattern Anal. Mach. Intell. 1994, 16, 641–647. [Google Scholar] [CrossRef]

- Fan, M.; Lee, T.C. Variants of seeded region growing. IET Image Process. 2015, 9, 478–485. [Google Scholar] [CrossRef]

- Mehnert, A.; Jackway, P. An improved seeded region growing algorithm. Pattern Recognit. Lett. 1997, 18, 1065–1071. [Google Scholar] [CrossRef]

- Beare, R. Regularized seeded region growing. In Proceedings of the VIth International Symposium ISMM 2002, Sydney, Australia, 3–5 April 2002; CSIRO Publishing: Clayton, VIC, Australia, 2002; pp. 91–99. [Google Scholar] [CrossRef]

- Mortensen, E.N.; Barrett, W.A. Interactive segmentation with intelligent scissors. Graph. Model. Image Process. 1998, 60, 349–384. [Google Scholar] [CrossRef]

- Nguyen, T.N.A.; Cai, J.; Zhang, J.; Zheng, J. Robust interactive image segmentation using convex active contours. IEEE Trans. Image Process. 2012, 21, 3734–3743. [Google Scholar] [CrossRef]

- Goldstein, T.; Bresson, X.; Osher, S. Geometric applications of the split Bregman method: Segmentation and surface reconstruction. J. Sci. Comput. 2010, 45, 272–293. [Google Scholar] [CrossRef]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90. [Google Scholar] [CrossRef]

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556. [Google Scholar] [CrossRef]

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9. [Google Scholar] [CrossRef]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar] [CrossRef]

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708. [Google Scholar] [CrossRef]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587. [Google Scholar] [CrossRef]

- Girshick, R. Fast r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448. [Google Scholar] [CrossRef]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28–36. [Google Scholar] [CrossRef] [PubMed]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 779–788. [Google Scholar] [CrossRef]

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440. [Google Scholar] [CrossRef]

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. Segnet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495. [Google Scholar] [CrossRef] [PubMed]

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Semantic image segmentation with deep convolutional nets and fully connected crfs. arXiv 2014, arXiv:1412.7062. [Google Scholar] [CrossRef]

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848. [Google Scholar] [CrossRef]

- Chen, L.C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking atrous convolution for semantic image segmentation. arXiv 2017, arXiv:1706.05587. [Google Scholar] [CrossRef]

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969. [Google Scholar] [CrossRef]

- Wang, X.; Kong, T.; Shen, C.; Jiang, Y.; Li, L. Solo: Segmenting objects by locations. In Computer Vision–ECCV 2020: Proceedings of the 16th European Conference, Glasgow, UK, 23–28 August 2020, Proceedings, Part XVIII 16; Springer: Berlin/Heidelberg, Germany, 2020; pp. 649–665. [Google Scholar] [CrossRef]

- Wang, X.; Zhang, R.; Kong, T.; Li, L.; Shen, C. Solov2: Dynamic and fast instance segmentation. Adv. Neural Inf. Process. Syst. 2020, 33, 17721–17732. [Google Scholar]

- Kirillov, A.; He, K.; Girshick, R.; Rother, C.; Dollár, P. Panoptic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2019; pp. 9404–9413. [Google Scholar] [CrossRef]

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. Commun. ACM 2020, 63, 139–144. [Google Scholar] [CrossRef]

- Wei, S.E.; Ramakrishna, V.; Kanade, T.; Sheikh, Y. Convolutional pose machines. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4724–4732. [Google Scholar] [CrossRef]

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141. [Google Scholar] [CrossRef]

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. Cbam: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19. [Google Scholar] [CrossRef]

| Time | Environment | In Total | |

|---|---|---|---|

| Indoor | Outdoor | ||

| Morning (10:00–10:30) | 299 | 332 | 631 |

| Noon (12:00–12:30) | 367 | 401 | 768 |

| Evening (16:30–17:00) | 186 | 244 | 430 |

| In total | 852 | 977 | 1829 |

| Postures | Environment | In Total | |

|---|---|---|---|

| Indoor | Outdoor | ||

| Standing | 246 | 366 | 612 |

| Laying down | 306 | 261 | 567 |

| In total | 552 | 627 | 1179 |

| Number | Shooting Method | In Total | |

|---|---|---|---|

| Fixed Camera | Mobile Phone | ||

| 1 | 533 | 1179 | 1712 |

| 2 | 658 | 0 | 658 |

| 3 | 324 | 0 | 324 |

| 4 | 201 | 0 | 201 |

| 5 | 73 | 0 | 73 |

| >5 | 40 | 0 | 40 |

| Configuration | Value |

|---|---|

| CPU | Intel(R) Xeon(R) Silver 4210 CPU @ 2.20 GHz |

| GPU | Nvidia GeForce RTX 2080 Ti [11 GB] |

| RAM | 125 GB |

| Hard Disk | 10 TB |

| OS | Ubuntu 16.04 (64-bit) |

| Language | python 3.7 |

| Cuda version | 10.2 |

| Framework | Pytorch 1.8 |

| Model | mNoC@85↓ | mNoC@90↓ |

|---|---|---|

| RIS-Net | 5.42 | 8.13 |

| DEXTR | 4 | 6.27 |

| IOG | 3 | 5 |

| FCANet | 2.73 | 6.59 |

| UA-MHFF-DeepLabv3+(Ours) | 1.87 | 4.11 |

| # | DeepLabv3+ | MHFF | UA | mNoC@85↓ | mNoC@90↓ |

|---|---|---|---|---|---|

| 1 | ✓ | ✗ | ✗ | 2.51 | 4.73 |

| 2 | ✓ | ✓ | ✗ | 2.13 | 4.40 |

| 3 | ✓ | ✗ | ✓ | 2.20 | 4.35 |

| 4 | ✓ | ✓ | ✓ | 1.87 | 4.11 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, L.; Han, G.; Qiao, Y.; Xu, L.; Chen, L.; Tang, J. Interactive Dairy Goat Image Segmentation for Precision Livestock Farming. Animals 2023, 13, 3250. https://doi.org/10.3390/ani13203250

Zhang L, Han G, Qiao Y, Xu L, Chen L, Tang J. Interactive Dairy Goat Image Segmentation for Precision Livestock Farming. Animals. 2023; 13(20):3250. https://doi.org/10.3390/ani13203250

Chicago/Turabian StyleZhang, Lianyue, Gaoge Han, Yongliang Qiao, Liu Xu, Ling Chen, and Jinglei Tang. 2023. "Interactive Dairy Goat Image Segmentation for Precision Livestock Farming" Animals 13, no. 20: 3250. https://doi.org/10.3390/ani13203250