Deep Learning-Based Automated Approach for Determination of Pig Carcass Traits

Abstract

Simple Summary

1. Introduction

2. Experimental Materials and Methods

2.1. Experimental Materials

2.2. Data Processing

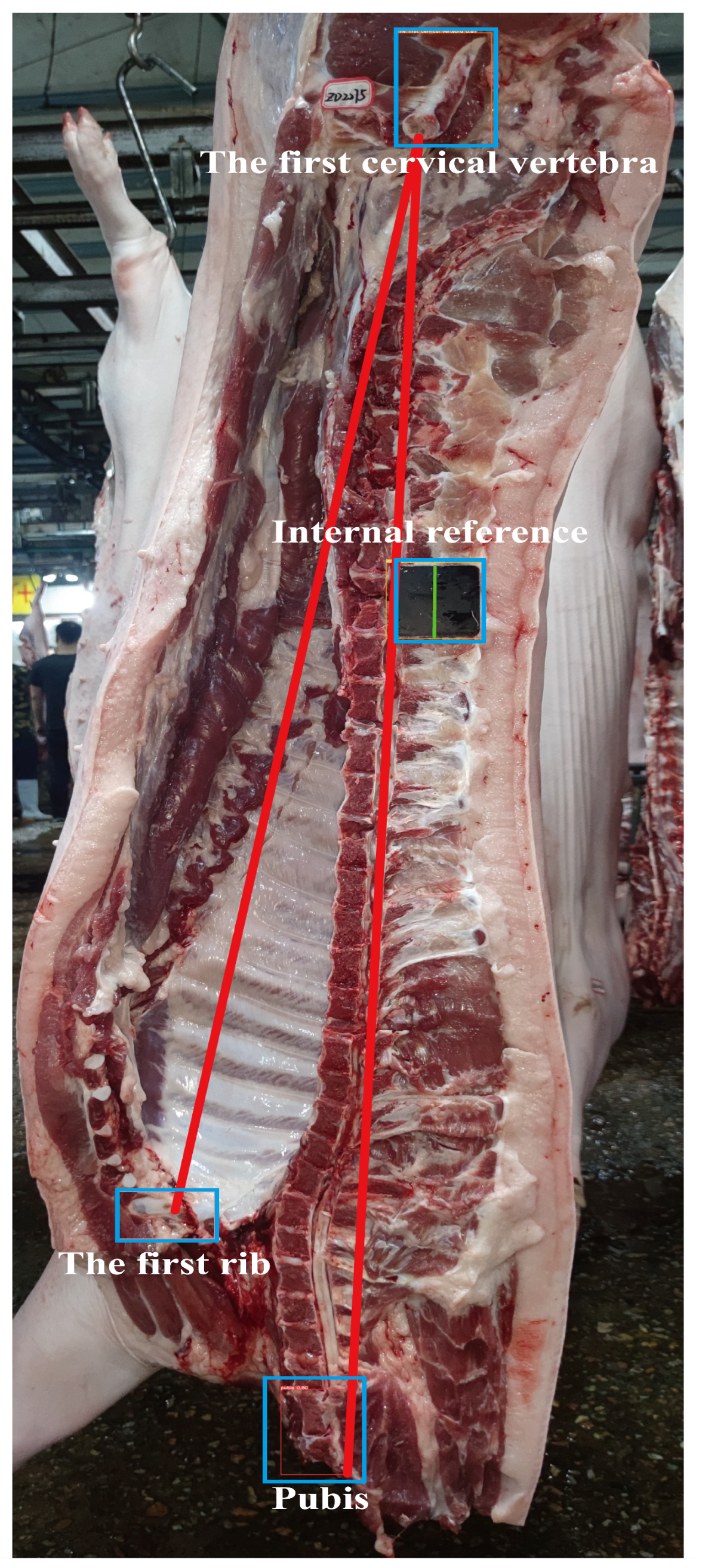

2.3. Construction of Determination Models

2.4. Model Evaluation

2.5. Statistical Analysis

3. Results and Analysis

3.1. Evaluation and Comparison of Model Performance

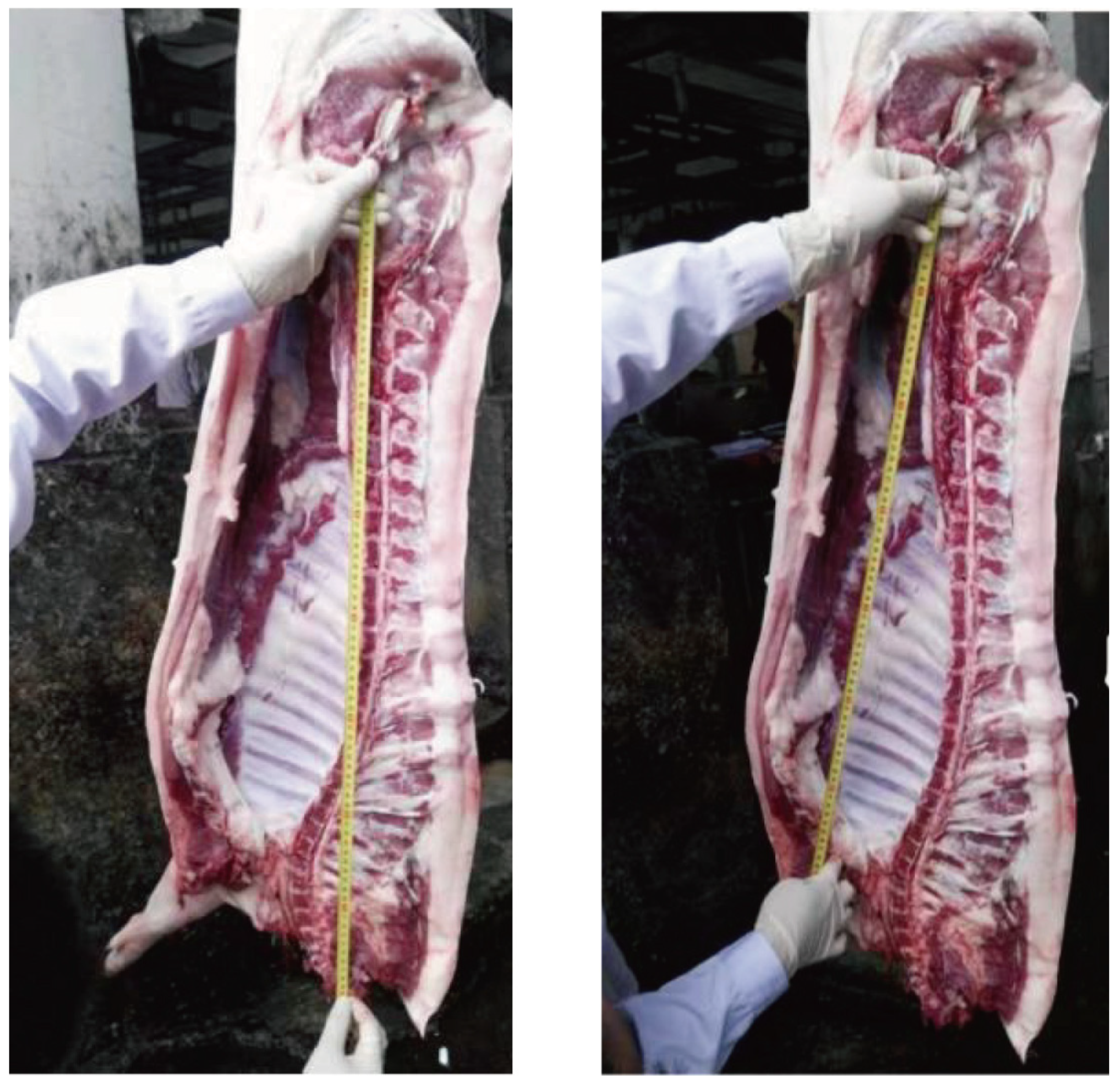

3.2. Results of Training the Carcass Length Determination Model and Comparative Analysis

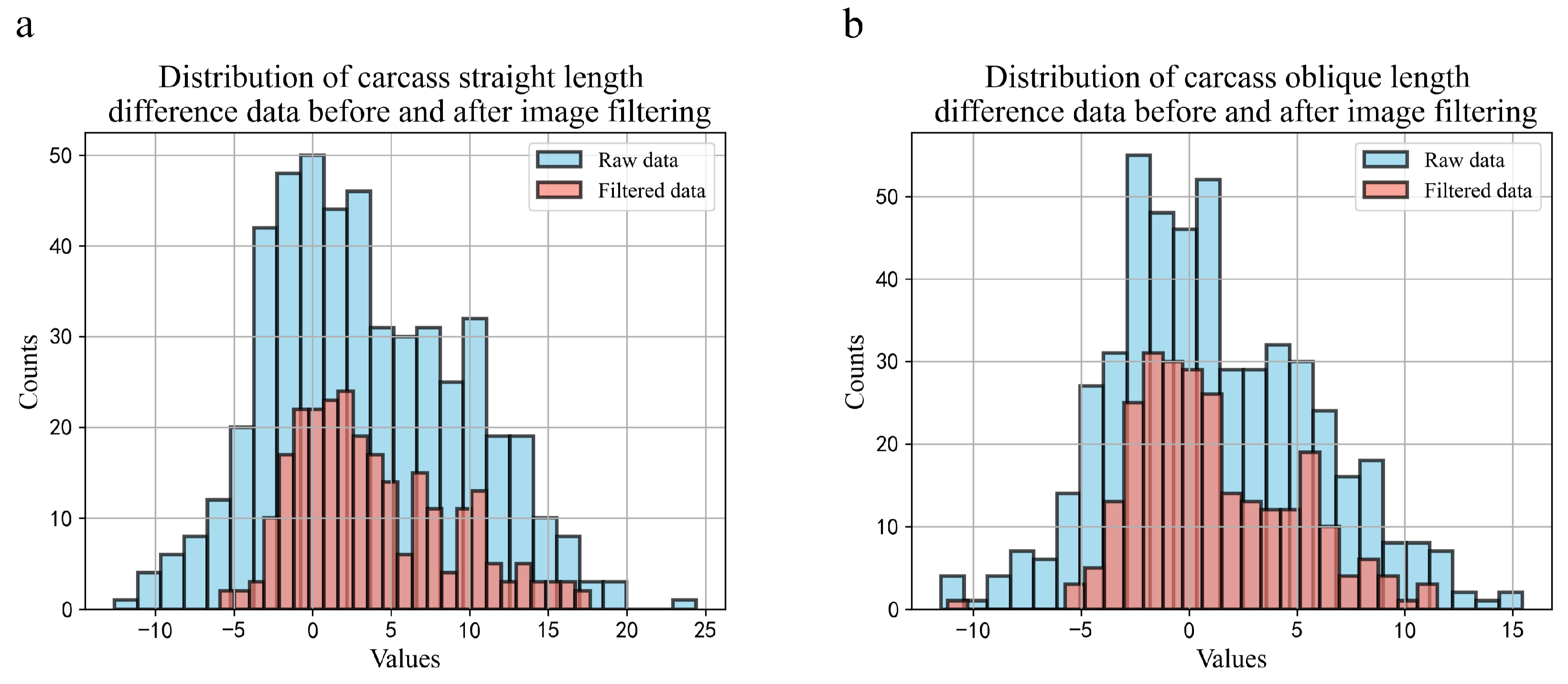
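The detection classes compared in the detection results table below (pubis, the first rib, the first cervical vertebra, and an internal reference) suggest one way a length can be read from an image: detect two anatomical landmarks, measure the pixel distance between them, and convert pixels to centimetres using an object of known size. The sketch below illustrates that idea under stated assumptions and is not the authors' procedure. It treats each landmark as the centre of its bounding box. It assumes the internal reference has a known length along the longer side of its box and lies in the same plane as the carcass, so no perspective correction is applied. The coordinates and the 10 cm reference length are hypothetical, and which landmark pair defines a given carcass length is left open.

```python
import math
from typing import Tuple

# Axis-aligned bounding box in pixels: (x_min, y_min, x_max, y_max).
Box = Tuple[float, float, float, float]


def box_center(box: Box) -> Tuple[float, float]:
    """Return the centre point of a bounding box."""
    x0, y0, x1, y1 = box
    return ((x0 + x1) / 2.0, (y0 + y1) / 2.0)


def cm_per_pixel(reference_box: Box, reference_length_cm: float) -> float:
    """Derive an image scale (cm per pixel) from a reference object.

    Assumption: the reference object's known length lies along the
    longer side of its detected box, in the same plane as the carcass.
    """
    x0, y0, x1, y1 = reference_box
    longest_side_px = max(x1 - x0, y1 - y0)
    if longest_side_px <= 0:
        raise ValueError("reference box has zero extent")
    return reference_length_cm / longest_side_px


def landmark_distance_cm(a: Box, b: Box, scale: float) -> float:
    """Straight-line distance between two landmark centres, in cm."""
    (ax, ay), (bx, by) = box_center(a), box_center(b)
    return math.hypot(bx - ax, by - ay) * scale


if __name__ == "__main__":
    # Hypothetical detections for one carcass image (pixels).
    pubis = (100.0, 1900.0, 140.0, 1940.0)
    first_rib = (100.0, 900.0, 140.0, 940.0)
    reference = (600.0, 100.0, 700.0, 120.0)  # hypothetical 10 cm marker

    scale = cm_per_pixel(reference, reference_length_cm=10.0)
    print(f"{landmark_distance_cm(pubis, first_rib, scale):.1f} cm")  # 100.0 cm
```

Using box centres keeps the sketch simple; a real pipeline might instead anchor on a specific box edge if the measurement standard refers to, for example, the anterior edge of a bone.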

3.3. Comparative Analysis of Data Distribution before and after Image Filtering

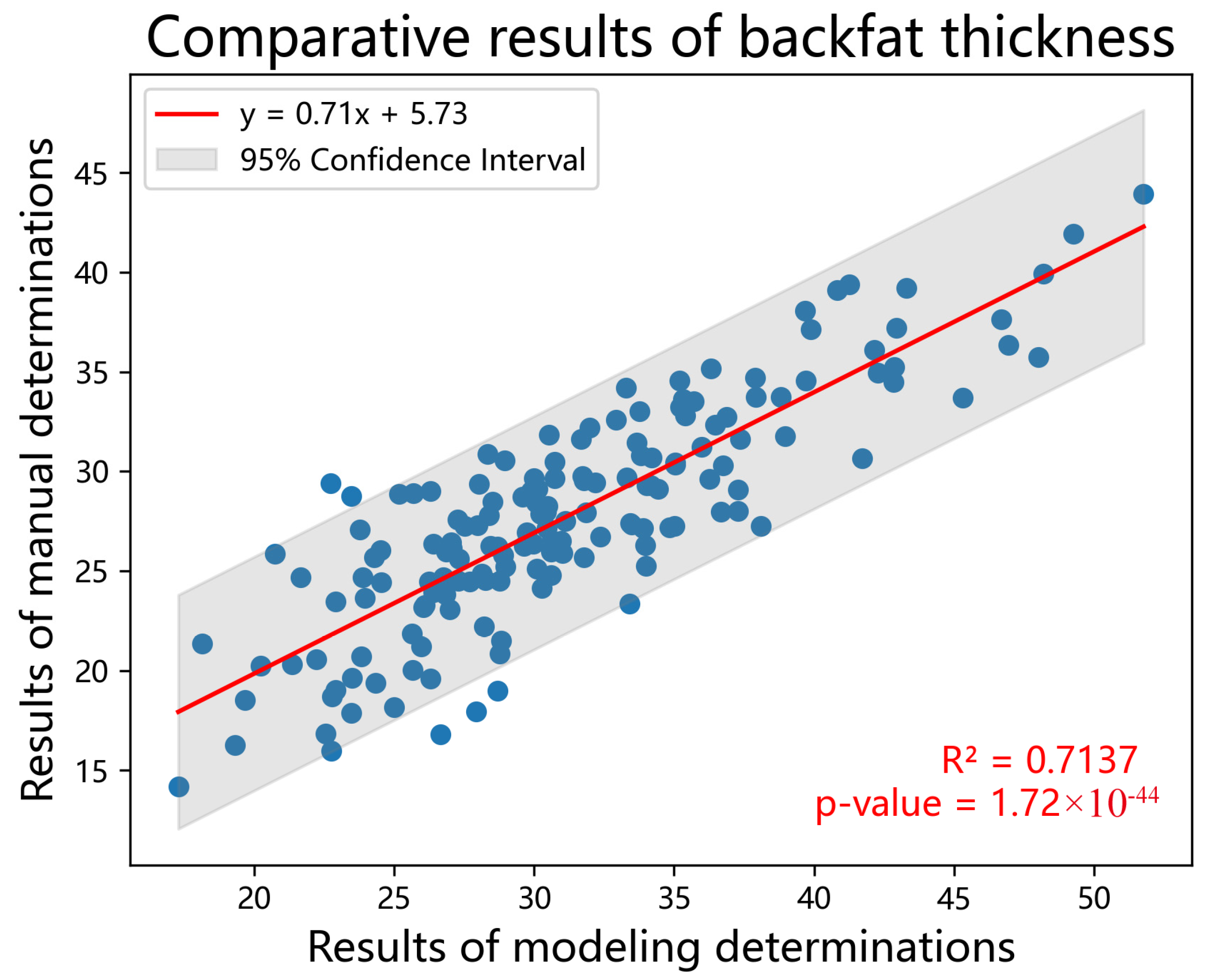

3.4. Training Results of the Backfat Segmentation Model and Comparative Analysis

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Model | Class | Mean Average Precision (%) | Parameters (M) | FLOPs (G) |
|---|---|---|---|---|
| SSD | Pubis | 97.0 | 24.15 | 137.75 |
| | The first rib | 94.5 | | |
| | The first cervical vertebra | 98.8 | | |
| | Internal reference | 99.1 | | |
| Faster R-CNN | Pubis | 58.5 | 41.14 | 78.13 |
| | The first rib | 37.5 | | |
| | The first cervical vertebra | 25.0 | | |
| | Internal reference | 52.6 | | |
| YOLOv5n | Pubis | 96.3 | 1.76 | 4.2 |
| | The first rib | 97.9 | | |
| | The first cervical vertebra | 99.0 | | |
| | Internal reference | 99.4 | | |
| YOLOv8n | Pubis | 98.2 | 3.01 | 8.1 |
| | The first rib | 99.4 | | |
| | The first cervical vertebra | 99.1 | | |
| | Internal reference | 99.5 | | |
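The detection table lists one value per landmark class under the heading Mean Average Precision, but it does not state the IoU threshold or how the precision-recall curve was interpolated. As an illustration only, the sketch below assumes the all-point interpolated area under each class's precision-recall curve (the Pascal VOC convention from 2010 onward) and then takes the unweighted mean across classes. The function names are hypothetical.

```python
from typing import Dict, Sequence


def average_precision(recall: Sequence[float], precision: Sequence[float]) -> float:
    """All-point interpolated AP for a single class.

    `recall` and `precision` are cumulative values obtained after ranking
    detections by descending confidence, so `recall` is non-decreasing.
    """
    # Sentinels so the curve starts at recall 0 and ends at recall 1.
    r = [0.0] + list(recall) + [1.0]
    p = [0.0] + list(precision) + [0.0]
    # Precision envelope: make precision non-increasing from right to left.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum rectangle areas; steps where recall does not change add zero.
    ap = 0.0
    for i in range(1, len(r)):
        ap += (r[i] - r[i - 1]) * p[i]
    return ap


def mean_average_precision(per_class_ap: Dict[str, float]) -> float:
    """Unweighted mean of per-class AP values."""
    if not per_class_ap:
        raise ValueError("no classes given")
    return sum(per_class_ap.values()) / len(per_class_ap)


if __name__ == "__main__":
    # Toy ranked detections for one class: recall rises, precision falls.
    print(average_precision([0.5, 1.0], [1.0, 0.5]))  # 0.75
    # Per-class YOLOv8n values taken from the table above.
    yolov8n = {"Pubis": 98.2, "The first rib": 99.4,
               "The first cervical vertebra": 99.1, "Internal reference": 99.5}
    print(round(mean_average_precision(yolov8n), 2))
```

For instance, averaging the four YOLOv8n class values gives (98.2 + 99.4 + 99.1 + 99.5) / 4 = 99.05. Other toolkits use 11-point or 101-point interpolation, which can shift AP slightly, so values computed this way are only comparable when the same convention is used throughout.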

| Model | Mean Accuracy (%) | Mean IoU (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|---|
| YOLOv8n-seg | 97.23 | 89.10 | 96.86 | 97.23 | 97.03 |
| U-Net | 96.83 | 86.37 | 95.70 | 96.83 | 96.22 |
| PSP-Net | 93.29 | 79.70 | 95.04 | 93.29 | 94.09 |
| DeepLabv3 | 96.52 | 84.81 | 95.02 | 96.52 | 95.73 |
| YOLOv5n-seg | 97.02 | 88.97 | 96.15 | 97.08 | 96.89 |
| FCN | 92.02 | 69.65 | 89.56 | 92.02 | 90.45 |
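The segmentation table reports Mean Accuracy, Mean IoU, Precision, Recall and F1-Score without defining them. A common convention computes each metric per class from a pixel-level confusion matrix and then averages over classes; under that convention Mean Accuracy and Recall coincide, which matches five of the six rows above. The sketch below assumes that convention, a two-class labelling (background and backfat), and an F1-Score averaged per class rather than computed from the averaged precision and recall. None of these choices is confirmed by the source, and the function names are hypothetical.

```python
from typing import Dict, List, Sequence


def confusion_matrix(truth: Sequence[int], pred: Sequence[int],
                     num_classes: int) -> List[List[int]]:
    """cm[t][p] counts pixels whose true class is t and predicted class is p."""
    if len(truth) != len(pred):
        raise ValueError("truth and pred must have the same length")
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(truth, pred):
        cm[t][p] += 1
    return cm


def _safe_div(num: float, den: float) -> float:
    """Division that returns 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def segmentation_metrics(cm: List[List[int]]) -> Dict[str, float]:
    """Per-class metrics averaged over classes (macro average), in percent."""
    n = len(cm)
    recalls, precisions, ious, f1s = [], [], [], []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                       # pixels of class c that were missed
        fp = sum(cm[r][c] for r in range(n)) - tp  # other pixels labelled as class c
        recall = _safe_div(tp, tp + fn)
        precision = _safe_div(tp, tp + fp)
        recalls.append(recall)
        precisions.append(precision)
        ious.append(_safe_div(tp, tp + fp + fn))
        f1s.append(_safe_div(2 * precision * recall, precision + recall))

    def pct_mean(values: List[float]) -> float:
        return 100.0 * sum(values) / n

    return {
        # With per-class accuracy defined as TP / (TP + FN), this equals macro recall.
        "mean_accuracy": pct_mean(recalls),
        "mean_iou": pct_mean(ious),
        "precision": pct_mean(precisions),
        "recall": pct_mean(recalls),
        "f1": pct_mean(f1s),
    }


if __name__ == "__main__":
    # Toy 8-pixel image; 0 = background, 1 = backfat (assumed labels).
    truth = [0, 0, 0, 0, 1, 1, 1, 1]
    pred = [0, 0, 0, 1, 1, 1, 1, 0]
    print(segmentation_metrics(confusion_matrix(truth, pred, num_classes=2)))
```

Averaging F1 per class and computing F1 from the averaged precision and recall give slightly different numbers, which is one reason published F1 values may not be exactly reproducible from the Precision and Recall columns alone.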

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wei, J.; Wu, Y.; Tang, X.; Liu, J.; Huang, Y.; Wu, Z.; Li, X.; Zhang, Z. Deep Learning-Based Automated Approach for Determination of Pig Carcass Traits. Animals 2024, 14, 2421. https://doi.org/10.3390/ani14162421

Chicago/Turabian Style: Wei, Jiacheng, Yan Wu, Xi Tang, Jinxiu Liu, Yani Huang, Zhenfang Wu, Xinyun Li, and Zhiyan Zhang. 2024. "Deep Learning-Based Automated Approach for Determination of Pig Carcass Traits" Animals 14, no. 16: 2421. https://doi.org/10.3390/ani14162421