Improving the Accuracy of Automatic Facial Expression Recognition in Speaking Subjects with Deep Learning

Abstract

1. Introduction

2. Background and Related Works

2.1. What is an Expression?

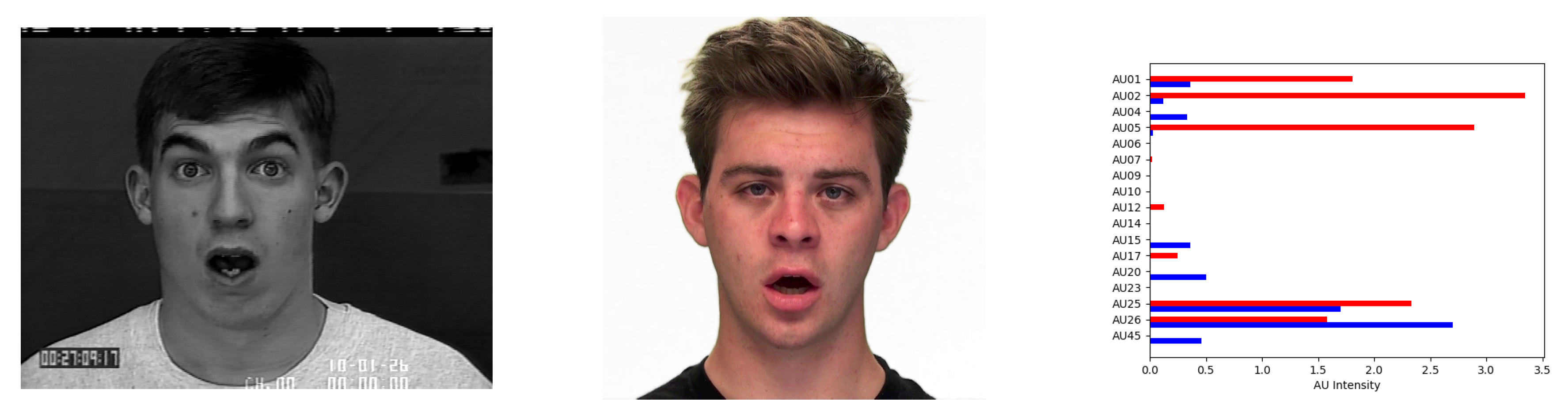

2.2. Problems with the Speaker

3. Method and Experimental Setting

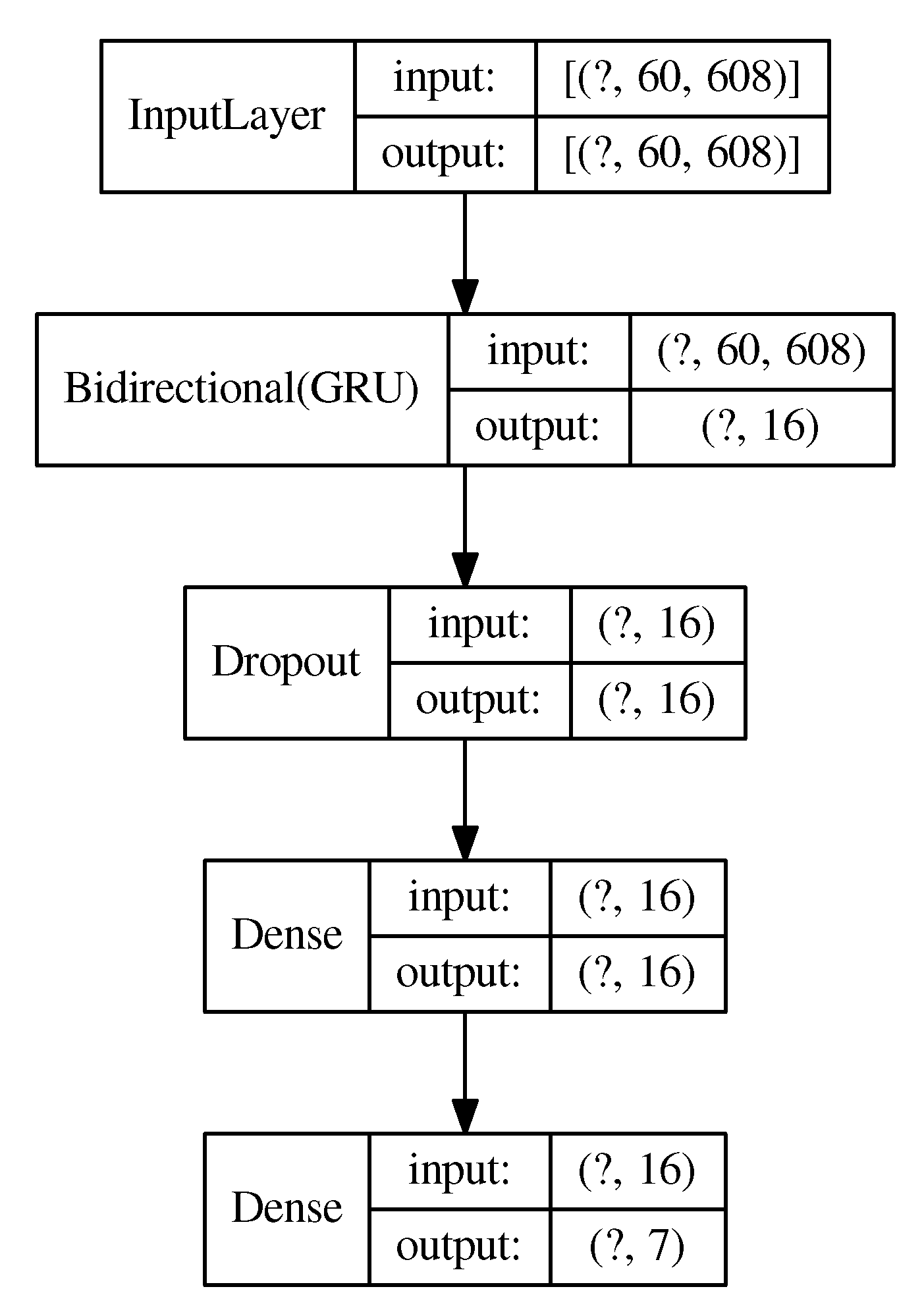

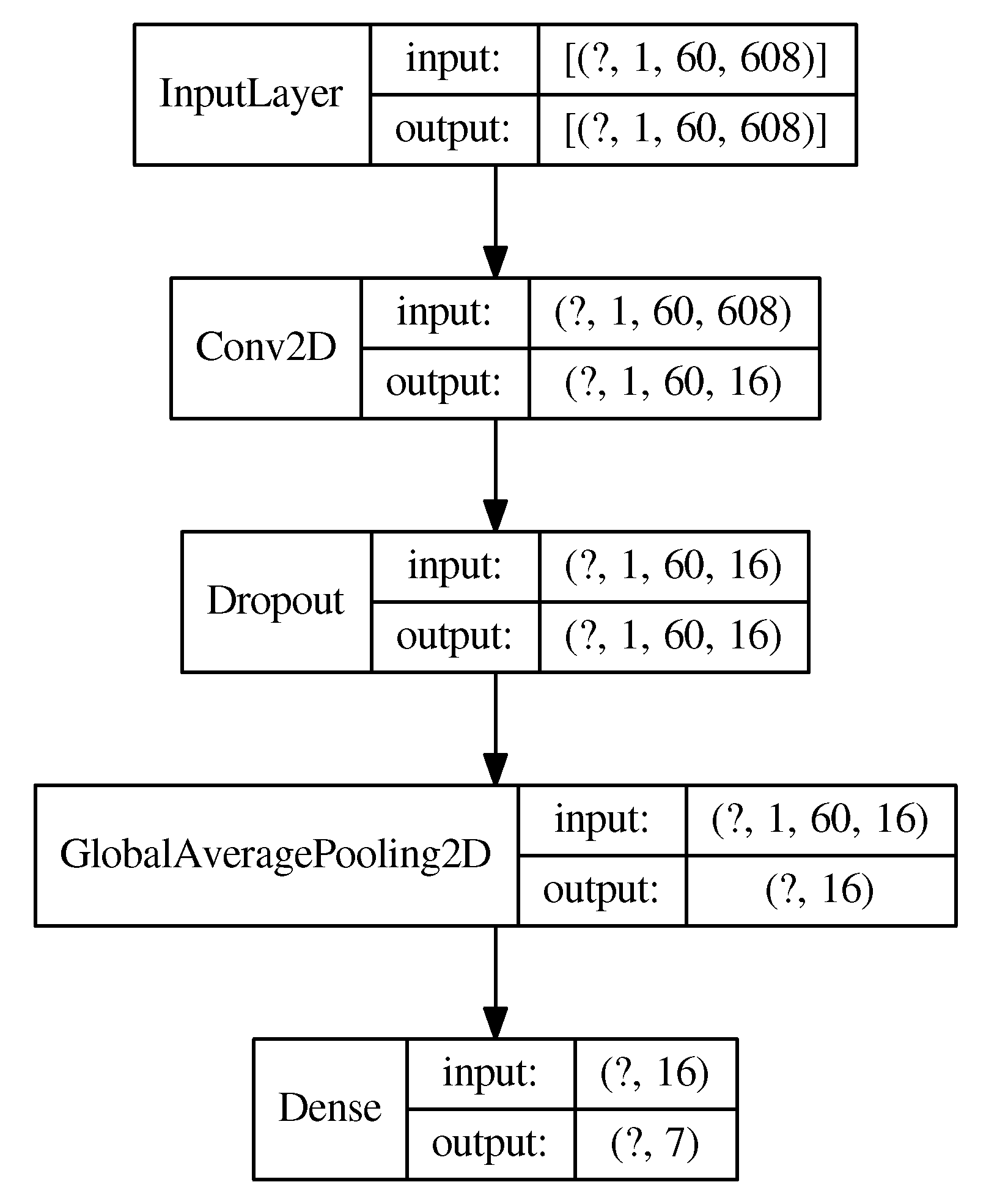

3.1. Models

3.2. Dataset

3.3. Implementation Details

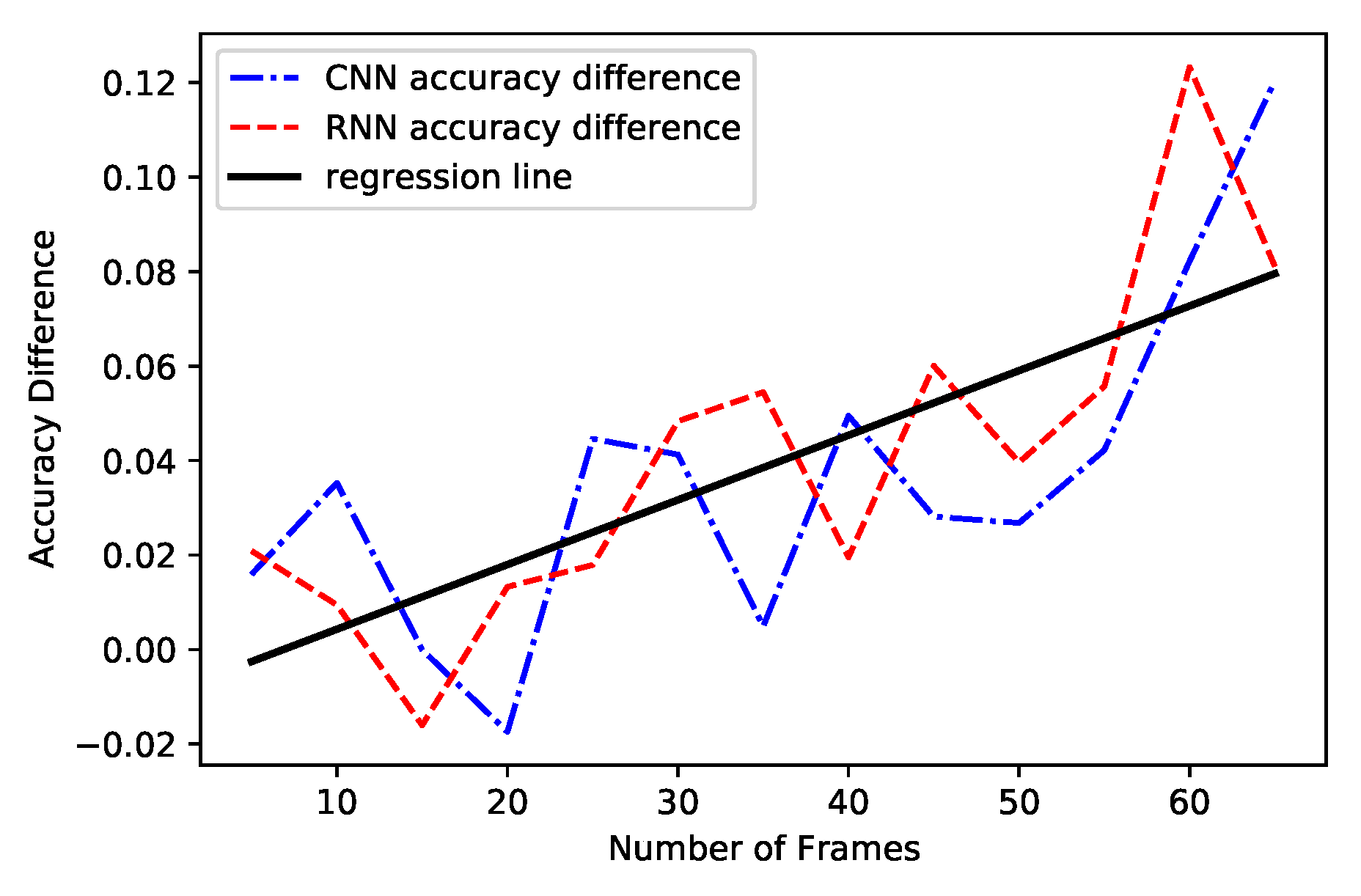

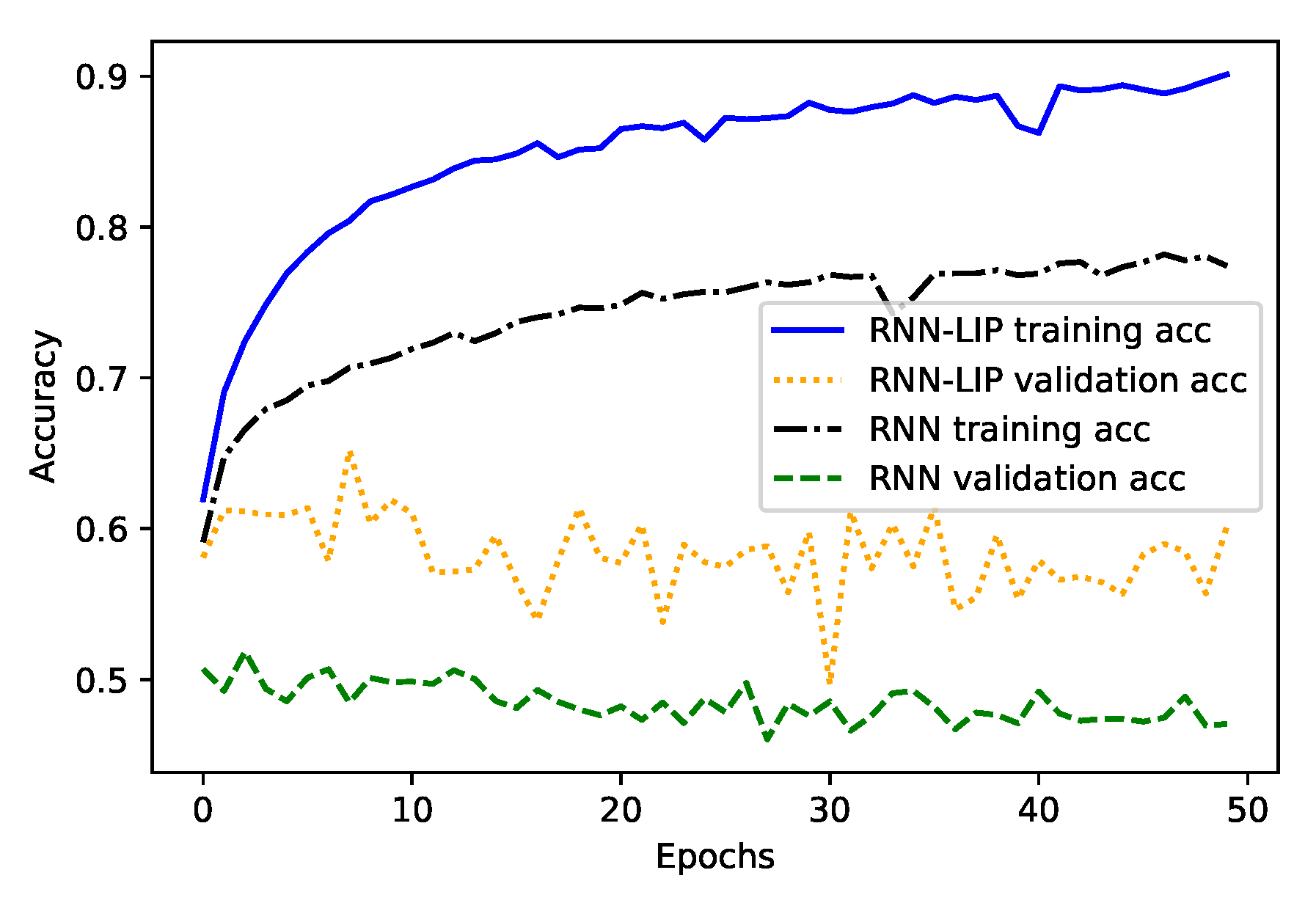

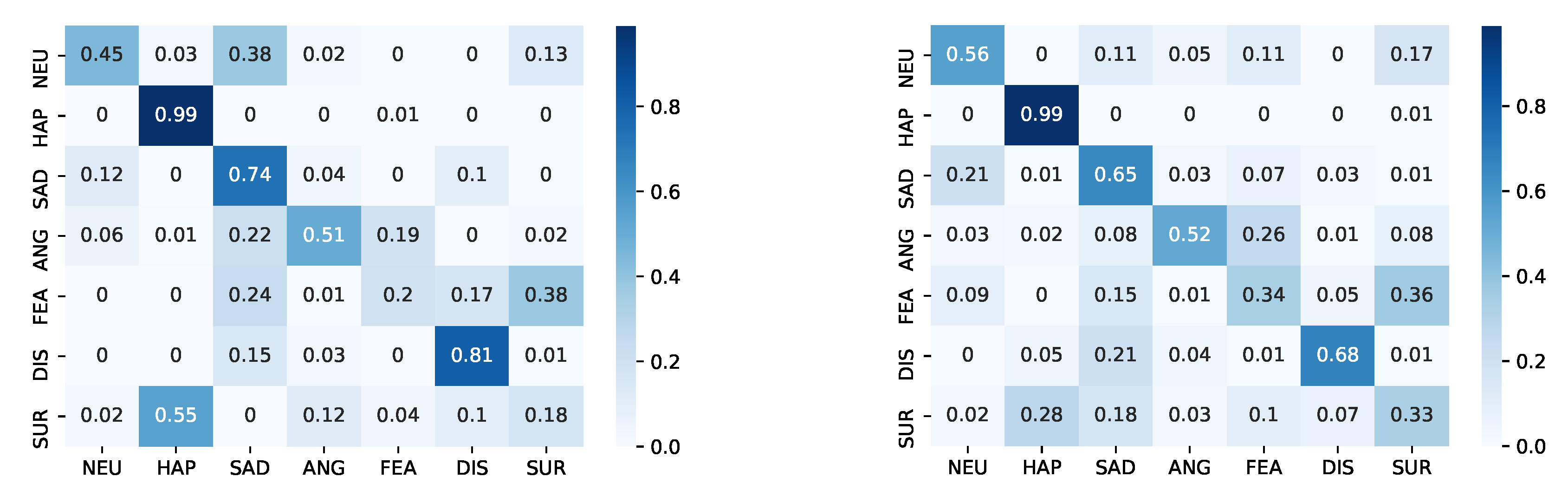

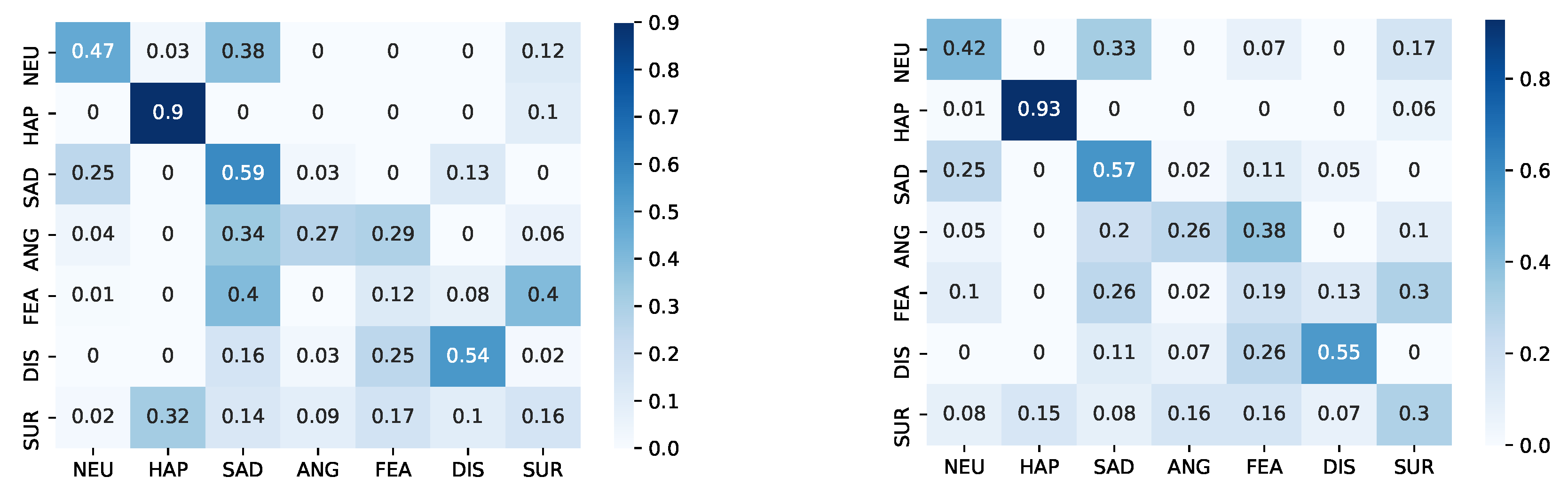

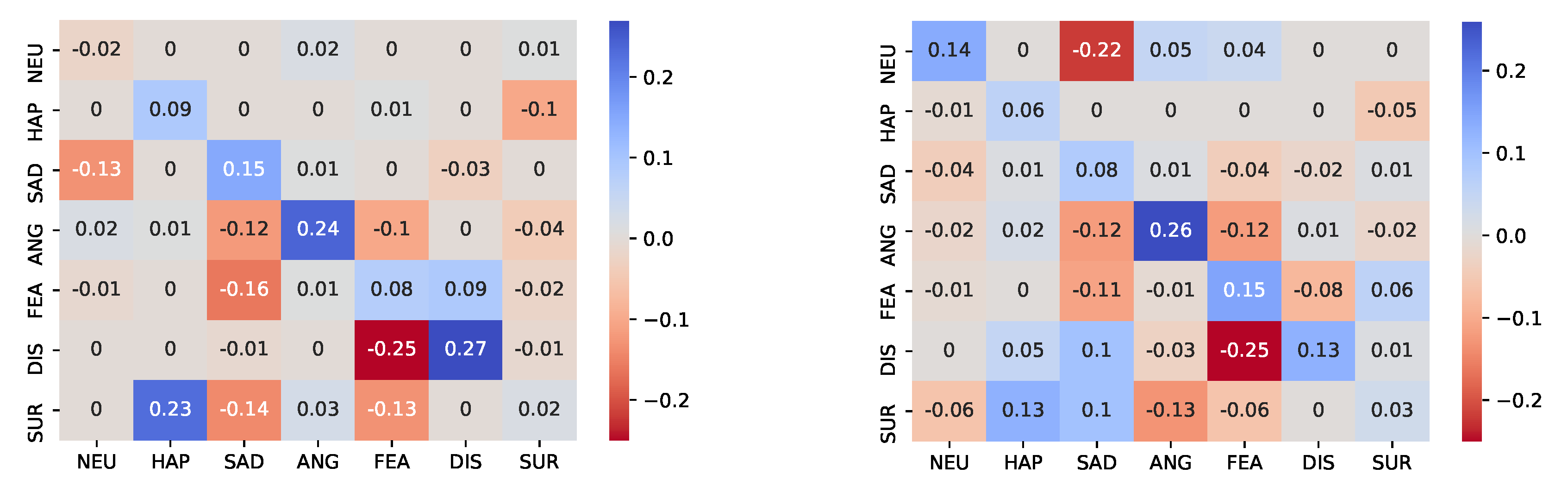

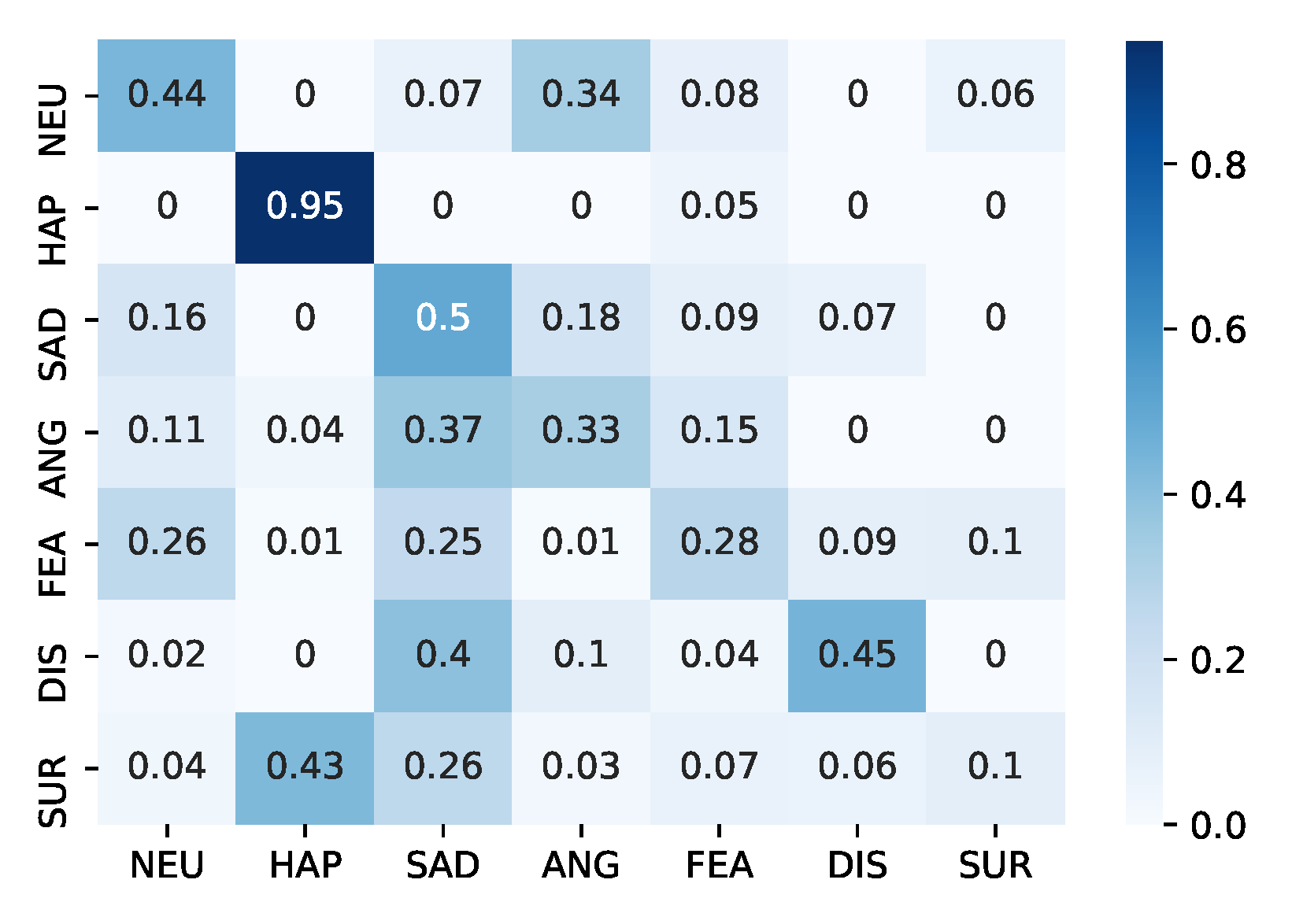

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Frames | CNN | CNN Lip | RNN | RNN Lip | Frame |
|---|---|---|---|---|---|
| 5 | 0.429 | 0.446 | 0.423 | 0.444 | 0.413 |
| 10 | 0.413 | 0.448 | 0.443 | 0.452 | 0.421 |
| 15 | 0.458 | 0.458 | 0.458 | 0.442 | 0.431 |
| 20 | 0.459 | 0.442 | 0.462 | 0.475 | 0.437 |
| 25 | 0.465 | 0.509 | 0.452 | 0.470 | 0.439 |
| 30 | 0.475 | 0.516 | 0.465 | 0.513 | 0.441 |
| 35 | 0.479 | 0.485 | 0.476 | 0.530 | 0.449 |
| 40 | 0.459 | 0.509 | 0.485 | 0.505 | 0.450 |
| 45 | 0.452 | 0.479 | 0.473 | 0.533 | 0.457 |
| 50 | 0.465 | 0.492 | 0.479 | 0.519 | 0.449 |
| 55 | 0.466 | 0.508 | 0.482 | 0.538 | 0.444 |
| 60 | 0.462 | 0.544 | 0.470 | 0.594 | 0.447 |
| 65 | 0.448 | 0.569 | 0.482 | 0.563 | 0.454 |
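The table above can be hard to scan by eye, so the following is a minimal sketch for summarizing it. It assumes the values are accuracy scores (the table in this excerpt does not label them) and that the "Lip" columns are variants of the CNN and RNN columns; the names `FRAMES`, `SCORES`, `best`, and `wins` are hypothetical helpers introduced only for illustration.

```python
# Values copied verbatim from the table above.
# Assumption: each value is an accuracy score for the given number of frames.
FRAMES = [5, 10, 15, 20, 25, 30, 35, 40, 45, 50, 55, 60, 65]
SCORES = {
    "CNN":     [0.429, 0.413, 0.458, 0.459, 0.465, 0.475, 0.479,
                0.459, 0.452, 0.465, 0.466, 0.462, 0.448],
    "CNN Lip": [0.446, 0.448, 0.458, 0.442, 0.509, 0.516, 0.485,
                0.509, 0.479, 0.492, 0.508, 0.544, 0.569],
    "RNN":     [0.423, 0.443, 0.458, 0.462, 0.452, 0.465, 0.476,
                0.485, 0.473, 0.479, 0.482, 0.470, 0.482],
    "RNN Lip": [0.444, 0.452, 0.442, 0.475, 0.470, 0.513, 0.530,
                0.505, 0.533, 0.519, 0.538, 0.594, 0.563],
    "Frame":   [0.413, 0.421, 0.431, 0.437, 0.439, 0.441, 0.449,
                0.450, 0.457, 0.449, 0.444, 0.447, 0.454],
}


def best(column):
    """Return (frames, score) for the highest score in a column."""
    scores = SCORES[column]
    i = max(range(len(scores)), key=scores.__getitem__)
    return FRAMES[i], scores[i]


def wins(variant, baseline):
    """Count rows where `variant` scores strictly higher than `baseline`."""
    return sum(v > b for v, b in zip(SCORES[variant], SCORES[baseline]))


if __name__ == "__main__":
    for name in SCORES:
        frames, score = best(name)
        print(f"{name:8s} best {score:.3f} at {frames} frames")
    for variant, baseline in [("CNN Lip", "CNN"), ("RNN Lip", "RNN")]:
        n = wins(variant, baseline)
        print(f"{variant} beats {baseline} in {n} of {len(FRAMES)} rows")
```

On these numbers, the highest value in the table is 0.594 (RNN Lip at 60 frames), and the "Lip" column exceeds its counterpart in 11 of 13 rows for the CNN pair and 12 of 13 rows for the RNN pair.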

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bursic, S.; Boccignone, G.; Ferrara, A.; D’Amelio, A.; Lanzarotti, R. Improving the Accuracy of Automatic Facial Expression Recognition in Speaking Subjects with Deep Learning. Appl. Sci. 2020, 10, 4002. https://doi.org/10.3390/app10114002

Bursic S, Boccignone G, Ferrara A, D’Amelio A, Lanzarotti R. Improving the Accuracy of Automatic Facial Expression Recognition in Speaking Subjects with Deep Learning. Applied Sciences. 2020; 10(11):4002. https://doi.org/10.3390/app10114002

Chicago/Turabian Style: Bursic, Sathya, Giuseppe Boccignone, Alfio Ferrara, Alessandro D’Amelio, and Raffaella Lanzarotti. 2020. "Improving the Accuracy of Automatic Facial Expression Recognition in Speaking Subjects with Deep Learning" Applied Sciences 10, no. 11: 4002. https://doi.org/10.3390/app10114002

APA Style: Bursic, S., Boccignone, G., Ferrara, A., D’Amelio, A., & Lanzarotti, R. (2020). Improving the Accuracy of Automatic Facial Expression Recognition in Speaking Subjects with Deep Learning. Applied Sciences, 10(11), 4002. https://doi.org/10.3390/app10114002