The Application and Improvement of Deep Neural Networks in Environmental Sound Recognition

Abstract

Featured Application


1. Introduction

2. Related Works

2.1. Environmental Sound Recognition

2.2. Review of Neural Networks

2.3. Feed-Forward Neural Network

2.4. Convolutional Neural Networks

2.5. Convolutional Layers

2.6. Activation Layers

2.7. Pooling Layers

2.8. Fully Connected Layers

2.9. Loss Function

2.10. Model Initialization

2.11. Batch Normalization

3. Method Development

3.1. Data Sets

3.2. Data Preprocessing

3.3. Data Augmentation

3.4. Network Customization

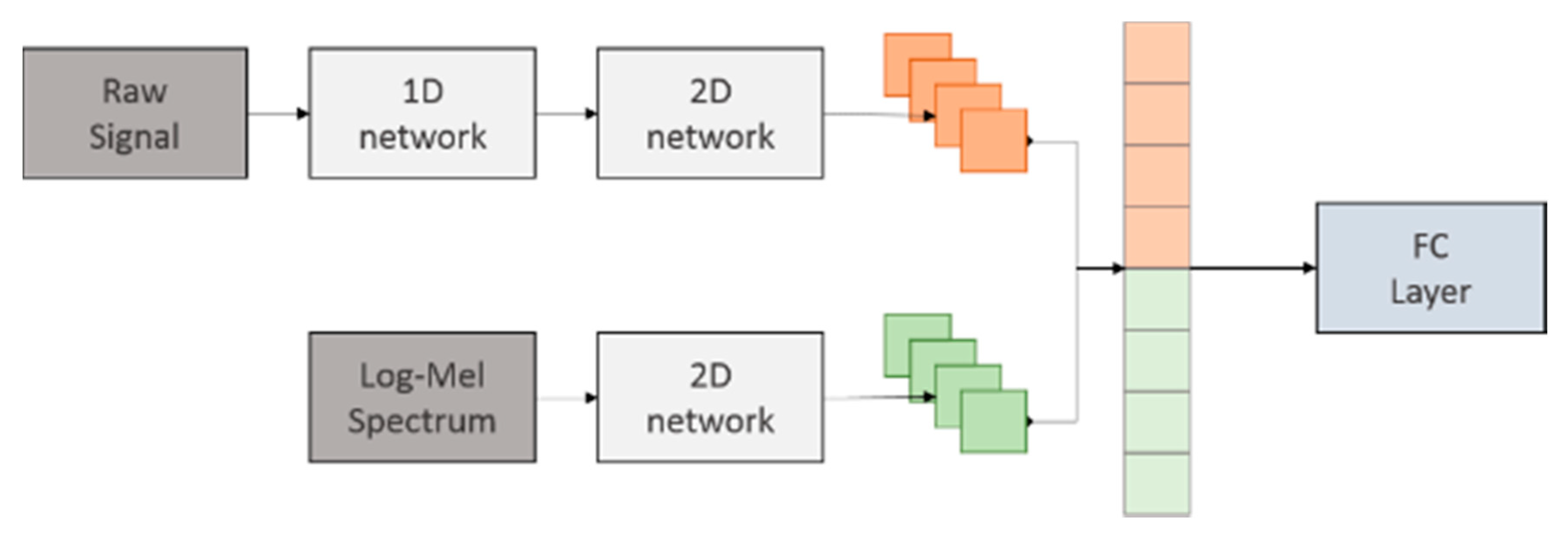

3.5. Network Parallelization

4. Results and Discussion

4.1. Experiment Setup

- Signal length per frame in the 1D network

- Depth of the 1D network

- Number of channels (filters) in the 1D network (the height of the generated map)

- Kernel shape in the 2D network

- The effect of pre-training before parallelization

- The effect of data augmentation

4.2. Architecture of the 1D Network

4.3. Network Depth

4.4. Number of Filters

4.5. Architecture of the 2D Network

4.6. Parallel Network: The Effect of Pre-Training

4.7. Data Augmentation

4.8. Network Conclusion

- Our proposed method is an end-to-end system that achieves 81.55% accuracy on ESC50.

- Our proposed 1D-2D network extracts features directly from the raw audio signal, with accuracy (73.55% on ESC50, 90.00% on ESC10) competitive with earlier raw-waveform models.

- Our proposed ParallelNet raises performance by combining multiple types of input features (the raw audio signal and the log-mel spectrogram).

5. Conclusions and Perspectives

Author Contributions

Funding

Conflicts of Interest

References

1. Chen, J.; Cham, A.H.; Zhang, J.; Liu, N.; Shue, L. Bathroom Activity Monitoring Based on Sound. In Proceedings of the International Conference on Pervasive Computing, Munich, Germany, 8–13 May 2005.

2. Weninger, F.; Schuller, B. Audio Recognition in the Wild: Static and Dynamic Classification on a Real-World Database of Animal Vocalizations. In Proceedings of the Acoustics, Speech and Signal Processing (ICASSP) 2011 IEEE International Conference, Prague, Czech Republic, 22–27 May 2011.

3. Clavel, C.; Ehrette, T.; Richard, G. Events detection for an audio-based Surveillance system. In Proceedings of the ICME 2005 IEEE International Conference Multimedia and Expo, Amsterdam, The Netherlands, 6–8 July 2005.

4. Bugalho, M.; Portelo, J.; Trancoso, I.; Pellegrini, T.; Abad, A. Detecting Audio Events for Semantic Video Search. In Proceedings of the Tenth Annual Conference of the International Speech Communication Association, Brighton, UK, 6–9 September 2009.

5. Mohamed, A.-R.; Hinton, G.; Penn, G. Understanding How Deep Belief Networks Perform Acoustic Modelling. In Proceedings of the Acoustics, Speech and Signal Processing (ICASSP), 2012 IEEE International Conference, Kyoto, Japan, 23 April 2012.

6. Sainath, T.N.; Weiss, R.J.; Senior, A.; Wilson, K.W.; Vinyals, O. Learning the speech front-end with raw waveform CLDNNs. In Proceedings of the Sixteenth Annual Conference of the International Speech Communication Association, Dresden, Germany, 6–10 September 2015.

7. Lee, H.; Pham, P.; Largman, Y.; Ng, A.Y. Unsupervised Feature Learning for Audio Classification Using Convolutional Deep Belief Networks. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 7–10 December 2009.

8. Van den Oord, A.; Dieleman, S.; Schrauwen, B. Deep Content-Based Music Recommendation. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 5–10 December 2013.

9. Peltonen, V.; Tuomi, J.; Klapuri, A.; Huopaniemi, J.; Sorsa, T. Computational Auditory Scene Recognition. In Proceedings of the Acoustics, Speech, and Signal Processing (ICASSP), 2002 IEEE International Conference, Orlando, FL, USA, 13–17 May 2002.

10. Rabiner, L. A tutorial on hidden Markov models and selected applications in speech recognition. Proc. IEEE 1989, 77, 257–286.

11. Peng, Y.-T.; Lin, C.-Y.; Sun, M.-T.; Tsai, K.-T. Healthcare audio event classification using hidden Markov models and hierarchical hidden Markov models. In Proceedings of the ICME 2009 IEEE International Conference Multimedia and Expo, Cancun, Mexico, 28 June–3 July 2009.

12. Elizalde, B.; Kumar, A.; Shah, A.; Badlani, R.; Vincent, E.; Raj, B.; Lane, I. Experiments on the DCASE challenge 2016: Acoustic scene classification and sound event detection in real life recording. arXiv 2016, arXiv:1607.06706.

13. Wang, J.-C.; Wang, J.-F.; He, K.W.; Hsu, C.-S. Environmental Sound Classification Using Hybrid SVM/KNN Classifier and MPEG-7 Audio Low-Level Descriptor. In Proceedings of the Neural Networks IJCNN’06 International Joint Conference, Vancouver, BC, Canada, 16–21 July 2006.

14. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–8 December 2012.

15. Piczak, K.J. Environmental sound classification with convolutional neural networks. In Proceedings of the Machine Learning for Signal Processing (MLSP), 2015 IEEE 25th International Workshop, Boston, MA, USA, 17–20 September 2015.

16. Stowell, D.; Giannoulis, D.; Benetos, E.; Lagrange, M.; Plumbley, M.D. Detection and Classification of Acoustic Scenes and Events. IEEE Trans. Multimed. 2015, 17, 1733–1746.

17. DCASE 2017 Workshop. Available online: http://www.cs.tut.fi/sgn/arg/dcase2017/ (accessed on 30 June 2017).

18. Aytar, Y.; Vondrick, C.; Torralba, A. SoundNet: Learning Sound Representations from Unlabeled Video. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 892–900.

19. Dai, W.C.; Dai, S.; Qu, J.; Das, S. Very Deep Convolutional Neural Networks for Raw Waveforms. In Proceedings of the Acoustics, Speech and Signal Processing (ICASSP), 2017 IEEE International Conference, New Orleans, LA, USA, 5–9 March 2017.

20. Lin, M.; Chen, Q.; Yan, S. Network in Network. arXiv 2013, arXiv:1312.4400.

21. Tokozume, Y.; Harada, T. Learning Environmental Sounds with End-to-End Convolutional Neural Network. In Proceedings of the Acoustics, Speech and Signal Processing (ICASSP), 2017 IEEE International Conference, New Orleans, LA, USA, 5–9 March 2017.

22. Tokozume, Y.; Ushiku, Y.; Harada, T. Learning from Between-class Examples for Deep Sound Recognition. In Proceedings of the ICLR 2018 Conference, Vancouver, BC, Canada, 30 April–3 May 2018.

23. Rosenblatt, F. The perceptron: A probabilistic model for information storage and organization in the brain. Psychol. Rev. 1958, 65, 386–408.

24. Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning representations by back-propagating errors. Nature 1986, 323, 533.

25. LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

26. Silver, D.; Huang, A.; Maddison, C.J.; Guez, A.; Sifre, L.; van den Driessche, G.; Schrittwieser, J.; Antonoglou, I.; Panneershelvam, V.; Lanctot, M. Mastering the game of Go with deep neural networks and tree search. Nature 2016, 529, 484.

27. Glorot, X.; Bengio, Y. Understanding the Difficulty of Training Deep Feedforward Neural Networks. In Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics, Chia Laguna, Italy, 13–15 May 2010.

28. He, K.; Zhang, X.; Ren, S.; Sun, J. Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification. In Proceedings of the IEEE International Conference on Computer Vision, Las Condes, Chile, 11–18 December 2015.

29. Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv 2015, arXiv:1502.03167.

30. Piczak, K.J. ESC: Dataset for Environmental Sound Classification. In Proceedings of the 23rd ACM International Conference on Multimedia, Brisbane, Australia, 26 October 2015.

31. Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Fei-Fei, L. ImageNet: A Large-Scale Hierarchical Image Database. In Proceedings of the IEEE Conference Computer Vision and Pattern Recognition CVPR, Miami, FL, USA, 20–25 June 2009.

32. Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014.

33. Salamon, J.; Bello, J.P. Deep convolutional neural networks and data augmentation for environmental sound classification. IEEE Signal Process. Lett. 2017, 24, 279–283.

34. Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

35. Nesterov, Y. Gradient Methods for Minimizing Composite; Springer: Berlin/Heidelberg, Germany, 2007.

36. Boddapati, V.; Petef, A.; Rasmusson, J.; Lundberg, L. Classifying environmental sounds using image recognition networks. Procedia Comput. Sci. 2017, 112, 2048–2056.

37. Simonyan, K.; Vedaldi, A.; Zisserman, A. Deep inside convolutional networks: Visualising image classification models and saliency maps. arXiv 2013, arXiv:1312.6034.

38. Zeiler, M.D.; Fergus, R. Visualizing and Understanding Convolutional Networks. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014.

39. Salamon, J.; Jacoby, C.; Bello, J.P. A Dataset and Taxonomy for Urban Sound Research. In Proceedings of the 22nd ACM International Conference on Multimedia, Orlando, FL, USA, 3–7 November 2014.

40. Zheng, Y.-Y.; Kong, J.-L.; Jin, X.-B.; Wang, X.-Y.; Su, T.-L.; Wang, J.-L. Probability fusion decision framework of multiple deep neural networks for fine-grained visual classification. IEEE Access 2019, 7, 122740–122757.

41. Tu, Y.; Du, J.; Lee, C. Speech enhancement based on teacher–student deep learning using improved speech presence probability for noise-robust speech recognition. IEEE/ACM Trans. Audio Speech Lang. Process. 2019, 27, 2080–2091.

42. Osayamwen, F.; Tapamo, J. Deep learning class discrimination based on prior probability for human activity recognition. IEEE Access 2019, 14747–14756.

| Layer | Ksize | Stride | Padding | #Filters |
|---|---|---|---|---|
| Input (1 × 24,000) | | | | |
| Conv1 | 13 | 1 | same | 32 |
| Pool | 2 | 2 | 0 | -- |
| Conv2 | 13 | 1 | same | 64 |
| Conv3 | 15 | 3 | same | 128 |
| Conv4 | 7 | 5 | same | 128 |
| Conv5 | 11 | 8 | same | 128 |
| Channel concatenation (1 × 128 × 100) | | | | |
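The sizes in the 1D-network table can be checked by propagating the sequence length through the stack. The sketch below is illustrative rather than the authors' code: it assumes "same" padding follows the TensorFlow/Keras convention (output length = ceil(L / stride)), and the helper names `output_length` and `trace_lengths` are hypothetical.

```python
import math

# Layer specs transcribed from the 1D-network table: (name, kernel, stride, padding).
# Assumption: "same" padding means output length = ceil(L / stride), as in Keras.
LAYERS_1D = [
    ("Conv1", 13, 1, "same"),
    ("Pool", 2, 2, 0),
    ("Conv2", 13, 1, "same"),
    ("Conv3", 15, 3, "same"),
    ("Conv4", 7, 5, "same"),
    ("Conv5", 11, 8, "same"),
]


def output_length(length, kernel, stride, padding):
    """Length of a 1D feature sequence after one convolution or pooling layer."""
    if padding == "same":
        return math.ceil(length / stride)
    # Explicit integer padding applied on both sides.
    return (length + 2 * padding - kernel) // stride + 1


def trace_lengths(input_length, layers):
    """Return [(layer name, output length), ...] for the whole stack."""
    trace = []
    length = input_length
    for name, kernel, stride, padding in layers:
        length = output_length(length, kernel, stride, padding)
        trace.append((name, length))
    return trace


if __name__ == "__main__":
    for name, length in trace_lengths(24_000, LAYERS_1D):
        print(f"{name}: {length}")
```

The lengths run 24,000, 12,000, 12,000, 4000, 800 and 100, so the 128 output channels of Conv5, each 100 steps long, match the 1 × 128 × 100 map in the last row of the table.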

| Layer | Ksize | Stride | #Filters |
|---|---|---|---|
| Input (1 × M × N) | | | |
| 3 × Conv (1~3) | (15, 1) | (1, 1) (1, 1) (1, 1) | 32 |
| 3 × Conv (4~6) | (15, 1) | (1, 1) (1, 1) (2, 1) | 64 |
| 3 × Conv (7~9) | (15, 1) | (1, 1) (1, 1) (2, 1) | 128 |
| 3 × Conv (10~12) | (5, 5) | (1, 1) (1, 2) (2, 2) | 256 |
| 3 × Conv (13~15) | (3, 3) | (1, 1) (1, 1) (1, 1) | 256 |
| 3 × Conv (16~18) | (3, 3) | (1, 1) (1, 1) (1, 1) | 512 |
| Conv19 | (3, 3) | (2, 2) | 512 |
| Conv20 | (3, 3) | (1, 1) | 1024 |
| Global max pooling (1024) | | | |
| FC1 (2048) | | | |
| FC2 (# classes) | | | |
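To see how the strides in the 2D-network table shrink the 1 × 128 × 100 map from the 1D network, the sketch below traces the feature-map shape block by block and shows what global max pooling does. It assumes every convolution uses "same" padding and that strides are written as (height, width); neither is stated in the table, and `trace_shapes` and `global_max_pool` are hypothetical helper names.

```python
import math

# (block name, per-layer strides as (height, width), number of filters),
# transcribed from the 2D-network table.
# Assumption: "same" padding everywhere, so each axis becomes ceil(size / stride).
BLOCKS_2D = [
    ("Conv1-3", [(1, 1), (1, 1), (1, 1)], 32),
    ("Conv4-6", [(1, 1), (1, 1), (2, 1)], 64),
    ("Conv7-9", [(1, 1), (1, 1), (2, 1)], 128),
    ("Conv10-12", [(1, 1), (1, 2), (2, 2)], 256),
    ("Conv13-15", [(1, 1), (1, 1), (1, 1)], 256),
    ("Conv16-18", [(1, 1), (1, 1), (1, 1)], 512),
    ("Conv19", [(2, 2)], 512),
    ("Conv20", [(1, 1)], 1024),
]


def trace_shapes(height, width, blocks):
    """Return [(block name, (channels, height, width)), ...] after each block."""
    trace = []
    for name, strides, filters in blocks:
        for stride_h, stride_w in strides:
            height = math.ceil(height / stride_h)
            width = math.ceil(width / stride_w)
        trace.append((name, (filters, height, width)))
    return trace


def global_max_pool(feature_map):
    """Reduce a [channels][height][width] nested list to one maximum per channel."""
    return [max(max(row) for row in channel) for channel in feature_map]


if __name__ == "__main__":
    for name, shape in trace_shapes(128, 100, BLOCKS_2D):
        print(name, shape)
    print(global_max_pool([[[1, 5], [3, 2]], [[-1, -4], [0, -2]]]))
```

Under these assumptions Conv20 outputs a 1024 × 8 × 13 map, and global max pooling keeps only the largest activation of each of the 1024 channels, giving the 1024-dimensional vector fed to FC1 regardless of the input length.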

| Frame Length | ~25 ms | ~35 ms | ~45 ms |
|---|---|---|---|
| ksize of Conv4 | 7 | 15 | 21 |
| ksize of Conv5 | 11 | 15 | 19 |
| Avg. | 73.55% | 72.45% | 72.55% |
| Std. dev. | 2.89% | 2.57% | 1.54% |
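The "frame length" values can be read as the receptive field of one Conv5 output on the raw waveform. The sketch below computes it with the standard recurrence rf_l = rf_{l-1} + (k_l - 1) · jump_{l-1}, jump_l = jump_{l-1} · s_l. The 16 kHz sampling rate is an assumption (it is not given in the table); it is the rate at which the three kernel settings land on the ~25, ~35 and ~45 ms labels. The function names are hypothetical.

```python
# Assumption: raw audio sampled at 16 kHz (not stated in the table).
SAMPLE_RATE = 16_000


def receptive_field(layers):
    """Return (receptive field, total stride) in input samples for [(kernel, stride), ...]."""
    rf, jump = 1, 1
    for kernel, stride in layers:
        rf += (kernel - 1) * jump  # each extra tap reaches `jump` samples further
        jump *= stride             # distance between neighbouring outputs grows
    return rf, jump


def frontend(conv4_k, conv5_k):
    """Conv1, Pool, Conv2 and Conv3 are fixed; only the Conv4/Conv5 kernels vary."""
    return [(13, 1), (2, 2), (13, 1), (15, 3), (conv4_k, 5), (conv5_k, 8)]


if __name__ == "__main__":
    for k4, k5 in [(7, 11), (15, 15), (21, 19)]:
        rf, hop = receptive_field(frontend(k4, k5))
        print(f"Conv4={k4}, Conv5={k5}: {rf} samples "
              f"= {1000 * rf / SAMPLE_RATE:.1f} ms, hop {hop} samples")
```

The three settings give receptive fields of 402, 570 and 726 samples (about 25, 36 and 45 ms at 16 kHz), while the hop between neighbouring frames stays at 240 samples in every case, because only kernel sizes change, not strides.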

| Location | Distribution of 12 Layers | Distribution of 24 Layers |
|---|---|---|
| Conv2 | 6 | 10 |
| Conv3 | 3 | 8 |
| Conv4 | 2 | 5 |
| Conv5 | 1 | 1 |

| Depth | 12 + 5 | 24 + 5 |
|---|---|---|
| Avg. | 72.00% | 66.95% |
| Std. | 1.28% | 2.62% |

| #Filters | 64 | 128 | 256 |
|---|---|---|---|
| Avg. | 71.05% | 73.55% | 74.15% |
| Std. | 2.05% | 2.89% | 3.16% |

| Size | (15,1) | (15,15) | (1,15) |
|---|---|---|---|
| Avg. | 73.55% | 67.25% | 70.85% |
| Std. | 2.89% | 2.57% | 2.75% |
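To make the three kernel shapes concrete, the sketch below counts the weights of a first 2D convolution for each shape and states which axes it spans. It assumes kernels are written as (height, width), with height being the 128 filter outputs of the 1D network and width the 100 time frames, and it uses one input channel and 32 filters as in the first block of the 2D network; `conv2d_params` and `describe` are hypothetical helpers.

```python
# Assumptions: kernel = (height, width); height runs over the 1D network's
# filter outputs and width over time frames; 1 input channel, 32 filters.


def conv2d_params(kernel, in_channels, out_channels, bias=True):
    """Number of trainable parameters in a 2D convolution layer."""
    kh, kw = kernel
    weights = kh * kw * in_channels * out_channels
    return weights + (out_channels if bias else 0)


def describe(kernel):
    kh, kw = kernel
    return f"spans {kh} filter row(s) x {kw} frame(s)"


if __name__ == "__main__":
    for kernel in [(15, 1), (15, 15), (1, 15)]:
        print(kernel, describe(kernel), "params:", conv2d_params(kernel, 1, 32))
```

Under these assumptions (15, 1) and (1, 15) each need 512 parameters, while (15, 15) needs 7232, about 14 times more for the same number of filters.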

| Pre-Train Network | Without Pre-Train | Raw Signal Only | Both Networks |
|---|---|---|---|
| Avg. | 78.20% | 75.25% | 81.55% |
| Std. | 2.96% | 2.18% | 2.79% |

| Aug Type | Original with Extra | Overlap with Raw | Overlap with PS/TS |
|---|---|---|---|
| Avg. | 74.05% | 76.95% | 75.20% |
| Std. | 3.55% | 3.59% | 2.65% |

| Aug Type | Original with Extra | Overlap with Raw |
|---|---|---|
| Avg. | 81.40% | 81.35% |
| Std. | 3.04% | 3.03% |

| Models | Features | Accuracy (ESC50) | Accuracy (ESC10) |
|---|---|---|---|
| Piczak’s CNN [15] | log-mel spectrogram | 64.5% | 81.0% |
| m18 [19] | raw audio signal | 68.5% [22] | 81.8% [22] |
| EnvNet [21] * | raw audio signal ⊕ log-mel spectrogram | 70.8% | 87.2% |
| SoundNet (5 layers) [18] | raw audio signal | 65.0% | 82.3% |
| AlexNet [36] | spectrogram | 69% | 86% |
| GoogLeNet [36] | spectrogram | 73% | 91% |
| EnvNet with BC [22] * | raw audio signal ⊕ log-mel spectrogram | 75.9% | 88.7% |
| EnvNet-v2 [22] | raw audio signal | 74.4% | 85.8% |
| 1D-2D network (ours) | raw audio signal | 73.55% | 90.00% |
| ParallelNet (ours) | raw audio signal & log-mel spectrogram | 81.55% | 91.30% |
| Human accuracy [30] | -- | 81.3% | 95.7% |
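Several models in the comparison, including ParallelNet, take a log-mel spectrogram as input. The NumPy sketch below shows one common way to compute it (framing, Hann window, power spectrum, triangular HTK-style mel filterbank, logarithm). All parameters (16 kHz, 1024-point FFT, hop of 160 samples, 128 mel bands) are assumptions for illustration, not the settings used in this work, and the function names are hypothetical.

```python
import numpy as np


def hz_to_mel(f):
    # HTK-style mel scale.
    return 2595.0 * np.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(sr, n_fft, n_mels):
    """Triangular filters mapping n_fft // 2 + 1 FFT bins onto n_mels bands."""
    n_bins = n_fft // 2 + 1
    fft_freqs = np.linspace(0.0, sr / 2.0, n_bins)
    hz_points = mel_to_hz(np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2.0), n_mels + 2))
    fb = np.zeros((n_mels, n_bins))
    for i in range(n_mels):
        left, center, right = hz_points[i], hz_points[i + 1], hz_points[i + 2]
        rising = (fft_freqs - left) / (center - left)
        falling = (right - fft_freqs) / (right - center)
        fb[i] = np.maximum(0.0, np.minimum(rising, falling))
    return fb


def log_mel_spectrogram(signal, sr=16_000, n_fft=1024, hop=160, n_mels=128, eps=1e-10):
    """Return an (n_mels, n_frames) log-mel spectrogram of a 1D float signal."""
    signal = np.asarray(signal, dtype=float)
    if len(signal) < n_fft:
        signal = np.pad(signal, (0, n_fft - len(signal)))
    n_frames = 1 + (len(signal) - n_fft) // hop
    # Index matrix of overlapping frames, shape (n_frames, n_fft).
    idx = np.arange(n_fft)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = signal[idx] * np.hanning(n_fft)
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2     # (n_frames, n_fft // 2 + 1)
    mel = power @ mel_filterbank(sr, n_fft, n_mels).T    # (n_frames, n_mels)
    return np.log(mel + eps).T                           # (n_mels, n_frames)


if __name__ == "__main__":
    x = np.random.default_rng(0).standard_normal(24_000)  # 1.5 s of noise at 16 kHz
    print(log_mel_spectrogram(x).shape)
```

For a 24,000-sample input this yields a 128 × 144 map; `eps` keeps the logarithm finite in silent bands.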

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Lin, Y.-K.; Su, M.-C.; Hsieh, Y.-Z. The Application and Improvement of Deep Neural Networks in Environmental Sound Recognition. Appl. Sci. 2020, 10, 5965. https://doi.org/10.3390/app10175965

Lin Y-K, Su M-C, Hsieh Y-Z. The Application and Improvement of Deep Neural Networks in Environmental Sound Recognition. Applied Sciences. 2020; 10(17):5965. https://doi.org/10.3390/app10175965

Chicago/Turabian Style: Lin, Yu-Kai, Mu-Chun Su, and Yi-Zeng Hsieh. 2020. "The Application and Improvement of Deep Neural Networks in Environmental Sound Recognition" Applied Sciences 10, no. 17: 5965. https://doi.org/10.3390/app10175965

APA Style: Lin, Y.-K., Su, M.-C., & Hsieh, Y.-Z. (2020). The Application and Improvement of Deep Neural Networks in Environmental Sound Recognition. Applied Sciences, 10(17), 5965. https://doi.org/10.3390/app10175965