5.1. Feature Extraction

As stated above, the choosing rule adaptively determines the number of principal components used for feature extraction, which is also the dimension k after dimensionality reduction. An appropriate choice of k allows us to express more information with fewer components.

Figure 4 shows the eigenvalue curves of the three hyperspectral datasets. The dotted lines mark the k values selected for the three datasets under the choosing rule.

Figure 4 shows that the choosing rule places the selected k of each dataset in the region where the eigenvalue is very small and essentially no longer changes, which means that the first k principal components represent enough of the data information.

We then evaluate the choosing rule in terms of classification performance to verify the validity of the feature extraction, using CMR-SVM [32] as the classifier. Several metrics are used to measure classification accuracy [33]. Accuracy is the percentage of ground truth pixels that are classified correctly; average accuracy (AA) is the mean of the per-class accuracies; and overall accuracy (OA) is the per-class accuracy weighted by the proportion of test samples of that class in the total test set. The Kappa statistic (Kappa), computed by weighting the measured accuracies, is a measure of agreement normalized for chance agreement.
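To make the three metrics concrete, the following minimal sketch computes OA, AA, and Kappa from a confusion matrix using the standard definitions. It is an illustration only, not the evaluation code used in the experiments; the function name `classification_metrics` and the example matrix are hypothetical.

```python
def classification_metrics(confusion):
    """Return (OA, AA, Kappa) for a square confusion matrix.

    confusion[i][j] counts test pixels of true class i predicted as class j.
    The matrix layout and the function name are illustrative assumptions.
    """
    n_classes = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(n_classes))
    row_totals = [sum(row) for row in confusion]  # test pixels per true class
    col_totals = [sum(confusion[i][j] for i in range(n_classes))
                  for j in range(n_classes)]      # pixels per predicted class

    # Overall accuracy: fraction of all test pixels classified correctly.
    oa = correct / total

    # Average accuracy: unweighted mean of the per-class accuracies
    # (classes with no test pixels are skipped).
    per_class = [confusion[i][i] / row_totals[i]
                 for i in range(n_classes) if row_totals[i] > 0]
    aa = sum(per_class) / len(per_class)

    # Kappa: observed agreement corrected for the agreement expected by chance.
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / (total * total)
    kappa = (oa - expected) / (1 - expected)
    return oa, aa, kappa


# Hypothetical two-class example (made-up counts, not data from the paper).
oa, aa, kappa = classification_metrics([[50, 10], [5, 35]])
print(f"OA={oa:.4f} AA={aa:.4f} Kappa={kappa:.4f}")
```

Because OA weights each class by its share of the test pixels while AA weights all classes equally, the two diverge when the classes are imbalanced, which is why both are reported.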

We vary k from 2 (to ensure effective classification) to 25 (greater than the value determined by the choosing rule) and perform a classification test at each k value. To reflect the situation in which individual classes in the dataset have few labels, we randomly choose five labeled pixels from each class as training samples and use the remaining labeled pixels as test samples. All experiments are repeated ten times and the results are averaged.
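The sampling protocol can be sketched as follows. This is a minimal illustration rather than the code used in the experiments: the names `split_few_shot`, `repeated_average`, and `evaluate` are hypothetical, and using the run index as the random seed is an assumption made only for reproducibility.

```python
import random
from collections import defaultdict


def split_few_shot(labels, per_class=5, seed=None):
    """Pick `per_class` random training indices per class; the rest are test.

    `labels` holds one class label per labeled pixel. A class with at most
    `per_class` pixels contributes all of them to training and none to test.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    train = []
    for label in sorted(by_class):
        indices = by_class[label]
        train.extend(rng.sample(indices, min(per_class, len(indices))))

    train_set = set(train)
    test = [i for i in range(len(labels)) if i not in train_set]
    return sorted(train), test


def repeated_average(evaluate, labels, repeats=10, per_class=5):
    """Average `evaluate(train, test)` over `repeats` random splits."""
    scores = []
    for run in range(repeats):
        train, test = split_few_shot(labels, per_class, seed=run)
        scores.append(evaluate(train, test))
    return sum(scores) / len(scores)


# Hypothetical label vector with three classes of different sizes.
labels = [0] * 20 + [1] * 30 + [2] * 8
train, test = split_few_shot(labels, per_class=5, seed=0)
print(len(train), len(test))
```

In a sweep over k, `evaluate` would train the classifier on the k-dimensional features of the training pixels and return one metric on the test pixels, so that each point of the curve is an average over ten random splits.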

Figure 5 shows the curves of the three metrics, where the dotted lines mark the k value determined by the choosing rule. As k increases, all three metrics rise sharply and then saturate. The k value determined by the choosing rule lies essentially at the critical point of the saturation region, which shows that the proposed choosing rule makes feature extraction adaptive and flexible.

At the same time, we evaluate the classification performance of the proposed algorithm by comparing it with several other feature extraction and classification methods, namely R-SVM (SVM performed directly on the original dataset), G-PCA (a GPU parallel implementation of PCA), the MRFs method [34], the LBP-based method [35], and the SC-MK method [36]. The relevant parameters are set to the default values stated above, and the number of principal components of G-PCA is the same as that of INAPC, i.e., the k value determined by the choosing rule.

Similarly, each method is run ten times and the results are averaged; the classification results are shown in Figure 6. R-SVM has the worst results because of the redundant and invalid information across the many bands. With feature extraction, the other methods all classify much better than R-SVM. Compared with the other methods, the INAPC algorithm shows a high level of accuracy and stability, even though it is not the best on the Pavia University scene dataset. In practical applications it is important to obtain stable, good results on different data, and the INAPC algorithm meets this requirement best; its principle is also easy to implement and explain in practice.

In addition, Figure 7 shows the visualization results of the three datasets with the same parameters as stated above. For each dataset, from left to right, are the false color composite image, the ground truth image, and the whole-image classification map obtained by the INAPC method. The ground truth is a collection of mutually exclusive classes, and comparison with the false color composite image shows that the INAPC classification results clearly reproduce the distribution of the real scene, even in detailed areas where several classes overlap. This shows that the INAPC algorithm achieves good classification results with few training pixels, which is of great significance in practice. The results of our flight experiment are given in Section 5.3.

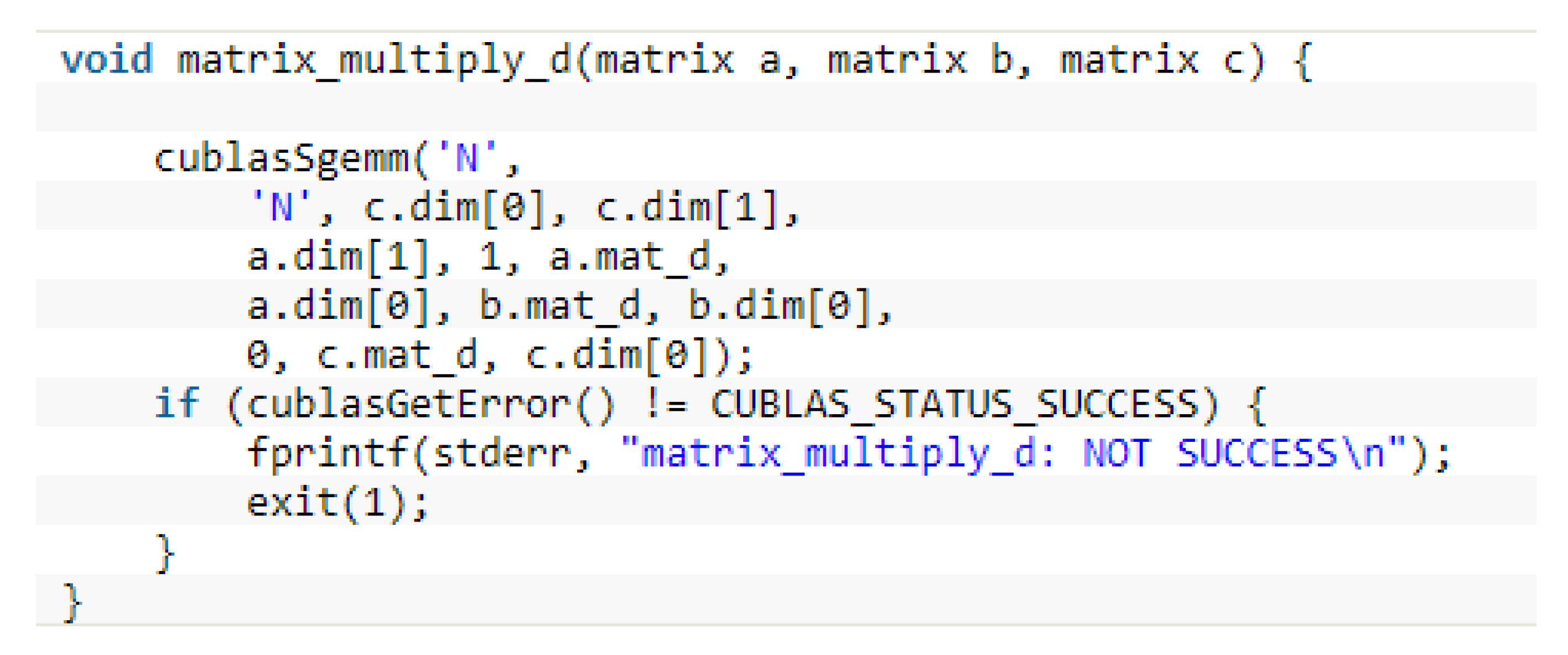

5.2. Parallel Implementation

To evaluate the parallel acceleration, we provide three different implementations of INAPC. The first is our GPU parallel implementation based on CUDA (C++), the second is based on OpenCV (C++), and the third is based on the machine learning library PyTorch (Python). To evaluate the real-time behavior of the proposed method, we measure execution time in several ways: we use the CPU-time functions available in C++ and in Python to obtain the number of seconds of CPU time consumed, the chrono package to measure the total execution time of the entire program, and the CUDA event APIs to obtain the elapsed time of each kernel function of the GPU program.
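The difference between CPU time and total (wall-clock) execution time can be illustrated with a short sketch. The text does not name the timing functions that were used, so the choice of `time.process_time` and `time.perf_counter` here is an assumption, and `timed` and `workload` are hypothetical names.

```python
import time


def timed(func, *args, **kwargs):
    """Run `func` once and return (result, cpu_seconds, wall_seconds).

    cpu_seconds  : CPU time consumed by this process (time.process_time).
    wall_seconds : elapsed real time (time.perf_counter).
    """
    cpu_start = time.process_time()
    wall_start = time.perf_counter()
    result = func(*args, **kwargs)
    wall_seconds = time.perf_counter() - wall_start
    cpu_seconds = time.process_time() - cpu_start
    return result, cpu_seconds, wall_seconds


def workload(n):
    """Stand-in for a processing step (hypothetical, CPU-bound)."""
    return sum(i * i for i in range(n))


result, cpu_s, wall_s = timed(workload, 100_000)
print(f"cpu={cpu_s:.4f} s  wall={wall_s:.4f} s")
```

Host-side timers of this kind are not sufficient for individual GPU kernels, because kernel launches return to the host before the kernel finishes; this is why per-kernel times are taken with CUDA events recorded on the device.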

Table 6 gives the computation time of the three versions and the speedup factors for each function (the function numbers are those listed in Table 1), where speedup1 is the OpenCV time divided by the CUDA time and speedup2 is the PyTorch time divided by the CUDA time. In Table 6, the best results (minimum time) are highlighted in bold. The CUDA version has a large advantage over the other two implementations in most calculations, especially for the most time-consuming ones, matrix multiplication and noise estimation. PyTorch also performs well in matrix operations, and its eigendecomposition is remarkably efficient, but it incurs an enormous time overhead in noise estimation: in PyTorch the noise estimation method amounts to a large number of tensor operations, whereas in CUDA each thread block only needs to be given its starting address relative to the matrix pointer.
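The two speedup factors are plain ratios of run times, as the following sketch shows. The function name `speedup_factors` is hypothetical and the times in the usage line are made-up placeholders, not values from Table 6.

```python
def speedup_factors(opencv_s, pytorch_s, cuda_s):
    """Return (speedup1, speedup2) as defined in the text.

    speedup1 = OpenCV time / CUDA time
    speedup2 = PyTorch time / CUDA time
    All times must be in the same unit; the CUDA time must be positive.
    """
    if cuda_s <= 0:
        raise ValueError("CUDA time must be positive")
    return opencv_s / cuda_s, pytorch_s / cuda_s


# Placeholder times in seconds (illustrative only).
speedup1, speedup2 = speedup_factors(opencv_s=2.0, pytorch_s=0.75, cuda_s=0.25)
print(speedup1, speedup2)  # prints "8.0 3.0"
```

A factor above 1 means that the CUDA version is faster for that function; a factor below 1 marks a function where the other implementation wins.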

Table 7 lists the run time of the whole INAPC algorithm for the different implementations. As shown in Table 7, we obtain different speedup factors with fixed parameters on the three HSI datasets. Because of the high time cost of noise estimation, PyTorch has the longest run time, while our CUDA parallel implementation shows clear superiority and stability. Comparing the results of the three datasets, we see that the larger the spatial dimension of the hyperspectral dataset, the better the acceleration achieved by our algorithm, which is in line with the trend of current hyperspectral data toward high spatial resolution. We also see that our parallel run time stays within a stable range across the datasets, which reflects the parallel potential of the implementation and makes it more suitable for real-time processing of big data. Based on the above analysis, in the UAV experiment in Section 5.3 we evaluate only the CUDA and OpenCV implementations.

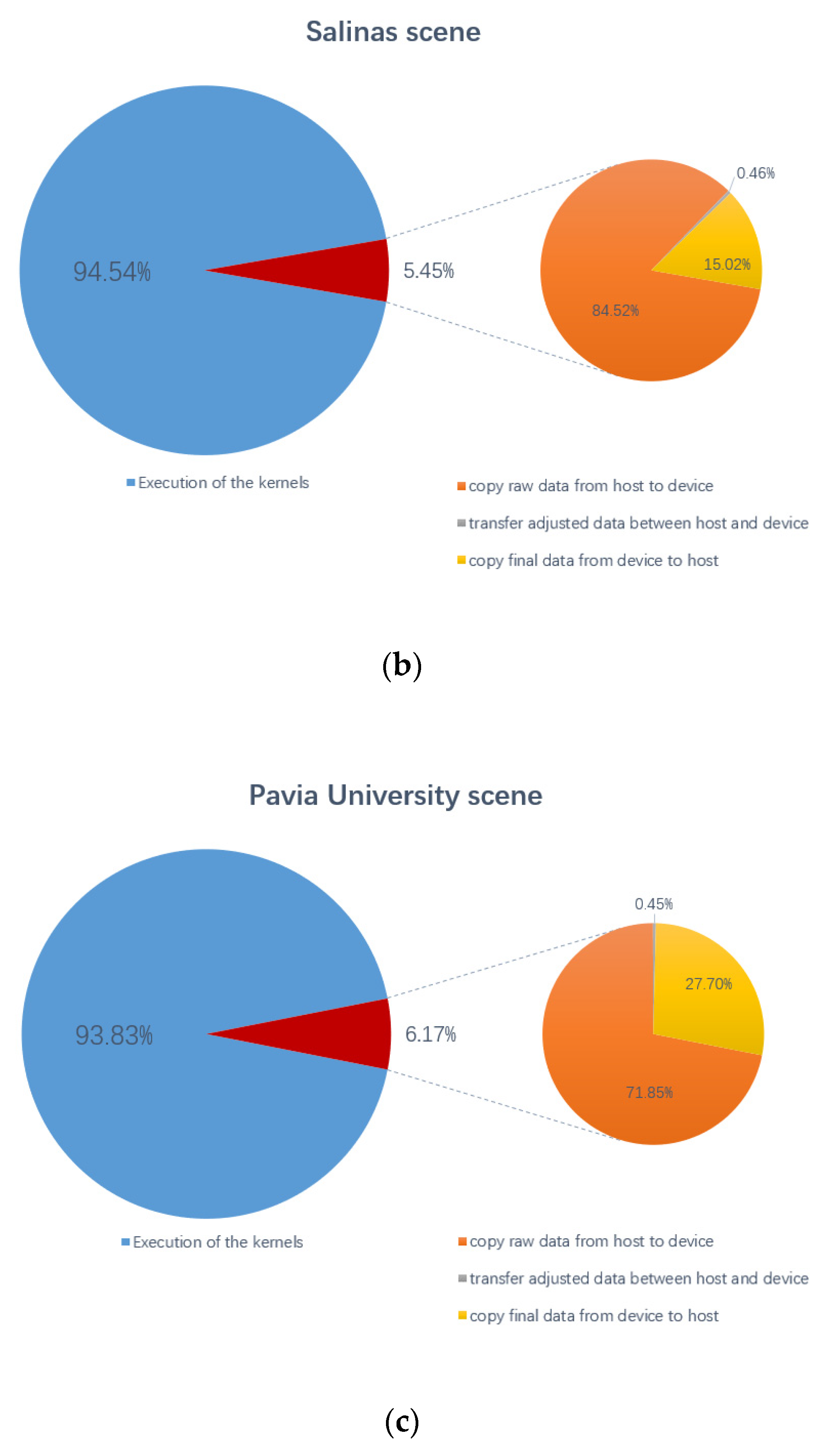

In addition, Figure 8 shows the percentage of the total time spent on data transfer between the host and the device for the proposed parallel method, together with the share of three specific data transfer operations in the total data transfer time. The transfer of adjusted data between the host and the device consists of two steps: transferring the results of the second SVD to the host, and transferring the reshaped data to the device. They are all marked with red lines in Figure 3. Figure 8 shows that data transfer accounts for a small proportion of the total time of the proposed parallel method: the maximum is less than 6.5%, so pure computation takes more than 93.5%. This demonstrates the effectiveness of the parallel algorithm, in that its performance depends on the processing units themselves rather than on data transfers, which is an important consideration in GPU parallelization. Figure 8 also shows that most of the data transfer time is spent on the input of the raw data and the output of the final results. Combined with the results in Table 7, this indicates that the parallel algorithm is well suited to large-scale problems.

5.3. UAV Experiment

Practice is the sole criterion for testing truth. To demonstrate the practicality of the proposed parallel algorithm, we build a complete UAV photoelectric platform. The hardware platform consists of four main parts: the flight module (UAV), the data acquisition module (hyperspectral camera and attitude adjustment frame), the ground station module (ground station computer), and the algorithm execution module (on-board NVIDIA Jetson Xavier). The photoelectric platform and a schematic diagram of the online operation of the entire platform are shown in Figure 9.

The entire UAV flight experiment proceeds as follows. After the system starts, the ground station computer and the on-board computing device each start an independent thread to run their respective communication modules and maintain wireless communication between the two [37]. The flight controller then plans the route and performs hyperspectral imaging along the predetermined route. At each waypoint, the flight controller hovers the UAV to stabilize it and instructs the data acquisition module to collect hyperspectral data. Once the hyperspectral image has been taken, the data acquisition module notifies the on-board NVIDIA Jetson Xavier, and the on-board computing device runs the algorithm to identify the specific targets at the current waypoint.

The entire computation runs on the NVIDIA Jetson Xavier. To ensure that our digital communication module and algorithm execution module work normally and stably, we first simulate the process on the ground. The hardware configuration of the NVIDIA Jetson Xavier is shown in Table 5. In addition, it has seven working modes at the software level, which are listed in Table 8.

To show the performance of the proposed algorithm in different working modes, we select mode_MAXN, mode_10w, and mode_30w_4core and run a timing test on the three traditional datasets on the ground. The results are given in Figure 10.

As shown in Figure 10, the computational efficiency of the proposed algorithm is related to the working power: the higher the power, the higher the computational efficiency and the shorter the run time. The parallel algorithm again follows the pattern that the larger the spatial dimension, the better the acceleration. Notably, the speedup factors are essentially unchanged across the three working modes, which also shows the stability, flexibility, and portability of our parallel implementation.

Therefore, we choose mode_MAXN as the basis for further experiments. Since the official working power of mode_MAXN is not given, we power the device with a mobile power bank that can output 65 W, matching the rated power of the power adapter. The power bank has a capacity of 30,000 mAh, which keeps the NVIDIA Jetson Xavier running far longer than the duration of one flight of the fully charged UAV.

We take the span from the moment the flight controller triggers data acquisition to the moment the classification results return to the ground station as the total time of one shutter process. The main time-consuming operations and their proportions are given in Figure 11 and Figure 12.

As shown in Figure 11 and Figure 12, the data acquisition time is fixed at 5 s and is determined by the camera. After the camera scan (data acquisition), a raw data file is quickly generated in the file system and is then read by our algorithm as input. The raw data processing time is stable and short. In the algorithm execution stage, as the spatial dimension of the hyperspectral data increases, our parallel implementation always keeps the algorithm execution within one and a half seconds, accounting for only about 10% of the total duration, whereas the time cost of the OpenCV version increases rapidly and takes up about 70% of the total time, which exceeds 40 s. Our parallel strategy therefore dramatically reduces the total time, which becomes dominated by the fixed time of the platform rather than by our algorithm, and this significantly improves the real-time processing capability. Considering that the fully loaded safe flight time of the UAV is only 15 min, this is of high practical significance.

Figure 13 shows a schematic diagram of the flight experiment. We fly the UAV at an altitude of 300 m; the horizontal field angle of the hyperspectral camera is 38° (more parameters are given in Table 3). We shoot at several fixed flight waypoints (only two waypoints are shown in Figure 13) and set the distance between adjacent waypoints to 50 m, which ensures that each image covers a sufficiently different field. The UAV hovers at each waypoint, the camera scan starts, and hyperspectral data are generated over a fixed period of 5 s. After that, the UAV can be flown to the next waypoint. Taking into account acceleration and deceleration, the stability of the UAV attitude, and so on, our extensive tests show that it takes about 10 s to move the UAV to the next waypoint. Meanwhile, the algorithm process, which includes raw data processing, algorithm execution, and classification inference, is executed while the UAV is moving. According to Figure 11 and Figure 12, the algorithm process of our parallel implementation takes about 3.8 s, which means that the results for the previous waypoint are available by the time the UAV reaches the next waypoint, even when the data return time is taken into account. This is of great significance in real-time applications: we can quickly make decisions and react based on the results of the previous waypoint.
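The timing argument can be checked with a short calculation based on the figures quoted in the text (5 s acquisition, about 10 s transit, about 3.8 s algorithm process, 15 min safe flight time). The function name `waypoint_budget` is hypothetical, and the waypoint count is only an upper bound under the assumption that takeoff, climb, and return are ignored.

```python
ACQUISITION_S = 5.0      # fixed camera scan time per waypoint (from the text)
TRANSIT_S = 10.0         # approximate time to reach the next waypoint (from the text)
ALGORITHM_S = 3.8        # raw data processing + execution + inference (from the text)
SAFE_FLIGHT_S = 15 * 60  # fully loaded safe flight time (from the text)


def waypoint_budget(acquisition_s, transit_s, algorithm_s, flight_s):
    """Summarize the per-waypoint time budget.

    The algorithm process runs while the UAV moves, so it adds no time to the
    cycle as long as it finishes within the transit time. Takeoff, climb, and
    return are ignored (assumption), so max_waypoints is an upper bound.
    """
    cycle_s = acquisition_s + transit_s  # hover-and-scan, then move on
    slack_s = transit_s - algorithm_s    # time left for returning the results
    return {
        "cycle_s": cycle_s,
        "slack_s": slack_s,
        "hidden_in_transit": slack_s >= 0,
        "max_waypoints": int(flight_s // cycle_s),
    }


budget = waypoint_budget(ACQUISITION_S, TRANSIT_S, ALGORITHM_S, SAFE_FLIGHT_S)
print(budget)
```

With these figures the algorithm process leaves roughly 6 s of the transit time for returning the results, which is why the previous waypoint's classification is available before the next scan begins.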

We build a scene with several classes to test the classification results of the flight experiment. We made a custom jungle tank model and a jungle camouflage net and placed them in a real environment. The specific classes are indicated by color-coded legends in Figure 14.

Figure 14 shows the classification results for the aerial scenes of our flight experiment. We label only part of the scenes as ground truth and use the entire set of flight data for prediction. The classification results are very clear, especially for the tank model, the camouflage net, and the environmental background (grass and trees), which have the same color to the naked eye; this fully reflects the superiority of the hyperspectral algorithm. It is also worth noting that, with our parallel implementation, it takes only a few seconds from camera acquisition to the return of the classification results to the ground station, which is very important in practice.