1. Introduction

Internal Combustion Engines (ICEs) are the major power source for a variety of applications, including automobiles, aircraft, marine units, lighting plants, machine tools, power tools, etc. According to a JATO report, ICEs accounted for 91% of global passenger car sales in 2019 [1]. To comply with increasingly stringent regulations, vehicle manufacturers need to maintain the overall efficiency, performance and emission levels of an ICE. It is therefore crucial to run an engine under optimal conditions. This can be achieved with a condition-based maintenance scheme that diagnoses emerging faults or detects deviations from optimal conditions.

An ICE has various rotating and moving parts, which can degrade over time due to extreme operating conditions. For instance, a spark ignition engine, which is a type of ICE, is subject to maintenance issues such as aged spark plugs, damaged oxygen sensors or prolonged knocking, which can deteriorate the performance of the engine or, worse, cause total engine failure in the long run [2]. Fault detection systems can help identify these faults at an early stage and prevent further damage to the engine, thereby increasing the safety and reliability of the vehicle.

Fault diagnosis methods have been categorized into two main types: signal-based and model-based methods [3]. In this study, we used a signal-based method, which extracts useful features from the acoustic signals of an ICE and compares them against those of nominal operating conditions. Signal-based methods are less complex, easier to implement and more adaptable to changes in operation than model-based fault diagnosis methods.
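To make the idea of comparing features against nominal operation concrete, the following minimal Python sketch flags the features of a new recording that deviate from a healthy baseline by more than a z-score threshold. The feature names, baseline values and the threshold of 3 are hypothetical, and this simple rule is only an illustration; it is not the classification procedure used in this study.

```python
import statistics


def baseline_stats(healthy_features):
    """Per-feature mean and sample standard deviation over healthy (nominal) recordings.

    healthy_features: list of dicts mapping a (hypothetical) feature name -> value.
    """
    names = healthy_features[0].keys()
    stats = {}
    for name in names:
        values = [f[name] for f in healthy_features]
        stats[name] = (statistics.mean(values), statistics.stdev(values))
    return stats


def deviating_features(sample, baseline, z_threshold=3.0):
    """Return {feature: z-score} for features whose |z| exceeds the threshold.

    Features with zero spread in the baseline are given z = 0 (never flagged).
    """
    flagged = {}
    for name, (mu, sigma) in baseline.items():
        z = (sample[name] - mu) / sigma if sigma > 0 else 0.0
        if abs(z) > z_threshold:
            flagged[name] = z
    return flagged


# Hypothetical features from three healthy recordings and one test recording.
healthy = [
    {"rms": 1.00, "kurtosis": 3.0},
    {"rms": 1.05, "kurtosis": 3.1},
    {"rms": 0.95, "kurtosis": 2.9},
]
base = baseline_stats(healthy)
print(deviating_features({"rms": 1.02, "kurtosis": 4.5}, base))
```

In this usage, only the hypothetical kurtosis feature is flagged (z of about 15), while the RMS value stays within the nominal range.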

Information from ICEs can be gathered in various formats, such as acoustic signals, vibration [4], oil quality [5] and thermal images [6], all of which have been widely studied for engine fault diagnosis. Other analysis techniques include in-cylinder pressure mapping [7] and instantaneous angular speed measurement [8], which can be tedious and expensive. Recently, acoustic signals have gained increased attention for engine fault diagnosis because they can be measured from a distance, which avoids safety risks and, unlike vibration measurement, removes the need for temperature-sensitive sensors [9]. Acoustic analysis has not been widely used in the past because these signals are susceptible to high noise content and are easily influenced by the local environment. However, this can be mitigated through sophisticated signal processing, denoising and feature mining techniques that extract useful information from a contaminated signal.
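As one illustration of the denoising step mentioned above, the sketch below applies wavelet soft thresholding with the universal threshold of Donoho and Johnstone to a single-level Haar transform. This is a common textbook technique, shown here under the assumption of additive white noise; it is not necessarily the denoising used in this study, and a practical system would typically use more decomposition levels and a smoother wavelet.

```python
import math
import statistics


def haar_step(x):
    """One level of the orthonormal Haar transform: (approximation, detail)."""
    if len(x) % 2:
        raise ValueError("signal length must be even")
    s = 1 / math.sqrt(2)
    a = [(x[i] + x[i + 1]) * s for i in range(0, len(x), 2)]
    d = [(x[i] - x[i + 1]) * s for i in range(0, len(x), 2)]
    return a, d


def inverse_haar_step(a, d):
    """Exact inverse of haar_step."""
    s = 1 / math.sqrt(2)
    x = []
    for ai, di in zip(a, d):
        x.extend([(ai + di) * s, (ai - di) * s])
    return x


def soft(v, t):
    """Soft thresholding: shrink v towards zero by t, clipping at zero."""
    return math.copysign(max(abs(v) - t, 0.0), v)


def denoise(x):
    """Single-level Haar soft-threshold denoising of an even-length signal."""
    a, d = haar_step(x)
    # Robust noise estimate from the detail coefficients (median absolute value / 0.6745).
    sigma = statistics.median([abs(v) for v in d]) / 0.6745
    t = sigma * math.sqrt(2 * math.log(len(x)))  # universal threshold
    return inverse_haar_step(a, [soft(v, t) for v in d])
```

Small high-frequency fluctuations fall below the threshold and are removed, while the low-frequency approximation coefficients are kept unchanged.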

The signal-based fault diagnosis system mainly consists of four phases: data collection, signal decomposition, feature extraction and fault condition classification [10]. The traditional signal processing approach uses time-domain and frequency-domain analysis to distinguish different fault conditions. For example, the authors of [11] used frequency-domain analysis, including the Fast Fourier Transform (FFT), envelope analysis and order tracking, to recognize different operating conditions and fault types. The major disadvantage of FFT-based approaches is that an analysis in the frequency domain alone cannot capture how the spectral content of the signal changes over time. This issue can be solved with time–frequency analysis methods, such as the Short-time Fourier Transform (STFT) [12] and the wavelet transform. The wavelet transform is particularly effective compared to the STFT because it analyzes signals with multi-resolution time–frequency windows, whereas the STFT uses a single fixed window. Therefore, it has been used widely for fault diagnosis of engines [13,14,15,16,17]. Although many variations of the wavelet transform exist, this paper uses the Wavelet Packet Transform (WPT), because WPT can transform the signal into both high- and low-level wavelet coefficients: at every decomposition level, it splits both the low-frequency (approximation) and the high-frequency (detail) bands. This is significant, since various fault types embedded in different frequency ranges are analyzed here [18,19]. After signal decomposition, relevant time–frequency information can be extracted and used as the input for fault classification.
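A minimal sketch of the WPT feature-extraction step is given below, assuming a Haar wavelet (chosen only because it fits in a few lines of plain Python) and a hypothetical per-node feature set of mean, standard deviation, energy and kurtosis. Neither the wavelet nor the feature list is taken from this study. The key difference from a standard discrete wavelet transform is visible in `wavelet_packet`: both the approximation and the detail halves are split again at every level.

```python
import math
import statistics


def haar_split(x):
    """Split a signal into Haar approximation (low-pass) and detail (high-pass) halves."""
    if len(x) % 2:
        raise ValueError("signal length must be even at every level")
    s = 1 / math.sqrt(2)
    a = [(x[i] + x[i + 1]) * s for i in range(0, len(x), 2)]
    d = [(x[i] - x[i + 1]) * s for i in range(0, len(x), 2)]
    return a, d


def wavelet_packet(x, level):
    """Full wavelet packet tree: unlike the DWT, BOTH halves are split at every level.

    Returns the 2**level terminal nodes in natural (not frequency) order.
    """
    nodes = [list(x)]
    for _ in range(level):
        nodes = [half for node in nodes for half in haar_split(node)]
    return nodes


def node_features(c):
    """Hypothetical statistical features of one node: mean, std, energy, kurtosis."""
    mean = statistics.fmean(c)
    std = statistics.pstdev(c)
    energy = sum(v * v for v in c)
    kurt = (sum((v - mean) ** 4 for v in c) / len(c)) / std**4 if std > 0 else 0.0
    return [mean, std, energy, kurt]


def wpt_feature_vector(x, level=3):
    """Concatenate the per-node features into one vector for a classifier."""
    return [f for node in wavelet_packet(x, level) for f in node_features(node)]
```

For a level-3 decomposition, this yields 8 nodes × 4 features = 32 features per recording. Because the Haar transform used here is orthonormal, the node energies sum to the energy of the original signal, which is a convenient sanity check. In practice, a library such as PyWavelets offers wavelet packet decompositions with other wavelet families.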

Most fault diagnosis models in the literature rely on a single classification algorithm or compare a few classification algorithms to find the best model [20,21,22,23]. However, each emerging fault has different characteristics, so the appropriate algorithm should be chosen according to its classification performance for that fault. There has been considerable work on the optimization of model hyperparameters [24,25]. However, few approaches in the literature combine model selection with hyperparameter optimization. One useful methodology is meta-learning, which uses information from previous data to find the optimal algorithm or hyperparameter configuration [26]. This is impractical in many cases because of the computational cost of evaluating large amounts of previous data. Bayesian optimization is a suitable method for overcoming this issue [27] and has been shown to outperform other optimization techniques on several challenging benchmark problems [28,29]. The objective of Bayesian optimization here is to find the point (i.e., the model and hyperparameter configuration) that minimizes an objective function, namely the cross-validation classification error [30,31]. Each function evaluation can itself be an onerous procedure; this is addressed in [30] by using a conventional dimensionality reduction technique, Principal Component Analysis (PCA). By reducing the feature space to a smaller number of Principal Components (PCs), the computational complexity is reduced, which lowers the evaluation time [32,33]. PCA has been shown to reduce training time and improve classification performance on very large datasets [34]. Nonetheless, to the best of our knowledge, the use of PCA to reduce the feature set for Bayesian optimization, and its effect on optimization performance, have not been critically examined in the literature.
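The core loop of Bayesian optimization can be sketched in a few dozen lines. The example below, a minimal sketch and not the implementation used in this study, fits a Gaussian-process surrogate with a squared-exponential kernel and picks each new point by maximizing expected improvement. The toy objective is a hypothetical stand-in for the cross-validation classification error as a function of one hyperparameter; the kernel length scale, candidate grid and iteration counts are also assumptions. Combined model selection, as in Model 2, would add the classifier type as a categorical dimension of the search space, which this sketch omits.

```python
import math

import numpy as np


def rbf_kernel(a, b, length_scale=0.2):
    """Squared-exponential kernel between 1-D point arrays a and b (unit prior variance)."""
    return np.exp(-0.5 * (a[:, None] - b[None, :]) ** 2 / length_scale**2)


def gp_posterior(x_obs, y_obs, x_query, noise=1e-6):
    """Zero-mean Gaussian-process posterior mean and standard deviation at x_query."""
    K = rbf_kernel(x_obs, x_obs) + noise * np.eye(len(x_obs))
    k_star = rbf_kernel(x_obs, x_query)
    mean = k_star.T @ np.linalg.solve(K, y_obs)
    var = 1.0 - np.sum(k_star * np.linalg.solve(K, k_star), axis=0)
    return mean, np.sqrt(np.maximum(var, 1e-12))


def expected_improvement(mean, std, best):
    """Expected improvement for minimisation: expected amount by which a point beats `best`."""
    z = (best - mean) / std
    cdf = 0.5 * (1 + np.vectorize(math.erf)(z / math.sqrt(2)))
    pdf = np.exp(-0.5 * z**2) / math.sqrt(2 * math.pi)
    return (best - mean) * cdf + std * pdf


def bayes_opt(objective, bounds, n_init=3, n_iter=12, seed=0):
    """Minimise a 1-D objective on [lo, hi] with a GP surrogate and EI acquisition."""
    rng = np.random.default_rng(seed)
    lo, hi = bounds
    x_obs = rng.uniform(lo, hi, n_init)
    y_obs = np.array([objective(x) for x in x_obs])
    grid = np.linspace(lo, hi, 501)  # candidate points for the acquisition search
    for _ in range(n_iter):
        # Normalise inputs to [0, 1] and outputs to zero mean, unit spread.
        y_mu, y_sd = y_obs.mean(), y_obs.std() + 1e-12
        mean, std = gp_posterior(
            (x_obs - lo) / (hi - lo), (y_obs - y_mu) / y_sd, (grid - lo) / (hi - lo)
        )
        ei = expected_improvement(mean, std, (y_obs.min() - y_mu) / y_sd)
        x_next = grid[np.argmax(ei)]
        x_obs = np.append(x_obs, x_next)
        y_obs = np.append(y_obs, objective(x_next))  # one expensive evaluation
    best = np.argmin(y_obs)
    return x_obs[best], y_obs[best]


# Usage with a toy stand-in for the cross-validation error (minimum at x = 1.3):
x_best, y_best = bayes_opt(lambda x: (x - 1.3) ** 2 + 0.1, bounds=(-3.0, 3.0))
print(f"best x = {x_best:.3f}, objective = {y_best:.3f}")
```

Each iteration spends one expensive objective evaluation where the surrogate predicts either a low error or high uncertainty, which is why Bayesian optimization typically needs far fewer evaluations than an exhaustive grid search. Cheaper evaluations, for example on a PCA-reduced feature set, shorten every iteration of this loop.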

The aim of this paper is to present a new fault diagnosis method for a gasoline ICE under various fault conditions by analyzing its acoustic signals. The study starts with Model 1, which selects among standard classifiers. Model 2 then uses Bayesian optimization for both model selection and hyperparameter optimization, so that an optimal model with its selected features and parameters is obtained. Finally, Model 3 is proposed, which applies PCA alongside Bayesian optimization to reduce the complexity of the feature space for the optimization problem. All three models use WPT for signal analysis and statistical features computed from the high- and low-level WPT coefficients. Hence, the main contributions of this paper are as follows:

Exploring the viability of using acoustic signals for engine fault classification under different fault scenarios and different operating conditions

Evaluating the use and performance of Bayesian optimization on standard classification models for fault diagnosis of an ICE

Evaluating the effect of PCA on the optimized fault classification model in terms of classification accuracy, other performance metrics and evaluation time
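The PCA step of Model 3 can be sketched as follows, assuming the WPT feature vectors are stacked into a NumPy matrix with one row per recording. The standardization of features and the rule of keeping enough PCs to explain 95% of the variance are illustrative assumptions, not settings reported in this study. The PCs are fitted on the training set only and then reused to project test data, so that no test information leaks into the reduced feature space.

```python
import numpy as np


def fit_pca(X_train, var_to_keep=0.95):
    """Fit PCA on standardised training features; keep enough PCs to reach var_to_keep."""
    mu = X_train.mean(axis=0)
    sd = X_train.std(axis=0)
    sd[sd == 0] = 1.0  # leave constant features unscaled instead of dividing by zero
    Z = (X_train - mu) / sd
    # Rows of Vt are the principal directions; S**2 is proportional to their variance.
    _, S, Vt = np.linalg.svd(Z, full_matrices=False)
    explained = S**2 / np.sum(S**2)
    k = int(np.searchsorted(np.cumsum(explained), var_to_keep)) + 1
    return mu, sd, Vt[:k]


def transform(X, mu, sd, components):
    """Project (training or test) features onto the PCs learned from the training set."""
    return ((X - mu) / sd) @ components.T


# Hypothetical data: 200 recordings x 32 features driven by two hidden sources.
rng = np.random.default_rng(0)
latent = rng.normal(size=(200, 2))
X = latent @ rng.normal(size=(2, 32)) + 0.01 * rng.normal(size=(200, 32))
mu, sd, comps = fit_pca(X)
X_reduced = transform(X, mu, sd, comps)
print(X.shape, "->", X_reduced.shape)
```

The reduced matrix, rather than the full feature matrix, would then be passed to the optimized classifier, which is where the savings in evaluation time come from.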

The remainder of this paper is structured as follows.

Section 2 presents the experimental setup and the engine fault characteristics.

Section 3 describes the general workflow and the detailed methodology employed.

Section 4 describes the results and the comparison of the three models using various performance metrics.

Section 5 concludes the paper.

5. Conclusions

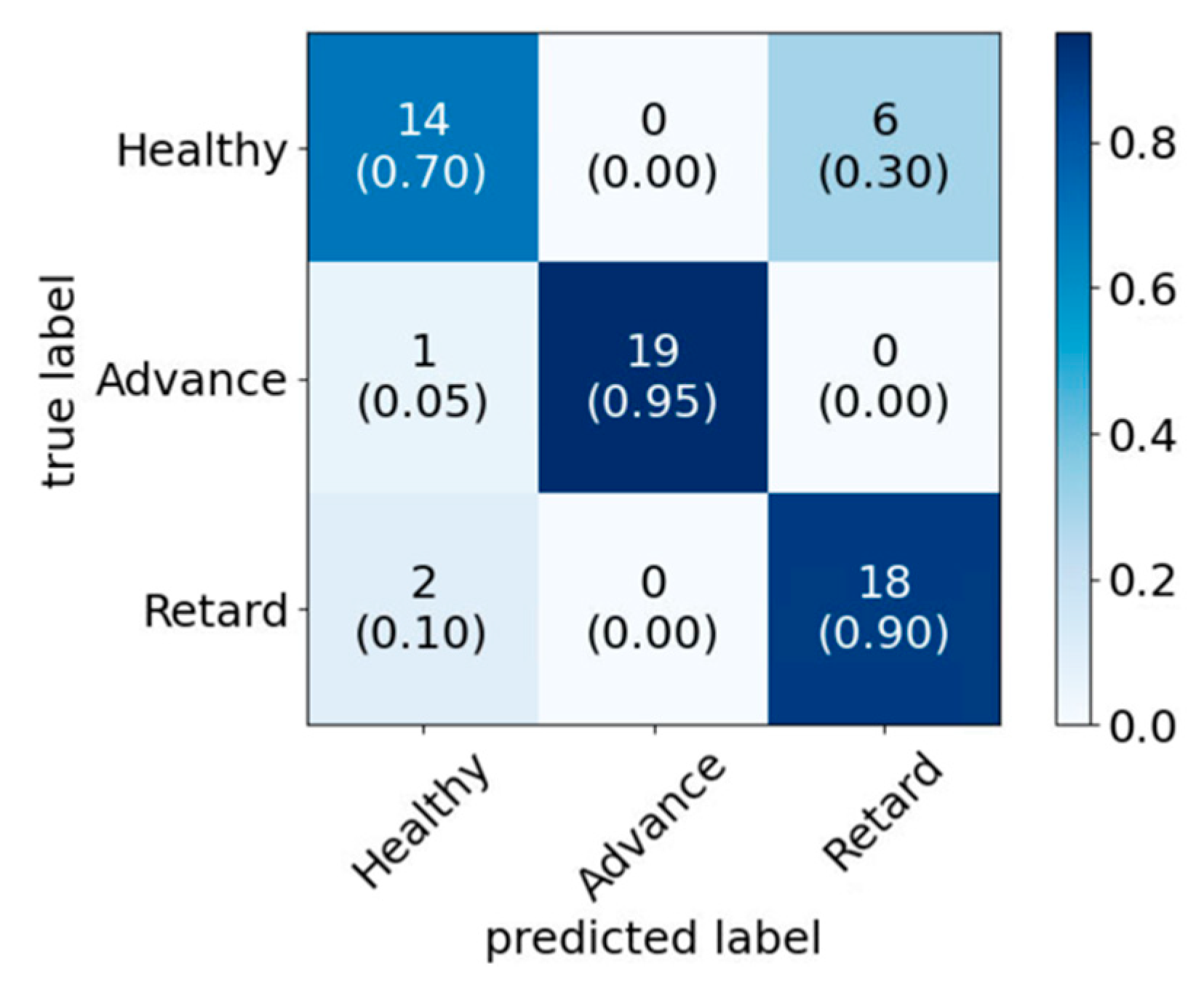

In this study, a data-driven classification approach based on engine acoustic signals was proposed for ICE fault diagnosis. Three faulty conditions, namely engine misfire, ignition timing variation and air–fuel ratio (AFR) variation, were used to train and test the applicability of the approach. Three models were studied: the standard classification approach (Model 1), the Bayesian optimized model (Model 2) and the use of PCA alongside Bayesian optimization (Model 3). In all cases, WPT was used to decompose the acoustic signals, and statistical features were extracted from the wavelet coefficients and used as the input of the three classification models. The aim of the study was not only to achieve the highest accuracy, but also to reduce the computational time by eliminating redundant features. Feature sets with reduced dimension are more suitable for future online implementation in real-time engine fault diagnosis applications. Both Model 2 and Model 3 achieved better accuracy and better values of the other performance metrics than the standard model for all three faults. With accuracy similar to that of Model 2, Model 3 required approximately 20% less combined training and testing evaluation time and 8–19% less testing evaluation time across the fault cases. This indicates that WPT combined with PCA and Bayesian optimization could be a promising tool for real-time acoustic engine fault diagnosis applications.

In practice, there is a trade-off between fault classification accuracy and processing time, and the right balance depends on the industrial application and its specific requirements. For example, if the application requires high accuracy in fault diagnosis, because a false alarm can result in excessive downtime costs, the user may prefer Model 2 at the expense of additional processing time. On the other hand, if some combustion faults need to be identified within a short time, before they lead to catastrophic component damage, then implementing Model 3 on fast computing electronics may be particularly beneficial for that application.