Abstract

Feature selection has long attracted a consistently great amount of research effort as a means of dimension reduction for various machine learning tasks. Existing feature selection models focus on selecting the most discriminative features for learning targets. However, this strategy is weak in handling two kinds of features, that is, irrelevant and redundant ones, which are collectively referred to as noisy features. These features may hamper the construction of optimal low-dimensional subspaces and compromise the learning performance of downstream tasks. In this study, we propose a novel multi-label feature selection approach by embedding label correlations (dubbed ELC) to address these issues. Specifically, we extract label correlations to obtain reliable label space structures and employ them to steer feature selection. In this way, the label and feature spaces are expected to be consistent, and noisy features can be effectively eliminated. An extensive experimental evaluation on public benchmarks validated the superiority of ELC.

1. Introduction

For pattern recognition, feature selection is important for its effectiveness in reducing dimensionality. Feature selection methods are divided into supervised, semi-supervised, and unsupervised ones, according to whether the instances are labeled, partially labeled, or unlabeled [1,2,3,4]. In supervised cases, class labels are employed to measure the discriminative abilities of features. Many popular and efficient feature selection methods belong to this group [5,6,7,8,9,10]. Supervised methods are further categorized into three well-known models: filter, wrapper, and embedded [11]. In recent years, some hybrid methods that combine filter and wrapper processes have emerged to enhance performance and reduce computational cost [12,13].

From another viewpoint, existing feature selection approaches can also be grouped into single-label and multi-label ones, which differ in the number of labels associated with each instance [14]. In single-label feature selection, instances and labels hold many-to-one connections, and target separability is emphasized. With the great potential and success of multi-label learning in many machine learning fields, such as text categorization [15], content annotation [16], and protein location prediction [17], multi-label feature selection has received considerable attention in recent years. In this study, we address supervised multi-label feature selection.

In multi-label learning, label correlations are the key to capturing the complicated relationships among instances, which are typically annotated with multiple labels [18,19]. The mainstream multi-label feature selection strategy is to extract label correlations (via statistical or information-based measurements) and employ them to help find the most remarkable features. A critical issue, however, is that this strategy can be misled by two kinds of features, that is, irrelevant and redundant ones. Irrelevant features are those with low discriminative ability. Features of this kind are loosely correlated with learning targets and may even provide misleading information. Compared with irrelevant features, redundant features are more deceptive. They may individually perform as well as (or even better than) remarkable features and thus become mixed with them. Nevertheless, redundant features contribute little to the discriminative ability of the constructed low-dimensional subspace, because the learning information they provide duplicates information that has already been distilled. In general, we regard both irrelevant and redundant features as noisy ones, which may confuse selection processes and compromise the learning performance of downstream tasks.

In this paper, we present an effective multi-label feature selection model that embeds label correlations to eliminate noisy features, named ELC. Our major strategy is to keep the feature and label spaces consistent and to explore reliable label structures to drive feature selection. Concretely, we quantitatively assess label correlations in the label space and embed them in feature selection. In this way, the label structure information can be maximally preserved in the constructed low-dimensional subspace, and the consistency between the feature and label spaces can eventually be achieved. Furthermore, we devise an efficient framework based on sparse multi-task learning to optimize ELC, which helps ELC find globally optimal solutions and converge efficiently.

The major contributions of this paper are as follows:

- We present a novel multi-label feature selection model to address the issue of noisy features. This model quantitatively measures label correlations and employs feature-label space consistency to steer feature selection.

- We devise a compact framework to optimize the proposed model. This framework builds on the multi-task learning strategy and promises globally optimal solutions and efficient convergence.

- Comprehensive experiments on openly available benchmarks were conducted to validate the performance of the proposed model in feature selection and noise elimination.

The remaining parts of this paper are arranged as follows: related works are reviewed in Section 2; the proposed model ELC and its optimization framework are respectively introduced in Section 3 and Section 4; the experimental comparisons of ELC with several popular feature selection approaches are presented in Section 5; finally, conclusions are drawn in Section 6.

2. Related Work

Feature selection approaches are commonly tailored to a certain recognition scenario, i.e., single-label learning or multi-label learning, because the two recognition tasks have different concerns. The issue of noisy feature elimination was first raised in single-label feature selection, focusing on removing irrelevant features and picking out discriminative ones. For example, the popular single-label feature selection family based on preserving instance similarity [20] directly assigns high scores to the most discriminative features under various statistical metrics, such as the Laplacian score [7,21], the Fisher score [6], the Hilbert–Schmidt independence criterion [22], and the trace ratio [23], to name just a few. In addition to these similarity preservation approaches, some traditional approaches based on distances or instance differences can also be regarded as simply pursuing “target-specific features,” such as ReliefF [10], SPEC [24,25], and SPFS [20]. This term reflects the fact that target-specific features are picked based only on whether they are strongly correlated with the learning targets. In other words, features with excellent discriminative abilities for the targets will prevail. The aforementioned approaches have generally performed well in eliminating irrelevant features, but they may have difficulty improving learning performance because they pay little attention to removing redundant features.
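The univariate, target-specific scoring described above can be illustrated with a Fisher-type score. The following Python sketch implements the classical Fisher score (between-class scatter divided by within-class scatter, computed per feature); it is not the generalized Fisher score of [6], and the function name `fisher_score` is hypothetical.

```python
import numpy as np


def fisher_score(X: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Classical Fisher score of each column of X for a single-label target y.

    Larger scores mean the feature separates the classes better on its own.
    """
    mu = X.mean(axis=0)                      # overall mean of each feature
    between = np.zeros(X.shape[1])           # between-class scatter
    within = np.zeros(X.shape[1])            # within-class scatter
    for c in np.unique(y):
        Xc = X[y == c]
        n_c = Xc.shape[0]
        between += n_c * (Xc.mean(axis=0) - mu) ** 2
        within += n_c * Xc.var(axis=0)
    # A tiny constant guards against division by zero for constant features.
    return between / (within + 1e-12)


# Feature 0 separates the two classes; feature 1 has equal class means.
X = np.array([[0.0, 5.0], [0.1, 1.0], [1.0, 4.0], [1.1, 2.0]])
y = np.array([0, 0, 1, 1])
scores = fisher_score(X, y)
print(scores)
```

Because each feature is scored in isolation, two identical copies of a discriminative feature would receive identical high scores, which is exactly the redundancy problem discussed next.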

Recently, some remarkable neural network-based and fuzzy logic-based feature selection works have been presented, which have received extensive attention due to their excellent feature selection performance [26,27,28]. For example, Verikas and Bacauskiene [26] proposed a feedforward neural network-based approach to find salient features and remove those yielding the least accurate classifications. Arefnezhad et al. [27] assigned high scores to the features most related to the drowsiness level via an adaptive neuro-fuzzy inference system, which was devised by combining filter and wrapper feature selection approaches. Cateni et al. [28] selected the most relevant features for better binary classification by combining several filter approaches through a fuzzy inference system. Generally speaking, these studies serve as excellent examples of picking out target-specific features, but they still overlook the underlying negative effects of noisy features.

A salient but redundant feature provides little valuable learning information if selected. Although this issue is ignored by most feature selection approaches, it has received attention from some information-based ones. Among them, the family based on mutual information is regarded as the mainstream approach to redundancy removal. The classical mutual information [9] and its variants (e.g., conditional mutual information) [5,29] can effectively locate redundant features and remove them via a greedy search. Nevertheless, an inevitable problem is that the performance of these approaches heavily depends on the accuracy of their probability estimation. This problem becomes more severe in high-dimensional spaces.
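To make the relevance-versus-redundancy trade-off concrete, the sketch below implements a greedy selection on discrete features in the spirit of the mutual information-based criteria cited above: each step picks the feature that maximizes its mutual information with the label minus `beta` times its summed mutual information with the already selected features. The plug-in (empirical frequency) estimator, the `beta` weighting, and the function names are assumptions for illustration; the estimator is precisely the kind of probability estimation whose accuracy degrades in high-dimensional spaces.

```python
import numpy as np


def mutual_info(a: np.ndarray, b: np.ndarray) -> float:
    """Plug-in mutual information (in nats) between two discrete 1-D arrays."""
    _, a_idx = np.unique(a, return_inverse=True)
    _, b_idx = np.unique(b, return_inverse=True)
    joint = np.zeros((a_idx.max() + 1, b_idx.max() + 1))
    np.add.at(joint, (a_idx, b_idx), 1.0)    # contingency table
    joint /= joint.sum()                      # joint probabilities
    outer = joint.sum(axis=1, keepdims=True) @ joint.sum(axis=0, keepdims=True)
    nz = joint > 0
    return float(np.sum(joint[nz] * np.log(joint[nz] / outer[nz])))


def greedy_mi_selection(X: np.ndarray, y: np.ndarray, k: int, beta: float = 1.0) -> list:
    """Greedily pick k discrete features by relevance minus beta * redundancy."""
    d = X.shape[1]
    relevance = [mutual_info(X[:, j], y) for j in range(d)]
    selected, remaining = [], list(range(d))
    while len(selected) < k and remaining:
        def score(j):
            redundancy = sum(mutual_info(X[:, j], X[:, s]) for s in selected)
            return relevance[j] - beta * redundancy
        best = max(remaining, key=score)      # ties keep the lower index
        selected.append(best)
        remaining.remove(best)
    return selected


y = np.array([0, 0, 0, 0, 1, 1, 1, 1])
f0 = np.array([0, 0, 0, 1, 1, 1, 1, 1])      # strongly relevant
X = np.column_stack([f0, f0, [0, 0, 1, 1, 0, 1, 1, 1]])  # column 1 duplicates column 0
print(greedy_mi_selection(X, y, k=2))
```

In this toy example, the duplicate column 1 is as relevant as column 0 but is skipped in the second step because its redundancy with column 0 outweighs its relevance, so the weaker but less redundant column 2 is chosen instead.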

Multi-label feature selection approaches can be roughly categorized into two families. The first family directly divides multi-label learning into multiple subproblems and tackles them with single-label feature evaluation metrics [4]. For instance, ReliefF has been tailored for multi-label learning by dividing its estimations of nearest misses and hits into eight subproblems [30]. In addition, some single-label feature evaluation strategies, such as class separability and linear discriminant analysis, have been reformulated into multi-label versions by applying them to each subgroup [31,32]. A major drawback of this subproblem division strategy is that it ignores label correlations, which encode the underlying label structures for recognition and play critical roles in multi-label learning.

On the other hand, the second family of multi-label feature selection better addresses this issue because it incorporates label correlations into model construction. A common strategy of this family is to evaluate instance-label pairs via specific label ranking metrics and select the features by minimizing loss functions [33,34,35,36]. Since real-world label relations may go beyond pairwise ones, some high-order correlation approaches have been proposed to model complicated label structures. A feasible solution is to build a common space shared among various labels [16,33,37], which typically incurs high costs and complex computation. It is noteworthy that, in contrast to single-label feature selection approaches, multi-label ones rarely address the issue of noisy feature elimination. The few approaches specifically designed to rule out irrelevant features are based on sparse regularization [38]. These approaches neglect the negative effects of redundant features and are not competent in completely removing noisy features.

To comprehensively address the above issues, we introduce in Section 3 a novel multi-label feature selection model that can effectively filter out both kinds of noisy features (irrelevant and redundant ones) and select the remarkable ones. The proposed model adopts a statistical metric to measure target-related feature redundancy and dispenses with any probability estimation. Furthermore, this model extracts label correlations and keeps feature-label space consistency to guide feature selection, which helps exclude irrelevant features and lets remarkable features dominate the selection.

3. The Methodology: ELC

3.1. Model Description

In this paper, the data set consists of an instance matrix, whose instances are characterized by d features in the feature set, and a target label matrix, in which each entry marks the corresponding label of an instance as positive or negative.

Then, we formulate the multi-label feature selection by embedding label correlation (ELC) as follows:

where the label correlation matrix is calculated over the initial label matrix, k is the number of selected features, and the (i, j)-th entry of the feature selection matrix indicates the importance (also known as weight) of the i-th feature to the j-th label.

Equation (1) is the feature evaluation function of ELC, which is essentially a Frobenius-norm quadratic model. The label correlation matrix describes the label correlations extracted from the label space, and each of its elements describes the relation between two target labels. These correlations can be easily obtained with quantitative measurements such as the RBF kernel function and the Pearson correlation coefficient. Its counterpart in Equation (1) describes the label correlations extracted from the reduced feature space, which differ from those of the label space because of the disturbance of noisy features. As described in Section 1, noisy features may distort the structure of the feature space and provide negative learning information. Considering this, ELC evaluates features by their ability to preserve label correlations in the feature space, that is, to keep feature-label space consistency. The features that minimize the discrepancy between the two correlation matrices will be scored highly by ELC. In this way, ELC is expected to construct an optimal feature subspace from which different kinds of noisy features are eliminated.
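The label-space correlation matrix can be computed from the label matrix alone. The sketch below shows the two measurements named above, Pearson correlation and an RBF kernel between label columns. The bandwidth `sigma` and the function name `label_correlation` are hypothetical, and the exact kernel parameters of Appendix B are not reproduced.

```python
import numpy as np


def label_correlation(Y, method="rbf", sigma=1.0):
    """Return an l x l label correlation matrix from an n x l label matrix Y.

    method="pearson": Pearson correlation between label columns.
    method="rbf": exp(-||y_a - y_b||^2 / (2 * sigma^2)) between label columns;
    sigma is a free (hypothetical) bandwidth parameter here.
    """
    Y = np.asarray(Y, dtype=float)
    if method == "pearson":
        # Undefined (nan) for a label column that is constant over all instances.
        return np.corrcoef(Y, rowvar=False)
    if method == "rbf":
        sq = np.sum(Y ** 2, axis=0)
        dist2 = sq[:, None] + sq[None, :] - 2.0 * (Y.T @ Y)
        dist2 = np.maximum(dist2, 0.0)   # clip tiny negative round-off
        return np.exp(-dist2 / (2.0 * sigma ** 2))
    raise ValueError(f"unknown method: {method}")


# Four instances, three labels; label 2 is the complement of label 0.
Y = np.array([[1, 1, 0],
              [1, 1, 0],
              [0, 0, 1],
              [0, 1, 1]])
C_rbf = label_correlation(Y, "rbf")
C_pearson = label_correlation(Y, "pearson")
```

Both variants yield a symmetric l x l matrix; the RBF version is always positive with ones on the diagonal, whereas Pearson correlation can be negative, as for the complementary labels 0 and 2 here.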

Under the norm constraint in Equation (1), only k rows of the feature selection matrix are nonzero. These rows correspond to the k features selected for the l target labels, where 1 indicates selected and 0 indicates not selected. Note that k generally differs from l. That is, more than one feature may be selected to discriminate the same label, or a single feature may be discriminative for more than one label. In the former case, multiple features jointly recognize one target, whereas in the latter case one feature handles multiple recognition subtasks.
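The row-sparse reading of the feature selection matrix can be made concrete: a feature is selected exactly when its row is nonzero, and one row may carry weights for several labels. The sketch below assumes the constraint is the row-sparsity (ℓ2,0) norm, which matches the statement that only k rows are nonzero; the helper names `l20_norm` and `selected_features` are hypothetical.

```python
import numpy as np


def l20_norm(W: np.ndarray, tol: float = 1e-12) -> int:
    """Number of nonzero rows of W, i.e., how many features are in use."""
    return int(np.sum(np.linalg.norm(W, axis=1) > tol))


def selected_features(W: np.ndarray, k: int) -> np.ndarray:
    """Sorted indices of the k rows (features) of W with the largest l2 norms."""
    row_norms = np.linalg.norm(W, axis=1)
    top = np.argsort(-row_norms, kind="stable")[:k]
    return np.sort(top)


# d = 5 features, l = 2 labels: feature 3 serves label 0 only,
# feature 1 serves both labels, and feature 4 serves label 1 only.
W = np.array([[0.0, 0.0],
              [0.5, 0.2],
              [0.0, 0.0],
              [1.0, 0.0],
              [0.0, 0.1]])
print(l20_norm(W), selected_features(W, 2))
```

Here three features are in use for two labels (k differs from l), and keeping the two strongest rows retains features 1 and 3.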

3.2. Property Analysis

The feature subset selected by ELC can be regarded as maximally maintaining feature-label space consistency; it is expected to consist of remarkable features and exclude noisy ones. In this subsection, we further analyze the properties of ELC and reveal its underlying characteristics.

Suppose that each feature has been standardized to have zero mean and unit length. Then, the following holds for Equation (1):

This is the objective of ELC. For more clearly illustrating its properties, let and . Then,

Three terms are involved in this equation. Clearly, represents the label correlation information extracted from the label space and is constant in the selection process. Thus, it is easy to conclude that is equivalent to and . Then, two properties of ELC are given as follows:

Property 1.

Label correlation information can be maximally embedded in feature selection by ELC.

Proof.

,

where is the correlation degree of the labels and , and indicates the selected features. Then, the following holds: . can be regarded as the correlation information of pairwise labels. Therefore, ELC can maximally embed label correlations in its feature selection process. □

Label correlation information is important for multi-label learning. For example, images of the sea may share common labels such as ship, fish, and seagull, and the close correlations among these labels may help us distinguish the image category and find shared features. Existing multi-label learning methods are categorized according to the order of label correlations they consider [39], and their correlation modeling capabilities directly affect their discriminative performance. As demonstrated in Property 1, ELC can measure pairwise label correlations. Furthermore, it can preserve this correlation information in its constructed feature subspace, which is crucial for ELC to eliminate noisy features. In other words, ELC prefers the features that maximally preserve label correlation information. This strategy helps ELC build a low-dimensional feature space that is consistent with the label space and also suitable for multi-label learning.

In addition to the above property with respect to maximally embedding label correlations, another important property of ELC is illustrated as follows:

Property 2.

Feature redundancy can be minimized by ELC.

Proof.

where is the standard deviation of the label , and and are the Pearson correlation coefficients of with the features and , respectively. Then, we have

Clearly, n and are constant in the feature selection process. can be regarded as the shared label dependency of the features and , that is, the feature redundancy for recognizing the target . Therefore, ELC can minimize feature redundancy in its feature selection process. □
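The label-specific redundancy in Property 2 builds on products of feature-label Pearson correlations. The sketch below computes, for every feature pair, the sum over labels of rho(y, f_i) * rho(y, f_j). It drops the constant factors (n and the label standard deviation) mentioned in the proof, and the unweighted sum over labels as well as the function names are assumptions rather than the exact expression of Property 2.

```python
import numpy as np


def pearson_with_labels(X: np.ndarray, Y: np.ndarray) -> np.ndarray:
    """d x l matrix of Pearson correlations between each feature and each label."""
    Xc = X - X.mean(axis=0)
    Yc = Y - Y.mean(axis=0)
    Xn = Xc / np.linalg.norm(Xc, axis=0)     # unit-length, zero-mean columns
    Yn = Yc / np.linalg.norm(Yc, axis=0)
    return Xn.T @ Yn


def label_specific_redundancy(X: np.ndarray, Y: np.ndarray) -> np.ndarray:
    """d x d matrix whose (i, j) entry sums rho(y, f_i) * rho(y, f_j) over labels."""
    R = pearson_with_labels(X, Y)
    return R @ R.T


# Feature 1 is a rescaled copy of feature 0; feature 2 is uncorrelated with the label.
X = np.array([[1.0, 2.0, 1.0],
              [2.0, 4.0, -1.0],
              [3.0, 6.0, -1.0],
              [4.0, 8.0, 1.0]])
Y = np.array([[0.0], [0.0], [1.0], [1.0]])
Rd = label_specific_redundancy(X, Y)
```

Features 0 and 1 share a large label dependency (0.8), whereas feature 2 shares none with either, so only the first pair is penalized as label-specific redundancy.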

Note that the redundancy term in Property 2 is obtained by introducing label correlation information, which yields a novel estimation of label-specific feature redundancy. The vast majority of existing feature selection approaches (both single-label and multi-label ones) adopt a univariate measurement criterion, so only the top-k individually ranked features prevail. This strategy greatly increases the redundant recognition information shared between features. For example, if we select only genes that are all discriminative for type 1 diabetes, we probably cannot give an accurate diagnosis, since these features may carry little information about other types of diabetes. This is why we need to reduce recognition redundancy and enrich recognition information. Some approaches are able to reduce feature redundancy, but they do not focus on label-specific redundancy. For example, SPFS [20] reduces a feature redundancy term that includes additional information irrelevant to recognition, which makes it inappropriate for this purpose. In contrast, ELC removes label-specific feature redundancy and is thus better suited to eliminating noisy features in multi-label learning.

As discussed above, ELC possesses two properties, i.e., it maximally preserves label correlation information and minimizes label-specific feature redundancy. These characteristics account for the superior ability of ELC to eliminate noisy features and pick out remarkable ones.

4. Multi-Task Optimization for ELC

Equation (1) describes an integer programming problem, which is NP-hard and complicated to solve. Moreover, the norm constraint in Equation (1) is non-smooth, which leads to a slow convergence rate. In this section, we devise an efficient framework to address this problem by applying sparse multi-task learning within the proximal alternating direction method (PADM) framework [40].

Suppose the spectral decomposition of the correlation matrix can be denoted as

where and are respectively the eigenvector and eigenvalue matrices of . Then, Equation (1) can be reformulated as

where , t is a hyperparameter to constrain to a convex solution, is a feature indicator vector that reflects whether the corresponding features are selected or not (1 for selected and 0 for otherwise), and is the vector with all ones.
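One standard way to use a spectral decomposition of a positive semidefinite correlation matrix, assumed here rather than taken from Equation (2), is to factor it as B B^T with B = U Λ^{1/2}, where U and Λ are the eigenvector and eigenvalue matrices. This turns a quadratic matching objective into a regression target with one column per eigen-direction. The function name is hypothetical.

```python
import numpy as np


def correlation_factor(C: np.ndarray) -> np.ndarray:
    """Return B with B @ B.T == C for a symmetric positive semidefinite C."""
    eigvals, U = np.linalg.eigh(C)          # C = U @ diag(eigvals) @ U.T
    eigvals = np.clip(eigvals, 0.0, None)   # remove tiny negative round-off
    return U * np.sqrt(eigvals)             # scale column j of U by sqrt(eigvals[j])


C = np.array([[1.0, 0.5],
              [0.5, 1.0]])
B = correlation_factor(C)
```

Clipping negative eigenvalues to zero keeps the square root real when round-off makes a positive semidefinite matrix look slightly indefinite.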

On the basis of Equation (2), ELC is reformulated as a multivariate regression problem, which allows multi-task learning techniques [41] to be applied. Multi-task learning aims to learn a common set of features shared by multiple related tasks and handles various sparse learning formulations well, including the optimization problem in Equation (1). Based on multi-task learning, we then obtain the following equivalent form of ELC:

where , and is the regularization parameter. Clearly, we can apply the augmented Lagrangian method to solve this problem. Then, Equation (3) is further reformulated as

The Lagrangian function can be defined as

where is the Lagrangian multiplier, and is the penalty parameter.

Equation (5) involves four variables, that is, the auxiliary variable, the feature weight matrix, the feature indicator vector, and the Lagrangian multiplier. Optimizing all four variables simultaneously is impractical. Accordingly, the Lagrangian multiplier is temporarily fixed for simplicity in the following analysis. Then, minimizing the Lagrangian function is equivalent to solving the following two subproblems:

As to , the following holds:

where and are the i-th row vectors of and , respectively. Then, we reformulate this subproblem into its closed form [41] as

Conducting gradient descent on Equation (7) yields the following optimal solution:

Then, the optimal in iteration can be denoted as
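In ℓ2,1-based multi-task feature learning such as [41], closed-form updates of this kind commonly reduce to row-wise group soft-thresholding, that is, the proximal operator of the ℓ2,1 norm. The sketch below assumes the update takes this standard form; it is not a transcription of the equations above, and the function name is hypothetical.

```python
import numpy as np


def prox_l21(V: np.ndarray, tau: float) -> np.ndarray:
    """Proximal operator of tau * ||.||_{2,1}: shrink each row of V toward zero.

    Row i becomes max(0, 1 - tau / ||v_i||) * v_i.
    """
    norms = np.linalg.norm(V, axis=1, keepdims=True)
    scale = np.maximum(0.0, 1.0 - tau / np.maximum(norms, 1e-12))
    return scale * V


V = np.array([[3.0, 4.0],
              [0.3, 0.4],
              [0.0, 0.0]])
out = prox_l21(V, 1.0)
```

Rows whose ℓ2 norm falls below `tau` are zeroed as a whole, so a feature is dropped for all labels at once; this is what produces row-sparse feature weight matrices.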

In terms of , we let . The dual problem of is

Since solving for both variables simultaneously is still difficult, we first fix to optimize . Then, the solution of can be obtained as

where is the identity matrix. The structure of is generally not circulant, so computing Equation (11) is expensive [42]. Considering this, an approximate term is added to as follows:

where , and is the optimal value of in iteration . Then, the solution of is

The detailed derivation can be found in Appendix A.

Similarly, we can easily obtain the optimal by fixing . In this case, Equation (10) is equivalent to the following minimization problem:

Clearly, the top-k features that minimize this objective can be regarded as the remarkable ones, and their corresponding entries in the feature indicator vector are set to 1.
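The indicator update described above amounts to a top-k selection over per-feature scores. A minimal sketch, assuming each feature already has a scalar score to be minimized; the scores and the function name are hypothetical.

```python
import numpy as np


def indicator_from_scores(scores, k: int) -> np.ndarray:
    """0/1 indicator vector marking the k features with the smallest scores."""
    scores = np.asarray(scores, dtype=float)
    s = np.zeros(scores.size, dtype=int)
    s[np.argsort(scores, kind="stable")[:k]] = 1   # 1 = selected, 0 = otherwise
    return s


s = indicator_from_scores([0.4, 0.1, 0.9, 0.2], k=2)
print(s)
```

A stable sort makes ties resolve toward the lower feature index, so the indicator is deterministic.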

Note that the Lagrangian multiplier is fixed throughout the above analysis, mainly to simplify the solution process. We further tackle this problem in the popular PADM framework, as illustrated in Algorithm 1. In this framework, the Lagrangian multiplier can be updated as

Algorithm 1 ELC

ELC in Algorithm 1 is implemented in the PADM framework, a fast alternating variant of the well-known alternating direction method (ADM). PADM is effective and efficient in minimizing augmented Lagrangian functions and is able to converge to a solution from any starting point for any [40].
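As a generic illustration of the alternating scheme, and not a reconstruction of Algorithm 1 or of PADM itself, the sketch below runs standard scaled-form ADMM on an ℓ2,1-regularized multi-task least-squares problem, min_W 0.5*||XW - B||_F^2 + lam*||W||_{2,1}, the kind of sparse multi-task regression this section builds on. The splitting W = Z, the parameter values (`rho`, `tol`, `max_iter`), and the function names are assumptions; it also omits the approximate term that the text adds to one subproblem.

```python
import numpy as np


def prox_l21(V: np.ndarray, tau: float) -> np.ndarray:
    """Row-wise shrinkage: proximal operator of tau * ||V||_{2,1}."""
    norms = np.linalg.norm(V, axis=1, keepdims=True)
    return np.maximum(0.0, 1.0 - tau / np.maximum(norms, 1e-12)) * V


def admm_l21_regression(X, B, lam, rho=10.0, max_iter=1000, tol=1e-8):
    """Scaled-form ADMM for min_W 0.5*||X W - B||_F^2 + lam*||W||_{2,1}.

    The splitting W = Z puts the smooth loss on W and the l2,1 penalty on Z.
    """
    d = X.shape[1]
    W = np.zeros((d, B.shape[1]))
    Z = np.zeros_like(W)
    U = np.zeros_like(W)                     # scaled multiplier (dual / rho)
    # The W-step system matrix never changes, so factor it once.
    L = np.linalg.cholesky(X.T @ X + rho * np.eye(d))
    XtB = X.T @ B
    for _ in range(max_iter):
        rhs = XtB + rho * (Z - U)
        W = np.linalg.solve(L.T, np.linalg.solve(L, rhs))  # W-step (ridge-like solve)
        Z_old = Z
        Z = prox_l21(W + U, lam / rho)                     # Z-step (group shrinkage)
        U = U + W - Z                                      # multiplier update
        if np.linalg.norm(W - Z) < tol and rho * np.linalg.norm(Z - Z_old) < tol:
            break
    return Z


# Synthetic check: only features 0 and 2 generate the three targets.
rng = np.random.default_rng(0)
X = rng.standard_normal((50, 6))
W_true = np.zeros((6, 3))
W_true[0] = [1.0, -2.0, 0.5]
W_true[2] = [0.5, 1.0, 1.0]
B = X @ W_true
Z = admm_l21_regression(X, B, lam=0.1)
row_norms = np.linalg.norm(Z, axis=1)
print(np.round(row_norms, 3))
```

Factoring the W-step matrix once is the usual design choice when the penalty parameter is fixed; a scheme that changes the penalty parameter between iterations, as Appendix B describes for ELC, would need to refactor or use an approximate step instead.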

In terms of the complexity of ELC, it takes only time to find k remarkable features from the d candidates. Thus, the time consumption for line 3 is . The cost of the while loop in Algorithm 1 mainly lies in lines 6 and 7, which is . As this iteration process is repeated t times, its total cost is . Suppose . Then, the total complexity of ELC is approximately , where d, n, l, k, and t are the numbers of features, instances, labels, selected features, and iterations for convergence, respectively.

5. Experimental Evaluation

Fourteen multi-label data sets from the Mulan library (http://mulan.sourceforge.net/datasets-mlc.html) serve as the benchmarks in this section; they are listed in Table 1. We compare ELC (the source code is available at https://github.com/wangjuncs/ELC) with the following state-of-the-art multi-label feature selection methods:

- MIFS (multi-label informed feature selection) [33]: a label correlation-based multi-label feature selection approach, which maps label information into a low-dimensional subspace and captures the correlations among multiple labels;

- CMFS (correlated and multi-label feature selection) [35]: a feature selection approach based on non-negative matrix factorization, which exploits the label correlation information in features, labels, and instances to select the relevant features and remove the noisy ones;

- LLSF (learning label-specific features) [36]: a unified multi-label learning framework for both feature selection and classification, which models high-order label correlations to select label-specific features.

More detailed experimental configurations can be found in Appendix B.

5.1. Example 1: Classification Performance

The average classification performance of each feature selection approach is recorded in Table 2, and pairwise t-tests at the 5% significance level were conducted to assess statistical significance. In addition to the traditional precision and AUC metrics, Hamming loss penalizes incorrect predictions of each instance on each target label, ranking loss penalizes label pairs that are misordered, and one-error penalizes instances whose top-ranked predicted label is not in the ground-truth label set. Together, the five metrics evaluate multi-label classification performance from different aspects.

Table 2. Average multi-label classification performance (mean ± std.): the best results and those not significantly worse than them are highlighted in bold (pairwise t-test at the 5% significance level).

A single metric is insufficient to illustrate the general classification performance on a data set. For example, the overall performance of the ML-kNN classifier [43] on birds is worse than that on enron under the precision metric, whereas it is better on birds than on enron under the AUC metric. Therefore, we used five metrics to compare the performance of the compared approaches. As shown in Table 2, ELC outperforms MIFS, CMFS, and LLSF under various metrics. This superiority can be attributed to two reasons: ELC effectively eliminates noisy features from the candidate feature subsets, and it maximally embeds label correlation information into its selection process. The former rules out selection disturbance in the feature space, and the latter ensures proper guiding information extracted from the label space. By seamlessly fusing these two aspects, ELC is able to find discriminative features for downstream learning tasks. This point will be further validated in Section 5.2 and Section 5.3.
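The three label-based metrics described at the start of this subsection can be computed as follows. This sketch uses their standard definitions (ties between a relevant and an irrelevant label count as ranking errors, and instances with all or no relevant labels are skipped in ranking loss); it is not the evaluation code behind Table 2, and the function names and toy data are hypothetical.

```python
import numpy as np


def hamming_loss(Y_true: np.ndarray, Y_pred: np.ndarray) -> float:
    """Fraction of instance-label pairs that are predicted incorrectly."""
    return float(np.mean(Y_true != Y_pred))


def one_error(Y_true: np.ndarray, scores: np.ndarray) -> float:
    """Fraction of instances whose top-scored label is not a true label."""
    top = np.argmax(scores, axis=1)
    return float(np.mean(Y_true[np.arange(len(top)), top] == 0))


def ranking_loss(Y_true: np.ndarray, scores: np.ndarray) -> float:
    """Average fraction of (relevant, irrelevant) label pairs ranked wrongly."""
    losses = []
    for y, s in zip(Y_true, scores):
        pos, neg = s[y == 1], s[y == 0]
        if pos.size == 0 or neg.size == 0:
            continue  # undefined when an instance has all or no relevant labels
        wrong = np.sum(pos[:, None] <= neg[None, :])  # ties count as errors
        losses.append(wrong / (pos.size * neg.size))
    return float(np.mean(losses)) if losses else 0.0


Y_true = np.array([[1, 0, 1],
                   [0, 1, 0]])
scores = np.array([[0.9, 0.2, 0.4],
                   [0.6, 0.3, 0.1]])
Y_pred = (scores >= 0.5).astype(int)   # hypothetical 0.5 decision threshold
print(hamming_loss(Y_true, Y_pred), one_error(Y_true, scores), ranking_loss(Y_true, scores))
```

Hamming loss depends on thresholded predictions, whereas one-error and ranking loss depend only on the score ordering, which is one reason different metrics can rank the same classifier differently across data sets.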

5.2. Example 2: Eliminating Noisy Features

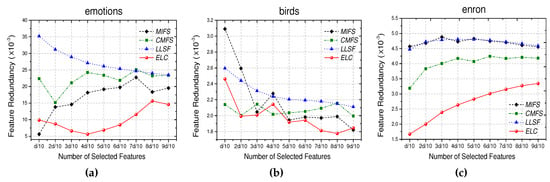

In this section, we evaluate the performances of the compared approaches in eliminating noisy features. We take emotions, birds, and enron as the benchmarks, and measure the residual feature redundancy in the selected feature subset as follows:

where and are the Pearson correlation coefficients of the features and with the target label , and and l are the numbers of selected features and labels, respectively. When this measure reaches its maximum value, the selected subset contains the most redundant information, which indicates that the selection approach is poor at removing noisy features.

The feature redundancy of the features selected by each approach is shown in Figure 1 for different numbers of selected features, where d is the total number of original features. The results show that ELC is superior in reducing feature redundancy. In other words, ELC can effectively remove redundant features in its multi-label feature selection process, which is one of the crucial factors behind its excellent discriminative ability. It should be pointed out that, in contrast to the single-label case, eliminating noisy features has not received sufficient attention from existing multi-label feature selection approaches. Since noisy features hinder high selection performance not only in single-label learning but also in multi-label learning, we devised ELC to tackle this problem comprehensively. Moreover, the feature redundancy reduced by most redundancy elimination-based approaches is not directly relevant to the target labels. In contrast, ELC quantitatively reduces target-relevant redundancy without any prior probability knowledge, which contributes to its superiority in multi-label feature selection.

5.3. Example 3: Embedding Label Correlations

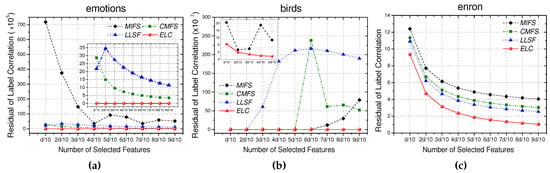

Label correlation information is important for multi-label learning. In the following experiments, we estimate the preserved label correlation information of the selected feature subset as follows:

where the reduced instance matrix contains the instances characterized by the selected features, and the label correlation matrix is computed from the original data. Intuitively, Equation (17) measures the residual discrepancy of label correlation information between the original and reduced feature spaces. A lower value indicates that more information is preserved; in other words, more label correlation information has been embedded in the feature selection process.

Similarly to the configuration in Section 5.2, we take emotions, birds, and enron as the benchmarks and record this measure for the features selected by each approach. As shown in Figure 2, ELC is better at preserving label correlation information than the other multi-label feature selection approaches. Indeed, most existing multi-label feature selection approaches take label correlation information into consideration to some extent. In contrast to these approaches, ELC quantitatively measures this correlation information and maximally embeds it into the feature selection process. This characteristic, which has been proved in Property 1, is further confirmed by the experimental results in this section.

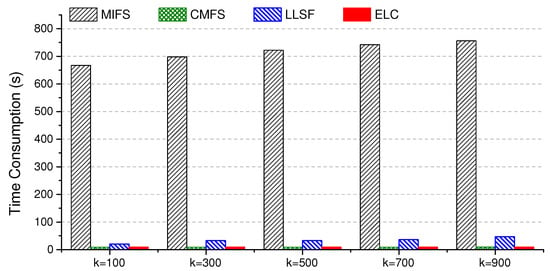

5.4. Example 4: Time Consumption

In this subsection, we compare the approaches in terms of feature selection efficiency. The reported time covers only feature selection and excludes the classification cost. All tests were implemented in Matlab on an Intel Core i7-4790 CPU (@3.6GHz) with 32GB memory (Intel Corp., Santa Clara, CA, USA). We selected different numbers of features on the enron data set and recorded the time consumption of each compared approach. As illustrated in Figure 3, ELC and CMFS converge with comparable efficiency, whereas MIFS is the most time-consuming, which may be mainly attributed to its label clustering process.

6. Conclusions

A novel multi-label feature selection method called ELC is proposed in this paper. ELC embeds label correlation information in the reduced feature subspace to eliminate noisy features. In this way, irrelevant and redundant features can be expected to be removed, and a discriminative feature subset is constructed for downstream learning tasks. These advantages help ELC yield good feature selection performance on a broad range of multi-label data sets under various evaluation metrics.

As for optimizing ELC, it could also be fed to gradient descent frameworks, such as Adam with a self-adaptive learning rate [44], to efficiently obtain its optimal values. Another interesting direction is to consider noisy labels, which would negatively affect the estimation of label correlations. According to our pilot study, noisy labels may distort the label space and provide inaccurate guiding information for feature selection. How to eliminate noisy labels will be explored in our future work.

Author Contributions

Each author greatly contributed to the preparation of this manuscript. J.W. (Jun Wang) and J.W. (Jinmao Wei) wrote the paper; Y.X. and H.X. designed and performed the experiments; Z.S. and Z.Y. devised the optimization algorithms. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (number 61772288), the Natural Science Foundation of Tianjin City (number 18JCZDJC30900), the Ministry of Education of Humanities and Social Science Project (number 16YJC790123), the National Natural Science Foundation of Shandong Province (number ZR2019MA049), and the Cooperative Education Project of the Ministry of Education of China (number 201902199006).

Acknowledgments

The authors are very grateful to the anonymous reviewers and editor for their helpful and constructive comments and suggestions.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

After adding an approximate term to and reformulating it to , we take the derivative of with respect to as follows:

To derive the optimal solution of , we set equal to 0 and obtain:

Then, the optimal solution of in the iteration can be represented as

Appendix B. Experimental Configuration

The correlation (or similarity) matrices involved in the experiments are all calculated based on the RBF kernel function. Specifically, the label correlation matrix in ELC is defined as , where . The instance similarity matrix in SPFS and CMFS is calculated as , where . The affinity graph in MIFS is constructed as

where is the p-nearest neighbor of instance .

SPFS is implemented via the sequential forward selection (SFS) strategy. For a fair comparison, we tune the regularization parameter for all approaches via a grid search from . For ELC, the parameter is fixed to , and is set to the spectral radius of in the initial state and updated as in the t-th iteration, where is the i-th row vector of and . Convergence is reached when either of the following two conditions is satisfied: (1) ; and (2) .

A multi-label k-nearest neighbor (ML-kNN) classifier [43] is built on the features selected by each compared approach, when and d is the total number of features. All numerical features are normalized to zero mean and unit variance, and the top-ranked features selected by each compared approach are used to construct the ML-kNN classifiers, whose classification performance is then compared. Five-fold cross-validation is conducted, and we report the average ML-kNN performance under five metrics, i.e., precision, AUC, Hamming loss, ranking loss, and one-error [39].
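The evaluation protocol can be sketched in Python as follows. This is an illustration under stated assumptions, not the authors' evaluation code: we assume "precision" refers to the example-based average precision usually reported with ML-kNN, AUC is omitted, and the ML-kNN classifier itself is not reproduced. `scores` is an n × c matrix of real-valued label confidences (for example, ML-kNN posteriors), `Y_pred` is its thresholded 0/1 version, and all function names are hypothetical.

```python
import numpy as np


def hamming_loss(Y_true, Y_pred):
    """Fraction of instance-label pairs that are predicted incorrectly."""
    return float(np.mean(np.asarray(Y_true) != np.asarray(Y_pred)))


def one_error(Y_true, scores):
    """Fraction of instances whose top-scored label is not a relevant label."""
    Y_true, scores = np.asarray(Y_true), np.asarray(scores)
    top = np.argmax(scores, axis=1)
    return float(np.mean(Y_true[np.arange(len(top)), top] == 0))


def ranking_loss(Y_true, scores):
    """Mean fraction of (relevant, irrelevant) label pairs ordered wrongly.

    A pair counts as wrong when the relevant label does not score strictly
    higher. Instances whose labels are all relevant or all irrelevant are skipped.
    """
    losses = []
    for y, s in zip(np.asarray(Y_true), np.asarray(scores)):
        rel, irr = s[y == 1], s[y == 0]
        if rel.size and irr.size:
            losses.append(np.mean(rel[:, None] <= irr[None, :]))
    return float(np.mean(losses)) if losses else 0.0


def average_precision(Y_true, scores):
    """For each relevant label, the fraction of labels ranked at or above it
    that are relevant; averaged over relevant labels, then over instances."""
    precs = []
    for y, s in zip(np.asarray(Y_true), np.asarray(scores)):
        rel = np.flatnonzero(y == 1)
        if rel.size == 0:
            continue
        per_label = []
        for lab in rel:
            at_or_above = s >= s[lab]
            per_label.append(np.sum(at_or_above & (y == 1)) / np.sum(at_or_above))
        precs.append(np.mean(per_label))
    return float(np.mean(precs)) if precs else 0.0


def kfold_indices(n, n_splits=5, seed=0):
    """Shuffle 0..n-1 and split it into n_splits nearly equal test folds."""
    perm = np.random.default_rng(seed).permutation(n)
    return np.array_split(perm, n_splits)
```

In each of the five rounds, one fold serves as the test set and the other four as the training set; each metric is computed on the test fold and the five values are averaged. Lower is better for Hamming loss, ranking loss, and one-error, and higher is better for average precision. scikit-learn offers related functions (`hamming_loss`, `label_ranking_loss`, `label_ranking_average_precision_score`), although their handling of tied scores may differ from this sketch.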

References

- Tang, J.; Alelyani, S.; Liu, H. Feature selection for classification: A review. In Data Classification: Algorithms and Applications; CRC Press: Boca Raton, FL, USA, 2014.

- Wang, J.; Wei, J.; Yang, Z. Supervised feature selection by preserving class correlation. In Proceedings of the 25th ACM International Conference on Information and Knowledge Management, Indianapolis, IN, USA, 24–28 October 2016; pp. 1613–1622.

- Cai, D.; Zhang, C.; He, X. Unsupervised feature selection for multi-cluster data. In Proceedings of the 16th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Washington, DC, USA, 25–28 July 2010; pp. 333–342.

- Xu, Y.; Wang, J.; An, S.; Wei, J.; Ruan, J. Semi-supervised multi-label feature selection by preserving feature-label space consistency. In Proceedings of the 27th ACM International Conference on Information and Knowledge Management, Torino, Italy, 22–26 October 2018; pp. 783–792.

- Brown, G.; Pocock, A.; Zhao, M.; Luján, M. Conditional Likelihood Maximisation: A unifying framework for information theoretic feature selection. J. Mach. Learn. Res. 2012, 13, 27–66.

- Gu, Q.; Li, Z.; Han, J. Generalized fisher score for feature selection. In Proceedings of the Twenty-Seventh Conference on Uncertainty in Artificial Intelligence, Barcelona, Spain, 14–17 July 2011; pp. 266–273.

- He, X.; Cai, D.; Niyogi, P. Laplacian score for feature selection. In Proceedings of the 18th International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 5–8 December 2005; pp. 507–514.

- Lin, D.; Tang, X. Conditional Infomax Learning: An Integrated Framework for Feature Extraction and Fusion. In Proceedings of the Computer Vision—ECCV 2006, 9th European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; pp. 68–82.

- Peng, H.; Long, F.; Ding, C. Feature selection based on mutual information criteria of max-dependency, max-relevance, and min-redundancy. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1226–1238.

- Robnik-Šikonja, M.; Kononenko, I. Theoretical and empirical analysis of ReliefF and RReliefF. Mach. Learn. 2003, 53, 23–69.

- Guyon, I.; Elisseeff, A. An introduction to variable and feature selection. J. Mach. Learn. Res. 2003, 3, 1157–1182.

- Bermejo, P.; Gámez, J.A.; Puerta, J.M. Speeding up incremental wrapper feature subset selection with Naive Bayes classifier. Knowl.-Based Syst. 2014, 55, 140–147.

- Gütlein, M.; Frank, E.; Hall, M.; Karwath, A. Large-scale attribute selection using wrappers. In Proceedings of the 2009 IEEE Symposium on Computational Intelligence and Data Mining, CIDM 2009, Nashville, TN, USA, 30 March–2 April 2009; pp. 332–339.

- Xu, Y.; Wang, J.; Wei, J. To avoid the pitfall of missing labels in feature selection: A generative model gives the answer. In Proceedings of the AAAI Conference on Artificial Intelligence 2020, New York, NY, USA, 7–12 February 2020; pp. 6534–6541.

- Chen, W.; Yan, J.; Zhang, B.; Chen, Z.; Yang, Q. Document transformation for multi-label feature selection in text categorization. In Proceedings of the 2007 Seventh IEEE International Conference on Data Mining, Washington, DC, USA, 28–31 October 2007; pp. 451–456.

- Ma, Z.; Nie, F.; Yang, Y.; Uijlings, J.R.; Sebe, N. Web image annotation via subspace-sparsity collaborated feature selection. IEEE Trans. Multimedia 2012, 14, 1021–1030.

- Wang, X.; Li, G.Z. Multilabel learning via random label selection for protein subcellular multilocations prediction. IEEE/ACM Trans. Comput. Biol. Bioinform. 2013, 10, 436–446.

- Zhang, M.L.; Zhou, Z.H. A review on multi-label learning algorithms. IEEE Trans. Knowl. Data Eng. 2014, 26, 1819–1837.

- Rivolli, A.; Read, J.; Soares, C.; Pfahringer, B.; de Carvalho, A.C. An empirical analysis of binary transformation strategies and base algorithms for multi-label learning. Mach. Learn. 2020, 109, 1–55.

- Zhao, Z.; Wang, L.; Liu, H.; Ye, J. On similarity preserving feature selection. IEEE Trans. Knowl. Data Eng. 2013, 25, 619–632.

- Zhao, J.; Lu, K.; He, X. Locality sensitive semi-supervised feature selection. Neurocomputing 2008, 71, 1842–1849.

- Zhang, Y.; Zhou, Z.H. Multi-label dimensionality reduction via dependence maximization. ACM Trans. Knowl. Discovery Data 2010, 4, 1503–1505.

- Nie, F.; Xiang, S.; Jia, Y. Trace ratio criterion for feature selection. In Proceedings of the Twenty-Third AAAI Conference on Artificial Intelligence, AAAI 2008, Chicago, IL, USA, 13–17 July 2008; pp. 671–676.

- Zhao, Z.; Liu, H. Spectral feature selection for supervised and unsupervised learning. In Proceedings of the 24th International Conference on Machine Learning, ICML 2007, Corvallis, OR, USA, 20–24 June 2007; pp. 1151–1157.

- Zhao, Z.; Wang, L.; Liu, H. Efficient spectral feature selection with minimum redundancy. In Proceedings of the Twenty-Fourth AAAI Conference on Artificial Intelligence, Atlanta, GA, USA, 11–15 July 2010; pp. 673–678.

- Verikas, A.; Bacauskiene, M. Feature selection with neural networks. Pattern Recog. Lett. 2002, 23, 1323–1335.

- Arefnezhad, S.; Samiee, S.; Eichberger, A.; Nahvi, A. Driver drowsiness detection based on steering wheel data applying adaptive neuro-fuzzy feature selection. Sensors 2019, 19, 943.

- Cateni, S.; Colla, V.; Vannucci, M. A fuzzy system for combining filter features selection methods. Int. J. Fuzzy Syst. 2017, 19, 1168–1180.

- Wang, J.; Wei, J.M.; Yang, Z.; Wang, S.Q. Feature selection by maximizing independent classification information. IEEE Trans. Knowl. Data Eng. 2017, 29, 828–841.

- Kong, D.; Ding, C.; Huang, H.; Zhao, H. Multi-label ReliefF and F-statistic feature selections for image annotation. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–21 June 2012; pp. 2352–2359.

- Ji, S.; Ye, J. Linear dimensionality reduction for multi-label classification. In Proceedings of the 21st International Joint Conference on Artificial Intelligence, Pasadena, CA, USA, 11–17 July 2009; pp. 1077–1082.

- Wang, H.; Ding, C.; Huang, H. Multi-label linear discriminant analysis. In Proceedings of the 11th European Conference on Computer Vision, Heraklion, Crete, Greece, 5–11 September 2010; pp. 126–139.

- Jian, L.; Li, J.; Shu, K.; Liu, H. Multi-label informed feature selection. In Proceedings of the Twenty-Fifth International Joint Conference on Artificial Intelligence, New York, NY, USA, 9–15 July 2016; pp. 1627–1633.

- Huang, J.; Li, G.; Huang, Q.; Wu, X. Joint feature selection and classification for multilabel learning. IEEE Trans. Cybern. 2018, 48, 876–889.

- Braytee, A.; Liu, W.; Catchpoole, D.R.; Kennedy, P.J. Multi-label feature selection using correlation information. In Proceedings of the 2017 ACM on Conference on Information and Knowledge Management, Singapore, 6–10 November 2017; pp. 1649–1656.

- Huang, J.; Li, G.; Huang, Q.; Wu, X. Learning label-specific features and class-dependent labels for multi-label classification. IEEE Trans. Knowl. Data Eng. 2016, 28, 3309–3323.

- Ji, S.; Tang, L.; Yu, S.; Ye, J. Extracting shared subspace for multi-label classification. In Proceedings of the 14th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Las Vegas, NV, USA, 24–27 August 2008; pp. 381–389.

- Nie, F.; Huang, H.; Cai, X.; Ding, C.H. Efficient and robust feature selection via joint ℓ2,1-norms minimization. In Proceedings of the 24th Annual Conference on Neural Information Processing Systems 2010, Vancouver, BC, Canada, 6–9 December 2010; pp. 1813–1821.

- Zhang, M.L.; Wu, L. LIFT: Multi-label learning with label-specific features. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 107–119.

- Xiao, Y.H.; Song, H.N. An inexact alternating directions algorithm for constrained total variation regularized compressive sensing problems. J. Math Imaging Vision 2012, 44, 114–127.

- Gong, P.; Zhou, J.; Fan, W.; Ye, J. Efficient multi-task feature learning with calibration. In Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, New York, NY, USA, 10–13 August 2014; pp. 761–770.

- Horn, R.A.; Johnson, C.R. Matrix Analysis, 2nd ed.; Cambridge University Press: Cambridge, UK, 2012.

- Zhang, M.L.; Zhou, Z.H. ML-kNN: A lazy learning approach to multi-label learning. Pattern Recog. 2007, 40, 2038–2048.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, 7–9 May 2015.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).