Automatic Colorization of Anime Style Illustrations Using a Two-Stage Generator

Abstract

Featured Application

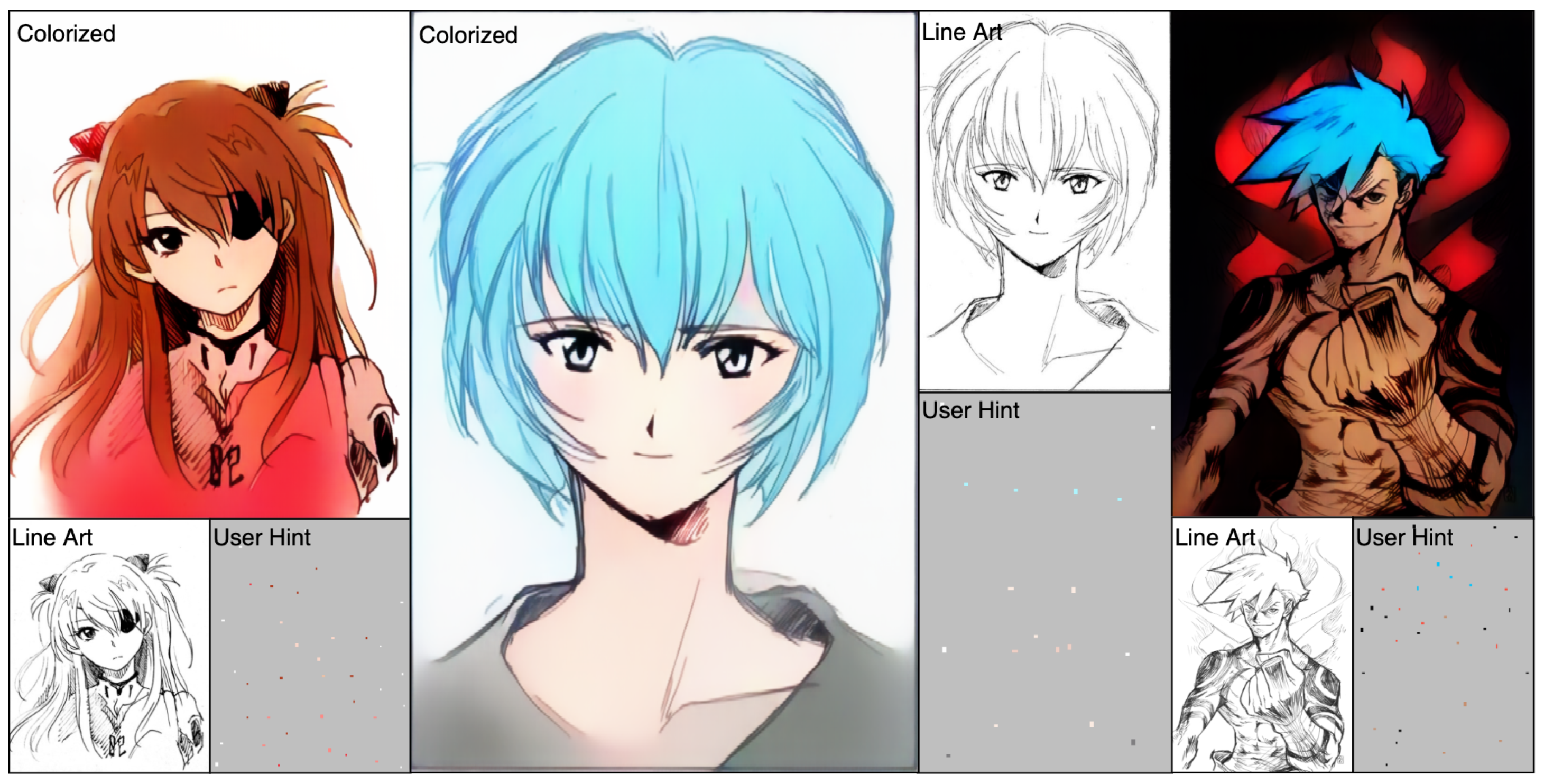

1. Introduction

- Two-stage generators that provide flexibility in enhancing the resolution of an image.

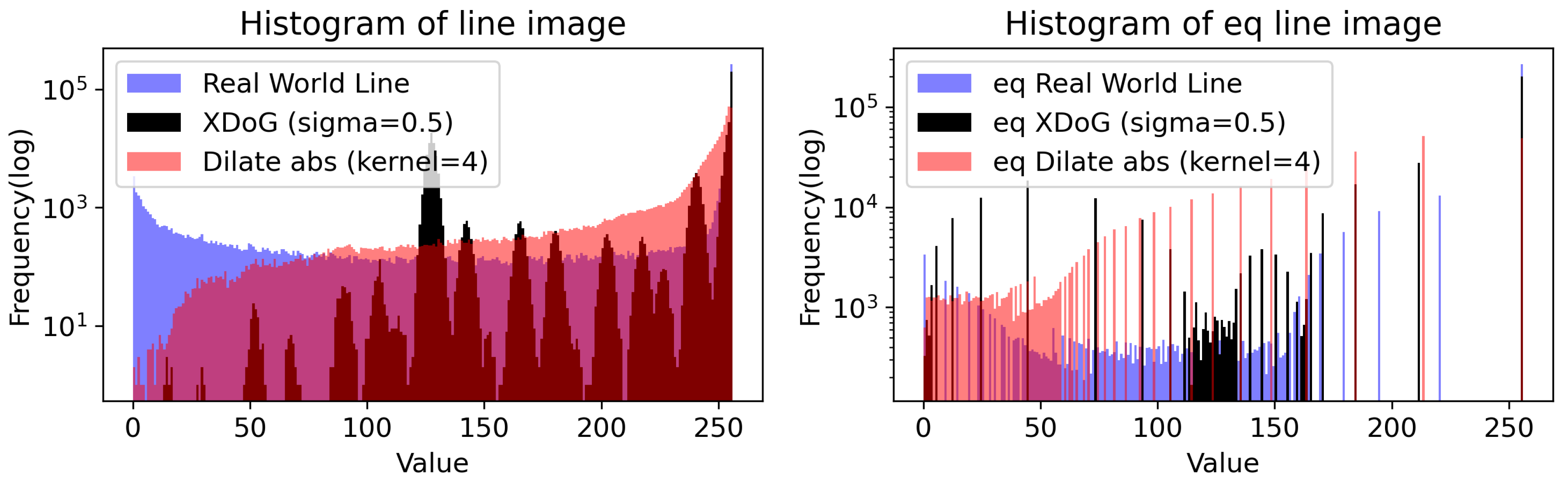

- Histogram equalization of the line-art, which generalizes the distribution of line styles and improves colorization performance.

- Data augmentation that randomly substitutes the grayscale-transformed image for the line-art, which improves generalization across line-art styles.

- Seven-segment hint pointing constraints for measuring the performance of line-art colorization.
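
The histogram equalization and grayscale substitution contributions listed above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names, the BT.601 luma weights, and the substitution probability `p_gray` are assumptions made for illustration, and 8-bit images are assumed throughout.

```python
import numpy as np


def equalize_histogram(gray: np.ndarray) -> np.ndarray:
    """Equalize an 8-bit single-channel image using its cumulative histogram."""
    hist = np.bincount(gray.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]  # CDF value at the darkest occupied level
    total = gray.size
    if total == cdf_min:  # constant image: nothing to spread out
        return gray.copy()
    # Classic remapping: stretch the CDF onto the full 0..255 range.
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255.0)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[gray]


def to_grayscale(rgb: np.ndarray) -> np.ndarray:
    """Convert an HxWx3 uint8 RGB image to uint8 luma (assumed BT.601 weights)."""
    weights = np.array([0.299, 0.587, 0.114])
    luma = rgb.astype(np.float64) @ weights
    return np.clip(np.round(luma), 0, 255).astype(np.uint8)


def pick_generator_input(line_art, color, rng, p_gray=0.5):
    """With probability p_gray (hypothetical value), return the grayscale
    version of the color image instead of the line-art."""
    return to_grayscale(color) if rng.random() < p_gray else line_art
```

In a training loop, `pick_generator_input(line_art, color, np.random.default_rng())` would be called once per sample; whether equalization is then applied to both kinds of input is left open here.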

2. Related Work

2.1. Generative Adversarial Networks

2.2. Fully Automatic Colorization

2.3. Style Transfer-Based Colorization

2.4. Colorization with Color Point Hinting

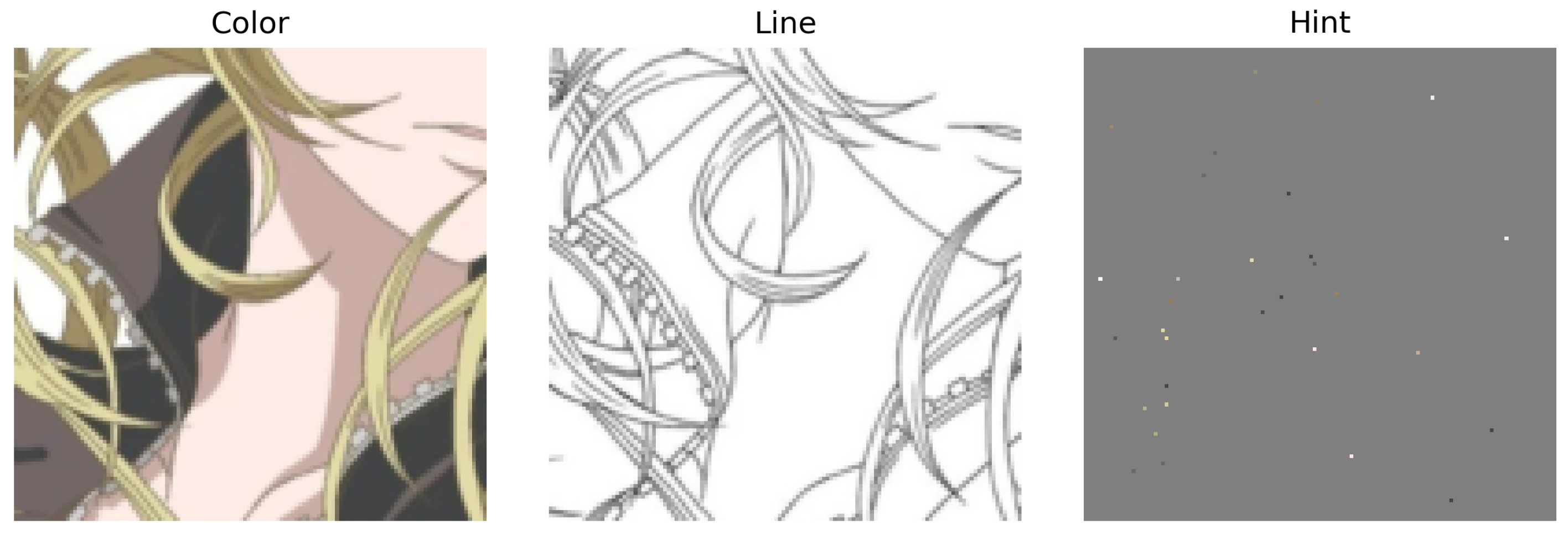

3. Structure of Proposed Method

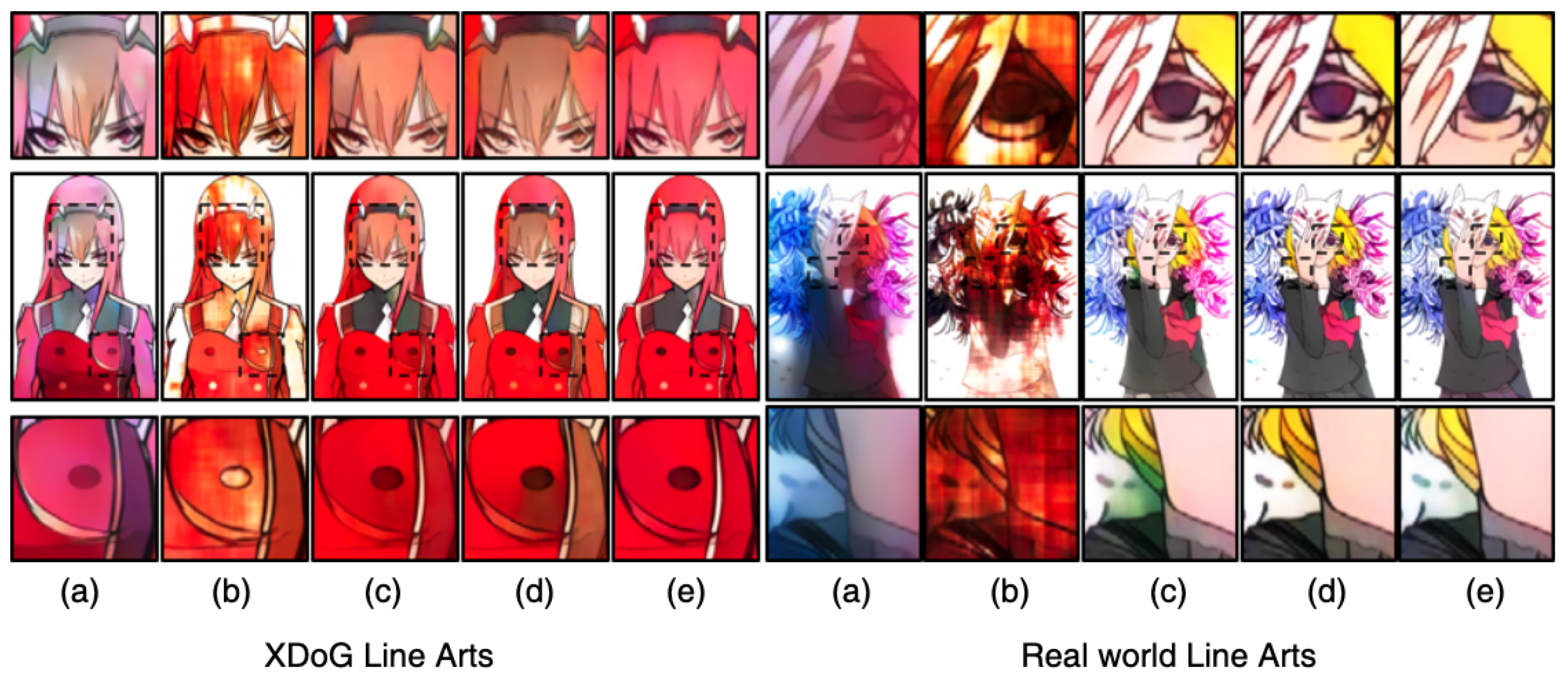

3.1. Line-Art Over-Fitting

3.2. Pre-Processing

| Algorithm 1: Dilate abs sub |
|---|
| Require: color image |
| Require: kernel size K |
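
Only the inputs of Algorithm 1 are listed here, so the following is a hedged sketch of what the name suggests: dilate the color image with a K x K kernel, then take the absolute difference with the original. The function names, the per-pixel channel maximum, and the final inversion to dark lines on a white background are assumptions, not steps confirmed by the text.

```python
import numpy as np


def dilate(image: np.ndarray, k: int) -> np.ndarray:
    """Grayscale dilation: per-channel maximum over a k x k neighbourhood."""
    pad = k // 2
    padded = np.pad(image, ((pad, pad), (pad, pad), (0, 0)), mode="edge")
    h, w = image.shape[:2]
    out = image.copy()
    for dy in range(k):
        for dx in range(k):
            out = np.maximum(out, padded[dy:dy + h, dx:dx + w])
    return out


def dilate_abs_sub(color: np.ndarray, k: int) -> np.ndarray:
    """Return an HxW uint8 line map from an HxWx3 uint8 color image."""
    dilated = dilate(color, k).astype(np.int16)
    # Dilation brightens dark strokes, so the difference is large near lines.
    diff = np.abs(dilated - color.astype(np.int16))
    edge = diff.max(axis=2)  # assumed: keep the strongest channel response
    return (255 - edge).astype(np.uint8)  # assumed: dark lines on white
```

An odd kernel size keeps the neighbourhood centred on each pixel; larger values of K would presumably yield thicker extracted lines.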

3.3. Line Detection Model

3.4. Draft Stage

3.5. Colorization Stage

3.6. Loss Function

4. Experiments and Analysis

4.1. Data Set

4.2. Environment

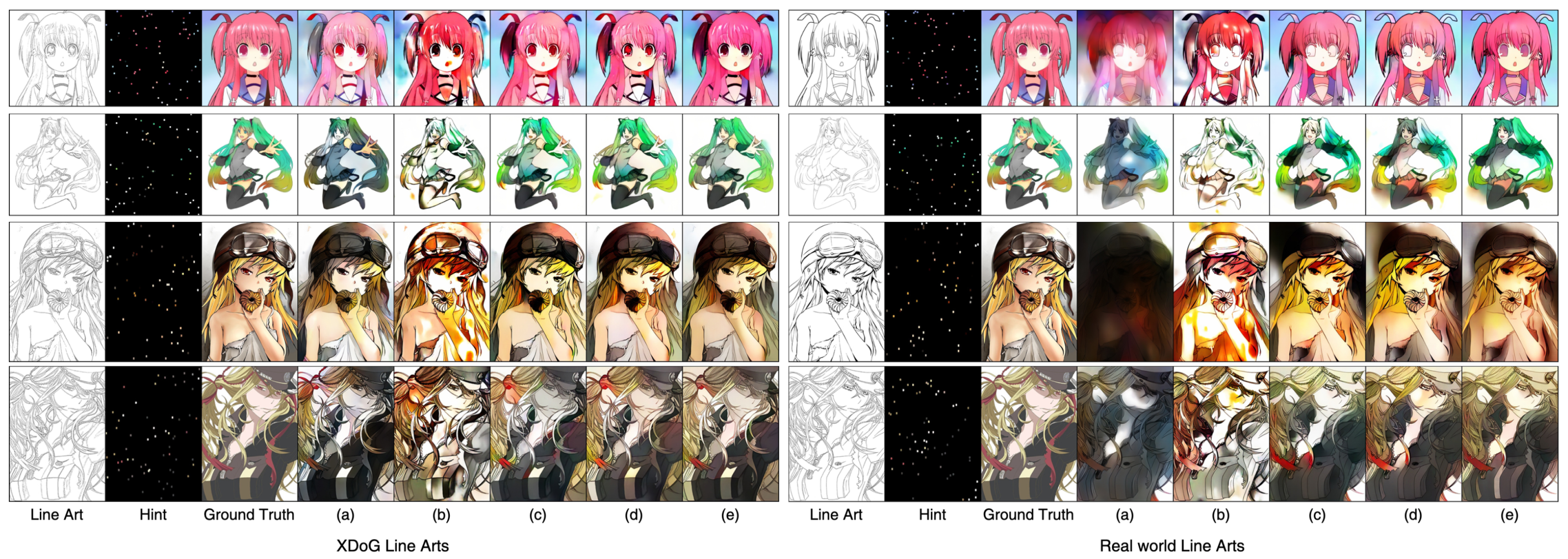

4.3. Visual Analysis

4.4. Mean Opinion Score (MOS) Evaluation

4.5. Quantitative Analysis

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 2672–2680.

- Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134.

- Kang, S.; Choo, J.; Chang, J. Consistent comic colorization with pixel-wise background classification. In Proceedings of the NIPS’17 Workshop on Machine Learning for Creativity and Design, Long Beach, CA, USA, 4–9 December 2017.

- Furusawa, C.; Hiroshiba, K.; Ogaki, K.; Odagiri, Y. Comicolorization: Semi-automatic manga colorization. In Proceedings of the SIGGRAPH Asia 2017 Technical Briefs, Bangkok, Thailand, 27–30 November 2017; pp. 1–4.

- Hensman, P.; Aizawa, K. cGAN-based manga colorization using a single training image. In Proceedings of the 2017 IEEE 14th IAPR International Conference on Document Analysis and Recognition (ICDAR), Kyoto, Japan, 9–15 November 2017; Volume 3, pp. 72–77.

- Zhang, L.; Ji, Y.; Lin, X.; Liu, C. Style transfer for anime sketches with enhanced residual u-net and auxiliary classifier gan. In Proceedings of the 2017 IEEE 4th IAPR Asian Conference on Pattern Recognition (ACPR), Nanjing, China, 26–29 November 2017; pp. 506–511.

- Sangkloy, P.; Lu, J.; Fang, C.; Yu, F.; Hays, J. Scribbler: Controlling deep image synthesis with sketch and color. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5400–5409.

- Liu, Y.; Qin, Z.; Luo, Z.; Wang, H. Auto-painter: Cartoon image generation from sketch by using conditional generative adversarial networks. arXiv 2017, arXiv:1705.01908.

- Frans, K. Outline colorization through tandem adversarial networks. arXiv 2017, arXiv:1704.08834.

- Ci, Y.; Ma, X.; Wang, Z.; Li, H.; Luo, Z. User-guided deep anime line art colorization with conditional adversarial networks. In Proceedings of the 26th ACM International Conference on Multimedia, Seoul, Korea, 22–26 October 2018; pp. 1536–1544.

- Zhang, L.; Li, C.; Wong, T.T.; Ji, Y.; Liu, C. Two-stage sketch colorization. ACM Trans. Graph. (TOG) 2018, 37, 1–14.

- Hati, Y.; Jouet, G.; Rousseaux, F.; Duhart, C. PaintsTorch: A User-Guided Anime Line Art Colorization Tool with Double Generator Conditional Adversarial Network. In Proceedings of the European Conference on Visual Media Production, London, UK, 17–18 December 2019; pp. 1–10.

- Ledig, C.; Theis, L.; Huszár, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z.; et al. Photo-realistic single image super-resolution using a generative adversarial network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4681–4690.

- Bińkowski, M.; Donahue, J.; Dieleman, S.; Clark, A.; Elsen, E.; Casagrande, N.; Cobo, L.C.; Simonyan, K. High fidelity speech synthesis with adversarial networks. arXiv 2019, arXiv:1909.11646.

- Frid-Adar, M.; Klang, E.; Amitai, M.; Goldberger, J.; Greenspan, H. Synthetic data augmentation using GAN for improved liver lesion classification. In Proceedings of the 2018 IEEE 15th international symposium on biomedical imaging (ISBI 2018), Washington, DC, USA, 4–7 April 2018; pp. 289–293.

- Radford, A.; Metz, L.; Chintala, S. Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv 2015, arXiv:1511.06434.

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv 2015, arXiv:1502.03167.

- Dai, B.; Fidler, S.; Urtasun, R.; Lin, D. Towards Diverse and Natural Image Descriptions via a Conditional GAN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

- Winnemöller, H.; Kyprianidis, J.E.; Olsen, S.C. XDoG: An extended difference-of-Gaussians compendium including advanced image stylization. Comput. Graph. 2012, 36, 740–753.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

- Xie, S.; Girshick, R.; Dollar, P.; Tu, Z.; He, K. Aggregated Residual Transformations for Deep Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Shi, W.; Caballero, J.; Huszár, F.; Totz, J.; Aitken, A.P.; Bishop, R.; Rueckert, D.; Wang, Z. Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 1874–1883.

- Odena, A.; Dumoulin, V.; Olah, C. Deconvolution and checkerboard artifacts. Distill 2016, 1, e3.

- Nah, S.; Hyun Kim, T.; Mu Lee, K. Deep multi-scale convolutional neural network for dynamic scene deblurring. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3883–3891.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, 7–9 May 2015; Bengio, Y., Le Cun, Y., Eds.; 2015.

- Deng, J.; Dong, W.; Socher, R.; Li, L.; Li, K.; Li, F.-F. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Branwen, G. Danbooru2019: A Large-Scale Crowdsourced and Tagged Anime Illustration Dataset. Available online: https://www.gwern.net/Danbooru2019 (accessed on 31 July 2019).

- E-Shuushuu—Kawaii Image Board. 2018. Available online: https://e-shuushuu.net/ (accessed on 19 July 2018).

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Advances in Neural Information Processing Systems 32; Wallach, H., Larochelle, H., Beygelzimer, A., d’Alché-Buc, F., Fox, E., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2019; pp. 8024–8035.

- Heusel, M.; Ramsauer, H.; Unterthiner, T.; Nessler, B.; Hochreiter, S. GANs trained by a two time-scale update rule converge to a local Nash equilibrium. Adv. Neural Inf. Process. Syst. 2017, arXiv:1706.08500.

Without color hint (FID):

| Model | XDoG line-arts FID | XDoG line-arts STD | Real-world line-arts FID | Real-world line-arts STD |
|---|---|---|---|---|
| Base [10] | 44.70 | 2.01 | 109.39 | 0.40 |
| Pix2pix [2] | 41.14 | 1.51 | 117.24 | 0.38 |
| Ours (LDM-enabled line loss only) | 43.87 | 1.91 | 99.40 | 0.32 |
| Ours (histogram equalization only) | 39.54 | 1.84 | 92.63 | 0.24 |
| Ours | 39.77 | 1.75 | 98.75 | 0.17 |

With color hint (C-FID):

| Model | XDoG line-arts C-FID | XDoG line-arts STD | Real-world line-arts C-FID | Real-world line-arts STD |
|---|---|---|---|---|
| Base [10] | 35.83 | 1.81 | 87.95 | 0.36 |
| Pix2pix [2] | 34.21 | 1.12 | 94.14 | 0.31 |
| Ours (LDM-enabled line loss only) | 35.51 | 1.74 | 61.83 | 0.26 |
| Ours (histogram equalization only) | 33.29 | 1.51 | 60.45 | 0.24 |
| Ours | 32.16 | 1.57 | 57.51 | 0.23 |
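
For readers unfamiliar with the metric in these tables, the Fréchet distance underlying FID (Heusel et al., listed in the references) can be sketched as below. The sketch assumes feature vectors have already been extracted, which FID conventionally does with an Inception network; the function name and the eigenvalue-based matrix square root are illustrative choices, not the evaluation code behind the reported numbers.

```python
import numpy as np


def frechet_distance(feats_a: np.ndarray, feats_b: np.ndarray) -> float:
    """Fréchet distance between Gaussians fitted to two (N, D) feature sets."""
    mu_a, mu_b = feats_a.mean(axis=0), feats_b.mean(axis=0)
    cov_a = np.atleast_2d(np.cov(feats_a, rowvar=False))
    cov_b = np.atleast_2d(np.cov(feats_b, rowvar=False))
    # tr((cov_a cov_b)^(1/2)) equals the sum of the square roots of the
    # eigenvalues of cov_a @ cov_b, which are real and non-negative for
    # positive semi-definite inputs (clipping guards against round-off).
    eigvals = np.linalg.eigvals(cov_a @ cov_b)
    tr_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    diff = mu_a - mu_b
    return float(diff @ diff + np.trace(cov_a) + np.trace(cov_b) - 2.0 * tr_sqrt)
```

Lower values indicate that the two feature distributions are closer, which is why smaller FID and C-FID entries in the tables correspond to better colorization results.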

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lee, Y.; Lee, S. Automatic Colorization of Anime Style Illustrations Using a Two-Stage Generator. Appl. Sci. 2020, 10, 8699. https://doi.org/10.3390/app10238699