SAR and LIDAR Datasets for Building Damage Evaluation Based on Support Vector Machine and Random Forest Algorithms—A Case Study of Kumamoto Earthquake, Japan

Abstract

1. Introduction

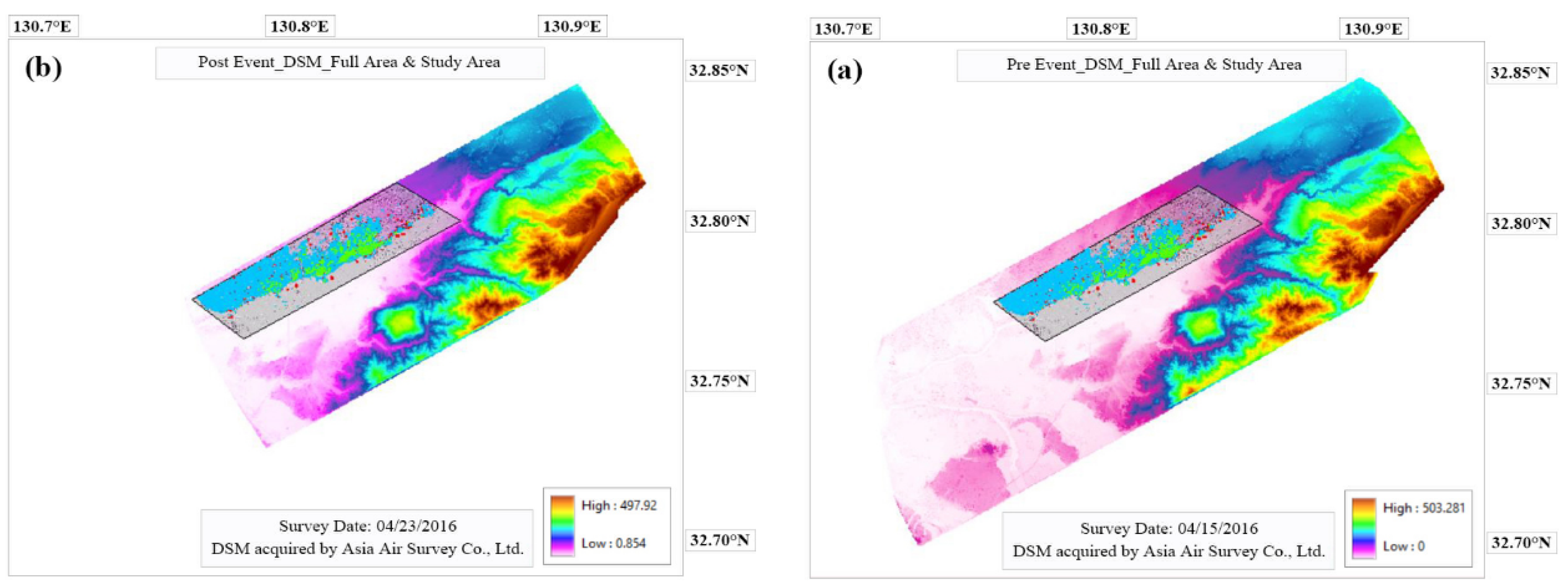

2. Earthquake and Study Area

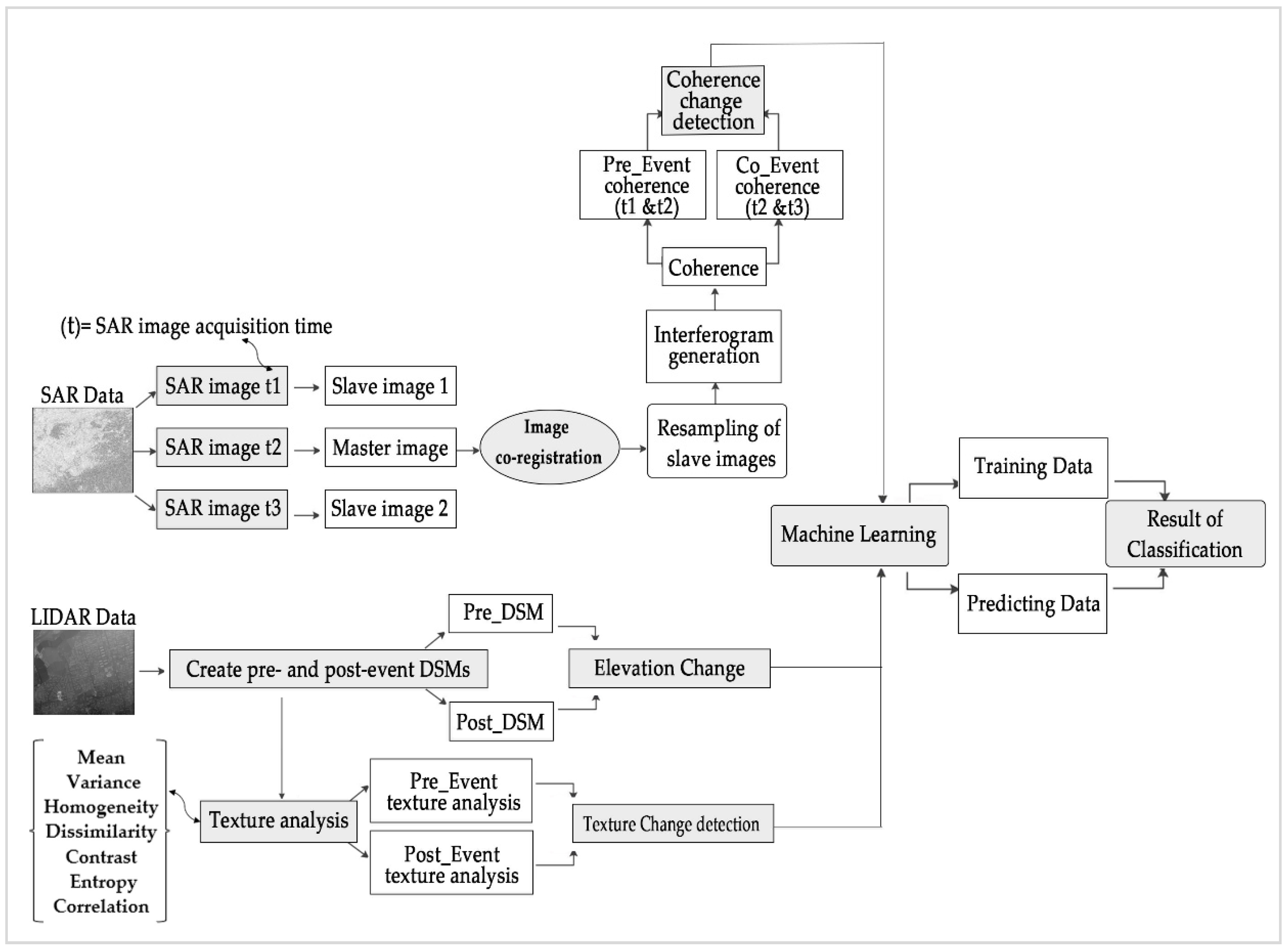

3. Methodology

3.1. Coherence

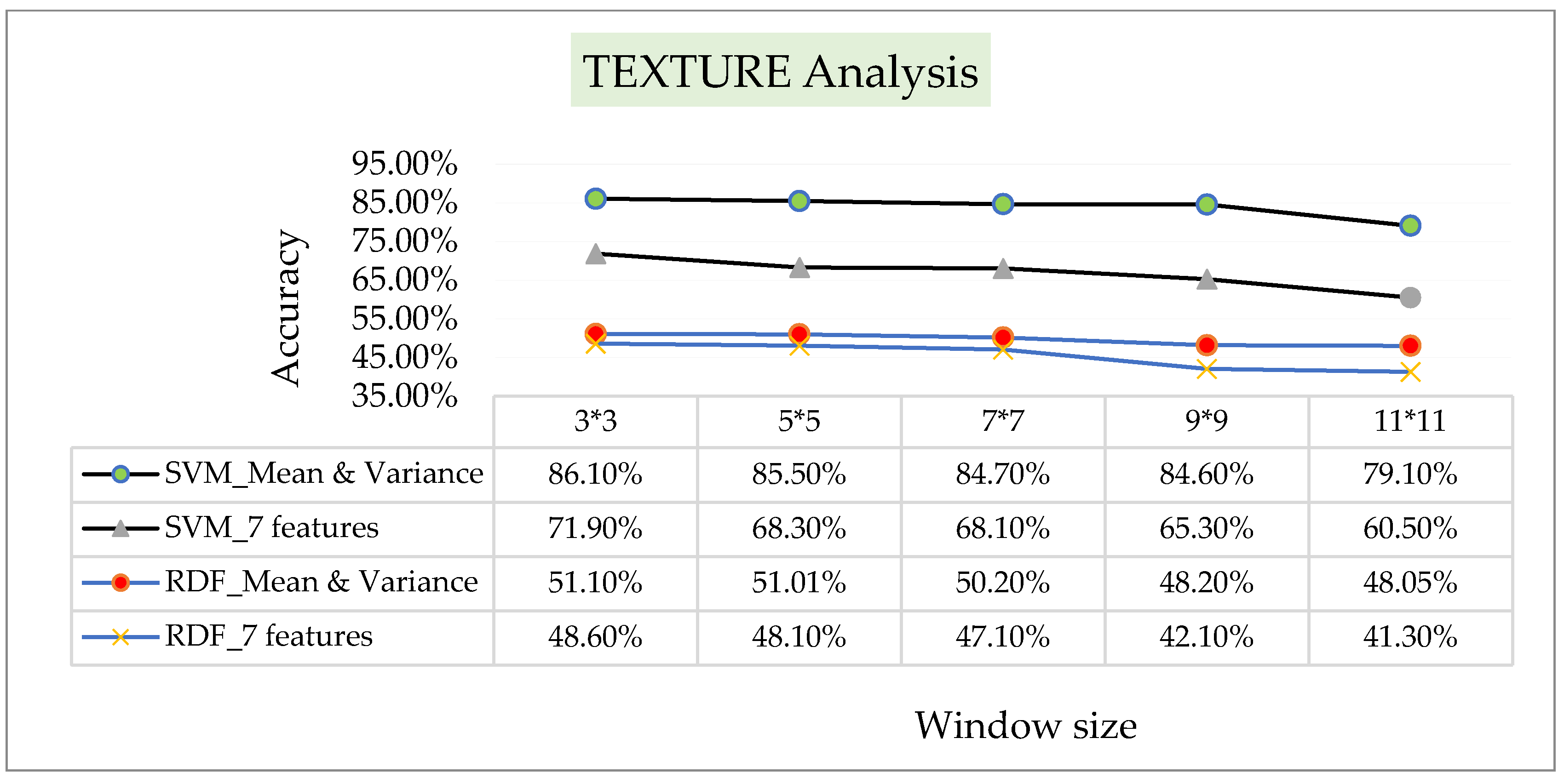

3.2. Texture Analysis

3.3. Machine Learning

3.3.1. Random Forest (RDF)

3.3.2. Support Vector Machine (SVM)

3.4. Damage Classification

3.5. Training and Prediction Dataset

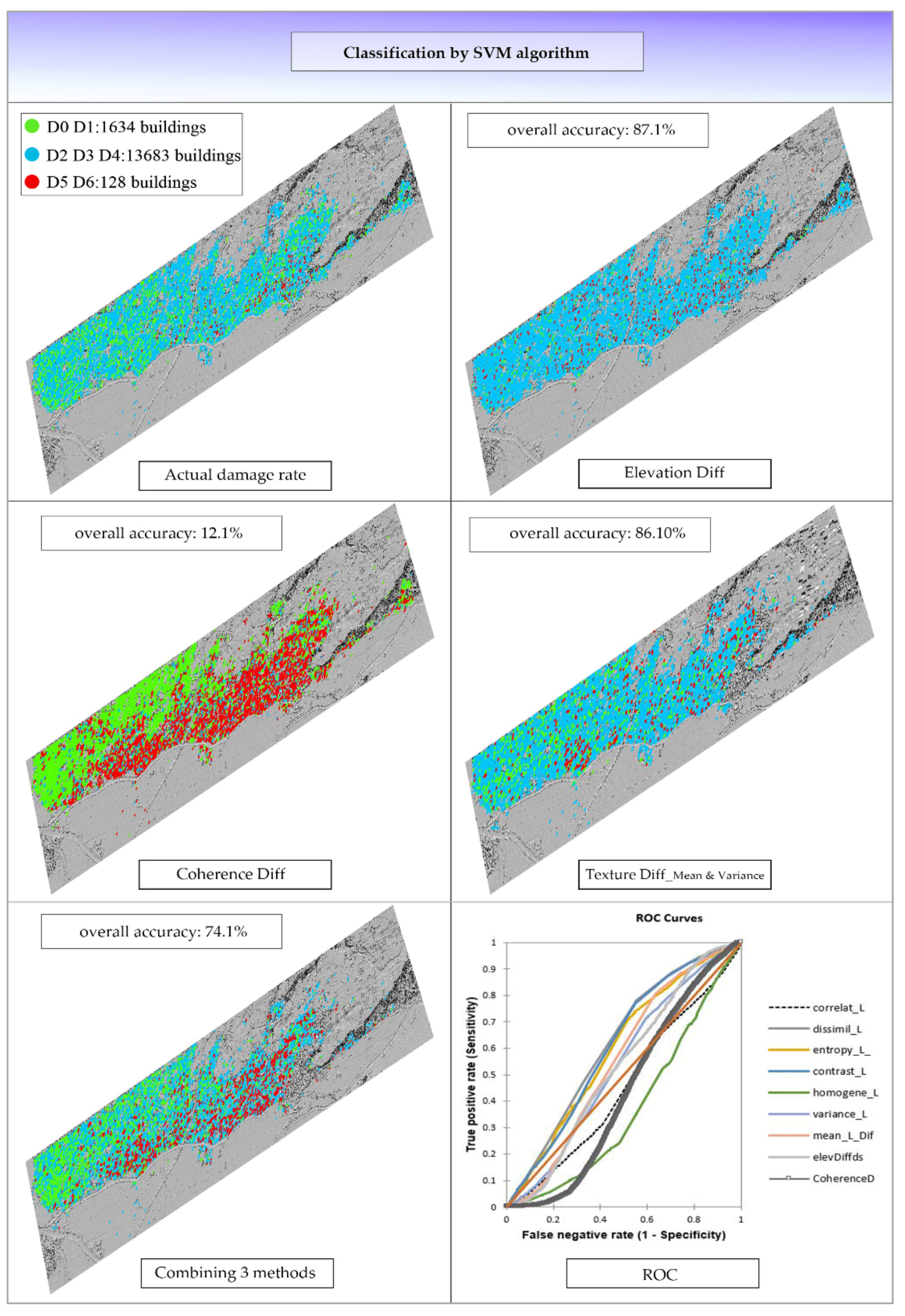

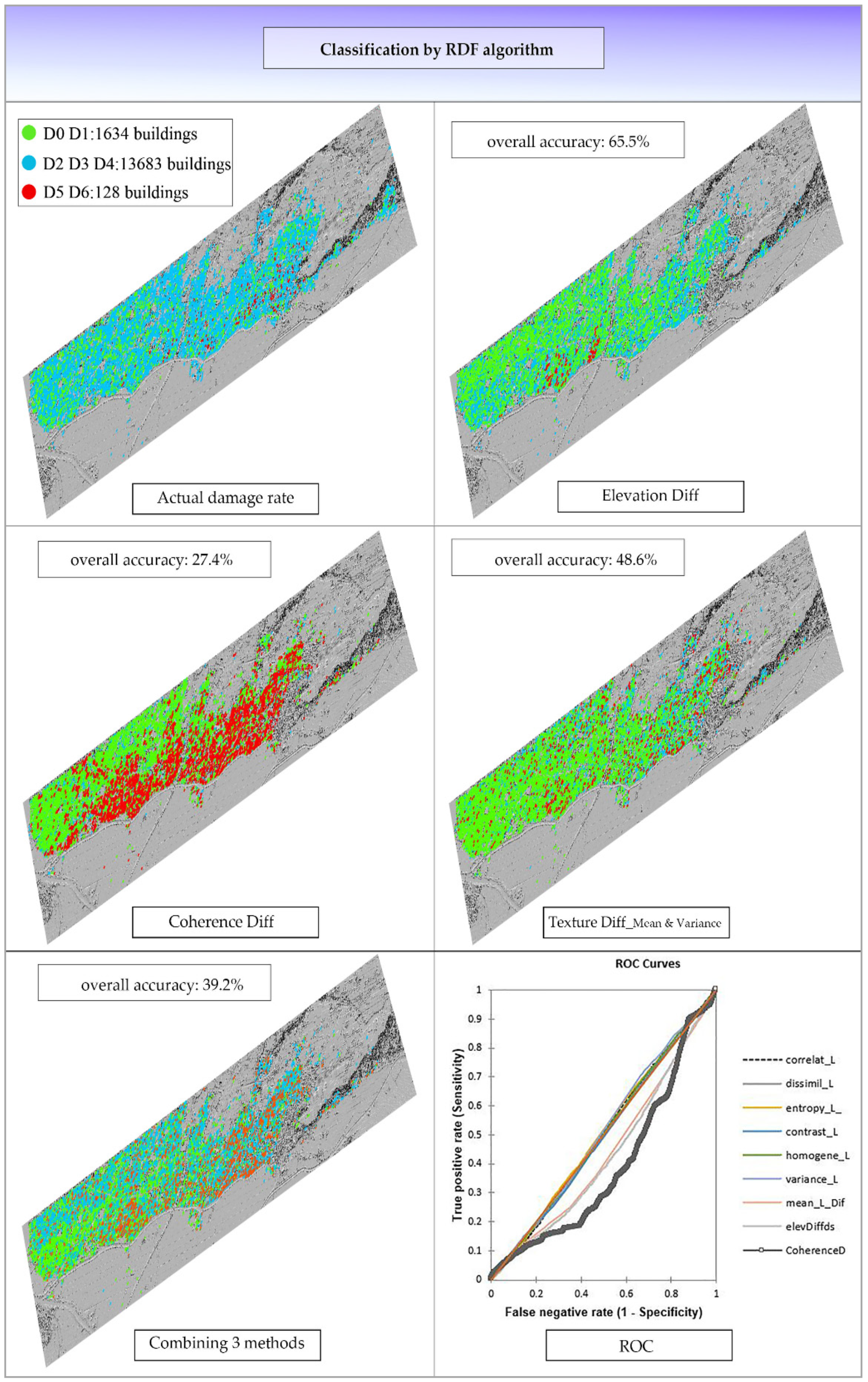

4. Results

5. Discussion

6. Conclusions

- In the future, the LED method could be a good alternative to field research, which is time-consuming and costly.

- Of the seven texture properties described in the previous sections, mean and variance contributed most to the results. Given these results, the method can be considered a complement to other methods. In a separate experiment with two damage rates, the overall accuracy of this method increased by about 10%.
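To make the two most influential texture properties concrete, the following is a minimal sketch of how mean and variance can be computed from a gray-level co-occurrence matrix (GLCM) in the style of Haralick et al. It assumes that the texture properties in this study are GLCM statistics. The function names, the single horizontal pixel offset, and the small test image are illustrative assumptions and not the processing chain used by the authors.

```python
def glcm(image, levels, dx=1, dy=0):
    """Symmetric, normalized gray-level co-occurrence matrix for one offset.

    image  : 2-D list of integer gray levels in range(levels)
    dx, dy : column and row offset of the neighbour pixel (assumed values)
    """
    rows, cols = len(image), len(image[0])
    counts = [[0.0] * levels for _ in range(levels)]
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                a, b = image[r][c], image[r2][c2]
                counts[a][b] += 1.0
                counts[b][a] += 1.0  # count the pair in both directions
    total = sum(map(sum, counts))
    return [[value / total for value in row] for row in counts]


def glcm_mean_variance(p):
    """GLCM mean and variance computed from the row marginal of p."""
    marginal = [sum(row) for row in p]
    mean = sum(i * w for i, w in enumerate(marginal))
    variance = sum((i - mean) ** 2 * w for i, w in enumerate(marginal))
    return mean, variance


# Hypothetical 4 x 4 patch quantized to four gray levels.
patch = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 2, 2, 2],
    [2, 2, 3, 3],
]
mean, variance = glcm_mean_variance(glcm(patch, levels=4))
print(f"GLCM mean = {mean:.4f}, GLCM variance = {variance:.4f}")
```

In practice such statistics would be computed per building footprint or in a moving window over the SAR or LIDAR raster, and the resulting values would serve as input features for the classifiers.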

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Karimzadeh, S.; Matsuoka, M.; Miyajima, M.; Adriano, B.; Fallahi, A.; Karashi, J. Sequential SAR coherence method for the monitoring of buildings in Sarpole-Zahab, Iran. Remote Sens. 2018, 10, 1255.

- Matsuoka, M.; Yamazaki, F. Use of satellite SAR intensity imagery for detecting building areas damaged due to earthquakes. Earthq. Spectra 2004, 20, 975–994.

- Matsuoka, M.; Yamazaki, F. Building damage mapping of the 2003 Bam, Iran, earthquake using Envisat/ASAR intensity imagery. Earthq. Spectra 2005, 21, 285–294.

- Karimzadeh, S.; Matsuoka, M. Building damage characterization for the 2016 Amatrice earthquake using ascending–descending COSMO-SkyMed data and topographic position index. IEEE. J. Sel. Top Appl. Earth. Obs. Remote Sens. 2016, 11, 2668–2682.

- Moya, L.; Yamazaki, F.; Liu, W.; Yamada, M. Detection of collapsed buildings from lidar data due to the 2016 Kumamoto earthquake in Japan. Nat. Hazards Earth Syst. Sci. 2018, 18, 65–78.

- Bai, Y.; Adriano, B.; Mas, E.; Koshimura, S. Machine learning based building damage mapping from the ALOS-2/PALSAR-2 SAR imagery: Case study of 2016 Kumamoto earthquake. J. Disaster Res. 2017, 12, 646–655.

- Jaboyedoff, M.; Oppikofer, T.; Abellán, A.; Derron, M.-H.; Loye, A.; Metzger, R.; Pedrazzini, A. Use of LIDAR in landslide investigations: A review. Nat. Hazards 2012, 61, 5–28.

- Liu, W.; Yamazaki, F.; Maruyama, Y. Detection of earthquake-induced landslides during the 2018 Kumamoto earthquake using multitemporal airborne Lidar data. Remote Sens. 2019, 11, 2292.

- Plank, S. Rapid damage assessment by means of multi-temporal SAR—A comprehensive review and outlook to Sentinel-1. Remote Sens. 2014, 6, 4870–4906.

- Sharma, M.; Garg, R.; Badenko, V.; Fedotov, A.; Min, L.; Yao, A. Potential of airborne LiDAR data for terrain parameters extraction. Quat. Int. 2020, in press.

- Karimzadeh, S.; Matsuoka, M. A weighted overlay method for liquefaction-related urban damage detection: A case study of the 6 September 2018 Hokkaido Eastern Iburi earthquake, Japan. Geosciences 2018, 8, 487.

- Muller, J.R.; Harding, D.J. Using LIDAR surface deformation mapping to constrain earthquake magnitudes on the Seattle fault in Washington State, USA. 2007 Urban Remote Sens. Jt. Event 2007, 7, 1–7.

- Yamazaki, F.; Liu, W. Remote sensing technologies for post-earthquake damage assessment: A case study on the 2016 Kumamoto earthquake. In Proceedings of the 6th ASIA Conference on Earthquake Engineering, Cebu City, Philippines, 22–24 September 2016; p. KN4.

- Hajeb, M.; Karimzadeh, S.; Fallahi, A. Seismic damage assessment in Sarpole-Zahab town (Iran) using synthetic aperture radar (SAR) images and texture analysis. Nat. Hazards 2020, 103, 1–20.

- Miura, H.; Aridome, T.; Matsuoka, M. Deep learning-based identification of collapsed, non-collapsed and blue tarp-covered buildings from post-disaster aerial images. Remote Sens. 2020, 12, 1924.

- Karimzadeh, S.; Matsuoka, M.; Kuang, J.; Ge, L. Spatial prediction of aftershocks triggered by a major earthquake: A binary machine learning perspective. ISPRS Int. J. Geo-Inf. 2019, 8, 462.

- Bai, Y.; Adriano, B.; Mas, E.; Koshimura, S. Building damage assessment in the 2015 Gorkha, Nepal, earthquake using only post-event dual polarization synthetic aperture radar imagery. Earthq. Spectra 2017, 33, 185–195.

- Yamazaki, F.; Sagawa, Y.; Liu, W. Extraction of landslides in the 2016 Kumamoto earthquake using multi-temporal Lidar data. In Earth Resources and Environmental Remote Sensing/GIS Applications IX, Proceedings of the SPIE Remote Sensing, Berlin, Germany, 11–13 September 2018; SPIE: Bellingham, WA, USA, 2018; Volume 10790, p. 107900H.

- Google Earth. Available online: https://earth.google.com/web/search/Kumamoto,+Japan (accessed on 19 July 2020).

- Pre-Kumamoto Earthquake (16 April 2016) Rupture Lidar Scan. OpenTopography, High-Resolution Topography Data and Tools. Available online: https://portal.opentopography.org/datasetMetadata?otCollectionID=OT.052018.2444.2 (accessed on 12 July 2020).

- Post-Kumamoto Earthquake (16 April 2016) Rupture Lidar Scan. OpenTopography, High-Resolution Topography Data and Tools. Available online: https://portal.opentopography.org/datasetMetadata?otCollectionID=OT.052018.2444.1 (accessed on 12 July 2020).

- Tamkuan, N.; Nagai, M. Sentinel-1A analysis for damage assessment: A case study of Kumamoto earthquake in 2016. MATTER Int. J. Sci. Technol. 2019, 5, 23–35.

- Whelley, P.; Glaze, L.S.; Calder, E.S.; Harding, D.J. LiDAR-derived surface roughness texture mapping: Application to Mount St. Helens Pumice Plain deposit analysis. IEEE. Trans. Geosci. Remote Sens. 2013, 52, 426–438.

- Gong, L.; Wang, C.; Wu, F.; Zhang, J.; Zhang, H.; Li, Q. Earthquake-induced building damage detection with post-event sub-meter VHR TerraSAR-X staring spotlight imagery. Remote Sens. 2016, 8, 887.

- Wood, E.M.; Pidgeon, A.M.; Radeloff, V.C.; Keuler, N.S. Image texture as a remotely sensed measure of vegetation structure. Remote Sens. Environ. 2012, 121, 516–526.

- Haralick, R.M.; Shanmugam, K.; Dinstein, I. Textural features for image classification. IEEE Trans. Syst. Man Cybern. 1973, 3, 610–621.

- Scalco, E.; Fiorino, C.; Cattaneo, G.M.; Sanguineti, G.; Rizzo, G. Texture analysis for the assessment of structural changes in parotid glands induced by radiotherapy. Radiother. Oncol. 2013, 109, 384–387.

- Santos, A.; Figueiredo, E.; Silva, M.; Sales, C.; Costa, J. Machine learning algorithms for damage detection: Kernel-based approaches. J. Sound Vib. 2016, 363, 584–599.

- Rodriguez-Galiano, V.; Chica-Olmo, M.; Abarca-Hernández, F.; Atkinson, P.M.; Jeganathan, C. Random forest classification of Mediterranean land cover using multi-seasonal imagery and multi-seasonal texture. Remote Sens. Environ. 2012, 121, 93–107.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Mountrakis, G.; Im, J.; Ogole, C. Support vector machines in remote sensing: A review. ISPRS J. Photogramm. Remote Sens. 2011, 66, 247–259.

- Goto, T.; Miura, H.; Matsuoka, M.; Mochizuki, K.; Koiwa, H. Building damage detection from optical images based on histogram equalization and texture analysis following the 2016 Kumamoto, Japan earthquake. In Proceedings of the International Symposium of Remote Sensing, Nagoya, Japan, 17–19 May 2017.

- Okada, S.; Takai, N. Classifications of structural types and damage patterns of buildings for earthquake field investigation. J. Struct. Constr. Eng. (Trans. AIJ) 1999, 64, 65–72.

- Humboldt State University. Accuracy Metrics. Available online: http://gsp.humboldt.edu/OLM/Courses/GSP216Online/lesson6-2/metrics.html/ (accessed on 4 January 2019).

- Singh, M.; Evans, D.; Chevance, J.-B.; Tan, B.S.; Wiggins, N.; Kong, L.; Sakhoeun, S. Evaluating remote sensing datasets and machine learning algorithms for mapping plantations and successional forests in Phnom Kulen National Park of Cambodia. PeerJ 2019, 7, e7841.

- XLstat, Data Analysis Solution. Available online: https://www.xlstat.com/en/solutions/features/roc-curves (accessed on 4 January 2020).

- Rastiveis, H.; Eslamizade, F.; Hosseini-Zirdoo, E. Building damage assessment after earthquake using post-event LiDAR data. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 595–600.

- Axel, C.; van Aardt, J. Building damage assessment using airborne lidar. J. Appl. Remote Sens. 2017, 11, 1.

- Torres, Y.; Arranz-Justel, J.-J.; Gaspar-Escribano, J.M.; Martínez-Cuevas, S.; Benito, M.B.; Ojeda, J.C. Integration of LiDAR and multispectral images for rapid exposure and earthquake vulnerability estimation. Application in Lorca, Spain. Int. J. Appl. Earth Obs. Geoinf. 2019, 81, 161–175.

- Rezaeian, M. Assessment of Earthquake Damages by Image-Based Techniques. Ph.D. Thesis, Eidgenössischen Technischen Hochschule, Zurich, Switzerland, 2010.

SAR

| Type | Date | Polarization | Spatial Resolution | Path Direction | Mode |
|---|---|---|---|---|---|
| Sentinel-1 | 3 March 2016 | VV | 20 m | D | Interferometric Wide |
| Sentinel-1 | 20 April 2016 | VV | 20 m | D | Interferometric Wide |
| Sentinel-1 | 27 June 2016 | VV | 20 m | D | Interferometric Wide |

LIDAR

| Collection Platform | Date | Data Provider | Area | Point Density |
|---|---|---|---|---|
| Airborne LIDAR | 15 April 2016 | Asia Air Survey Co., Ltd. | 151.56 km² | 2.94 pts/m² |
| Airborne LIDAR | 23 April 2016 | Asia Air Survey Co., Ltd. | 95.85 km² | 4.47 pts/m² |

4DR

| Group Name | Damage Level | Number of Total Buildings | Number of Training Buildings | Number of Prediction Buildings |
|---|---|---|---|---|
| D0, D1 | Negligible to Slight | 2634 | 700 | 1934 |
| D2, D3 | Moderate | 13,007 | 700 | 12,307 |
| D4, D5 | Very heavy | 1676 | 700 | 973 |
| D6 | Collapsed | 1128 | 700 | 428 |

3DR

| Group Name | Damage Level | Number of Total Buildings | Number of Training Buildings | Number of Prediction Buildings |
|---|---|---|---|---|
| D0, D1 | Negligible to Slight | 2634 | 1000 | 1634 |
| D2, D3, D4 | Moderate to Heavy | 14,683 | 1000 | 13,683 |
| D5, D6 | Very heavy to Collapsed | 1128 | 1000 | 128 |
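The split in the tables above draws the same number of training buildings from every damage group and leaves the remainder for prediction. A minimal sketch of such a fixed-size per-class split is given below. The function name, the group labels, the seed, and the use of uniform random sampling are assumptions made for illustration, because the source does not state how the training buildings were selected.

```python
import random


def split_fixed_per_class(labels, n_train, seed=0):
    """Pick n_train samples per class for training; the rest go to prediction.

    Returns two lists of indices into `labels`. Raises ValueError if a class
    has fewer than n_train members (behaviour of random.sample).
    """
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    by_class = {}
    for index, label in enumerate(labels):
        by_class.setdefault(label, []).append(index)

    train, predict = [], []
    for indices in by_class.values():
        chosen = set(rng.sample(indices, n_train))
        train.extend(i for i in indices if i in chosen)
        predict.extend(i for i in indices if i not in chosen)
    return train, predict


# Building counts per group taken from the 3DR table; labels are placeholders.
labels = ["D0-D1"] * 2634 + ["D2-D4"] * 14683 + ["D5-D6"] * 1128
train_idx, predict_idx = split_fixed_per_class(labels, n_train=1000)
print(len(train_idx), len(predict_idx))
```

With 1000 training buildings per group, the remaining counts per group are 1634, 13,683, and 128, which agrees with the prediction column of the 3DR table.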

Combination of 3 Methods

| Type of Accuracy | SVM (%) | RDF (%) |
|---|---|---|
| Producer Accuracy (D0, D1) | 26.13 | 14.44 |
| Producer Accuracy (D2, D3, D4) | 55.09 | 94.85 |
| Producer Accuracy (D5, D6) | 91.19 | 5.1 |
| User Accuracy (D0, D1) | 35.01 | 79.06 |
| User Accuracy (D2, D3, D4) | 78.79 | 34.52 |
| User Accuracy (D5, D6) | 61.17 | 66.40 |
| Overall Accuracy (3 categories) | 74.1 | 39.2 |
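For readers unfamiliar with the three accuracy types in the table above, the sketch below computes them from a confusion matrix using the usual remote sensing definitions: producer accuracy is the share of reference samples of a class that were classified correctly, user accuracy is the share of samples assigned to a class that truly belong to it, and overall accuracy is the share of all samples classified correctly. The function name, the row and column convention, and the matrix values are hypothetical; they are not the confusion matrices behind the reported figures.

```python
def accuracy_metrics(confusion):
    """Producer, user, and overall accuracy from a square confusion matrix.

    Assumed convention: confusion[i][j] is the number of samples whose
    reference class is i and whose predicted class is j.
    A class with no reference or no predicted samples raises ZeroDivisionError.
    """
    n = len(confusion)
    reference_totals = [sum(confusion[i]) for i in range(n)]
    predicted_totals = [sum(confusion[i][j] for i in range(n)) for j in range(n)]
    correct = [confusion[i][i] for i in range(n)]

    producer = [correct[i] / reference_totals[i] for i in range(n)]
    user = [correct[j] / predicted_totals[j] for j in range(n)]
    overall = sum(correct) / sum(reference_totals)
    return producer, user, overall


# Hypothetical three-class matrix, for illustration only.
example = [
    [8, 2, 0],
    [1, 6, 3],
    [0, 1, 9],
]
producer, user, overall = accuracy_metrics(example)
for name, values in (("Producer", producer), ("User", user)):
    print(name, [round(100 * v, 2) for v in values])
print("Overall", round(100 * overall, 2))
```

Because the prediction set is dominated by one damage group, overall accuracy is driven mostly by that group, which is why the per-class producer and user accuracies are reported alongside it.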

| No | Research | Type of Data | Algorithm | Accuracy | Event |
|---|---|---|---|---|---|
| 1 | Moya et al. [5] | LIDAR | SVM | 92% | Kumamoto earthquake |
| 2 | Rastiveis et al. [37] | LIDAR | SVM | 91.59% | Haiti earthquake |
| 3 | Axel and Aardt [38] | LIDAR | SVM | 78.90% | Haiti earthquake |
| 4 | Torres et al. [39] | LIDAR | SVM | 78.8% | Lorca earthquake |
| 5 | Mehdi Rezaeian [40] | LIDAR | SVM | 80% | Kobe earthquake |
| 6 | Singh et al. [35] | Landsat, ALOS and LIDAR | SVM | 98% | Cambodia forests |
| | | | RDF | 66% | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Hajeb, M.; Karimzadeh, S.; Matsuoka, M. SAR and LIDAR Datasets for Building Damage Evaluation Based on Support Vector Machine and Random Forest Algorithms—A Case Study of Kumamoto Earthquake, Japan. Appl. Sci. 2020, 10, 8932. https://doi.org/10.3390/app10248932