Acoustic-Based Position Estimation of an Object and a Person Using Active Localization and Sound Field Analysis

Abstract

1. Introduction

2. Implementation of Active Localization: Signal Model, Processing, and Feasibility Test

2.1. Signal Model and Definition of Sound Field Variation

2.2. Proposed Algorithm Based on Steered Response Power with Moving Average

2.3. Configuration for the Simulations and Experiments

2.4. Preliminary Experiments in Ideal Conditions

3. Sound Field Simulation and Its Analysis Using Acoustic Parameters

3.1. Simulation Test for the Reverberant Environment

- STEP 1: The localization error was analyzed while varying the absorption coefficient at the boundary of the target control space (2 m × 3 m).

- STEP 2: To examine how the boundary absorption coefficient relates to the spatial effects, we analyzed two acoustic parameters: the reverberation time (RT20) and the early decay time (EDT).

- STEP 3: The operating conditions of the active localization were expressed in terms of RT20 and EDT.
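The EDT and RT20 values used in STEP 2 and STEP 3 are commonly derived from a room impulse response by Schroeder backward integration. The sketch below assumes that convention (EDT fitted over 0 to −10 dB, RT20 fitted over −5 to −25 dB, both extrapolated to a 60 dB decay). The source does not state which evaluation ranges or tools were used, and the function names `schroeder_decay_db`, `decay_time`, and `edt_and_rt20` are hypothetical.

```python
import numpy as np


def schroeder_decay_db(ir):
    """Energy decay curve in dB via Schroeder backward integration."""
    # Remaining energy from each sample to the end of the response.
    energy = np.cumsum(ir[::-1] ** 2)[::-1]
    return 10.0 * np.log10(energy / energy[0])


def decay_time(edc_db, fs, start_db, stop_db):
    """Fit a line to the decay between start_db and stop_db,
    then extrapolate the fitted slope to a 60 dB decay (seconds)."""
    idx = np.where((edc_db <= start_db) & (edc_db >= stop_db))[0]
    t = idx / fs
    slope, _ = np.polyfit(t, edc_db[idx], 1)  # dB per second (negative)
    return -60.0 / slope


def edt_and_rt20(ir, fs):
    """Return (EDT, RT20) in seconds for an impulse response sampled at fs.

    Assumed evaluation ranges: EDT over 0..-10 dB, RT20 over -5..-25 dB.
    """
    edc = schroeder_decay_db(np.asarray(ir, dtype=float))
    edt = decay_time(edc, fs, 0.0, -10.0)
    rt20 = decay_time(edc, fs, -5.0, -25.0)
    return edt, rt20


# Synthetic check: an ideal exponential decay with a 50 ms decay time
# (illustrative values only, not data from the source).
fs_demo = 48000
t_demo = np.arange(int(0.2 * fs_demo)) / fs_demo
ir_demo = np.exp(-6.9078 * t_demo / 0.05)  # amplitude envelope for T60 = 50 ms
edt_demo, rt20_demo = edt_and_rt20(ir_demo, fs_demo)
```

For a purely exponential decay the two estimates coincide. In a real room they differ, and that difference is what makes EDT and RT20 useful as separate indicators of early and late decay behavior.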

3.1.1. Simulation Setup

3.1.2. Simulation Results and Analysis

3.2. Relationship Analysis of Acoustic Parameters and Absorption Coefficients to Propose Operating Conditions

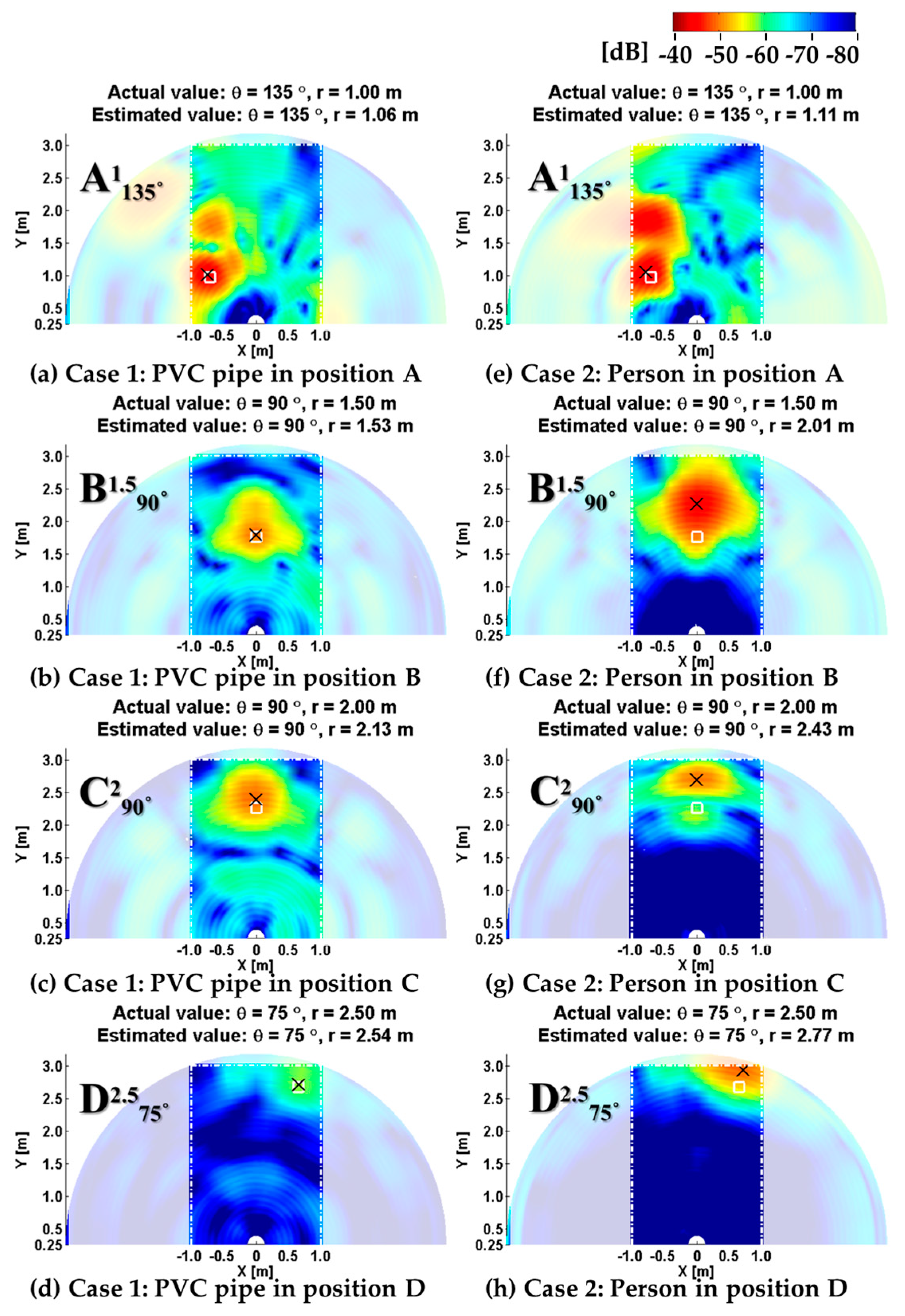

4. Experimental Results of Active Localization in a Reverberant Environment

4.1. Experimental Configuration and Operating Conditions Test

4.2. Localization Performance in a Reverberant Environment

5. Conclusions and Discussion

Author Contributions

Funding

Conflicts of Interest

Appendix A

Appendix B


| Boundary condition | A: θ (°) | A: r (m) | B: θ (°) | B: r (m) | C: θ (°) | C: r (m) | D: θ (°) | D: r (m) |
|---|---|---|---|---|---|---|---|---|
| True position | 135 | 1 | 90 | 1.5 | 90 | 2 | 75 | 2.5 |
| PML | 135 (Δθ = 0°) | 1.06 (re = 6%) | 90 (Δθ = 0°) | 1.56 (re = 4%) | 90 (Δθ = 0°) | 2.06 (re = 3%) | 75 (Δθ = 0°) | 2.55 (re = 2%) |
| α = 0.9 | 135 (Δθ = 0°) | 1.07 (re = 7%) | 90 (Δθ = 0°) | 1.48 (re = 1.3%) | 90 (Δθ = 0°) | 1.98 (re = 1%) | 75 (Δθ = 0°) | 2.47 (re = 1.2%) |
| α = 0.8 | 135 (Δθ = 0°) | 1.16 (re = 16%) | 90 (Δθ = 0°) | 1.48 (re = 1.3%) | 90 (Δθ = 0°) | 1.98 (re = 1%) | 75 (Δθ = 0°) | 2.47 (re = 1.2%) |
| α = 0.7 | 135 (Δθ = 0°) | 1.16 (re = 16%) | 90 (Δθ = 0°) | 1.48 (re = 1.3%) | 90 (Δθ = 0°) | 2.38 (re = 19%) | 75 (Δθ = 0°) | 2.38 (re = 4.8%) |
| α = 0.6 | 135 (Δθ = 0°) | 1.24 (re = 24%) | 90 (Δθ = 0°) | 1.48 (re = 1.3%) | 90 (Δθ = 0°) | 2.38 (re = 19%) | 75 (Δθ = 0°) | 2.38 (re = 4.8%) |
| α = 0.5 | 135 (Δθ = 0°) | 1.24 (re = 24%) | 90 (Δθ = 0°) | 2.12 (re = 41.3%) | 90 (Δθ = 0°) | 2.38 (re = 19%) | 75 (Δθ = 0°) | 2.84 (re = 13.6%) |
| α = 0.4 | 135 (Δθ = 0°) | 1.24 (re = 24%) | 90 (Δθ = 0°) | 2.04 (re = 36%) | 90 (Δθ = 0°) | 2.39 (re = 19.5%) | 70 (Δθ = 5°) | 2.91 (re = 16.4%) |
| α = 0.3 | 135 (Δθ = 0°) | 1.24 (re = 24%) | 90 (Δθ = 0°) | 2.04 (re = 36%) | 90 (Δθ = 0°) | 2.30 (re = 15%) | 70 (Δθ = 5°) | 2.91 (re = 16.4%) |
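The error figures in the table above are consistent with a relative range error, re = |r_est − r_true| / r_true, and an absolute angular error, Δθ = |θ_est − θ_true|. This reading is inferred from the tabulated numbers rather than quoted from a definition in the source, and the helper names below are hypothetical. A minimal sketch that reproduces two of the entries:

```python
def range_relative_error(r_true, r_est):
    """Relative range error in percent (assumed definition of 're')."""
    return abs(r_est - r_true) / r_true * 100.0


def angle_error(theta_true, theta_est):
    """Absolute angular error in degrees (assumed definition of 'Δθ')."""
    return abs(theta_est - theta_true)


# Position A under the PML boundary: true range 1 m, estimated 1.06 m.
re_a_pml = range_relative_error(1.0, 1.06)  # approximately 6 (%)

# Position D at α = 0.4: true (75°, 2.5 m), estimated (70°, 2.91 m).
dtheta_d = angle_error(75, 70)               # 5 (degrees)
re_d = range_relative_error(2.5, 2.91)       # approximately 16.4 (%)
```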

| α | EDT max (ms), in a linear array | EDT max (ms), in control space | RT20 median (ms), in a linear array | RT20 median (ms), in control space |
|---|---|---|---|---|
| 0.9 | 0.53 | 3.12 | 15.57 | 17.36 |
| 0.8 | 3.10 | 5.42 | 16.73 | 17.66 |
| 0.7 | 6.04 | 10.75 | 17.89 | 21.23 |
| 0.6 | 14.83 | 12.16 | 33.34 | 29.54 |
| 0.5 | 16.53 | 13.36 | 34.02 | 30.33 |
| 0.4 | 17.81 | 18.44 | 50.32 | 43.13 |
| 0.3 | 21.44 | 24.97 | 60.53 | 56.14 |

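| Location | Position | EDT (ms) | RT20 (ms) |
|---|---|---|---|
| In a microphone array | 1 | 8.1 | 24.0 |
| In a microphone array | 2 | 2.7 | 20.0 |
| In a microphone array | 3 | 2.0 | 11.6 |
| In a microphone array | 4 | 2.0 | 5 |
| In a microphone array | 5 | 2.1 | 15.5 |
| In a microphone array | 6 | 2.6 | 20.1 |
| In a microphone array | 7 | 9.0 | 23.5 |
| In control space | 8 (A) | 10.5 | 22.7 |
| In control space | 9 (B) | 22.8 | 23.5 |
| In control space | 10 (C) | 13.2 | 23.6 |
| In control space | 11 (D) | 12.2 | 25.3 |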

| Position (r, θ) | PVC pipe, anechoic | PVC pipe, classroom | Person, anechoic | Person, classroom |
|---|---|---|---|---|
| A (1 m, 135°) | Δθ = 0°, Δr = 0.04 m (4%) | Δθ = 0°, Δr = 0.06 m (6%) | Δθ = 0°, Δr = 0.03 m (3%) | Δθ = 0°, Δr = 0.11 m (11%) |
| B (1.5 m, 90°) | Δθ = 0°, Δr = 0.02 m (1.33%) | Δθ = 0°, Δr = 0.03 m (2%) | Δθ = 0°, Δr = 0.09 m (6%) | Δθ = 0°, Δr = 0.51 m (34%) |
| C (2 m, 90°) | Δθ = 0°, Δr = 0.03 m (1.5%) | Δθ = 0°, Δr = 0.13 m (6.5%) | Δθ = 5°, Δr = 0.05 m (2.5%) | Δθ = 5°, Δr = 0.43 m (21.5%) |
| D (2.5 m, 75°) | Δθ = 0°, Δr = 0.01 m (0.4%) | Δθ = 0°, Δr = 0.04 m (1.6%) | Δθ = 0°, Δr = 0.13 m (5.2%) | Δθ = 0°, Δr = 0.27 m (10.8%) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kim, K.; Wang, S.; Ryu, H.; Lee, S.Q. Acoustic-Based Position Estimation of an Object and a Person Using Active Localization and Sound Field Analysis. Appl. Sci. 2020, 10, 9090. https://doi.org/10.3390/app10249090
