FirepanIF: High Performance Host-Side Flash Cache Warm-Up Method in Cloud Computing

Abstract

1. Introduction

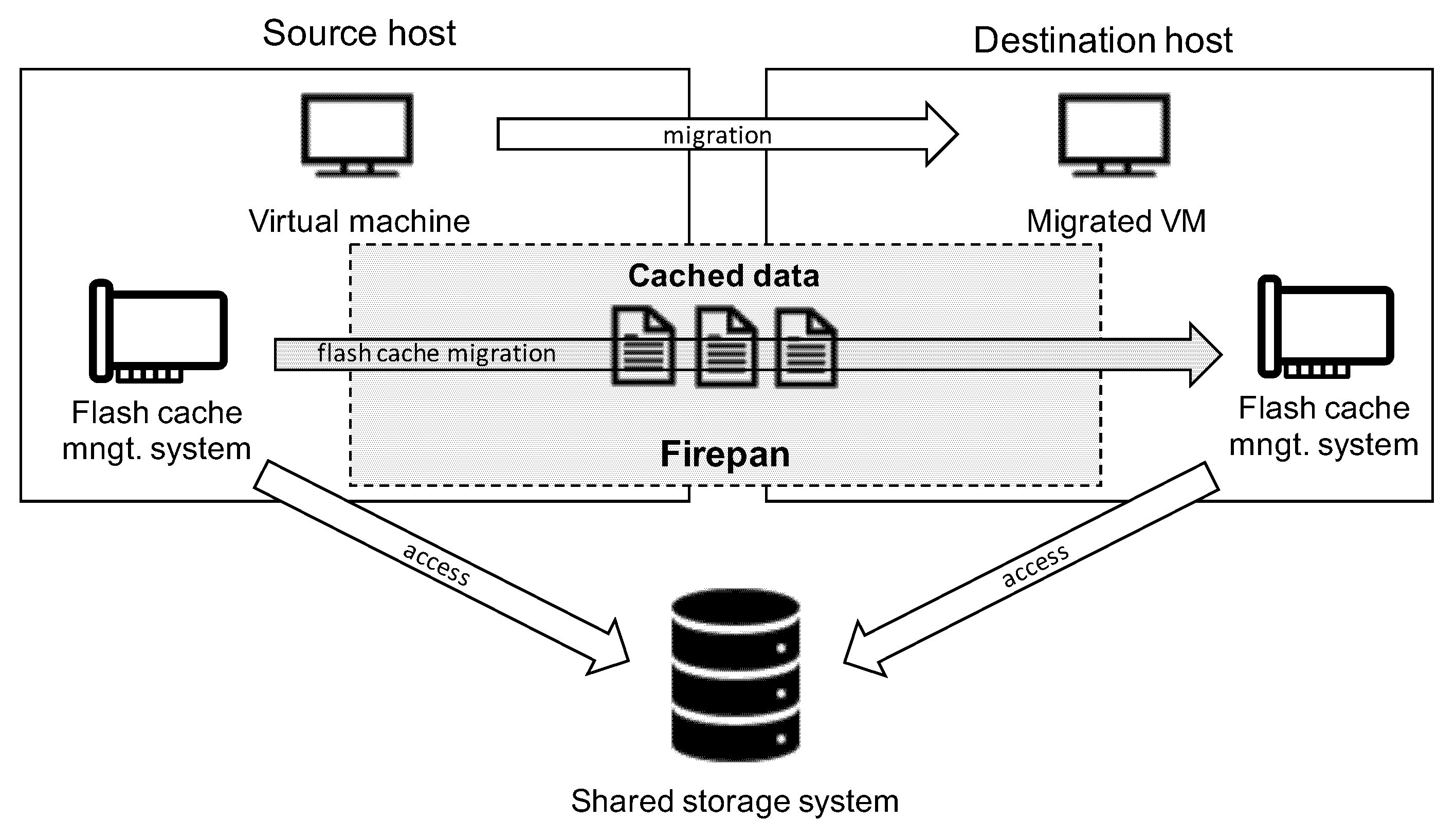

- We propose Firepan and FirepanIF, which use the source node’s flash cache as the data source for flash-cache warm-up. Because they avoid storage and network congestion at the shared storage server, they can migrate the flash cache more quickly than conventional methods.

- We develop FirepanIF, which simultaneously achieves rapid flash-cache migration and minimizes VM downtime by means of an invalidation filter. The filter solves the synchronization problem between the I/O operations issued by the destination VM and the ongoing flash-cache migration.

- We implement and evaluate three flash-cache migration techniques (Cachemior, Firepan, and FirepanIF) in a realistic virtualized environment. FirepanIF improves the performance of the I/O workload by up to 21.87% over the NoCache Migration baseline when the flash cache size is 20 GB. In addition, the experimental results confirm that our approach has no negative effect on neighboring VMs.
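The synchronization problem that the invalidation filter addresses can be illustrated with a minimal Python sketch. It rests on one plausible reading of the text, which is an assumption: the destination VM resumes issuing I/O while flash-cache blocks are still arriving from the source node, so a block the VM has just written could be overwritten by an older migrated copy. Here a hypothetical `InvalidationFilter` records every block the guest writes during migration, and a hypothetical `warm_up` drops any incoming copy of such a block. The names, the block granularity, and the dict-based cache are illustrative only and are not the authors' Algorithm 1; reads and partially transferred blocks are ignored.

```python
# Hypothetical sketch of an invalidation filter during flash-cache warm-up.
# NOT the authors' Algorithm 1: block granularity, the names below, and the
# dict-based cache are assumptions chosen for illustration.


class InvalidationFilter:
    """Remembers blocks the destination VM wrote while migration is in flight."""

    def __init__(self) -> None:
        self._invalidated: set[int] = set()

    def mark(self, block: int) -> None:
        # A guest write makes any copy of this block still in transit stale.
        self._invalidated.add(block)

    def admit(self, block: int) -> bool:
        # A migrated block may be installed only if the guest has not
        # written it since migration started.
        return block not in self._invalidated


def guest_write(cache: dict[int, bytes], flt: InvalidationFilter,
                block: int, data: bytes) -> None:
    """Destination-VM write: update the local flash cache and record the block."""
    cache[block] = data
    flt.mark(block)


def warm_up(cache: dict[int, bytes], incoming: list[tuple[int, bytes]],
            flt: InvalidationFilter) -> int:
    """Install migrated (block, data) pairs that pass the filter.

    Returns the number of blocks installed; stale copies are dropped.
    """
    installed = 0
    for block, data in incoming:
        if flt.admit(block):
            cache[block] = data
            installed += 1
    return installed


if __name__ == "__main__":
    cache: dict[int, bytes] = {}
    flt = InvalidationFilter()
    guest_write(cache, flt, 7, b"new")                 # VM writes block 7 first
    n = warm_up(cache, [(7, b"old"), (8, b"x")], flt)  # stale block 7 arrives later
    print(n, cache[7], cache[8])
```

In the demo, the VM writes block 7 before the migrated copy arrives, so `warm_up` installs only block 8 and the VM's newer data for block 7 survives. Because the check is a set lookup per incoming block, a filter of this kind would let the VM keep running during warm-up rather than pausing until migration finishes.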

2. Background and Related Work

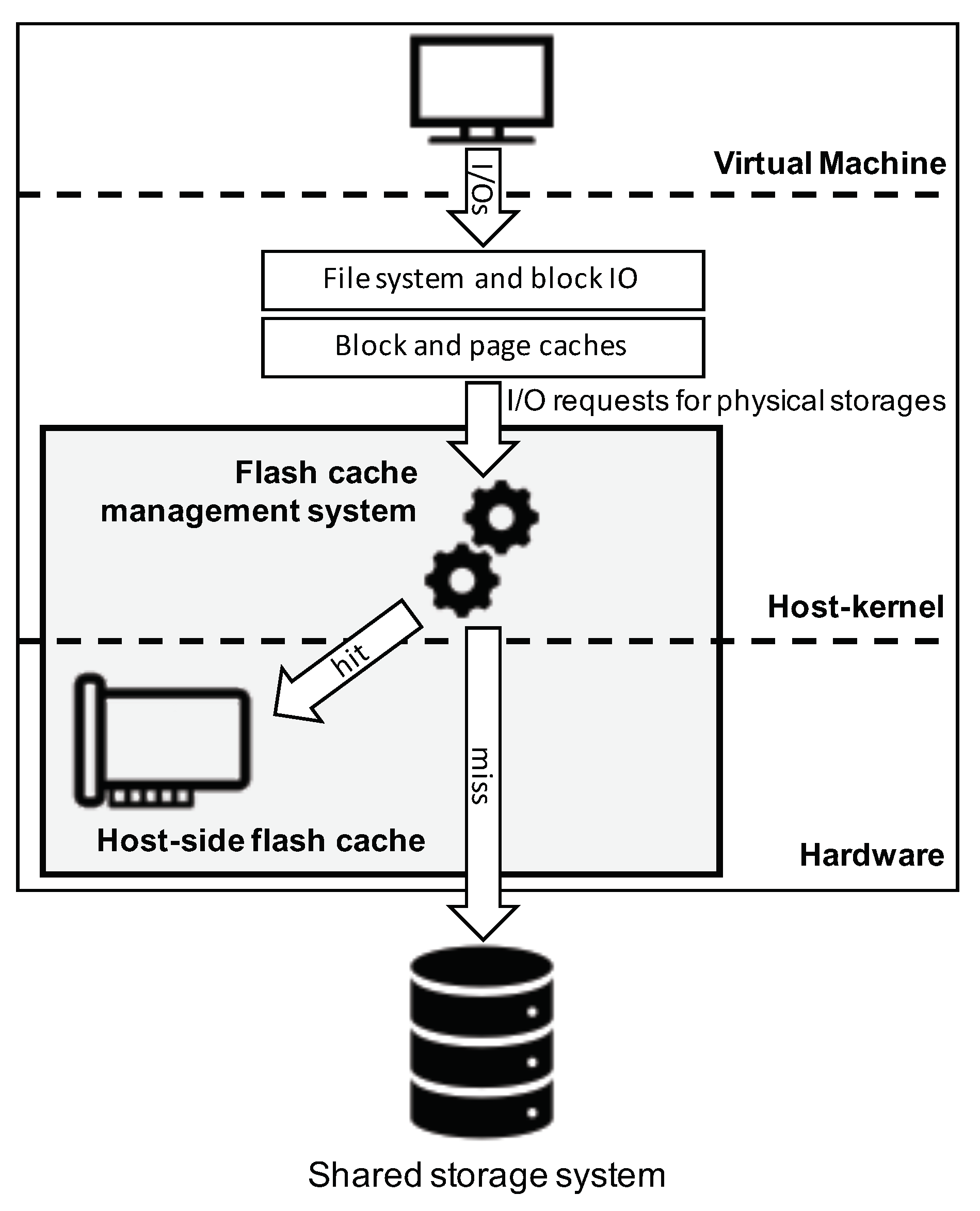

2.1. SSD Caching Software

2.2. Related Work

3. Design

3.1. Cachemior

3.2. Firepan

3.3. FirepanIF

Algorithm 1: Dispatch with invalidation filtering.

Algorithm 2: Dispatch functions for the flash cache and the shared storage.
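Algorithm 2 names separate dispatch functions for the flash cache and the shared storage. As a hedged illustration only, the Python sketch below shows the generic host-side caching path such functions typically implement: a read that hits in the local flash cache is served on the node, while a miss goes to the shared storage server and the block is then installed in the flash cache. The class names, the dict-backed devices, and the write-through policy are assumptions, not the authors' code. The read counters make the motivation from Section 1 visible: every miss on a cold cache adds a request at the shared storage server.

```python
# Hypothetical sketch of read/write dispatch between a host-side flash cache
# and shared storage. NOT the authors' Algorithm 2: the class names, the
# dict-backed devices, and the write-through policy are assumptions.


class BlockDevice:
    """Toy block device that counts how many reads it serves."""

    def __init__(self) -> None:
        self.blocks: dict[int, bytes] = {}
        self.reads = 0

    def read(self, block: int) -> bytes:
        self.reads += 1
        return self.blocks[block]

    def write(self, block: int, data: bytes) -> None:
        self.blocks[block] = data


class HostSideCache:
    def __init__(self, flash: BlockDevice, shared: BlockDevice) -> None:
        self.flash = flash    # local SSD on the compute node
        self.shared = shared  # shared storage server reached over the network

    def dispatch_read(self, block: int) -> bytes:
        if block in self.flash.blocks:   # hit: served locally
            return self.flash.read(block)
        data = self.shared.read(block)   # miss: request goes to shared storage
        self.flash.write(block, data)    # populate the flash cache
        return data

    def dispatch_write(self, block: int, data: bytes) -> None:
        self.shared.write(block, data)   # write-through (assumed policy)
        self.flash.write(block, data)


if __name__ == "__main__":
    flash, shared = BlockDevice(), BlockDevice()
    shared.blocks[1] = b"a"
    disk = HostSideCache(flash, shared)
    disk.dispatch_read(1)  # cold cache: miss, served by shared storage
    disk.dispatch_read(1)  # warm cache: hit, served by the flash cache
    print(shared.reads, flash.reads)
```

Write-through is chosen here only because it keeps the shared storage authoritative, which simplifies the sketch; a write-back cache would need extra handling for dirty blocks during migration.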

4. Evaluation

4.1. Experimental Setup

4.2. Effectiveness of Firepan and FirepanIF

4.3. Neighborhood Effect

4.3.1. Performance Model for Neighborhood Effect

4.3.2. Experimental Result

4.4. Effect of Flash Cache Capacity

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Time | NoCache Migration (Baseline) | Cachemior | Firepan | FirepanIF |
|---|---|---|---|---|
| 2000 s | 41.89 | 45.25 (+8.02%) | 43.11 (+2.93%) | 48.67 (+16.18%) |
| 2100 s | 49.58 | 51.16 (+3.19%) | 61.69 (+24.44%) | 61.88 (+24.82%) |
| 2200 s | 53.48 | 55.90 (+4.53%) | 60.13 (+12.44%) | 62.18 (+16.26%) |
| 2300 s | 55.88 | 58.46 (+4.62%) | 62.98 (+12.71%) | 61.59 (+10.22%) |
| avg. (first 400 s) | 50.21 | 52.69 (+4.94%) | 56.98 (+13.49%) | 58.58 (+16.68%) |
| 2400 s | 56.09 | 62.21 (+10.91%) | 61.23 (+9.17%) | 60.72 (+8.26%) |
| 2500 s | 57.28 | 61.66 (+7.65%) | 61.04 (+6.57%) | 60.42 (+5.48%) |
| 2600 s | 56.99 | 63.26 (+11.00%) | 62.36 (+9.41%) | 61.39 (+7.73%) |
| 2700 s | 58.57 | 61.91 (+5.70%) | 59.89 (+2.25%) | 64.47 (+10.07%) |
| avg. (total 800 s) | 53.72 | 57.48 (+7.00%) | 59.05 (+9.93%) | 60.16 (+12.00%) |

| Flash cache size (avg., first 400 s) | NoCache Migration (Baseline) | Cachemior | Firepan | FirepanIF |
|---|---|---|---|---|
| 5 GB | 43.62 | 44.55 (+2.14%) | 46.23 (+5.99%) | 46.23 (+5.97%) |
| 10 GB | 50.21 | 52.69 (+4.94%) | 56.98 (+13.49%) | 58.58 (+16.68%) |
| 20 GB | 48.75 | 48.02 (-1.51%) | 56.61 (+16.11%) | 59.41 (+21.87%) |

| Flash cache size (avg., total 800 s) | NoCache Migration (Baseline) | Cachemior | Firepan | FirepanIF |
|---|---|---|---|---|
| 5 GB | 45.64 | 46.36 (+1.57%) | 47.17 (+3.34%) | 46.97 (+2.92%) |
| 10 GB | 53.72 | 57.48 (+7.00%) | 59.05 (+9.93%) | 60.16 (+12.00%) |
| 20 GB | 54.86 | 57.93 (+5.60%) | 61.89 (+12.80%) | 62.79 (+14.45%) |
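The percentages in parentheses are relative improvements over the NoCache Migration baseline, and the avg. rows match the arithmetic mean of the per-time-point rows (for example, the four baseline values from 2000 s to 2300 s average to 50.2075, reported as 50.21). The short Python sketch below recomputes a few entries from the tables' own numbers. The helper names are ours, and the formula (value - baseline) / baseline × 100 is inferred from the entries rather than stated in the text.

```python
# Recomputes derived entries of the tables above from their own numbers.
# The helpers are illustrative; only the values are taken from the tables.


def mean(values: list[float]) -> float:
    """Arithmetic mean, as used for the avg. rows."""
    return sum(values) / len(values)


def improvement_pct(value: float, baseline: float) -> float:
    """Relative improvement over the NoCache Migration baseline, in percent."""
    return (value - baseline) / baseline * 100.0


# NoCache Migration baseline at 2000 s, 2100 s, ..., 2700 s.
baseline = [41.89, 49.58, 53.48, 55.88, 56.09, 57.28, 56.99, 58.57]

print(f"{mean(baseline[:4]):.4f}")              # avg. (first 400 s)
print(f"{mean(baseline):.4f}")                  # avg. (total 800 s)
print(f"{improvement_pct(45.25, 41.89):.2f}%")  # Cachemior at 2000 s
print(f"{improvement_pct(59.41, 48.75):.2f}%")  # FirepanIF, 20 GB, first 400 s
```

The two means round to the reported 50.21 and 53.72, and the two percentages reproduce +8.02% and +21.87%. Recomputing from the rounded table entries can differ in the last digit (61.69 against 49.58 gives 24.43%, whereas the table reports +24.44%), presumably because the authors computed the percentages from unrounded measurements.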

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Park, H.; Lee, M.; Hong, C.-H. FirepanIF: High Performance Host-Side Flash Cache Warm-Up Method in Cloud Computing. Appl. Sci. 2020, 10, 1014. https://doi.org/10.3390/app10031014
