Temporal Saliency-Based Suspicious Behavior Pattern Detection

Abstract

1. Introduction

2. Materials and Methods

2.1. Data Acquisition

2.2. Description of Proposed Method

2.2.1. Preprocessing for Denoising

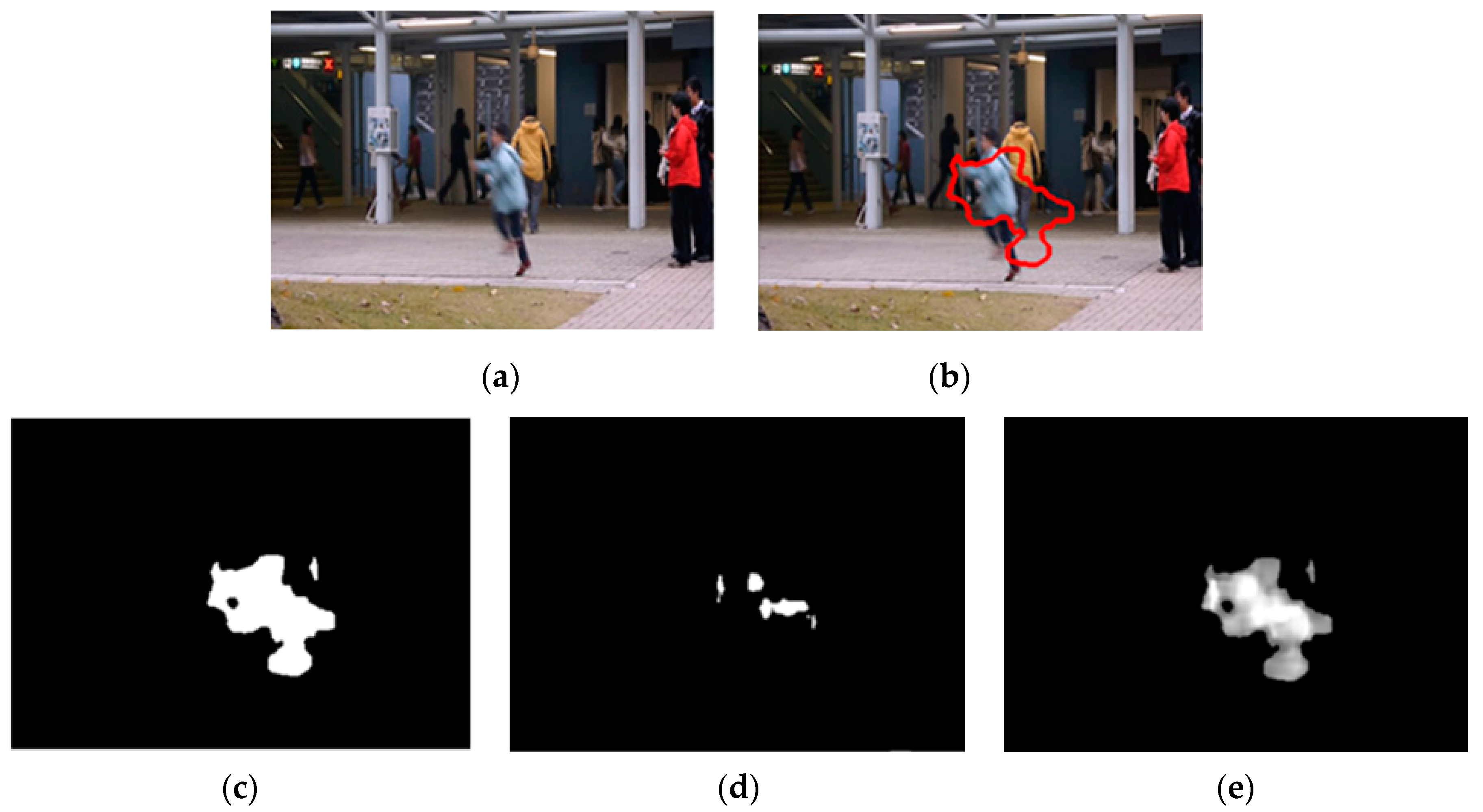

2.2.2. Feature Map Generation

2.2.3. Reactivity Map Generation

3. Results and Discussion

3.1. Comparison Results from Experiments with UMN Dataset and Avenue Dataset

3.2. Analysis of Examples of Detection Results for Abnormalities with 10 Different Types of Video Sequences

3.3. Overall Performance Evaluation Results of 10 Different Types of Video Sequences

4. Conclusions

Funding

Conflicts of Interest

References

- Why Vision Is the Most Important Sense Organ. Available online: https://medium.com/@SmartVisionLabs/why-vision-is-the-most-important-sense-organ-60a2cec1c164 (accessed on 30 October 2019).

- Beckermann, A. Visual Information Processing and Phenomenal Consciousness. In Conscious Experience; Schöningh: Paderborn, Germany, 1995; pp. 409–424.

- Szeliski, R. Computer Vision: Algorithms and Applications; Springer-Verlag: London, UK, 2011.

- Sage, K.; Young, S. Computer vision for security applications. In Proceedings of the IEEE 32nd Annual 1998 International Carnahan Conference on Security Technology, Alexandria, VA, USA, 12–14 October 1998; pp. 210–215.

- Stubbington, B.; Keenan, P. Intelligent scene monitoring; technical aspects and practical experience. In Proceedings of the 29th Annual 1995 International Carnahan Conference on Security Technology, Sanderstead, Surrey, UK, 18–20 October 1995; pp. 364–375.

- Davies, A.; Velastin, S. A Progress Review of Intelligent CCTV Surveillance Systems. In Proceedings of the IDAACS’05 Workshop, Sofia, Bulgaria, 5–7 September 2005; pp. 417–423.

- Sanderson, C.; Bigdeli, A.; Shan, T.; Chen, S.; Berglund, E.; Lovell, B.C. Intelligent CCTV for Mass Transport Security: Challenges and Opportunities for Video and Face Processing. In Progress in Computer Vision and Image Analysis; Bunke, H., Villanueva, J.J., Sanchez, G., Eds.; World Scientific: Singapore, 2010; Volume 73, pp. 557–573.

- Direkoglu, C.; Sah, M.; O’Connor, N.E. Abnormal crowd behavior detection using novel optical flow-based features. In Proceedings of the 2017 14th IEEE International Conference on Advanced Video and Signal Based Surveillance, Lecce, Italy, 29 August–1 September 2017; pp. 1–6.

- Xiang, J.; Fan, H.; Xu, J. Abnormal behavior detection based on spatial-temporal features. In Proceedings of the International Conference on Machine Learning and Cybernetics, Tianjin, China, 14–17 July 2013; pp. 871–876.

- Mehran, R.; Oyama, A.; Shah, M. Abnormal crowd behavior detection using social force model. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 935–942.

- Wu, S.; Moore, B.E.; Shah, M. Chaotic invariants of Lagrangian particle trajectories for anomaly detection in crowded scenes. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 2054–2060.

- Cong, Y.; Yuan, J.; Liu, J. Abnormal event detection in crowded scenes using sparse representation. Pattern Recognit. 2013, 46, 1851–1864.

- Roshtkhari, M.J.; Levine, M.D. Online Dominant and Anomalous Behavior Detection in Videos. In Proceedings of the 2013 International Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 2611–2618.

- Halbe, M.; Vyas, V.; Vaidya, Y. Abnormal Crowd Behavior Detection Based on Combined Approach of Energy Model and Threshold. In Proceedings of the 7th International Conference on Pattern Recognition and Machine Intelligence, Kolkata, India, 5–8 December 2017; pp. 187–195.

- Yu, T.H.; Moon, Y. Unsupervised Abnormal Behavior Detection for Real-time Surveillance Using Observed History. In Proceedings of the 2009 IAPR Conference on Machine Vision Applications, Yokohama, Japan, 20–22 May 2009; pp. 166–169.

- Li, N.; Zhang, Z. Abnormal Crowd Behavior Detection using Topological Method. In Proceedings of the 12th ACIS International Conference on Software Engineering, Networking and Parallel/Distributed Computing, Sydney, NSW, Australia, 6–8 July 2011; pp. 13–18.

- Chaudhary, S.; Khan, M.A.; Bhatnagar, C. Multiple Anomalous Activity Detection in Videos. In Proceedings of the 6th International Conference on Smart Computing and Communications, Kurukshetra, India, 7–8 December 2017; pp. 336–345.

- Zhong, H.; Shi, J.; Visontai, M. Detecting unusual activity in video. In Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Washington, DC, USA, 27 June–2 July 2004; pp. 819–826.

- Hamid, R.; Johnson, A.; Batta, S.; Bobick, A.; Isbell, C.; Coleman, G. Detection and explanation of anomalous activities: Representing activities as bags of event n-grams. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–25 June 2005; pp. 1031–1038.

- Hassner, T.; Itcher, Y.; Kliper-Gross, O. Violent flows: Real-time detection of violent crowd behavior. In Proceedings of the 2012 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Providence, RI, USA, 16–21 June 2012; pp. 1–6.

- Zhou, S.; Shen, W.; Zeng, D.; Fang, M.; Wei, Y.; Zhang, Z. Spatial-temporal convolutional neural networks for anomaly detection and localization in crowded scenes. Signal Process. Image Commun. 2016, 47, 358–368.

- Boiman, O.; Irani, M. Detecting Irregularities in Images and in Video. Int. J. Comput. Vis. 2007, 74, 11–31.

- Vu, H.; Nguyen, T.D.; Travers, A.; Venkatesh, S.; Phung, D. Energy-Based Localized Anomaly Detection in Video Surveillance. In Proceedings of the 21st Pacific-Asia Conference on Knowledge Discovery and Data Mining, Jeju, South Korea, 23–26 May 2017; pp. 641–653.

- Giorno, D.A.; Bagnell, J.; Hebert, M. A Discriminative Framework for Anomaly Detection in Large Videos. In Proceedings of the 2016 European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 334–349.

- Wu, S.; Wong, H.; Yu, Z. A Bayesian Model for Crowd Escape Behavior Detection. IEEE Trans. Circuits Syst. Video Technol. 2014, 24, 85–98.

- Li, S.; Yang, Y.; Liu, C. Anomaly detection based on two global grid motion templates. Signal Process. Image Commun. 2018, 60, 6–12.

- Al-Dhamari, A.; Sudirman, R.; Mahmood, N.H. Abnormal behavior detection in automated surveillance videos: A review. J. Theor. Appl. Inf. Technol. 2017, 95, 5245–5263.

- Cong, Y.; Yuan, J.; Liu, J. Sparse reconstruction cost for abnormal event detection. In Proceedings of the 2011 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 20–25 June 2011; pp. 3449–3456.

- Hu, X.; Huang, Y.; Duan, Q.; Ci, W.; Dai, J.; Yang, H. Abnormal event detection in crowded scenes using histogram of oriented contextual gradient descriptor. EURASIP J. Adv. Signal Process. 2018, 54.

- Using Optical Flow to Find Direction of Motion. Available online: http://www.cs.utah.edu/~ssingla/CV/Project/OpticalFlow.html (accessed on 12 November 2019).

- Lucas, B.D.; Kanade, T. An Iterative Image Registration Technique with an Application to Stereo Vision. In Proceedings of the 1981 DARPA Image Understanding Workshop, 23 April 1981; pp. 121–130.

- Adelson, E.H.; Anderson, C.H.; Bergen, J.R.; Burt, P.J.; Ogden, J.M. Pyramid methods in image processing. RCA Eng. 1984, 29, 33–41.

- Adelson, E.H.; Bergen, J.R. Spatiotemporal energy models for the perception of motion. J. Opt. Soc. Am. A 1985, 2, 284–299.

- Farnebäck, G. Two-Frame Motion Estimation Based on Polynomial Expansion. In Proceedings of the 2003 Scandinavian Conference on Image Analysis, Halmstad, Sweden, 29 June–2 July 2003; pp. 363–370.

| No. | Dataset | Description of Suspicious Behavior |
|---|---|---|
| 1 | UMN dataset: Lawn | Multiple people are running away in multiple directions simultaneously with an explosion sound. |
| 2 | UMN dataset: Indoor | |
| 3 | UMN dataset: Plaza | |
| 4 | Avenue dataset | A few people are jumping and running in front of the building while others are walking. |
| 5 | Walk dataset | People are walking normally. There are no abnormal behaviors. |
| 6 | Bump data | A man smashes into an obstacle. |
| 7 | Fall down data | A man is falling over an obstacle. |
| 8 | Water data | A man walking on the road falls into the water. |
| 9 | Stairs fall down data | A man coming down the stairs falls down. |
| 10 | CCTV violent robbery data in South Kensington | Two men are assaulting one man. |

| UMN Dataset | Proposed Method | [8] | [25] | [11] | [10] | [12] |
|---|---|---|---|---|---|---|
| Scene 1: Lawn | 99.20% | 99.10% | 99.03% | 90.62% | 84.41% | 90.52% |
| Scene 2: Indoor | 97.10% | 94.85% | 95.36% | 85.06% | 82.35% | 78.48% |
| Scene 3: Plaza | 93.20% | 97.76% | 96.63% | 91.58% | 90.83% | 92.70% |
| Average | 96.50% | 96.46% | 96.40% | 87.91% | 85.09% | 84.70% |

| Dataset | Proposed Method | [26] | [29] | [21] |
|---|---|---|---|---|
| Avenue dataset | 90.18% | 87.70% | 87.19% | 80.30% |

| No. | Dataset | Accuracy | Precision | Recall |
|---|---|---|---|---|
| 1 | UMN dataset: Lawn | 99.2% | 99.8% | 91.1% |
| 2 | UMN dataset: Indoor | 97.1% | 99.5% | 93.7% |
| 3 | UMN dataset: Plaza | 93.2% | 98.9% | 92.5% |
| 4 | Avenue dataset | 90.1% | 93.2% | 94.5% |
| 5 | Walk dataset | 100% | 100% | 100% |
| 6 | Bump data | 95.8% | 100% | 94.4% |
| 7 | Fall down data | 95% | 91.6% | 100% |
| 8 | Water data | 82.7% | 79.1% | 100% |
| 9 | Stairs fall down data | 94.4% | 100% | 84.6% |
| 10 | CCTV violent robbery data in South Kensington | 91.4% | 100% | 90.6% |
| | Average | 93.89% | 96.21% | 94.9% |
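
As a minimal sketch (not the author's evaluation code), the three metrics reported in the table above can be computed from frame-level confusion counts, and the last row can be recomputed as an unweighted mean over the ten rows. The function name `frame_metrics`, the example counts, and the convention of scoring 100% when a denominator is zero are assumptions made for illustration; only the per-dataset percentages are taken from the table.

```python
from statistics import fmean


def frame_metrics(tp, fp, tn, fn):
    """Return (accuracy, precision, recall) in percent from frame-level counts.

    tp/fp/tn/fn are counts of frames labelled abnormal or normal.
    Assumption: when a denominator is zero (e.g. a sequence with no
    abnormal frames and no detections), the metric is scored as 100%.
    """
    total = tp + fp + tn + fn
    accuracy = 100.0 * (tp + tn) / total
    precision = 100.0 * tp / (tp + fp) if (tp + fp) else 100.0
    recall = 100.0 * tp / (tp + fn) if (tp + fn) else 100.0
    return accuracy, precision, recall


# (accuracy, precision, recall) for sequences 1..10, copied from the table.
rows = [
    (99.2, 99.8, 91.1),
    (97.1, 99.5, 93.7),
    (93.2, 98.9, 92.5),
    (90.1, 93.2, 94.5),
    (100.0, 100.0, 100.0),
    (95.8, 100.0, 94.4),
    (95.0, 91.6, 100.0),
    (82.7, 79.1, 100.0),
    (94.4, 100.0, 84.6),
    (91.4, 100.0, 90.6),
]

# Unweighted mean of each column, rounded to two decimals.
column_means = tuple(round(fmean(col), 2) for col in zip(*rows))

if __name__ == "__main__":
    # Hypothetical counts, only to show how the three metrics relate.
    print(frame_metrics(tp=90, fp=10, tn=880, fn=20))
    print(column_means)
```

Under these assumptions the accuracy and precision means reproduce the reported 93.89% and 96.21%. The unweighted recall mean comes out near 94.1%, so the reported 94.9% may rest on a different weighting or rounding.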

© 2020 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Cheoi, K.J. Temporal Saliency-Based Suspicious Behavior Pattern Detection. Appl. Sci. 2020, 10, 1020. https://doi.org/10.3390/app10031020