Mixed Reality Enhanced User Interactive Path Planning for Omnidirectional Mobile Robot

Abstract

1. Introduction

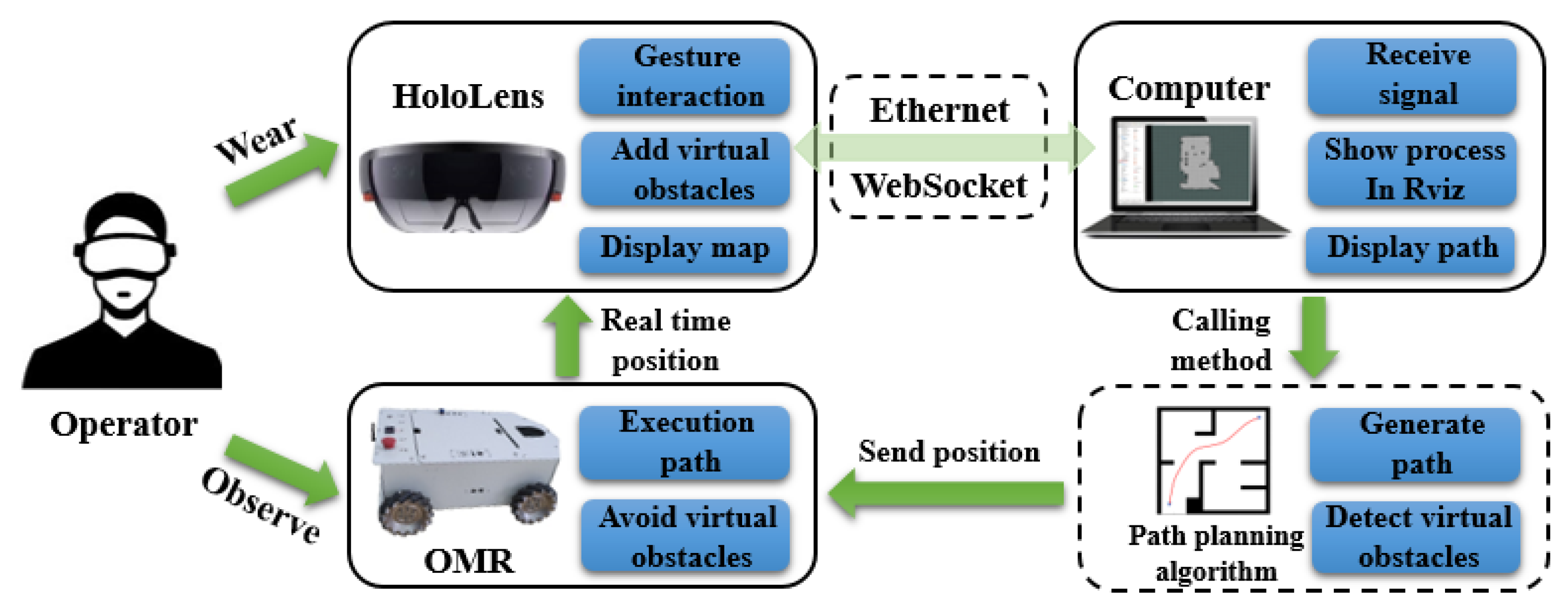

2. System Description

3. Mixed Reality System

3.1. Mixed Reality

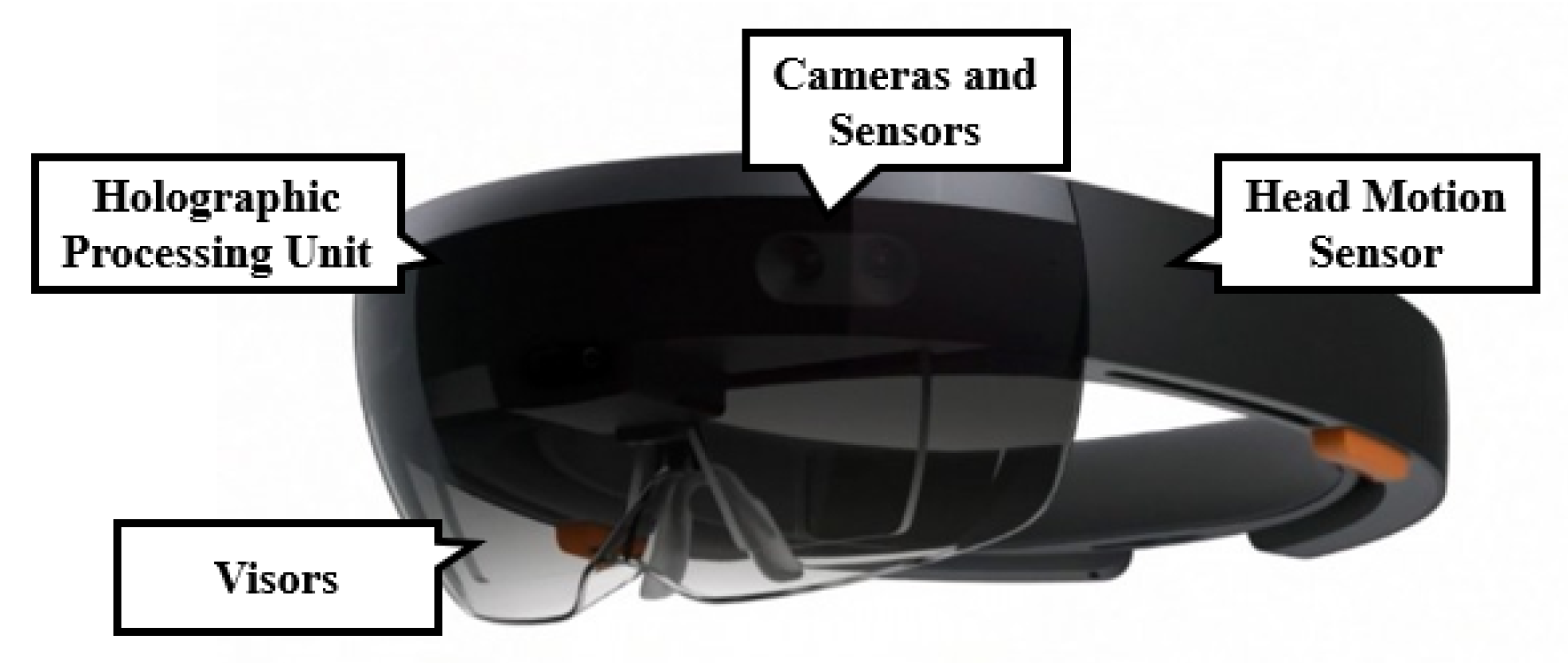

3.2. Microsoft HoloLens

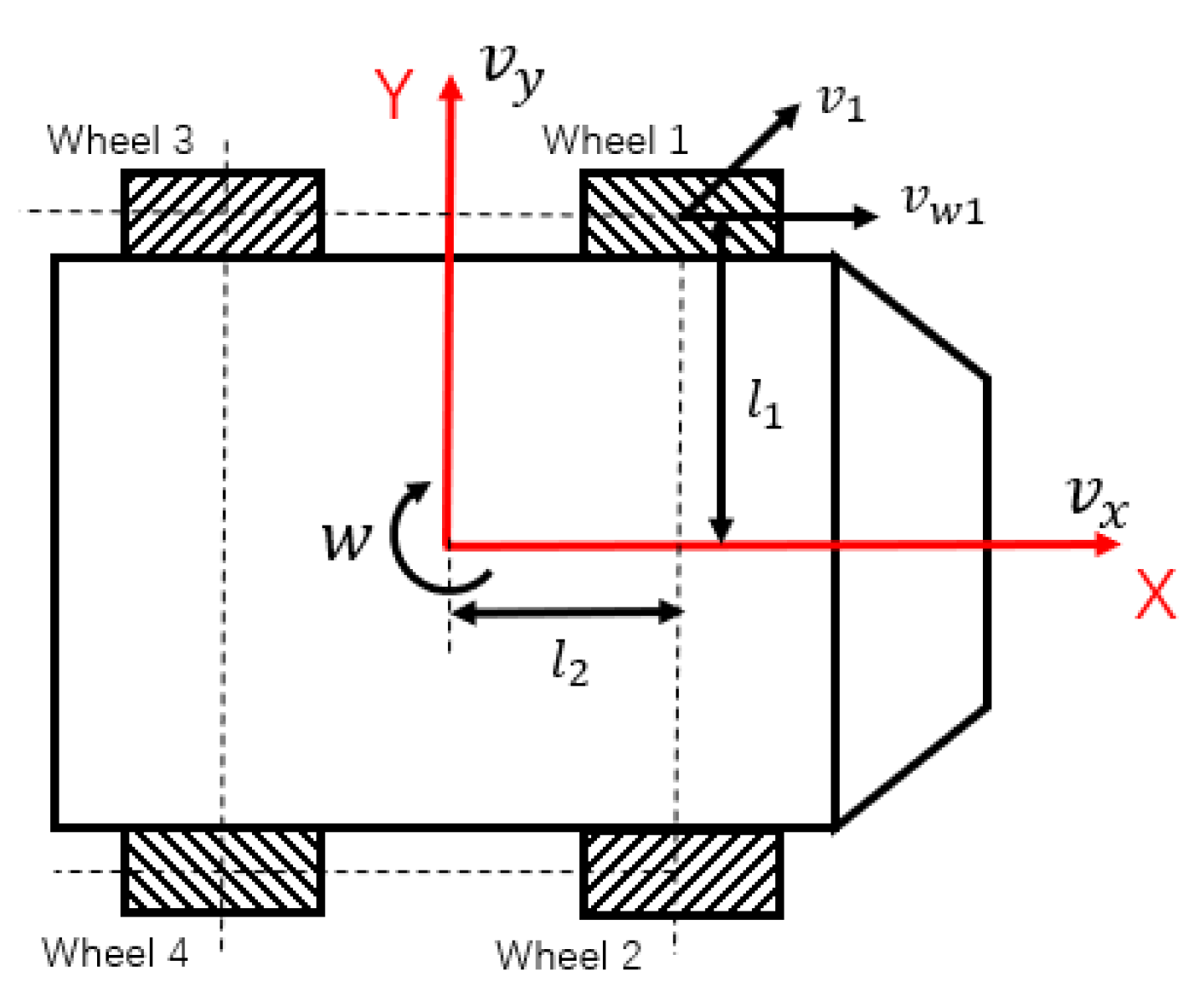

4. Kinematics of the Omnidirectional Mobile Robot

5. The Description of VFH* Algorithm

5.1. The VFH* Algorithm

- (1)

- Generating the polar histogram: The VFH+ algorithm divides the active region of the current robot position into multiple sectors and calculates the obstacle density in each sector. Then the density of each sector is arranged into histogram according to the sector number.

- (2)

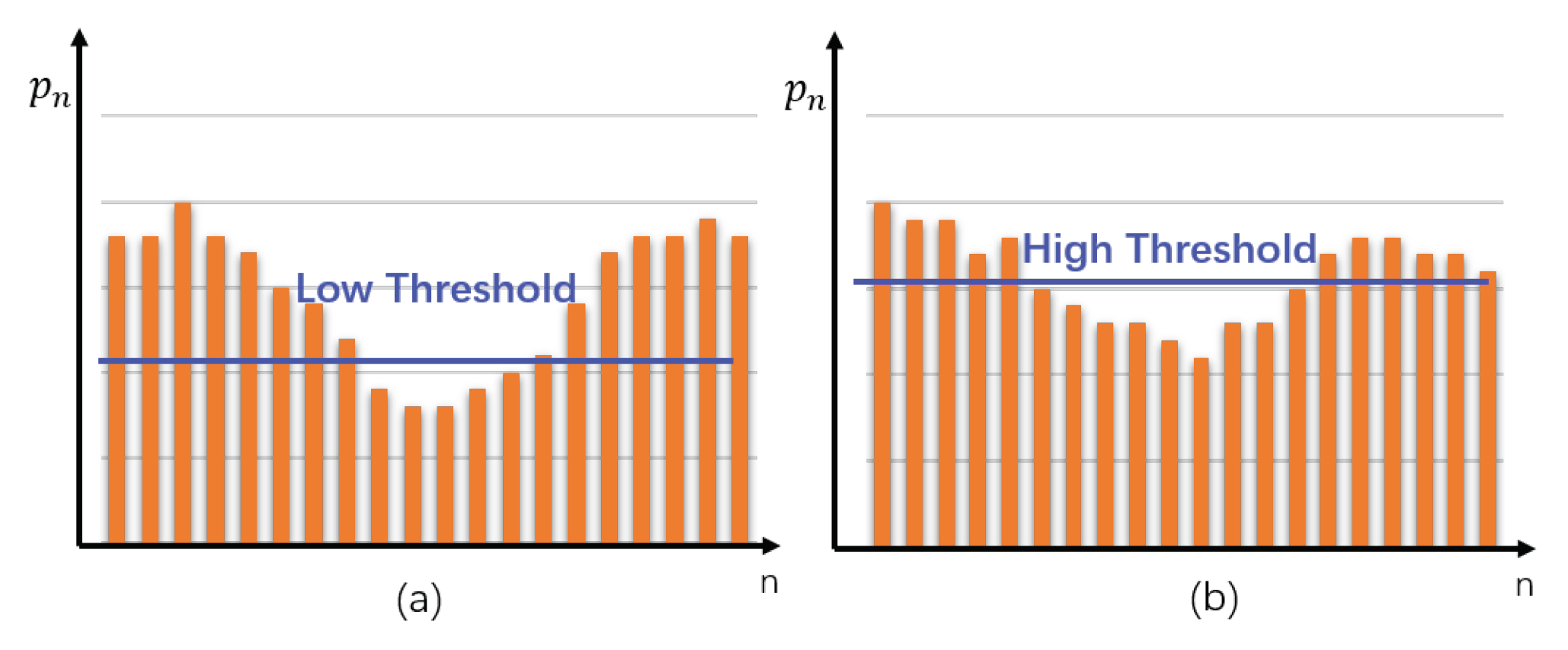

- Binarization polar histogram: One must select the appropriate threshold according to the actual situation, and binarize the histogram generated in the previous step. Sectors above the threshold are set as impassable areas, while sectors below the threshold are set as passable areas.

- (3)

- Mask polar histogram: Considering the kinematics and the dynamics characteristics of the robot, the current inaccessible sectors are set as the impassable areas.

- (4)

- Determining the direction of motion: The passable areas in the polar histogram are used as the candidate direction; the cost is calculated according to the cost function; and the cost of the passable area is sorted. The commonly used cost function is shown as follows:

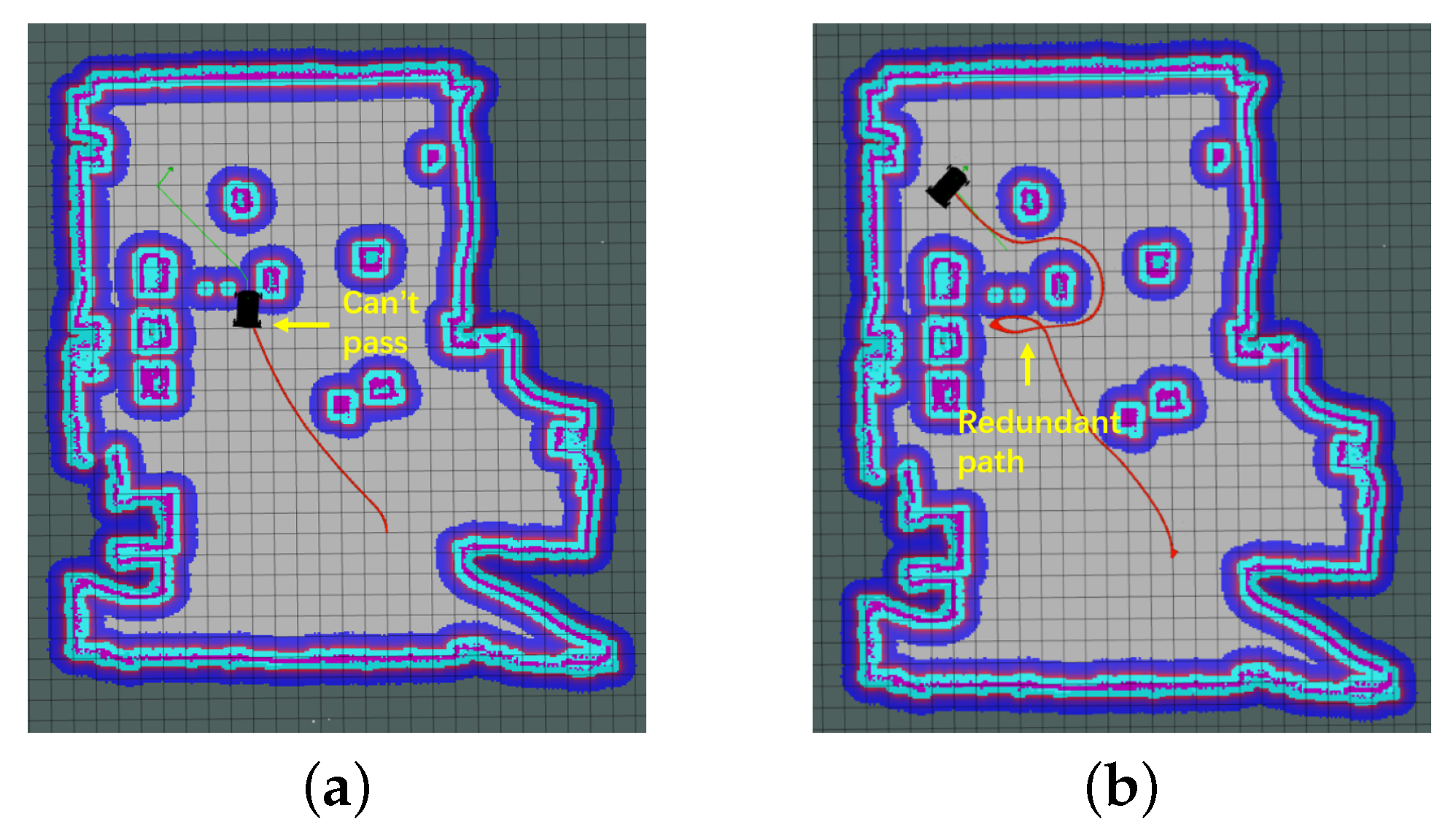
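The four steps above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function names, the linear distance weighting, the single threshold, and the weights `(5.0, 2.0, 2.0)` are illustrative assumptions, and the cost is assumed to take the three-term form commonly given for VFH+ (deviation from the target direction, from the current heading, and from the previously selected direction). The masking step (3) is omitted because it depends on the robot's kinematic model.

```python
import math


def polar_histogram(obstacles, robot_xy, n_sectors=72, active_radius=3.0):
    """Step (1): accumulate obstacle density per angular sector."""
    hist = [0.0] * n_sectors
    width = 2 * math.pi / n_sectors
    for ox, oy in obstacles:
        dx, dy = ox - robot_xy[0], oy - robot_xy[1]
        dist = math.hypot(dx, dy)
        if dist > active_radius:
            continue  # obstacle lies outside the active region
        angle = math.atan2(dy, dx) % (2 * math.pi)
        sector = int(angle / width) % n_sectors
        # Assumption: closer obstacles contribute more (linear weighting).
        hist[sector] += active_radius - dist
    return hist


def binarize(hist, threshold):
    """Step (2): 1 = impassable (above threshold), 0 = passable."""
    return [1 if h > threshold else 0 for h in hist]


def sector_diff(a, b, n_sectors):
    """Smallest circular distance between two sector indices."""
    d = abs(a - b) % n_sectors
    return min(d, n_sectors - d)


def choose_direction(binary, target, heading, previous,
                     weights=(5.0, 2.0, 2.0)):
    """Step (4): return the passable sector with the lowest cost.

    Assumed cost: mu1*diff(c, target) + mu2*diff(c, heading)
                  + mu3*diff(c, previous).
    Returns None when every sector is blocked.
    """
    n = len(binary)
    mu1, mu2, mu3 = weights
    best, best_cost = None, math.inf
    for c, blocked in enumerate(binary):
        if blocked:
            continue
        cost = (mu1 * sector_diff(c, target, n)
                + mu2 * sector_diff(c, heading, n)
                + mu3 * sector_diff(c, previous, n))
        if cost < best_cost:
            best, best_cost = c, cost
    return best


if __name__ == "__main__":
    hist = polar_histogram([(1.0, 0.1), (0.5, 2.0)], (0.0, 0.0), n_sectors=8)
    binary = binarize(hist, threshold=0.5)
    print(binary, choose_direction(binary, target=0, heading=0, previous=0))
```

Choosing the first weight larger than the sum of the other two biases the selection toward the target direction; other weightings trade goal-seeking for smoother paths.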

5.2. Modification of VFH* Algorithm

5.2.1. The Threshold Setting

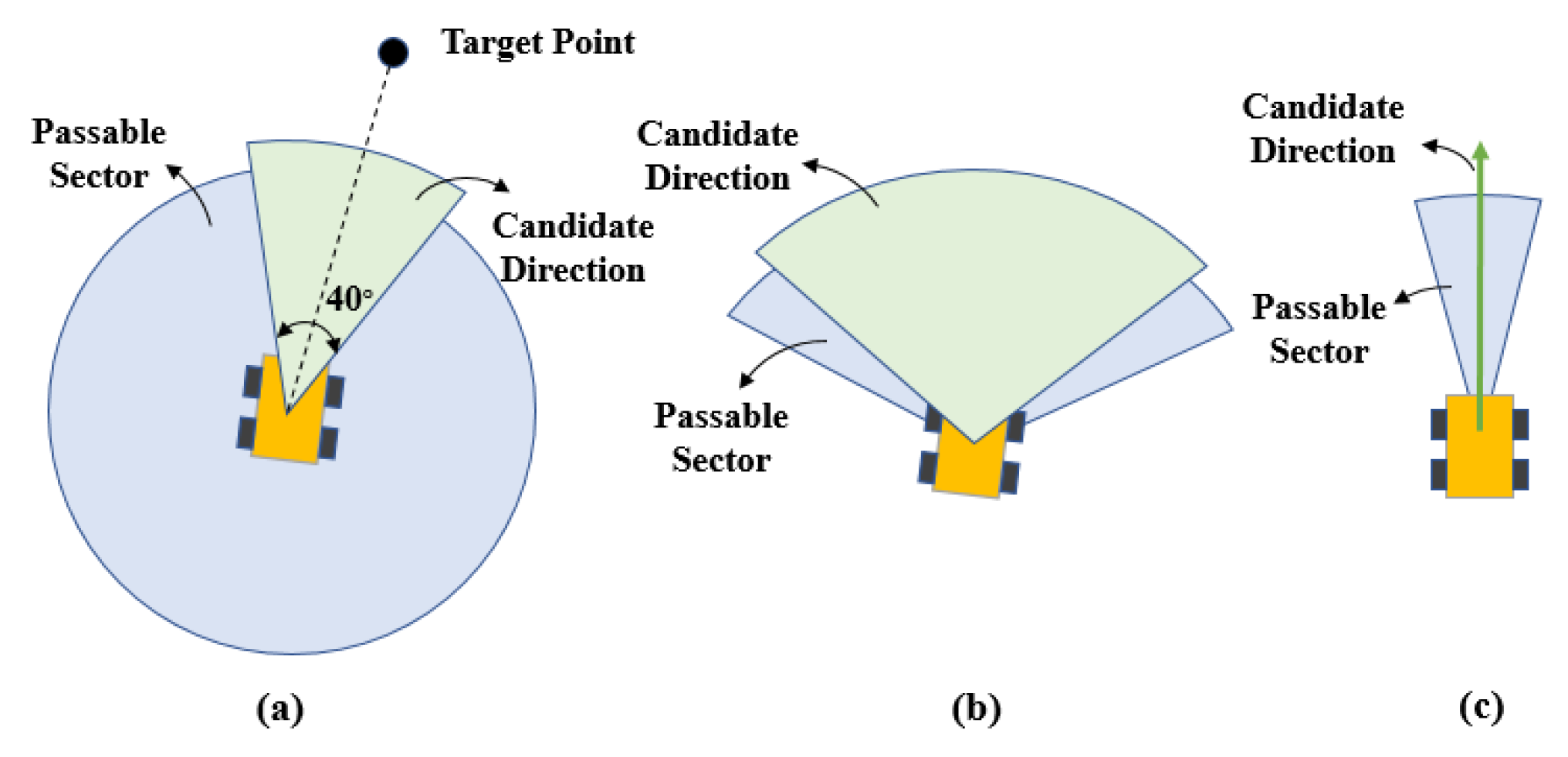

5.2.2. The Candidate Direction Selection

5.2.3. The Cost Function

| Algorithm 1 The improved VFH* algorithm |
|---|
| Input: Map information, start point and target point location; |
| Output: Trajectory; |

6. Experimental Results and Analysis

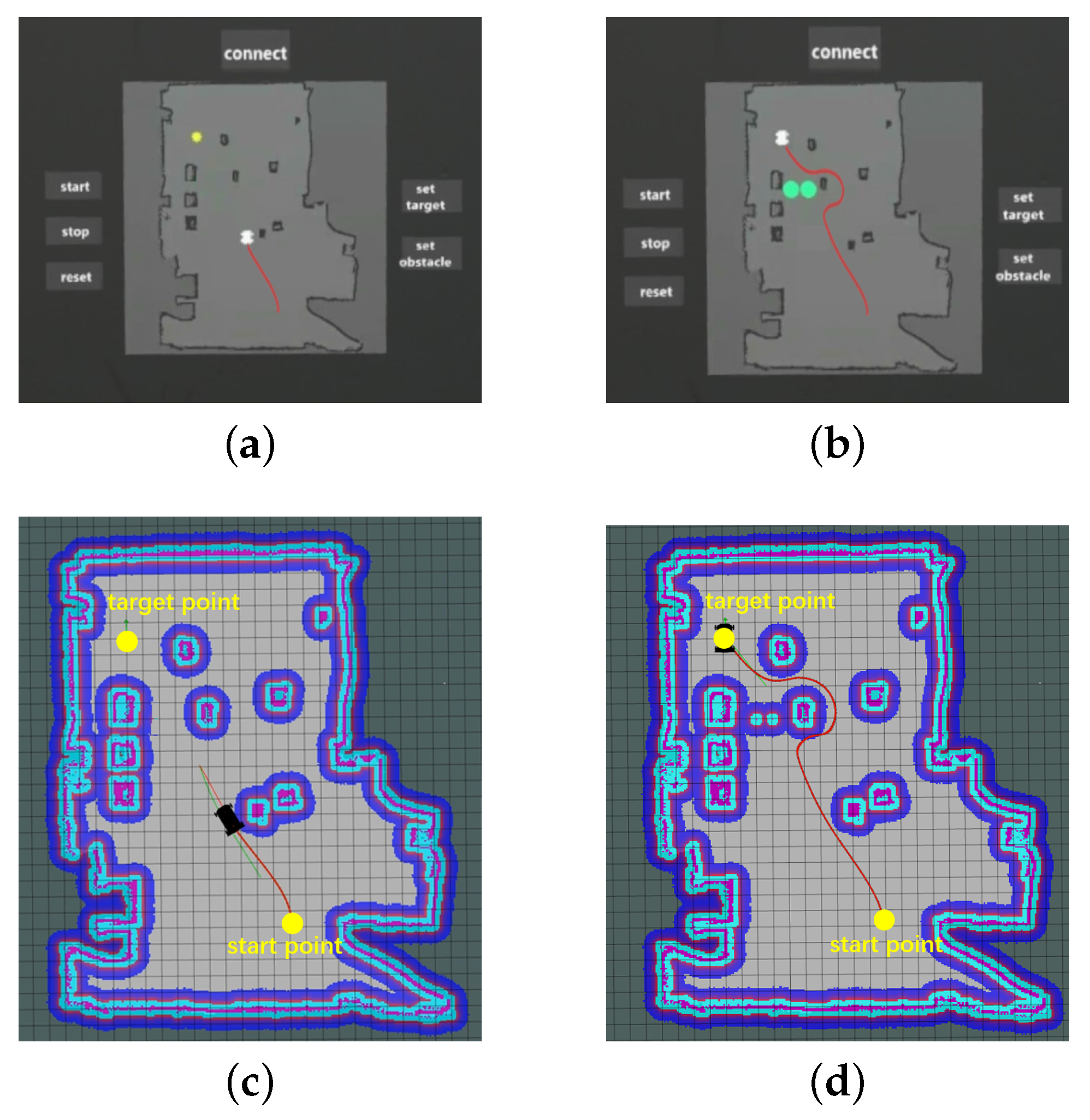

6.1. The Design of the Interactive System

- (1) Establish a connection to synchronize the pre-acquired map and the initial coordinates of the mobile robot;

- (2) Set the moving target point and start the robot;

- (3) While the robot is moving, choose whether to add virtual obstacles and, if so, where to place them;

- (4) Transmit the current position of the real robot to the virtual panel in real time until the robot reaches the target point.
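The four interaction steps can be read as a small session state that is updated as the robot moves. The sketch below is a hypothetical illustration only: the class `InteractiveSession`, its method names, and the 0.05 m arrival tolerance are assumptions, and the real system's HoloLens and robot communication layers are not modeled.

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Point = Tuple[float, float]


@dataclass
class InteractiveSession:
    """Hypothetical state shared between the virtual panel and the robot."""
    grid_map: List[List[int]]          # step (1): pre-acquired map
    robot_pose: Point                  # step (1): initial robot coordinates
    target: Optional[Point] = None
    virtual_obstacles: List[Point] = field(default_factory=list)
    panel_log: List[Point] = field(default_factory=list)

    def set_target(self, target: Point) -> None:
        """Step (2): set the target point before starting the robot."""
        self.target = target

    def add_virtual_obstacle(self, position: Point) -> None:
        """Step (3): the user places a virtual obstacle during motion."""
        self.virtual_obstacles.append(position)

    def report_pose(self, pose: Point, tolerance: float = 0.05) -> bool:
        """Step (4): push the real pose to the panel; True once at target."""
        self.robot_pose = pose
        self.panel_log.append(pose)
        if self.target is None:
            return False
        return math.dist(pose, self.target) <= tolerance


if __name__ == "__main__":
    session = InteractiveSession(grid_map=[[0, 0], [0, 0]], robot_pose=(0.0, 0.0))
    session.set_target((1.0, 0.0))
    session.add_virtual_obstacle((0.5, 0.5))
    for pose in [(0.5, 0.0), (1.0, 0.0)]:
        if session.report_pose(pose):
            print("target reached at", pose)
```

In this sketch the planner would read `virtual_obstacles` together with the map on each cycle, so obstacles added mid-run affect the next planning step.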

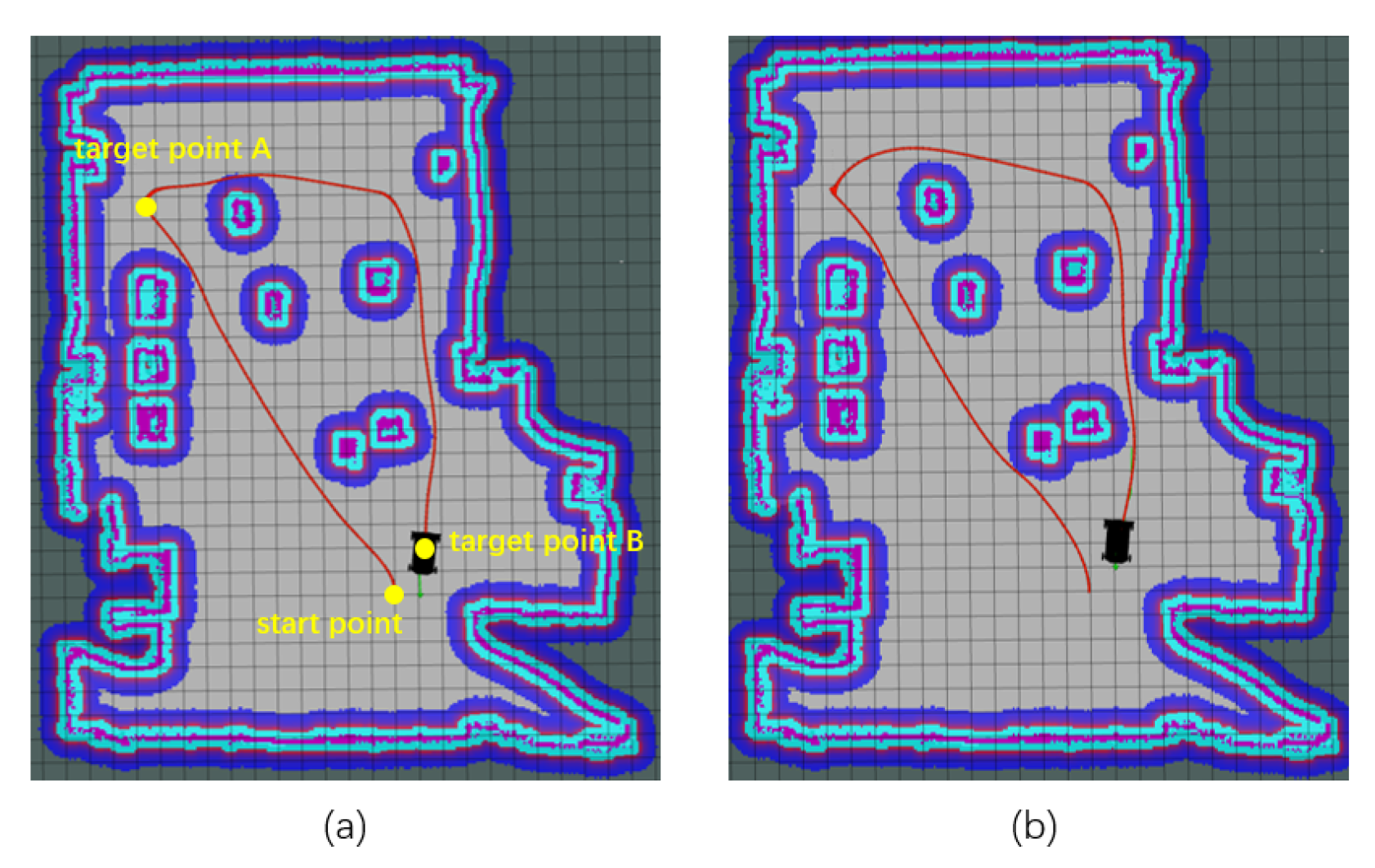

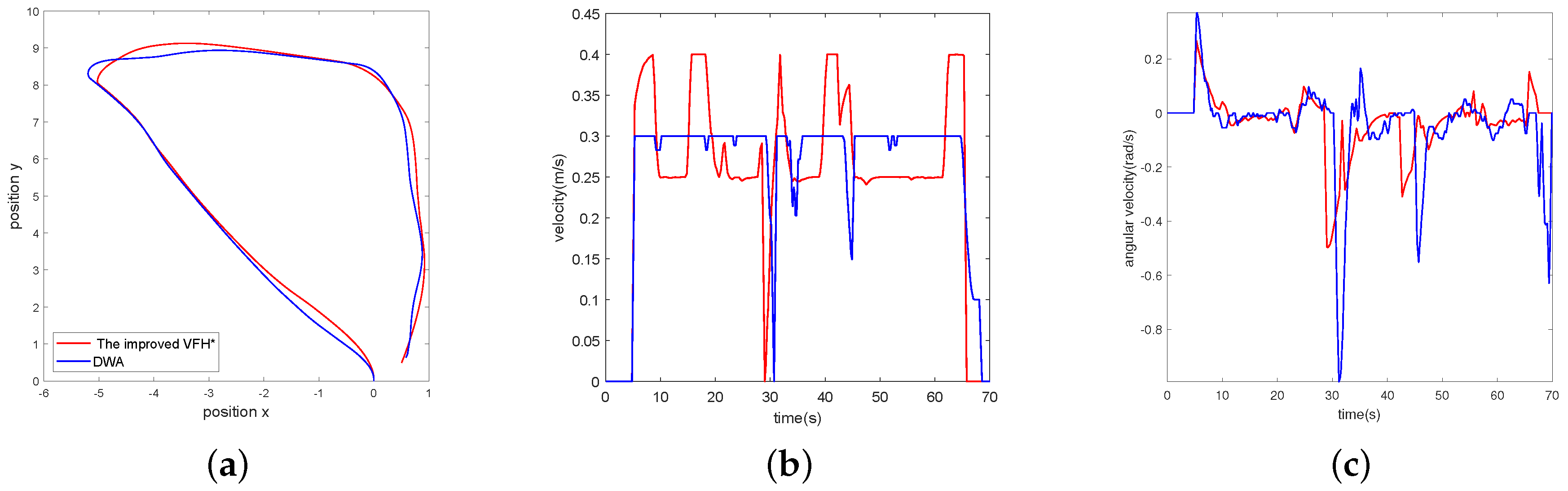

6.2. Path Planning without Virtual Obstacles

6.3. Path Planning with Virtual Obstacles

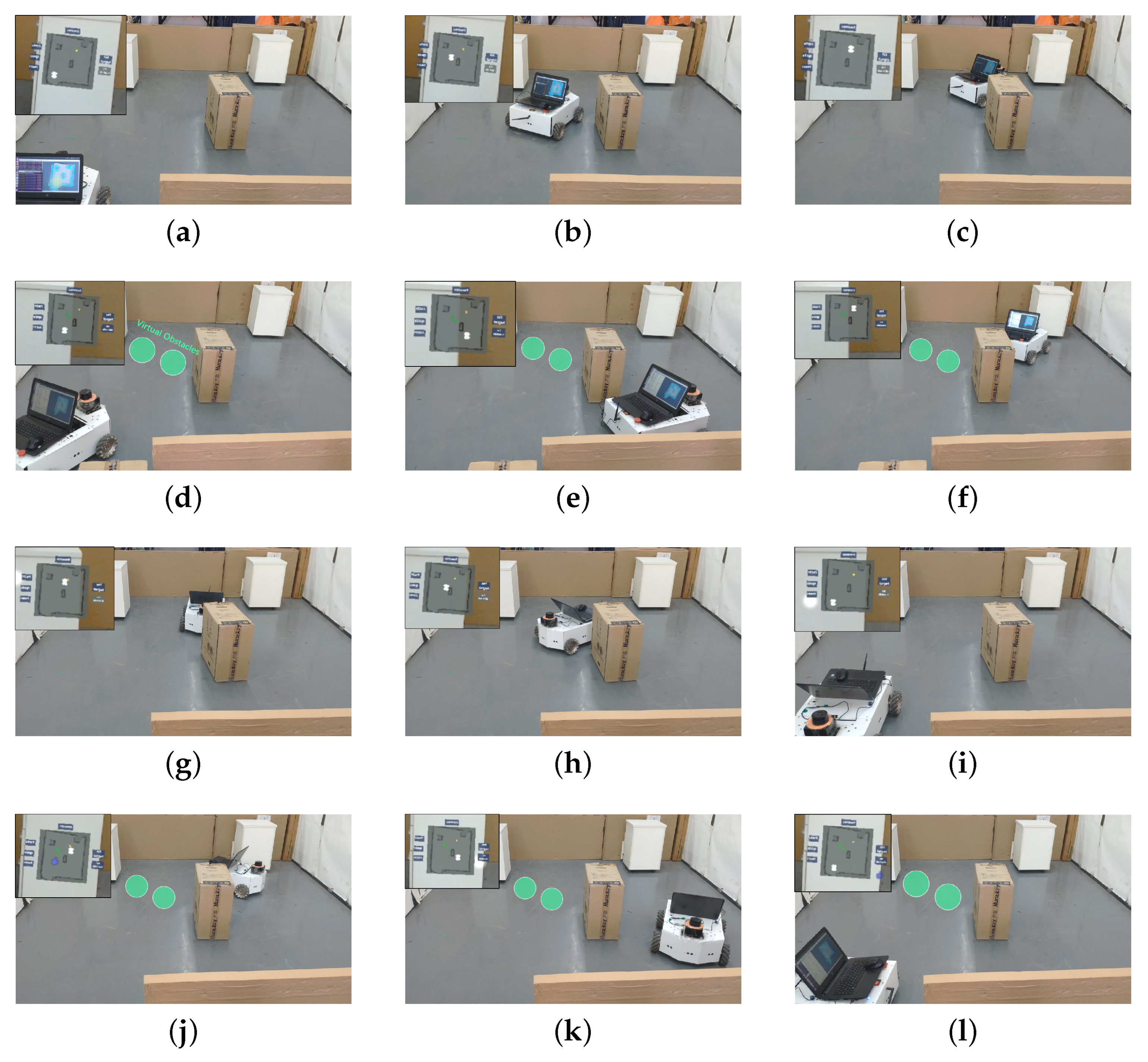

6.4. Real Experimental Validation

7. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Methods | DWA | The Improved VFH* |
|---|---|---|
| Path length (m) | 17.91 | 17.36 |
| Run time (s) | 64.6 | 62.2 |
| Passing obstacle | 5 | 5 |
| Sum of the distance from the obstacle (m) | 2.95 | 3.40 |
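To put the tabulated values in relative terms, the short Python sketch below computes the percentage change of the improved VFH* figures with respect to DWA. The numbers are copied from the table; the dictionary keys and the helper `relative_change` are illustrative names, and the sign only indicates the direction of change.

```python
# Values copied from the comparison table: (DWA, improved VFH*).
results = {
    "path_length_m": (17.91, 17.36),
    "run_time_s": (64.6, 62.2),
    "obstacle_distance_sum_m": (2.95, 3.40),
}


def relative_change(baseline, improved):
    """Percentage change of `improved` relative to `baseline`."""
    return (improved - baseline) / baseline * 100.0


changes = {name: relative_change(dwa, vfh) for name, (dwa, vfh) in results.items()}

if __name__ == "__main__":
    for name, pct in changes.items():
        print(f"{name}: {pct:+.2f} %")
```

Under these figures, the path length and run time are each roughly 3 to 4 % lower than with DWA, while the summed distance from the obstacles is about 15 % higher.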

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wu, M.; Dai, S.-L.; Yang, C. Mixed Reality Enhanced User Interactive Path Planning for Omnidirectional Mobile Robot. Appl. Sci. 2020, 10, 1135. https://doi.org/10.3390/app10031135