Recognition of Customers’ Impulsivity from Behavioral Patterns in Virtual Reality

Abstract

Featured Application


1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Research Design

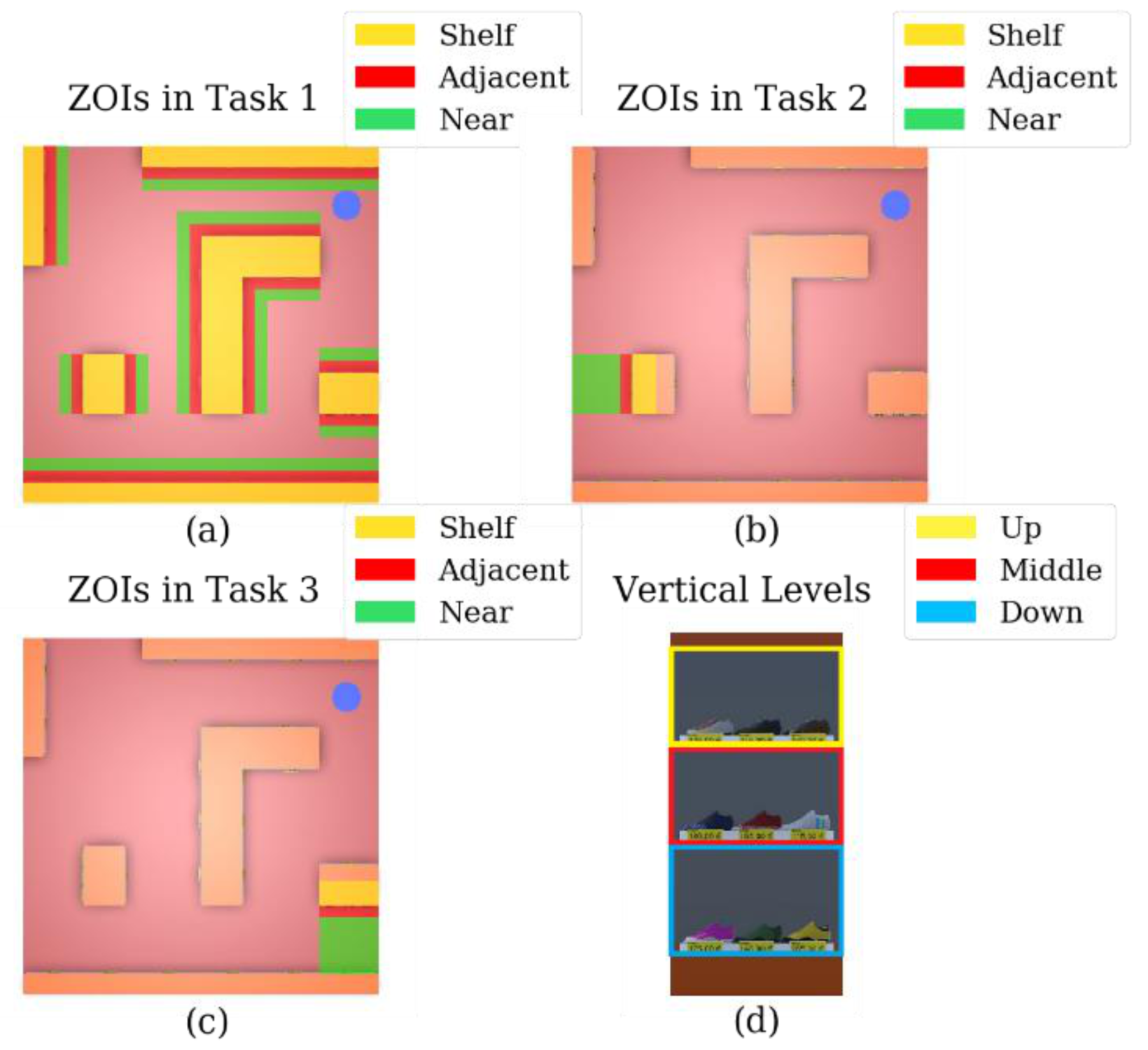

2.2.1. Description of the Virtual Environments

2.2.2. The Training Task

2.2.3. The Exploration Task

2.2.4. The First Planned Task: Buying Snacks

2.2.5. The Second Planned Task: Buying Sneakers

2.3. Apparatus and Signals

2.4. Data Preprocessing

Feature Extraction

2.5. Characterization of Impulsivity

2.6. Machine Learning

2.6.1. Normalization

2.6.2. Feature Selection

- The standard deviation of the feature vector is zero, or the standard deviation normalized by the mean is negligible (σ/µ < 10⁻¹⁰);

- The feature is zero for over 80% of the subjects;

- The feature vector is highly correlated with another feature vector (Pearson correlation coefficient > 0.95).
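The three exclusion criteria above can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `select_features`, the feature names, and the demo values are hypothetical, and two details that the criteria do not specify are assumed here, namely that the population standard deviation is used and that, of two highly correlated features, the one encountered first is kept.

```python
import math
from statistics import mean, pstdev


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def select_features(features, cv_eps=1e-10, zero_frac=0.8, corr_max=0.95):
    """Return the names of the features that pass all three criteria.

    `features` maps a feature name to its per-subject values.
    """
    kept = []
    for name, values in features.items():
        sd, mu = pstdev(values), mean(values)
        # Criterion 1: zero spread, or sigma/mu below the threshold.
        if sd == 0 or (mu != 0 and abs(sd / mu) < cv_eps):
            continue
        # Criterion 2: the feature is zero for over 80% of the subjects.
        if sum(1 for v in values if v == 0) / len(values) > zero_frac:
            continue
        # Criterion 3: Pearson r > 0.95 with a feature already kept
        # (assumption: the earlier feature of a correlated pair survives).
        if any(pearson(values, features[k]) > corr_max for k in kept):
            continue
        kept.append(name)
    return kept


# Hypothetical demo data: ten subjects, five made-up features.
demo = {
    "constant": [5.0] * 10,
    "mostly_zero": [0, 0, 0, 0, 0, 0, 0, 0, 0, 3],
    "speed": [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0],
    "speed_scaled": [2.0, 4.0, 6.0, 8.0, 10.0, 12.0, 14.0, 16.0, 18.0, 20.0],
    "fixations": [3.0, 1.0, 4.0, 1.0, 5.0, 9.0, 2.0, 6.0, 5.0, 3.0],
}

print(select_features(demo))  # ['speed', 'fixations']
```

In this sketch, `constant` fails the first criterion, `mostly_zero` the second, and `speed_scaled` the third because it is perfectly correlated with `speed`. The correlation check is signed, matching the criterion as written; a variant that also removes strongly anti-correlated features would compare the absolute value instead.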

2.6.3. Classification with Cross-Validation

3. Results

3.1. Impulsivity Self-Assessment

3.2. Recognition of Impulsivity

3.2.1. Results for Accuracy

3.2.2. Results of Feature Selection

4. Discussion

4.1. Results Discussion

4.2. Limitations

5. Conclusions and Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest



| Scale | Dimension | Balance | Centers (Min, Max) of Low Group | Centers (Min, Max) of High Group | p-Value | Cronbach’s Alpha |
|---|---|---|---|---|---|---|
| Main scale | Impulsivity | 24–33 | 2.31 (1.50–2.62) | 3.20 (2.75–4.38) | <10⁻⁵ | 0.72 |
| Subscales | Urgency ¹ | 23–34 | 1.65 (1.00–2.00) | 3.07 (2.50–5.00) | <10⁻⁵ | 0.73 |
| Subscales | Premeditation ¹ | 18–39 | 2.67 (2.00–3.00) | 4.04 (3.50–5.00) | <10⁻⁵ | 0.80 |
| Subscales | Perseverance ¹ | 19–38 | 2.05 (1.00–2.50) | 3.76 (3.00–5.00) | <10⁻⁵ | 0.64 |
| Subscales | Sensation-seeking ¹ | 27–30 | 3.00 (2.00–3.50) | 4.15 (4.00–5.00) | <10⁻⁵ | 0.58 |

| Data | Task | Impulsivity (Main Scale) Acc. ¹ | Impulsivity (Main Scale) Kap. ² | Urgency (Subscale) Acc. | Urgency (Subscale) Kap. | Premeditation (Subscale) Acc. | Premeditation (Subscale) Kap. | Perseverance (Subscale) Acc. | Perseverance (Subscale) Kap. | Sensation-Seeking (Subscale) Acc. | Sensation-Seeking (Subscale) Kap. |
|---|---|---|---|---|---|---|---|---|---|---|---|
| ET | Exploration | 0.65 (0.05) | 0.27 (0.11) | 0.61 (0.03) | 0.05 (0.07) | 0.70 (0.02) | 0.05 (0.08) | 0.68 (0.02) | 0.02 (0.07) | 0.71 (0.05) | 0.43 (0.11) |
| ET | Planned 1 | 0.63 (0.03) | 0.19 (0.08) | 0.65 (0.06) | 0.18 (0.14) | 0.70 (0.02) | 0.05 (0.07) | 0.74 (0.04) | 0.23 (0.13) | 0.69 (0.05) | 0.38 (0.11) |
| ET | Planned 2 | 0.69 (0.05) | 0.33 (0.11) | 0.74 (0.05) | 0.40 (0.12) | 0.71 (0.03) | 0.10 (0.12) | 0.74 (0.04) | 0.25 (0.14) | 0.69 (0.06) | 0.40 (0.11) |
| NAV | Exploration | 0.74 (0.03) | 0.45 (0.06) | 0.60 (0.02) | 0.02 (0.05) | 0.74 (0.04) | 0.20 (0.13) | 0.69 (0.02) | 0.09 (0.08) | 0.64 (0.07) | 0.29 (0.14) |
| NAV | Planned 1 | 0.66 (0.03) | 0.25 (0.06) | 0.64 (0.03) | 0.12 (0.09) | 0.69 (0.01) | 0.01 (0.02) | 0.70 (0.03) | 0.10 (0.10) | 0.64 (0.05) | 0.26 (0.11) |
| NAV | Planned 2 | 0.66 (0.03) | 0.25 (0.08) | 0.72 (0.04) | 0.36 (0.10) | 0.70 (0.02) | 0.05 (0.08) | 0.68 (0.01) | 0.01 (0.03) | 0.64 (0.06) | 0.31 (0.12) |
| POS & INT | Exploration | 0.83 (0.03) | 0.64 (0.06) | 0.65 (0.05) | 0.19 (0.13) | 0.79 (0.06) | 0.39 (0.17) | 0.72 (0.04) | 0.16 (0.15) | 0.76 (0.05) | 0.51 (0.10) |
| POS & INT | Planned 1 | 0.70 (0.04) | 0.36 (0.09) | 0.70 (0.06) | 0.32 (0.14) | 0.71 (0.03) | 0.10 (0.12) | 0.72 (0.06) | 0.26 (0.20) | 0.78 (0.05) | 0.56 (0.11) |
| POS & INT | Planned 2 | 0.69 (0.05) | 0.32 (0.12) | 0.75 (0.04) | 0.44 (0.10) | 0.76 (0.05) | 0.28 (0.15) | 0.75 (0.06) | 0.38 (0.15) | 0.74 (0.05) | 0.50 (0.09) |

| Task | Impulsivity (Main Scale) Acc. | Impulsivity (Main Scale) Kap. | Urgency (Subscale) Acc. | Urgency (Subscale) Kap. | Premeditation (Subscale) Acc. | Premeditation (Subscale) Kap. | Perseverance (Subscale) Acc. | Perseverance (Subscale) Kap. | Sensation-Seeking (Subscale) Acc. | Sensation-Seeking (Subscale) Kap. |
|---|---|---|---|---|---|---|---|---|---|---|
| Exploration task | 0.86 (0.03) | 0.71 (0.05) | 0.69 (0.06) | 0.28 (0.15) | 0.79 (0.05) | 0.40 (0.17) | 0.76 (0.06) | 0.32 (0.17) | 0.80 (0.04) | 0.59 (0.08) |
| Planned tasks | 0.79 (0.04) | 0.56 (0.09) | 0.78 (0.05) | 0.52 (0.11) | 0.80 (0.04) | 0.45 (0.14) | 0.85 (0.05) | 0.63 (0.15) | 0.85 (0.05) | 0.70 (0.10) |
| All the tasks | 0.87 (0.03) | 0.73 (0.06) | 0.82 (0.05) | 0.59 (0.11) | 0.84 (0.04) | 0.55 (0.13) | 0.87 (0.05) | 0.68 (0.11) | 0.86 (0.04) | 0.72 (0.07) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Moghaddasi, M.; Marín-Morales, J.; Khatri, J.; Guixeres, J.; Chicchi Giglioli, I.A.; Alcañiz, M. Recognition of Customers’ Impulsivity from Behavioral Patterns in Virtual Reality. Appl. Sci. 2021, 11, 4399. https://doi.org/10.3390/app11104399
