Automatic Segmentation of Choroid Layer Using Deep Learning on Spectral Domain Optical Coherence Tomography

Abstract

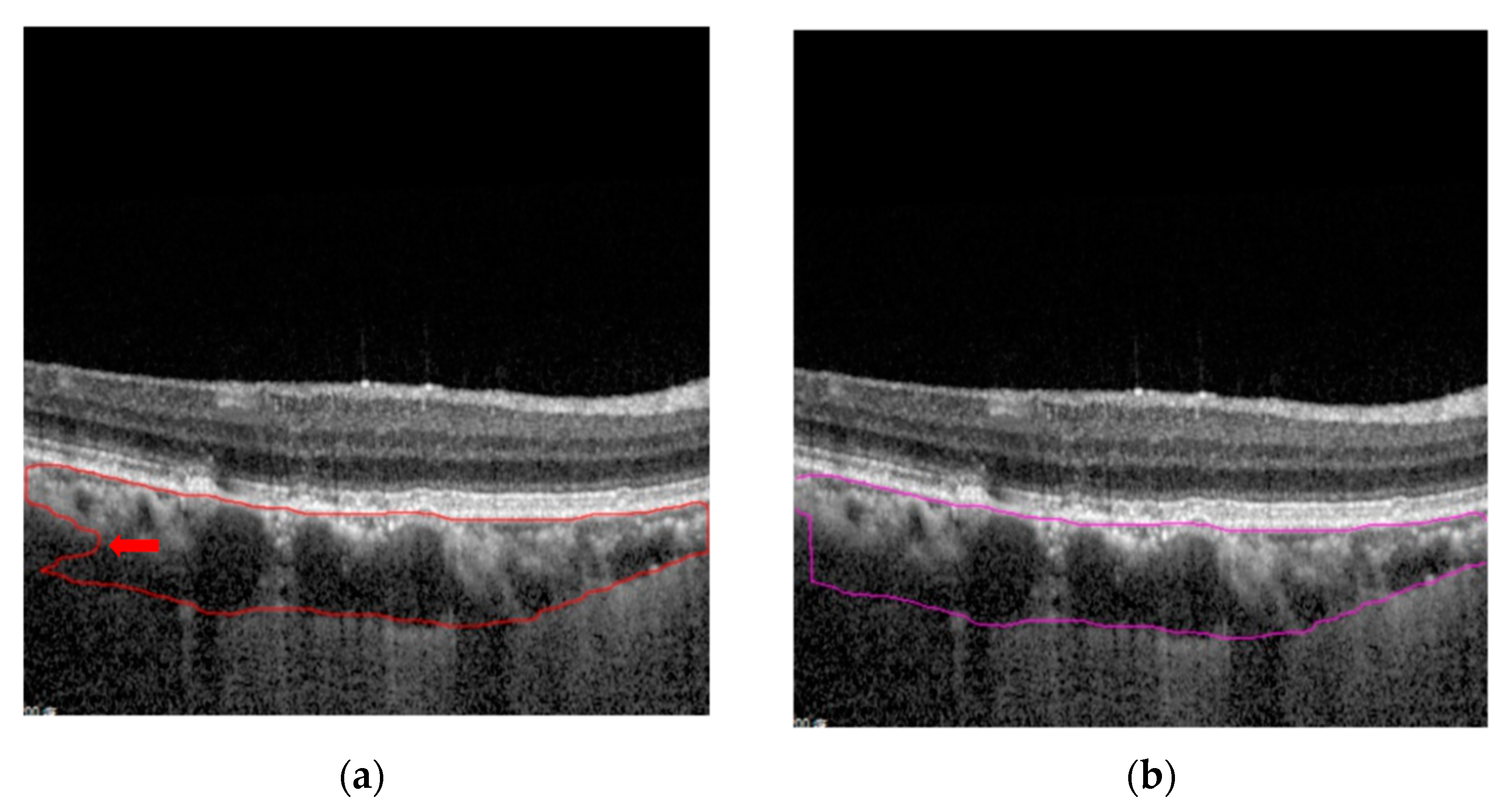

1. Introduction

2. Materials and Methods

2.1. Data Acquisition

2.2. Deep-Learning Model

2.3. ResNet and FPN

2.4. Transfer Learning with COCO Dataset

2.5. Model Ensembles

2.6. Post-Processing

3. Results

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Model Parameters | Value |
|---|---|
| Num workers | 4 |
| Batch size | 4 |
| Learning rate | 0.003 |
| Iterations | 85,000 |
| Thresholds | 0.5 |
| Freeze layers | 3 |
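
As a minimal sketch, the training parameters in the table above can be held in a small, immutable configuration object. The class name `TrainingParams`, its field names, and the helper `samples_seen` are hypothetical and chosen only for illustration; the table does not state which framework consumes these values, and the helper assumes that one iteration processes exactly one batch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingParams:
    """Values copied from the model-parameter table; field names are illustrative."""

    num_workers: int = 4          # "Num workers"
    batch_size: int = 4           # "Batch size"
    learning_rate: float = 0.003  # "Learning rate"
    iterations: int = 85_000      # "Iterations"
    threshold: float = 0.5        # "Thresholds"
    freeze_layers: int = 3        # "Freeze layers"

    def samples_seen(self) -> int:
        """Training samples processed, assuming one batch per iteration."""
        return self.batch_size * self.iterations


params = TrainingParams()
print(params.samples_seen())
```

Under that assumption, the model processes 4 × 85,000 = 340,000 training samples (with repetition) over the full run.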

| Metric | R101 Model + Post-Processing | R50 Model + Post-Processing | R101 ∪ R50 (OR Model) | R101 ∩ R50 (AND Model) |
|---|---|---|---|---|
| Average error (pixels) (Std. Dev.) | 5.06 (4.05) | 4.85 (3.72) | 4.86 (4.13) | 5.04 (3.74) |
| Average execution time (seconds) (Std. Dev.) | 7.25 (0.22) | 6.97 (0.29) | 5.30 (0.06) | 4.60 (0.11) |
| Average accuracy of average choroidal thickness (%) (Std. Dev.) | 89.5 (0.02) | 90.0 (0.01) | 88.6 (0.04) | 89.9 (0.005) |
| Average accuracy of average subfoveal choroidal thickness (%) (Std. Dev.) | 89.3 (0.01) | 89.9 (0.01) | 88.5 (0.01) | 89.8 (0.008) |
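
The OR and AND columns in the table above combine the R101 and R50 outputs as a union (∪) and an intersection (∩). The sketch below illustrates one plausible reading of that, in which the two models' binary segmentation masks are merged pixel by pixel. This pixel-wise interpretation, the function names `combine_masks` and `column_thickness`, and the toy masks are assumptions made for illustration, not the authors' implementation.

```python
from typing import List

Mask = List[List[int]]  # binary mask: 1 = choroid pixel, 0 = background


def combine_masks(mask_a: Mask, mask_b: Mask, mode: str) -> Mask:
    """Combine two same-sized binary masks: mode 'or' = union, 'and' = intersection."""
    same_rows = len(mask_a) == len(mask_b)
    if not same_rows or any(len(ra) != len(rb) for ra, rb in zip(mask_a, mask_b)):
        raise ValueError("masks must have the same shape")
    if mode == "or":
        return [[a | b for a, b in zip(ra, rb)] for ra, rb in zip(mask_a, mask_b)]
    if mode == "and":
        return [[a & b for a, b in zip(ra, rb)] for ra, rb in zip(mask_a, mask_b)]
    raise ValueError("mode must be 'or' or 'and'")


def column_thickness(mask: Mask) -> List[int]:
    """Count mask pixels in each image column (thickness in pixels per column)."""
    return [sum(column) for column in zip(*mask)]


# Toy 3x4 masks standing in for the two models' outputs (made-up values).
r101_mask = [
    [0, 1, 1, 0],
    [1, 1, 1, 0],
    [0, 1, 0, 0],
]
r50_mask = [
    [0, 1, 0, 0],
    [1, 1, 1, 1],
    [0, 0, 0, 0],
]

or_mask = combine_masks(r101_mask, r50_mask, "or")
and_mask = combine_masks(r101_mask, r50_mask, "and")

print(column_thickness(or_mask))   # [1, 3, 2, 1]
print(column_thickness(and_mask))  # [1, 2, 1, 0]
```

In this reading, the union can only grow the segmented region and the intersection can only shrink it, so the OR mask is never thinner than either input in any column and the AND mask is never thicker.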

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hsia, W.P.; Tse, S.L.; Chang, C.J.; Huang, Y.L. Automatic Segmentation of Choroid Layer Using Deep Learning on Spectral Domain Optical Coherence Tomography. Appl. Sci. 2021, 11, 5488. https://doi.org/10.3390/app11125488
