On a Vector towards a Novel Hearing Aid Feature: What Can We Learn from Modern Family, Voice Classification and Deep Learning Algorithms

Abstract

1. Introduction

1.1. Voice Familiarity: Improved Outcomes and Reduced Listening Effort

1.2. Related Works

1.3. Summary

2. Materials and Methods

2.1. Selection of Representative Voice Samples

2.2. Data Cleaning of Audio Samples: Envelope

2.3. Feature Extraction

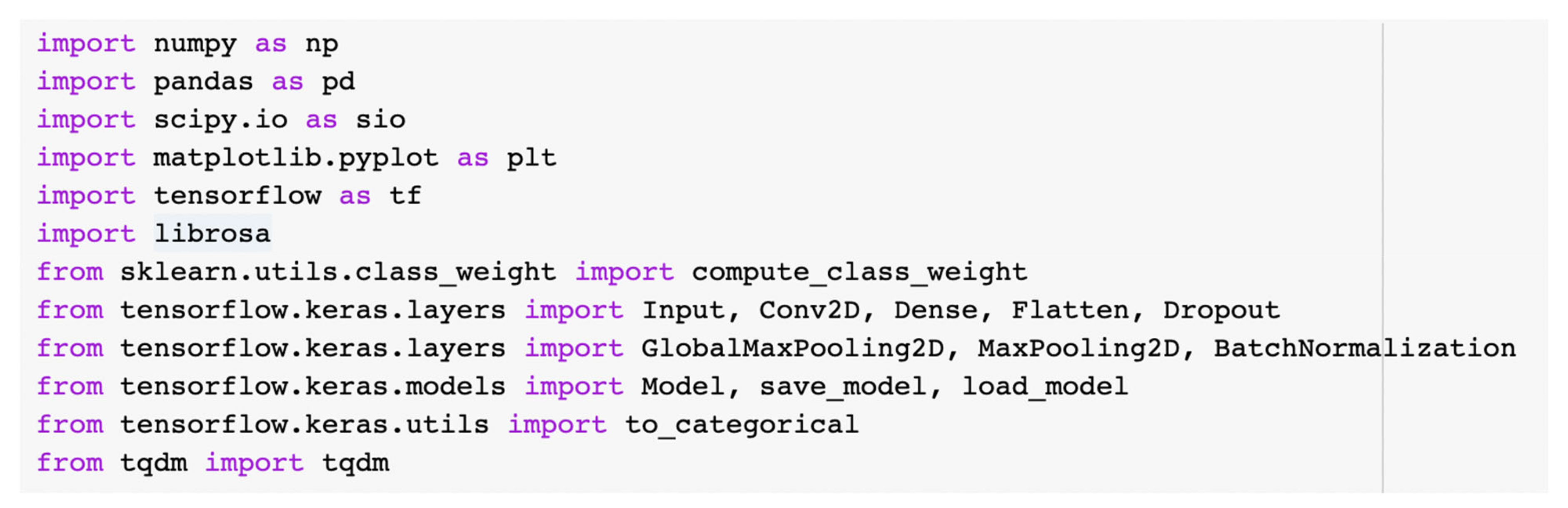

2.4. Convolutional Neural Network Model: Training

3. Results

3.1. Convolutional Neural Network Model: Testing

3.2. Model Overfitting Considerations

4. Discussion

4.1. Limitations and Future Directions

4.2. Bigger Picture: What Does Monday Look Like?

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A


| Name | Time (s) |
|---|---|
| Alex | 95.06 |
| Cameron | 103.28 |
| Claire | 100.93 |
| Gloria | 101.84 |
| Haley | 97.04 |
| Jay | 97.54 |
| Luke | 99.17 |
| Manny | 100.74 |
| Mitchell | 105.04 |
| Phil | 107.07 |
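As a quick check on class balance, the durations in the table above can be summed and compared. The sketch below is illustrative only: the variable names are hypothetical, and it assumes the listed times are the total audio available per speaker, which the table itself does not state.

```python
# Per-speaker durations in seconds, copied from the table above.
# Assumption: each value is the total audio available for that speaker.
durations_s = {
    "Alex": 95.06,
    "Cameron": 103.28,
    "Claire": 100.93,
    "Gloria": 101.84,
    "Haley": 97.04,
    "Jay": 97.54,
    "Luke": 99.17,
    "Manny": 100.74,
    "Mitchell": 105.04,
    "Phil": 107.07,
}

total_s = sum(durations_s.values())
mean_s = total_s / len(durations_s)

# Speakers with the least and the most audio.
shortest = min(durations_s, key=durations_s.get)
longest = max(durations_s, key=durations_s.get)

# Ratio of the largest to the smallest class; 1.0 would be perfectly balanced.
imbalance_ratio = durations_s[longest] / durations_s[shortest]

print(f"total: {total_s:.2f} s ({total_s / 60:.1f} min)")
print(f"mean per speaker: {mean_s:.2f} s")
print(f"shortest: {shortest}, longest: {longest}, ratio: {imbalance_ratio:.3f}")
```

A ratio close to 1 suggests that no single speaker dominates the data, so per-class accuracy figures can be compared on a roughly equal footing.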

| Parameter | Basic CNN Model | Advanced CNN Model |
|---|---|---|
| Batch size | 32 | 32 |
| Iteration | 1262 | 1262 |
| Duration of each iteration | 8–9 ms | 16–17 ms |
| Epochs | 50 | 50 |
| Duration of each epoch | 10–12 s | 20–21 s |
| Learning rate | 0.01 | 0.01 |
| Optimizer function | Adam | Adam |
| Loss function | Sparse categorical cross entropy | Sparse categorical cross entropy |

Hidden layer details

| Basic CNN Model: hidden layer | Basic CNN Model: hidden units | Basic CNN Model: activation function | Advanced CNN Model: hidden layer | Advanced CNN Model: hidden units | Advanced CNN Model: activation function |
|---|---|---|---|---|---|
| | | | Convolutional layer | 32 | Rectified Linear Unit |
| | | | Convolutional layer | 32 | Rectified Linear Unit |
| Convolutional layer | 32 | Rectified Linear Unit | Convolutional layer | 64 | Rectified Linear Unit |
| | | | Convolutional layer | 64 | Rectified Linear Unit |
| | | | Convolutional layer | 128 | Rectified Linear Unit |
| Dense layer | 1024 | Rectified Linear Unit | Convolutional layer | 128 | Rectified Linear Unit |
| | | | Dense layer | 1024 | Rectified Linear Unit |
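The timing rows of the model table can be cross-checked with simple arithmetic. This is a minimal sketch, assuming the "Iteration" row gives the number of iterations per epoch (the table does not say so explicitly); all names are hypothetical.

```python
# Values copied from the model table.
# Assumption: "Iteration = 1262" means 1262 iterations (batches) per epoch.
ITERATIONS_PER_EPOCH = 1262
BATCH_SIZE = 32
EPOCHS = 50

# Reported (min, max) duration of one iteration, in milliseconds.
iteration_ms = {"basic": (8, 9), "advanced": (16, 17)}
# Reported (min, max) duration of one epoch, in seconds.
reported_epoch_s = {"basic": (10, 12), "advanced": (20, 21)}


def epoch_seconds(ms_range, iterations=ITERATIONS_PER_EPOCH):
    """Convert a per-iteration (min, max) range in ms to a per-epoch range in s."""
    low_ms, high_ms = ms_range
    return (low_ms * iterations / 1000, high_ms * iterations / 1000)


for model, ms_range in iteration_ms.items():
    low_s, high_s = epoch_seconds(ms_range)
    rep_low, rep_high = reported_epoch_s[model]
    print(
        f"{model}: computed {low_s:.1f}-{high_s:.1f} s per epoch, "
        f"reported {rep_low}-{rep_high} s, "
        f"about {low_s * EPOCHS / 60:.1f}-{high_s * EPOCHS / 60:.1f} min for {EPOCHS} epochs"
    )

# Upper bound on training samples seen per epoch (the last batch may be partial).
max_samples_per_epoch = ITERATIONS_PER_EPOCH * BATCH_SIZE
print(f"at most {max_samples_per_epoch} samples per epoch")
```

Under that assumption, the computed per-epoch range for the basic model (about 10.1 to 11.4 s) falls inside the reported 10–12 s, and the advanced model's range (about 20.2 to 21.5 s) is close to the reported 20–21 s, so the timing rows appear mutually consistent.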

| Class | Accuracy (%) |
|---|---|
| Alex | 99.60 |
| Cameron | 100 |
| Claire | 99.79 |
| Gloria | 99.60 |
| Haley | 99.80 |
| Jay | 100 |
| Luke | 99.81 |
| Manny | 100 |
| Mitchell | 100 |
| Phil | 100 |
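For a single summary figure, the per-class accuracies above can be averaged. The following is a minimal sketch with hypothetical names. It computes an unweighted (macro) mean, which equals overall accuracy only if every class has the same number of test samples, something the table does not report.

```python
# Per-class accuracy in percent, copied from the table above.
accuracy_pct = {
    "Alex": 99.60,
    "Cameron": 100.0,
    "Claire": 99.79,
    "Gloria": 99.60,
    "Haley": 99.80,
    "Jay": 100.0,
    "Luke": 99.81,
    "Manny": 100.0,
    "Mitchell": 100.0,
    "Phil": 100.0,
}

# Unweighted mean across classes (macro average).
macro_avg = sum(accuracy_pct.values()) / len(accuracy_pct)

# Classes classified without error, and the lowest per-class accuracy.
perfect = sorted(name for name, acc in accuracy_pct.items() if acc == 100.0)
lowest = min(accuracy_pct.values())

print(f"macro-average accuracy: {macro_avg:.2f}%")
print(f"classes at 100%: {', '.join(perfect)}")
print(f"lowest per-class accuracy: {lowest:.2f}%")
```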

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hodgetts, W.; Song, Q.; Xiang, X.; Cummine, J. On a Vector towards a Novel Hearing Aid Feature: What Can We Learn from Modern Family, Voice Classification and Deep Learning Algorithms. Appl. Sci. 2021, 11, 5659. https://doi.org/10.3390/app11125659
