A Cache Policy Based on Request Association Analysis for Reliable NAND-Based Storage Systems

Abstract

1. Introduction

2. Related Work

2.1. Cache Management Methods

- LRU: The least-recently-used (LRU) method [1] is a common replacement strategy based on temporal locality: pages that have been accessed recently have a high chance of being accessed again soon. An LRU method generally uses a linked list. A newly inserted page is placed at the head of the list, and any page that is hit in the cache is moved to the head of the list. When the cache space is full, the page at the tail of the list, i.e., the least recently used page, is selected as the victim and removed. However, a long run of sequential accesses can easily evict the hot data stored in the cache and degrade performance.

- Two-Level LRU: As shown in Figure 1, a two-level LRU method [2] uses two LRU linked lists. A newly inserted page that does not exist in the cache is placed at the head of the first list. When any page is hit in the cache, the page is moved to the head of the second list. If the second list is full, the page at the tail of the second list is moved to the head of the first list. If the first list is full, the page at the tail of the first list is evicted. Compared with the LRU method, a long run of sequential accesses only affects the first list and leaves the hot data in the second list untouched, so the two-level LRU method can effectively improve the cache hit ratio. However, the lengths chosen for the two LRU lists affect its performance. Adjusting the two lengths dynamically and efficiently for different workloads is the problem addressed by later methods such as ARC.

- ARC: As shown in Figure 2, an adaptive replacement cache (ARC) [3] also uses two LRU linked lists: L1 and L2. L1 stores the pages that have appeared only once, and L2 stores the pages that have appeared two or more times. L1 is divided into T1 and B1, and L2 is divided into T2 and B2. T1 and T2 store the contents of the pages in the cache and the related metadata in the cache directory, whereas B1 and B2 (i.e., ghost buffers) only store the metadata of pages evicted from T1 and T2, respectively. Assuming that the cache can buffer c pages, the length limits of ARC are 0 ≤ |L1| ≤ c, 0 ≤ |L1| + |L2| ≤ 2c, 0 ≤ |T1| + |T2| ≤ c, and 0 ≤ |B1| + |B2| ≤ c. If a newly inserted page does not exist in L1 or L2, it is placed at the head of T1; otherwise, it is placed at the head of T2. The space of T1 is decided by a target size p. When a new page is inserted into a full cache, one victim page has to be removed from T1 or T2 according to the following condition: if |T1| ≥ p, the LRU page of T1 is evicted and its metadata is inserted into B1; otherwise, if |T1| < p, the LRU page of T2 is evicted and its metadata is inserted into B2. If a hit occurs in B1, the target size p of T1 is increased by the maximum of {|B2|/|B1|, 1}. If a hit occurs in B2, the target size p of T1 is decreased by the maximum of {|B1|/|B2|, 1}. In this way, ARC can dynamically and efficiently adjust the lengths of L1 and L2 for different workloads to improve performance.

- VBBMS: As shown in Figure 3, VBBMS [4] is a typical flash-aware buffer method that groups consecutive pages into the same virtual block. When the cache space is insufficient, VBBMS evicts an entire virtual block so that the data written to flash memory are more sequential. Evicting an entire virtual block also makes it more likely that a flash block contains many invalid pages, which reduces the overhead of garbage collection. In addition, because random requests and sequential requests have completely different characteristics, VBBMS uses an LRU list to manage random requests and a FIFO queue to manage sequential requests. However, the parameter settings (such as the size of a virtual block and the distribution of space between the LRU list and the FIFO queue) can have a large impact on the results.
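The two-level LRU behaviour described in the list above can be illustrated with a minimal Python sketch. The class name `TwoLevelLRU`, the `access` method, and the two capacity parameters are hypothetical names chosen for this illustration; they do not come from [2], and the sketch tracks page identifiers only, not page contents.

```python
from collections import OrderedDict


class TwoLevelLRU:
    """Illustrative two-level LRU: pages seen once live in `first`,
    pages that have been hit live in `second`."""

    def __init__(self, first_capacity, second_capacity):
        self.first_capacity = first_capacity
        self.second_capacity = second_capacity
        # In each OrderedDict the last item is the head (most recent)
        # and the first item is the tail (least recent).
        self.first = OrderedDict()
        self.second = OrderedDict()

    def access(self, page):
        """Access a page; return True on a cache hit, False on a miss."""
        if page in self.second:
            # Hit in the second list: move the page to its head.
            self.second.move_to_end(page)
            return True
        if page in self.first:
            # Hit in the first list: promote the page to the second list.
            del self.first[page]
            self.second[page] = True
            if len(self.second) > self.second_capacity:
                # Tail of the second list goes to the head of the first list.
                demoted, _ = self.second.popitem(last=False)
                self._insert_first(demoted)
            return True
        # Miss: a new page enters at the head of the first list.
        self._insert_first(page)
        return False

    def _insert_first(self, page):
        self.first[page] = True
        if len(self.first) > self.first_capacity:
            # Evict the tail of the first list from the cache.
            self.first.popitem(last=False)
```

With `TwoLevelLRU(2, 2)`, accessing two pages twice each and then scanning ten new pages leaves the two hot pages in the second list, whereas a single LRU list of four pages would have lost them to the scan.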
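The ARC rules summarized in the list above (placement in T1 or T2, the eviction condition on |T1| and p, and the adjustment of p on ghost hits) can be sketched as follows. This is a simplified illustration, not the implementation from [3]: the class and method names are hypothetical, the ghost-list trimming is one simple way to respect the stated length limits, and the fallback when the preferred list is empty is an assumption added so that the sketch always finds a victim. It assumes c ≥ 1.

```python
from collections import OrderedDict


class ARC:
    """Simplified ARC sketch that tracks page identifiers only."""

    def __init__(self, c):
        self.c = c      # number of pages the cache can buffer
        self.p = 0.0    # target size of T1
        # Last item = head (most recent), first item = tail (LRU).
        self.t1, self.t2 = OrderedDict(), OrderedDict()  # cached pages
        self.b1, self.b2 = OrderedDict(), OrderedDict()  # ghost metadata

    def _evict(self):
        """Evict one cached page and record its metadata in a ghost list."""
        # Prefer T1 when |T1| >= p; fall back to T1 if T2 is empty (assumption).
        if self.t1 and (len(self.t1) >= self.p or not self.t2):
            page, _ = self.t1.popitem(last=False)
            self.b1[page] = True
        else:
            page, _ = self.t2.popitem(last=False)
            self.b2[page] = True

    def _trim_ghosts(self):
        """Keep |L1| <= c and |L1| + |L2| <= 2c by dropping old ghost entries."""
        while self.b1 and len(self.t1) + len(self.b1) > self.c:
            self.b1.popitem(last=False)
        while self.b2 and (len(self.t1) + len(self.t2)
                           + len(self.b1) + len(self.b2)) > 2 * self.c:
            self.b2.popitem(last=False)

    def access(self, page):
        """Access a page; return True on a cache hit, False on a miss."""
        if page in self.t1:
            # Second appearance: move from T1 to the head of T2.
            del self.t1[page]
            self.t2[page] = True
            return True
        if page in self.t2:
            self.t2.move_to_end(page)
            return True
        if page in self.b1:
            # Ghost hit in B1: grow the target size of T1.
            self.p = min(self.c, self.p + max(len(self.b2) / len(self.b1), 1))
            del self.b1[page]
            target = self.t2
        elif page in self.b2:
            # Ghost hit in B2: shrink the target size of T1.
            self.p = max(0, self.p - max(len(self.b1) / len(self.b2), 1))
            del self.b2[page]
            target = self.t2
        else:
            target = self.t1  # page seen for the first time
        if len(self.t1) + len(self.t2) >= self.c:
            self._evict()
        target[page] = True
        self._trim_ghosts()
        return False
```

For example, with `ARC(2)` and the access sequence 1, 1, 2, 3, 2, the last access hits the ghost buffer B1, which raises p from 0 to 1 and places page 2 at the head of T2.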
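The whole-virtual-block eviction that VBBMS relies on can be illustrated with a small sketch. It is not the VBBMS algorithm from [4]: the class name, the `write` method, the fixed `pages_per_vblock` mapping (page number divided by block size), and the LRU ordering of virtual blocks are all assumptions made for this example, and the separate FIFO queue for sequential requests is omitted.

```python
from collections import OrderedDict


class VirtualBlockBuffer:
    """Write buffer that groups pages by virtual block and evicts
    a whole virtual block at a time (illustrative only)."""

    def __init__(self, capacity_pages, pages_per_vblock):
        self.capacity_pages = capacity_pages
        self.pages_per_vblock = pages_per_vblock
        # Virtual block id -> set of buffered page numbers.
        # First item = least recently used virtual block.
        self.blocks = OrderedDict()
        self.size = 0  # number of buffered pages

    def write(self, page):
        """Buffer a page write; return the pages flushed to flash, if any."""
        vblock = page // self.pages_per_vblock
        pages = self.blocks.setdefault(vblock, set())
        if page not in pages:
            pages.add(page)
            self.size += 1
        self.blocks.move_to_end(vblock)  # mark this virtual block as recent
        flushed = []
        while self.size > self.capacity_pages:
            # Evict the least recently used virtual block in one piece,
            # so its pages reach flash memory in sequential order.
            _, victim = self.blocks.popitem(last=False)
            self.size -= len(victim)
            flushed.extend(sorted(victim))
        return flushed
```

With a capacity of four pages and four pages per virtual block, writing pages 0, 1, 9, 2, and 10 causes the fifth write to flush pages 0, 1, and 2 together, because they share the least recently used virtual block.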

2.2. Association Analysis

3. Motivation

4. A Cache Policy Based on Request Association Analysis for Reliable NAND-Based Storage Systems

4.1. Overview

4.2. Request Association Analyzer

4.2.1. Request Sampling

4.2.2. Request Association Analysis

4.2.3. Request Association Pairs Management

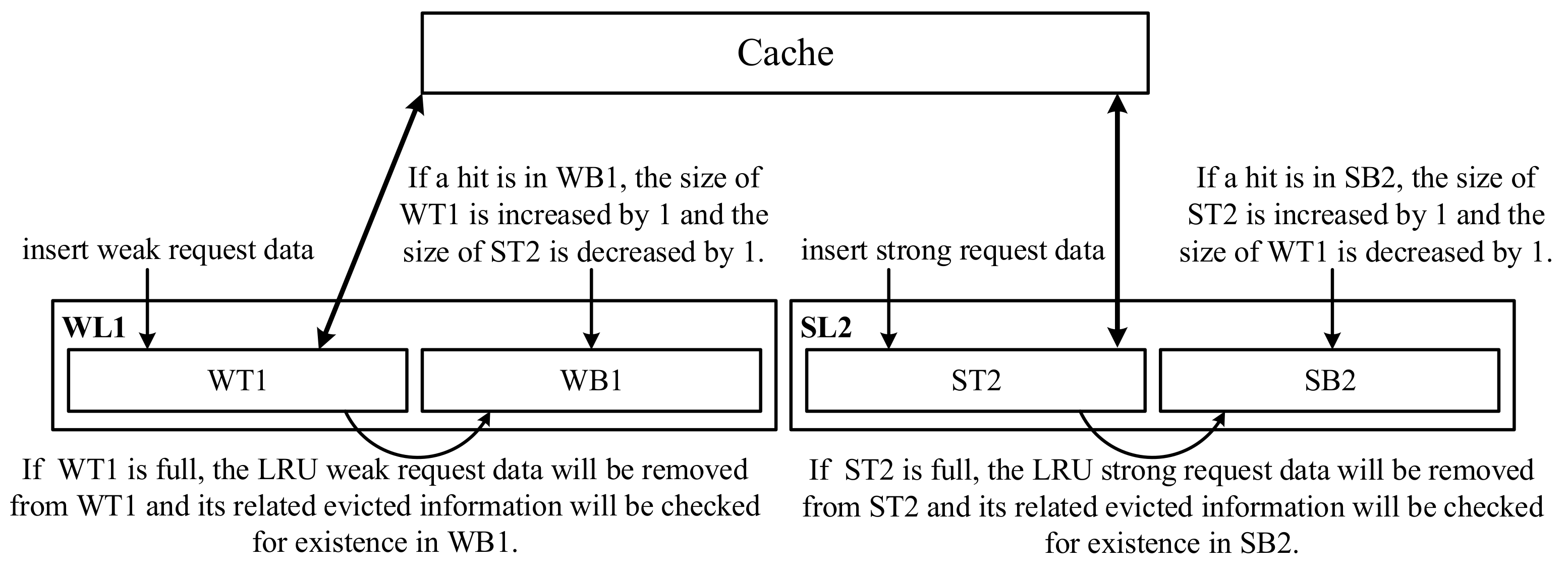

4.3. Request Association Cache

4.3.1. Strong/Weak Request Data Management

4.3.2. Request Strength Threshold to Separate Strong and Weak Request Data

4.4. Execution Delay of Request Association Analyzer

5. Performance Evaluation

5.1. Experimental Setup and Performance Metrics

5.2. Experimental Results: Improvement of the Average Hit Ratio

5.3. Discussion and Overhead Analysis

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Johnson, T.; Shasha, D. 2Q: A Low Overhead High Performance Buffer Management Replacement Algorithm. In Proceedings of the 20th International Conference on Very Large Data Bases (VLDB), San Francisco, CA, USA, 12–15 September 1994; pp. 439–450.

- Chang, L.P.; Kuo, T.W. An Adaptive Striping Architecture for Flash Memory Storage Systems of Embedded Systems. In Proceedings of the 8th IEEE Real-Time and Embedded Technology and Applications Symposium, San Jose, CA, USA, 25–27 September 2002; pp. 187–196.

- Megiddo, N.; Modha, D.S. ARC: A Self-Tuning, Low Overhead Replacement Cache. In Proceedings of the 2nd USENIX Conference on File and Storage Technologies, San Francisco, CA, USA, 31 March–2 April 2003; pp. 115–130.

- Du, C.; Yao, Y.; Zhou, J.; Xu, X. VBBMS: A Novel Buffer Management Strategy for NAND Flash Storage Devices. IEEE Trans. Consum. Electron. 2019, 65, 134–141.

- Chen, R.; Shao, Z.; Li, T. Bridging the I/O performance gap for big data workloads: A new NVDIMM-based approach. In Proceedings of the 49th Annual IEEE/ACM International Symposium on Microarchitecture (MICRO), Taipei, Taiwan, 15–19 October 2016; pp. 1–12.

- Wu, C.; Chang, Y.; Yang, M.; Kuo, T. Joint Management of CPU and NVDIMM for Breaking Down the Great Memory Wall. IEEE Trans. Comput. 2020, 69, 722–733.

- Chen, R.; Shao, Z.; Liu, D.; Feng, Z.; Li, T. Towards Efficient NVDIMM-based Heterogeneous Storage Hierarchy Management for Big Data Workloads. In Proceedings of the 52nd Annual IEEE/ACM International Symposium on Microarchitecture, Columbus, OH, USA, 12–16 October 2019; pp. 849–860.

- Mutlu, O.; Moscibroda, T. Parallelism-Aware Batch Scheduling: Enhancing Both Performance and Fairness of Shared DRAM Systems. SIGARCH Comput. Archit. News 2008, 36, 63–74.

- Micheloni, R.; Aritome, S.; Crippa, L. Array Architectures for 3-D NAND Flash Memories. Proc. IEEE 2017, 105, 1634–1649.

- Fukami, A.; Ghose, S.; Luo, Y.; Cai, Y.; Mutlu, O. Improving the Reliability of Chip-Off Forensic Analysis of NAND Flash Memory Devices. Digit. Investig. 2017, 20, S1–S11.

- Cai, Y.; Ghose, S.; Haratsch, E.F.; Luo, Y.; Mutlu, O. Error Characterization, Mitigation, and Recovery in Flash-Memory-based Solid-State Drives. Proc. IEEE 2017, 105, 1666–1704.

- Luo, Y.; Ghose, S.; Cai, Y.; Haratsch, E.F.; Mutlu, O. HeatWatch: Improving 3D NAND Flash Memory Device Reliability by Exploiting Self-Recovery and Temperature Awareness. In Proceedings of the 2018 IEEE International Symposium on High Performance Computer Architecture (HPCA), Vienna, Austria, 24–28 February 2018; pp. 504–517.

- Luo, Y. Architectural Techniques for Improving NAND Flash Memory Reliability. Ph.D. Thesis, Carnegie Mellon University, Pittsburgh, PA, USA, 2012.

- Park, S.Y.; Jung, D.; Kang, J.U.; Kim, J.; Lee, J. CFLRU: A replacement algorithm for flash memory. In Proceedings of the 2006 International Conference on Compilers, Architecture and Synthesis for Embedded Systems, Seoul, Korea, 22–25 October 2006.

- Kim, H.; Ahn, S. BPLRU: A Buffer Management Scheme for Improving Random Writes in Flash Storage. In Proceedings of the 6th USENIX Conference on File and Storage Technologies (FAST 08); USENIX Association: San Jose, CA, USA; 26–29 February 2008.

- Jin, P.; Ou, Y.; Härder, T.; Li, Z. AD-LRU: An efficient buffer replacement algorithm for flash-based databases. Data Knowl. Eng. 2012, 72, 83–102.

- Yuan, Y.; Shen, Y.; Li, W.; Yu, D.; Yan, L.; Wang, Y. PR-LRU: A Novel Buffer Replacement Algorithm Based on the Probability of Reference for Flash Memory. IEEE Access 2017, 5, 12626–12634.

- Kang, D.H.; Han, S.J.; Kim, Y.C.; Eom, Y.I. CLOCK-DNV: A write buffer algorithm for flash storage devices of consumer electronics. IEEE Trans. Consum. Electron. 2017, 63, 85–91.

- Agrawal, R.; Srikant, R. Fast Algorithms for Mining Association Rules. In Proceedings of the 1994 International Conference on Very Large Data Bases (VLDB), Santiago de Chile, Chile, 12–15 September 1994; Volume 1215, pp. 487–499.

- Han, J.; Pei, J.; Yin, Y. Mining Frequent Patterns without Candidate Generation. In Proceedings of the 2000 ACM SIGMOD International Conference on Management of Data, Dallas, TX, USA, 15–18 May 2000; pp. 1–12.

- Li, J.; Xu, X.; Huang, B.; Liao, J. Frequent Pattern-based Mapping at Flash Translation Layer of Solid-State Drives. IEEE Access 2019, 7, 95233–95239.

- Gupta, A.; Kim, Y.; Urgaonkar, B. DFTL: A Flash Translation Layer Employing Demand-based Selective Caching of Page-level Address Mappings. In Proceedings of the 14th International Conference on Architectural Support for Programming Languages and Operating Systems (ASPLOS), Washington, DC, USA, 7–11 March 2009; pp. 229–240.

- Narayanan, D.; Donnelly, A.; Rowstron, A. Write Off-Loading: Practical Power Management for Enterprise Storage. ACM Trans. Storage (TOS) 2008, 4, 1–23.

- Lee, C.; Kumano, T.; Matsuki, T.; Endo, H.; Fukumoto, N.; Sugawara, M. Understanding Storage Traffic Characteristics on Enterprise Virtual Desktop Infrastructure. In Proceedings of the 10th ACM International Systems and Storage Conference, Haifa, Israel, 22–24 May 2017; pp. 1–11.

- Yamashita, R.; Magia, S.; Higuchi, T.; Yoneya, K.; Yamamura, T.; Mizukoshi, H.; Zaitsu, S.; Yamashita, M.; Toyama, S.; Kamae, N.; et al. 11.1 A 512Gb 3b/cell flash memory on 64-word-line-layer BiCS technology. In Proceedings of the 2017 IEEE International Solid-State Circuits Conference (ISSCC), San Francisco, CA, USA, 5–9 February 2017; pp. 196–197.

- Nie, S.; Zhang, Y.; Wu, W.; Yang, J. Layer RBER variation aware read performance optimization for 3D flash memories. In Proceedings of the 57th ACM/IEEE Design Automation Conference (DAC), San Francisco, CA, USA, 20–24 July 2020; pp. 1–6.

| Component | Specification |
|---|---|
| CPU | Dual-Core NVIDIA Denver 2 64-Bit CPU and Quad-Core ARM Cortex-A57 MPCore |
| RAM | 8 GB 128-bit LPDDR4 Memory |
| Storage | 32 GB eMMC 5.1 |
| OS | Ubuntu 16.04 |

| Workload | Number of Requests | Write Ratio (%) | Average Read Request Size (KB) | Average Write Request Size (KB) |
|---|---|---|---|---|
| MSR-usr_0 | 2,237,889 | 59.58 | 40.92 | 10.28 |
| Systor’17-LUN_0 | 1,768,140 | 22.24 | 27.54 | 8.84 |
| Systor’17-LUN_1 | 1,390,948 | 27.66 | 20.33 | 16.47 |
| Systor’17-LUN_2 | 3,615,901 | 12.38 | 19 | 16.7 |
| Systor’17-LUN_3 | 2,161,973 | 20.49 | 19.5 | 14.38 |
| Systor’17-LUN_4 | 2,496,148 | 17.66 | 28.73 | 22.98 |
| Systor’17-LUN_6 | 2,146,801 | 17.09 | 21.76 | 16.55 |
| Financial | 5,334,987 | 76.84 | 2.25 | 3.73 |

| Workload | RAA Compared with LRU/ARC/VBBMS | RAA-Delay Compared with LRU/ARC/VBBMS |
|---|---|---|
| MSR-usr_0 | 42.25%/28.77%/35.27% | 28.76%/17.09%/22.75% |
| Systor’17-LUN_0 | 60.45%/27.68%/40.94% | 55.80%/24.29%/37.04% |
| Systor’17-LUN_1 | 39.99%/17.99%/27.85% | 34.60%/13.62%/23.01% |
| Systor’17-LUN_2 | 29.46%/21.65%/24.20% | 27.98%/20.29%/22.81% |
| Systor’17-LUN_3 | 43.07%/18.52%/29.26% | 33.75%/10.89%/20.88% |
| Systor’17-LUN_4 | 28.68%/14.73%/21.39% | 24.38%/11.05%/17.41% |
| Systor’17-LUN_6 | 33.18%/16.15%/24.82% | 30.16%/13.59%/22.01% |
| Financial | 3.58%/2%/4.6% | 3.48%/1.91%/4.5% |

(Normalized to 4MB LRU)

| Workload | RAA Average Computation Interval (s) | RAA-Delay Average Computation Interval (s) | Average Computation Time of Association Analysis (s) |
|---|---|---|---|
| MSR-usr_0 | 21.15 | 721.85 | 0.31 |
| Systor’17-LUN_0 | 0.97 | 2.604 | 0.31 |
| Systor’17-LUN_1 | 0.87 | 3.146 | 0.32 |
| Systor’17-LUN_2 | 0.96 | 1.50 | 0.32 |
| Systor’17-LUN_3 | 0.82 | 1.87 | 0.32 |
| Systor’17-LUN_4 | 0.78 | 1.34 | 0.32 |
| Systor’17-LUN_6 | 1.03 | 1.69 | 0.32 |
| Financial | 0.80 | 1.03 | 0.31 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Su, C.-H.; Wu, C.-H. A Cache Policy Based on Request Association Analysis for Reliable NAND-Based Storage Systems. Appl. Sci. 2021, 11, 6623. https://doi.org/10.3390/app11146623
