Head-Related Transfer Functions for Dynamic Listeners in Virtual Reality

Abstract

1. Introduction

2. HRTF Sets and Analysis

2.1. HRTF Sets

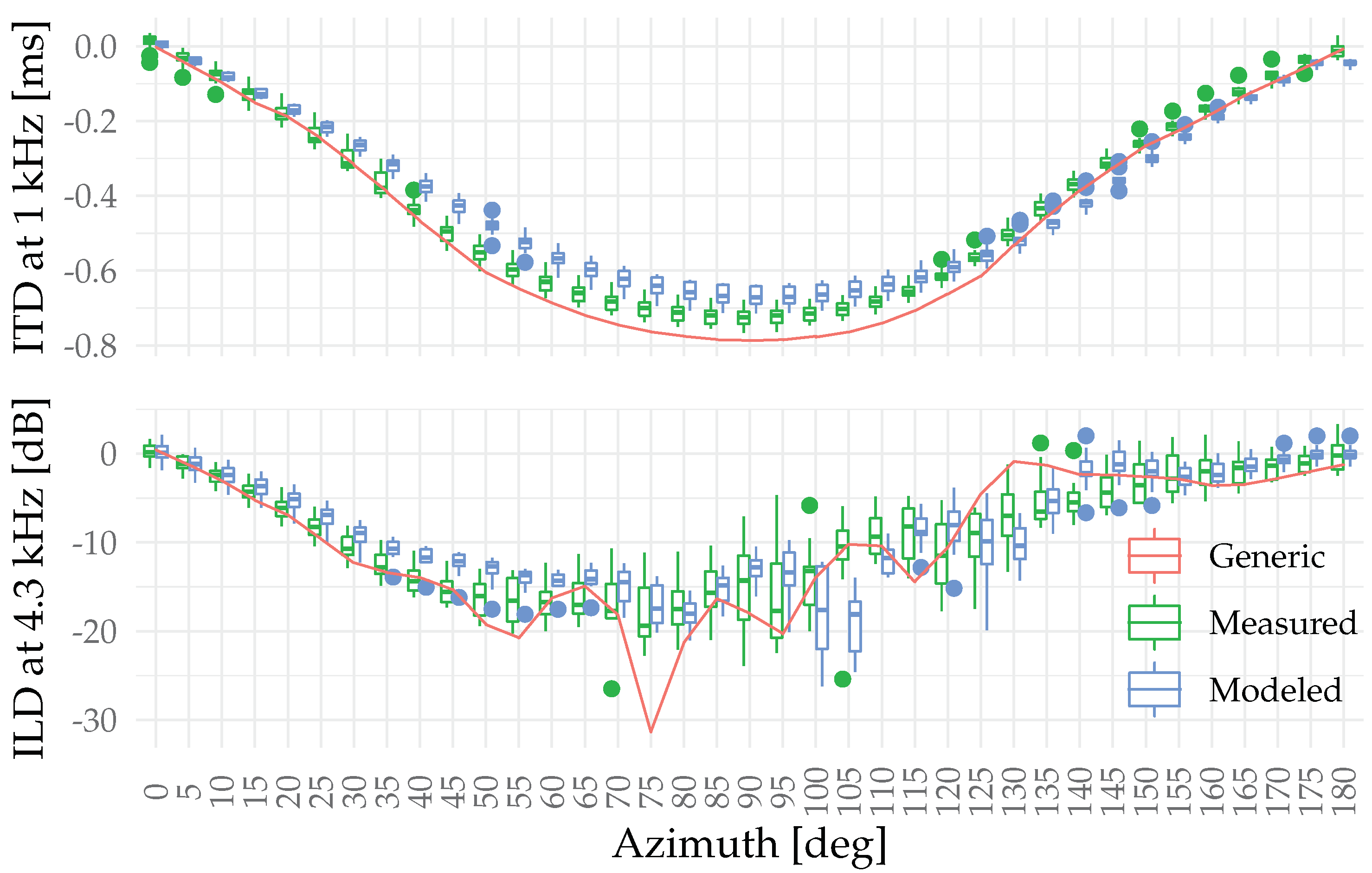

2.2. Objective Analysis

3. Subjective Experiments Overview

4. Experiment I: Static Localization

4.1. Stimuli

4.2. Procedure

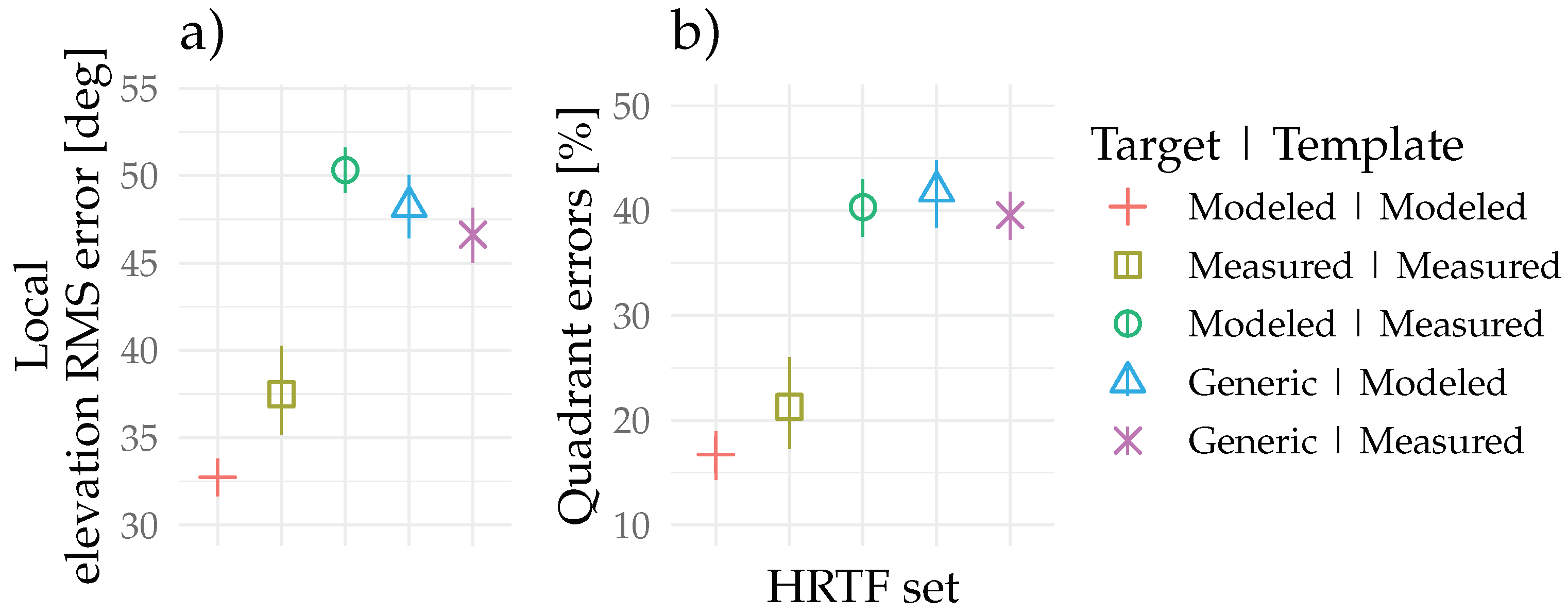

4.3. Results

5. Experiment II: Dynamic 6-DoF Virtual Reality

5.1. Virtual Reality Environment

5.2. Stimuli and Conditions

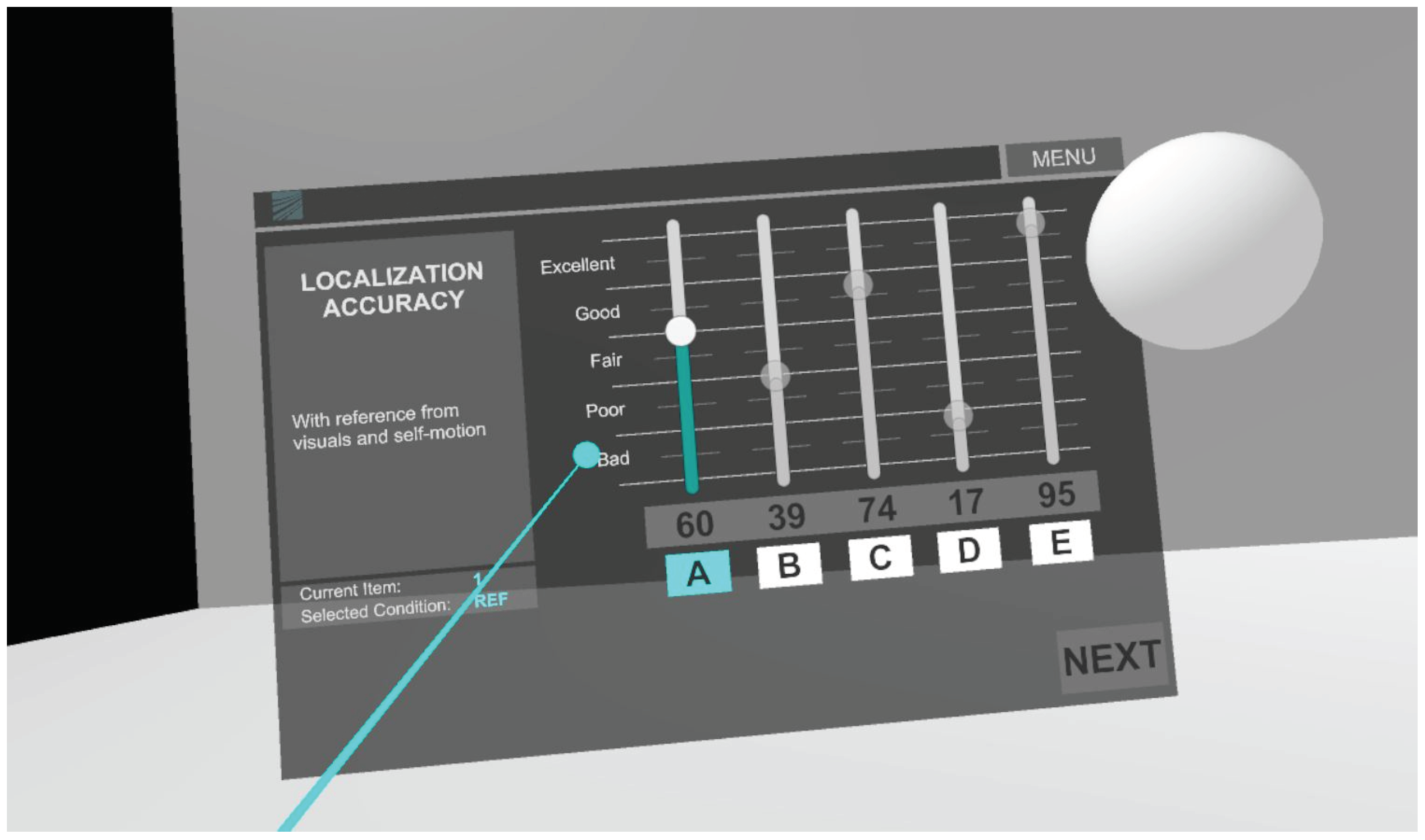

5.3. Procedure

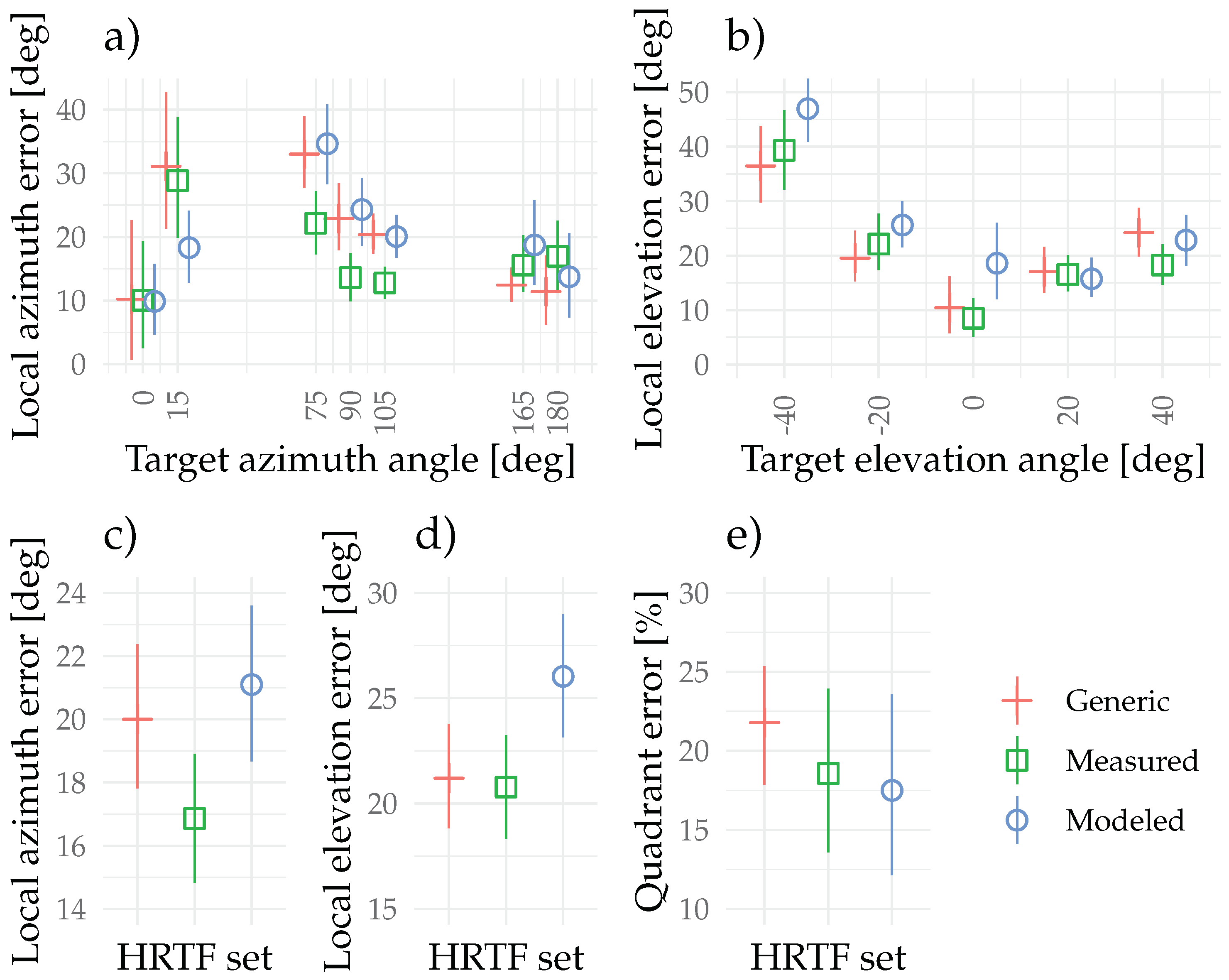

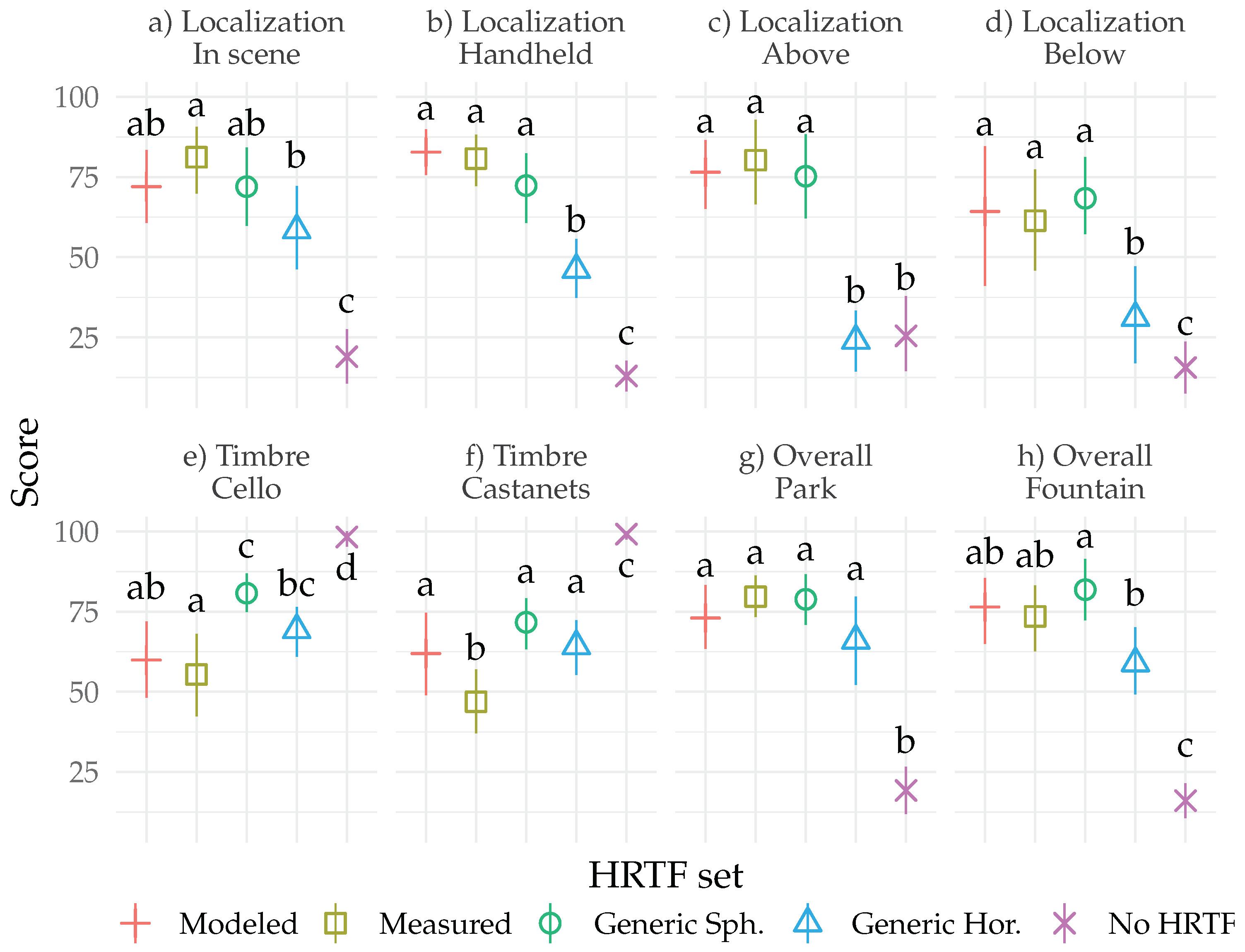

5.4. Results

6. Discussion

6.1. Objective Deviations

6.2. Static Localization

6.3. Dynamic Results

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Template | Target | Unsigned Elevation Error | Quadrant Errors |
|---|---|---|---|
| Modeled | Modeled | (CI to ) | (CI to ) |
| Measured | Measured | (CI to ) | (CI to ) |
| Measured | Modeled | (CI to ) | (CI to ) |
| Modeled | Generic | (CI to ) | (CI to ) |
| Measured | Generic | (CI to ) | (CI to ) |

| Scene | Task | Content |
|---|---|---|
| In Scene | Localization accuracy | One audio object: Pink noise |
| Handheld | Localization accuracy | One audio object: Pink noise |
| Above | Localization accuracy | One audio object: Pink noise |
| Below | Localization accuracy | One audio object: Pink noise |
| Cello | Timbral fidelity | One audio object: Anechoic cello |
| Castanets | Timbral fidelity | One audio object: Anechoic castanets |
| Park | Overall audio quality | Two audio objects: Ducks and tree clipping |
| Fountain | Overall audio quality | Two audio objects: Piano and fountain |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Rummukainen, O.S.; Robotham, T.; Habets, E.A.P. Head-Related Transfer Functions for Dynamic Listeners in Virtual Reality. Appl. Sci. 2021, 11, 6646. https://doi.org/10.3390/app11146646