Dynamic Binaural Rendering: The Advantage of Virtual Artificial Heads over Conventional Ones for Localization with Speech Signals

Abstract

:1. Introduction

2. Virtual Artificial Head: Review of Methods and Configurations Chosen for the Present Study

2.1. Calculation of Spectral Weights Using Constrained Optimization

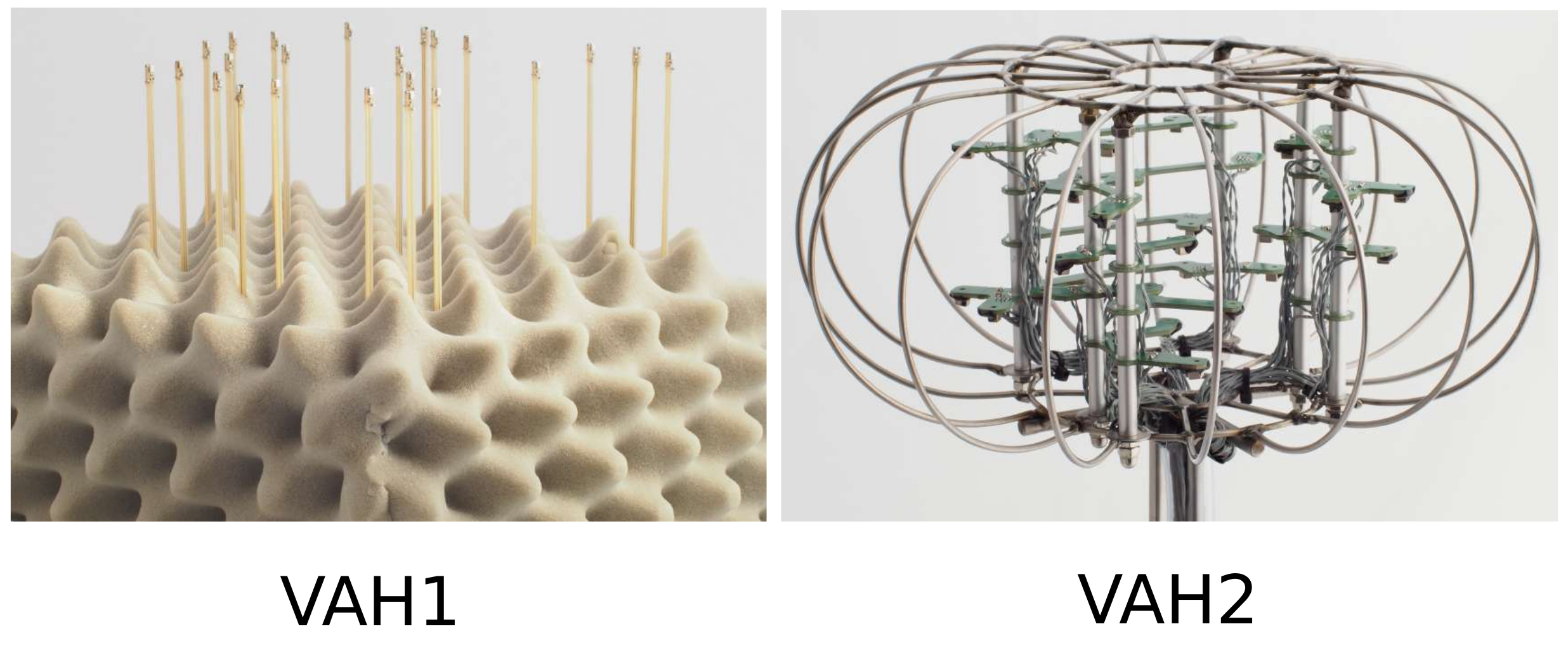

2.2. VAH Implementations and Constraint Parameters Used in This Study

3. Part I: Localization of Real and Virtual Sources in the Absence of Visual Cues

3.1. Experiment Design

3.1.1. Target Sources’ Positions in the Room

3.1.2. Localization of Real Sound Sources (TestReal)

3.1.3. Localization of Virtual Sources (TestVR)

3.1.4. Response Method

3.1.5. Subjects and Test Signal

3.2. Results

3.3. Discussion

4. Part II: The Impact of Head Tracking on the Localization Performance

4.1. Experiment Design

4.1.1. Response Method: GUI

4.1.2. Experimental Setup, Subjects and Test Signal

4.2. Results

4.3. Discussion

5. General Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Wenzel, E.M.; Arruda, M.; Kistler, D.J.; Wightman, F.L. Localization using nonindividualized head-related transfer functions. J. Acoust. Soc. Am. 1993, 94, 111–123. [Google Scholar] [CrossRef]

- Møller, H.; Sørensen, M.F.; Jensen, C.B.; Hammershøi, D. Binaural Technique: Do we need individual recordings? J. Audio Eng. Soc. 1996, 44, 451–469. [Google Scholar]

- Oberem, J.; Richter, J.G.; Setzer, D.; Seibold, J.; Koch, I.; Fels, J. Experiments on localization accuracy with non-individual and individual HRTFs comparing static and dynamic reproduction methods. bioRxiv 2020. [Google Scholar] [CrossRef] [Green Version]

- Begault, D.R.; Wenzel, E.M.; Anderson, M.R. Direct Comparison of the Impact of Head Tracking, Reverberation, and Individualized Head-Related Transfer Functions on the Spatial Perception of a Virtual Speech Source. J. Audio Eng. Soc. 2001, 49, 904–916. [Google Scholar] [PubMed]

- Brimijoin, W.O.; Boyd, A.W.; Akeroyd, M.A. The Contribution of Head Movement to the Externalization and Internalization of Sounds. PLoS ONE 2013, 8, e83068. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Romigh, G.D.; Brungart, D.S.; Simpson, B.D. Free-Field Localization Performance With a Head-Tracked Virtual Auditory Display. IEEE J. Sel. Top. Signal Process. 2015, 9, 943–954. [Google Scholar] [CrossRef]

- Sandvad, J. Dynamic aspects of auditory virtual environments. In Proceedings of the 100th AES Convention, Copenhagen, Denmark, 11–14 May 1996. [Google Scholar]

- Bronkhorst, A.W. Localization of real and virtual sound sources. J. Acoust. Soc. Am. 1995, 98, 2542–2553. [Google Scholar] [CrossRef]

- Rasumow, E.; Hansen, M.; van de Par, S.; Püschel, D.; Mellert, V.; Doclo, S.; Blau, M. Regularization Approaches for Synthesizing HRTF Directivity Patterns. IEEE/ACM Trans. Audio, Speech Lang. Process. 2016, 24, 215–225. [Google Scholar] [CrossRef]

- Rasumow, E.; Blau, M.; Doclo, S.; van de Par, S.; Hansen, M.; Püschel, D.; Mellert, V. Perceptual Evaluation of Individualized Binaural Reproduction Using a Virtual Artificial Head. J. Audio Eng. Soc. 2017, 65, 448–459. [Google Scholar] [CrossRef]

- Fallahi, M.; Hansen, M.; Doclo, S.; van de Par, S.; Mellert, V.; Püschel, D.; Blau, M. High spatial resolution binaural sound reproduction using a virtual artificial head. In Proceedings of the Fortschritte der Akustik-DAGA, Kiel, Germany, 6–9 March 2017; pp. 1061–1064. [Google Scholar]

- Blau, M.; Budnik, A.; Fallahi, M.; Steffens, H.; Ewert, S.D.; van de Par, S. Toward realistic binaural auralizations-perceptual comparison between measurement and simulation-based auralizations and the real room for a classroom scenario. Acta Acust. 2021, 5. [Google Scholar] [CrossRef]

- Fallahi, M.; Hansen, M.; Doclo, S.; van de Par, S.; Püschel, D.; Blau, M. Evaluation of head-tracked binaural auralizations of speech signals generated with a virtual artificial head in anechoic and classroom environments. Acta Acust. 2021, 5. [Google Scholar] [CrossRef]

- Golomb, S.W.; Taylor, H. Two-Dimensional Synchronization Patterns for Minimum Ambiguity. IEEE Trans. Inf. Theory 1982, 28, 600–604. [Google Scholar] [CrossRef]

- Rasumow, E.; Blau, M.; Hansen, M.; Doclo, S.; van de Par, S.; Mellert, V.; Püschel, D. Robustness of virtual artificial head topologies with respect to microphone positioning. In Proceedings of the Forum Acusticum, Aalborg, Denmark, 27 June–1 July 2011; pp. 2251–2256. [Google Scholar]

- Blauert, J. Spatial Hearing: The Psychophysics of Human Sound Localization, revised ed.; MIT Press: Cambridge, MA, USA, 1997; Chapter 2; pp. 36–200. [Google Scholar]

- Kirkeby, O.; Nelson, P.A. Digital Filter Design for Inversion Problems in Sound Reproduction. J. Audio Eng. Soc. 1999, 47, 583–595. [Google Scholar]

- Jaeger, H.; Bitzer, J.; Simmer, U.; Blau, M. Echtzeitfähiges binaurales Rendering mit Bewegungssensoren von 3-D Brillen. In Proceedings of the Fortschritte der Akustik-DAGA, Kiel, Germany, 6–9 March 2017. [Google Scholar]

- Hartmann, W.M.; Wittenberg, A. On the externalization of sound images. J. Acoust. Soc. Am. 1996, 99, 3678–3688. [Google Scholar] [CrossRef] [PubMed]

- Hendrickx, E.; Stitt, P.; Messonnier, C.; Lyzwa, J.M.; Katz, B.F.; de Boishéraud, C. Influence of head tracking on the externalization of speech stimuli for non-individualized binaural synthesis. J. Acoust. Soc. Am. 2017, 141, 2011–2023. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Siegel, S.; Castellan, N.J. Non Parametric Statistics for Behavioural Sciences, 2nd ed.; McGraw-Hill, Inc.: New York, NY, USA, 1988; Chapter 7; pp. 168–189. [Google Scholar]

- Macpherson, E.A. Cue weighting and vestibular mediation of temporal dynamics in sound localization via head rotation. Proc. Meet. Acoust. 2013, 19, 050131. [Google Scholar]

- Wallach, H. The role of head movements and vestibular and visual cues in sound localization. J. Exp. Psychol. 1940, 27, 339–368. [Google Scholar] [CrossRef] [Green Version]

- Ackermann, D.; Fiedler, F.; Brinkmann, F.; Schneider, M.; Weinzierl, S. On the Acoustic Qualities of Dynamic Pseudobinaural Recordings. J. Audio Eng. Soc. 2020, 68, 418–427. [Google Scholar] [CrossRef]

- Wightman, F.L.; Kistler, D.J. Resolution of front-back ambiguity in spatial hearing by listener and source movement. J. Acoust. Soc. Am. 1999, 105, 2841–2853. [Google Scholar] [CrossRef] [PubMed]

- Perrett, S.; Noble, W. The effect of head rotations on vertical plane sound localization. J. Acoust. Soc. Am. 1997, 102, 2325–2332. [Google Scholar] [CrossRef]

- Thurlow, W.R.; Runge, P.S. Effect of induced head movements on localization of direction of sound. J. Acoust. Soc. Am. 1967, 42, 480–488. [Google Scholar] [CrossRef] [PubMed]

- Begault, D.R.; Wenzel, E.M. Headphone localization of speech. Hum. Factors 1993, 35, 361–376. [Google Scholar] [CrossRef]

- Folds, D.J. The elevation illusion in virtual audio. In Proceedings of the Human Factors and Ergonomics Society 50th Annual Meeting, San Francisco, CA, USA, 16–20 October 2006; pp. 1576–1579. [Google Scholar]

- Middlebrooks, J.C. Virtual localization improved by scaling nonindividualized external-ear transfer function in frequency. J. Acoust. Soc. Am. 1999, 106, 1493–1510. [Google Scholar] [CrossRef] [PubMed]

- Asano, F.; Suzuki, Y.; Sone, T. Role of spectral cues in median plane localization. J. Acoust. Soc. Am. 1990, 88, 159–168. [Google Scholar] [CrossRef] [PubMed]

- Shaw, E.A.G.; Teranishi, R. Sound pressure generated in an external-ear replica and real human ears by a nearby point source. J. Acoust. Soc. Am. 1968, 44, 240–249. [Google Scholar] [CrossRef] [PubMed]

| Label | Constraint Parameter P and the Used VAH |

|---|---|

| V11 | VAH1 - P = 72 (Elevation: 0) |

| V13 | VAH1 - P = 3 × 72 = 216 (Elevations: −15, 0, 15) |

| V21 | VAH2 - P = 72 (Elevation: 0) |

| V23 | VAH2 - P = 3 × 72 = 216 (Elevations: −15, 0, 15) |

| Condition | Real Source | V11 | V13 | V21 | V23 | HTK |

|---|---|---|---|---|---|---|

| Reversal rate | 1.4% | 1.4% | 4.2% | 0.47% | 1.9% | 0.95% |

| BRIRs | V11 | V21 | V23 | HTK | V11 | V21 | V23 | HTK |

|---|---|---|---|---|---|---|---|---|

| Reversal rate | 0.47% | 1.90% | 1.42% | 0.47% | 15.24% | 23.33% | 30% | 20% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Fallahi, M.; Hansen, M.; Doclo, S.; van de Par, S.; Püschel, D.; Blau, M. Dynamic Binaural Rendering: The Advantage of Virtual Artificial Heads over Conventional Ones for Localization with Speech Signals. Appl. Sci. 2021, 11, 6793. https://doi.org/10.3390/app11156793

Fallahi M, Hansen M, Doclo S, van de Par S, Püschel D, Blau M. Dynamic Binaural Rendering: The Advantage of Virtual Artificial Heads over Conventional Ones for Localization with Speech Signals. Applied Sciences. 2021; 11(15):6793. https://doi.org/10.3390/app11156793

Chicago/Turabian StyleFallahi, Mina, Martin Hansen, Simon Doclo, Steven van de Par, Dirk Püschel, and Matthias Blau. 2021. "Dynamic Binaural Rendering: The Advantage of Virtual Artificial Heads over Conventional Ones for Localization with Speech Signals" Applied Sciences 11, no. 15: 6793. https://doi.org/10.3390/app11156793

APA StyleFallahi, M., Hansen, M., Doclo, S., van de Par, S., Püschel, D., & Blau, M. (2021). Dynamic Binaural Rendering: The Advantage of Virtual Artificial Heads over Conventional Ones for Localization with Speech Signals. Applied Sciences, 11(15), 6793. https://doi.org/10.3390/app11156793