Multiple Optimizations-Based ESRFBN Super-Resolution Network Algorithm for MR Images

Abstract

1. Introduction

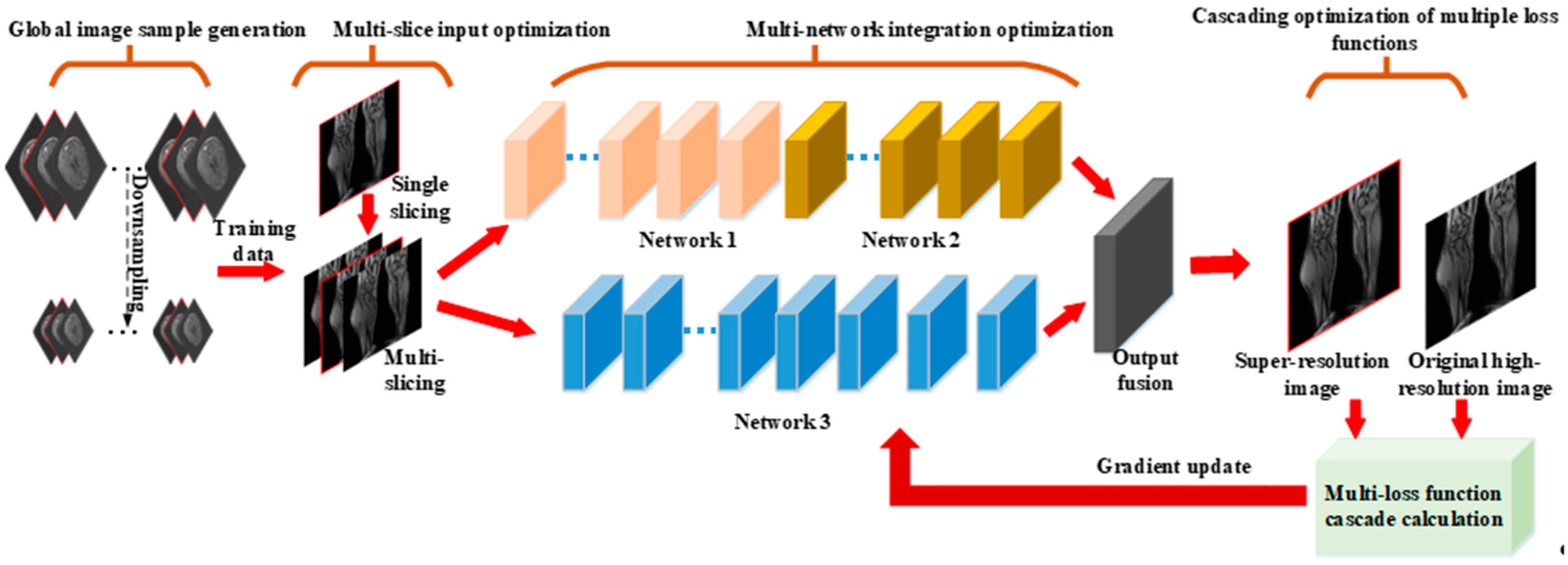

2. Algorithm Architecture

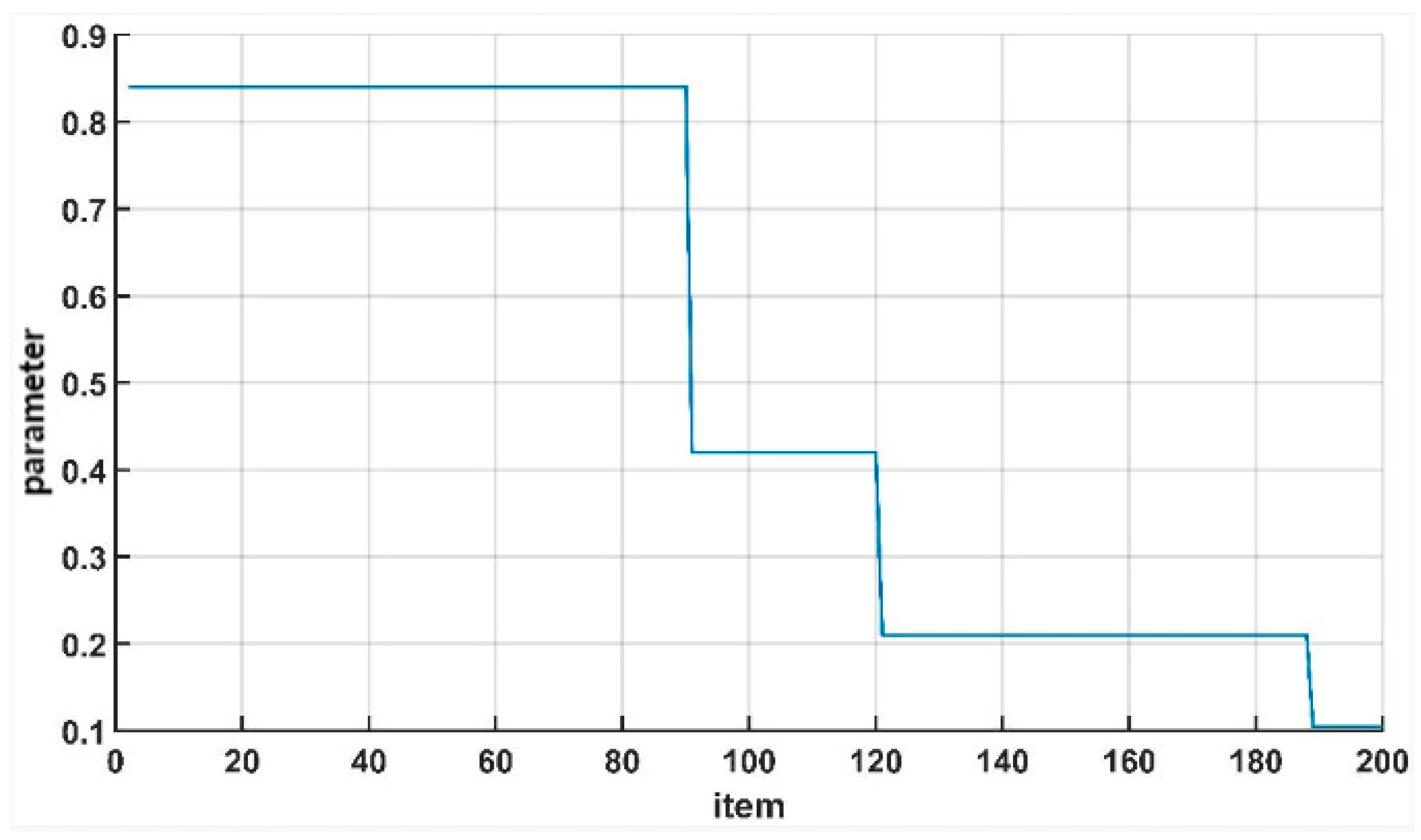

2.1. Cascade Optimization of Loss Function Based on Joint PSNR-SSIM

- Step 1. Calculate

- Step 2. Calculate

- Step 3. Setting of α
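To illustrate the general idea of a joint PSNR-SSIM objective, the sketch below combines a pixel-wise L1 fidelity term with an SSIM term weighted by α, and also provides a PSNR function for monitoring. It is a minimal sketch under stated assumptions, not the authors' exact formulation. The weighted form α·(1 − SSIM) + (1 − α)·L1, the default α = 0.5, and the function names `psnr`, `global_ssim`, and `joint_loss` are hypothetical choices. The SSIM here is computed over the whole image as a single window, whereas the results tables use MS-SSIM, which averages over sliding windows at several scales.

```python
import numpy as np


def psnr(x, y, data_range=1.0):
    """PSNR in dB between two equally shaped images with values in [0, data_range]."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    mse = np.mean((x - y) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(data_range ** 2 / mse))


def global_ssim(x, y, data_range=1.0):
    """SSIM computed over the whole image as one window.

    A simplification: standard SSIM averages over local windows,
    and MS-SSIM additionally combines several scales.
    """
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    c1 = (0.01 * data_range) ** 2  # usual K1 = 0.01 stabilizer
    c2 = (0.03 * data_range) ** 2  # usual K2 = 0.03 stabilizer
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = np.mean((x - mx) * (y - my))
    num = (2 * mx * my + c1) * (2 * cov + c2)
    den = (mx ** 2 + my ** 2 + c1) * (vx + vy + c2)
    return float(num / den)


def joint_loss(sr, hr, alpha=0.5):
    """Hypothetical joint loss: alpha * (1 - SSIM) + (1 - alpha) * L1.

    alpha trades structural similarity against pixel-wise fidelity;
    0.5 is an arbitrary placeholder, not the paper's setting.
    """
    sr = np.asarray(sr, dtype=np.float64)
    hr = np.asarray(hr, dtype=np.float64)
    l1 = np.mean(np.abs(sr - hr))
    return float(alpha * (1.0 - global_ssim(sr, hr)) + (1.0 - alpha) * l1)
```

Raising α shifts the optimization toward structural similarity; lowering it favors pixel-wise fidelity, which is more closely tied to PSNR.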

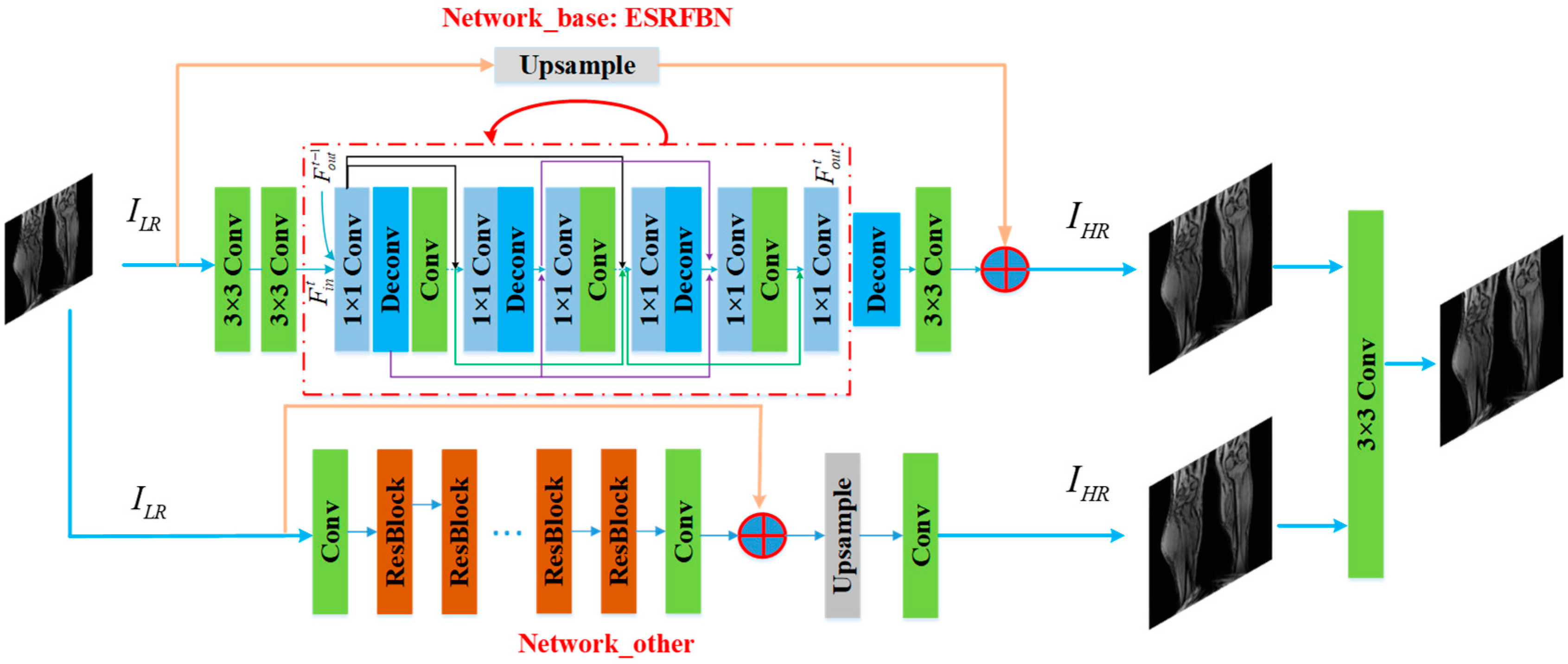

2.2. ESRFBN Network Improvement Based on Contextual Network Fusion

2.3. Algorithm Flow

2.4. Discussion

3. Experimental Results and Analysis

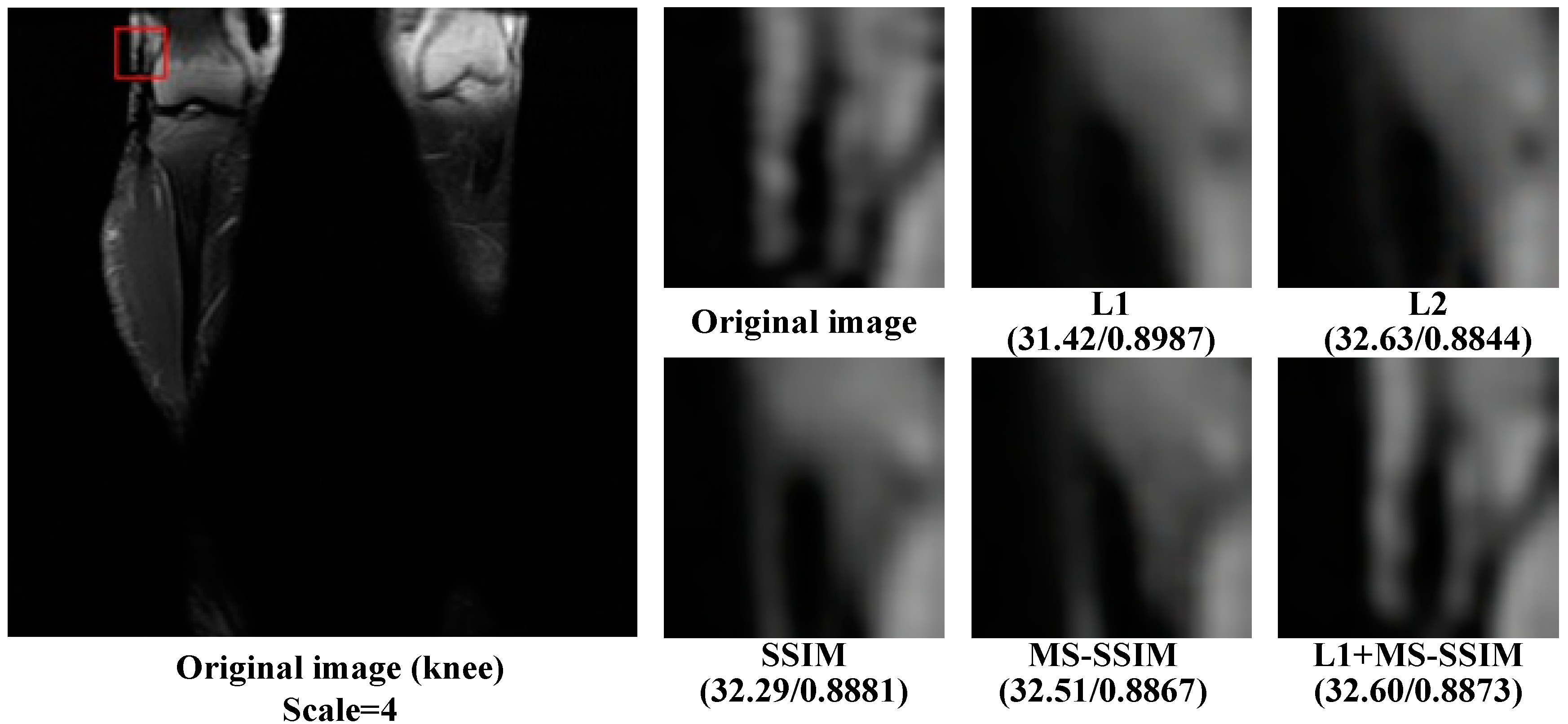

3.1. Experiment 1

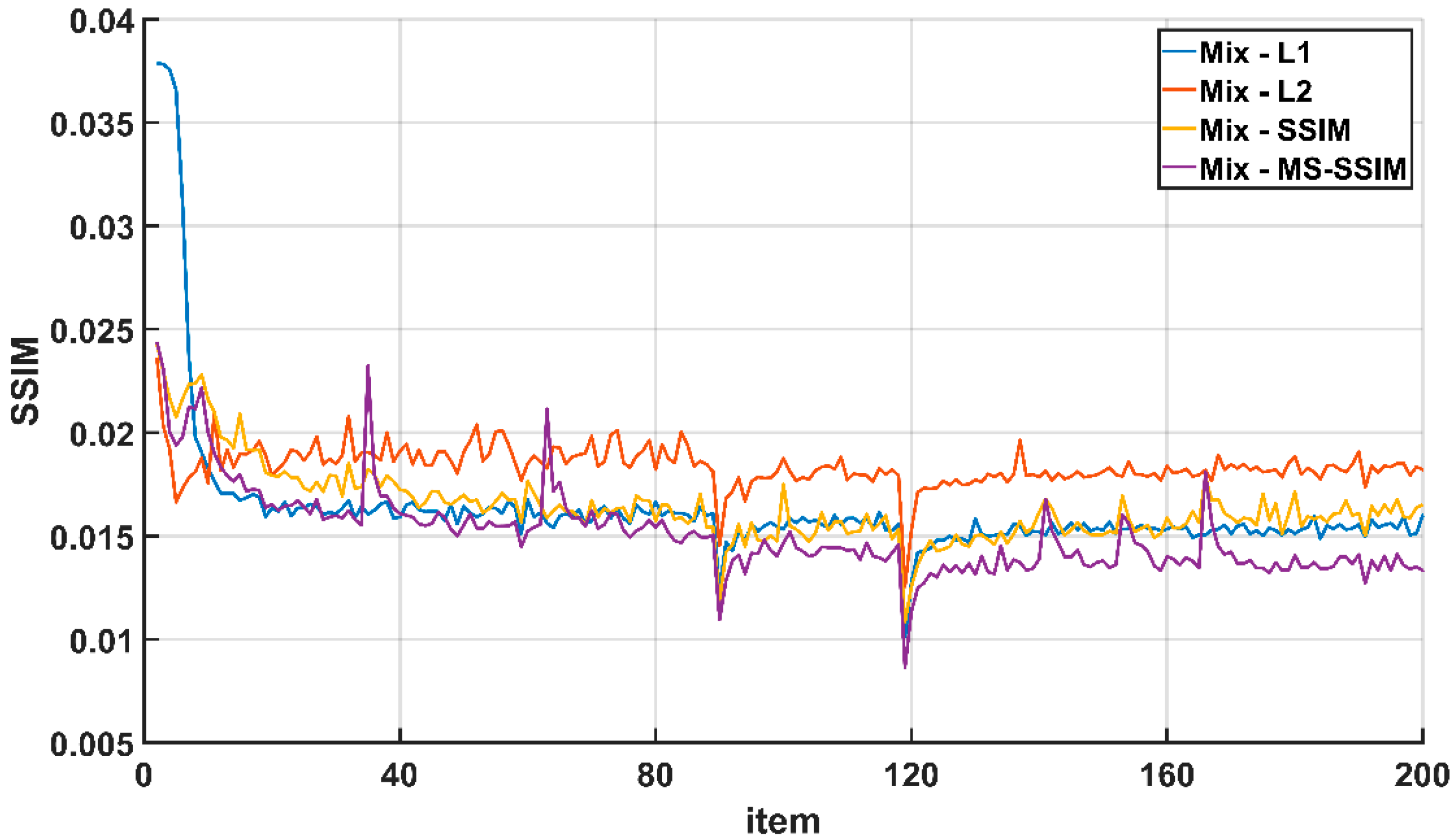

3.2. Experiment 2: Analysis of the Convergence of Each Loss Function Index during the Training Process

3.3. Performance Evaluation

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

Appendix A

Algorithm A1. ESRFBN super-resolution network training based on multiple optimizations.

- Input: multi-slice low-resolution image set; multi-slice high-resolution image set.
- Output: ESRFBN network model parameters; EDSR network model parameters.
- 1: Hyper-parameter settings: batch size (batch_size) and number of iterations (epoch).
- 2: Load the pre-trained models.
- 3.1: Independent network model training. For each epoch (1 to epoch), for each mini-batch (1 to m/batch_size), and for each sample in the mini-batch (1 to batch_size):
  - (1) ESRFBN network: forward calculation to obtain the ESRFBN output, error calculation, and parameter optimization.
  - (2) EDSR network: forward calculation to obtain the EDSR output, error calculation, and parameter optimization.
- 3.2: Fusion layer parameter learning:
  - (1) Randomly initialize the fusion layer parameters from a zero-mean Gaussian distribution with a standard deviation of 0.001.
  - (2) Each independent network outputs its super-resolution result.
  - (3) The fusion layer fuses the outputs of the networks; the fusion layer has 30 parameters in total.
  - (4) Error calculation.
  - (5) Update the fusion layer parameters.
- 4: Output the model parameters.
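Step 3.2 of Algorithm A1 (fusion layer parameter learning) can be sketched in NumPy as follows. This is a minimal sketch under explicit assumptions. The fusion layer is modeled as one learnable weight per network plus a bias, which gives three parameters for two networks rather than the 30 parameters stated in the algorithm, whose exact layout is not given here. It is trained by plain gradient descent on the mean squared error, and the function names and step size are hypothetical. Only the zero-mean Gaussian initialization with standard deviation 0.001 is taken from the algorithm.

```python
import numpy as np


def init_fusion_params(num_nets, rng, std=0.001):
    """Zero-mean Gaussian initialization (std 0.001, as in step 3.2 (1)).

    Hypothetical layout: one scalar weight per network followed by one bias.
    """
    return rng.normal(0.0, std, size=num_nets + 1)


def fuse(params, outputs):
    """Fuse SR outputs of shape (num_nets, H, W) into one (H, W) image."""
    weights, bias = params[:-1], params[-1]
    return np.tensordot(weights, outputs, axes=1) + bias


def train_fusion(outputs, hr, step_size=0.5, steps=500, seed=0):
    """Learn the fusion parameters by gradient descent on the mean squared error.

    Only the fusion parameters are updated; the networks that produced
    `outputs` stay fixed, since they were already trained in step 3.1.
    """
    rng = np.random.default_rng(seed)
    params = init_fusion_params(outputs.shape[0], rng)
    for _ in range(steps):
        err = fuse(params, outputs) - hr  # step 3.2 (4): error calculation
        # Gradient of mean(err ** 2) with respect to each weight and the bias.
        grad_w = 2.0 * np.tensordot(outputs, err, axes=([1, 2], [0, 1])) / err.size
        grad_b = 2.0 * err.mean()
        params[:-1] -= step_size * grad_w  # step 3.2 (5): parameter update
        params[-1] -= step_size * grad_b
    return params
```

When the two networks make roughly independent errors, the learned weights move toward averaging them, so the fused image has a lower error than either input.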

Algorithm A2. ESRFBN super-resolution network test based on multiple optimizations.

- Input: multi-slice low-resolution image set; multi-slice high-resolution image set; deep model parameters.
- Output: evaluation values PSNR and SSIM.
- 1: Load the model parameters.
- 2: Image super-resolution, for each test slice (1 to k):
  - (1) Each independent network outputs its super-resolution result.
  - (2) The fusion layer fuses the outputs of the networks.
- 3: Calculate PSNR and SSIM.
- 4: Output the PSNR and SSIM values.
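The test procedure of Algorithm A2 can be sketched as a loop over the k slices. In this minimal sketch the networks, the fusion layer, and the metrics are passed in as plain Python callables, a hypothetical interface rather than the authors' code, so any PSNR or SSIM implementation can be plugged in. Step 1 (model loading) is assumed to have happened before the callables are built, and averaging the per-slice scores is an assumption about how the reported values are aggregated.

```python
from typing import Callable, Dict, Sequence

import numpy as np

# Hypothetical interfaces: a network maps an LR slice to an SR slice,
# the fusion maps stacked SR outputs (num_nets, H, W) to one SR slice,
# and a metric maps (hr, sr) to a scalar score such as PSNR or SSIM.
Network = Callable[[np.ndarray], np.ndarray]
Fusion = Callable[[np.ndarray], np.ndarray]
Metric = Callable[[np.ndarray, np.ndarray], float]


def evaluate(
    lr_slices: Sequence[np.ndarray],
    hr_slices: Sequence[np.ndarray],
    networks: Sequence[Network],
    fusion: Fusion,
    metrics: Dict[str, Metric],
) -> Dict[str, float]:
    """Average each metric over the k test slices."""
    scores = {name: [] for name in metrics}
    for lr, hr in zip(lr_slices, hr_slices):
        # Step 2 (1): each independent network produces its own SR result.
        outputs = np.stack([net(lr) for net in networks])
        # Step 2 (2): the fusion layer combines the network outputs.
        sr = fusion(outputs)
        # Step 3: per-slice metric calculation.
        for name, metric in metrics.items():
            scores[name].append(metric(hr, sr))
    # Step 4: report the mean of each metric (mean aggregation is assumed).
    return {name: float(np.mean(values)) for name, values in scores.items()}
```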


| Data | Scale | Bicubic | L1 | L2 | SSIM | MS-SSIM | L1+MS-SSIM |
|---|---|---|---|---|---|---|---|
| neck | ×2 | 25.44 | 37.89 | 40.41 | 40.24 | 40.15 | 40.47 |
| | ×4 | 21.58 | 30.28 | 30.90 | 30.50 | 30.67 | 30.92 |
| breast | ×2 | 28.56 | 35.13 | 41.46 | 42.90 | 42.83 | 42.97 |
| | ×4 | 20.50 | 30.78 | 32.88 | 32.60 | 32.91 | 32.93 |
| knee | ×2 | 30.60 | 38.39 | 40.12 | 40.04 | 40.12 | 40.20 |
| | ×4 | 22.69 | 31.42 | 32.63 | 32.29 | 32.51 | 32.64 |
| head | ×2 | 20.32 | 39.42 | 38.55 | 38.19 | 38.28 | 39.39 |
| | ×4 | 18.60 | 34.04 | 32.97 | 32.20 | 31.95 | 34.11 |

| Data | Scale | Bicubic | L1 | L2 | SSIM | MS-SSIM | L1+MS-SSIM |
|---|---|---|---|---|---|---|---|
| neck | ×2 | 0.6928 | 0.9876 | 0.9900 | 0.9900 | 0.9898 | 0.9897 |
| | ×4 | 0.5442 | 0.9308 | 0.9349 | 0.9367 | 0.9358 | 0.9392 |
| breast | ×2 | 0.8848 | 0.9492 | 0.9763 | 0.9802 | 0.9797 | 0.9816 |
| | ×4 | 0.4830 | 0.8979 | 0.9031 | 0.9070 | 0.9075 | 0.9082 |
| knee | ×2 | 0.9185 | 0.9760 | 0.9633 | 0.9641 | 0.9637 | 0.9774 |
| | ×4 | 0.5649 | 0.8987 | 0.8844 | 0.8881 | 0.8867 | 0.8873 |
| head | ×2 | 0.6025 | 0.9605 | 0.9592 | 0.9597 | 0.9593 | 0.9609 |
| | ×4 | 0.5623 | 0.9128 | 0.9064 | 0.9064 | 0.9011 | 0.9609 |

| Data | Scale | Bicubic | L1 | L2 | SSIM | MS-SSIM | L1+MS-SSIM |
|---|---|---|---|---|---|---|---|
| neck | ×2 | 15.80 | 24.16 | 24.32 | 23.98 | 24.24 | 24.36 |
| | ×4 | 9.29 | 18.54 | 19.76 | 18.85 | 19.26 | 19.77 |
| breast | ×2 | 13.52 | 31.75 | 31.82 | 31.78 | 31.96 | 31.98 |
| | ×4 | 7.68 | 25.62 | 25.94 | 25.15 | 25.98 | 25.98 |
| knee | ×2 | 11.83 | 31.49 | 31.52 | 30.81 | 31.09 | 31.73 |
| | ×4 | 5.97 | 26.09 | 26.31 | 24.86 | 25.48 | 26.49 |
| head | ×2 | 6.29 | 27.89 | 29.87 | 26.98 | 27.97 | 28.76 |
| | ×4 | 2.95 | 20.75 | 20.43 | 20.97 | 18.53 | 20.98 |

| Data | Scale | Bicubic | L1 | L2 | SSIM | MS-SSIM | L1+MS-SSIM |
|---|---|---|---|---|---|---|---|
| neck | ×2 | 0.6500 | 0.8923 | 0.8979 | 0.8997 | 0.8980 | 0.8998 |
| | ×4 | 0.3399 | 0.8201 | 0.8255 | 0.8292 | 0.8268 | 0.8315 |
| breast | ×2 | 0.4967 | 0.9123 | 0.9211 | 0.9206 | 0.9189 | 0.9213 |
| | ×4 | 0.0487 | 0.8027 | 0.8078 | 0.8111 | 0.8178 | 0.8185 |
| knee | ×2 | 0.3647 | 0.9008 | 0.9072 | 0.9073 | 0.9054 | 0.9078 |
| | ×4 | 0.0919 | 0.7840 | 0.7908 | 0.7858 | 0.7864 | 0.7864 |
| head | ×2 | 0.3126 | 0.8065 | 0.8211 | 0.8997 | 0.8075 | 0.9609 |
| | ×4 | 0.2081 | 0.6434 | 0.6336 | 0.6503 | 0.6508 | 0.6539 |

| Data | Scale | BIC | IBP | POCS | MAP | JDL | IJDL | RDN | EDSR | DBPN | SRGAN | ESRGAN | SRFBN | ESRFBN | Proposed |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| neck | ×2 | 25.44 | 29.35 | 28.86 | 30.01 | 35.95 | 36.12 | 29.05 | 37.34 | 35.74 | 37.69 | 36.16 | 38.03 | 37.89 | 42.96 |
| | ×4 | 21.58 | 24.72 | 24.28 | 25.27 | 28.53 | 28.82 | 24.53 | 29.25 | 29.28 | 28.78 | 29.11 | 30.23 | 30.28 | 36.22 |
| breast | ×2 | 28.56 | 30.06 | 29.76 | 31.21 | 33.34 | 34.08 | 33.50 | 34.57 | 31.94 | 34.06 | 32.91 | 34.28 | 35.13 | 41.03 |
| | ×4 | 20.50 | 21.56 | 22.38 | 23.09 | 27.87 | 29.16 | 23.68 | 30.00 | 28.13 | 27.99 | 26.31 | 30.68 | 30.78 | 36.85 |
| knee | ×2 | 30.60 | 32.80 | 33.01 | 34.36 | 35.94 | 36.87 | 36.34 | 37.49 | 37.67 | 36.93 | 36.00 | 37.95 | 38.39 | 43.81 |
| | ×4 | 22.69 | 24.74 | 25.25 | 26.66 | 28.48 | 30.11 | 30.02 | 28.34 | 31.29 | 29.63 | 28.75 | 31.35 | 31.42 | 37.07 |
| head | ×2 | 20.32 | 28.22 | 28.04 | 31.24 | 33.43 | 35.11 | 38.05 | 38.03 | 33.82 | 35.80 | 35.89 | 39.19 | 39.42 | 42.86 |
| | ×4 | 18.60 | 20.35 | 22.29 | 24.68 | 28.65 | 28.60 | 29.70 | 32.22 | 29.39 | 30.95 | 31.32 | 33.60 | 34.04 | 38.72 |
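To make the size of the improvement concrete, the short computation below takes the ESRFBN and Proposed columns of the table above and computes the per-row gain, its mean, and the rows with the largest and smallest gains. The metric label did not survive in this table, so the gains are reported in the table's own units. The dictionary layout is only an illustrative choice.

```python
# Values copied from the ESRFBN and Proposed columns of the comparison table.
ESRFBN = {
    ("neck", 2): 37.89, ("neck", 4): 30.28,
    ("breast", 2): 35.13, ("breast", 4): 30.78,
    ("knee", 2): 38.39, ("knee", 4): 31.42,
    ("head", 2): 39.42, ("head", 4): 34.04,
}
PROPOSED = {
    ("neck", 2): 42.96, ("neck", 4): 36.22,
    ("breast", 2): 41.03, ("breast", 4): 36.85,
    ("knee", 2): 43.81, ("knee", 4): 37.07,
    ("head", 2): 42.86, ("head", 4): 38.72,
}

# Per-row gain of the proposed method over the ESRFBN baseline.
gains = {key: PROPOSED[key] - ESRFBN[key] for key in ESRFBN}
mean_gain = sum(gains.values()) / len(gains)
best = max(gains, key=gains.get)
worst = min(gains, key=gains.get)

print(f"mean gain: {mean_gain:.2f}")             # mean gain: 5.27
print(f"largest: {best} {gains[best]:.2f}")      # largest: ('breast', 4) 6.07
print(f"smallest: {worst} {gains[worst]:.2f}")   # smallest: ('head', 2) 3.44
```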

| Data | Scale | BIC | IBP | POCS | MAP | JDL | IJDL | RDN | EDSR | DBPN | SRGAN | ESRGAN | SRFBN | ESRFBN | Proposed |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| neck | ×2 | 0.6928 | 0.8024 | 0.8257 | 0.8458 | 0.9155 | 0.9386 | 0.8824 | 0.9861 | 0.9800 | 0.9868 | 0.9821 | 0.9879 | 0.9880 | 0.9899 |
| | ×4 | 0.5442 | 0.7589 | 0.8016 | 0.8274 | 0.9001 | 0.9318 | 0.7144 | 0.9164 | 0.9182 | 0.9093 | 0.9158 | 0.9304 | 0.9308 | 0.9524 |
| breast | ×2 | 0.8848 | 0.8943 | 0.9010 | 0.9046 | 0.9200 | 0.9308 | 0.9373 | 0.9447 | 0.9243 | 0.9332 | 0.9256 | 0.9428 | 0.9492 | 0.9683 |
| | ×4 | 0.4830 | 0.7592 | 0.7759 | 0.7900 | 0.8594 | 0.8747 | 0.6118 | 0.8631 | 0.8351 | 0.8137 | 0.7900 | 0.8735 | 0.8979 | 0.9401 |
| knee | ×2 | 0.9185 | 0.9231 | 0.9210 | 0.9342 | 0.9591 | 0.9660 | 0.9641 | 0.9719 | 0.9727 | 0.9670 | 0.9634 | 0.9744 | 0.9760 | 0.9865 |
| | ×4 | 0.5649 | 0.6491 | 0.6507 | 0.6875 | 0.8476 | 0.8522 | 0.8597 | 0.8968 | 0.8940 | 0.8643 | 0.9077 | 0.8964 | 0.9054 | 0.9641 |
| head | ×2 | 0.6025 | 0.8522 | 0.8483 | 0.8427 | 0.9018 | 0.9274 | 0.9565 | 0.9565 | 0.9062 | 0.9234 | 0.9241 | 0.9600 | 0.9605 | 0.9811 |
| | ×4 | 0.5623 | 0.7295 | 0.7535 | 0.7624 | 0.8358 | 0.8601 | 0.7962 | 0.8886 | 0.7906 | 0.8500 | 0.8611 | 0.9089 | 0.9128 | 0.9546 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, H.; Shao, M.; Pan, J.-S.; Li, J. Multiple Optimizations-Based ESRFBN Super-Resolution Network Algorithm for MR Images. Appl. Sci. 2021, 11, 8150. https://doi.org/10.3390/app11178150
