Copper Strip Surface Defect Detection Model Based on Deep Convolutional Neural Network

Abstract

Featured Application


1. Introduction

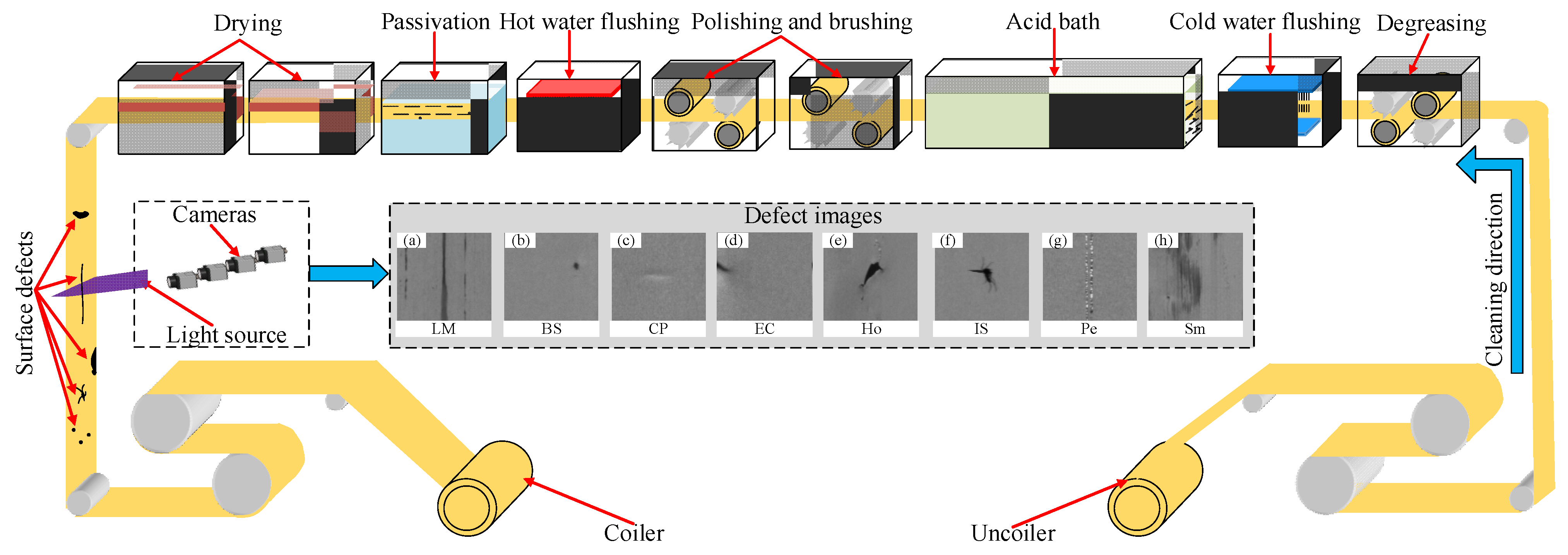

2. Surface Defect of Copper Strip

2.1. Classifications and Characteristics of Surface Defects

2.2. Surface Defect Dataset

3. Surface Defect Detection Model

3.1. Surface Defect Discrimination Model

3.2. Surface Defect Recognition Model of CNN

3.3. Surface Defect Recognition Model of EfficientNet

4. Experiments and Results

4.1. Experimental Method

4.2. Experimental Results and Analysis

5. Conclusions

- Based on practical production requirements, the common surface defects of copper strip were divided into eight classes: line mark (LM), black spot (BS), concave–convex pit (CP), edge crack (EC), hole (Ho), insect spot (IS), peeling (Pe), and smudge (Sm). Images were collected on a production line, and a copper strip surface defect dataset (YSU_CSC) was established.

- The gray values of perfect (defect-free) images clustered around the middle gray level of 127.5, whilst some of the gray values of defect images fell below 100 or above 200. This difference allows perfect and defect images to be distinguished quickly.
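The gray-value rule above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' discrimination model: the paper's discrimination coefficients are not reproduced in this section, so the bounds `low=100` and `high=200` are taken from the text while `max_outlier_ratio` is a hypothetical tuning parameter. An image is treated as a flat list of 8-bit gray values and flagged as defective when too large a share of its pixels falls outside the band.

```python
def outlier_ratio(gray_values, low=100, high=200):
    """Fraction of pixels whose gray value lies below `low` or above `high`."""
    if not gray_values:
        raise ValueError("image has no pixels")
    outliers = sum(1 for g in gray_values if g < low or g > high)
    return outliers / len(gray_values)


def is_defect_image(gray_values, low=100, high=200, max_outlier_ratio=0.01):
    """Hypothetical rule: flag the image when too many pixels fall outside [low, high].

    `max_outlier_ratio` is an assumed threshold, not a value from the paper.
    """
    return outlier_ratio(gray_values, low, high) > max_outlier_ratio


if __name__ == "__main__":
    # Synthetic "perfect" strip: gray values clustered near 127.5.
    perfect = [120, 125, 127, 128, 130, 135] * 100
    # Same strip with a dark spot (gray value 40) covering 60 of 600 pixels.
    defect = perfect[:-60] + [40] * 60
    print(is_defect_image(perfect))  # False
    print(is_defect_image(defect))   # True
```

A ratio-based test is used here rather than a single-pixel test so that isolated sensor noise does not flag an otherwise clean image; how the actual model weighs the two gray-value tails is not stated in this section.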

- On the same testing set, the EfficientNet-based surface defect recognition model achieved the highest accuracy, 93.05%, compared with VGG16, MobileNetV2, and ResNet50. The average recognition time for a single defect image was 197 ms. The model generalises well and runs fast enough to meet practical engineering requirements, giving it the best overall performance of the four models.

- On the testing set, the model achieved its best recognition performance on LM, CP, and Sm, with low error rates of 0%, 4.44%, and 2.22%, respectively. The error rates for BS, Ho, and IS were relatively high, at 8.89%, 15.56%, and 11.11%, respectively. Class activation mapping (CAM) showed that the model learned the key features of each defect class. Future work will aim to improve the model's overall performance in three ways: collecting more defect images, further subdividing visually similar defect images, and improving the model structure.
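CAM, used above to check what the model learned, weights the last convolutional feature maps by the classifier weights of one class. The NumPy sketch below assumes the standard CAM formulation of Zhou et al., which applies to networks ending in global average pooling followed by a single linear layer (as EfficientNet does). It is not the authors' code, and the array sizes and random inputs are hypothetical.

```python
import numpy as np


def class_activation_map(feature_maps, fc_weights, class_idx):
    """Class activation map (CAM) for a network ending in GAP + one linear layer.

    feature_maps: (K, H, W) output of the last convolutional layer for one image.
    fc_weights:   (num_classes, K) weights of the linear classifier that follows
                  global average pooling.
    Returns an (H, W) map min-max scaled to [0, 1]; upsampling to the input
    image size (e.g. bilinear) is left out of this sketch.
    """
    weights = fc_weights[class_idx]                     # w_k^c for the chosen class
    cam = np.tensordot(weights, feature_maps, axes=1)   # sum_k w_k^c * f_k(x, y)
    cam = cam - cam.min()                               # shift so the minimum is 0
    peak = cam.max()
    return cam / peak if peak > 0 else cam


# Hypothetical sizes: 8 feature channels on a 7x7 grid, 8 defect classes.
rng = np.random.default_rng(0)
features = rng.random((8, 7, 7))
classifier = rng.random((8, 8))
heatmap = class_activation_map(features, classifier, class_idx=2)
print(heatmap.shape)  # (7, 7)
```

In practice the map is resized to the input resolution and overlaid on the defect image, so that high values mark the regions that drove the class decision.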

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations and Symbols

| Abbreviation | Description |
|---|---|
| LM | Line Mark |
| BS | Black Spot |
| CP | Concave–convex Pit |
| EC | Edge Crack |
| Ho | Hole |
| IS | Insect Spot |
| Pe | Peeling |
| Sm | Smudge |
| YSU_CSC | Copper Strip Surface Defect Dataset |
| CNN | Convolutional Neural Network |
| MBConv | Mobile Inverted Bottleneck Convolution |
| VGG | Visual Geometry Group Network |
| MobileNet | Efficient Convolutional Neural Networks for Mobile Vision Applications |
| ResNet | Residual Network |
| EfficientNet | Variable Model Scale Convolutional Neural Network |
| CAM | Class Activation Mapping |

| Symbols | Description |
|---|---|
| | Original image |
| | Added noise |
| | Image after adding noise |
| | Probability density function of the noise |
| | Coordinates of any point in the original image |
| | Coordinates of that point after rotation |
| | Gray value in the original image |
| | Gray value after brightness adjustment |
| | Brightness adjustment factor |
| | Perfect images |
| | Defect images |
| | Discrimination coefficients |
| | Scaling factors of width, depth, and resolution |
| | Resource allocation coefficients, determined by grid search, that allocate resources to width, depth, and resolution |
| N | Network model |
| | Predefined network layer structure |
| | Predefined number of layers |
| | Predefined resolution |
| | Predefined number of channels |
| X | Adjustment factor |
| Memory(N) | Number of parameters of the network |
| FLOPS(N) | Number of floating-point operations of the network |
| | Model building operation |
| target_memory | Threshold on the number of parameters |
| target_flops | Threshold on the number of floating-point operations |
| max Accuracy | Maximum accuracy of the model (objective function value) |
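The scaling factors, grid-search coefficients, and thresholds in the table above belong to the compound-scaling formulation used by EfficientNet. For reference, the standard formulation from Tan and Le (2019) is given below. The glyphs follow that paper (d, w, r for the depth, width, and resolution scaling factors; α, β, γ for the grid-searched coefficients; φ for the compound coefficient; X for the input tensor) because the symbols used in this document were lost in extraction and may differ.

```latex
\begin{aligned}
\max_{d,\,w,\,r}\quad & \mathrm{Accuracy}\bigl(\mathcal{N}(d,w,r)\bigr) \\
\text{s.t.}\quad & \mathcal{N}(d,w,r) = \bigodot_{i=1}^{s} \hat{\mathcal{F}}_i^{\,d\cdot \hat{L}_i}\Bigl(X_{\langle r\cdot \hat{H}_i,\; r\cdot \hat{W}_i,\; w\cdot \hat{C}_i \rangle}\Bigr), \\
& \mathrm{Memory}(\mathcal{N}) \le \mathrm{target\_memory}, \\
& \mathrm{FLOPS}(\mathcal{N}) \le \mathrm{target\_flops}.
\end{aligned}

\begin{aligned}
& d = \alpha^{\phi}, \quad w = \beta^{\phi}, \quad r = \gamma^{\phi}, \\
\text{s.t.}\quad & \alpha \cdot \beta^{2} \cdot \gamma^{2} \approx 2, \quad \alpha \ge 1,\ \beta \ge 1,\ \gamma \ge 1.
\end{aligned}
```

Because the FLOPS of a convolutional network grow roughly in proportion to d·w²·r², the constraint α·β²·γ² ≈ 2 makes total FLOPS grow by about 2^φ. For the EfficientNet-B0 baseline, Tan and Le report α = 1.2, β = 1.1, γ = 1.15, for which α·β²·γ² = 1.2 × 1.21 × 1.3225 ≈ 1.92.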


| Defect Class | Characteristics |
|---|---|
| LM | Single or multiple lines on the surface, continuous or intermittent and of varying length. |
| BS | Single or multiple round black spots on the surface; a single spot is most common. |
| CP | Pits or bulges of various sizes on the surface. |
| EC | Cracks on both edges of the strip, extending from the edge inward. |
| Ho | Holes of various sizes and irregular shapes on the surface. |
| IS | Mostly embedded in the copper strip surface, with an insect-like appearance. |
| Pe | Severe upwarping (lifting) of the surface. |
| Sm | Irregular, scattered residue marks on the surface. |

Image augmentation examples (LM, CP, EC): original image, Gaussian noise, salt-and-pepper noise, angle rotation, brightness reduction, brightness enhancement.
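The augmentation operations listed above can be sketched with NumPy for 8-bit grayscale images. This is an illustrative sketch, not the authors' pipeline: the noise levels, the nearest-neighbour rotation about the image centre, and the multiplicative brightness model g' = k·g are assumptions, since the paper's exact parameters are not reproduced in this section.

```python
import numpy as np

rng = np.random.default_rng(seed=0)


def add_gaussian_noise(img, sigma=10.0):
    """Additive Gaussian noise g = f + n with n ~ N(0, sigma^2); sigma is assumed."""
    noisy = img.astype(np.float64) + rng.normal(0.0, sigma, img.shape)
    return np.clip(noisy, 0, 255).astype(np.uint8)


def add_salt_pepper_noise(img, amount=0.02):
    """Set a random fraction `amount` of pixels to 0 (pepper) or 255 (salt)."""
    out = img.copy()
    mask = rng.random(img.shape)
    out[mask < amount / 2] = 0
    out[(mask >= amount / 2) & (mask < amount)] = 255
    return out


def rotate(img, angle_deg):
    """Rotate a 2-D image about its centre (nearest neighbour, inverse mapping).

    Each output pixel samples the source pixel found by rotating its coordinates
    back by -angle; pixels that map outside the source image are set to 0.
    """
    h, w = img.shape
    theta = np.deg2rad(angle_deg)
    cy, cx = (h - 1) / 2, (w - 1) / 2
    ys, xs = np.indices((h, w))
    # Inverse rotation of output coordinates back into the source image.
    src_x = np.cos(theta) * (xs - cx) + np.sin(theta) * (ys - cy) + cx
    src_y = -np.sin(theta) * (xs - cx) + np.cos(theta) * (ys - cy) + cy
    src_x = np.rint(src_x).astype(int)
    src_y = np.rint(src_y).astype(int)
    valid = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)
    out = np.zeros_like(img)
    out[valid] = img[src_y[valid], src_x[valid]]
    return out


def adjust_brightness(img, factor):
    """Scale gray values g' = factor * g (factor < 1 darkens, > 1 brightens)."""
    return np.clip(img.astype(np.float64) * factor, 0, 255).astype(np.uint8)
```

Inverse mapping is used for rotation so that every output pixel receives a value, avoiding the holes that forward mapping leaves behind.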

| Dataset | LM | BS | CP | EC | Ho | IS | Pe | Sm | Total |
|---|---|---|---|---|---|---|---|---|---|
| Training set | 210 | 210 | 210 | 210 | 210 | 210 | 210 | 210 | 1680 |
| Validation set | 45 | 45 | 45 | 45 | 45 | 45 | 45 | 45 | 360 |
| Testing set | 45 | 45 | 45 | 45 | 45 | 45 | 45 | 45 | 360 |
| Total | 300 | 300 | 300 | 300 | 300 | 300 | 300 | 300 | 2400 |
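The table above splits each of the eight classes identically into 210/45/45 images (70%/15%/15%), which keeps the subsets class-balanced. A per-class (stratified) random split reproducing these counts can be sketched as follows; the random shuffling procedure and the file names are hypothetical, since this section does not state how the authors assigned images to subsets.

```python
import random

CLASSES = ["LM", "BS", "CP", "EC", "Ho", "IS", "Pe", "Sm"]


def stratified_split(images_by_class, n_train=210, n_val=45, n_test=45, seed=0):
    """Shuffle each class independently and cut it into train/val/test subsets."""
    rnd = random.Random(seed)
    splits = {"train": [], "val": [], "test": []}
    for label, images in images_by_class.items():
        if len(images) < n_train + n_val + n_test:
            raise ValueError(f"class {label} has only {len(images)} images")
        shuffled = list(images)
        rnd.shuffle(shuffled)
        splits["train"] += [(p, label) for p in shuffled[:n_train]]
        splits["val"] += [(p, label) for p in shuffled[n_train:n_train + n_val]]
        end = n_train + n_val + n_test
        splits["test"] += [(p, label) for p in shuffled[n_train + n_val:end]]
    return splits


if __name__ == "__main__":
    # Hypothetical file names standing in for the 300 images per class.
    data = {c: [f"{c}_{i:03d}.png" for i in range(300)] for c in CLASSES}
    s = stratified_split(data)
    print({k: len(v) for k, v in s.items()})  # {'train': 1680, 'val': 360, 'test': 360}
```

Splitting within each class, rather than over the pooled 2400 images, guarantees exactly 45 test images per class, which is what makes the per-class error rates in the Conclusions directly comparable.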

| Recognition Model | Testing Set Accuracy (%) | Recognition Time of Single Defect Image (ms) |
|---|---|---|
| VGG16 | 75.27 | 2412 |
| ResNet50 | 82.78 | 1205 |
| MobileNetV2 | 65.83 | 165 |
| Our method (EfficientNet) | 93.05 | 197 |
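The figures in this table and the per-class error rates in the Conclusions can be cross-checked with simple arithmetic. The sketch below assumes 45 test images per class (from the dataset split table). The combined error count for EC and Pe is inferred from the overall accuracy rather than quoted, because their individual rates are not reported in this section.

```python
TEST_IMAGES_PER_CLASS = 45
TEST_IMAGES_TOTAL = 8 * TEST_IMAGES_PER_CLASS  # 360

# Per-class error rates (%) reported in the Conclusions; EC and Pe are not reported.
error_rates = {"LM": 0.0, "CP": 4.44, "Sm": 2.22, "BS": 8.89, "Ho": 15.56, "IS": 11.11}

# Convert each rate back into a whole number of misclassified test images.
error_counts = {c: round(r / 100 * TEST_IMAGES_PER_CLASS) for c, r in error_rates.items()}

# An overall accuracy of 93.05% on 360 images implies about 25 misclassified images.
total_errors = round((1 - 0.9305) * TEST_IMAGES_TOTAL)
remaining = total_errors - sum(error_counts.values())  # inferred errors for EC + Pe

# Single-image recognition time of each baseline relative to the proposed model.
times_ms = {"VGG16": 2412, "ResNet50": 1205, "MobileNetV2": 165, "Ours": 197}
speedup = {m: times_ms[m] / times_ms["Ours"] for m in ("VGG16", "ResNet50", "MobileNetV2")}

print(error_counts)  # {'LM': 0, 'CP': 2, 'Sm': 1, 'BS': 4, 'Ho': 7, 'IS': 5}
print(total_errors, remaining)  # 25 6
print({m: round(v, 2) for m, v in speedup.items()})  # {'VGG16': 12.24, 'ResNet50': 6.12, 'MobileNetV2': 0.84}
```

The reported rates map cleanly onto whole image counts, and the proposed model is roughly 12 times faster than VGG16 and 6 times faster than ResNet50, while MobileNetV2 remains slightly faster at a much lower accuracy.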

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xu, Y.; Wang, D.; Duan, B.; Yu, H.; Liu, H. Copper Strip Surface Defect Detection Model Based on Deep Convolutional Neural Network. Appl. Sci. 2021, 11, 8945. https://doi.org/10.3390/app11198945
