Object-Level Semantic Map Construction for Dynamic Scenes

Abstract

Featured Application

1. Introduction

- We propose an object motion detection method that tracks each object in 3D relative to the camera pose, using a previously constructed object database as prior knowledge.

- Dynamic objects in the current frame are found by associating the output of the instance segmentation algorithm, which combines semantic and geometric cues, with the object database updated by object motion detection.

- The camera motion estimation method reduces overall drift by aligning each frame to the 3D static model (frame-to-model alignment) and by culling dynamic objects from the current frame.

- Object motion detection, accurate instance segmentation, and camera pose estimation are integrated with ElasticFusion into a complete and novel dynamic RGB-D SLAM framework that produces an accurate 3D object-level semantic map of the scene.
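As an illustration of the first contribution, the motion test can be sketched as follows. This is a minimal sketch under stated assumptions, not the paper's implementation: poses are 4x4 homogeneous matrices, only the translation part is compared, and the names `T_wc` (camera pose in the world frame), `T_co` (object pose tracked relative to the camera), `T_wo_stored` (object pose held in the database), and the 5 cm threshold are all hypothetical.

```python
import math


def matmul(a, b):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def translation(x, y, z):
    """Build a 4x4 homogeneous transform containing only a translation."""
    return [[1.0, 0.0, 0.0, x],
            [0.0, 1.0, 0.0, y],
            [0.0, 0.0, 1.0, z],
            [0.0, 0.0, 0.0, 1.0]]


def object_displacement(T_wc, T_co, T_wo_stored):
    """Distance between the stored world-frame object position and the
    position implied by the current camera pose and object tracking."""
    T_wo = matmul(T_wc, T_co)  # object pose in the world frame for this frame
    current = [T_wo[i][3] for i in range(3)]
    stored = [T_wo_stored[i][3] for i in range(3)]
    return math.dist(current, stored)


def is_moving(T_wc, T_co, T_wo_stored, threshold_m=0.05):
    """Flag the object as moving if it drifted more than threshold_m
    (a hypothetical value) from its stored world-frame position."""
    return object_displacement(T_wc, T_co, T_wo_stored) > threshold_m
```

If the camera moves but the object does not, the composed pose `T_wc * T_co` stays equal to the stored pose and the object is treated as static. A fuller test would also compare the rotation part.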

2. Related Work

2.1. Dense SLAM

2.2. Object Semantic SLAM

2.3. Dynamic SLAM

3. Methodology

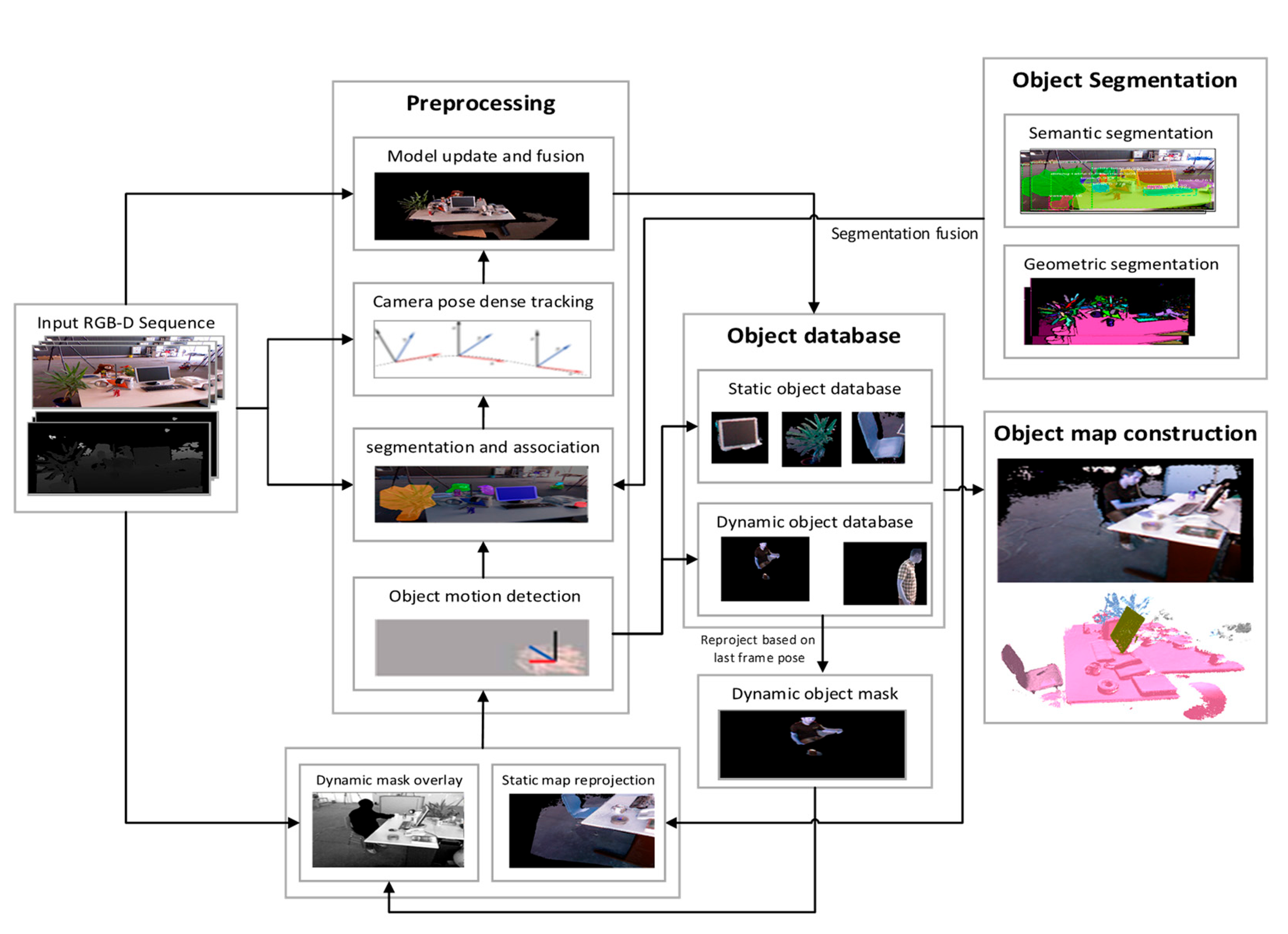

3.1. System Overview

- (1) Object motion detection is performed by 3D object tracking, using the object model database as updated by the previous frame as prior knowledge, to find the moving objects in the database.

- (2) Accurate object instance segmentation is achieved by combining semantic and geometric cues in the current frame; the resulting segments are then associated with the object database to find the dynamic part of the current image data.

- (3) The camera pose is estimated precisely after the dynamic part of the current frame is eliminated, and the pose of each object model in the database is updated using this camera pose.

- (4) The object models are updated and fused with each input frame, and the 3D object-level semantic map is reconstructed incrementally.
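The order of the four steps can be sketched as a per-frame loop. This is a simplified sketch rather than the authors' code: `ObjectEntry`, `process_frame`, and the convention that label 0 means background are hypothetical, the motion detection result is passed in as `moved_ids` instead of being computed by 3D tracking, and the camera tracking and surfel fusion themselves are reduced to a mask and a counter.

```python
from dataclasses import dataclass


@dataclass
class ObjectEntry:
    """Hypothetical database record for one object model."""
    object_id: int
    is_moving: bool = False
    frames_fused: int = 0


def process_frame(database, instance_labels, moved_ids):
    """Run one frame through the four steps and return the static-pixel mask.

    database        : dict mapping object id -> ObjectEntry
    instance_labels : 2D list of per-pixel instance ids (0 = background)
    moved_ids       : ids reported as moving by step (1), assumed given here
    """
    # (1) Object motion detection: record which database objects moved.
    for entry in database.values():
        entry.is_moving = entry.object_id in moved_ids

    # (2) Associate the segmented instances with the database; instances
    #     not seen before are added as new, initially static, objects.
    seen = {label for row in instance_labels for label in row if label != 0}
    for label in seen:
        database.setdefault(label, ObjectEntry(label))
    dynamic = {label for label in seen if database[label].is_moving}

    # (3) Cull dynamic pixels; only pixels marked True would be used
    #     for camera pose estimation.
    static_mask = [[label not in dynamic for label in row]
                   for row in instance_labels]

    # (4) Fuse the frame into every observed object model (counted only).
    for label in seen:
        database[label].frames_fused += 1

    return static_mask
```

Keeping motion detection ahead of segmentation means the dynamic pixels are already known when the camera pose is estimated, which matches the ordering of the list above.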

3.2. Notation and Preliminaries

3.3. Object Motion Detection through 3D Tracking Based on Object Model Database

3.3.1. Geometric Error Term

3.3.2. Photometric Error Term

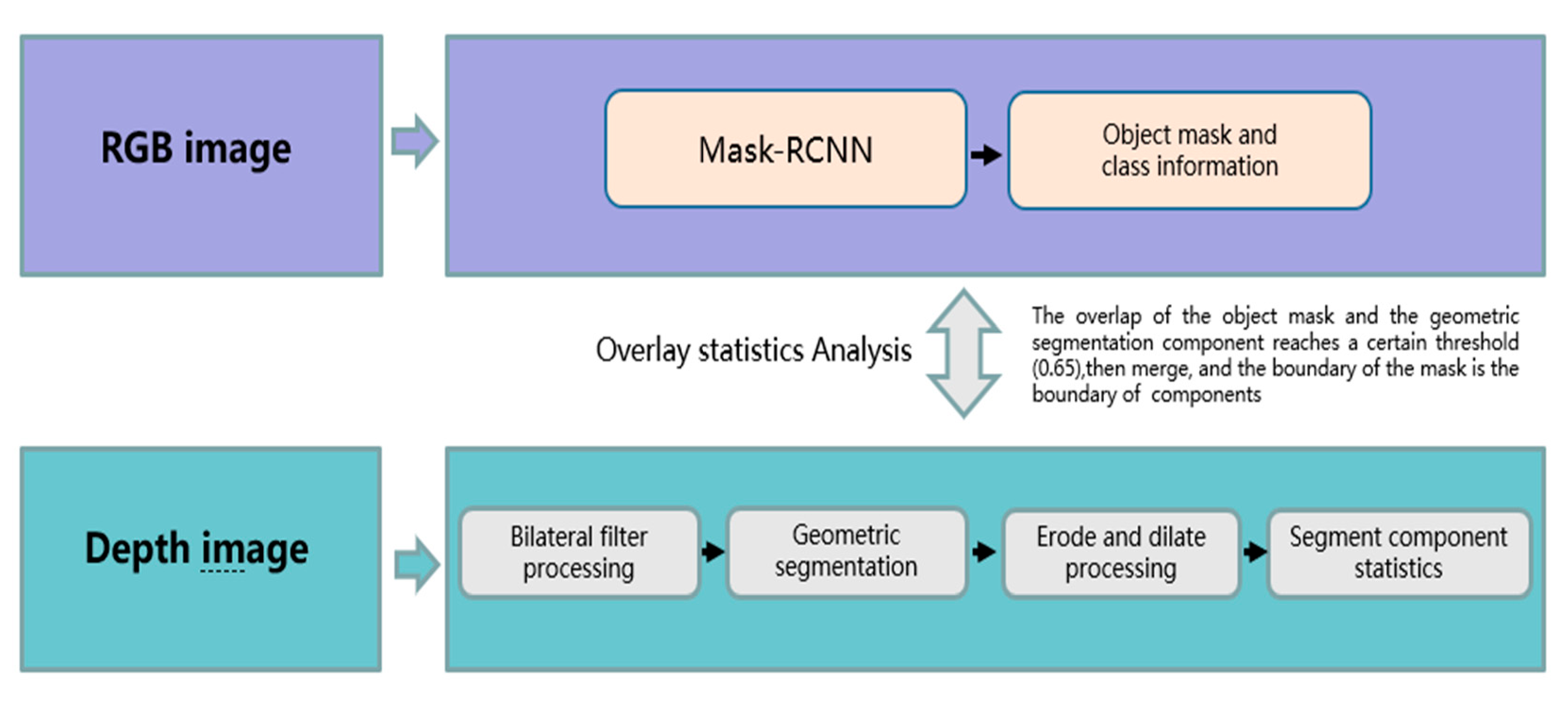

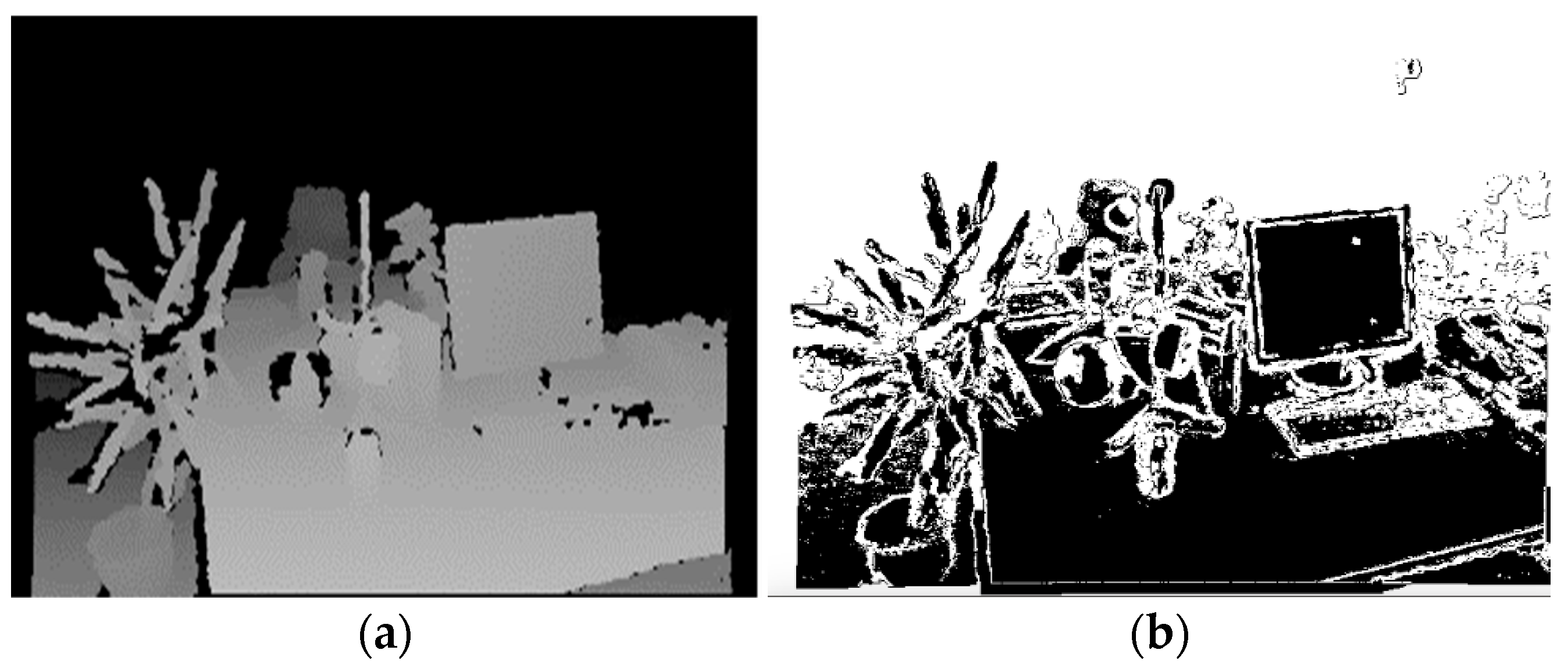

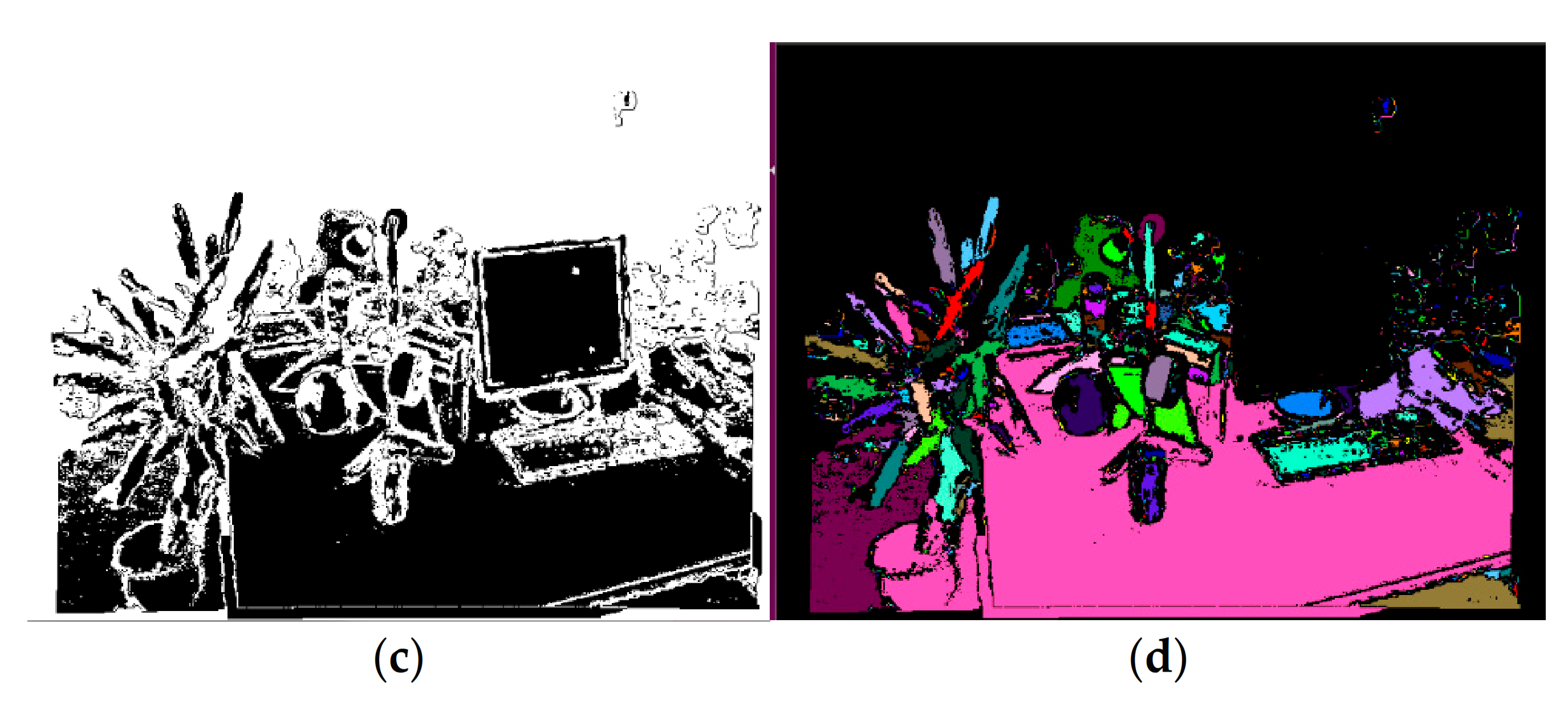

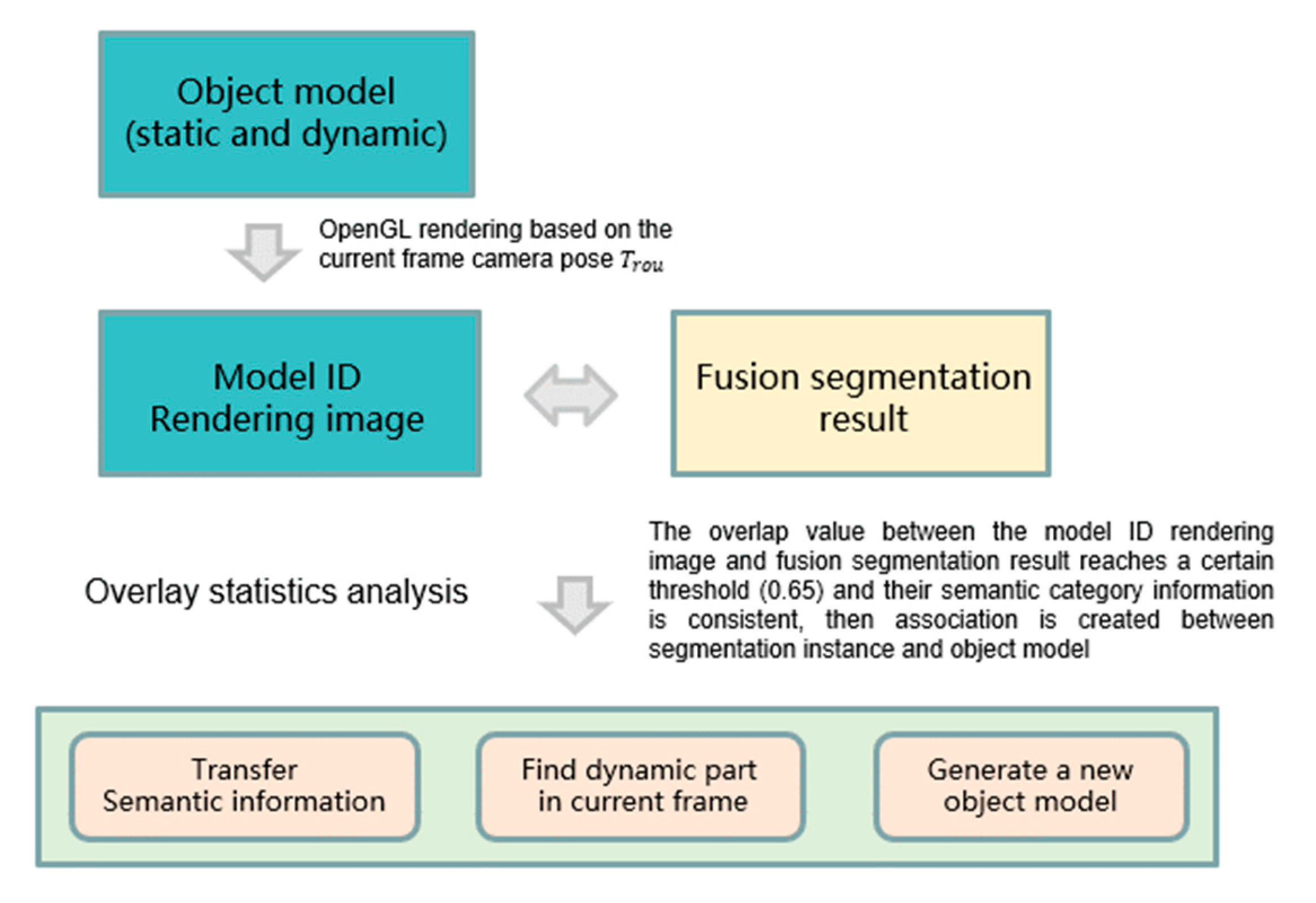

3.4. Object Instance Segmentation and Data Association with Object Database

3.5. Accurate Camera Pose Tracking

3.6. Object Model Fusion, Semantic Map Optimization, and Construction

4. Results

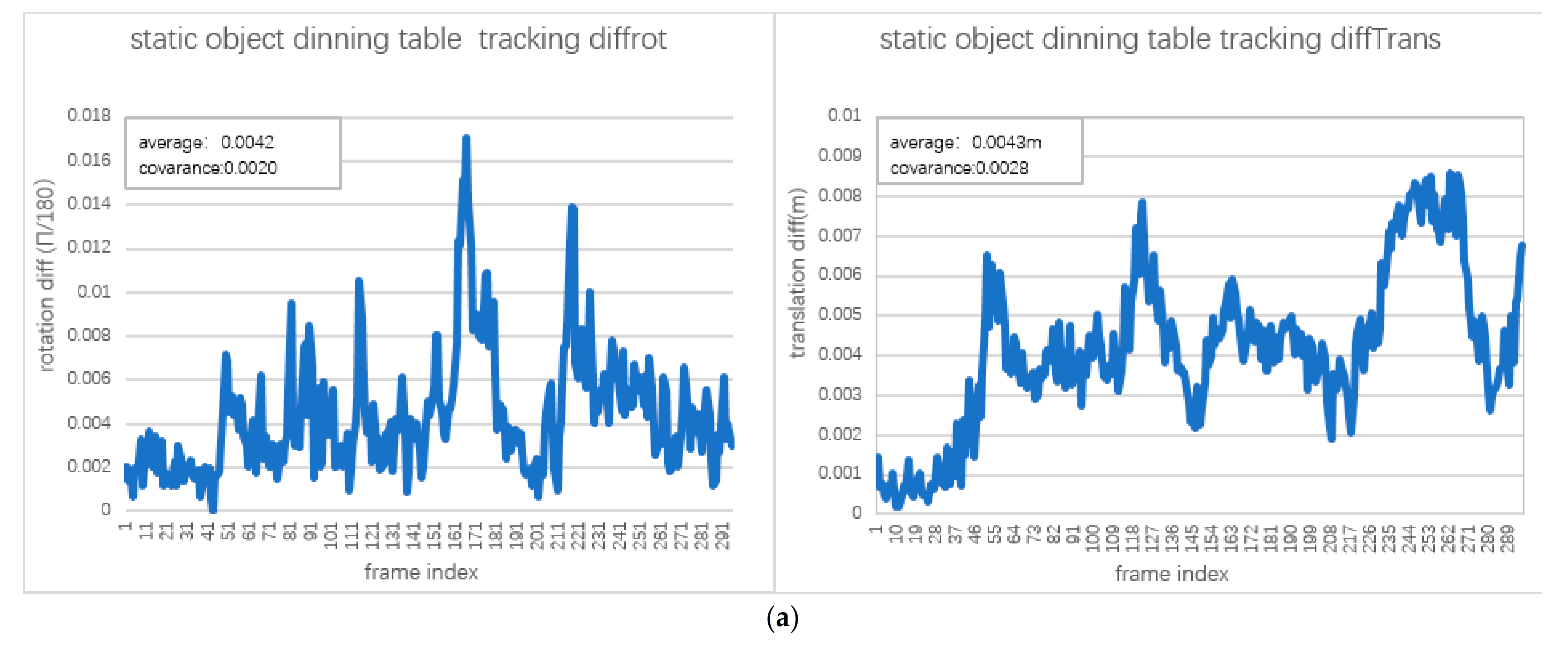

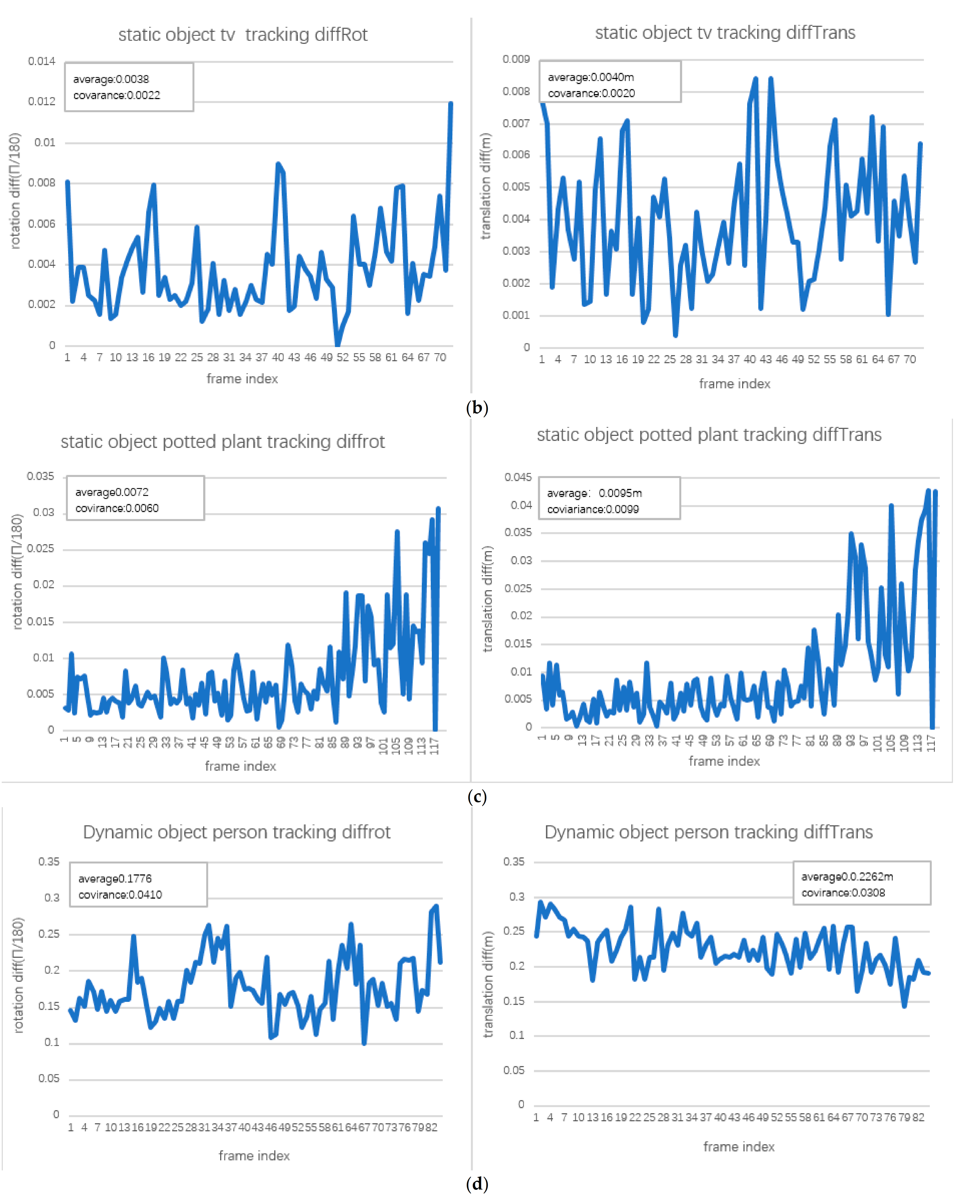

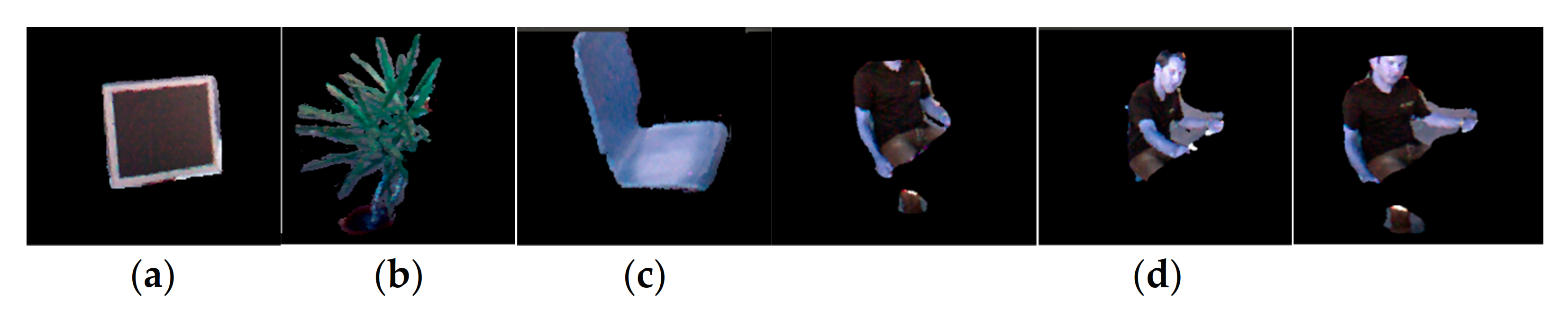

4.1. Object 3D Tracking for Motion Detection

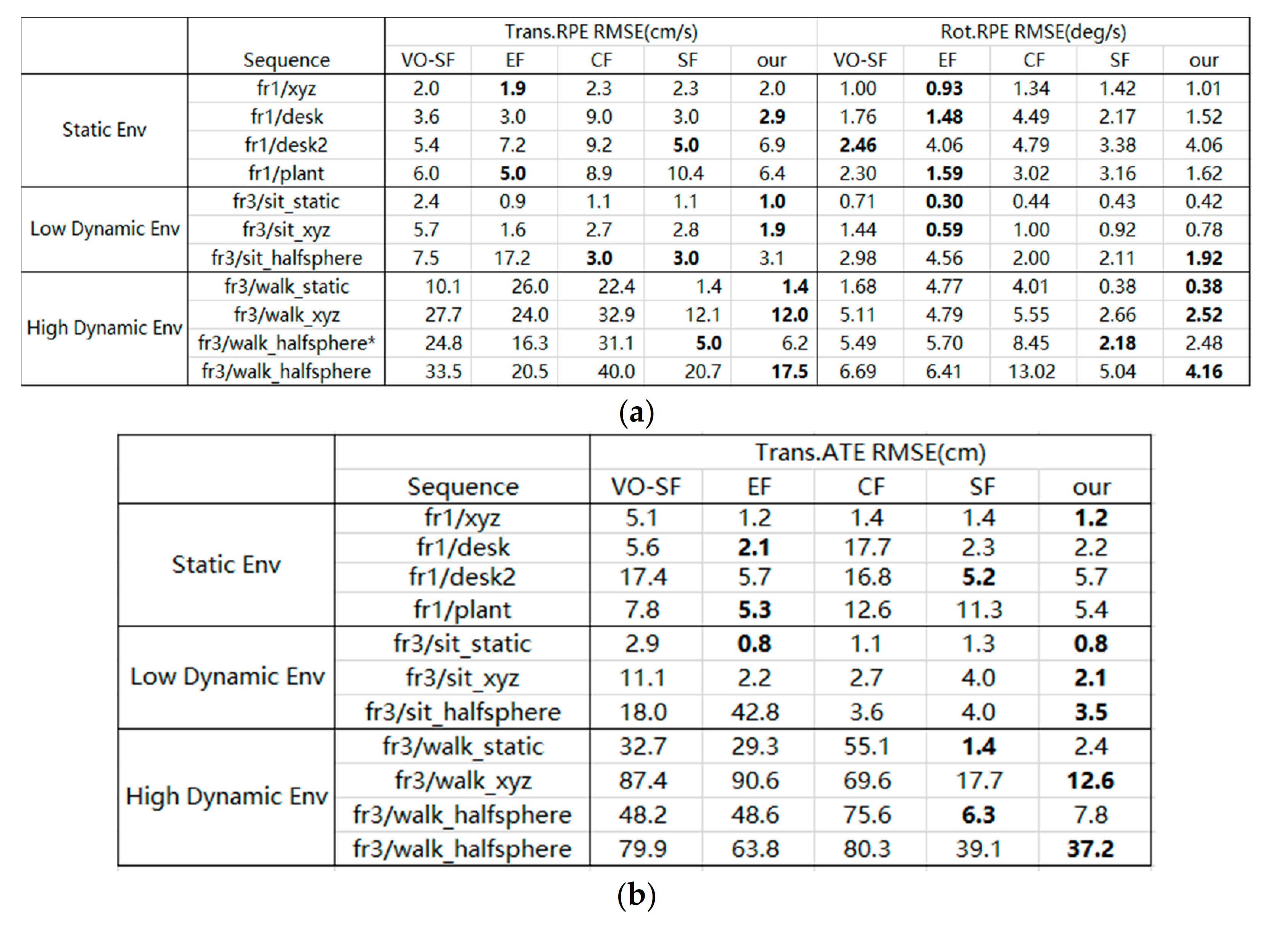

4.2. Camera Pose Estimation Accuracy

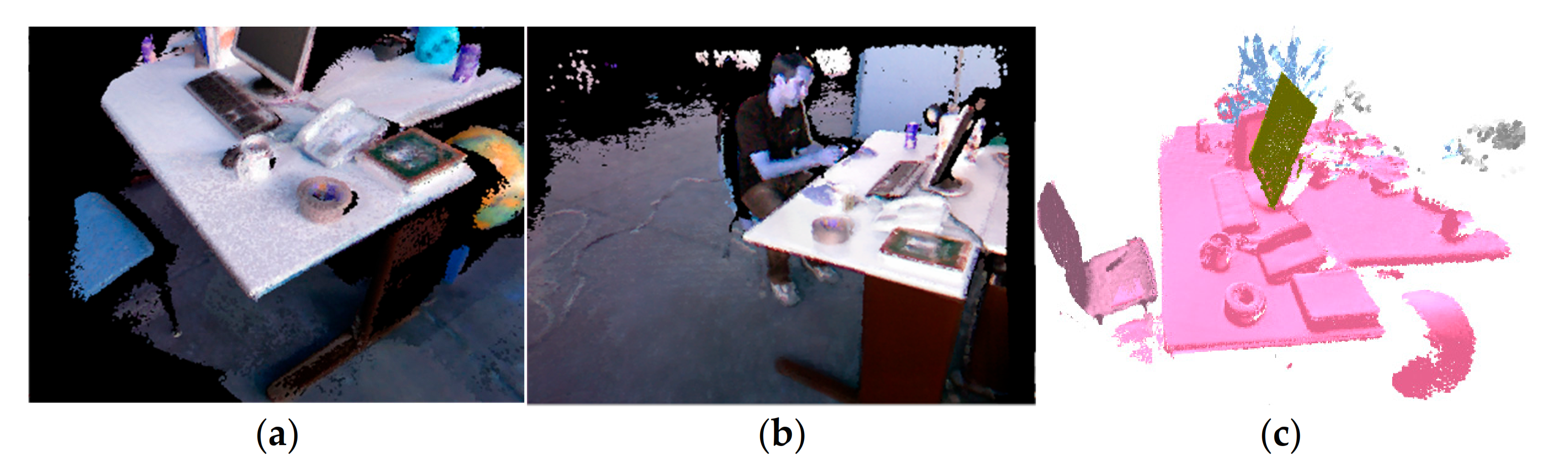

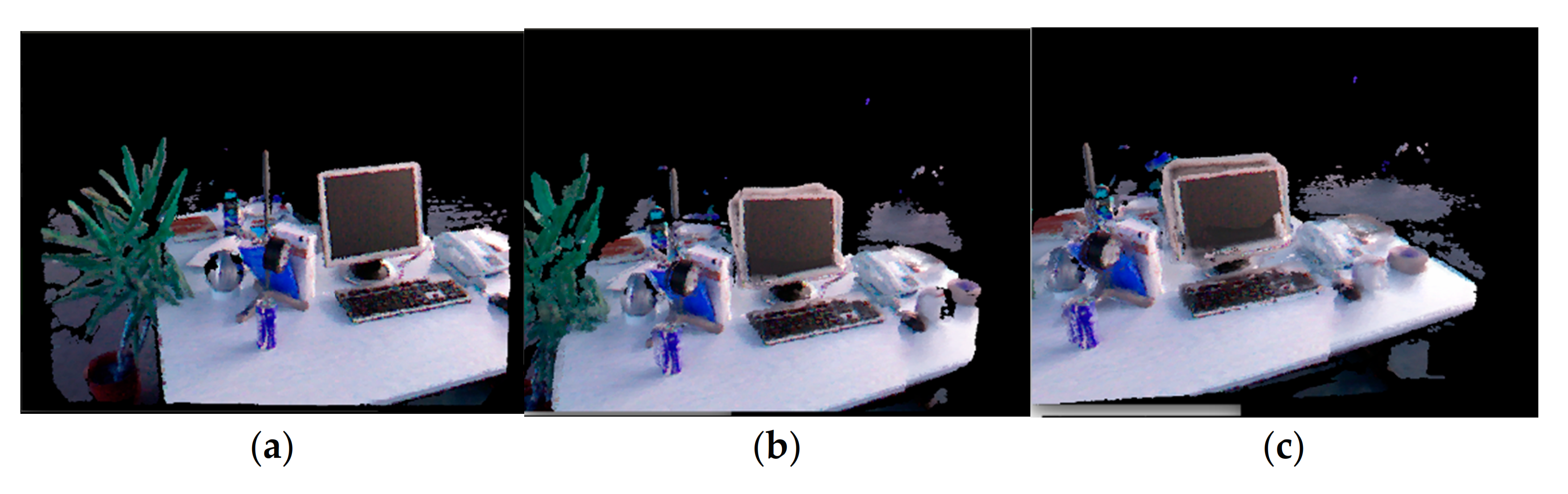

4.3. Map Construction

5. Discussion

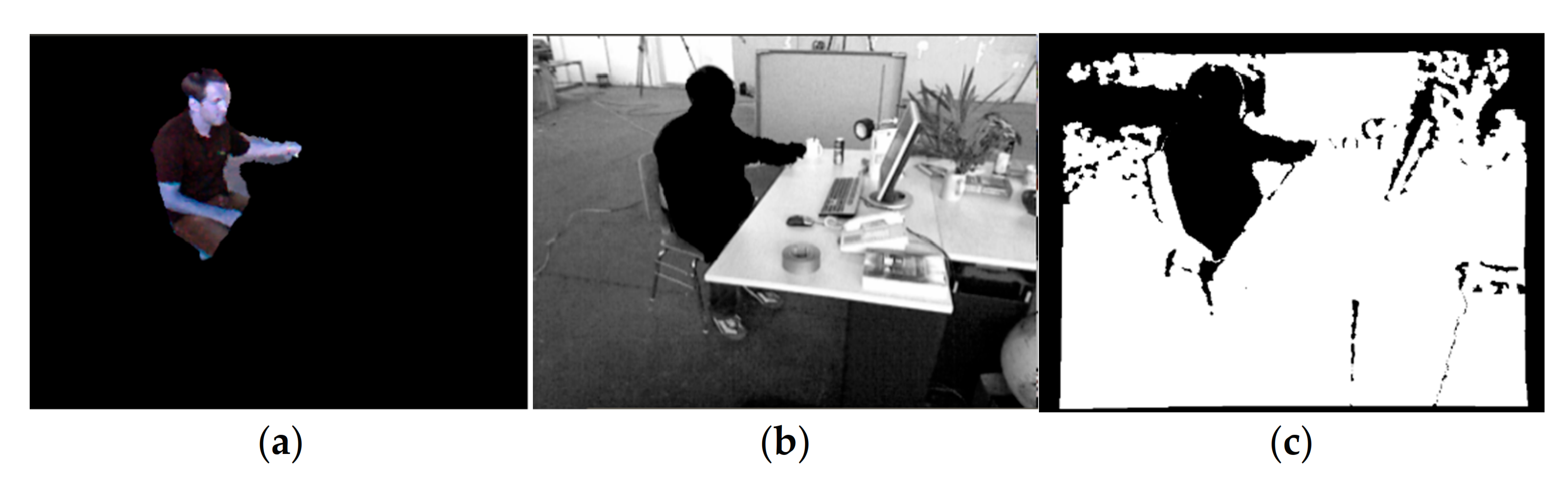

5.1. Object Motion Detection

5.2. Object Instance Segmentation and Association

5.3. Camera Pose Estimation Accuracy

5.4. Object Model Fusion and Updating

5.5. System Efficiency

6. Conclusions

Author Contributions

Funding

Institutional Review Board

Informed Consent

Data Availability Statement

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kang, X.; Li, J.; Fan, X.; Jian, H.; Xu, C. Object-Level Semantic Map Construction for Dynamic Scenes. Appl. Sci. 2021, 11, 645. https://doi.org/10.3390/app11020645
