Enhancement of Multi-Target Tracking Performance via Image Restoration and Face Embedding in Dynamic Environments

Abstract

1. Introduction

- We present three methods to enhance multiple object tracking performance in dynamic environments. By coping with dynamic conditions, the three methods contribute to improved detection, improved identification, and a lower chance of association failures, respectively.

- Because each of the proposed methods is modular, the entire framework does not need to be retrained, and the methods can be easily attached to various trackers.

- To demonstrate the effectiveness of the proposed methodology, we constructed a benchmark set in a real robot environment and verified our approaches through ablation experiments.

2. Related Work

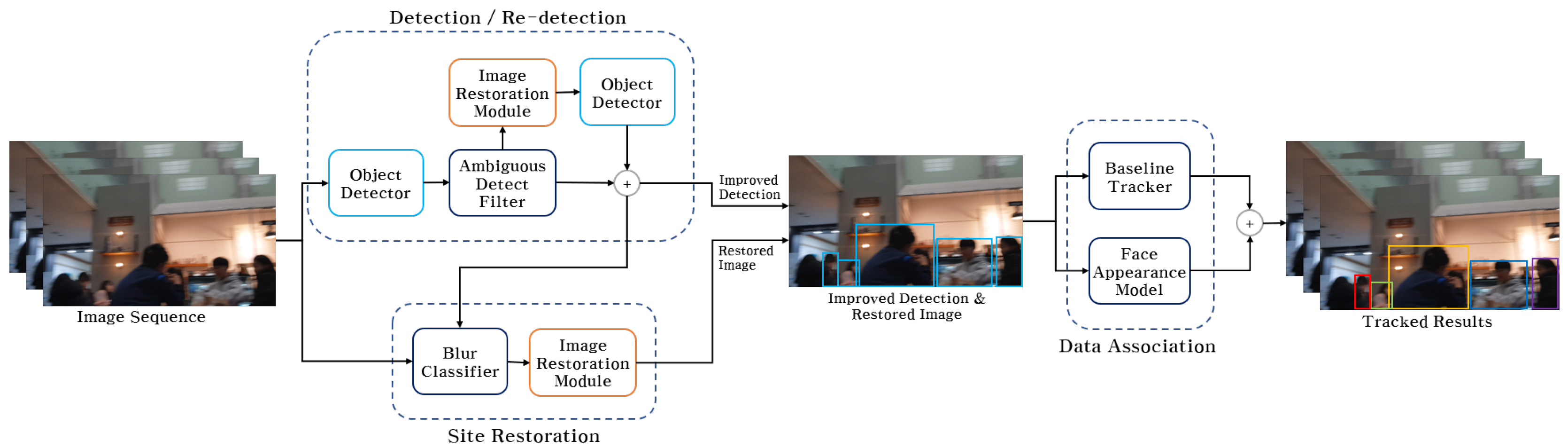

3. Method

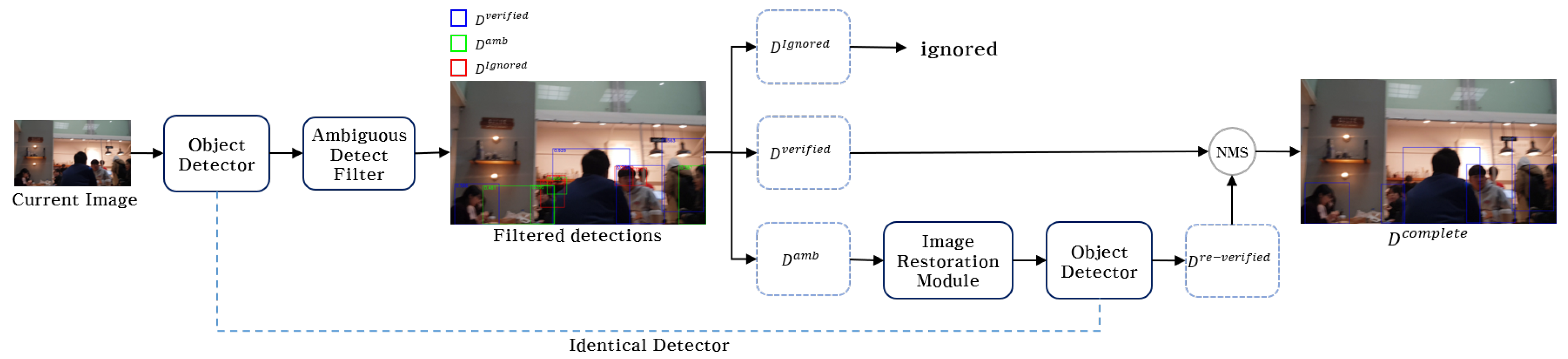

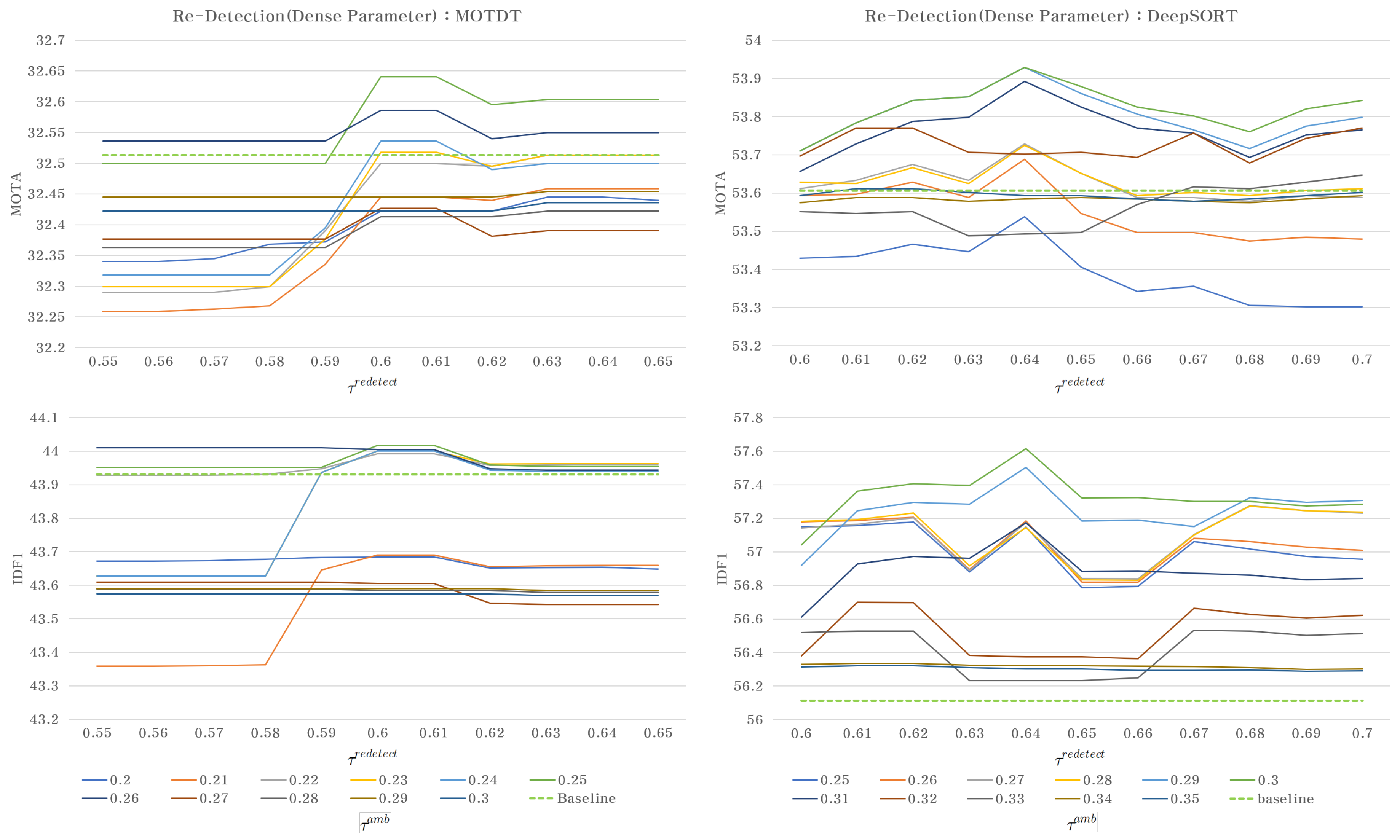

Section 3.1 Re-detection: Re-detection is performed in the detection/re-detection process. This section describes how ambiguous detection results are defined and then re-detected after image restoration, which increases the reliability of the detection results.

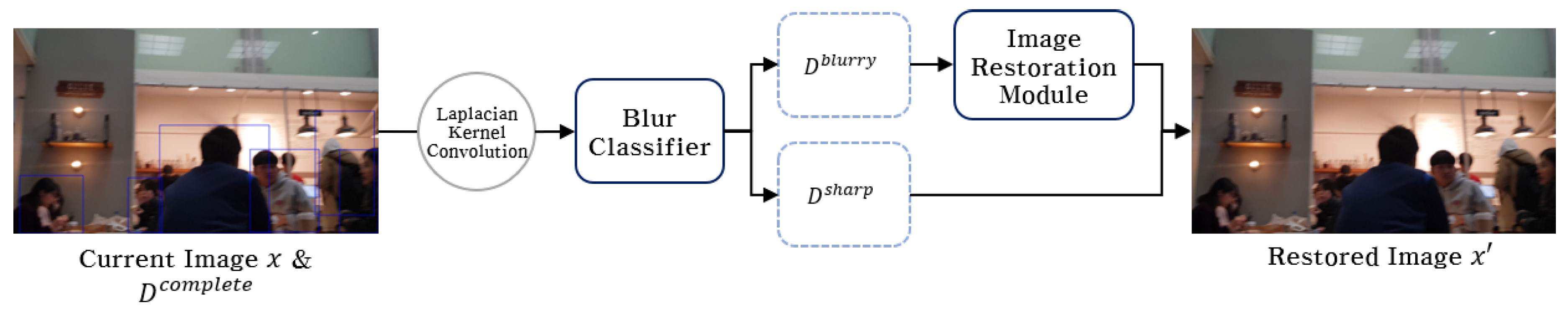

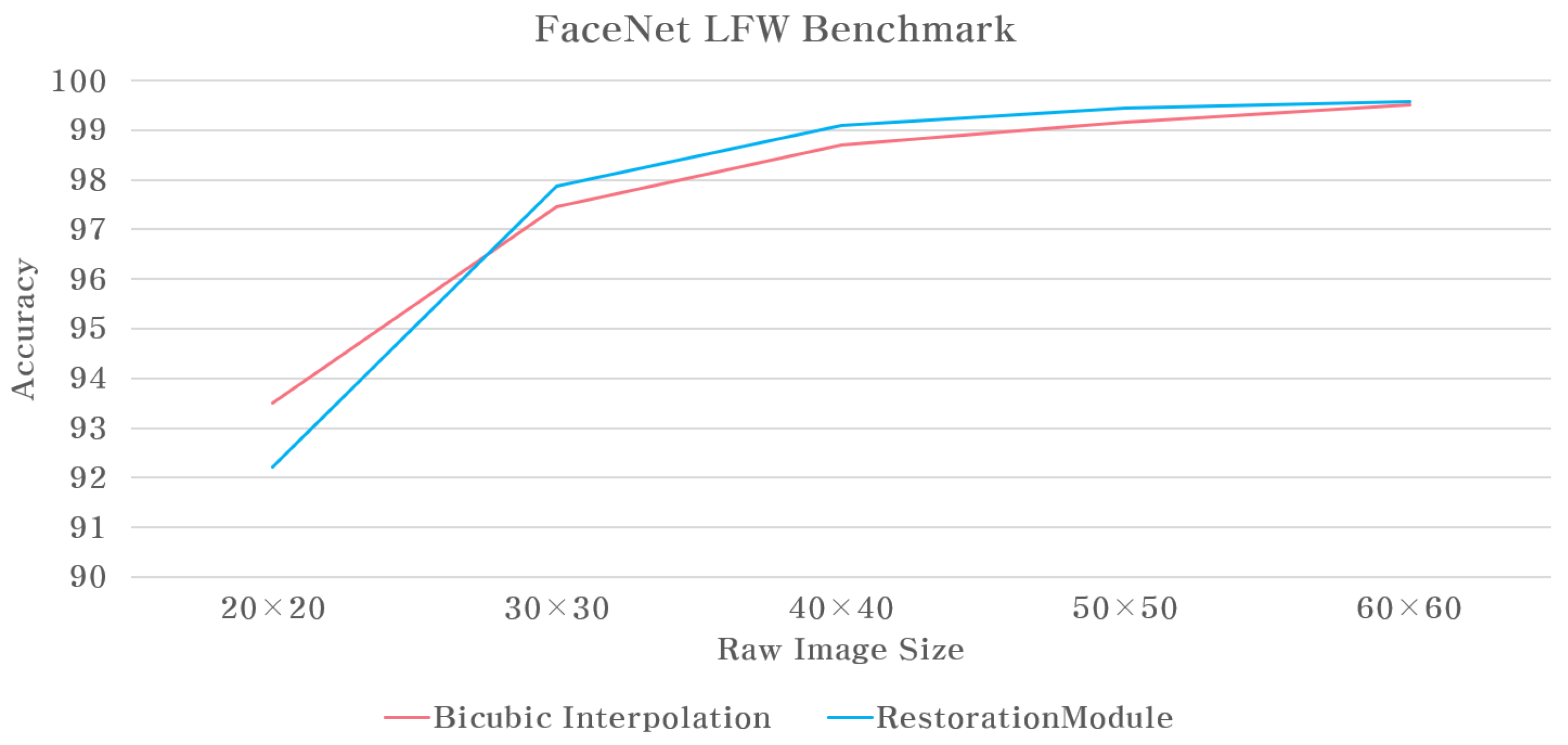

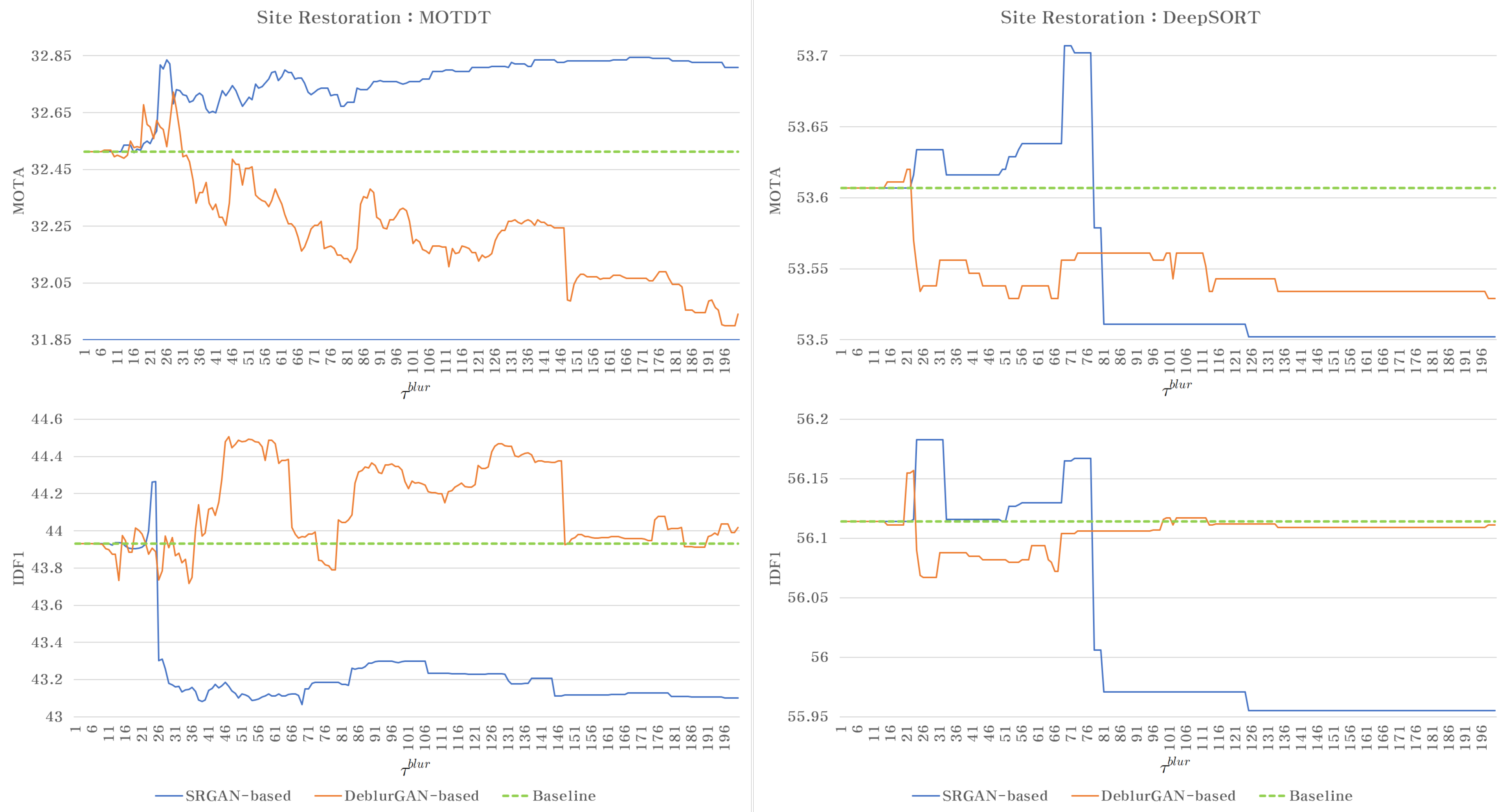

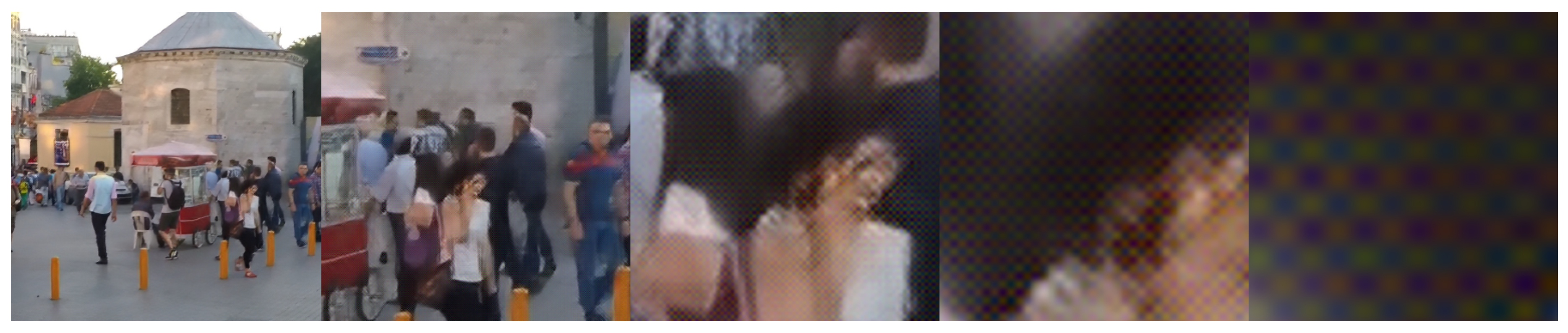

Section 3.2 Site restoration: This section presents the site restoration process, which defines the noisy area and restores the image of that area to improve the reliability of the image used in the appearance model.

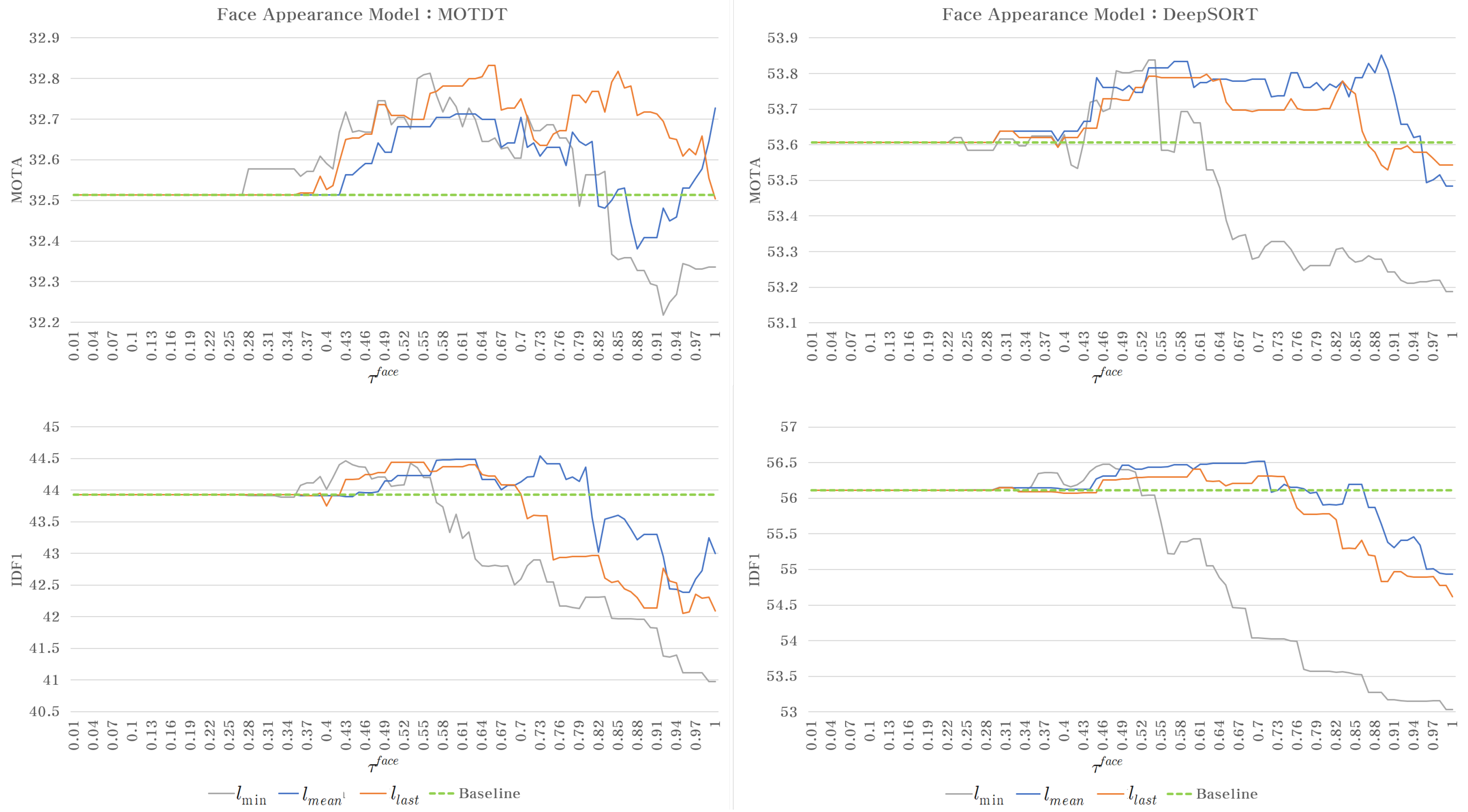

Section 3.3 Face appearance model: The face appearance model is applied in the data association process. This section defines how to associate targets using an appearance model based on face features, which overcomes the association failures of baseline trackers.

3.1. Re-Detection

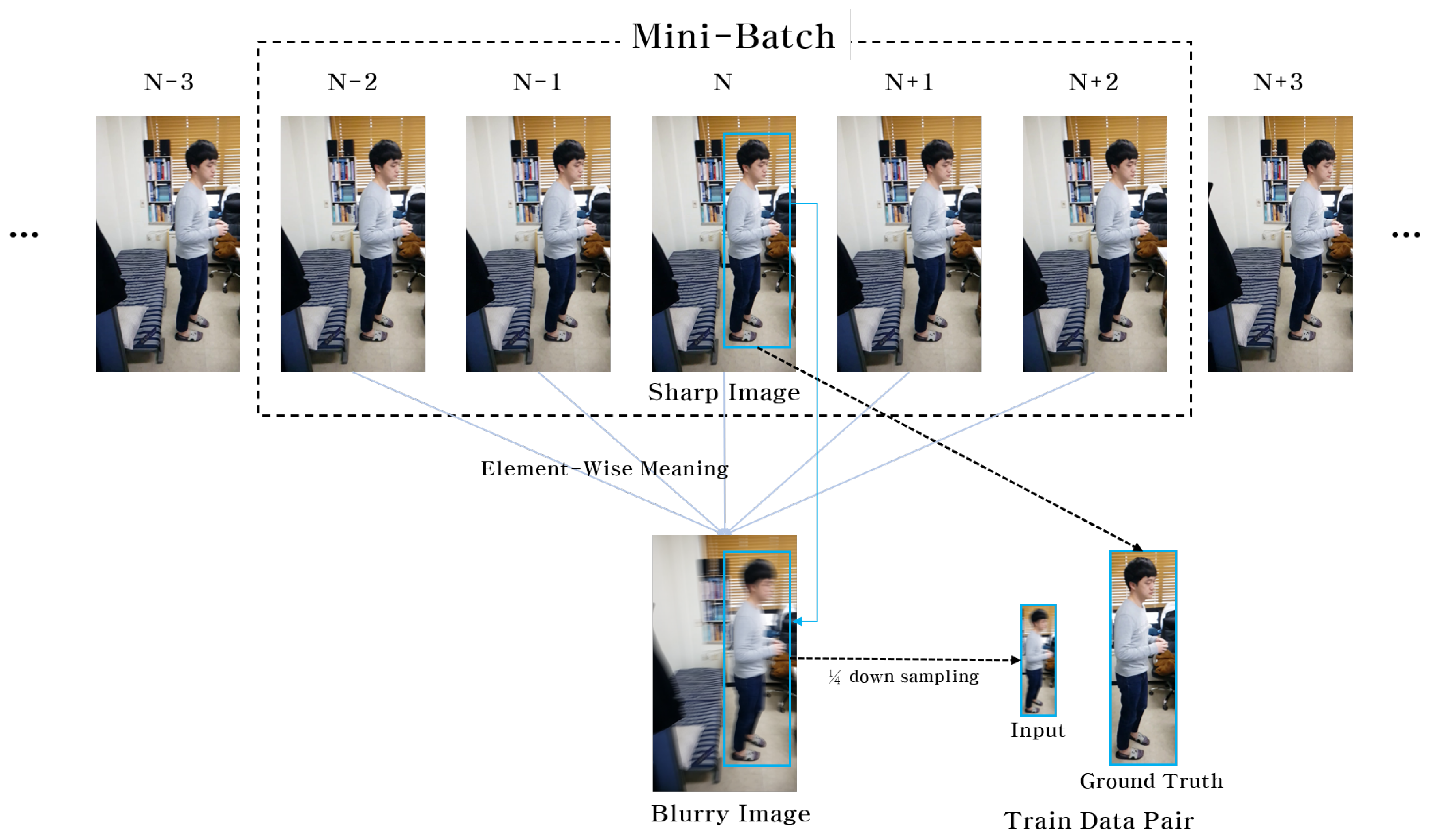
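The re-detection idea summarized in the overview of Section 3.1 (flag ambiguous detections, restore the image region, then detect again) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the `Detection` type, the `low` and `high` score thresholds, and the `detect_fn` and `restore_fn` callables are hypothetical stand-ins for a real detector and a real restoration network, and treating a score band as the definition of "ambiguous" is itself an assumption.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixel coordinates


@dataclass
class Detection:
    box: Box
    score: float


def redetect(
    image: Sequence[Sequence[float]],
    detections: List[Detection],
    detect_fn: Callable[[list], List[Detection]],  # hypothetical detector
    restore_fn: Callable[[list], list],            # hypothetical restoration model
    low: float = 0.3,                              # hypothetical thresholds
    high: float = 0.6,
) -> List[Detection]:
    """Keep confident detections; restore and re-detect the ambiguous ones."""
    kept: List[Detection] = []
    for det in detections:
        if det.score >= high:
            kept.append(det)  # confident: accept as-is
            continue
        if det.score < low:
            continue  # too weak to be worth a second look
        # Ambiguous: crop the region, restore it, and run the detector again.
        x1, y1, x2, y2 = det.box
        crop = [list(row[x1:x2]) for row in image[y1:y2]]
        for cand in detect_fn(restore_fn(crop)):
            if cand.score >= high:
                cx1, cy1, cx2, cy2 = cand.box
                # Map the crop-relative box back to full-image coordinates.
                kept.append(
                    Detection((x1 + cx1, y1 + cy1, x1 + cx2, y1 + cy2), cand.score)
                )
    return kept
```

Passing the detector and the restoration model as callables mirrors the modularity claimed in the introduction: the wrapper does not depend on which tracker or detector it is attached to.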

3.2. Site Restoration

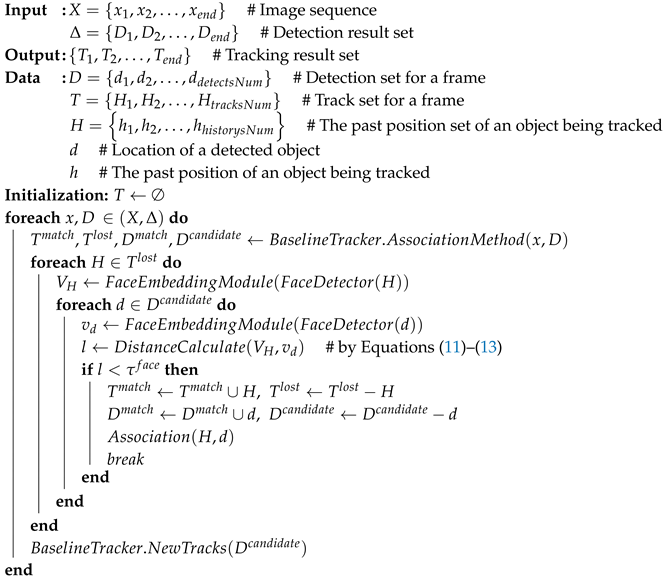

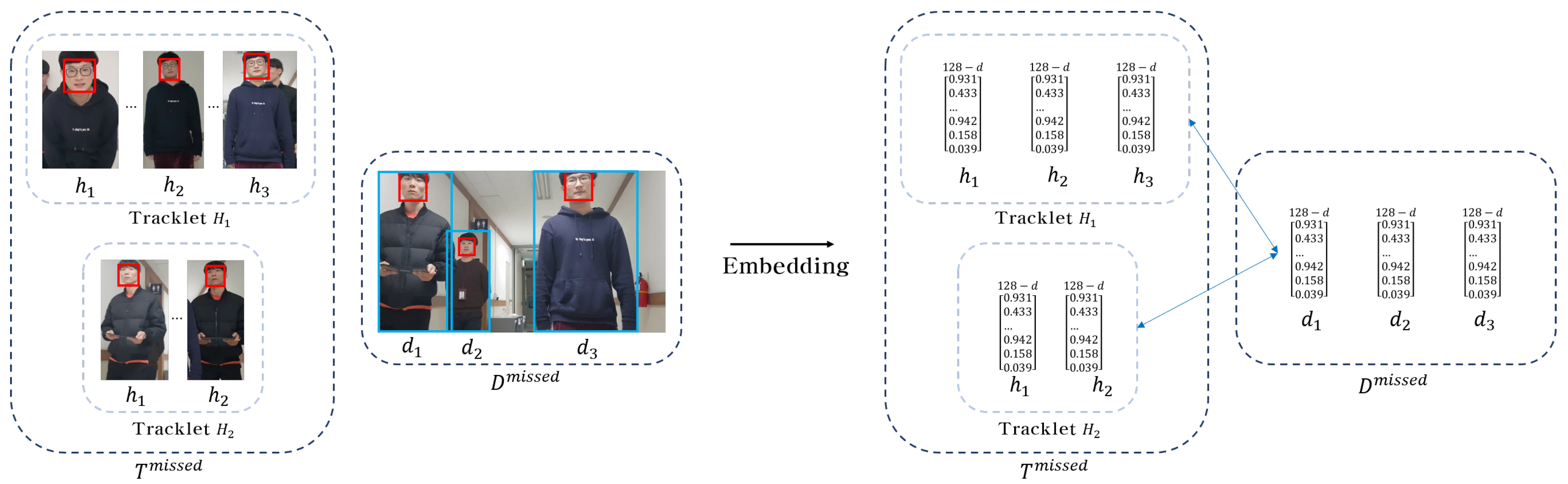
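The site restoration step summarized in the overview of Section 3.2 needs a rule for deciding which areas are noisy. The sketch below uses one common blur measure, the variance of the Laplacian, as that rule. This choice is an assumption made for illustration; the criterion actually used by the authors is not preserved in this text. The `threshold` value and the `restore_fn` callable are hypothetical, and the crop is assumed to be a grayscale image of at least 3 × 3 pixels stored as nested lists.

```python
from statistics import pvariance
from typing import Callable, List, Tuple

Gray = List[List[float]]  # grayscale crop, row-major, at least 3 x 3


def laplacian_variance(gray: Gray) -> float:
    """Variance of the 4-neighbour Laplacian over interior pixels.

    Low values indicate few sharp edges, which suggests a blurry crop.
    """
    h, w = len(gray), len(gray[0])
    responses = [
        gray[y - 1][x] + gray[y + 1][x] + gray[y][x - 1] + gray[y][x + 1]
        - 4 * gray[y][x]
        for y in range(1, h - 1)
        for x in range(1, w - 1)
    ]
    return pvariance(responses)


def restore_if_noisy(
    crop: Gray,
    restore_fn: Callable[[Gray], Gray],  # hypothetical restoration model
    threshold: float = 100.0,            # hypothetical sharpness threshold
) -> Tuple[Gray, bool]:
    """Return (crop to feed the appearance model, whether it was restored)."""
    if laplacian_variance(crop) < threshold:
        return restore_fn(crop), True
    return crop, False
```

Restoring only the crops that fall below the threshold keeps the restoration cost proportional to the number of degraded targets rather than to the number of frames.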

3.3. Face Appearance Model

Algorithm 1: Proposed face appearance model algorithm
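Because the body of Algorithm 1 is not preserved here, the following is only a sketch of how a face feature could enter the data association cost, not a reconstruction of the authors' algorithm. It assumes that each track and detection may carry a face embedding vector, that an existing body appearance cost in the range 0 to 1 is available, and that the two are blended with a hypothetical `face_weight`; all names are illustrative.

```python
import math
from typing import Optional, Sequence


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """1 - cosine similarity; returns 1.0 if either vector has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 1.0
    return 1.0 - dot / (norm_a * norm_b)


def association_cost(
    body_cost: float,
    track_face: Optional[Sequence[float]],
    det_face: Optional[Sequence[float]],
    face_weight: float = 0.5,  # hypothetical blending weight
) -> float:
    """Blend the body appearance cost with a face embedding distance.

    Falls back to the body cost alone when a face is not visible on
    either side, so the baseline tracker behaviour is unchanged.
    """
    if track_face is None or det_face is None:
        return body_cost
    face_cost = cosine_distance(track_face, det_face)
    return (1.0 - face_weight) * body_cost + face_weight * face_cost
```

The fallback branch matters in practice: faces are often not visible, and in that case the cost reduces to what the baseline tracker would have computed.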

4. Experiment

4.1. Experiment Configuration

- For a fair evaluation, it should target people who are not included in the benchmark set.

- To simulate natural and accurate blurry images, the images must be captured at a high frame rate with dynamic movement.
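One common way to obtain blurry images from high-frame-rate footage is to average a short window of consecutive sharp frames, which approximates a longer exposure. The text above does not state that this is the procedure used here, so the sketch below is an assumption offered for illustration only; the function name and the nested-list frame format are hypothetical.

```python
from typing import List

Frame = List[List[float]]  # grayscale frame, row-major


def synthesize_blur(frames: List[Frame]) -> Frame:
    """Average consecutive frames pixel by pixel to imitate motion blur."""
    if not frames:
        raise ValueError("at least one frame is required")
    n = len(frames)
    height, width = len(frames[0]), len(frames[0][0])
    return [
        [sum(frame[y][x] for frame in frames) / n for x in range(width)]
        for y in range(height)
    ]


# Two tiny 2 x 2 frames: a static scene would average to itself,
# while moving content is smeared across the window.
blurred = synthesize_blur([[[0, 0], [0, 0]], [[2, 4], [6, 8]]])
print(blurred)  # [[1.0, 2.0], [3.0, 4.0]]
```

A higher capture frame rate gives more frames per simulated exposure, which is why high-frame-rate recording with real motion produces more natural blur than a synthetic kernel.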

4.2. Benchmark Set

4.3. Experiment Results

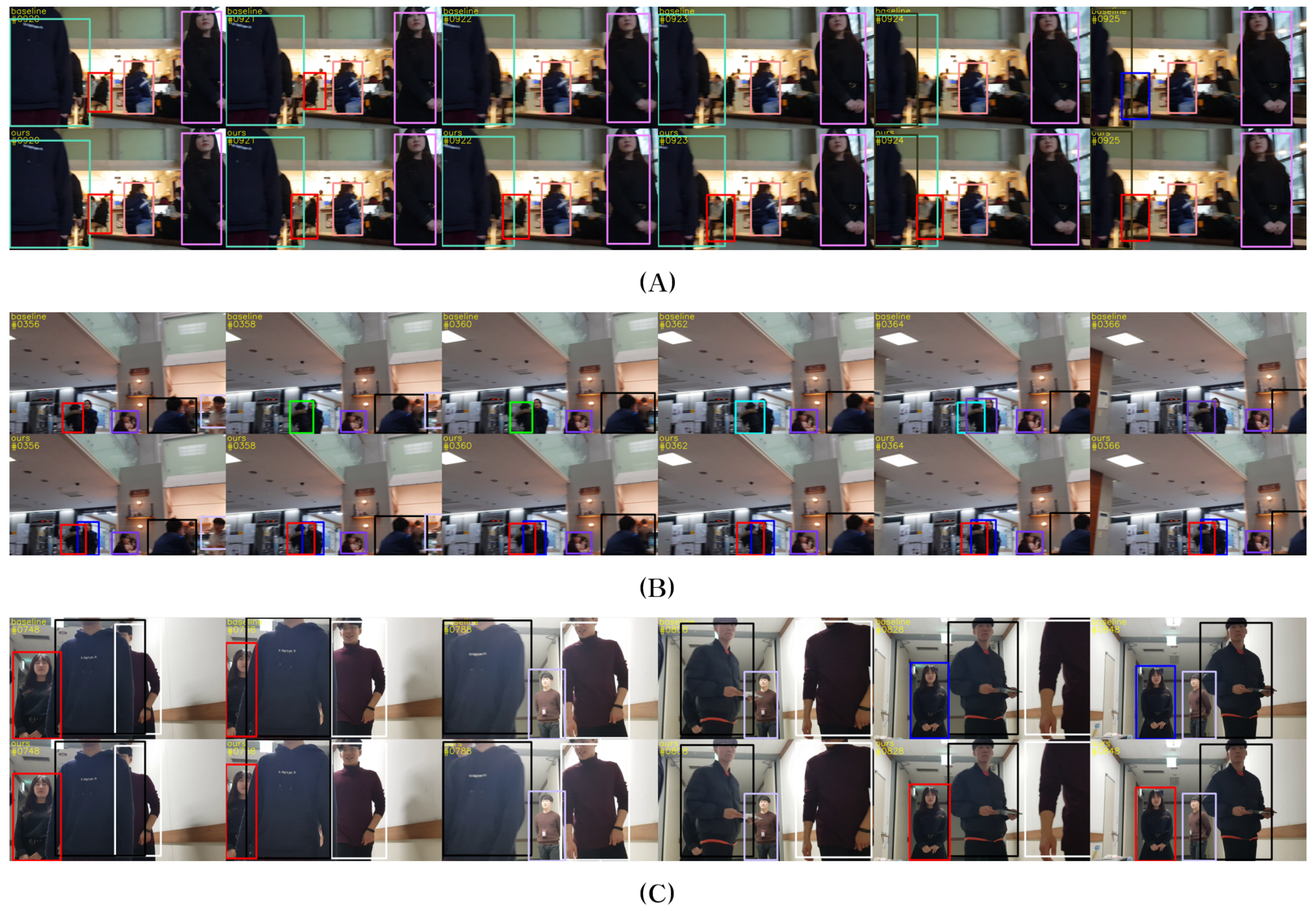

- (A) shows selected frames from the Patrol dataset. Camera rotation in this dataset causes severe motion blur, especially for distant objects. With the baseline, detection of the object marked in red fails and tracking is terminated. With our model, in contrast, the ID of the object marked in red is tracked consistently, because re-detection based on the restoration functionality keeps the detection success rate high even for blurry objects.

- (B) shows an example result from the Interaction-2 dataset, which contains many occlusions between objects that are relatively far apart. With the baseline, an association failure occurs for the object indicated by the red bounding box because of the noise generated by dynamic movement. In contrast, our method maintains the object's ID consistently, because restoring the noise with the site restoration method improves the accuracy of the appearance model.

- (C) shows examples from the Interaction-1 dataset, in which humans are observed at close range and many occlusions occur due to movement. In particular, when the objects indicated by the red and black bounding boxes are occluded, the baseline terminates tracking after the occlusion and assigns a new ID. This mistracking is caused by confusion in the appearance model due to mixed features. In our method, in contrast, the ID remains intact because using a face feature alleviates the mixing of features.

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| | Interaction-1 | Interaction-2 | Patrol |
|---|---|---|---|
| Frames | 2700 | 1242 | 2868 |
| Bounding boxes | 5814 | 6201 | 9913 |
| IDs | 38 | 68 | 133 |
| Explanation | destination guidance 1 | destination guidance 2 | patrol |
| FPS | 30 | 30 | 30 |
| Image size (pixels) | 1920 × 1080 | 1920 × 1080 | 1920 × 1080 |
| Length (s) | 90 | 41.4 | 95.6 |
| Crowd | low | middle | high |
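The length row of the benchmark table follows directly from the frame count and the frame rate. The short check below reproduces that arithmetic from the table values and also derives the mean number of bounding boxes per frame, which is not reported in the table and is computed here only as an illustration; the dictionary layout and function names are hypothetical.

```python
# Frame counts, bounding-box counts, and FPS copied from the benchmark table.
BENCHMARK = {
    "Interaction-1": {"frames": 2700, "boxes": 5814, "fps": 30},
    "Interaction-2": {"frames": 1242, "boxes": 6201, "fps": 30},
    "Patrol": {"frames": 2868, "boxes": 9913, "fps": 30},
}


def duration_seconds(frames: int, fps: int) -> float:
    """Sequence length in seconds, rounded to one decimal as in the table."""
    return round(frames / fps, 1)


def boxes_per_frame(boxes: int, frames: int) -> float:
    """Mean number of annotated bounding boxes per frame."""
    return boxes / frames


durations = {
    name: duration_seconds(seq["frames"], seq["fps"])
    for name, seq in BENCHMARK.items()
}
density = {
    name: boxes_per_frame(seq["boxes"], seq["frames"])
    for name, seq in BENCHMARK.items()
}

print(durations)  # {'Interaction-1': 90.0, 'Interaction-2': 41.4, 'Patrol': 95.6}
```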

| Baseline | Method | MOTA (↑) | IDF1 (↑) | MT (↑) | ML (↓) | ID sw (↓) |

|---|---|---|---|---|---|---|

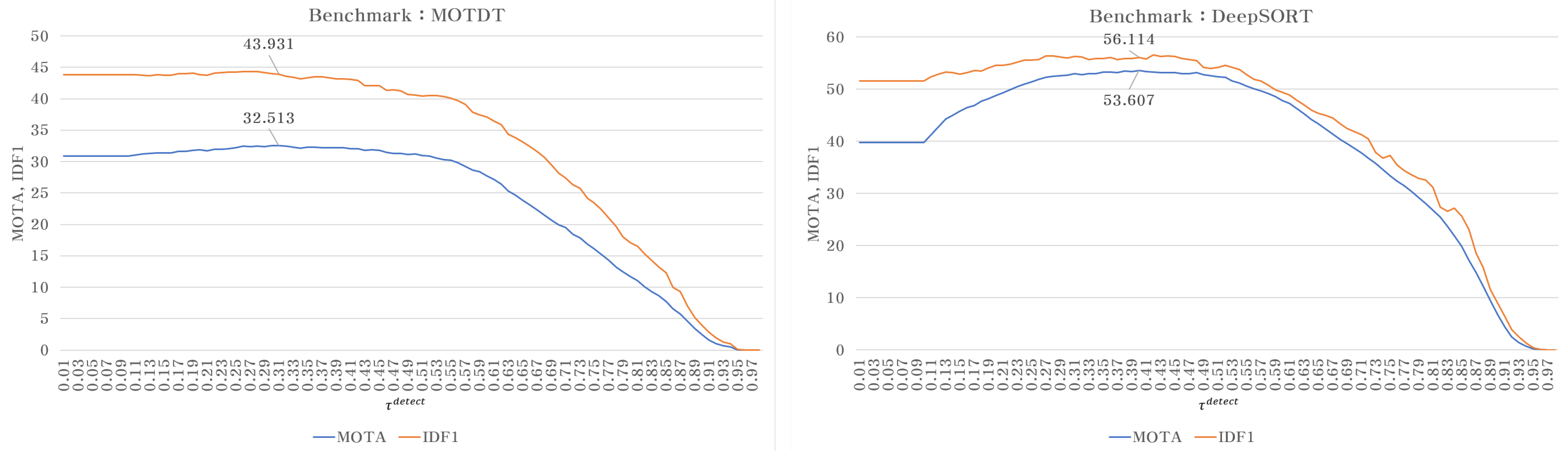

| MOTDT | - | 32.513 | 43.931 | 40 | 120 | 162 |

| re-detection | 32.641 | 44.017 | 41 | 118 | 172 | |

| site restoration | 32.818 | 44.263 | 41 | 120 | 163 | |

| face appearance | 32.832 | 44.226 | 42 | 120 | 159 | |

| re-detection site restoration | 32.959 | 44.355 | 42 | 118 | 173 | |

| re-detection face appearance | 32.886 | 44.492 | 43 | 118 | 163 | |

| site restoration face appearance | 33.118 | 44.419 | 43 | 120 | 160 | |

| re-detection site restoration face appearance | 33.164 | 44.531 | 44 | 118 | 167 | |

| DeepSORT | - | 53.607 | 56.114 | 83 | 69 | 132 |

| re-detection | 53.929 | 57.616 | 84 | 67 | 137 | |

| site restoration | 53.707 | 56.165 | 84 | 69 | 130 | |

| face appearance | 53.834 | 56.471 | 83 | 68 | 123 | |

| re-detection site restoration | 54.007 | 57.656 | 85 | 67 | 137 | |

| re-detection face appearance | 54.093 | 57.943 | 85 | 67 | 134 | |

| site restoration face appearance | 53.989 | 56.709 | 84 | 68 | 117 | |

| re-detection site restoration face appearance | 54.180 | 57.906 | 85 | 67 | 130 |

| Baseline | Test Data | Method | MOTA (↑) | IDF1 (↑) | MT (↑) | ML (↓) | ID sw (↓) |
|---|---|---|---|---|---|---|---|
| MOTDT | MOT16-05 | - | 36.037 | 47.269 | 9 | 51 | 53 |
| | | ours | 36.066 | 46.419 | 9 | 51 | 57 |
| | MOT16-10 | - | 13.492 | 22.463 | 3 | 40 | 46 |
| | | ours | 13.785 | 23.129 | 4 | 38 | 48 |
| | MOT16-11 | - | 39.481 | 42.308 | 5 | 39 | 31 |
| | | ours | 39.863 | 42.91 | 5 | 38 | 34 |
| | MOT16-13 | - | 3.7642 | 11.057 | 0 | 94 | 14 |
| | | ours | 3.8253 | 11.188 | 0 | 93 | 13 |
| DeepSORT | MOT16-05 | - | 38.09 | 45.215 | 13 | 53 | 41 |
| | | ours | 37.87 | 45.293 | 13 | 53 | 41 |
| | MOT16-10 | - | 7.9477 | 17.072 | 1 | 40 | 34 |
| | | ours | 8.1507 | 18.532 | 1 | 40 | 35 |
| | MOT16-11 | - | 34.794 | 32.311 | 7 | 39 | 67 |
| | | ours | 34.848 | 32.968 | 8 | 39 | 66 |
| | MOT16-13 | - | 2.5328 | 7.8663 | 0 | 97 | 8 |
| | | ours | 2.5764 | 8.3659 | 0 | 97 | 7 |
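For readers unfamiliar with the column headers in the result tables, the sketch below shows the standard definitions of two of them: MOTA from the CLEAR MOT metrics and IDF1 from the identity-based measures. The counts in the usage lines are made-up numbers chosen only to exercise the formulas; they are not taken from the experiments. The tables appear to report both metrics as percentages, which corresponds to multiplying these values by 100.

```python
def mota(false_negatives: int, false_positives: int, id_switches: int,
         ground_truth: int) -> float:
    """Multiple Object Tracking Accuracy: 1 - (FN + FP + IDSW) / GT.

    GT is the total number of ground-truth boxes over all frames.
    The value can be negative when errors outnumber ground-truth boxes.
    """
    if ground_truth <= 0:
        raise ValueError("ground_truth must be positive")
    return 1.0 - (false_negatives + false_positives + id_switches) / ground_truth


def idf1(id_true_positives: int, id_false_positives: int,
         id_false_negatives: int) -> float:
    """Identity F1 score: 2 * IDTP / (2 * IDTP + IDFP + IDFN)."""
    denominator = 2 * id_true_positives + id_false_positives + id_false_negatives
    if denominator == 0:
        raise ValueError("no identity matches or errors to score")
    return 2 * id_true_positives / denominator


# Hypothetical counts, for illustration only.
example_mota = mota(false_negatives=300, false_positives=100,
                    id_switches=20, ground_truth=1000)
example_idf1 = idf1(id_true_positives=400, id_false_positives=100,
                    id_false_negatives=100)
print(round(example_mota, 3), example_idf1)  # 0.58 0.8
```

Note that identity switches enter MOTA only as one error term among three, which is why a method can raise MOTA and IDF1 while the ID sw column moves in the other direction.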

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kim, J.S.; Chang, D.S.; Choi, Y.S. Enhancement of Multi-Target Tracking Performance via Image Restoration and Face Embedding in Dynamic Environments. Appl. Sci. 2021, 11, 649. https://doi.org/10.3390/app11020649
