1. Introduction

Advances in artificial intelligence technologies have profoundly shaped the landscape of education in recent years, with technological innovations such as virtual learning environments and adaptive learning systems drastically improving the quality of teaching and learning at all levels [1]. Among these, virtual reality (VR) merits special attention due to its unique cognitive and psychological benefits [2,3]. VR is a digital environment based on computer simulation and 3D modeling technology that closely simulates the physical environment in terms of visual, audio, tactile, and interactive experience [4]. According to the degree of immersion, the application of VR in instruction can be divided into desktop-based virtual reality (DVR) and immersive virtual reality (IVR). The literature has shown that IVR can boost learning engagement, knowledge transfer, empathy, and learner agency [5,6]. In recent years, with improved functionality and reduced costs, IVR technology has gradually been accepted in the field of education and applied to various disciplines such as the sciences [7], humanities [8], and second language acquisition [5]. IVR can also simulate the authenticity and complexity of dangerous situations while ensuring learners’ physical safety. It is thus also widely used in safety education and training programs, such as fire escape [9], mine safety [10], and pedestrian safety [11].

Although IVR has more advanced technical features such as stereoscopic display, depth of field (DoF) modeling and tracking, high-resolution 3D rendering, and natural interaction, its actual effect in educational practice has been disappointing. The substantial increase in immersion and interactivity has not yielded superior learning performance. For example, Wu et al. (2020) conducted a meta-analysis of 35 experiments from 2013 to 2019 and found that the overall learning effect of IVR (Hedges’ g = 0.24) was smaller than the overall effect of DVR (g = 0.41–0.51) [12]; Luo et al. (2021) reached a similar conclusion through a meta-analysis of 149 experimental studies [2]. One possible reason for this phenomenon is that IVR completely isolates learners from physical reality, which causes a lack of interaction with teachers and peers during the learning process and is thus not conducive to the deployment of teaching strategies. Compared with the technical affordances of IVR, the selection and application of proper teaching strategies play a more critical role in improving the effectiveness of IVR-based instruction [12].

Debriefing is a simple and easy-to-use teaching strategy that can potentially improve the learning effect of IVR. This strategy guides learners to recall, analyze, and evaluate their own or others’ behavioral decisions in virtual scenarios through visualized and shared cognitive processes, thereby promoting reflective learning and knowledge construction in experiential learning [13]. Debriefing is widely practiced in military training and medical education, and has achieved positive learning results. For example, Moldjor et al. (2015) showed that listening to experienced military personnel’s reflective reports before conducting military training and rescue operations could increase trust and understanding among team members [14]; Brown et al. (2018) revealed that learners who received one-on-one debriefing after simulation training obtained better results in subsequent medical knowledge tests [15]. In recent years, researchers have applied debriefing strategies in various educational contexts, including teacher training [16], transnational cultural education [17], and psychological therapy [18], and have reported significantly improved learning outcomes. However, most debriefing strategies described in the literature were implemented in traditional classrooms [19] with non-immersive simulation interventions [15,16], and with adults such as college students and corporate workers as target learners [16]. There is a lack of rigorous empirical evidence concerning whether the debriefing strategy is also applicable to the IVR learning environment, whether it can improve the learning performance of younger learners, and to what extent.

In summary, informed by experiential learning theory, IVR-based instruction has the potential to improve students’ learning performance and experience. However, the current literature on VR-based instruction suffers from three limitations. First, many studies focused on the technical features of the IVR learning environment and ignored the implementation and application of teaching strategies outside IVR, such as debriefing. Second, although the effectiveness of debriefing strategies has been verified in the traditional classroom, it is still unclear whether the relevant conclusions are applicable to the IVR learning environment, as the moderating effect of debriefing on IVR learning performance lacks rigorous and systematic investigation. Third, the target population of debriefing strategies was usually adult learners; children as potential IVR learners have not been sufficiently investigated. In view of these limitations, we designed and implemented a debriefing strategy for IVR-based instruction as part of a child pedestrian safety education project and investigated the moderating effect of this teaching strategy on IVR learning outcomes in terms of knowledge acquisition and behavioral performance.

3. Methods

This study utilized a randomized experimental design to investigate the moderating effect of debriefing on IVR learning outcomes. The presence of the debriefing activity is the grouping variable that defined the treatment and control group. The dependent variables are the two types of IVR learning outcomes: knowledge acquisition and behavioral performance. The specifics of the research methods are elaborated on below.

3.1. Participants

To ensure sufficient statistical power, we calculated the sample size required for a mixed repeated-measures ANOVA to detect a large effect (partial eta squared, ηp² > 0.14). The results indicated that the sample size of this experiment (N = 77) was well above the minimum required sample size (N = 24). We recruited all of the second- and third-grade students of one school who agreed to participate in the experiment. These students came from towns and suburbs and had diverse family backgrounds and academic abilities. Before the start of the experiment, we obtained written informed consent from the parents of all the students. In addition, all of the students reported that their vision was normal or corrected to normal with glasses, and stated that they had little or no experience with IVR. Students were randomly divided into two groups: the treatment group (n = 39) and the control group (n = 38). There were 24 male students in the treatment group and 21 male students in the control group. The students were also randomly assigned to five different IVR learning sites. Each site was operated by four research assistants who were responsible for informing students about how to walk in the virtual environment (Coordinator), ensuring the safety of the students during the IVR experience (Safety Guider), videotaping the whole process (Video Manager), and guiding students in the reflection (Debriefer). To minimize the potential influence of individual facilitators, all research assistants received week-long training on standardized facilitation protocols.
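The allocation described above can be illustrated with a short sketch. The text does not report how the randomization was carried out, so the procedure below (a seeded shuffle for group membership and an independent round-robin deal across sites) is an assumption made for illustration only; the function name `assign_participants`, the seed, and the student identifiers are hypothetical.

```python
import random
from collections import Counter


def assign_participants(student_ids, n_treatment, n_sites, seed=2021):
    """Randomly allocate students to a group and to a learning site.

    Returns a dict mapping student id -> (group, site).
    This is an illustrative procedure, not the one used in the study.
    """
    rng = random.Random(seed)  # fixed seed makes the allocation reproducible
    student_ids = list(student_ids)

    # Group allocation: shuffle, then the first n_treatment students
    # form the treatment group and the rest form the control group.
    group_order = list(student_ids)
    rng.shuffle(group_order)
    group_of = {
        sid: ("treatment" if rank < n_treatment else "control")
        for rank, sid in enumerate(group_order)
    }

    # Site allocation: an independent shuffle dealt round-robin across sites,
    # so site sizes differ by at most one student.
    site_order = list(student_ids)
    rng.shuffle(site_order)
    site_of = {sid: rank % n_sites + 1 for rank, sid in enumerate(site_order)}

    return {sid: (group_of[sid], site_of[sid]) for sid in student_ids}


# 77 hypothetical student ids, 39 in the treatment group, five sites.
allocation = assign_participants(range(1, 78), n_treatment=39, n_sites=5)
group_sizes = Counter(group for group, _ in allocation.values())
site_sizes = Counter(site for _, site in allocation.values())
print(group_sizes["treatment"], group_sizes["control"])  # 39 38
```

Fixing the seed is a design choice that lets the allocation be audited later; a different seed gives a different but equally valid allocation.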

3.2. IVR Learning Environment

We used the Unity 3D game engine and the Oculus Rift development kit to create the IVR learning environment investigated in this study. This environment was a virtual road traffic scene constructed by simulating the road traffic in the city center. The virtual “avatar” and the vehicles behaved as in the real environment so that the learner could be deeply immersed in the virtual environment. For a detailed introduction to the IVR program, please refer to the research team’s prior paper [11].

In the IVR learning environment, learners wore HMDs and held sensor controllers to allow free exploration within the tracking space. As shown in Figure 1, participants needed to complete three challenges at the three intersections designed in the IVR program: (1) when an avatar friend on the opposite side beckons the participant to join him, the participant needs to choose whether to cross the street immediately or wait for the next green light to cross at the pedestrian crossing (C1); (2) the participant needs to correctly interpret the meaning of a flashing green pedestrian light and decide whether to cross a wide street (C2); (3) when the pedestrian light is green but a backing school bus is ready to drive away, the participant needs to choose whether to continue crossing the street or to wait for the bus to leave (C3). To successfully accomplish the three pedestrian challenges, participants need to demonstrate five correct pedestrian behaviors: B1: do not dash into the street; B2: cross the street when the pedestrian light is green; B3: when the pedestrian light is blinking green, do not start crossing if still on the sidewalk, or finish crossing quickly if already in the middle of the crosswalk; B4: examine the incoming traffic when crossing the street; B5: be alert to the backing vehicle and give it priority over the traffic light for safety.

3.3. Debriefing Strategy

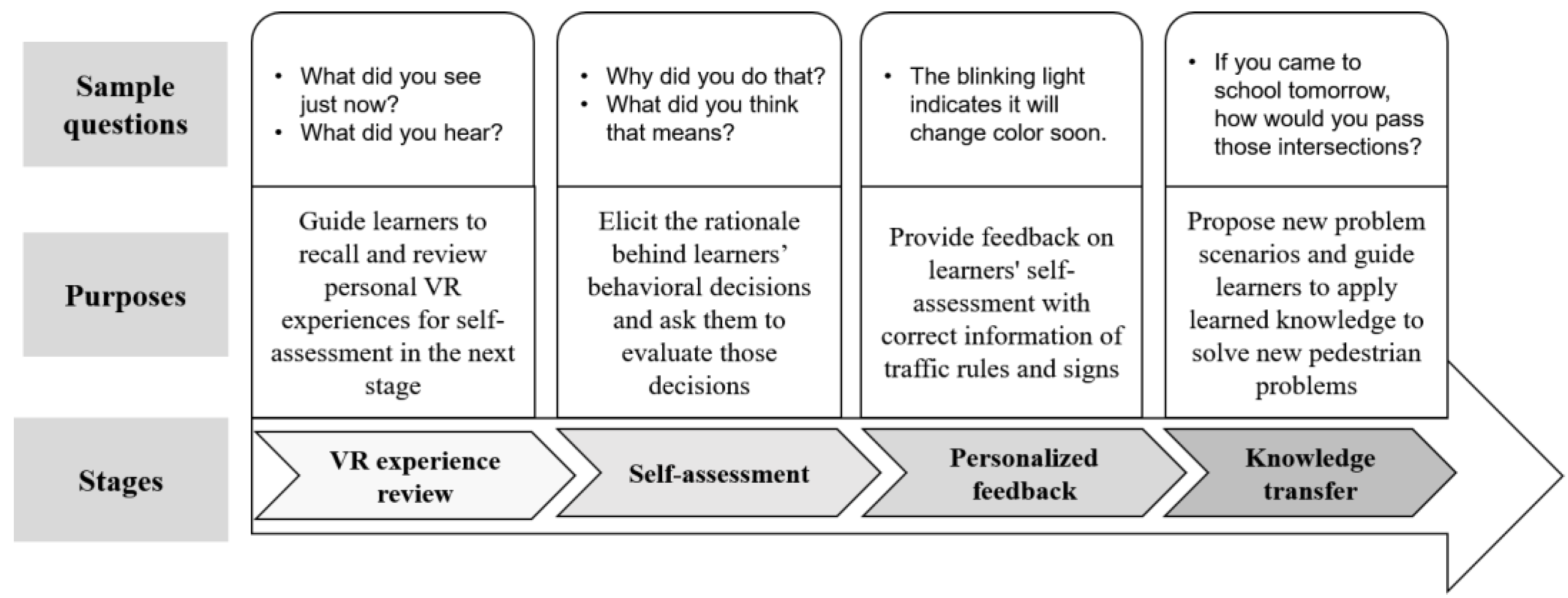

This research designed an individual debriefing strategy based on an IVR learning environment. As the students completed the three crossroad challenges in the IVR environment, the instructor observed and promptly recorded each participant’s reactions and decisions. Then, according to those decisions, the instructor gave each student one-on-one guidance after the task was finished. Based on the three stages of debriefing proposed by Lederman (1992), this study proposed an individualized debriefing strategy that consisted of four stages: VR experience review, guided self-assessment, individualized feedback, and knowledge transfer [24].

As shown in Figure 2, at the first stage, the instructor asked students what they heard, saw, and felt during the IVR learning process, which helped students to systematically recall the experiences of going through the three pedestrian challenges. At the second stage, the instructor uncovered students’ conception of traffic rules based on their performance in the IVR environment, and helped them to carry out self-examination and reflection. At the third stage, the instructor provided individualized feedback regarding the correct traffic knowledge relevant to students’ risky behaviors. At the fourth stage, the instructor proposed one or two hypothetical situations and guided students to apply the learned traffic knowledge to solve novel pedestrian problems within the situations.

3.4. Experiment Procedure

The study employed a two-factor mixed experimental design to examine the impact of IVR on children’s learning performance and the moderating effect of the proposed debriefing strategy. The within-subject factor was time (before and after the IVR-based instruction), and the between-subject factor was the intervention (debriefing versus no debriefing). The experimental process consisted of four phases and six activities, as shown in Figure 3, and lasted about 30 min.

The first phase included a knowledge pretest and a behavior pre-assessment. First, all participants were required to complete a paper-based knowledge test; afterwards, they had their first IVR learning experience by completing the three pedestrian challenges. The IVR learning experience also served as a behavior pre-assessment as all participants’ pedestrian behaviors were video recorded for future analysis. Proceeding into the second phase, the debriefing session received by the treatment group was considered as an instructional intervention, which lasted for about 5 min. The control group did not participate in the debriefing session and took a 5-min break during this period.

The third phase was identical to the first phase, except that the order of the activities was reversed. All participants had a second IVR learning experience, which also served as the behavior post-assessment, and then proceeded directly to the knowledge posttest, which was the same as the knowledge pretest. The fourth phase was the interview. The researcher asked the participants about the IVR learning experience and what they had learned in the first three phases, using questions such as “How did your learning go today?” or “What will you do next time when you cross the street?” to help them summarize promptly and to boost knowledge transfer. To ensure all participants obtained the same quality of pedestrian safety education, the control group also participated in the identical debriefing session after the experiment.

The entire experiment process was captured with video cameras, including participants’ interactions with the IVR program and their performance in the debriefing sessions (if applicable). We also used the computer screen recording function to record the first-person view of participants’ behaviors within the virtual world created by the IVR.

3.5. Data Collection and Analysis

Two main types of data were collected in this study: the pedestrian knowledge test scores and the pedestrian behaviors within the IVR program. The two types of data were used to measure participants’ knowledge acquisition and behavioral performance, respectively. An independent-samples t-test was conducted to examine the differences in the posttest scores, and a factorial repeated-measures ANOVA was conducted to examine the moderating effect of debriefing. IBM SPSS 21 was used for the statistical analysis.
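As a rough illustration of the moderating-effect test, the sketch below relies on a standard property of a 2 (group) × 2 (time) mixed design: the group × time interaction F equals the square of the independent-samples t statistic computed on gain scores (posttest minus pretest). It is not the SPSS procedure used in the study, the scores are made-up toy numbers rather than study data, and the helper names `pooled_t` and `gain_scores` are hypothetical. Only the test statistic is computed; obtaining a p-value would require a t distribution from a statistics package.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t(sample_a, sample_b):
    """Student's independent-samples t statistic (equal variances assumed) and its df."""
    n_a, n_b = len(sample_a), len(sample_b)
    df = n_a + n_b - 2
    # Pooled variance weights each sample variance by its degrees of freedom.
    pooled_var = ((n_a - 1) * variance(sample_a) + (n_b - 1) * variance(sample_b)) / df
    standard_error = sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean(sample_a) - mean(sample_b)) / standard_error, df


def gain_scores(pre, post):
    """Per-participant improvement from pretest to posttest."""
    return [after - before for before, after in zip(pre, post)]


# Toy knowledge-test scores (0-10) for four learners per group; NOT study data.
treatment_gain = gain_scores(pre=[4, 5, 6, 5], post=[8, 9, 9, 7])
control_gain = gain_scores(pre=[5, 4, 6, 5], post=[6, 5, 6, 7])

t_stat, df = pooled_t(treatment_gain, control_gain)
interaction_f = t_stat ** 2  # equals the group x time interaction F with (1, df) degrees of freedom
print(f"t({df}) = {t_stat:.2f}, interaction F(1, {df}) = {interaction_f:.2f}")
```

A positive t here means the treatment group improved more than the control group, which is what a moderating effect of debriefing would look like in this design.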

The instrument for collecting participants’ knowledge acquisition was a paper-based knowledge test developed by the researchers (the knowledge test is accessible at https://www.doi.org/10.17632/tffr34c5fr.1, accessed on 2 November 2021). There were 10 single-choice questions in the knowledge test divided into two dimensions: interpretation (4 questions) and decision making (6 questions). Interpretation questions tested students’ mastery of traffic rules, such as “What does zebra crossing/crosswalk mean?” and “When a pedestrian traffic light is green and flashing, what does it mean?”. The decision-making questions were designed to measure students’ ability to make correct pedestrian choices in the face of complex traffic situations, such as “Your soccer ball is rolling across the street, and there is a crosswalk 100 m in front of you. How should you get your soccer ball?” The full mark for the knowledge test was 10, with each item counting for one point.
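The scoring scheme for the knowledge test (one point per item, 4 interpretation items and 6 decision-making items) can be sketched as follows. The answer key, the response letters, and the assumption that items 1 to 4 are the interpretation items are all hypothetical placeholders; the actual item order and key are in the linked instrument.

```python
def score_knowledge_test(responses, answer_key, interpretation_items):
    """Score a single-choice test: one point per correct item, with two subscores."""
    if len(responses) != len(answer_key):
        raise ValueError("responses and answer_key must have the same length")

    scores = {"interpretation": 0, "decision_making": 0}
    for item_number, (given, correct) in enumerate(zip(responses, answer_key), start=1):
        if given == correct:
            dimension = (
                "interpretation" if item_number in interpretation_items else "decision_making"
            )
            scores[dimension] += 1

    scores["total"] = scores["interpretation"] + scores["decision_making"]
    return scores


# Hypothetical key and responses for the 10 items (not the real instrument).
ANSWER_KEY = list("ABCDABCDAB")
INTERPRETATION_ITEMS = {1, 2, 3, 4}  # assumed positions of the 4 interpretation items

student_responses = list("ABCAABCDBB")  # items 4 and 9 answered incorrectly
result = score_knowledge_test(student_responses, ANSWER_KEY, INTERPRETATION_ITEMS)
print(result)  # {'interpretation': 3, 'decision_making': 5, 'total': 8}
```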

The participants’ pedestrian behavioral data were captured by both the onsite video recording and the first-person point of view screen capture. The video-recorded behavioral performances were analyzed and rated by two researchers using an observation rubric (see Appendix A) that dictated the following behavioral scoring rules: for a particular behavior, every time a participant made a decision on that behavior, correct and incorrect decisions were marked as 1 and 0, respectively. Accordingly, the performance score for that behavior was measured by the proportion of correct decisions. For example, if a participant checked the traffic before crossing the street in one out of three street-crossing instances, her final performance on this behavior (checking traffic) was calculated as 0.33 (1/3). Further, a participant’s overall pedestrian performance was scored by dividing the total points she gained by the total number of behaviors observed. Consequently, the scores for the overall pedestrian performance as well as for individual pedestrian behaviors ranged from 0 (0% correctness, failed completely) to 1 (100% correctness, flawless performance).
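The scoring rules above can be made concrete with a minimal sketch. The per-behavior score follows the text directly. For the overall score, the code takes one reading of the rule, namely all correct decisions pooled and divided by all decisions observed; averaging the per-behavior scores instead would be another possible reading. The function names and the coded decisions are hypothetical and are not taken from Appendix A.

```python
def behavior_score(decisions):
    """Proportion of correct decisions (1 = correct, 0 = incorrect) for one behavior.

    Returns None when the behavior was never observed, so it is not scored as 0.
    """
    if not decisions:
        return None
    return sum(decisions) / len(decisions)


def overall_score(observations):
    """Pooled proportion: total correct decisions over total decisions observed."""
    total_points = sum(sum(decisions) for decisions in observations.values())
    total_observed = sum(len(decisions) for decisions in observations.values())
    if total_observed == 0:
        raise ValueError("no behaviors were observed")
    return total_points / total_observed


# Hypothetical coded decisions for one participant, keyed by behavior (B1-B5).
observations = {
    "B1": [1, 1, 1],  # never dashed into the street
    "B2": [1, 0, 1],  # crossed on green in two of three crossings
    "B3": [1],        # handled the blinking green light correctly
    "B4": [1, 0, 0],  # checked traffic once out of three crossings
    "B5": [0],        # did not yield to the backing bus
}

per_behavior = {name: behavior_score(marks) for name, marks in observations.items()}
overall = overall_score(observations)
print(round(per_behavior["B4"], 2))  # 0.33, matching the 1/3 example in the text
print(round(overall, 2))             # 0.64 (7 correct decisions out of 11)
```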

5. Discussion and Conclusions

Based on an IVR pedestrian safety education program, this study employed a randomized controlled trial to investigate the effects of a debriefing strategy on promoting knowledge acquisition and behavior performance in the IVR environment. The statistical results support the overall effectiveness of IVR as an educational technology and reveal the moderating effects of debriefing as an essential teaching strategy for IVR-based instruction. In sum, there are four main findings of the present study, which are discussed below.

First, the within-subjects effects confirm that the IVR learning environment can effectively improve children’s pedestrian knowledge and performance. This finding is consistent with the previous literature [31,32,33] that supports the effectiveness of IVR in promoting experiential learning through a technology-mediated presence. The unique affordances of IVR such as immersion, interaction, and safety are known to create high-fidelity simulation that is authentic, hazard-proof, and cost-effective [9,10,11].

Second, the results support the essential role of debriefing for IVR-based instruction due to its moderating effect on learning outcomes. A possible reason is that the debriefing activity provided students with an opportunity to assume an active role in both cognitive and meta-cognitive processes, such as recalling, analyzing, and self-evaluating their own IVR experiences. This finding corroborates the existing literature on debriefing and experiential learning: a structured debriefing can assist learners to integrate declarative knowledge and procedural knowledge [34], improve critical thinking skills [35], and promote knowledge construction and transfer [36], thereby enabling learners to assimilate the concrete learning experiences into their cognitive structure [24].

Third, although debriefing had a positive effect on knowledge acquisition and behavior improvement in the IVR environment, the moderating effect was found to be significant on behavior improvement only. This result is consistent with the findings of Schwebel et al. (2014), which revealed no obvious correlation between knowledge acquisition and behavior performance in IVR-based instruction, with behavior improvement identified as the main learning outcome [26]. A plausible explanation is that the IVR environment is often used for practice-oriented pedagogy where the behavior improvement is likely the result of trial-and-error and reinforcement rather than rational cognitive decisions. Unfortunately, the debriefing strategy in this study cannot effectively address this shortcoming of IVR-based instruction: the diagnostic analysis of pedestrian performance in the debriefing session might not reveal all the misconceptions of traffic rules, as some may be hidden behind correct behaviors. As Ormrod (2016) argued, learners’ behavioral performance and cognitive representations are not completely mapped during the learning process [25].

Lastly, we found that the magnitude of the debriefing effect on IVR learning outcomes varied with different types of knowledge content and performance tasks. This finding indicates the complexity of simulated learning, which is supported by the debriefing literature. For example, the systematic review of Lee et al. (2020) showed that structured debriefing strategies in general lead to improved clinical knowledge acquisition, yet the effect of debriefing differs between clinical reasoning and clinical judgement [37]. Similarly, Chronister et al. (2012) found that medical students’ resuscitation skills showed no significant improvement after debriefing because performance varied widely across different resuscitation tasks such as ventricular tachycardia recognition, rescue breaths, cardiopulmonary resuscitation, and defibrillation shock delivery [38]. However, the rationale behind the variance in debriefing outcomes remains largely unknown, which calls for future research to systematically investigate the relationship between debriefing strategies and learning outcomes.

6. Implications for Practice

Three implications for applying IVR in educational practice can be drawn from the research findings. First and foremost, the unique affordances of IVR support risk-free trial-and-error practice in virtual scenarios involving safety hazards, which holds great potential for reforming and innovating safety education. Moreover, instructor-guided debriefing is a convenient yet highly effective teaching strategy that can greatly improve the effectiveness of IVR learning. Finally, when designing debriefing strategies for IVR-based instruction, the varying impact of debriefing on knowledge and behavior learning outcomes should be carefully considered. Accordingly, we put forward the following suggestions for designing and implementing IVR-based instruction.

First, the IVR experience should be considered as only one phase of experiential learning rather than the whole learning process. While acknowledging the affordances of IVR, the learning activities outside the virtual environment should not be ignored, and the facilitating role of the teacher should be further emphasized. The IVR environment provides students with personalized and contextual learning experiences, while the face-to-face debriefing can better support real-time communication and natural interaction between teachers and students, which is conducive to developing meaningful teaching and learning activities such as evaluation, diagnosis, feedback, and reflection. The two complement each other well in IVR-based instruction.

Second, the general process of “experience–debriefing–experience” is recommended for IVR-based instruction. The initial experience session allows free exploration in the virtual world when completing the learning tasks. This instructional session engages students in situational recognition and embodied experiences, and serves as an assessment of students’ initial knowledge level and behavioral performance. In the debriefing session, an instructor can provide students with personalized facilitation based on their performance in the previous session. This session enables abstract conceptualization of concrete experiences, diagnosis of problematic behaviors, construction of meaning, and knowledge transfer. Finally, the second experience in the IVR environment not only consolidates reflective learning but also serves as a post-intervention test for simulation and debriefing experiences. The effectiveness of such an IVR instructional process has been verified in this study.

Third, in the process of debriefing, attention should still be paid to the teaching of the knowledge content. Systematic instruction and personalized guidance should be integrated into the debriefing session to promote knowledge acquisition in particular. This study found that the effect of debriefing on promoting knowledge learning was less satisfactory. Therefore, a focused content instruction activity should be introduced to the debriefing session: Instructors can use diverse media resources such as mind maps and micro-lectures to give focused explanations of key knowledge points, evaluate the mastery of knowledge, and ensure that students’ improved behaviors are accompanied by the improvement of their knowledge structure.