Psychoacoustic Principle, Methods, and Problems with Perceived Distance Control in Spatial Audio

Abstract

1. Introduction

2. Psychoacoustic Principles of Auditory Distance Perception

2.1. Cues for Auditory Distance Perception

2.2. Compared with Directional Localization Cues

3. General Consideration of Perceived Distance Control in Spatial Audio Reproduction

4. Perceived Distance Control in Spatial Audio with Sound-Field-Based Methods

5. Perceived Distance Control in Sound Field Approximation and Psychoacoustic-Based Methods

6. Perceived Distance Control in Binaural-Based Methods

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Xie, B.S. Spatial Sound—History, principle, progress and challenge. Chin. J. Electron. 2020, 29, 397–416. [Google Scholar] [CrossRef]

- Blauert, J. Spatial Hearing: The Psychophysics of Human Sound Localization; MIT Press: Cambridge, MA, USA, 1997. [Google Scholar]

- Anderson, P.W.; Zahorik, P. Auditory/visual distance estimation: Accuracy and variability. Front. Psychol. 2014, 5, 1097. [Google Scholar] [CrossRef]

- Zahorik, P. Auditory display of sound source distance. In Proceedings of the 2002 International Conference on Auditory Display, Kyoto, Japan, 2–5 July 2002; pp. 326–332. [Google Scholar]

- Zahorik, P.; Brungart, D.S.; Bronkhorst, A.W. Auditory distance perception in humans: A summary of past and present research. Acta Acust. Acust. 2005, 91, 409–420. [Google Scholar]

- Kolarik, A.J.; Moore, B.C.J.; Zahorik, P.; Cirstea, S.; Pardhan, S. Auditory distance perception in humans: A review of cues, development, neuronal bases, and effects of sensory loss. Atten. Percept. Psychophys. 2016, 78, 373–395. [Google Scholar] [CrossRef] [PubMed]

- Xie, B.S. Head-Related Transfer Function and Virtual Auditory Display, 2nd ed.; J Ross Publishing: New York, NY, USA, 2013. [Google Scholar]

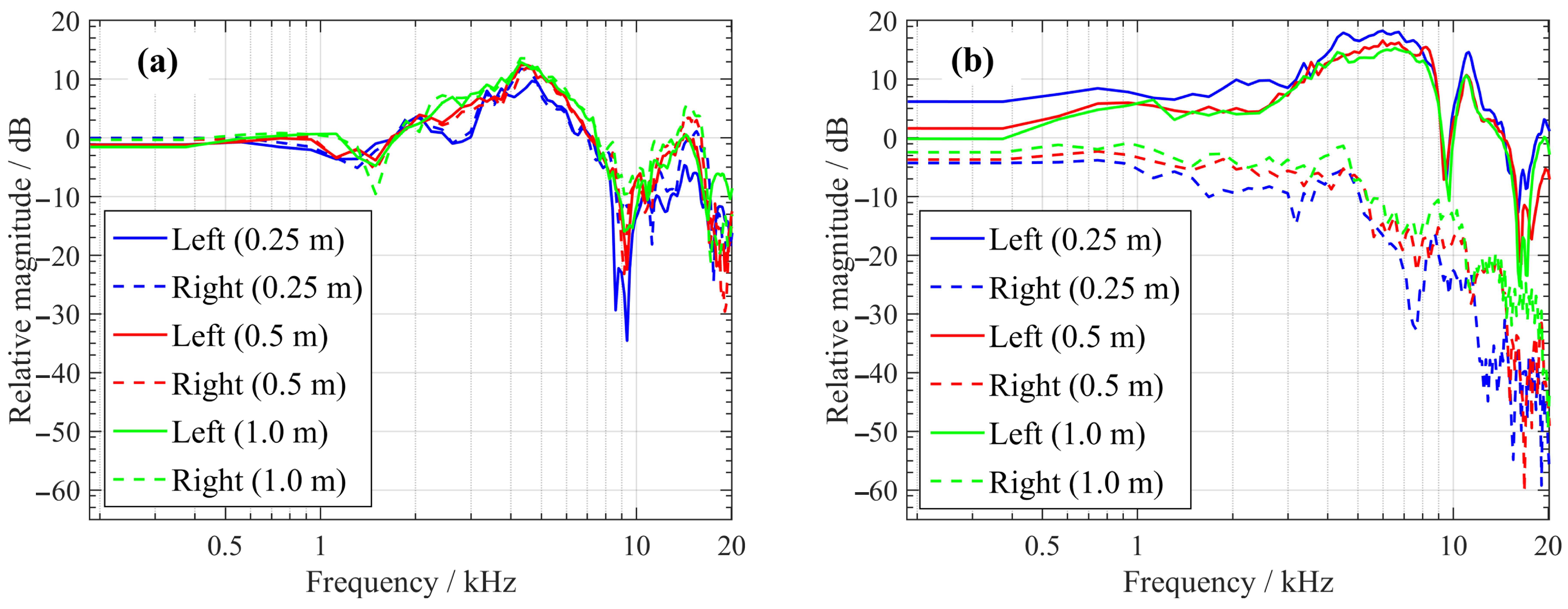

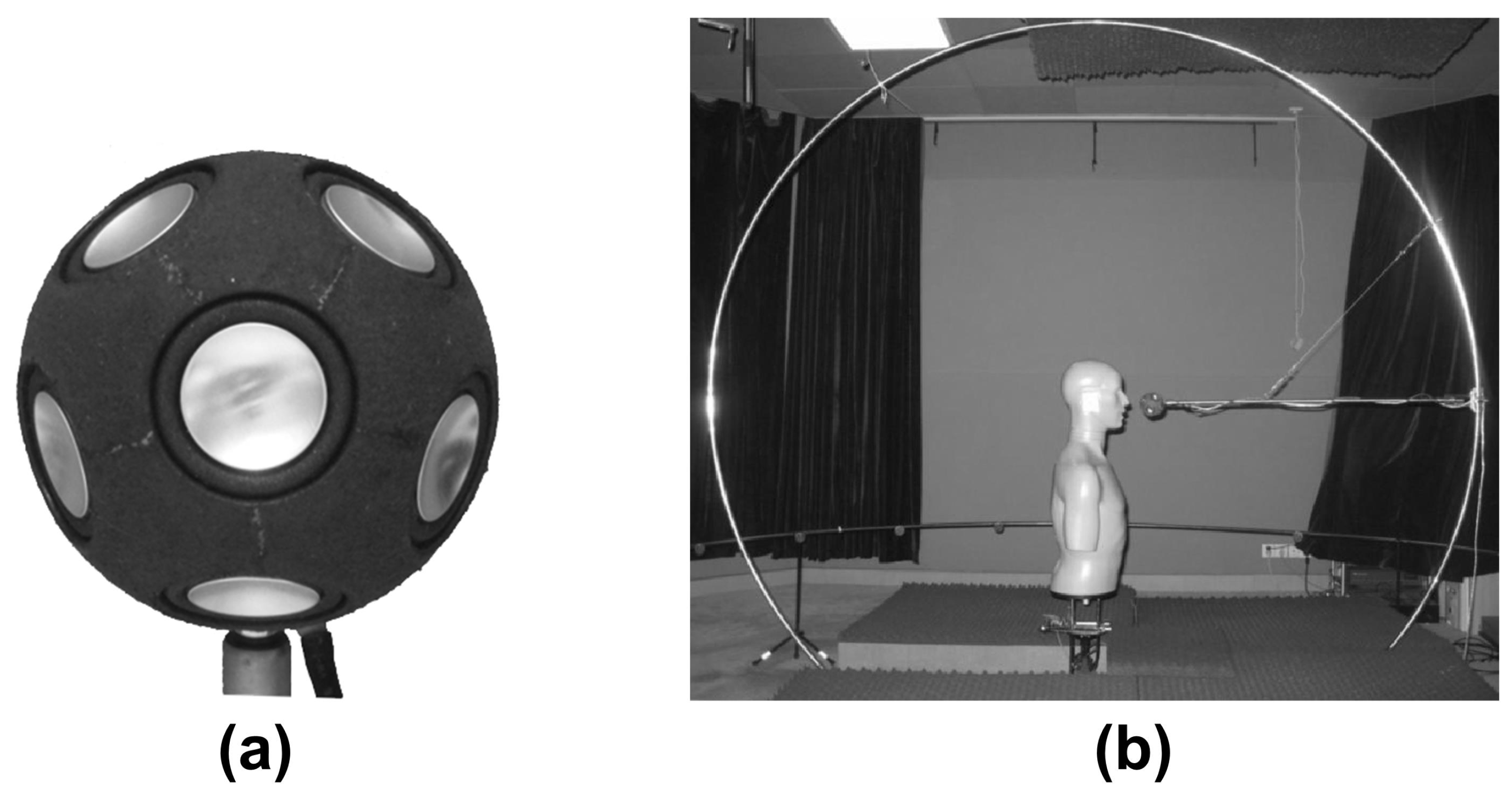

- Yu, G.Z.; Wu, R.X.; Liu, Y.; Xie, B. Near-field head-related transfer-function measurement and database of human subjects. J. Acoust. Soc. Am. 2018, 143, EL194–EL198. [Google Scholar] [CrossRef]

- Brungart, D.S.; Rabinowitz, W.M. Auditory localization of nearby sources I, head-related transfer functions. J. Acoust. Soc. Am. 1999, 106, 1465–1479. [Google Scholar] [CrossRef]

- Brungart, D.S.; Durlach, N.I.; Rabinowitz, W.M. Auditory localization of nearby sources II, localization of a broadband source. J. Acoust. Soc. Am. 1999, 106, 1956–1968. [Google Scholar] [CrossRef]

- Brungart, D.S. Auditory localization of nearby sources III, Stimulus effects. J. Acoust. Soc. Am. 1999, 106, 3589–3602. [Google Scholar] [CrossRef]

- Brungart, D.S. Auditory parallax effects in the HRTF for nearby sources. In Proceedings of the IEEE Workshop on Applications of Signal Processing to Audio and Acoustics, New Paltz, NY, USA, 20–20 October 1999; pp. 171–174. [Google Scholar]

- Suzuki, Y.; Kim, H.Y.; Takane, S.; Sone, T. A modeling of distance perception based on auditory parallax model. J. Acoust. Soc. Am. 1998, 103, 3083. [Google Scholar] [CrossRef]

- Kuttruff, H. Room Acoustics, 5th ed.; Spon Press: Abingdon, UK, 2009. [Google Scholar]

- Bronkhorst, A.W.; Houtgast, T. Auditory distance perception in rooms. Nature 1999, 397, 517–520. [Google Scholar] [CrossRef] [PubMed]

- Prud’homme, L.; Lavandier, M. Do we need two ear to perceive the distance of a virtual frontal sound source? J. Acoust. Soc. Am. 2020, 148, 1614–1623. [Google Scholar] [CrossRef] [PubMed]

- Kopčo, N.; Shinn-Cunningham, B.G. Effect of stimulus spectrum on distance perception for nearby sources. J. Acoust. Soc. Am. 2011, 130, 1530–1541. [Google Scholar] [CrossRef] [PubMed]

- Nielsen, S.H. Auditory distance perception in different rooms. J. Audio Eng. Soc. 1993, 41, 755–770. [Google Scholar]

- Ronsse, L.M.; Wang, L.M. Effects of room size and reverberation, receiver location, and source rotation on acoustical metrics related to source localization. Acta Acust. Acust. 2012, 98, 768–775. [Google Scholar] [CrossRef]

- Jiang, J.L.; Xie, B.S.; Mai, H.M.; Liu, L.L.; Yi, K.L.; Zhang, C.Y. The role of dynamic cue in auditory vertical localization. Appl. Acoust. 2019, 146, 398–408. [Google Scholar] [CrossRef]

- Wightman, F.L.; Kistler, D.J. The dominant role of low-frequency interaural time difference in sound localization. J. Acoust. Soc. Am. 1992, 91, 1648–1661. [Google Scholar] [CrossRef]

- Pöntynen, H.; Santala, O.; Pulkki, V. Conflicting dynamic and spectral directional cues form separate auditory images. In Proceedings of the 140th Audio Engineering Society Convention, Paris, France, 4–7 June 2016; p. 9582. [Google Scholar]

- Macpherson, E.A. Head Motion, Spectral Cues, and Wallach’s “Principle of Least Displacement” in Sound Localization, in Principles and Applications of Spatial Hearing; World Scientific Publishing Co. Pte. Ltd.: Singapore, 2011; pp. 103–120. [Google Scholar]

- Brimijoin, W.O.; Akeroyd, M.A. The role of head movements and signal spectrum in an auditory front/back illusion. i-Perception 2012, 3, 179–182. [Google Scholar] [CrossRef] [PubMed]

- Leakey, D.M. Some measurements on the effects of interchannel intensity and time differences in two channel sound systems. J. Acoust. Soc. Am. 1959, 31, 977–986. [Google Scholar] [CrossRef]

- Makita, Y. On the directional localization of sound in the stereophonic sound field. EBU Rev. 1962, 73, 102–108. [Google Scholar]

- Gerzon, M.A. General metatheory of auditory localisation. In Proceedings of the 92nd Audio Engineering Society Convention, Vienna, Austria, 24–27 March 1992; p. 3306. [Google Scholar]

- Xie, B.S.; Mai, H.M.; Rao, D.; Zhong, X.L. Analysis of and experiments on vertical summing localization of multichannel sound reproduction with amplitude panning. J. Audio Eng. Soc. 2019, 67, 382–399. [Google Scholar] [CrossRef]

- Lee, H.; Rumsey, F. Level and time panning of phantom images for musical sources. J. Audio. Eng. Soc. 2013, 61, 978–998. [Google Scholar]

- Litovsky, R.Y.; Colburn, H.S.; Yost, W.A.; Guzman, S.J. The precedence effect. J. Acoust. Soc. Am. 1999, 106, 1633–1654. [Google Scholar] [CrossRef]

- Daniel, J.; Nicol, R.; Moreau, S. Further investigations of high-order Ambisonics and wavefield synthesis for holophonic sound imaging. In Proceedings of the 114th Audio Engineering Society Convention, Amsterdam, The Netherlands, 22–25 March 2003; p. 5788. [Google Scholar]

- Rumsey, F. Spatial Audio; Focal Press: Oxford, UK, 2001. [Google Scholar]

- Jot, J.M.; Wardle, S.; Larcher, V. Approaches to binaural synthesis. In Proceedings of the 105th Audio Engineering Society Convention, San Francisco, CA, USA, 26–29 September 1998; p. 4861. [Google Scholar]

- Bauck, J.; Cooper, D.H. Generalized transaural stereo and applications. J. Audio Eng. Soc. 1996, 44, 683–705. [Google Scholar]

- Gerzon, M.A. Ambisonics in multichannel broadcasting and video. J. Audio Eng. Soc. 1985, 33, 859–871. [Google Scholar]

- Poletti, M.A. Three-dimensional surround sound systems based on spherical harmonics. J. Audio Eng. Soc. 2005, 53, 1004–1025. [Google Scholar]

- Daniel, J. Spatial sound encoding including near field effect: Introducing distance coding filters and a viable, new Ambisonic format. In Proceedings of the 23rd Audio Engineering Society International Conference, Copenhagen, Denmark, 23–25 May 2003. [Google Scholar]

- Boone, M.M.; Verheijen, E.N.G.; Van Tol, P.F. Spatial sound field reproduction by wave field synthesis. J. Audio Eng. Soc. 1995, 43, 1003–1012. [Google Scholar] [CrossRef]

- Spors, S.; Rabenstain, R.; Ahrens, J. The theory of wave field synthesis revisited. In Proceedings of the 124th Audio Engineering Society Convention, Amsterdam, The Netherlands, 17–20 May 2008; p. 7358. [Google Scholar]

- Spors, S.; Wierstorf, H.; Geier, M.; Ahrens, J. Physical and perceptual properties of focused virtual sources in wave field synthesis. In Proceedings of the 127th Audio Engineering Society Convention, New York, NY, USA, 9–12 October 2009; p. 7914. [Google Scholar]

- Ward, D.B.; Abhayapala, T.D. Reproduction of a plane-wave sound field using an array of loudspeakers. IEEE Trans. Speech Audio Process. 2001, 9, 697–707. [Google Scholar] [CrossRef]

- Liu, Y.; Xie, B.S. Analysis with binaural auditory model and experiment on the timbre of Ambisonics recording and reproduction. Chin. J. Acoust. 2015, 34, 337–356. [Google Scholar]

- Morrell, M.J.; Reiss, J.D. A comparative approach to sound localization within a 3-D sound field. In Proceedings of the 126th Audio Engineering Society Convention, Munich, Germany, 7–10 May 2009; p. 7663. [Google Scholar]

- Power, P.; Davies, W.J.; Hirst, J.; Dunn, C. Localisation of Elevated Virtual Sources in Higher Order Ambisonics Sound Fields; Institute of Acoustics: Brighton, UK, 2012. [Google Scholar]

- Bertet, S.; Daniel, J.; Parizet, E.; Warusfel, O. Investigation on localisation accuracy for first and higher order Ambisonics reproduced sound sources. Acta Acust. Acust. 2013, 99, 642–657. [Google Scholar] [CrossRef]

- Wierstorf, H.; Raake, A.; Spors, S. Localization of a virtual point source within the listening area for wave field synthesis. In Proceedings of the 133rd Audio Engineering Society Convention, San Francisco, CA, USA, 26–29 October 2012; p. 8743. [Google Scholar]

- Favrot, S.; Buchholz, J.M. Reproduction of nearby sound sources using higher-order Ambisonics with practical loudspeaker arrays. Acta Acust. Acust. 2012, 98, 48–60. [Google Scholar] [CrossRef]

- Badajoz, J.; Chang, J.H.; Agerkvist, F.T. Reproduction of nearby sources by imposing true interaural differences on a sound field control approach. J. Acoust. Soc. Am. 2015, 138, 2387–2398. [Google Scholar] [CrossRef]

- Kearnev, G.; Gorzel, M.; Rice, H.; Boland, F. Distance perception in interactive virtual acoustic environment using first and higher order Ambisonic sound fields. Acta Acust. Acust. 2012, 98, 61–71. [Google Scholar] [CrossRef]

- Wierstorf, H.; Raake, A.; Geier, M.; Spors, S. Perception of focused sources in wave field synthesis. J. Audio Eng. Soc. 2013, 61, 5–16. [Google Scholar]

- Sontacchi, A.; Hoeldrich, R. Enhanced 3D sound field synthesis and reproduction using system by compensating interfering reflections. In Proceedings of the DAFX-00, Verona, Italy, 7–9 December 2000. [Google Scholar]

- Lecomte, P.; Gauthier, P.A.; Langrenne, C.; Berry, A.; Garcia, A. Cancellation of room reflections over an extended area using Ambisonics. J. Acoust. Soc. Am. 2018, 143, 811–828. [Google Scholar] [CrossRef] [PubMed]

- Damaske, P.; Ando, Y. Interaural crosscorrelation for multichannel loudspeaker reproduction. Acta Acust. Acust. 1972, 27, 232–238. [Google Scholar]

- Lee, H. Multichannel 3D microphone arrays: A review. J. Audio Eng. Soc. 2021, 69, 5–26. [Google Scholar] [CrossRef]

- Yi, K.L.; Xie, B.S. Local Ambisonics panning method for creating virtual source in the vertical plane of the frontal hemisphere. Appl. Acoust. 2020, 165, 107319. [Google Scholar] [CrossRef]

- Zahorik, P. Assessing auditory distance perception using virtual acoustics. J. Acoust. Soc. Am. 2002, 111, 1832–1846. [Google Scholar] [CrossRef]

- Zhang, C.Y.; Xie, B.S. Platform for dynamic virtual auditory environment real-time rendering system. Chin. Sci. Bull. 2013, 58, 316–327. [Google Scholar] [CrossRef][Green Version]

- Yu, G.Z.; Wang, L.L. Effect of individualized head-related transfer functions on distance perception in virtual reproduction for a nearby sound source. Arch. Acoust. 2019, 44, 251–258. [Google Scholar]

- Qu, T.S.; Xiao, Z.; Gong, M.; Huang, Y.; Li, X.; Wu, X. Distance-dependent head-related transfer functions measured with high spatial resolution using a spark gap. IEEE Trans. Audio Speech Lang. Proc. 2009, 17, 1124–1132. [Google Scholar] [CrossRef]

- Arend, J.M.; Neidhardt, A.; Hristoph Porschmann, C. Measurement and perceptual evaluation of a spherical near-field HRTF set. In Proceedings of the 29th Tonmeistertagung-Vdt International Convention, Cologne, Germany, 17–20 November 2016. [Google Scholar]

- Yu, G.Z.; Xie, B.S.; Rao, D. Near-field head-related transfer functions of an artificial head and its characteristics (in Chinese). Acta Acust. 2012, 37, 378–385. [Google Scholar]

- Yu, G.Z.; Xie, B.S.; Rao, D. Directivity of Spherical Polyhedron Sound Source Used in Near-Field HRTF Measurements. Chin. Phys. Lett. 2010, 27, 109–112. [Google Scholar] [CrossRef]

- Duda, R.O.; Martens, W.L. Range dependence of the response of a spherical head model. J. Acoust. Soc. Am. 1998, 104, 3048–3058. [Google Scholar] [CrossRef]

- Gumerov, N.A.; Duraiswami, R.; Tang, Z. Numerical study of the influence of the torso on the HRTF. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Orlando, FL, USA, 13–17 May 2002; pp. 1965–1968. [Google Scholar]

- Chen, Z.W.; Yu, G.Z.; Xie, B.S.; Guan, S.Q. Calculation and analysis of near-field head-related transfer functions from a simplified head-neck-torso model. Chin. Phys. Lett. 2012, 29, 034302. [Google Scholar] [CrossRef]

- Otani, M.; Hirahara, T.; Ise, S. Numerical study on source-distance dependency of head-related transfer functions. J. Acoust. Soc. Am. 2009, 125, 3253–3261. [Google Scholar] [CrossRef] [PubMed]

- Salvador, G.D.; Sakamoto, S.; Treviño, J.; Suzuki, Y. Dataset of near-distance head-related transfer functions calculated using the boundary element method. In Proceedings of the Audio Engineering Society Convention on Spatial Reproduction, Tokyo, Japan, 6–9 August 2018. [Google Scholar]

- Duraiswami, R.; Zotkin, D.N.; Gumerov, N.A. Interpolation and range extrapolation of HRTFs. In Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing, Montreal, QC, Canada, 17–21 May 2004; pp. 45–48. [Google Scholar]

- Pollow, M.; Nguyen, K.V.; Warusfel, O.; Carpentier, T.; Müller-Trapet, M.; Vorländer, M.; Noisternig, M. Calculation of head-related transfer functions for arbitrary field points using spherical harmonics decomposition. Acta Acust. Acust. 2012, 98, 72–82. [Google Scholar] [CrossRef]

- Kan, A.; Jin, C.; Schaik, A.V. A psychophysical evaluation of near-field head-related transfer functions synthesized using a distance variation function. J. Acoust. Soc. Am. 2009, 125, 2233–2242. [Google Scholar] [CrossRef]

- Menzie, D.; Marwan, A.A. Nearfield binaural synthesis and ambisonics. J. Acoust. Soc. Am. 2007, 121, 1559–1563. [Google Scholar] [CrossRef]

- Xie, B.S.; Liu, L.L.; Jiang, J.L. Dynamic binaural Ambisonics scheme for rendering distance information of free-field virtual sources. Acta Acust. 2021, 46, 1223–1233. (In Chinese) [Google Scholar]

- Saviojia, L.; Huopaniemi, J.; Lokki, T.; Väänänen, R. Creating interactive virtual acoustic environments. J. Audio Eng. Soc. 1999, 47, 675–705. [Google Scholar]

- Werner, S.; Liebetrau, J. Effects of shaping of binaural room impulse responses on localization. In Proceedings of the Fifth International Workshop on Quality of Multimedia Experience (QoMEX), Klagenfurt am Wörthersee, Austria, 3–5 July 2013; pp. 88–93. [Google Scholar]

- Lee, H.; Mironovs, M.; Johnson, D. Binaural Rendering of Phantom Image Elevation using VHAP. In Proceedings of the 146th Audio Engineering Society Convention, Dublin, Ireland, 20–23 March 2019. [Google Scholar]

| Condition / distance cue | Level | Spectra | HRTF: ILD | HRTF: parallax | DRR |
|---|---|---|---|---|---|
| Free field | Yes | Yes | Yes | Yes | No |
| Reverberation field | Yes | Yes | Yes ? | ? | Yes |
| Absolute or relative distance perception | Relative | Relative | Absolute | Absolute | Absolute |
| Source direction | Frontal and lateral | Frontal and lateral | Lateral | Frontal | Frontal and lateral |
| Source distance | Proximal and distant | >15 m | Proximal | Proximal | Proximal and distant |
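The level and DRR columns of the table can be illustrated with a small numerical sketch. It assumes an ideal point source in a free field, so the direct-sound level changes by -20·log10(r/r_ref) dB, and an idealised diffuse reverberant field whose level does not depend on distance, so that DRR = 20·log10(r_c/r) dB. The function names, the reference distance, and the 2 m critical distance r_c are hypothetical values chosen for illustration and do not come from the article.

```python
import math


def direct_level_change_db(r, r_ref=1.0):
    """Change in direct-sound level (dB) at distance r relative to r_ref.

    Assumes an ideal point source in a free field (inverse-square law).
    """
    if r <= 0 or r_ref <= 0:
        raise ValueError("distances must be positive")
    return -20.0 * math.log10(r / r_ref)


def drr_db(r, critical_distance):
    """Direct-to-reverberant energy ratio (dB) at distance r.

    Assumes a diffuse reverberant field with distance-independent level,
    so the ratio is 0 dB at the (hypothetical) critical distance.
    """
    if r <= 0 or critical_distance <= 0:
        raise ValueError("distances must be positive")
    return 20.0 * math.log10(critical_distance / r)


if __name__ == "__main__":
    # Illustrative distances in metres; 2.0 m is an assumed critical distance.
    for r in (0.5, 1.0, 2.0, 4.0):
        level = direct_level_change_db(r)
        drr = drr_db(r, critical_distance=2.0)
        print(f"r = {r:4.1f} m  level change = {level:+6.2f} dB  DRR = {drr:+6.2f} dB")
```

Under these assumptions, each doubling of distance lowers both the direct level and the DRR by about 6 dB. The level alone indicates only relative distance, because the source power is generally unknown to the listener, whereas the DRR does not depend on source power, which is consistent with the table listing it as an absolute cue.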


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xie, B.; Yu, G. Psychoacoustic Principle, Methods, and Problems with Perceived Distance Control in Spatial Audio. Appl. Sci. 2021, 11, 11242. https://doi.org/10.3390/app112311242
