A Tool to Retrieve Alert Dwell Time from a Homegrown Computerized Physician Order Entry (CPOE) System of an Academic Medical Center: An Exploratory Analysis

Abstract

1. Introduction

2. Materials and Methods

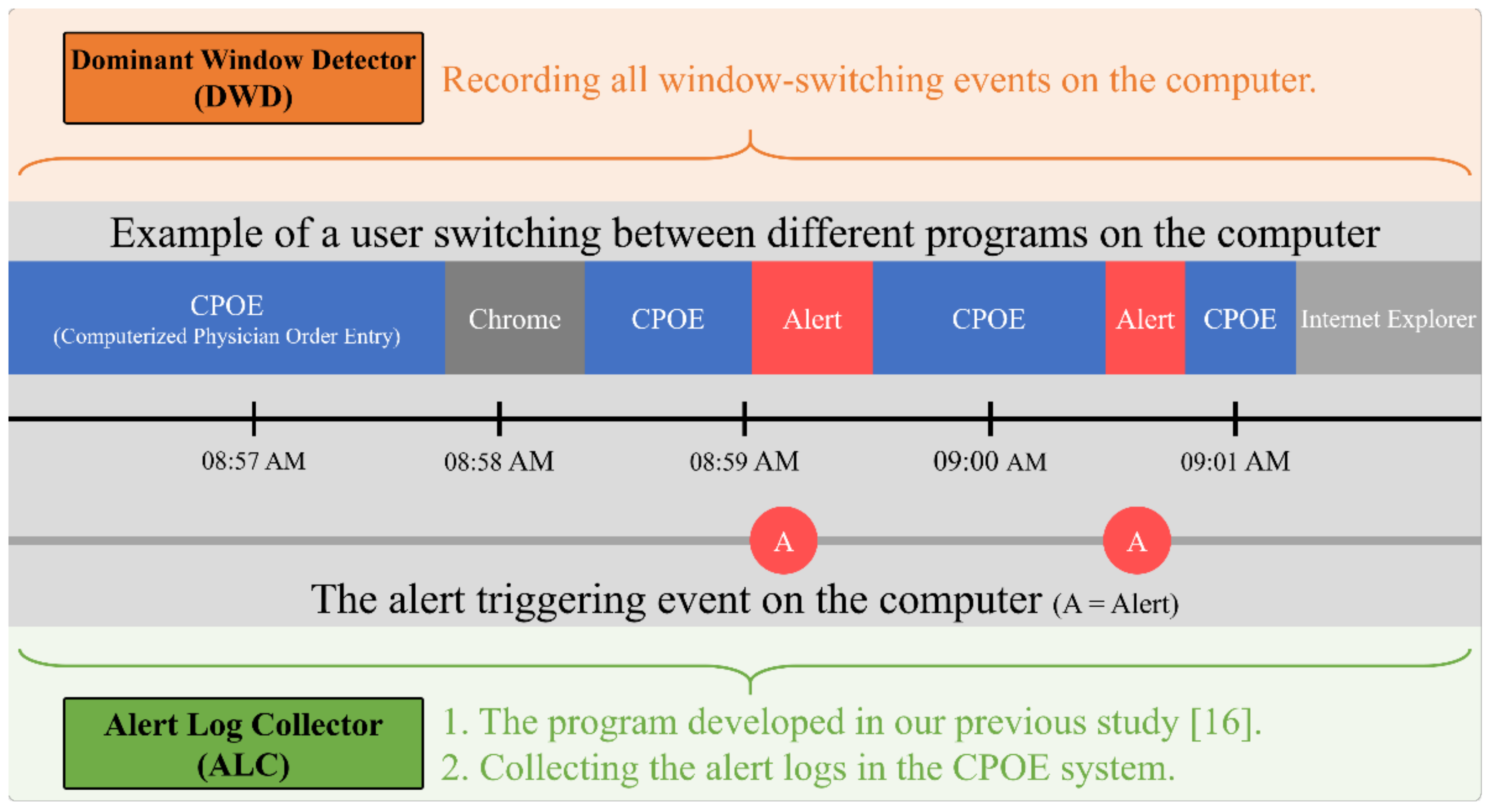

2.1. The Implementation of Alert Log Collector and Dominant Window Detector

2.2. Data Combination Process

2.3. Data Analysis

3. Results

3.1. Alert Distribution

3.2. Normality Test and Descriptive Statistics

3.3. Top 10 Most Frequent Alert Categories and Correlation Analysis

4. Discussion

Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| | Mean | SD (σ) | Min | Q1 | Median | Q3 | Max |
|---|---|---|---|---|---|---|---|
| Number of alerts | 32,465 | 111,836 | 1201 | 2420 | 5380 | 11,927 | 760,690 |
| Alert message length | 23 | 20 | 4 | 10 | 15 | 28 | 278 |
| Alert dwell time | 1.3 | 0.4 | 0.03 | 1.2 | 1.3 | 1.4 | 3 |
| Physician | 1.3 | 0.3 | 0.03 | 1.2 | 1.3 | 1.4 | 2.3 |
| Nurse | 1.5 | 0.5 | 0.03 | 1.2 | 1.4 | 1.7 | 3.1 |
| Other | 1.3 | 0.4 | 0.00 | 1.2 | 1.3 | 1.4 | 3.1 |
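The descriptive-statistics table above summarizes each variable with its mean, standard deviation, minimum, quartiles and maximum. As a minimal sketch of how one such row can be computed, the Python function below uses only the standard library. The function name `describe` and the sample values are hypothetical, and the section does not state which standard-deviation or quartile convention the authors used; the sketch assumes the sample standard deviation (n − 1 denominator) and Python's default "exclusive" quartile method, either of which may differ from the original analysis.

```python
import statistics


def describe(values):
    """Return the summary columns used in the descriptive-statistics table.

    Assumptions (not stated in the source): SD is the sample standard
    deviation (n - 1 denominator) and quartiles use the default
    'exclusive' method of statistics.quantiles.
    """
    if len(values) < 2:
        raise ValueError("need at least two values")
    q1, median, q3 = statistics.quantiles(values, n=4)
    return {
        "mean": statistics.mean(values),
        "sd": statistics.stdev(values),
        "min": min(values),
        "q1": q1,
        "median": median,
        "q3": q3,
        "max": max(values),
    }


# Hypothetical dwell times in seconds, for illustration only.
summary = describe([0.5, 1.0, 1.2, 1.3, 1.4, 2.0, 3.0])
print({name: round(value, 2) for name, value in summary.items()})
```

For strongly right-skewed measurements such as dwell times (compare the medians near 1 s with the maxima of several thousand seconds in the top-10 category table), the median and quartiles are generally more informative than the mean and SD.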

| # | Title & message content (category) | Total alerts (100%) | PHY | NU | OTH | <1 s | 1–10 s | 10–100 s | >100 s | Median dwell time (s) | Mean dwell time (s) | Min dwell time (s) | Max dwell time (s) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Notification of online health insurance open function! | 760,690 | 516,099 | 123,857 | 120,734 | 231,505 | 516,160 | 11,122 | 1903 | 1.169 | 2.40 | 0.017 | 6283.24 |
| | Failed to open the online medication record, please check the card reader! | | 67.8% | 16.3% | 15.9% | 30.4% | 67.9% | 1.5% | 0.3% | | | | |
| 2 | TOCC fill | 733,417 | 565,791 | 65,850 | 101,776 | 151,243 | 564,200 | 15,935 | 2039 | 1.297 | 2.80 | 0.016 | 4761.46 |
| | Please fill in the patient’s TOCC record indeed! | | 77.1% | 9.0% | 13.9% | 20.6% | 76.9% | 2.2% | 0.3% | | | | |
| 3 | Remind | 348,655 | 151,904 | 156,218 | 40,533 | 174,764 | 169,623 | 3805 | 463 | 1.000 | 1.86 | 0.015 | 4855.09 |
| | Are you sure you want to cancel? It will not save while the health insurance card is removed. | | 43.6% | 44.8% | 11.6% | 50.1% | 48.7% | 1.1% | 0.1% | | | | |
| 4 | Remind | 220,916 | 1914 | 210,237 | 8765 | 54,444 | 96,756 | 60,743 | 8973 | 2.635 | 19.54 | 0.018 | 9186.90 |
| | The data has been saved on the health insurance card, now you may take it out. | | 0.9% | 95.2% | 4.0% | 24.6% | 43.8% | 27.5% | 4.1% | | | | |
| 5 | Remind | 127,627 | 98,305 | 5561 | 23,761 | 41,501 | 83,814 | 2049 | 263 | 1.160 | 2.29 | 0.016 | 1038.24 |
| | This is a primary healthcare diagnosis! | | 77.0% | 4.4% | 18.6% | 32.5% | 65.7% | 1.6% | 0.2% | | | | |
| 6 | Alternative medication remind | 96,084 | 82,349 | 3539 | 10,196 | 15,466 | 79,086 | 1467 | 65 | 1.391 | 2.19 | 0.020 | 726.14 |
| | [Drug A] has been suspended. Do you want to use [Drug B] as an alternative option? | | 85.7% | 3.7% | 10.6% | 16.1% | 82.3% | 1.5% | 0.1% | | | | |
| 7 | Remind! | 87,561 | 72,612 | 3303 | 11,646 | 7744 | 77,912 | 1867 | 38 | 1.476 | 2.42 | 0.020 | 1284.53 |
| | Do you want to prescribe the drug at the patient’s own expense? | | 82.9% | 3.8% | 13.3% | 8.8% | 89.0% | 2.1% | 0.0% | | | | |
| 8 | Remind! | 78,554 | 51,956 | 15,264 | 11,334 | 76,384 | 2159 | 11 | 0 | 0.027 | 0.12 | 0.010 | 28.49 |
| | Do you want to reprint the invoice? | | 66.1% | 19.4% | 14.4% | 97.2% | 2.7% | 0.0% | 0.0% | | | | |
| 9 | Notification of Online health insurance open function! | 59,919 | 46,078 | 3910 | 9931 | 7888 | 49,321 | 2534 | 176 | 1.445 | 3.58 | 0.018 | 3284.53 |
| | The information of the patient and IC card did not match. Please confirm whether the IC card is his/her card? | | 76.9% | 6.5% | 16.6% | 13.2% | 82.3% | 4.2% | 0.3% | | | | |
| 10 | Remind! | 45,780 | 39,953 | 515 | 5312 | 13,594 | 30,892 | 1222 | 72 | 1.338 | 2.70 | 0.018 | 600.54 |
| | [Drug A] was prescribed by another physician to [YYYYMMDD] and still has [N] days of medication remaining. Do you want to continue prescribing? | | 87.3% | 1.1% | 11.6% | 29.7% | 67.5% | 2.7% | 0.2% | | | | |

PHY, NU and OTH give the number of alerts by professional group (physician, nurse, other); the <1 s, 1–10 s, 10–100 s and >100 s columns give the number of alerts in each dwell-time bin. The second row of each category repeats these counts as a percentage of the category total.
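The top-10 table reports, for each alert category, how many alerts fell into four dwell-time bins and what share of the category total each bin represents. The sketch below reproduces that bookkeeping in Python. The bin labels and the function names `dwell_bin`, `bin_counts` and `percentages` are hypothetical, and because the section does not say whether a dwell time of exactly 1, 10 or 100 s belongs to the lower or the upper bin, the sketch assumes half-open intervals [0, 1), [1, 10), [10, 100) and [100, ∞).

```python
from collections import Counter

# Hypothetical labels for the four dwell-time bins in the top-10 table.
BINS = ("<1 s", "1-10 s", "10-100 s", ">100 s")


def dwell_bin(seconds):
    """Assign one dwell time (in seconds) to a bin.

    Assumption: bins are half-open, so exactly 1 s counts as "1-10 s",
    exactly 10 s as "10-100 s" and exactly 100 s as ">100 s".
    """
    if seconds < 1:
        return BINS[0]
    if seconds < 10:
        return BINS[1]
    if seconds < 100:
        return BINS[2]
    return BINS[3]


def bin_counts(dwell_times):
    """Count alerts per bin, reporting empty bins as 0."""
    counts = Counter(dwell_bin(t) for t in dwell_times)
    return {label: counts.get(label, 0) for label in BINS}


def percentages(counts):
    """Express each bin as a percentage of the total, to one decimal place."""
    total = sum(counts.values())
    return {label: round(100 * n / total, 1) for label, n in counts.items()}


# Category 8 ("Do you want to reprint the invoice?") bin counts from the table.
category_8 = {"<1 s": 76_384, "1-10 s": 2_159, "10-100 s": 11, ">100 s": 0}
print(percentages(category_8))
```

Applied to the category 8 counts, this yields 97.2%, 2.7%, 0.0% and 0.0%, matching that category's percentage row. Because each share is rounded independently, a row need not sum to exactly 100%; the professional-group shares of category 8, for example, add up to 99.9%.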

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chien, S.-C.; Chin, Y.-P.; Yoon, C.-H.; Chen, C.-Y.; Hsu, C.-K.; Chien, C.-H.; Li, Y.-C. A Tool to Retrieve Alert Dwell Time from a Homegrown Computerized Physician Order Entry (CPOE) System of an Academic Medical Center: An Exploratory Analysis. Appl. Sci. 2021, 11, 12004. https://doi.org/10.3390/app112412004

Chien S-C, Chin Y-P, Yoon C-H, Chen C-Y, Hsu C-K, Chien C-H, Li Y-C. A Tool to Retrieve Alert Dwell Time from a Homegrown Computerized Physician Order Entry (CPOE) System of an Academic Medical Center: An Exploratory Analysis. Applied Sciences. 2021; 11(24):12004. https://doi.org/10.3390/app112412004

Chicago/Turabian Style: Chien, Shuo-Chen, Yen-Po Chin, Chang-Ho Yoon, Chun-You Chen, Chun-Kung Hsu, Chia-Hui Chien, and Yu-Chuan Li. 2021. "A Tool to Retrieve Alert Dwell Time from a Homegrown Computerized Physician Order Entry (CPOE) System of an Academic Medical Center: An Exploratory Analysis" Applied Sciences 11, no. 24: 12004. https://doi.org/10.3390/app112412004