A Mobility Prediction-Based Relay Cluster Strategy for Content Delivery in Urban Vehicular Networks

Abstract

1. Introduction

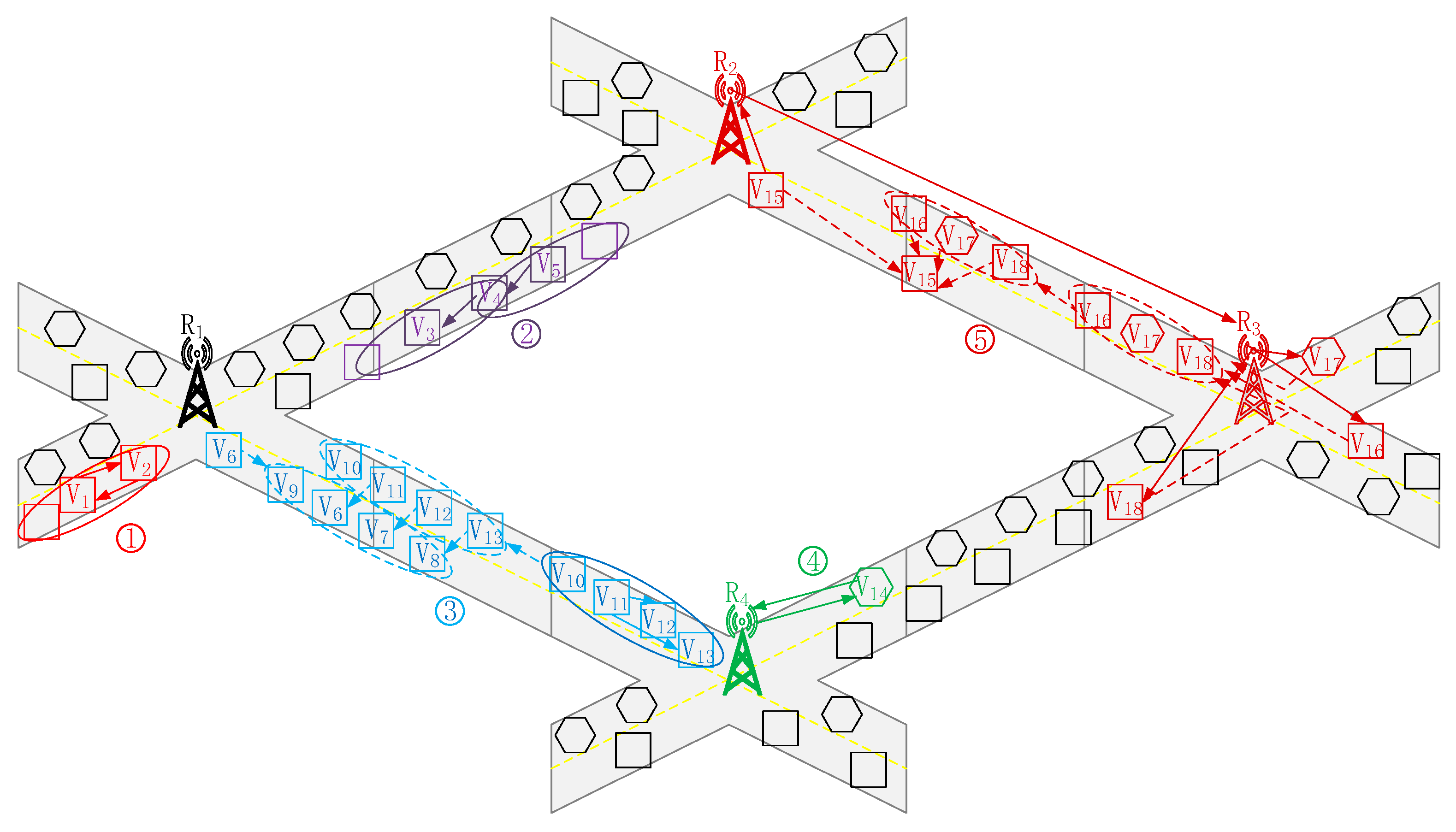

- We propose a proactive caching strategy based on predicted cluster trajectories, in which vehicles are divided into clusters according to their predicted trajectories. RSUs proactively cache content chunks at a cluster that will meet the request vehicle along its predicted trajectory. By letting the request vehicle receive content chunks one by one from vehicles on the opposite road, we extend the communication duration between the request vehicle and the content source vehicles and increase the success probability of content delivery. In addition, to increase the success probability of content delivery between the request cluster and the content source cluster, we introduce a multiuser multichannel transmission mode into the communication between clusters.

- To increase the stability of clusters, we treat the vehicles’ predicted trajectory as one of the criteria for cluster division. Because all CMs in a cluster share the same predicted trajectory, the cluster’s predicted trajectory is the same as that of the vehicles in it.

- Based on the predicted trajectories of the request vehicle and the clusters, as well as vehicle speed, the RSU proactively caches content chunks at multiple vehicles in the corresponding cluster. In doing so, the RSU determines the optimal number of content chunks to maximize the success probability of content delivery.

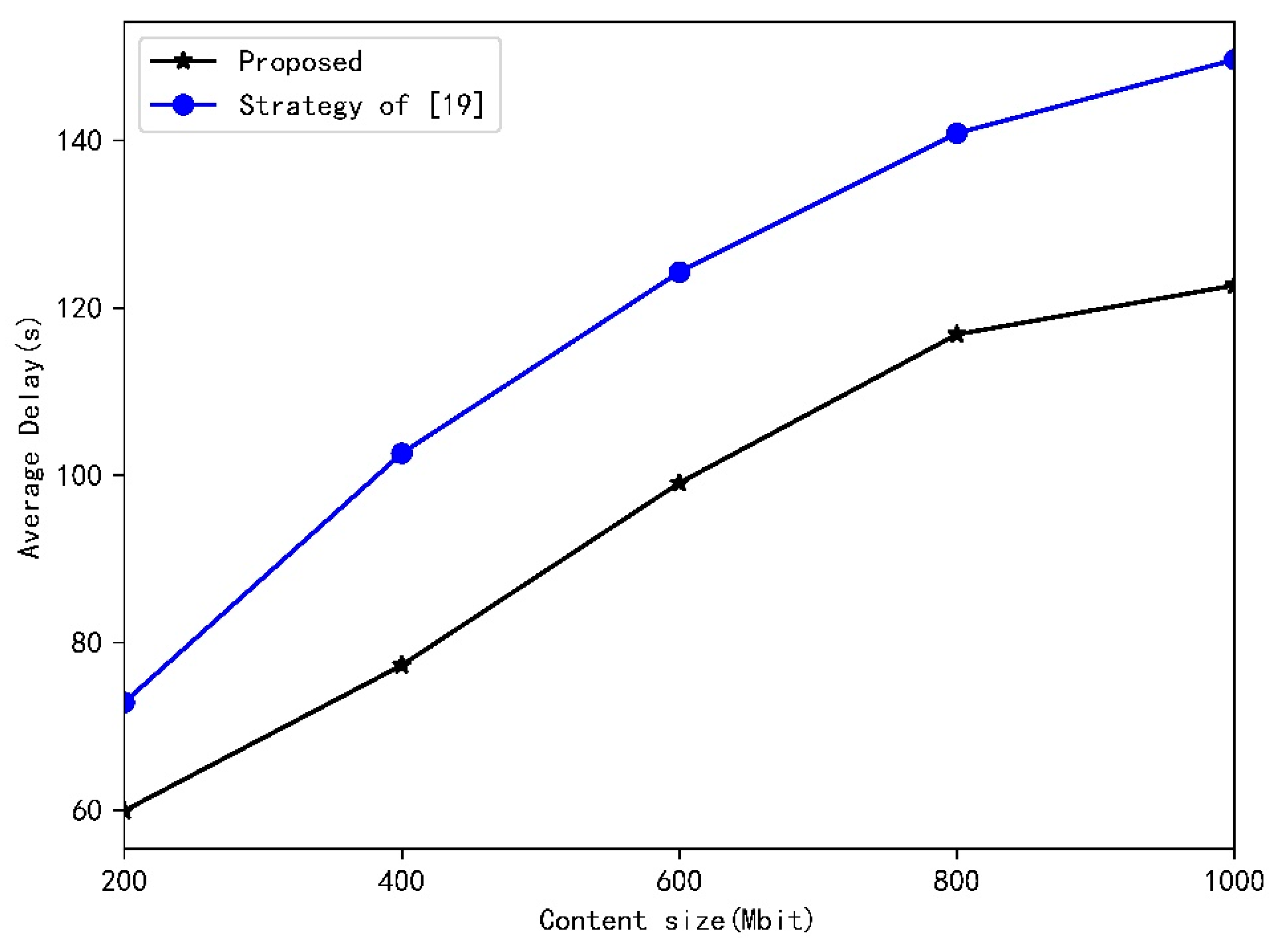

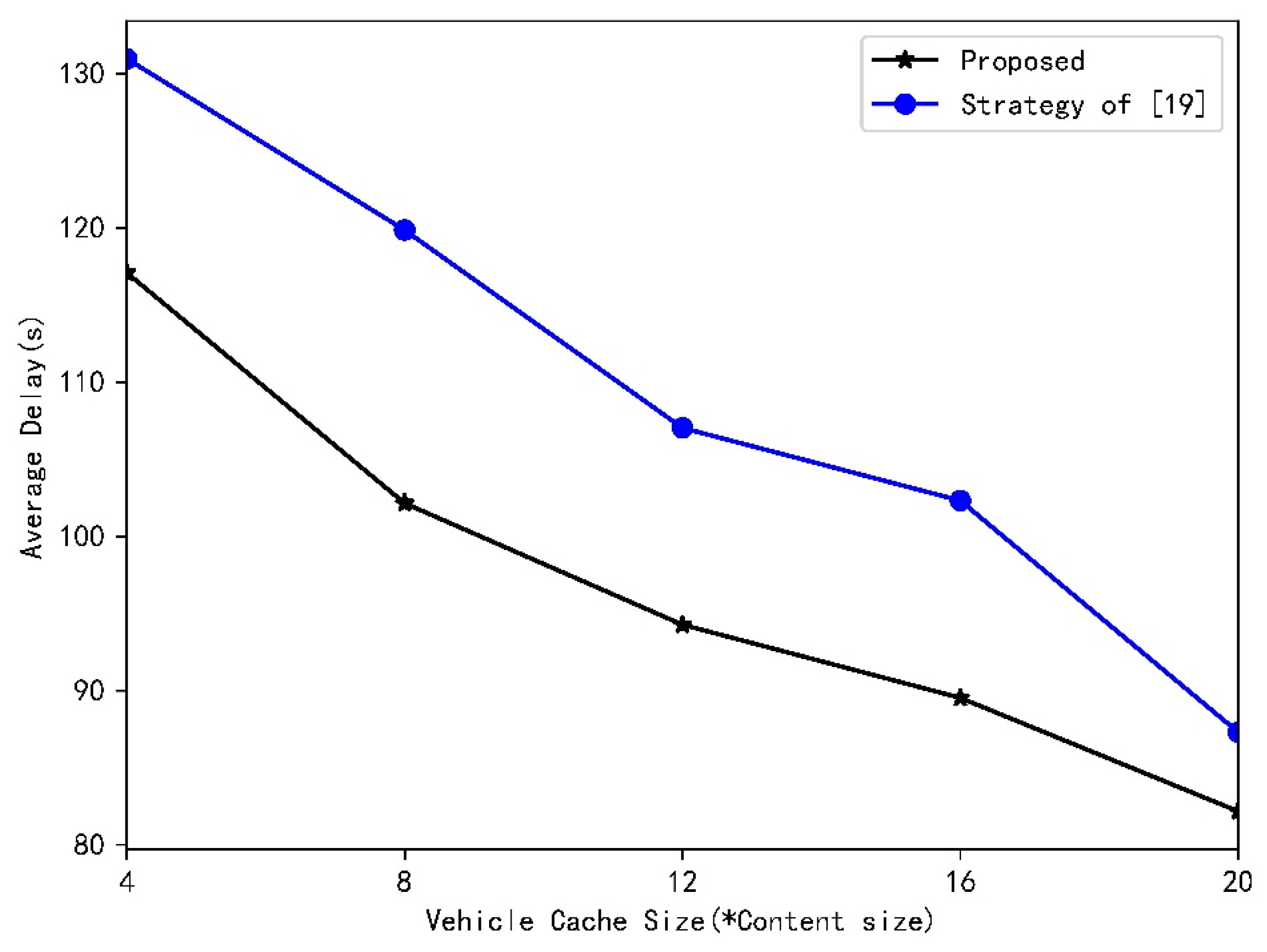

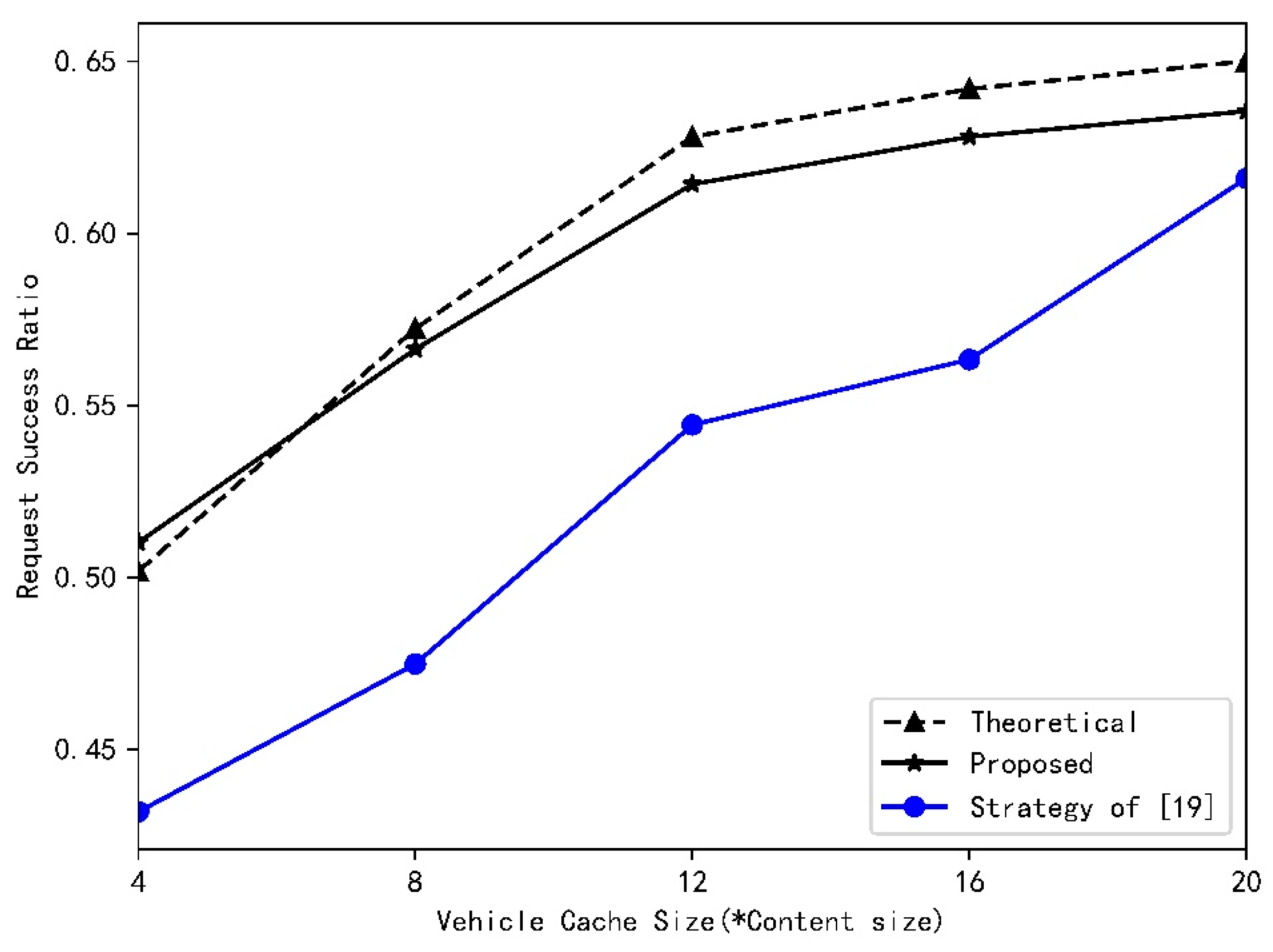

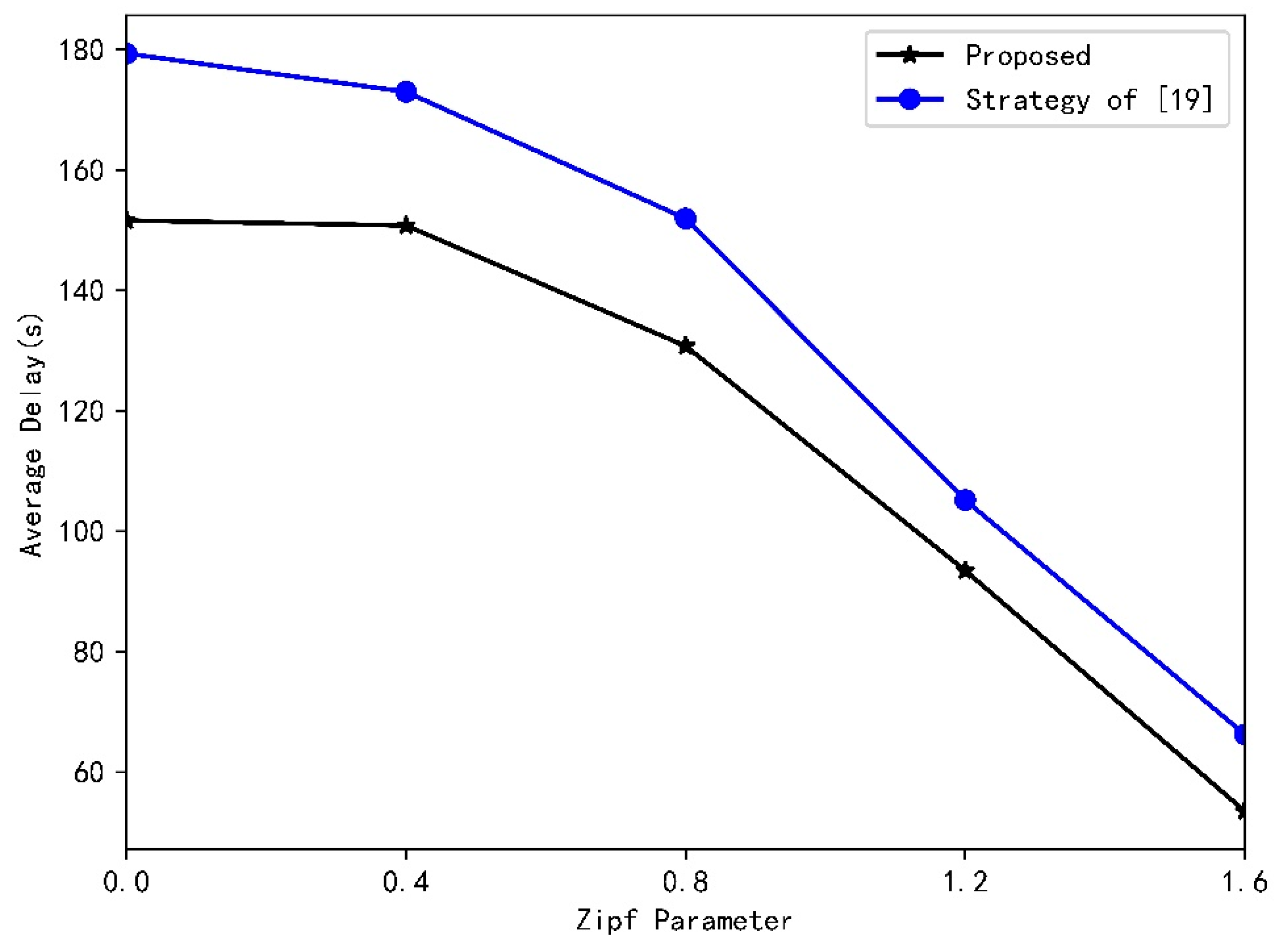

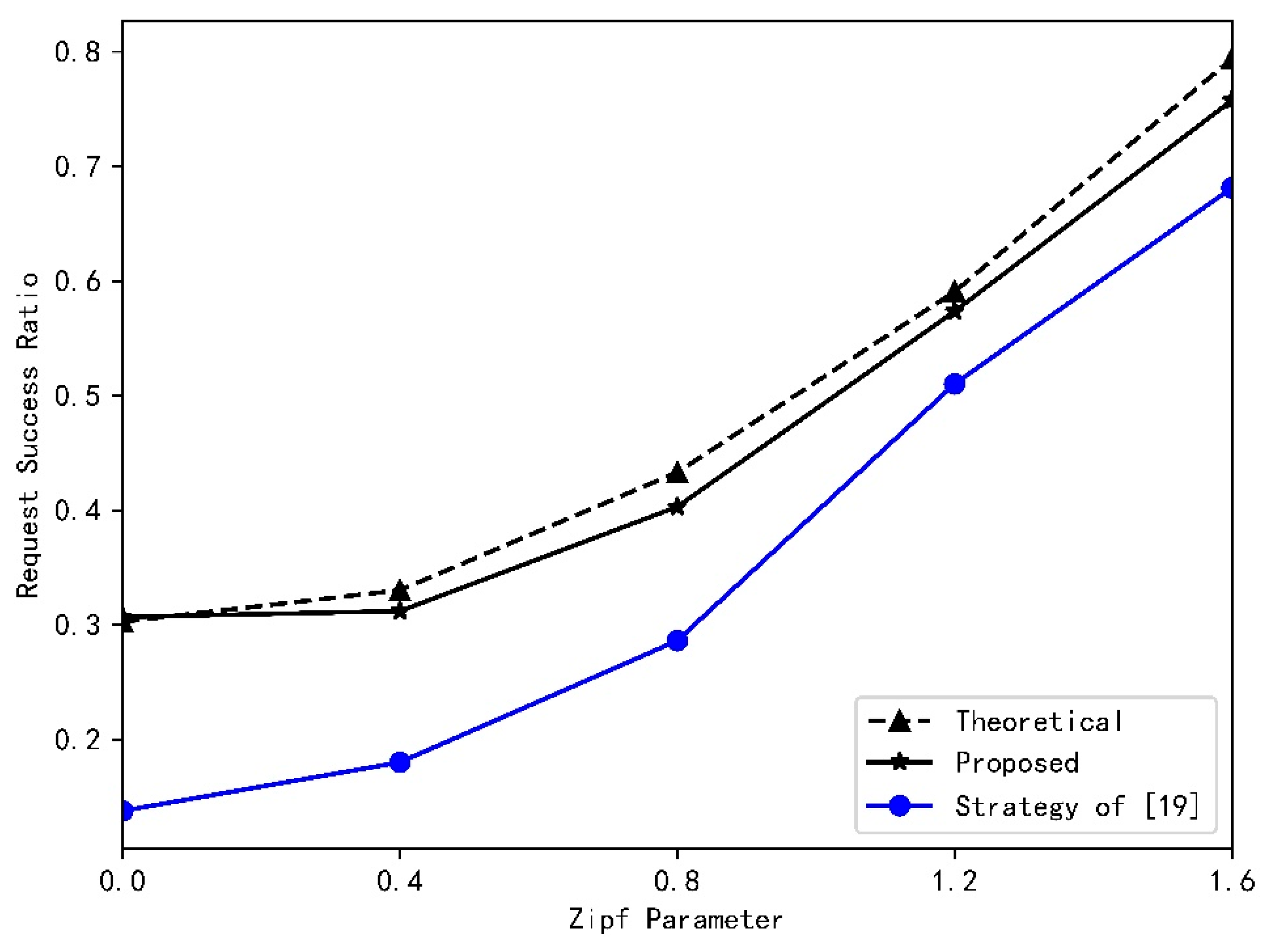

- Based on the statistical characteristics of vehicle speed, vehicle flow, and the number of request arrivals at the RSU, we theoretically derive the success probability of content delivery. The simulation results verify the derivation and demonstrate that the proposed strategy improves system performance in terms of both time delay and the success probability of content delivery. Compared with the results reported in [19], the success probability increases by about 20%.
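The gain from receiving chunks one by one from oncoming vehicles can be illustrated with a back-of-the-envelope contact-time calculation. The sketch below is not the paper's derivation. It assumes constant speeds, a straight road, a coverage radius that applies symmetrically in both directions, and equally spaced cluster members; the function names and the spacing parameter `gap_m` are hypothetical.

```python
def contact_duration(radius_m, v_request, v_source):
    """Seconds that two vehicles driving toward each other stay within range.

    Assumes constant speeds (m/s) and a communication radius in metres.
    """
    closing_speed = v_request + v_source
    if closing_speed <= 0:
        raise ValueError("closing speed must be positive")
    return 2.0 * radius_m / closing_speed


def cluster_contact_duration(radius_m, v_request, v_source, members, gap_m):
    """Seconds during which at least one of `members` oncoming vehicles is in range.

    The members are assumed to drive in a line, `gap_m` metres apart, all at
    `v_source`. Overlapping contact windows are counted once; if the gap
    exceeds the window length, the windows are disjoint and simply add up.
    """
    if members < 1:
        raise ValueError("a cluster needs at least one member")
    closing_speed = v_request + v_source
    if closing_speed <= 0:
        raise ValueError("closing speed must be positive")
    effective_gap = min(gap_m, 2.0 * radius_m)
    covered_distance = 2.0 * radius_m + (members - 1) * effective_gap
    return covered_distance / closing_speed
```

With the 50 m vehicle coverage range from the parameter table and an assumed speed of 15 m/s for both directions, a single oncoming vehicle is in range for about 3.3 s, whereas four members spaced 30 m apart keep the request vehicle covered for about 6.3 s.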

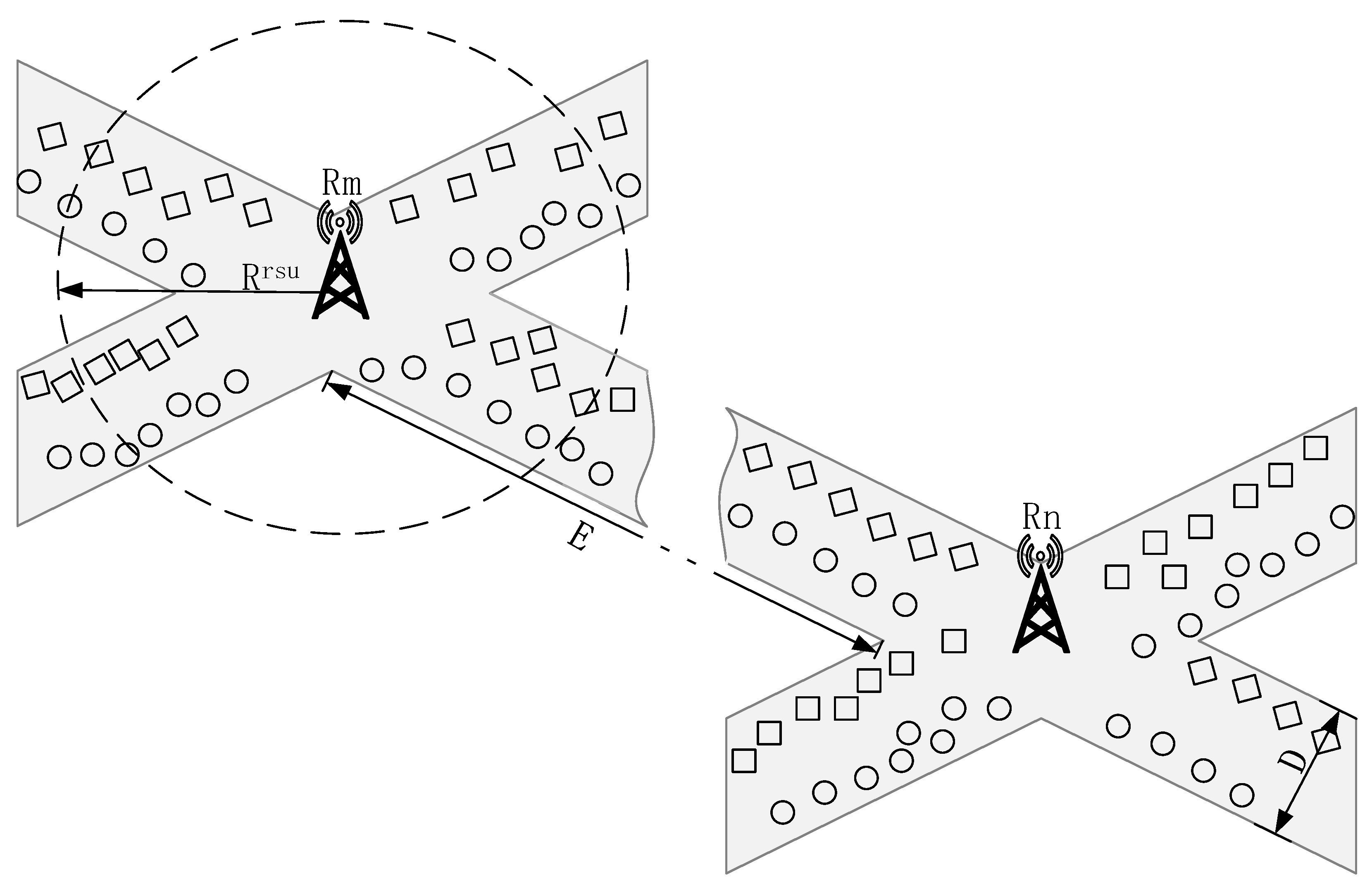

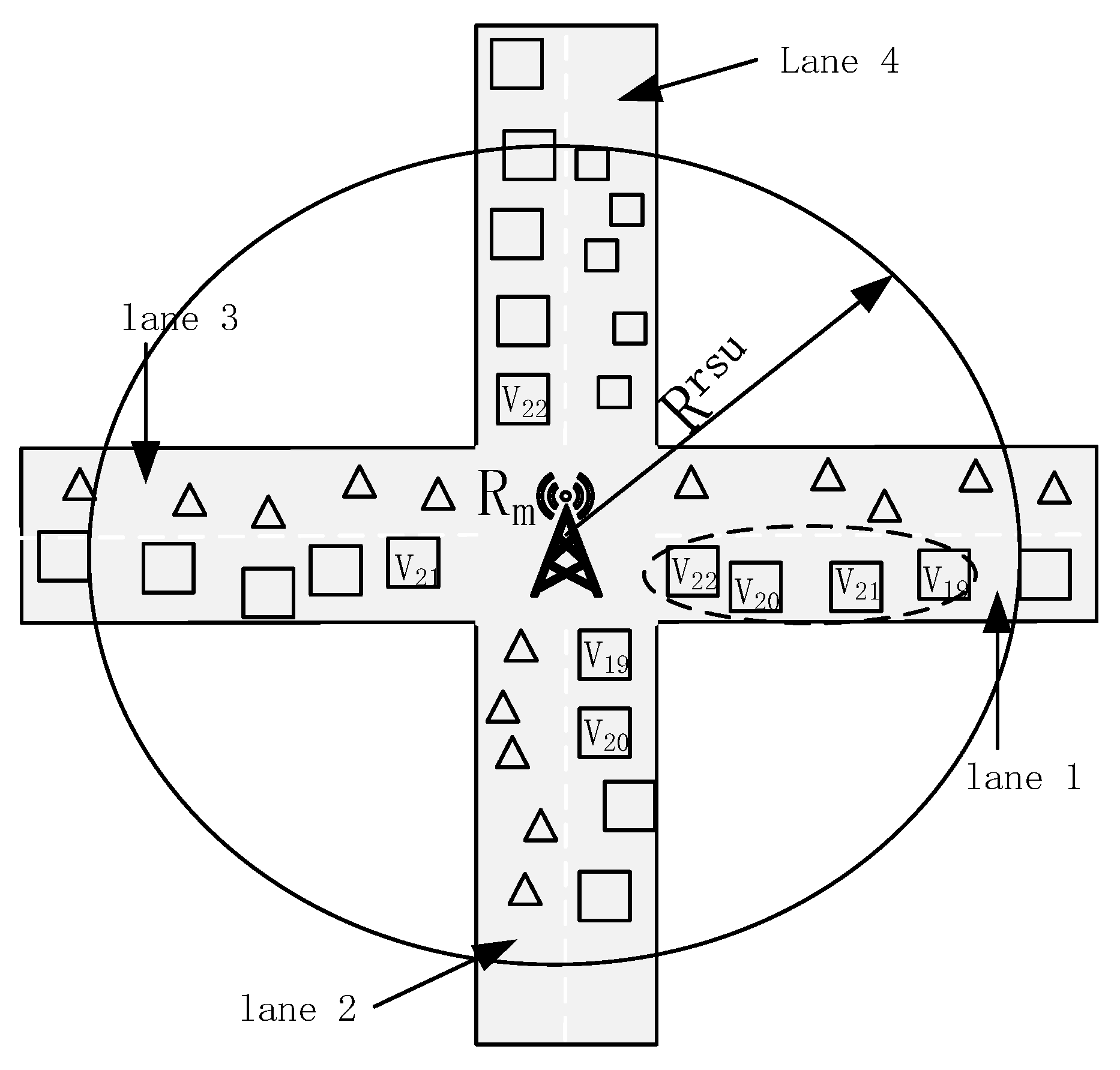

2. System Model

3. Content Acquisition Process and Caching Algorithm Optimization

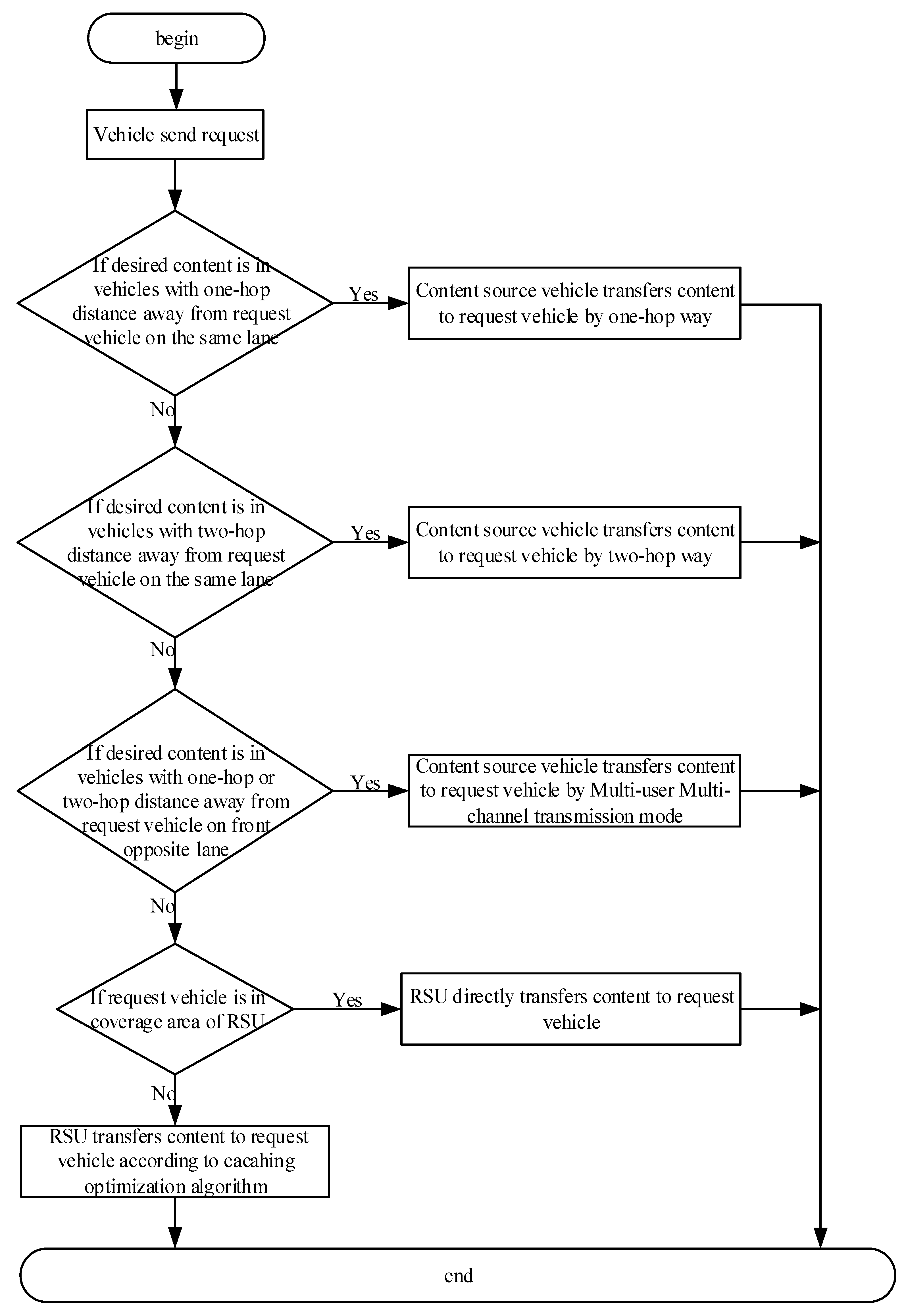

3.1. Content Acquisition Process

3.2. Caching Optimization Algorithm

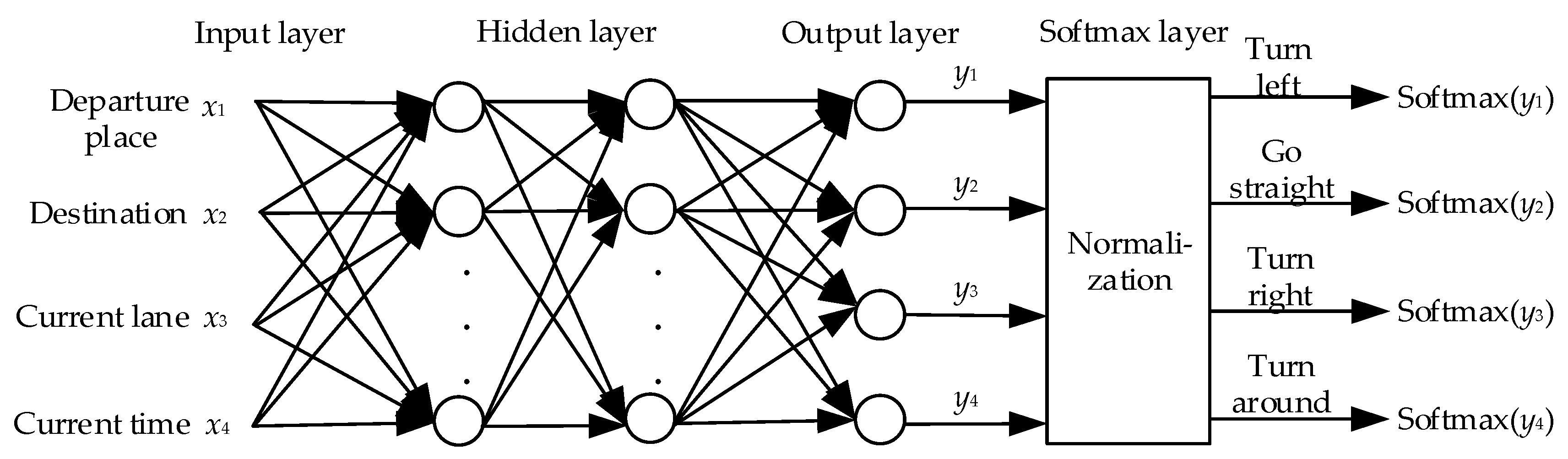

3.2.1. Cluster-Dividing Mechanism

Algorithm 1 Cluster Dividing Algorithm Based on Mobility Prediction
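The steps of Algorithm 1 are not reproduced here, so the following is only a minimal sketch of the grouping idea stated in the contributions: vehicles under the same RSU that share the same predicted next lane fall into the same cluster. The `Vehicle` record, its field names, and `divide_clusters` are hypothetical, and the actual algorithm may weigh further criteria.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Vehicle:
    vehicle_id: str
    rsu_id: str          # RSU whose coverage area the vehicle is currently in
    predicted_lane: str  # next lane on the vehicle's predicted trajectory


def divide_clusters(vehicles):
    """Group vehicle ids by (RSU, predicted next lane).

    Every member of a resulting cluster shares one predicted lane, so that
    lane can also serve as the cluster's own predicted trajectory.
    """
    clusters = defaultdict(list)
    for vehicle in vehicles:
        clusters[(vehicle.rsu_id, vehicle.predicted_lane)].append(vehicle.vehicle_id)
    return dict(clusters)
```

Keying the clusters by predicted lane means the RSU can look up directly which cluster is expected to pass the request vehicle.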

3.2.2. Caching Optimization for the Number k of Content Chunks

Algorithm 2 Caching Algorithm for Optimizing the Number of Content Chunks

Initialization: All vehicles in coverage area of constitute the set , , ,
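The body of Algorithm 2 is not available here, so the trade-off behind choosing the number k of chunks can only be illustrated with a toy model that is an assumption, not the paper's formulation. In this sketch the content is split into k equal chunks, each cached on a different cluster member; a chunk is deliverable only if it fits into one contact window, and each caching vehicle is actually met with an independent probability `meet_prob`. All names and parameters are hypothetical.

```python
def success_probability(k, size_mbit, rate_mbps, window_s, meet_prob):
    """Toy success probability when the content is split into k equal chunks.

    A chunk is deliverable only if it fits into one contact window; delivery
    succeeds only if all k caching vehicles are met (independent events).
    """
    chunk_mbit = size_mbit / k
    if chunk_mbit > rate_mbps * window_s:
        return 0.0  # chunk too large for a single contact window
    return meet_prob ** k


def best_chunk_count(max_k, size_mbit, rate_mbps, window_s, meet_prob):
    """Exhaustively search k in 1..max_k; return (k, probability).

    Returns (None, 0.0) when no k makes delivery feasible.
    """
    best_k, best_p = None, 0.0
    for k in range(1, max_k + 1):
        p = success_probability(k, size_mbit, rate_mbps, window_s, meet_prob)
        if p > best_p:
            best_k, best_p = k, p
    return best_k, best_p
```

Under these assumptions, more chunks make each transfer shorter but require more successful encounters, so the search settles on the smallest k whose chunks still fit into a contact window.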

3.3. Analysis of Success Probability of Content Acquisition

- ➀ Obtaining content from vehicles that are one hop away from the request vehicle

- ➁ Obtaining content from vehicles that are two hops away from the request vehicle

- ➂ Obtaining content from vehicles on the opposite lane ahead that are one or two hops away from the request vehicle

- ➃ Obtaining content directly from an RSU

- ➄ Obtaining content from a cluster in which an RSU has cached the content

4. Discussion

4.1. Parameter Settings

4.2. Results and Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Cheng, X.; Yang, L.; Shen, X. D2D for Intelligent Transportation Systems: A Feasibility Study. IEEE Trans. Intell. Transp. Syst. 2015, 16, 1784–1793.

- Campanile, L.; Iacono, M.; Levis, A.H.; Marulli, F.; Mastroianni, M. Privacy Regulations, Smart Roads, Blockchain, and Liability Insurance: Putting Technologies to Work. IEEE Secur. Priv. 2021, 19, 34–43.

- Campanile, L.; Iacono, M.; Marulli, F.; Mastroianni, M. Designing a GDPR compliant blockchain-based IoV distributed information tracking system. Inf. Process. Manag. 2021, 58, 1–23.

- Jacobson, V.; Smetters, D.K.; Thornton, J.D.; Plass, M.F.; Briggs, N.H.; Braynard, R.L. Networking named content. Commun. ACM 2009, 55, 117–124.

- Laoutaris, N.; Che, H.; Stavrakakis, I. The LCD interconnection of LRU caches and its analysis. Perform. Eval. 2006, 63, 609–634.

- Laoutaris, N.; Syntila, S.; Stavrakakis, I. Meta Algorithms for Hierarchical Web Caches. In Proceedings of the IEEE International Conference on Performance, Computing, and Communications, Phoenix, AZ, USA, 15–17 April 2004; IEEE: Piscataway, NJ, USA, 2004; pp. 445–452.

- Psaras, I.; Chai, W.K.; Pavlou, G. Probabilistic in-Network Caching for Information-Centric Networks. In Proceedings of the 2nd edition of the ICN Workshop on Information-Centric Networking, Helsinki, Finland, 17 August 2012; Association for Computing Machinery (ACM): New York, NY, USA, 2012; pp. 55–60.

- Grewe, D.; Wagner, M.; Frey, H. PeRCeIVE: Proactive Caching in ICN-Based VANETs. In Proceedings of the IEEE Vehicular Networking Conference, Columbus, OH, USA, 8–10 December 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1–8.

- Alnagar, Y.; Hosny, S.; El-Sherif, A.A. Towards Mobility-Aware Proactive Caching for Vehicular Ad hoc Networks. In Proceedings of the 2019 IEEE Wireless Communications and Networking Conference Workshop (WCNCW), Marrakech, Morocco, 15–18 April 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–6.

- Khelifi, H.; Luo, S.L.; Nour, B.; Sellami, A.; Moungla, H.; Naït-Abdesselam, F. An Optimized Proactive Caching Scheme Based on Mobility Prediction for Vehicular Networks. In Proceedings of the 2018 IEEE Global Communications Conference (GLOBECOM), Abu Dhabi, United Arab Emirates, 9–13 December 2018; IEEE: Piscataway, NJ, USA, 2019; pp. 1–6.

- Yao, L.; Chen, A.; Deng, J.; Wang, J.; Wu, G. A Cooperative Caching Scheme Based on Mobility Prediction in Vehicular Content Centric Networks. IEEE Trans. Veh. Technol. 2018, 67, 5435–5444.

- Zhao, Z.; Guardalben, L.; Karimzadeh, M.; Silva, J.; Braun, T.; Sargento, S. Mobility Prediction-Assisted Over-the-Top Edge Prefetching for Hierarchical VANETs. IEEE J. Sel. Areas Commun. 2018, 36, 1786–1801.

- Zhang, F.; Xu, C.; Zhang, Y.; Ramakrishnan, K.K.; Mukherjee, S.; Yates, R.; Nguyen, T. EdgeBuffer: Caching and Prefetching Content at the Edge in the MobilityFirst Future Internet Architecture. In Proceedings of the 16th International Symposium on a World of Wireless, Mobile and Multimedia Networks (WoWMoM), Boston, MA, USA, 14–17 June 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1–9.

- Hou, L.; Lei, L.; Zheng, K.; Wang, X. A Q-Learning based Proactive Caching Strategy for Non-safety Related Services in Vehicular Networks. IEEE Internet Things J. 2018, 6, 4512–4520.

- Gerla, M.; Tsai, J.T.-C. Multicluster, mobile, multimedia radio network. Wirel. Netw. 1995, 1, 255–265.

- Basu, P.; Khan, N.; Little, T.D.C. A Mobility Based Metric for Clustering in Mobile Ad Hoc Networks. In Proceedings of the 21st International Conference on Distributed Computing Systems Workshops, Mesa, AZ, USA, 16–19 April 2001; Association for Computing Machinery: New York, NY, USA, 2001; pp. 413–418.

- Chatterjee, M.; Das, S.K.; Turgut, D. WCA: A Weighted Clustering Algorithm for Mobile Ad Hoc Networks. Clust. Comput. 2002, 5, 193–204.

- Huang, W.; Song, T.; Yang, Y.; Zhang, Y. Cluster-Based Cooperative Caching with Mobility Prediction in Vehicular Named Data Networking. IEEE Access 2019, 7, 23442–23458.

- Huang, W.; Song, T.; Yang, Y.; Zhang, Y. Cluster-Based Selective Cooperative Caching Strategy in Vehicular Named Data Networking. In Proceedings of the 2018 1st IEEE International Conference on Hot Information-Centric Networking (HotICN), Shenzhen, China, 15–17 August 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 7–12.

- Fang, S.; Fan, P. A Cooperative Caching Algorithm for Cluster-Based Vehicular Content Networks with Vehicular Caches. In Proceedings of the 2017 IEEE Globecom Workshops (GC Wkshps), Singapore, 4–8 December 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1–6.

- Hu, B.; Fang, L.; Cheng, X.; Yang, L. In-Vehicle Caching (IV-Cache) Via Dynamic Distributed Storage Relay (D2SR) in Vehicular Networks. IEEE Trans. Veh. Technol. 2019, 68, 843–855.

- Dacey, M.F. Some Properties of the Superposition of a Point Lattice and a Poisson Point Process. Econ. Geogr. 2016, 47, 86–90.

- Wei, M.; Jin, W.; Shen, L. A Platoon Dispersion Model Based on a Truncated Normal Distribution of Speed. J. Appl. Math. 2012, 2012, 1–13.

- Cha, M.; Kwak, H.; Rodriguez, P.; Ahn, Y.; Moon, S. Analyzing the Video Popularity Characteristics of Large-Scale User Generated Content Systems. IEEE/ACM Trans. Netw. 2009, 17, 1357–1370.

- Krajzewicz, D. Traffic Simulation with SUMO—Simulation of Urban Mobility. In Fundamentals of Traffic Simulation; Barceló, J., Ed.; Springer: Berlin/Heidelberg, Germany, 2010; pp. 269–293.

- Kagalkar, A.; Raghuram, S. CORDIC Based Implementation of the Softmax Activation Function. In Proceedings of the 2020 24th International Symposium on VLSI Design and Test (VDAT), Bhubaneswar, India, 23–25 July 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–4.

| Symbol | Description |
|---|---|
| | The number of streets |
| | The width of a lane |
| | The length of a lane |
| | The parameter of the Poisson point process for the number of vehicles on a lane |
| | The number of roadside units |
| | The roadside unit with sequence number of |
| | The coverage range of a roadside unit |
| | The maximum transmission rate of a roadside unit |
| | The average travel distance of a vehicle under the coverage range of a roadside unit |
| | The parameter of the Poisson process for the number of requests arriving at |
| | The number of contents in the content library |
| | The parameter of the Zipf distribution |
| | The size of a content |
| | The vehicle with sequence number of |
| | The number of vehicles in the same lane as under the coverage range of |
| | The maximum number of contents that a vehicle’s cache can accommodate |
| | The coverage range of a vehicle |
| | The cluster whose vehicles have the next predicted lane under the coverage range of |
| | The number of vehicles in |

| Parameter | Value |
|---|---|
| The number of content | 200 |
| The size of content (Mbit) | 600 |
| The parameter of Zipf Distribution | 1 |
| The transmission power of vehicle (mW) | 200 |
| The transmission power of RSU (mW) | 2000 |
| The coverage range of vehicle (m) | 50 |
| The coverage range of RSU (m) | 100 |
| The length of lane (m) | 2000 |
| The width of lane (m) | 7 |
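To make the Zipf parameter in the table concrete, the sketch below computes the request probability of each content rank under the standard Zipf law, where the probability of the content at a given rank is proportional to that rank raised to the negative exponent. The function name is hypothetical, and how the paper maps content indices to ranks is not shown here.

```python
def zipf_popularity(num_contents, exponent):
    """Request probability per popularity rank (index 0 is rank 1) under a Zipf law."""
    if num_contents < 1:
        raise ValueError("the library needs at least one content")
    weights = [rank ** -float(exponent) for rank in range(1, num_contents + 1)]
    total = sum(weights)
    return [w / total for w in weights]


# Values from the parameter table: 200 contents, Zipf parameter 1.
popularity = zipf_popularity(200, 1)
```

With these settings the most popular content attracts roughly 17% of all requests and twice as many as the second most popular one, which is why caching a few popular contents can serve a large share of requests.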


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Yue, S.; Zhu, Q. A Mobility Prediction-Based Relay Cluster Strategy for Content Delivery in Urban Vehicular Networks. Appl. Sci. 2021, 11, 2157. https://doi.org/10.3390/app11052157
