Emotion Recognition: An Evaluation of ERP Features Acquired from Frontal EEG Electrodes

Abstract

1. Introduction

2. Recent Studies

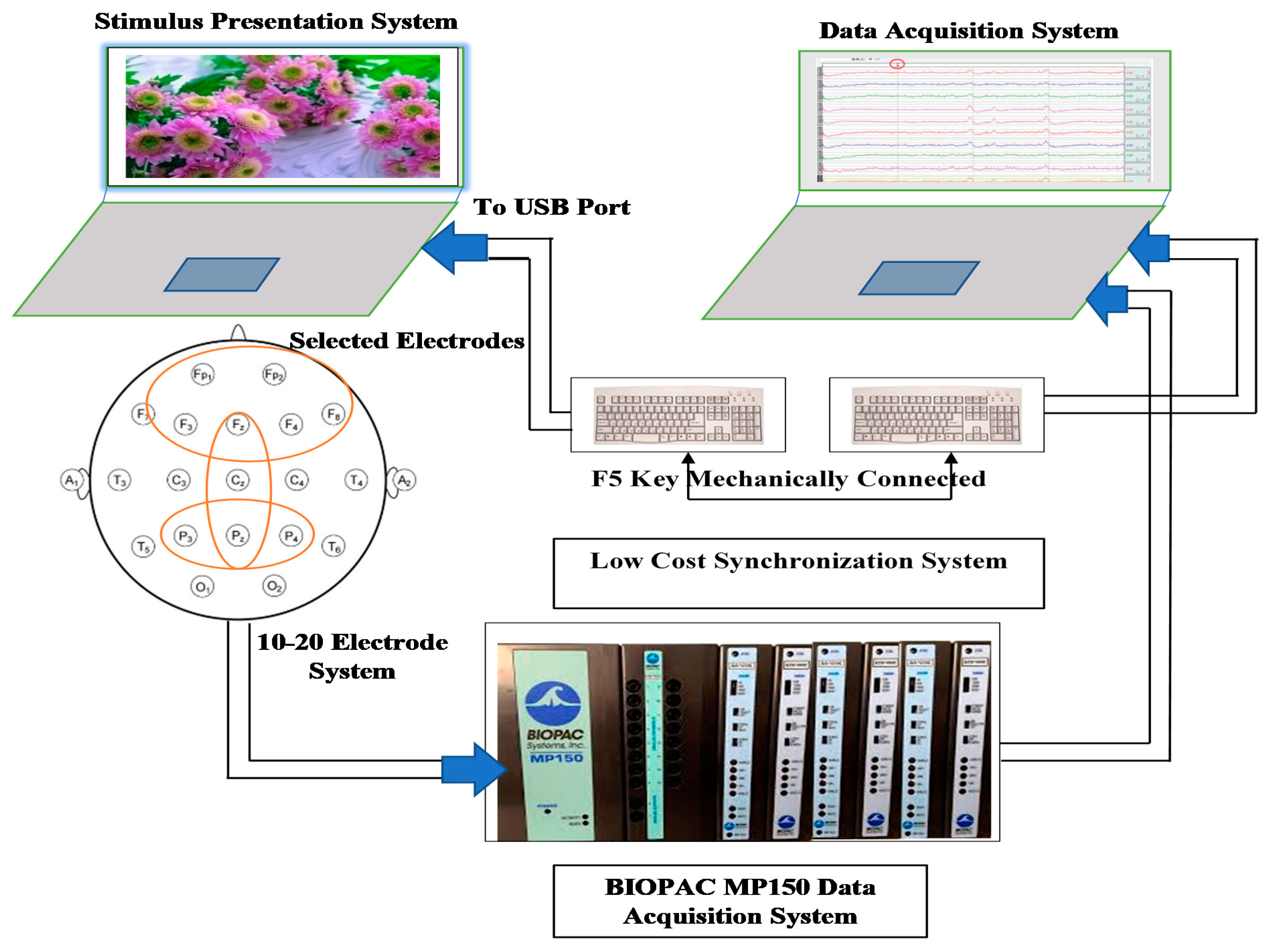

3. EEG Data Acquisition Methodology

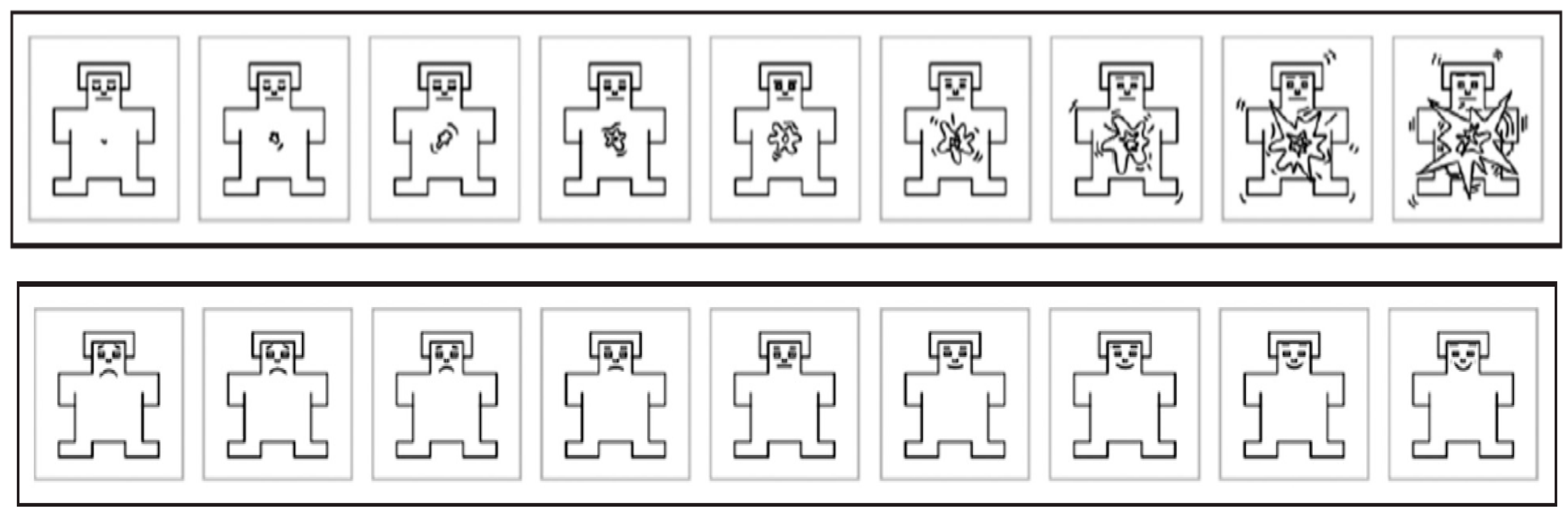

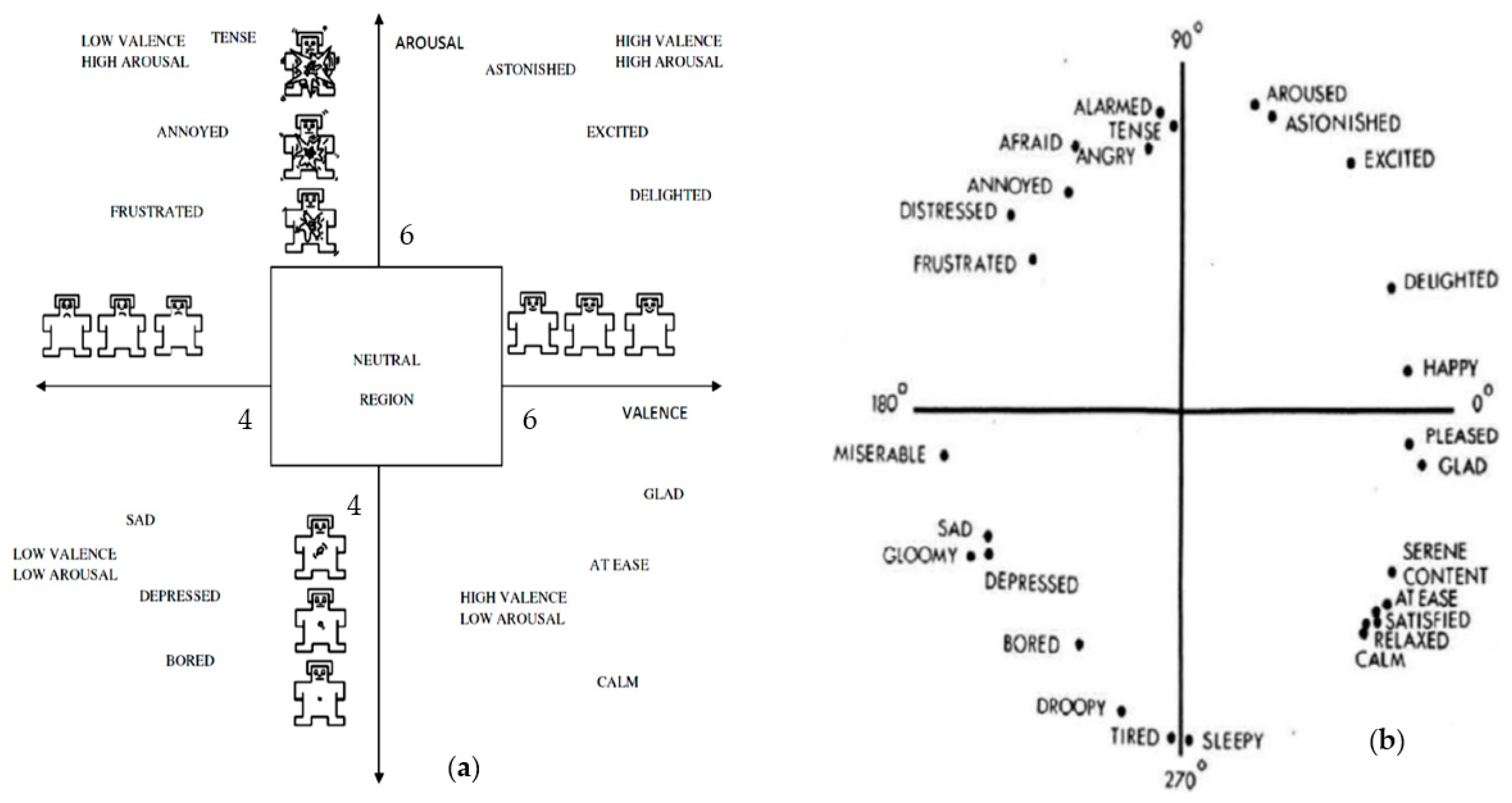

4. Selection of Emotion-Evoking Stimulus

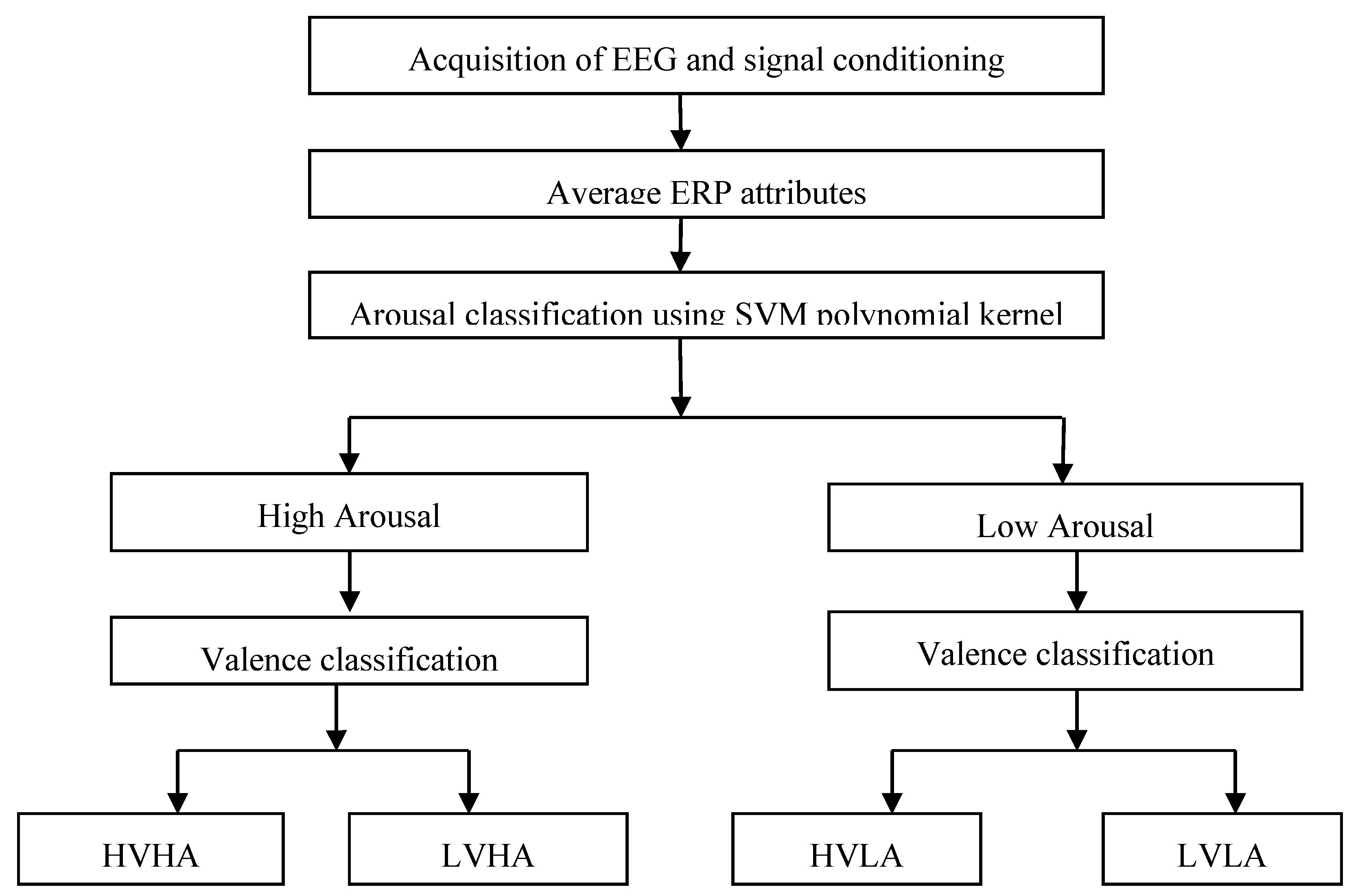

5. Preprocessing Operations on EEG and Feature Selection

6. Results and Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

1. Izard, C.E. The Many Meanings/Aspects of Emotion: Definitions, Functions, Activation, and Regulation. Emot. Rev. 2010, 2, 363–370.
2. Widen, S.C.; Russell, J.A. Descriptive and Prescriptive Definitions of Emotion. Emot. Rev. 2010, 2, 377–378.
3. Moors, A. Theories of Emotion Causation: A Review. Cogn. Emot. 2009, 23, 625–662.
4. Lang, P.J. Emotion and Motivation: Toward Consensus Definitions and a Common Research Purpose. Emot. Rev. 2010, 2, 229–233.
5. LeDoux, J.; Phelps, L.; Alberini, C. What We Talk about When We Talk about Emotions. Cell 2016, 167, 1443–1445.
6. Panksepp, J. Toward a General Psychobiological Theory of Emotions. Behav. Brain Sci. 1982, 5, 407–422.
7. Scherer, K.R. Emotion. In Introduction to Social Psychology. A European Perspective, 3rd ed.; Hewstone, M., Stroebe, W., Jonas, K., Eds.; Blackwell: Oxford, UK, 2001; pp. 151–191.
8. Breckler, S.; Wiggins, E. Emotional responses and the affective component of attitude. J. Soc. Behav. Personal. 1993, 8, 282.
9. Berscheid, E. The emotion-in-relationships model: Reflections and. In Memories, Thoughts, Emotions Essays Honor George Mandler; Lawrence Erlbaum Associates, Inc.: Hillsdale, NJ, USA, 1991; pp. 323–335.
10. Gottman, J.M. What Predicts Divorce? The Relationship between Marital Processes and Marital Outcomes; Psychology Press: East Sussex, UK, 2014.
11. Brody, L. Gender, Emotion, and the Family; Harvard University Press: Cambridge, MA, USA, 2009.
12. Rolls, E.T. Emotion and Decision-Making Explained; OUP Oxford: Oxford, UK, 2013.
13. Russell, J.A. Core Affect and the Psychological Construction of Emotion. Psychol. Rev. 2003, 110, 145.
14. Barrett, L.F.; Mesquita, B.; Ochsner, K.N.; Gross, J.J. The Experience of Emotion. Annu. Rev. Psychol. 2007, 58, 373–403.
15. Usman, S.M.; Khalid, S.; Bashir, Z. Epileptic Seizure Prediction Using Scalp Electroencephalogram Signals. Biocybern. Biomed. Eng. 2021, 41, 211–220.
16. Tzimourta, K.D.; Christou, V.; Tzallas, A.T.; Giannakeas, N.; Astrakas, L.G.; Angelidis, P.; Tsalikakis, D.; Tsipouras, M.G. Machine Learning Algorithms and Statistical Approaches for Alzheimer’s Disease Analysis Based on Resting-State EEG Recordings: A Systematic Review. Int. J. Neural Syst. 2021, 31, 2130002.
17. Cacioppo, J.T.; Tassinary, L.G. Inferring Psychological Significance from Physiological Signals. Am. Psychol. 1990, 45, 16–28.
18. Lang, P.J.; Greenwald, M.K.; Bradley, M.M.; Hamm, A.O. Looking at Pictures: Affective, Facial, Visceral, and Behavioral Reactions. Psychophysiology 1993, 30, 261–273.
19. Chanel, G.; Ansari-Asl, K.; Pun, T. Valence-arousal evaluation using physiological signals in an emotion recall paradigm. In Proceedings of the 2007 IEEE International Conference on Systems, Man and Cybernetics, Montréal, QC, Canada, 7–10 October 2007; IEEE: New York, NY, USA, 2007; pp. 2662–2667.
20. Savran, A.; Ciftci, K.; Chanel, G.; Mota, J.; Hong Viet, L.; Sankur, B.; Akarun, L.; Caplier, A.; Rombaut, M. Emotion detection in the loop from brain signals and facial images. In Proceedings of the eNTERFACE 2006 Workshop, Dubrovnik, Croatia, 17 July–11 August 2006.
21. Horlings, R.; Datcu, D.; Rothkrantz, L.J.M. Emotion recognition using brain activity. In Proceedings of the 9th International conference on Computer Systems and Technologies and Workshop for PhD Students in Computing, Gabrovo, Bulgaria, 12–13 June 2008; p. II-1.
22. Frantzidis, C.A.; Bratsas, C.; Papadelis, C.L.; Konstantinidis, E.; Pappas, C.; Bamidis, P.D. Toward Emotion Aware Computing: An Integrated Approach Using Multichannel Neurophysiological Recordings and Affective Visual Stimuli. IEEE Trans. Inf. Technol. Biomed. 2010, 14, 589–597.
23. Petrantonakis, P.C.; Hadjileontiadis, L.J. Emotion Recognition from Brain Signals Using Hybrid Adaptive Filtering and Higher Order Crossings Analysis. IEEE Trans. Affect. Comput. 2010, 1, 81–97.
24. Ekman, P.; Friesen, W.V. Facial Action Coding System: A Technique for the Measurement of Facial Movement; Consulting Psychologists Press: Palo Alto, CA, USA, 1978.
25. Jenke, R.; Peer, A.; Buss, M. Feature Extraction and Selection for Emotion Recognition from EEG. IEEE Trans. Affect. Comput. 2014, 5, 327–339.
26. Li, M.; Lu, B.-L. Emotion classification based on gamma-band EEG. In Proceedings of the 2009 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Minneapolis/St. Paul, MN, USA, 2–6 September 2009; IEEE: New York, NY, USA, 2009; pp. 1223–1226.
27. Lin, Y.-P.; Wang, C.-H.; Jung, T.-P.; Wu, T.-L.; Jeng, S.-K.; Duann, J.-R.; Chen, J.-H. EEG-Based Emotion Recognition in Music Listening. IEEE Trans. Biomed. Eng. 2010, 57, 1798–1806.
28. Murugappan, M.; Nagarajan, R.; Yaacob, S. Discrete Wavelet Transform Based Selection of Salient EEG Frequency Band for Assessing Human Emotions. Discret. Wavelet Transform. Appl. IntechOpen Ser. Malays. Kangar Malays. 2011, 33–52.
29. Liu, Y.; Sourina, O. Real-time fractal-based valence level recognition from EEG. In Transactions on Computational Science XVIII; Springer: Berlin, Germany, 2013; pp. 101–120.
30. Mühl, C.; Allison, B.; Nijholt, A.; Chanel, G. A Survey of Affective Brain Computer Interfaces: Principles, State-of-the-Art, and Challenges. Brain Comput. Interfaces 2014, 1, 66–84.
31. Zheng, W.-L.; Zhu, J.-Y.; Lu, B.-L. Identifying Stable Patterns over Time for Emotion Recognition from EEG. IEEE Trans. Affect. Comput. 2017, 10, 417–429.
32. Koelstra, S.; Muhl, C.; Soleymani, M.; Lee, J.-S.; Yazdani, A.; Ebrahimi, T.; Pun, T.; Nijholt, A.; Patras, I. DEAP: A Database for Emotion Analysis Using Physiological Signals. IEEE Trans. Affect. Comput. 2012, 3, 18–31.
33. Soroush, M.Z.; Maghooli, K.; Setarehdan, S.K.; Nasrabadi, A.M. Emotion Recognition through EEG Phase Space Dynamics and Dempster-Shafer Theory. Med. Hypotheses 2019, 127, 34–45.
34. Liang, Z.; Oba, S.; Ishii, S. An Unsupervised EEG Decoding System for Human Emotion Recognition. Neural Netw. 2019, 116, 257–268.
35. Kong, T.; Shao, J.; Hu, J.; Yang, X.; Yang, S.; Malekian, R. EEG-Based Emotion Recognition Using an Improved Weighted Horizontal Visibility Graph. Sensors 2021, 21, 1870.
36. Koelstra, S.; Patras, I. Fusion of Facial Expressions and EEG for Implicit Affective Tagging. Image Vis. Comput. 2013, 31, 164–174.
37. Soleymani, M.; Lichtenauer, J.; Pun, T.; Pantic, M. A Multimodal Database for Affect Recognition and Implicit Tagging. IEEE Trans. Affect. Comput. 2011, 3, 42–55.
38. Soleymani, M.; Asghari-Esfeden, S.; Fu, Y.; Pantic, M. Analysis of EEG Signals and Facial Expressions for Continuous Emotion Detection. IEEE Trans. Affect. Comput. 2015, 7, 17–28.
39. Sourina, O.; Liu, Y. A Fractal-Based Algorithm of Emotion Recognition from EEG Using Arousal-Valence Model. In International Conference on Bio-Inspired Systems and Signal Processing; Scitepress: Rome, Italy, 2011; Volume 2, pp. 209–214.
40. Hwang, S.; Hong, K.; Son, G.; Byun, H. Learning CNN Features from DE Features for EEG-Based Emotion Recognition. Pattern Anal. Appl. 2020, 3, 1323–1335.
41. Singh, M.I.; Singh, M. Development of Emotion Classifier Based on Absolute and Differential Attributes of Averaged Signals of Visually Stimulated Event Related Potentials. Trans. Inst. Meas. Control 2020, 42, 2057–2067.
42. Chanel, G.; Kronegg, J.; Grandjean, D.; Pun, T. Emotion assessment: Arousal evaluation using EEG’s and peripheral physiological signals. In International Workshop on Multimedia Content Representation, Classification and Security; Springer: Berlin, Germany, 2006; pp. 530–537.
43. Frantzidis, C.A.; Lithari, C.D.; Vivas, A.B.; Papadelis, C.L.; Pappas, C.; Bamidis, P.D. Towards emotion aware computing: A study of arousal modulation with multichannel event-related potentials, delta oscillatory activity and skin conductivity responses. In Proceedings of the 2008 8th IEEE International Conference on BioInformatics and BioEngineering, Athens, Greece, 8–10 October 2008; IEEE: New York, NY, USA, 2008; pp. 1–6.
44. Khalili, Z.; Moradi, M.H. Emotion recognition system using brain and peripheral signals: Using correlation dimension to improve the results of EEG. In Proceedings of the 2009 International Joint Conference on Neural Networks, Atlanta, GA, USA, 14–19 June 2009; IEEE: New York, NY, USA, 2009; pp. 1571–1575.
45. Jatupaiboon, N.; Pan-Ngum, S.; Israsena, P. Real-Time EEG-Based Happiness Detection System. Sci. World J. 2013, 2013, 618649.
46. Hidalgo-Muñoz, A.R.; López, M.M.; Santos, I.M.; Pereira, A.T.; Vázquez-Marrufo, M.; Galvao-Carmona, A.; Tomé, A.M. Application of SVM-RFE on EEG Signals for Detecting the Most Relevant Scalp Regions Linked to Affective Valence Processing. Expert Syst. Appl. 2013, 40, 2102–2108.
47. Liu, Y.-H.; Wu, C.-T.; Cheng, W.-T.; Hsiao, Y.-T.; Chen, P.-M.; Teng, J.-T. Emotion Recognition from Single-Trial EEG Based on Kernel Fisher’s Emotion Pattern and Imbalanced Quasiconformal Kernel Support Vector Machine. Sensors 2014, 14, 13361–13388.
48. Lin, Y.-P.; Jung, T.-P. Improving EEG-Based Emotion Classification Using Conditional Transfer Learning. Front. Hum. Neurosci. 2017, 11, 334.
49. Menezes, M.L.R.; Samara, A.; Galway, L.; Sant’Anna, A.; Verikas, A.; Alonso-Fernandez, F.; Wang, H.; Bond, R. Towards Emotion Recognition for Virtual Environments: An Evaluation of EEG Features on Benchmark Dataset. Pers. Ubiquitous Comput. 2017, 21, 1003–1013.
50. Song, T.; Zheng, W.; Song, P.; Cui, Z. EEG Emotion Recognition Using Dynamical Graph Convolutional Neural Networks. IEEE Trans. Affect. Comput. 2018, 11, 532–541.
51. Lang, P.J.; Bradley, M.M.; Cuthbert, B.N. International Affective Picture System (IAPS): Affective Ratings of Pictures and Instruction Manual; University of Florida: Gainesville, FL, USA, 2008; Tech Rep A-8.
52. Singh, M.I.; Singh, M. Development of Low-Cost Event Marker for EEG-Based Emotion Recognition. Trans. Inst. Meas. Control 2015, 39, 642–652.
53. Russell, J.A. A Circumplex Model of Affect. J. Pers. Soc. Psychol. 1980, 39, 1161.
54. Stanley, D.J.; Meyer, J.P. Two-Dimensional Affective Space: A New Approach to Orienting the Axes. Emotion 2009, 9, 214–237.
55. Singh, M.I.; Singh, M. Development of a Real Time Emotion Classifier Based on Evoked EEG. Biocybern. Biomed. Eng. 2017, 37, 498–509.
56. Russell, J.A.; Barrett, L.F. Core Affect, Prototypical Emotional Episodes, and Other Things Called Emotion: Dissecting the Elephant. J. Pers. Soc. Psychol. 1999, 76, 805.
57. Watson, D.; Wiese, D.; Vaidya, J.; Tellegen, A. The Two General Activation Systems of Affect: Structural Findings, Evolutionary Considerations, and Psychobiological Evidence. J. Pers. Soc. Psychol. 1999, 76, 820–838.
58. Li, Y.; Zheng, W. Emotion Recognition and Regulation Based on Stacked Sparse Auto-Encoder Network and Personalized Reconfigurable Music. Mathematics 2021, 9, 593.
59. Anderson, D.J.; Adolphs, R. A Framework for Studying Emotions across Species. Cell 2014, 157, 187–200.

| S.No | Reference Number, Stimulus, Number of Subjects | Number of Classes | Physiological Signals | Classifier | Features | Results |
|---|---|---|---|---|---|---|
| 1 | [42], IAPS, 4 | 2 (Calm and Exciting) | EEG | FDA | Power and statistical features | 55% |
| | | | Physiological signals such as GSR, Plethysmograph, Respiration and Temperature | | | 53% |
| | | | Fusion of signals | | | 54% |
| | | 2 (Calm and Exciting) | EEG, Physiological signals and fusion of the two | NB | | 50–54% |
| 2 | [21], IAPS, eNTERFACE 2006 data and 10 other subjects | 5 along valence and arousal axis | EEG | SVM | ERD/ERS, cross correlation, peak frequency and Hjorth parameters | Valence: 31%, Arousal: 32% |
| | | | | ANN | | Valence: 31%, Arousal: 28% |
| | | | | NB | | Valence: 29%, Arousal: 35% |
| | | 3 | | SVM | | Valence: 37%, Arousal: 49% |
| | | 2 | | | | Valence: 72%, Arousal: 68% |
| 3 | [43], IAPS, 26 | 4 (Joy, Fear, Happiness and Melancholy) | EEG and GSR | ANN | ERP features and the GSR duration | 80%, 100%, 80%, 70% |
| 4 | [44], IAPS, eNTERFACE 2006 data | 3 (Calm, Negatively Excited and Positively Excited) | EEG | QDC | Statistical features and power in 10 frequency bands ranging from 0.25 Hz to 2.75 Hz | 63.33–66.66% |
| | | | Peripheral signals such as GSR, Temperature, B.P. and Respiration | | | 55–51.66% |
| | | | EEG+Peripheral (without correlation dimension) | | | 61.8–62.2% |
| | | | EEG (combination with correlation dimension) | | | 66.66–76.66% |
| 5 | [22], IAPS, 28 | 4 (HVHA, LVHA, HVLA, LVLA) | EEG | MD | ERP and Event related oscillation | 79.46% |
| | | | | SVM | | 81.25% |
| 6 | [23], PFA database | 6 (Happy, Sad, Anger, Fear, Disgust and Surprise) | EEG | SVM polynomial, QDA, k-NN, MD | HOC | 85.17% using SVM on attributes of all 3 channels (best average accuracy) |
| 7 | [28], Visual and Audio stimuli | 5 (Happy, Fear, Neutral, Surprise and Disgust) | EEG | KNN | Entropy and Power Ratios | 70–93% |
| | | | | LDA | | 68–92% |
| 8 | [32], Music Videos, 32 | 2 along Arousal (Low Arousal/High Arousal), 2 along Valence (Low Valence/High Valence), (Low Liking/High Liking) | EEG | GNB | Spectral Power features from EEG | Arousal: 62%, Valence: 57.6%, Liking: 55.4% |
| | | | Peripheral Signals such as EMG, GSR, Temperature, BVP and Respiration, EOG and ECG | | Statistical features such as average, average of derivative, band energy ratio and standard deviation, etc. | Arousal: 57%, Valence: 62.7%, Liking: 59.1% |
| | | | MCA | | MFCC, pitch, and zero crossing rate | Arousal: 65.1%, Valence: 61.8%, Liking: 67.7% |
| | | | Fusion | | Fusion of all three | Arousal: 61.6%, Valence: 64.7%, Liking: 61.8% |
| 9 | [37], Hollywood Videos, 27 | 3 along Arousal (Calm, Medium Arousal and Excited/Activated), 3 along Valence (Unpleasant, Neutral Valence and Pleasant) | EEG | SVM RBF Kernel | Spectral features from different frequency bands | Arousal: 52.4%, Valence: 57% |
| | | | Peripheral Signals such as ECG, GSR, respiration amplitude, and skin temperature | | HRV, standard deviation of beat interval change per respiratory cycle, average skin resistance, band energy ratio, mean of derivative, range, spectral power in bands, etc. | Arousal: 46.2%, Valence: 45.5% |
| | | | Eye Gaze | | Features from pupil diameter, gaze distance, eye blinking and gaze coordinates such as average, standard deviation, spectral power in different bands, skewness, blink depth approach time ratio, etc. | Arousal: 63.5%, Valence: 68.8% |
| | | | Fusion | | Fusion EEG and Gaze | Arousal: 67.7%, Valence: 76.1% |
| 10 | [45], GAPED pictures and classical music, 10 | 2 (Happy and Unhappy) | EEG | | PSD | Subject independent: 63.67%, Subject dependent: 70.55% (average accuracies) |
| 11 | [46], IAPS, 26 | 2 (LVHA and HVHA) | EEG | SVM | Power | 96.15%–100% (Best with RFE) |
| 12 | [36], MAHNOB HCI database, 24 | 2 along Arousal (Low Arousal/High Arousal), 2 along Valence (Low Valence/High Valence), (Low Control/High Control) | EEG | GNB | Power spectrum density features | With ICA: Arousal: 66%, Valence: 71.5%, Control: 67.5% |
| | | | | | | With RFE: Arousal: 67.5%, Valence: 70%, Control: 63.5% |
| | | | Facial Expression Features | | Action units activations | With ICA: Arousal: 65%, Valence: 64.5%, Control: 64.5% |
| | | | | | | With RFE: Arousal: 67.5%, Valence: 64%, Control: 62% |
| | | | Fusion of modalities and fusion at decision level | | Fusion | Arousal: 72.5%, Valence: 74%, Control: 73% (Best Results) |
| 13 | [25], IAPS, 16 | 5 (Happy, Curious, Angry, Sad and Quiet) | EEG | QDA with diagonal covariance estimates | Time domain features such as power, mean, S.D., HOC, Hjorth Features, NSI, FD. Frequency domain features such as Band power, Entropy and Power ratios, etc. | 32–43% (Average of 5 feature selection techniques) |
| 14 | [47], IAPS, images, 10 | 2 (Low Arousal/High Arousal and Low Valence/High Valence) | EEG | (IQK-SVM), ISVM, kNN, SVM | PSD with feature reduction techniques PCA, LDA, KFDA(KFEP) Kernel PCA | Arousal: 84.8%, Valence: 82.7% (Best average accuracies using IQK-SVM) |
| 15 | [38], MAHNOB HCI database, 28 | Valence | EEG | LSTM-RNN, MLR, SVR and CCRF | Power spectrum density features | Best APCC-0.45 on LSTM-RNN with decision level fusion |
| | | | Facial Expression Features | | Distance of eyebrows, lips and nose | |
| | | | Fusion of modalities and fusion at decision level | | Fusion | |
| 16 | [48], music videos, 26 | 2 (Low Arousal/High Arousal and Low Valence/High Valence) | EEG | GNB | DLAT (Spectral power) | Without TL: Arousal 35.1% to 78.7%, Valence 21.8% to 85.4%; with TL: Arousal 64%, Valence 64% (Average results) |
| 17 | [49], DEAP database | 2 (Low Arousal/High Arousal and Low Valence/High Valence) | EEG | RF, SVM | Statistical parameters, Spectral band power and HOC | 3 classes using RF: Arousal 63.1%, Valence 58.8%; 2 classes using RF: Arousal 74%, Valence 88.4%; 3 classes using SVM: Arousal 59.7%, Valence 55.1%; 2 classes using SVM: Arousal 57.2%, Valence 83.2% |
| 18 | [50], SEED, DREAMER | 2 with Dreamer Along Arousal, Valence and Dominance | EEG | DGCNN, DBN, SVM, GCNN | DE, PSD, DASM, RASM, DCAU | Subject independent (SEED data): 79.95%, best on DE; subject dependent best average accuracy on DREAMER dataset: Arousal 84.54%, Valence 86.23%, Dominance 85.02%; subject dependent best average accuracy on SEED dataset: 90.40% |
| 19 | [34], DEAP database | 2 (Low Arousal/High Arousal, Low Valence/High Valence, Low Dominance/High Dominance, and Low Liking/High Liking) | EEG | Hypergraph partitioning in unsupervised manner | Frequency domain, Time domain and wavelet domain | Arousal: 62.34%, Valence: 56.25%, Dominance: 64.22%, Liking: 66.09% |
| 20 | [33], (2019), DEAP database | 4 (HVHA, HVLA, LVLA and LVHA) | EEG | MLP, Bayes, Fusion in conjunction with DST | Angle space construction from EEG phase, thereafter statistical attributes such as correlation dimension, fractal dimension, Largest Lyapunov Exponent, Entropy, etc., from angle variability as well as length variability | Accuracy greater than 80% for Bayes, MLP and DST; best accuracy: 82% with DST |
| 21 | [40], (2020), SEED data set | 3 (Neutral, Positive and Negative) | EEG | CNN, SVM polynomial, SVM RBF | DE, PSD | Best average subject dependent accuracy: 91.68%; SVM polynomial: 78.37%; SVM RBF: 81.16% |
| 22 | [41], IAPS, 14 | 4 (LVHA, HVHA, HVLA, LVLA) | EEG | SVM polynomial | Average ERP and Difference of Average ERP | 75% and 76.8% |
| 23 | [35], DEAP database | 2 (Arousal, Valence) | EEG | SVM, OF-KNN and DT | FWHVG, BWHVG and time domain features fusion | 97.53% and 97.75%; 98.12% and 98.06% (Fusion) (Best Results) |

| Time Bracket | ERP Acquired: Nomenclature for Maxima | ERP Acquired: Nomenclature for Minima | Latency Nomenclature: Value at Which Maxima Has Been Obtained | Latency Nomenclature: Value at Which Minima Has Been Obtained |
|---|---|---|---|---|
| 80–120 ms | P100 | N100 | PT100 | NT100 |
| 180–220 ms | P200 | N200 | PT200 | NT200 |
| 280–320 ms | P300 | N300 | PT300 | NT300 |
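The nomenclature in this table can be illustrated with a minimal sketch that scans each time bracket of an averaged ERP for its maximum (P), its minimum (N), and the latencies at which they occur (PT, NT). The function name `extract_erp_features`, the sampling rate, and the synthetic waveform are hypothetical; the sketch also assumes that sample 0 coincides with stimulus onset. It is not the authors' implementation.

```python
from typing import Dict, List, Tuple

# Time brackets (ms) and name suffixes, taken from the nomenclature table.
BRACKETS_MS: Dict[str, Tuple[float, float]] = {
    "100": (80.0, 120.0),
    "200": (180.0, 220.0),
    "300": (280.0, 320.0),
}


def extract_erp_features(erp: List[float], sampling_rate_hz: float) -> Dict[str, float]:
    """Return P/N amplitudes and PT/NT latencies (ms) for each time bracket.

    Assumption: erp[0] is the sample recorded at stimulus onset (0 ms).
    """
    ms_per_sample = 1000.0 / sampling_rate_hz
    features: Dict[str, float] = {}
    for suffix, (start_ms, end_ms) in BRACKETS_MS.items():
        # Indices of the samples whose latency lies inside the bracket.
        in_bracket = [
            i for i in range(len(erp))
            if start_ms <= i * ms_per_sample <= end_ms
        ]
        if not in_bracket:
            raise ValueError(f"no samples fall inside {start_ms}-{end_ms} ms")
        i_max = max(in_bracket, key=lambda i: erp[i])
        i_min = min(in_bracket, key=lambda i: erp[i])
        features[f"P{suffix}"] = erp[i_max]                  # maximum amplitude
        features[f"N{suffix}"] = erp[i_min]                  # minimum amplitude
        features[f"PT{suffix}"] = i_max * ms_per_sample      # latency of maximum
        features[f"NT{suffix}"] = i_min * ms_per_sample      # latency of minimum
    return features


# Synthetic averaged ERP sampled at a hypothetical 1000 Hz (1 ms per sample).
erp = [0.0] * 400
erp[100], erp[110] = 5.0, -3.0
erp[205], erp[190] = 2.0, -1.5
erp[300], erp[315] = 4.0, -2.0
features = extract_erp_features(erp, sampling_rate_hz=1000.0)
```

Each electrode would yield twelve such values (four per bracket); how many of them are retained as classifier attributes is a separate feature-selection decision.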

| Time Bracket | Difference of ERP Used: Nomenclature | Latency Nomenclature: Value at Which Maxima Has Been Obtained | Latency Nomenclature: Value at Which Minima Has Been Obtained |
|---|---|---|---|
| 80–120 ms | P100-N100 | PT100 | NT100 |
| 180–220 ms | P200-N200 | PT200 | NT200 |
| 280–320 ms | P300-N300 | PT300 | NT300 |
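As a rough illustration of the difference attributes, the sketch below reads P100-N100 as the maximum minus the minimum of the averaged ERP within the 80–120 ms bracket, and likewise for the other two brackets. That reading is an assumption drawn from the nomenclature alone, and the function name `erp_difference_features`, the 250 Hz sampling rate, and the waveform are hypothetical.

```python
import math
from typing import Dict, List, Tuple

# Difference attributes and their time brackets (ms), as named in the table.
DIFFERENCE_BRACKETS_MS: Dict[str, Tuple[float, float]] = {
    "P100-N100": (80.0, 120.0),
    "P200-N200": (180.0, 220.0),
    "P300-N300": (280.0, 320.0),
}


def erp_difference_features(erp: List[float], sampling_rate_hz: float) -> Dict[str, float]:
    """Return max-minus-min amplitude inside each bracket.

    Assumption: erp[0] is the sample recorded at stimulus onset (0 ms).
    """
    differences: Dict[str, float] = {}
    for name, (start_ms, end_ms) in DIFFERENCE_BRACKETS_MS.items():
        # First and last sample index lying inside the bracket.
        first = math.ceil(start_ms * sampling_rate_hz / 1000.0)
        last = math.floor(end_ms * sampling_rate_hz / 1000.0)
        window = erp[first:last + 1]
        if not window:
            raise ValueError(f"no samples fall inside {start_ms}-{end_ms} ms")
        differences[name] = max(window) - min(window)
    return differences


# Synthetic averaged ERP at a hypothetical 250 Hz (4 ms per sample, 400 ms long).
erp = [0.0] * 100
erp[25], erp[28] = 5.0, -3.0   # 100 ms and 112 ms
erp[50], erp[47] = 2.0, -1.5   # 200 ms and 188 ms
erp[75], erp[78] = 4.0, -2.0   # 300 ms and 312 ms
differences = erp_difference_features(erp, sampling_rate_hz=250.0)
```

A single peak-to-peak value per bracket is less sensitive to a constant baseline offset than the separate maximum and minimum amplitudes, which may be one reason to consider it as an alternative attribute.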

| Confusion Matrix on Frontal: Actual Classes (rows) vs. Predicted Classes (columns) | LVHA | HVHA | HVLA | LVLA |
|---|---|---|---|---|
| LVHA | 22 | 2 | 0 | 1 |
| HVHA | 1 | 23 | 1 | 0 |
| HVLA | 1 | 3 | 21 | 0 |
| LVLA | 6 | 0 | 2 | 17 |

| Error Analysis | Sensitivity (%) | Specificity (%) | Precision (%) | Negative Predictive Value (%) | F1 Score (%) |
|---|---|---|---|---|---|
| LVHA | 88.0 | 89.3 | 73.3 | 95.7 | 80.0 |
| HVHA | 92.0 | 93.3 | 82.1 | 97.2 | 86.8 |
| HVLA | 84.0 | 96.0 | 87.5 | 94.7 | 85.7 |
| LVLA | 68.0 | 98.7 | 94.4 | 90.2 | 79.1 |
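The error-analysis figures follow from the confusion matrix through the standard one-vs-rest definitions of sensitivity, specificity, precision, negative predictive value, and F1 score. The sketch below recomputes them for the first confusion matrix (rows are actual classes, columns are predicted classes); the function name `per_class_metrics` is hypothetical, and the code is offered as a check on the arithmetic rather than as the authors' procedure.

```python
from typing import Dict, List

LABELS = ["LVHA", "HVHA", "HVLA", "LVLA"]

# First confusion matrix: rows are actual classes, columns are predicted classes.
CONFUSION = [
    [22, 2, 0, 1],
    [1, 23, 1, 0],
    [1, 3, 21, 0],
    [6, 0, 2, 17],
]


def per_class_metrics(matrix: List[List[int]], labels: List[str]) -> Dict[str, Dict[str, float]]:
    """One-vs-rest metrics per class, in percent, rounded to one decimal.

    Assumes every class has at least one actual and one predicted instance,
    so no denominator is zero.
    """
    total = sum(sum(row) for row in matrix)
    metrics: Dict[str, Dict[str, float]] = {}
    for i, label in enumerate(labels):
        tp = matrix[i][i]
        fn = sum(matrix[i]) - tp                   # actual class i, predicted otherwise
        fp = sum(row[i] for row in matrix) - tp    # predicted class i, actually otherwise
        tn = total - tp - fn - fp
        sensitivity = tp / (tp + fn)
        specificity = tn / (tn + fp)
        precision = tp / (tp + fp)
        npv = tn / (tn + fn)
        f1 = 2 * precision * sensitivity / (precision + sensitivity)
        metrics[label] = {
            "sensitivity": round(100 * sensitivity, 1),
            "specificity": round(100 * specificity, 1),
            "precision": round(100 * precision, 1),
            "npv": round(100 * npv, 1),
            "f1": round(100 * f1, 1),
        }
    return metrics


metrics = per_class_metrics(CONFUSION, LABELS)
for label in LABELS:
    print(label, metrics[label])
```

Run on the matrix above, the rounded values agree with the four rows of the error-analysis table, for example 88.0% sensitivity and 73.3% precision for LVHA.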

| Confusion Matrix: Actual Classes (rows) vs. Predicted Classes (columns) | LVHA | HVHA | HVLA | LVLA |
|---|---|---|---|---|
| LVHA | 15 | 3 | 0 | 2 |
| HVHA | 1 | 17 | 2 | 0 |
| HVLA | 1 | 3 | 14 | 2 |
| LVLA | 0 | 4 | 2 | 14 |

| Error Analysis | Sensitivity (%) | Specificity (%) | Precision (%) | Negative Predictive Value (%) | F1 Score (%) |
|---|---|---|---|---|---|
| LVHA | 75.0 | 96.7 | 88.2 | 92.1 | 81.1 |
| HVHA | 85.0 | 83.3 | 63.0 | 94.3 | 72.3 |
| HVLA | 70.0 | 93.3 | 77.8 | 90.3 | 73.7 |
| LVLA | 70.0 | 93.3 | 77.8 | 90.3 | 73.7 |

| Confusion Matrix: Actual Classes (rows) vs. Predicted Classes (columns) | LVHA | HVHA | HVLA | LVLA |
|---|---|---|---|---|
| LVHA | 20 | 2 | 1 | 2 |
| HVHA | 5 | 16 | 4 | 0 |
| HVLA | 3 | 4 | 15 | 3 |
| LVLA | 4 | 3 | 2 | 16 |

| Error Analysis | Sensitivity (%) | Specificity (%) | Precision (%) | Negative Predictive Value (%) | F1 Score (%) |
|---|---|---|---|---|---|
| LVHA | 80.0 | 84.0 | 62.5 | 92.6 | 70.2 |
| HVHA | 64.0 | 88.0 | 64.0 | 88.0 | 64.0 |
| HVLA | 60.0 | 90.7 | 68.2 | 87.2 | 63.8 |
| LVLA | 64.0 | 93.3 | 76.2 | 88.6 | 69.6 |

| Confusion Matrix on Frontal Electrodes: Actual Classes (rows) vs. Predicted Classes (columns) | LVHA | HVHA | HVLA | LVLA |
|---|---|---|---|---|
| LVHA | 21 | 0 | 2 | 2 |
| HVHA | 2 | 18 | 5 | 0 |
| HVLA | 0 | 3 | 18 | 4 |
| LVLA | 1 | 1 | 6 | 17 |

| Error Analysis | Sensitivity (%) | Specificity (%) | Precision (%) | Negative Predictive Value (%) | F1 Score (%) |
|---|---|---|---|---|---|
| LVHA | 84.0 | 96.0 | 87.5 | 94.7 | 85.7 |
| HVHA | 72.0 | 94.7 | 81.8 | 91.0 | 76.6 |
| HVLA | 72.0 | 82.7 | 58.1 | 89.9 | 64.3 |
| LVLA | 68.0 | 92.0 | 73.9 | 89.6 | 70.8 |

| Comparative Analysis | Features Used, Number of Selected Attributes (NoA) | Possibility of Testing on Single EEG Electrode | Type of Classifier Used | Number of Classifiers Used, Polynomial Order Used | % Accuracy along Arousal, Valence | Mid-Range Accuracy | Accuracy/ Total Test Instances |

|---|---|---|---|---|---|---|---|

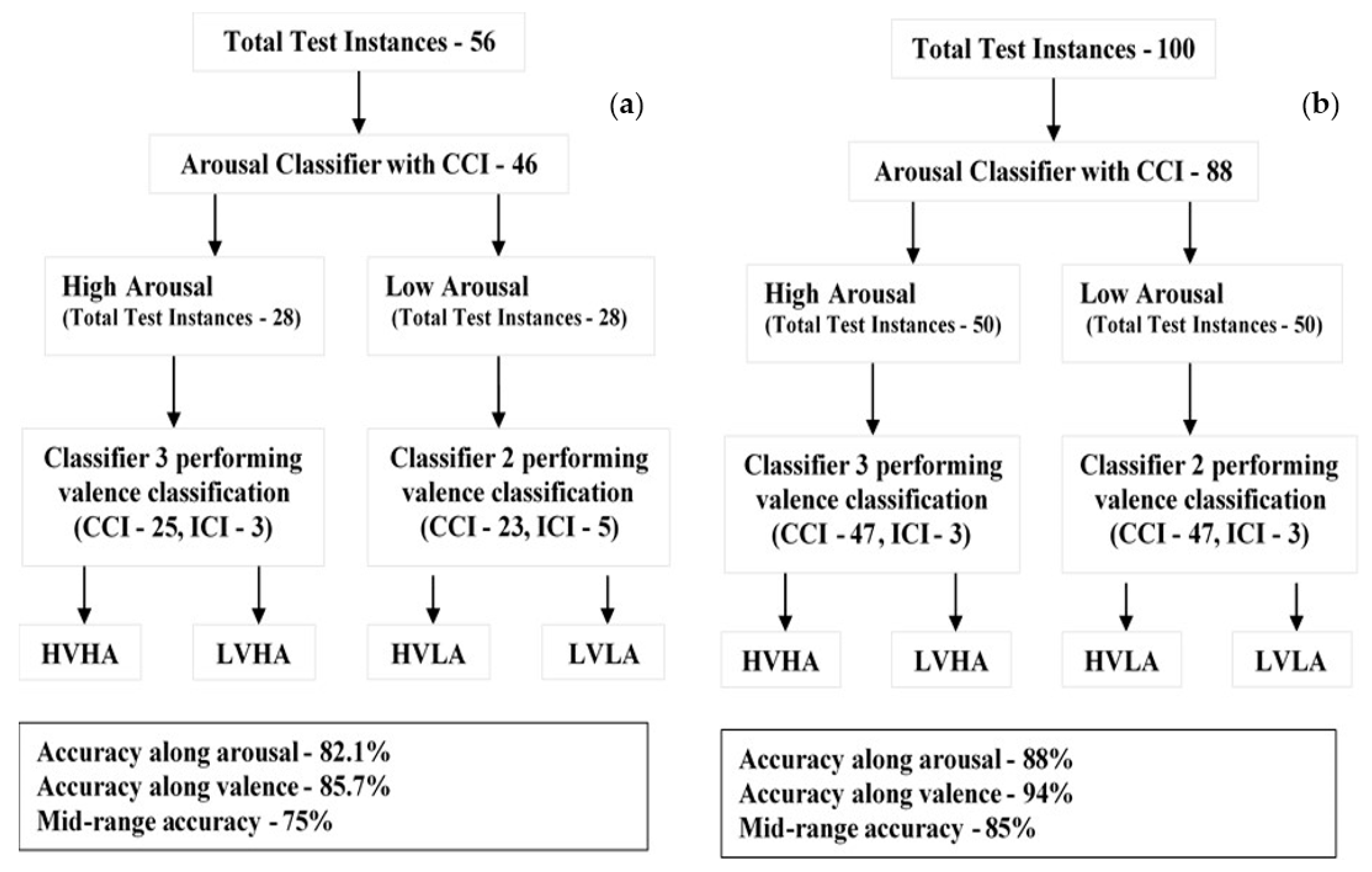

| Classifier proposed in existing methodology [22] | ERP and Event Related Oscillation, NoA between 16 and 22 | Not Possible | SVM linear, SVM polynomial SVM radial basis function | 3 (1 for Arousal, 2 for Valence), Arousal—3 Valence—3 and 2 | Arousal—82.1% Valence—85.7% | 75% | 81.3%/56 |

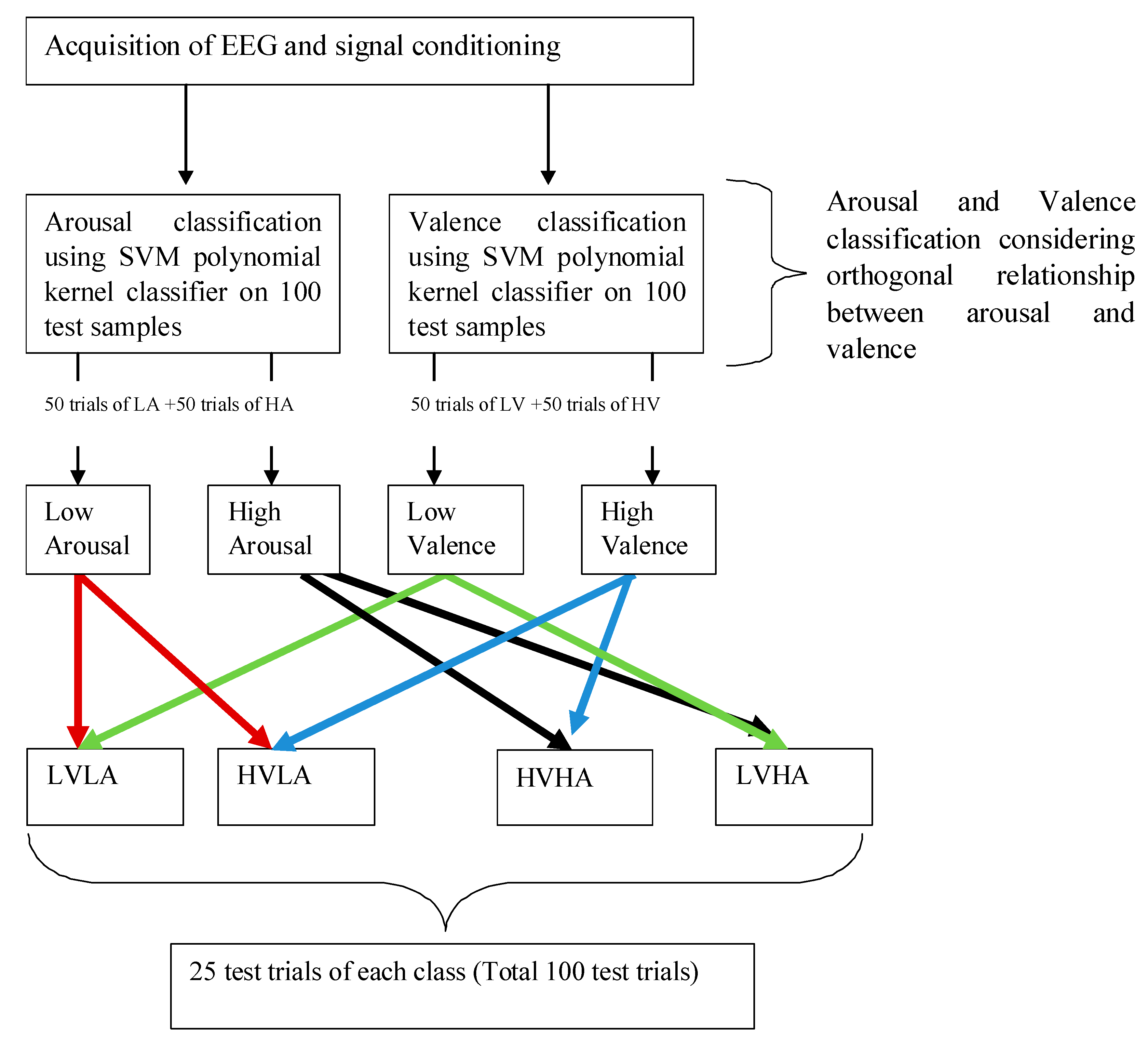

| Classifier 1 based on the three-classifier methodology as proposed in existing study | ERP, NoA less than or equal to 12 | Possible | SVM Polynomial | 3 (1 for Arousal, 2 for Valence), Arousal—6 Valence—4 and 6 | Arousal—88% Valence—94% | 85% | 83%/ 100 |

| Classifier 2 without Fp1 and Fp2 | ERP, NoA less than or equal to 12 | Possible | SVM Polynomial | 2 (1 for Arousal, 1 for Valence) | Arousal—85% Valence—83.75% | 76.25 | 75%/ 80 |

| Classifier 3 on average ERP with polynomial order 3 | ERP, NoA less than or equal to 12 | Possible | SVM Polynomial | 2 (1 for Arousal, 1 for Valence) | Arousal—79% Valence—81% | 69.5% | 67%/ 100 |

| Classifier 4 on difference of average ERP with polynomial order 3 | Difference of ERP, NoA less than or equal to 12 | Possible | SVM Polynomial | 2 (1 for Arousal, 1 for Valence) | Arousal—86% Valence—85% | 78% | 74%/ 100 |
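The arousal and valence accuracies reported for the four proposed classifiers are consistent with collapsing each four-class confusion matrix onto one axis: a prediction counts as correct along arousal if it shares the HA/LA half of the label with the actual class, and along valence if it shares the HV/LV half. This is an inference from the published numbers, not a procedure stated in the text. The sketch below checks it for the first two confusion matrices, which are paired with Classifier 1 and Classifier 2 here because their totals match the 83%/100 and 75%/80 entries; the function names are hypothetical.

```python
from typing import List

LABELS = ["LVHA", "HVHA", "HVLA", "LVLA"]

# Rows are actual classes, columns are predicted classes.
CLASSIFIER_1 = [[22, 2, 0, 1], [1, 23, 1, 0], [1, 3, 21, 0], [6, 0, 2, 17]]
CLASSIFIER_2 = [[15, 3, 0, 2], [1, 17, 2, 0], [1, 3, 14, 2], [0, 4, 2, 14]]


def axis_accuracy(matrix: List[List[int]], labels: List[str], axis: str) -> float:
    """Percent of instances whose predicted class agrees with the actual class
    along one axis ("valence" uses the first two letters, "arousal" the last two)."""
    if axis == "valence":
        level = lambda label: label[:2]   # "LV" or "HV"
    elif axis == "arousal":
        level = lambda label: label[2:]   # "LA" or "HA"
    else:
        raise ValueError("axis must be 'valence' or 'arousal'")
    total = sum(sum(row) for row in matrix)
    correct = sum(
        matrix[i][j]
        for i in range(len(labels))
        for j in range(len(labels))
        if level(labels[i]) == level(labels[j])
    )
    return 100.0 * correct / total


def overall_accuracy(matrix: List[List[int]]) -> float:
    """Percent of instances on the diagonal (exact four-class agreement)."""
    total = sum(sum(row) for row in matrix)
    return 100.0 * sum(matrix[i][i] for i in range(len(matrix))) / total


for name, matrix in (("Classifier 1", CLASSIFIER_1), ("Classifier 2", CLASSIFIER_2)):
    print(
        name,
        axis_accuracy(matrix, LABELS, "arousal"),
        axis_accuracy(matrix, LABELS, "valence"),
        overall_accuracy(matrix),
    )
```

The same collapse applied to the remaining two confusion matrices gives 79%/81% and 86%/85% along arousal/valence, matching the Classifier 3 and Classifier 4 rows.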

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation: Singh, Moon Inder, and Mandeep Singh. 2021. "Emotion Recognition: An Evaluation of ERP Features Acquired from Frontal EEG Electrodes" Applied Sciences 11, no. 9: 4131. https://doi.org/10.3390/app11094131