Convolutional Adaptive Network for Link Prediction in Knowledge Bases

Abstract

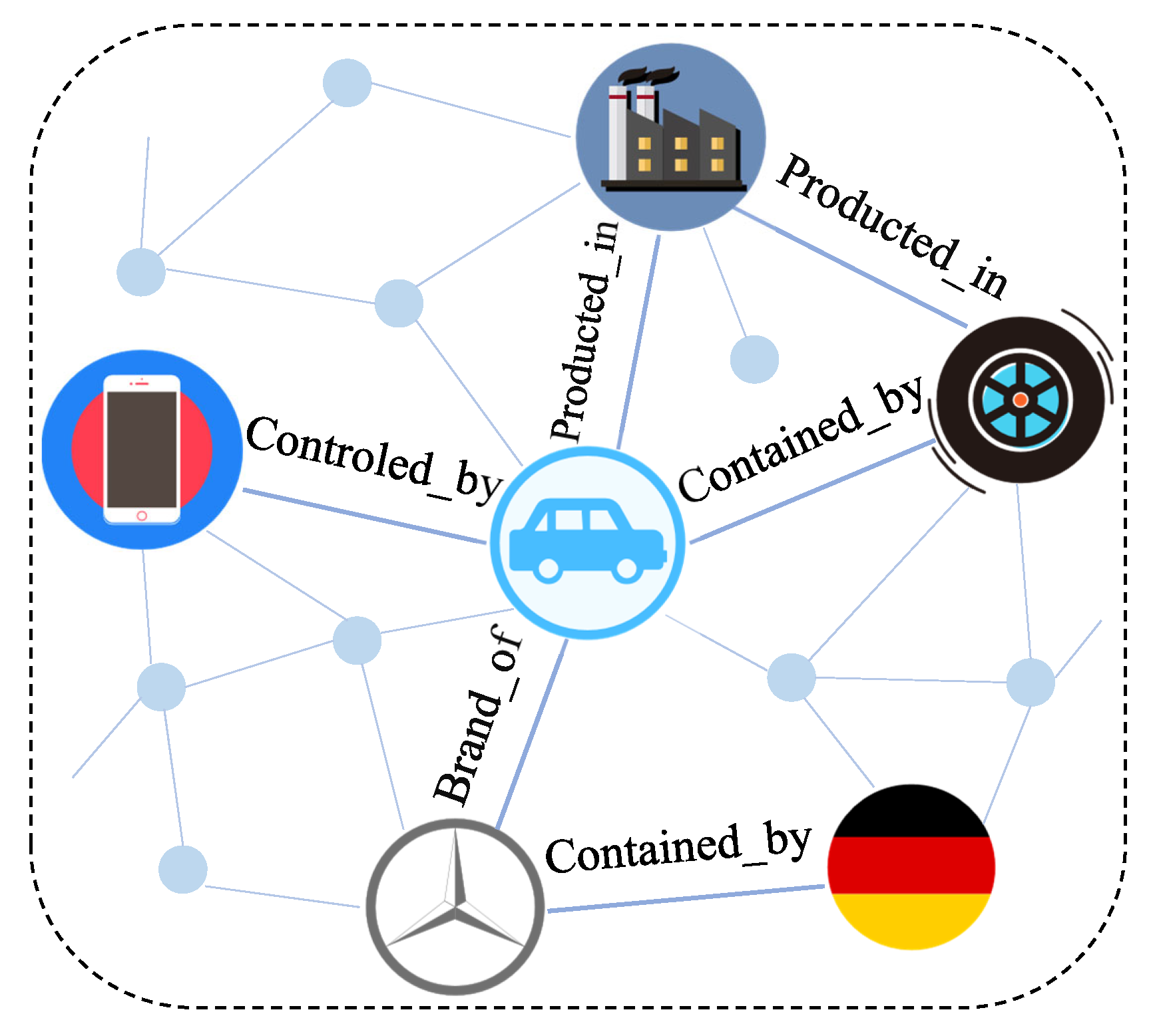

1. Introduction

- A new link prediction method called CANet is proposed for learning embedding representations of KBs. As a multi-layer network method, CANet takes advantage of the parameter-efficient and fast operators of CNNs, which yields more expressive features and mitigates the overfitting problem on large KBs.

- An interaction operation is adopted to generate multiple triplet-specific embeddings for relations and entities. Thus, for various triplet predictions, the operation can simulate the selection of different information. Furthermore, multiple interaction embeddings provide rich representations and generalization capabilities.

- To increase the representational capacity of CANet, an attention function is inserted into the convolutional operation. This enables the network to recalibrate features adaptively. By learning to use global content, CANet can selectively accentuate informative features while suppressing less useful ones.

- To evaluate the proposed approach, five real-world datasets were used in our experiments. In comparison to several previous approaches, the results show that CANet achieves state-of-the-art performance on standard evaluation metrics.
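The adaptive recalibration described in the third contribution can be illustrated with a small sketch. It assumes a squeeze-and-excitation-style gate (global average pooling, a small bottleneck, and a sigmoid), which is one common way to use global content to reweight channels; the function name `recalibrate` and the weights `w1` and `w2` are hypothetical and are not taken from the paper.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def recalibrate(feature_maps, w1, w2):
    """Reweight channels with a gate computed from global content.

    feature_maps: list of C channels, each an H x W list of lists.
    w1: R x C bottleneck weights, w2: C x R gate weights (both hypothetical).
    Returns the scaled feature maps and the per-channel gates.
    """
    # Squeeze: summarize each channel by its global average.
    pooled = [
        sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))
        for ch in feature_maps
    ]
    # Excitation: bottleneck with ReLU, then a sigmoid gate per channel.
    hidden = [max(0.0, sum(w * p for w, p in zip(row, pooled))) for row in w1]
    gates = [sigmoid(sum(w * h for w, h in zip(row, hidden))) for row in w2]
    # Scale: accentuate channels with large gates, suppress the others.
    scaled = [
        [[g * v for v in row] for row in ch]
        for g, ch in zip(gates, feature_maps)
    ]
    return scaled, gates


# Toy input: two 2 x 2 channels.
maps = [[[1.0, 1.0], [1.0, 1.0]], [[3.0, 3.0], [3.0, 3.0]]]
scaled, gates = recalibrate(maps, w1=[[1.0, 0.0]], w2=[[2.0], [-2.0]])
```

With these toy weights the first channel receives a gate above 0.5 and the second a gate below 0.5, so the first is accentuated relative to the second.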

2. Related Works

2.1. Translational Distance Methods

2.2. Semantic Matching Methods

2.3. Multi-Layer Network Methods

3. Proposed Method

3.1. Problem Formulation

3.2. Outline of CANet

3.3. Training Objective

4. Experimental Setup

4.1. Evaluation Metrics
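
The result tables report the mean reciprocal rank (MRR) and Hits@k. The following is a minimal sketch of how such rank-based metrics are commonly computed; the function names and the toy scores are illustrative assumptions and do not reproduce the authors' evaluation code (for example, no filtering of known triplets is applied here).

```python
def rank_of_target(scores, target_index):
    """Rank of the correct candidate: 1 + number of candidates scored strictly higher."""
    target_score = scores[target_index]
    return 1 + sum(1 for s in scores if s > target_score)


def mean_reciprocal_rank(ranks):
    """Average of 1/rank over all test queries."""
    return sum(1.0 / r for r in ranks) / len(ranks)


def hits_at_k(ranks, k):
    """Fraction of test queries whose correct candidate is ranked within the top k."""
    return sum(1 for r in ranks if r <= k) / len(ranks)


# Toy example: ranks of the correct entity for four test queries.
ranks = [1, 2, 4, 10]
mrr = mean_reciprocal_rank(ranks)
h1, h3, h10 = hits_at_k(ranks, 1), hits_at_k(ranks, 3), hits_at_k(ranks, 10)
```

Both metrics lie in [0, 1], and higher values indicate better link prediction.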

4.2. Datasets

- FB15k: FB15k [16] is a large-scale knowledge base and a subset of Freebase [7]. It comprises 1345 relations and 14,951 entities. As part of a broad knowledge base about the human world, FB15k describes facts about awards, athletics, movies, stars, and sports teams, among other things.

- FB15k-237: FB15k-237 [13] is a subset of FB15k. Because of inverse relations, almost all of the test triplets in FB15k can be predicted easily. The FB15k-237 dataset was therefore created by deleting the inverse relations. It contains 14,541 entities and 237 distinct relations.

- WN18: WN18 [16] is a knowledge base of lexical relations between words, and it is a subset of WordNet [8]. This dataset also contains many inverse relations. It has 18 relations and 40,943 entities. The entities represent word senses, and the relations define lexical relationships between them.

- WN18RR: Similarly to the FB15k-237 dataset, WN18RR [16] is a subset of WN18 that was obtained by removing the inverse relations. It has a total of 40,943 entities and 11 different relations.
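The leakage caused by inverse relations can be sketched as follows: a test triplet (h, r, t) is trivially predictable when the training set already links the same pair in the opposite direction. The function name and the toy triplets are hypothetical and serve only to illustrate the idea; they are not drawn from the datasets above.

```python
def leaked_by_inverse(train, test):
    """Return test triplets (h, r, t) for which train contains some (t, r', h)."""
    # Store each training pair in reversed order so lookup is a set membership test.
    reversed_pairs = {(t, h) for h, _, t in train}
    return [(h, r, t) for h, r, t in test if (h, t) in reversed_pairs]


# Toy triplets (hypothetical).
train = [
    ("dog", "hypernym", "animal"),
    ("paris", "capital_of", "france"),
]
test = [
    ("animal", "hyponym", "dog"),      # inverse of a training triplet
    ("france", "borders", "spain"),    # no reversed pair in train
]
leaked = leaked_by_inverse(train, test)
```

Here only the first test triplet is flagged, because its entity pair appears reversed in the training set.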

4.3. Comparison Methods

- TransE: TransE [16] is one of the most common link prediction models for knowledge bases. To model multi-relational data, TransE transforms entities and relations into embedding spaces and regards relations as a translation from the head entity to the tail entity.

- DistMult: DistMult [21] is one of the most commonly used semantic matching models. By comparing latent semantics in the embedding space, DistMult estimates the plausibility of a triplet.

- ComplEx: ComplEx [22] is an extension of DistMult. To handle both symmetric and antisymmetric relations, ComplEx embeds entities and relations in a complex-valued space instead of a real-valued one.

- R-GCN: R-GCN [12] is an extension of the graph convolutional network. It generalizes graph convolutional networks to handle the highly multi-relational data found in KBs.

- ConvE: ConvE [13] is a typical convolutional neural network-based model. It is a relatively simple multi-layer convolutional framework for link prediction.

- TorusE: TorusE [20] embeds relations and entities on a compact Lie group. It follows TransE and regards relations as translations. Because of its flexibility, it can handle large KBs.

- CANet: Convolutional adaptive network (CANet) is the method proposed in this paper. The weights of the convolutional kernels are set to be adaptive.
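To make the difference between the translational and semantic matching baselines concrete, the sketch below writes out the standard scoring functions of TransE, DistMult, and ComplEx on toy embeddings. The function names and vectors are illustrative assumptions; TransE is shown with the L2 norm, although the L1 norm is also used in practice.

```python
import math


def transe_score(h, r, t):
    """Negative L2 distance between h + r and t (higher is more plausible)."""
    return -math.sqrt(sum((hi + ri - ti) ** 2 for hi, ri, ti in zip(h, r, t)))


def distmult_score(h, r, t):
    """Trilinear product sum_i h_i * r_i * t_i; symmetric in h and t."""
    return sum(hi * ri * ti for hi, ri, ti in zip(h, r, t))


def complex_score(h, r, t):
    """Real part of sum_i h_i * r_i * conj(t_i) over complex embeddings."""
    return sum(hi * ri * ti.conjugate() for hi, ri, ti in zip(h, r, t)).real


# TransE: a perfect translation h + r = t gives the maximum score of 0.
perfect = transe_score([1.0, 0.0], [0.0, 1.0], [1.0, 1.0])

# DistMult cannot distinguish (h, r, t) from (t, r, h).
h, r, t = [1.0, 2.0], [0.5, -1.0], [3.0, 4.0]
dm_forward, dm_backward = distmult_score(h, r, t), distmult_score(t, r, h)

# ComplEx: a purely imaginary relation embedding yields an antisymmetric score.
hc, rc, tc = [1 + 2j], [1j], [3 - 1j]
cx_forward, cx_backward = complex_score(hc, rc, tc), complex_score(tc, rc, hc)
```

In this toy case the ComplEx scores for the two directions have opposite signs, which is what allows it to model antisymmetric relations that DistMult scores identically.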

4.4. Experimental Implementation

5. Results and Discussion

5.1. Experimental Results

- The proposed method surpassed the baseline methods in most instances, and CANet achieved state-of-the-art results on the majority of the metrics. This indicates that CANet can produce strong link prediction results by using the CNN’s parameter-efficient and fast operators. Relative to the best baseline on the FB15k-237, WN18RR, and YAGO3-10 datasets, CANet improved the MRR by 4.3%, 5.8%, and 14.5%, respectively, which supports the efficacy and applicability of our method.

- Semantic matching methods are simple to train and can learn to represent a knowledge base. However, because of their redundancy, they are highly susceptible to overfitting, which results in lower performance than that of the proposed approach.

- Because CANet generates convolutional kernel weights that adapt to each relation, it surpassed ConvE on nearly all metrics. Different relations can therefore produce different feature maps, which makes CANet better at identifying the differences between entities.
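The relative MRR improvements can be recomputed from the MRR columns of the result tables. The sketch below takes, for each dataset, CANet's MRR and the highest MRR among the listed baselines; the helper name `relative_gain` is an assumption made for illustration.

```python
def relative_gain(new, baseline):
    """Relative improvement of `new` over `baseline`, in percent."""
    return 100.0 * (new - baseline) / baseline


# MRR values read from the result tables: (best baseline, CANet).
mrr = {
    "FB15k-237": (0.325, 0.339),  # best baseline: ConvE
    "WN18RR": (0.452, 0.478),     # best baseline: TorusE
    "YAGO3-10": (0.440, 0.504),   # best baseline: ConvE
}

gains = {
    name: round(relative_gain(canet, best), 1)
    for name, (best, canet) in mrr.items()
}
```

This gives 4.3% on FB15k-237, 5.8% on WN18RR, and 14.5% on YAGO3-10.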

5.2. Influence of Kernel Size

5.3. Parameter Sensitivity

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Rosa, R.L.; Schwartz, G.M.; Ruggiero, W.V.; Rodríguez, D.Z. A Knowledge-Based Recommendation System That Includes Sentiment Analysis and Deep Learning. IEEE Trans. Ind. Inform. 2019, 15, 2124–2135.

- Wang, H.; Zhang, F.; Wang, J.; Zhao, M.; Li, W.; Xie, X.; Guo, M. Exploring High-Order User Preference on the Knowledge Graph for Recommender Systems. ACM Trans. Inf. Syst. 2019, 37, 1–26.

- Schneider, G.F.; Pauwels, P.; Steiger, S. Ontology-Based Modeling of Control Logic in Building Automation Systems. IEEE Trans. Ind. Inform. 2017, 13, 3350–3360.

- Engel, G.; Greiner, T.; Seifert, S. Ontology-Assisted Engineering of Cyber–Physical Production Systems in the Field of Process Technology. IEEE Trans. Ind. Inform. 2018, 14, 2792–2802.

- Zhou, H.; Young, T.; Huang, M.; Zhao, H.; Xu, J.; Zhu, X. Commonsense Knowledge Aware Conversation Generation with Graph Attention. In Proceedings of the International Joint Conferences on Artificial Intelligence, Stockholm, Sweden, 13–19 July 2018; pp. 4623–4629.

- Huang, X.; Zhang, J.; Li, D.; Li, P. Knowledge graph embedding based question answering. In Proceedings of the Twelfth ACM International Conference on Web Search and Data Mining, Melbourne, VIC, Australia, 11–15 February 2019; pp. 105–113.

- Bollacker, K.; Evans, C.; Paritosh, P.; Sturge, T.; Taylor, J. Freebase: A collaboratively created graph database for structuring human knowledge. In Proceedings of the 2008 ACM SIGMOD International Conference on Management of Data, Vancouver, BC, Canada, 9–12 June 2008; pp. 1247–1250.

- Miller, G.A. WordNet: A lexical database for English. Commun. ACM 1995, 38, 39–41.

- Suchanek, F.M.; Kasneci, G.; Weikum, G. Yago: A core of semantic knowledge. In Proceedings of the World Wide Web, Banff, AB, Canada, 8–12 May 2007; pp. 697–706.

- Wang, Q.; Mao, Z.; Wang, B.; Guo, L. Knowledge Graph Embedding: A Survey of Approaches and Applications. IEEE Trans. Knowl. Data Eng. 2017, 29, 2724–2743.

- Shi, B.; Weninger, T. ProjE: Embedding projection for knowledge graph completion. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; pp. 1236–1242.

- Schlichtkrull, M.; Kipf, T.N.; Bloem, P.; van den Berg, R.; Titov, I.; Welling, M. Modeling relational data with graph convolutional networks. In Proceedings of the 15th European Semantic Web Conference, Heraklion, Greece, 3–7 June 2018; pp. 593–607.

- Dettmers, T.; Minervini, P.; Stenetorp, P.; Riedel, S. Convolutional 2D Knowledge Graph Embeddings. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; pp. 1811–1818.

- Gong, J.; Wang, S.; Wang, J.; Feng, W.; Peng, H.; Tang, J.; Yu, P.S. Attentional Graph Convolutional Networks for Knowledge Concept Recommendation in MOOCs in a Heterogeneous View. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, Xi’an, China, 25–30 July 2020; pp. 79–88.

- Sakurai, R.; Yamane, S.; Lee, J. Restoring Aspect Ratio Distortion of Natural Images With Convolutional Neural Network. IEEE Trans. Ind. Inform. 2019, 15, 563–571.

- Bordes, A.; Usunier, N.; Garcia-Duran, A.; Weston, J.; Yakhnenko, O. Translating Embeddings for Modeling Multi-relational Data. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2013; pp. 2787–2795.

- Wang, Z.; Zhang, J.; Feng, J.; Chen, Z. Knowledge graph embedding by translating on hyperplanes. In Proceedings of the Twenty-Eighth AAAI Conference on Artificial Intelligence, Québec City, QC, Canada, 27–31 July 2014; pp. 1112–1119.

- Lin, Y.; Liu, Z.; Sun, M.; Liu, Y.; Zhu, X. Learning entity and relation embeddings for knowledge graph completion. In Proceedings of the Twenty-Ninth AAAI Conference on Artificial Intelligence, Austin, TX, USA, 25–30 January 2015; pp. 2181–2187.

- Ji, G.; He, S.; Xu, L.; Liu, K.; Zhao, J. Knowledge Graph Embedding via Dynamic Mapping Matrix. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing, Beijing, China, 26–31 July 2015; pp. 687–696.

- Ebisu, T.; Ichise, R. Generalized Translation-based Embedding of Knowledge Graph. IEEE Trans. Knowl. Data Eng. 2020, 32, 941–951.

- Yang, B.; Yih, W.-t.; He, X.; Gao, J.; Deng, L. Embedding Entities and Relations for Learning and Inference in Knowledge Bases. In Proceedings of the 3rd International Conference on Learning Representations, Banff, AB, Canada, 14–16 April 2014; pp. 1–12.

- Trouillon, T.; Welbl, J.; Riedel, S.; Gaussier, É.; Bouchard, G. Complex embeddings for simple link prediction. In Proceedings of the 33rd International Conference on Machine Learning, New York, NY, USA, 20–22 June 2016; pp. 2071–2080.

- Nickel, M.; Rosasco, L.; Poggio, T.A. Holographic Embeddings of Knowledge Graphs. In Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; pp. 1955–1961.

- Liu, H.; Wu, Y.; Yang, Y. Analogical inference for multi-relational embeddings. In Proceedings of the 34th International Conference on Machine Learning, Sydney, NSW, Australia, 6–11 August 2017; pp. 2168–2178.

- Guo, L.; Sun, Z.; Hu, W. Learning to Exploit Long-term Relational Dependencies in Knowledge Graphs. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 2505–2514.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Proceedings of the 26th Annual Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

- Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 7–9 July 2015; pp. 448–456.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. In Proceedings of the 3rd International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

- Paszke, A.; Gross, S.; Chintala, S.; Chanan, G.; Yang, E.; DeVito, Z.; Lin, Z.; Desmaison, A.; Antiga, L.; Lerer, A. Automatic differentiation in pytorch. In Advances in Neural Information Processing Systems; Curran Associates Inc.: Red Hook, NY, USA, 2017; pp. 1–4.

| Dataset | # Entities | # Relations | # Train Triplets | # Valid Triplets | # Test Triplets |
|---|---|---|---|---|---|
| FB15k | 14,951 | 1345 | 483,142 | 50,000 | 59,071 |
| FB15k-237 | 14,541 | 237 | 272,115 | 17,535 | 20,466 |
| WN18 | 40,943 | 18 | 141,442 | 5000 | 5000 |
| WN18RR | 40,943 | 11 | 86,835 | 3034 | 3134 |
| YAGO3-10 | 123,182 | 37 | 1,079,040 | 5000 | 5000 |

| Model | WN18 MRR | WN18 Hits@10 | WN18 Hits@3 | WN18 Hits@1 | FB15k MRR | FB15k Hits@10 | FB15k Hits@3 | FB15k Hits@1 |
|---|---|---|---|---|---|---|---|---|
| TransE | 0.454 | 0.934 | 0.823 | 0.089 | 0.380 | 0.641 | 0.472 | 0.231 |
| DistMult | 0.822 | 0.936 | 0.914 | 0.728 | 0.654 | 0.824 | 0.733 | 0.546 |
| ComplEx | 0.941 | 0.947 | 0.936 | 0.936 | 0.692 | 0.840 | 0.759 | 0.599 |
| R-GCN | 0.814 | 0.955 | 0.928 | 0.686 | 0.696 | 0.842 | 0.760 | 0.601 |
| ConvE | 0.943 | 0.956 | 0.946 | 0.935 | 0.657 | 0.831 | 0.723 | 0.558 |
| TorusE | 0.947 | 0.954 | 0.950 | 0.943 | 0.733 | 0.832 | 0.771 | 0.674 |
| CANet | 0.950 | 0.955 | 0.952 | 0.945 | 0.753 | 0.862 | 0.796 | 0.684 |

| Model | WN18RR MRR | WN18RR Hits@10 | WN18RR Hits@3 | WN18RR Hits@1 | FB15k-237 MRR | FB15k-237 Hits@10 | FB15k-237 Hits@3 | FB15k-237 Hits@1 |
|---|---|---|---|---|---|---|---|---|
| TransE | 0.182 | 0.444 | 0.295 | 0.027 | 0.257 | 0.420 | 0.284 | 0.174 |
| DistMult | 0.430 | 0.490 | 0.440 | 0.390 | 0.241 | 0.419 | 0.263 | 0.155 |
| ComplEx | 0.440 | 0.510 | 0.460 | 0.410 | 0.247 | 0.428 | 0.275 | 0.158 |
| R-GCN | - | - | - | - | 0.249 | 0.417 | 0.264 | 0.151 |
| ConvE | 0.430 | 0.520 | 0.440 | 0.400 | 0.325 | 0.501 | 0.356 | 0.237 |
| TorusE | 0.452 | 0.512 | 0.464 | 0.422 | 0.316 | 0.484 | 0.335 | 0.217 |
| CANet | 0.478 | 0.532 | 0.489 | 0.445 | 0.339 | 0.530 | 0.378 | 0.251 |

| Model | YAGO3-10 MRR | YAGO3-10 Hits@10 | YAGO3-10 Hits@3 | YAGO3-10 Hits@1 |
|---|---|---|---|---|
| DistMult | 0.340 | 0.540 | 0.380 | 0.240 |
| ComplEx | 0.360 | 0.550 | 0.400 | 0.260 |
| ConvE | 0.440 | 0.620 | 0.490 | 0.350 |
| CANet | 0.504 | 0.662 | 0.562 | 0.423 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hou, X.; Liu, Y.; Li, Z. Convolutional Adaptive Network for Link Prediction in Knowledge Bases. Appl. Sci. 2021, 11, 4270. https://doi.org/10.3390/app11094270
