Enhanced Image Captioning with Color Recognition Using Deep Learning Methods

Abstract

1. Introduction

2. Related Works

- An enhanced image captioning algorithm is proposed that generates a textual description of an image;

- The results provide not only the overall information of the image but also a detailed description of the scene, including the activity performed by each recognized object;

- Object color recognition is added so that more detailed information about each object can be identified, which yields a more accurate caption;

- The textual description of an image is delivered as audio through a text-to-speech module, which broadens the range of possible applications.

3. Methods

3.1. Object Detection

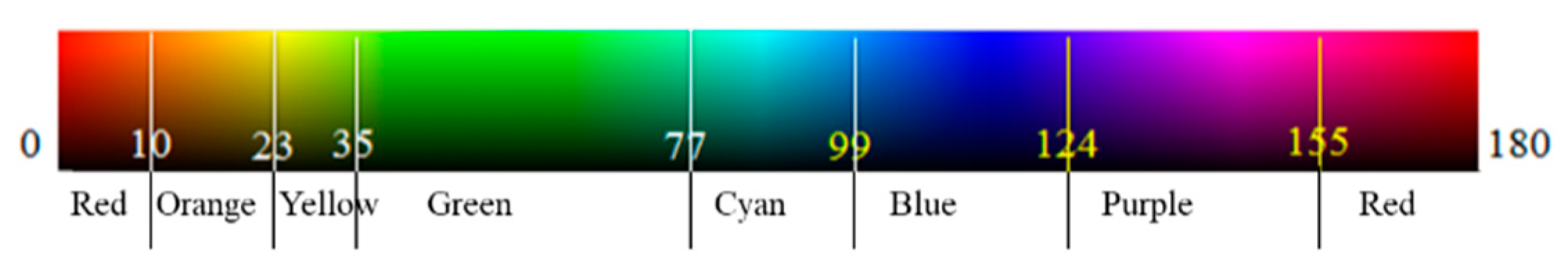

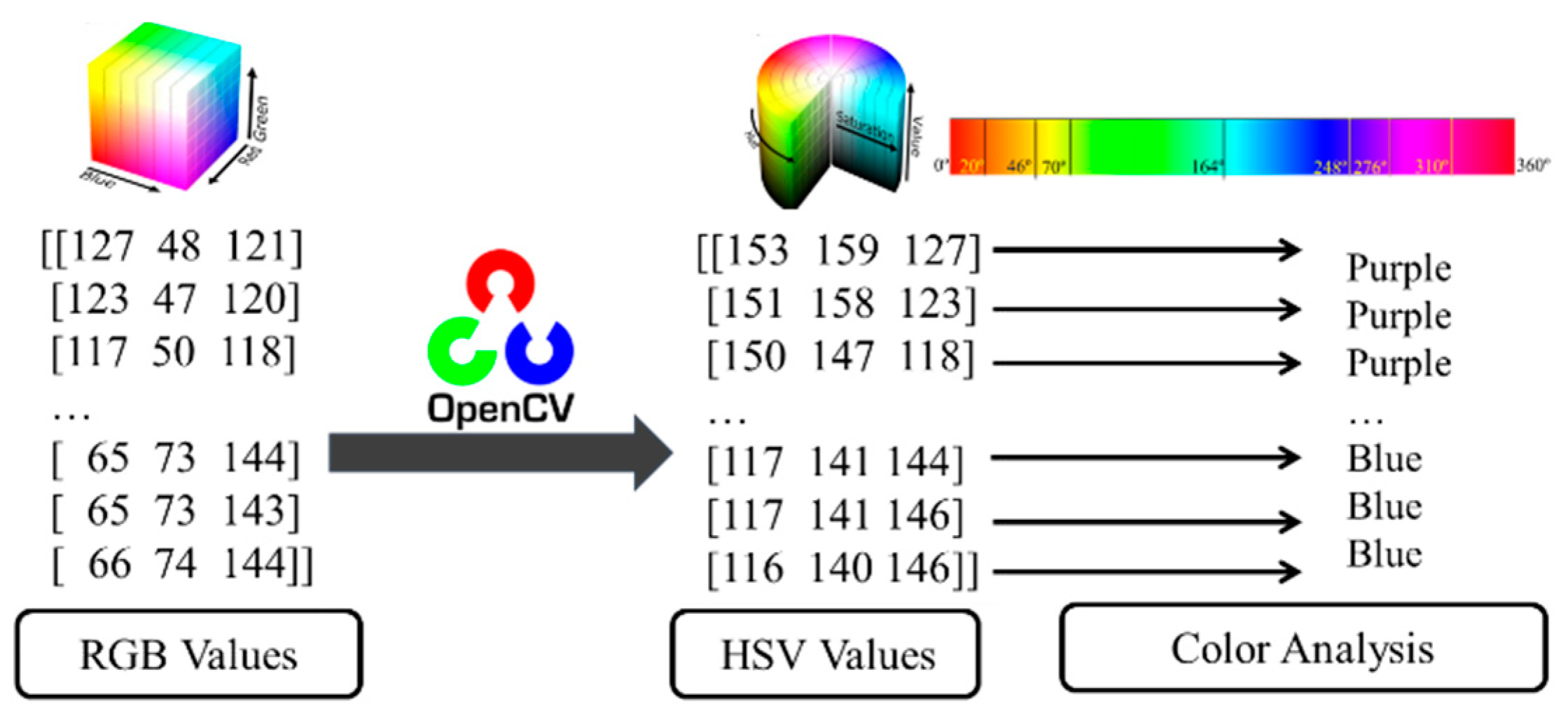

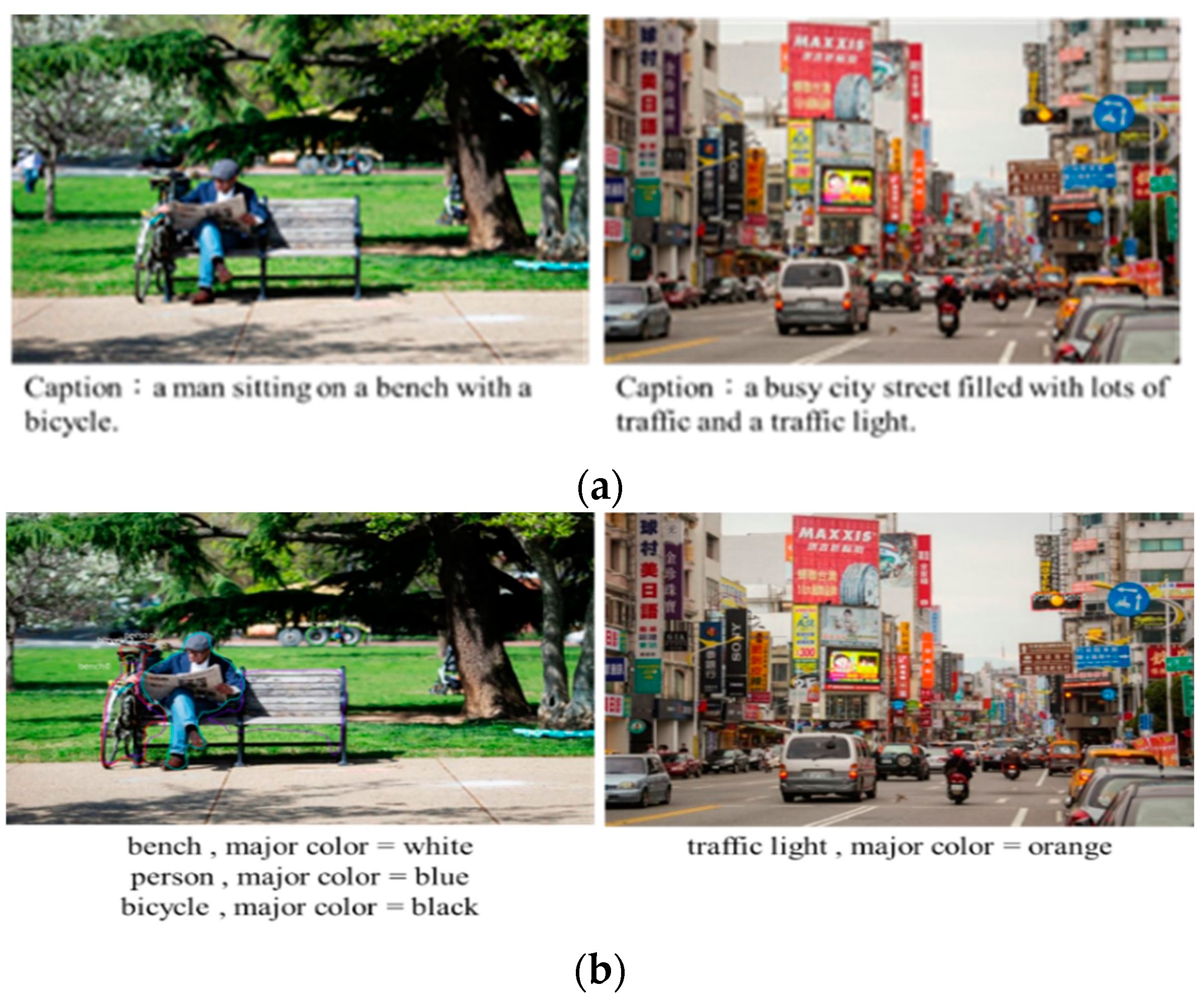

3.2. Color Analysis

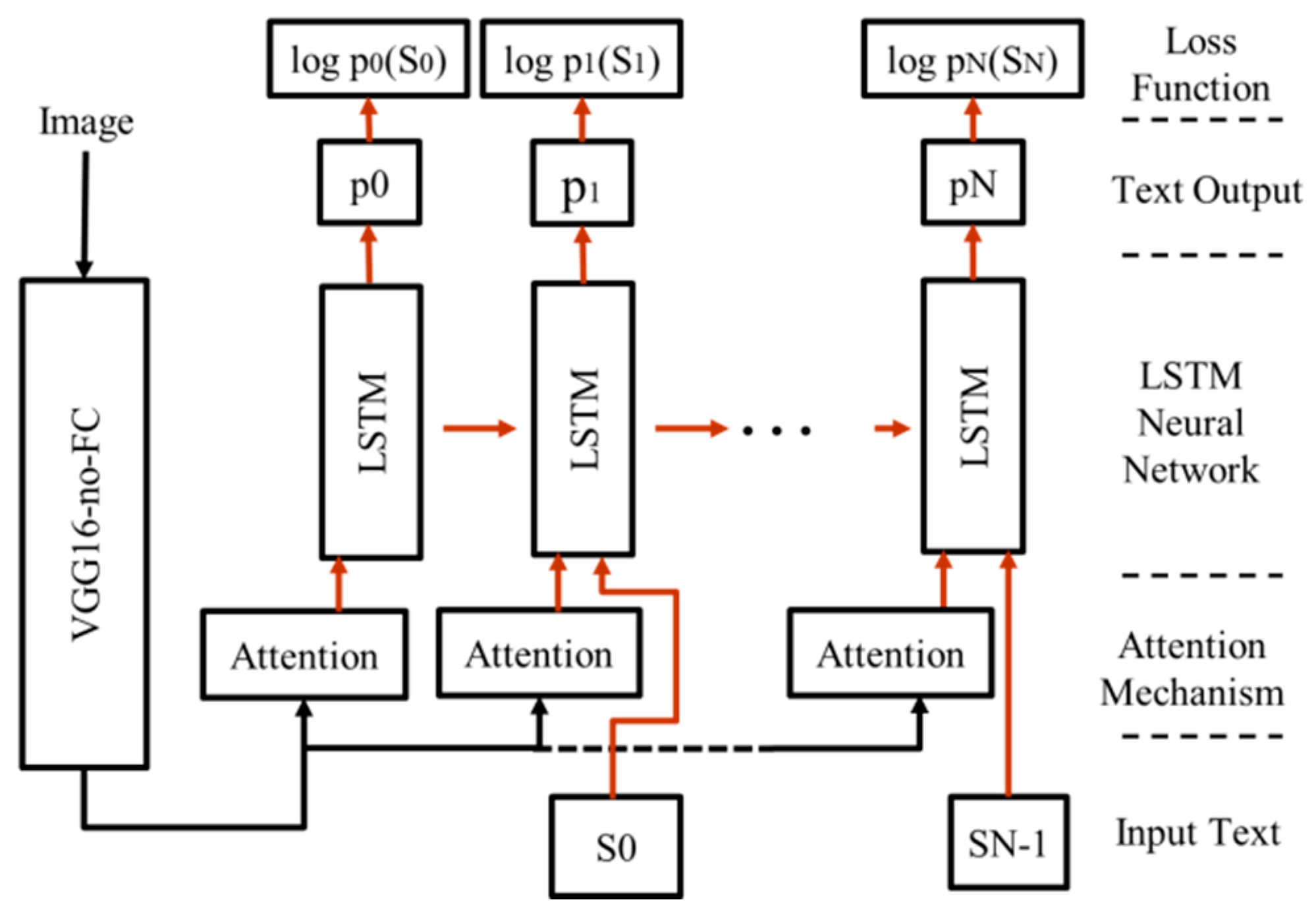

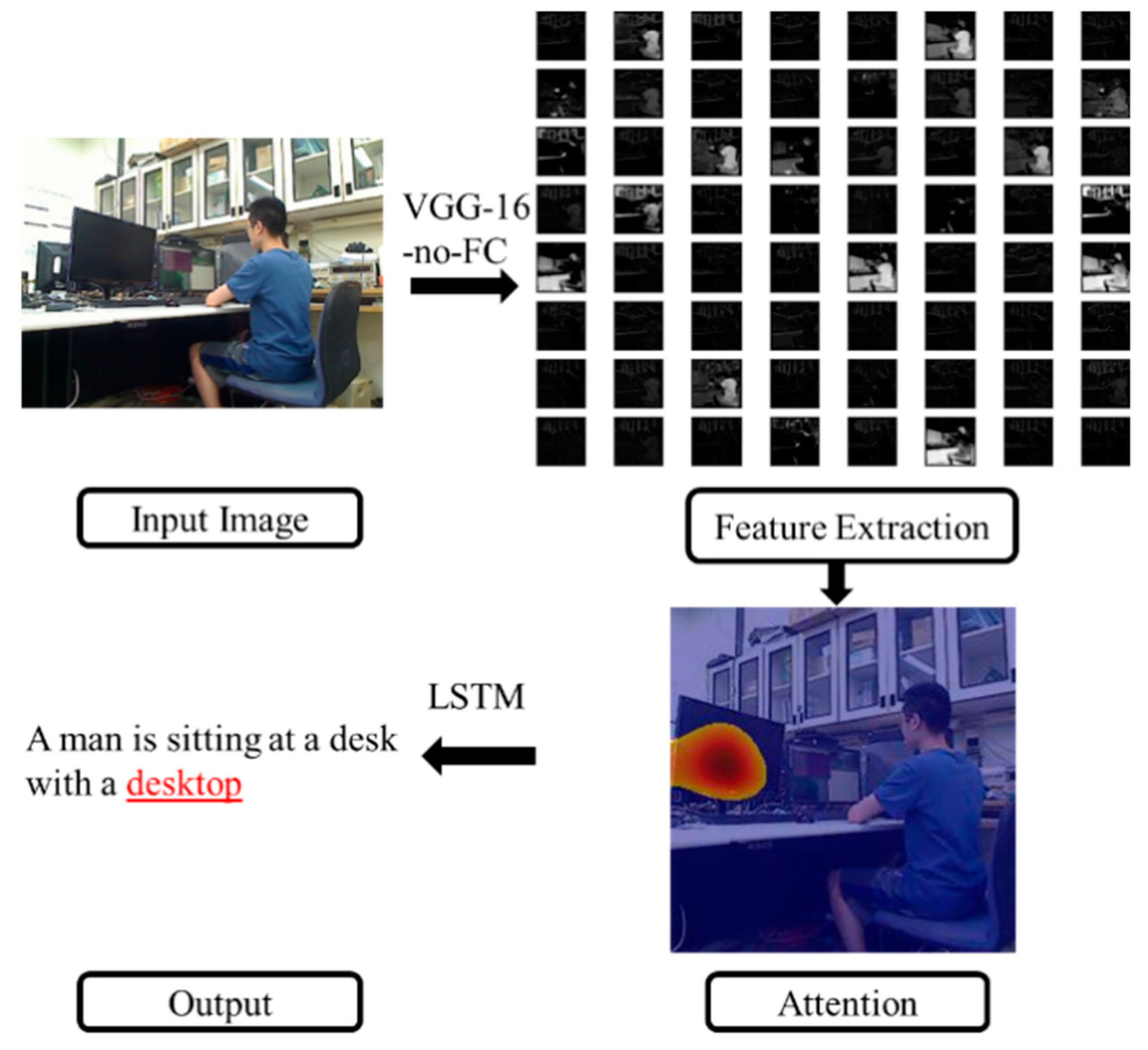
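The color-analysis step appears here only as figures. As a rough illustration of how an object's color name could be obtained, the sketch below converts the pixels of a detected region to HSV (the color space used in the HSV-based works by Su et al. and Feng et al. in the reference list) and takes a majority vote over per-pixel color names. The hue, saturation, and value thresholds, the color vocabulary, and the function names `name_color` and `dominant_color` are hypothetical choices, not the authors' parameters.

```python
import colorsys
from collections import Counter


def name_color(r: int, g: int, b: int) -> str:
    """Map one 8-bit RGB pixel to a coarse color name via HSV thresholds.

    The thresholds below are hypothetical, chosen only for illustration.
    """
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    if v < 0.2:                 # too dark to carry a reliable hue
        return "black"
    if s < 0.2:                 # unsaturated: white or gray by brightness
        return "white" if v > 0.8 else "gray"
    hue = h * 360               # colorsys returns hue in [0, 1)
    if hue < 15 or hue >= 345:
        return "red"
    if hue < 45:
        return "orange"
    if hue < 70:
        return "yellow"
    if hue < 170:
        return "green"
    if hue < 260:
        return "blue"
    return "purple"


def dominant_color(pixels) -> str:
    """Majority vote of per-pixel color names over a region's (R, G, B) pixels."""
    votes = Counter(name_color(r, g, b) for r, g, b in pixels)
    return votes.most_common(1)[0][0]
```

In such a pipeline, `pixels` would be the crop inside a detector's bounding box. Because a box also contains background (and, for a person, skin and hair), a majority vote over color names is less sensitive to these mixtures than averaging all pixels into one mean color.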

3.3. Image Captioning

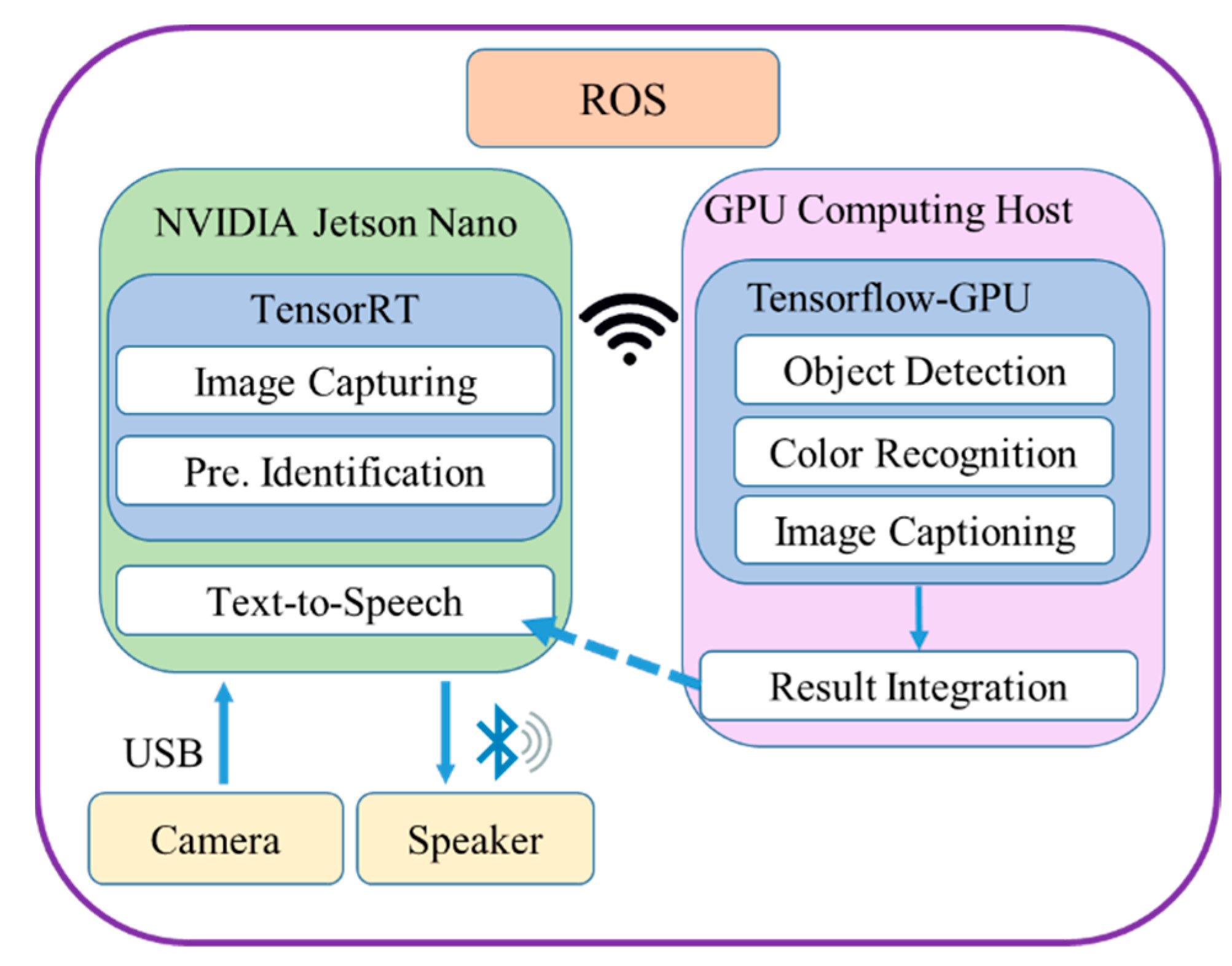

3.4. Implementation

4. Experiments and Results

4.1. Model Training and Datasets

4.2. Preliminary Identification

4.3. Image Captioning and Object Recognition

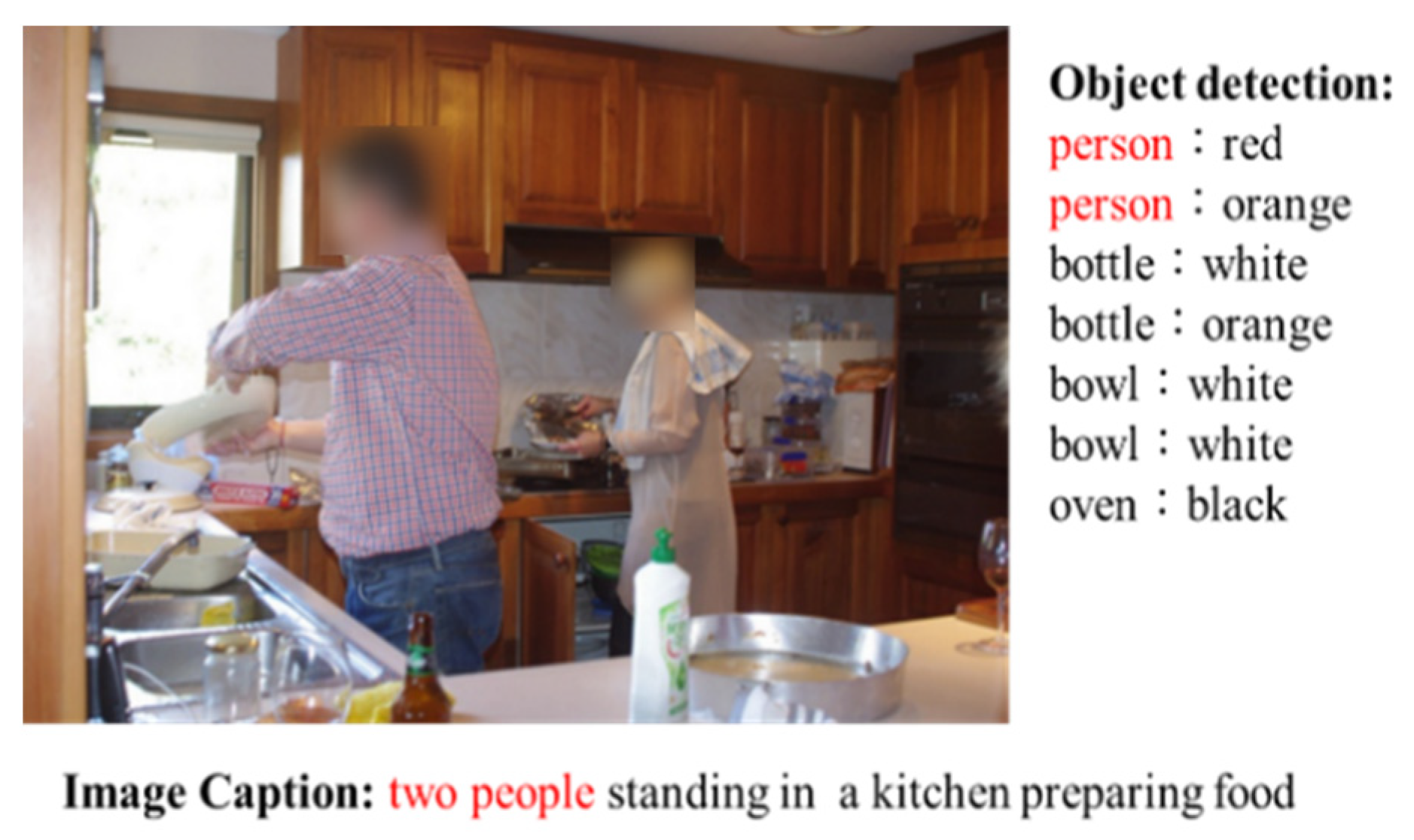

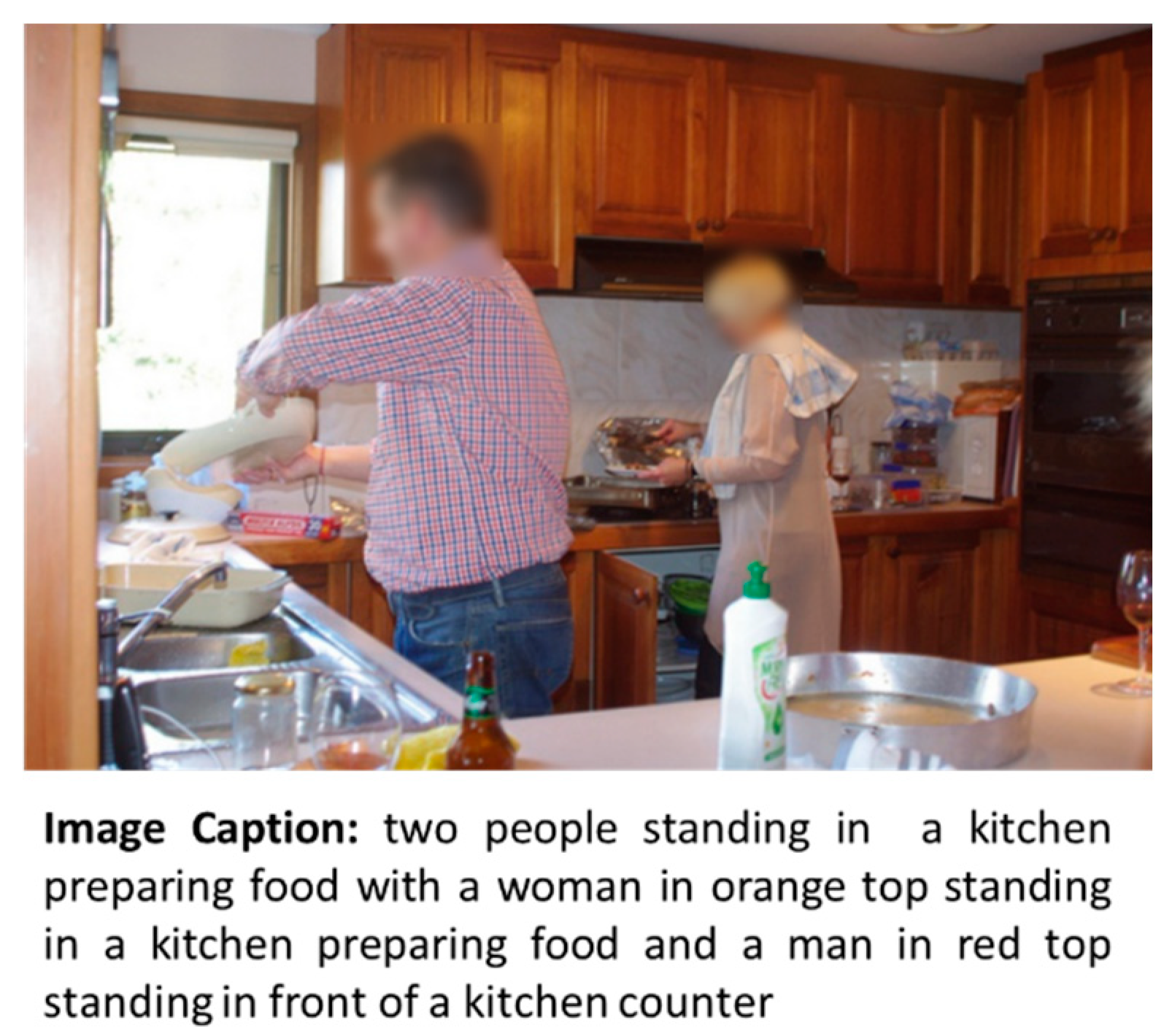

4.4. Integration of Image Captioning and Object Recognition Results

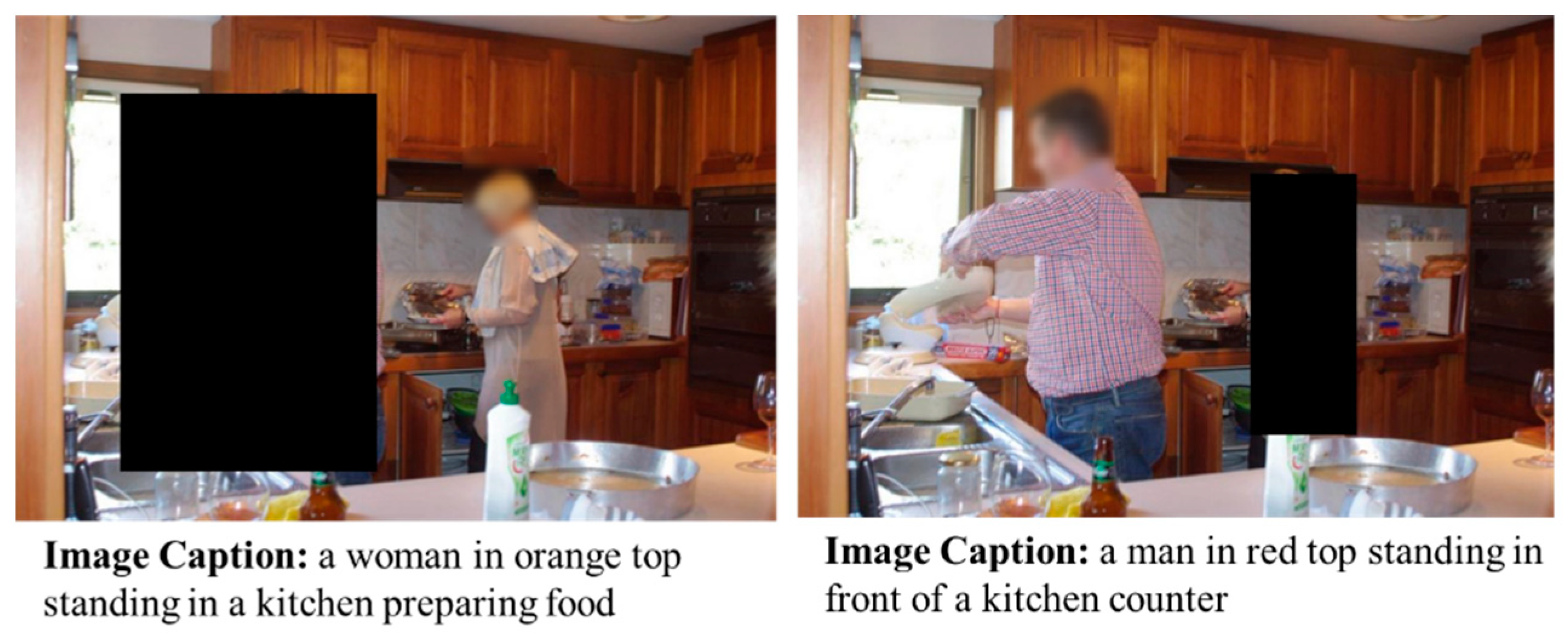

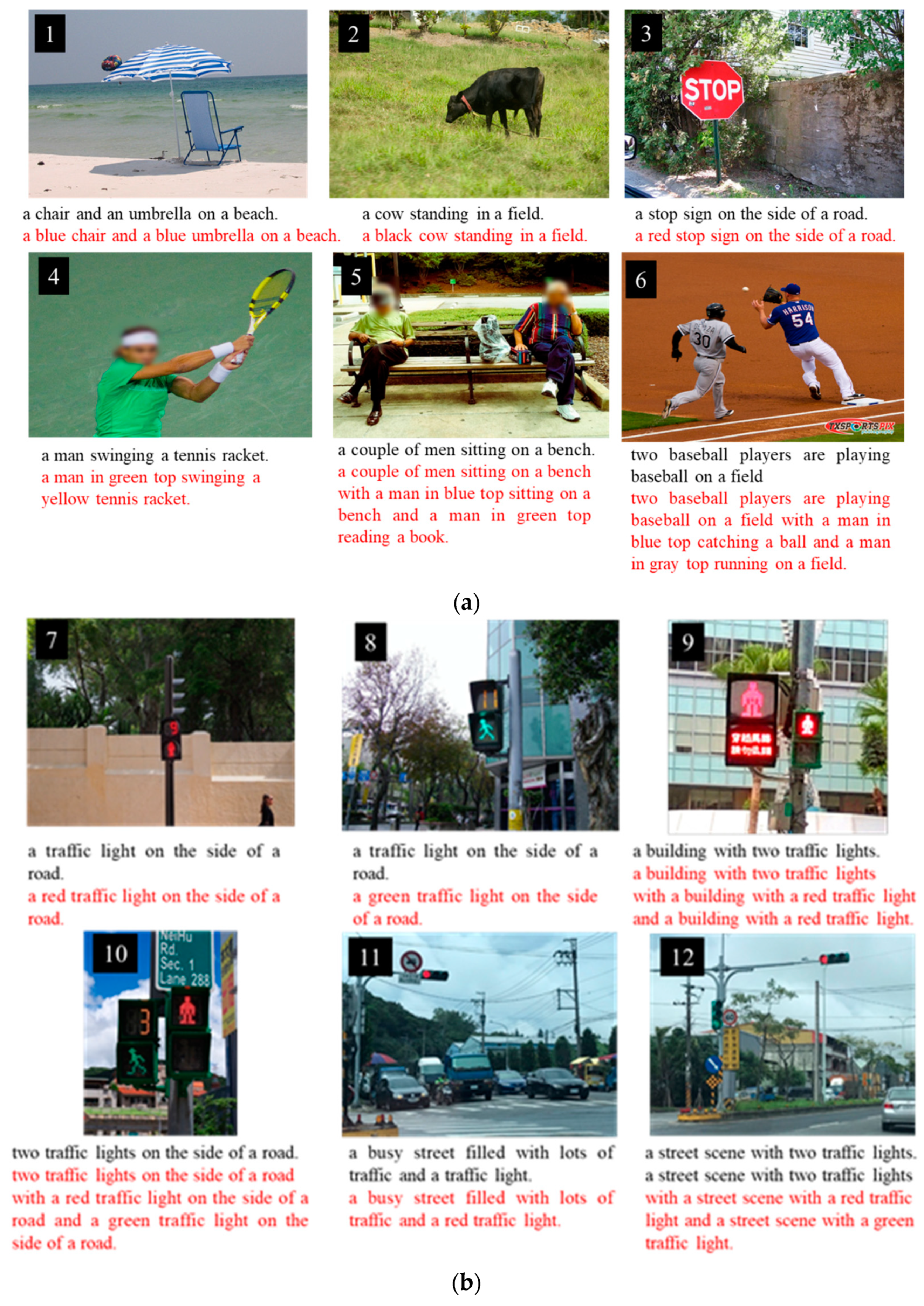

4.5. Enhanced Image Captioning Algorithm

4.6. Cases

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

1. Image Captioning. Available online: https://www.slideshare.net/mz0502244226/image-captioning (accessed on 10 March 2021).

2. Kojima, A.; Tamura, T.; Fukunaga, K. Natural Language Description of Human Activities from Video Images Based on Concept Hierarchy of Actions. Int. J. Comput. Vis. 2002, 50, 171–184.

3. Hede, P.; Moellic, P.; Bourgeoys, J.; Joint, M.; Thomas, C. Automatic generation of natural language descriptions for images. In Proceedings of Recherche d'Information Assistée par Ordinateur, Avignon, France, 26–28 April 2004; pp. 1–8.

4. Bai, S.; An, S. A survey on automatic image caption generation. Neurocomputing 2018, 311, 291–304.

5. Ordonez, V.; Han, X.; Kuznetsova, P.; Kulkarni, G.; Mitchell, M.; Yamaguchi, K.; Stratos, K.; Goyal, A.; Dodge, J.; Mensch, A.; et al. Large scale retrieval and generation of image descriptions. Int. J. Comput. Vis. 2016, 119, 46–59.

6. Gupta, A.; Verma, Y.; Jawahar, C.V. Choosing linguistics over vision to describe images. In Proceedings of the AAAI Conference on Artificial Intelligence, Toronto, ON, Canada, 22–26 July 2012; pp. 606–612.

7. Farhadi, A.; Hejrati, M.; Sadeghi, M.A.; Young, P.; Rashtchian, C.; Hockenmaier, J.; Forsyth, D. Every Picture Tells a Story: Generating Sentences from Images. In Proceedings of the European Conference on Computer Vision, Heraklion, Crete, Greece, 5–11 September 2010; pp. 15–29.

8. Ordonez, V.; Kulkarni, G.; Berg, T.L. Im2Text: Describing images using 1 million captioned photographs. In Proceedings of the 24th International Conference on Neural Information Processing Systems, Granada, Spain, 12–15 December 2011; pp. 1143–1151.

9. Kulkarni, G.; Premraj, V.; Dhar, S.; Li, S.; Choi, Y.; Berg, A.C.; Berg, T.L. Baby talk: Understanding and generating simple image descriptions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Colorado Springs, CO, USA, 20–25 June 2011; pp. 1601–1608.

10. Mason, R.; Charniak, E. Nonparametric Method for Data-driven Image Captioning. In Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers), Baltimore, MD, USA, 23–25 June 2014; pp. 592–598.

11. Hodosh, M.; Young, P.; Hockenmaier, J. Framing Image Description as a Ranking Task: Data, Models and Evaluation Metrics. J. Artif. Intell. Res. 2013, 47, 853–899.

12. Kulkarni, G.; Premraj, V.; Ordonez, V.; Dhar, S.; Li, S.; Choi, Y.; Berg, A.C.; Berg, T.L. BabyTalk: Understanding and Generating Simple Image Descriptions. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 2891–2903.

13. Gong, Y.; Wang, L.; Hodosh, M.; Hockenmaier, J.; Lazebnik, S. Improving Image-Sentence Embeddings Using Large Weakly Annotated Photo Collections. In Lecture Notes in Computer Science; Springer: Singapore, 2014; pp. 529–545.

14. Li, S.; Kulkarni, G.; Berg, T.L.; Berg, A.C.; Choi, Y. Composing simple image descriptions using web-scale n-grams. In Proceedings of the Fifteenth Conference on Computational Natural Language Learning, Portland, OR, USA, 23–24 June 2011.

15. Ushiku, Y.; Yamaguchi, M.; Mukuta, Y.; Harada, T. Common Subspace for Model and Similarity: Phrase Learning for Caption Generation from Images. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 2668–2676.

16. Deng, Z.; Jiang, Z.; Lan, R.; Huang, W.; Luo, X. Image captioning using DenseNet network and adaptive attention. Signal Process. Image Commun. 2020, 85, 115836.

17. Wang, K.; Zhang, X.; Wang, F.; Wu, T.-Y.; Chen, C.-M.; Wang, E.K. Multilayer Dense Attention Model for Image Caption. IEEE Access 2019, 7, 66358–66368.

18. Zhang, Z.; Zhang, W.; Diao, W.; Yan, M.; Gao, X.; Sun, X. VAA: Visual Aligning Attention Model for Remote Sensing Image Captioning. IEEE Access 2019, 7, 137355–137364.

19. Gao, L.; Li, X.; Song, J.; Shen, H.T. Hierarchical LSTMs with Adaptive Attention for Visual Captioning. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 42, 1112–1131.

20. Wang, B.; Wang, C.; Zhang, Q.; Su, Y.; Wang, Y.; Xu, Y. Cross-Lingual Image Caption Generation Based on Visual Attention Model. IEEE Access 2020, 8, 104543–104554.

21. Ozturk, B.; Kirci, M.; Gunes, E.O. Detection of green and orange color fruits in outdoor conditions for robotic applications. In Proceedings of the 2016 Fifth International Conference on Agro-Geoinformatics (Agro-Geoinformatics), Tianjin, China, 18–20 July 2016; pp. 1–5.

22. Liu, G.; Zhang, C.; Guo, Q.; Wan, F. Automatic Color Recognition Technology of UAV Based on Machine Vision. In Proceedings of the 2019 International Conference on Sensing, Diagnostics, Prognostics, and Control (SDPC), Beijing, China, 15–17 August 2019; pp. 220–225.

23. Zhang, W.; Zhang, C.; Li, C.; Zhang, H. Object color recognition and sorting robot based on OpenCV and machine vision. In Proceedings of the 2020 IEEE 11th International Conference on Mechanical and Intelligent Manufacturing Technologies (ICMIMT), Cape Town, South Africa, 20–22 January 2020; pp. 125–129.

24. Ashtari, A.H.; Nordin, J.; Fathy, M. An Iranian License Plate Recognition System Based on Color Features. IEEE Trans. Intell. Transp. Syst. 2014, 15, 1690–1705.

25. Object Detection. Available online: https://en.wikipedia.org/wiki/Object_detection (accessed on 20 February 2021).

26. Gupta, A.K.; Seal, A.; Prasad, M.; Khanna, P. Salient Object Detection Techniques in Computer Vision: A Survey. Entropy 2020, 22, 1174.

27. Lan, L.; Ye, C.; Wang, C.; Zhou, S. Deep Convolutional Neural Networks for WCE Abnormality Detection: CNN Architecture, Region Proposal and Transfer Learning. IEEE Access 2019, 7, 30017–30032.

28. Zhang, W.; Zheng, Y.; Gao, Q.; Mi, Z. Part-Aware Region Proposal for Vehicle Detection in High Occlusion Environment. IEEE Access 2019, 7, 100383–100393.

29. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 21–37.

30. Baclig, M.M.; Ergezinger, N.; Mei, Q.; Gül, M.; Adeeb, S.; Westover, L. A Deep Learning and Computer Vision Based Multi-Player Tracker for Squash. Appl. Sci. 2020, 10, 8793.

31. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

32. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

33. Su, C.-H.; Chiu, H.-S.; Hsieh, T.-M. An efficient image retrieval based on HSV color space. In Proceedings of the 2011 International Conference on Electrical and Control Engineering, Yichang, China, 16–18 September 2011; pp. 5746–5749.

34. Feng, L.; Xiaoyu, L.; Yi, C. An efficient detection method for rare colored capsule based on RGB and HSV color space. In Proceedings of the 2014 IEEE International Conference on Granular Computing (GrC), Noboribetsu, Japan, 22–24 October 2014; pp. 175–178.

35. Robot Operating System. Available online: http://wiki.ros.org (accessed on 15 March 2021).

36. Google Cloud Text-to-Speech. Available online: https://appfoundry.genesys.com (accessed on 5 April 2021).

| Dataset | Categories | Images | Bounding Boxes |
|---|---|---|---|
| PASCAL VOC (07++12) | 20 | 21,503 | 62,199 |
| MSCOCO (2014trainval) | 80 | 123,287 | 886,266 |
| ImageNet Det (2017train) | 200 | 349,319 | 478,806 |

| Dataset | Total Boxes | Small | Medium | Large |
|---|---|---|---|---|
| PASCAL VOC (07++12) | 62,199 | 6983 | 19,677 | 35,539 |
| MSCOCO (2014trainval) | 886,266 | 278,651 | 311,999 | 295,616 |
| ImageNet Det (2017train) | 478,806 | 22,677 | 86,439 | 369,690 |
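The two dataset tables are easier to compare as ratios. The short script below only re-derives figures from those tables: it computes the average number of boxes per image and the share of small, medium, and large boxes. MSCOCO averages about 7.2 boxes per image with roughly 31% small boxes, whereas PASCAL VOC averages about 2.9 boxes per image with about 11% small boxes, and ImageNet Det about 1.4 boxes per image with under 5% small boxes. The names `DATASETS` and `summarize` are illustrative.

```python
# Counts copied from the two dataset tables.
# Each entry: (images, total boxes, small, medium, large).
DATASETS = {
    "PASCAL VOC (07++12)": (21_503, 62_199, 6_983, 19_677, 35_539),
    "MSCOCO (2014trainval)": (123_287, 886_266, 278_651, 311_999, 295_616),
    "ImageNet Det (2017train)": (349_319, 478_806, 22_677, 86_439, 369_690),
}


def summarize(images, total, small, medium, large):
    """Return boxes per image and the fraction of boxes in each size bucket."""
    # The three size buckets partition the boxes, so they must sum to the total.
    if small + medium + large != total:
        raise ValueError("size buckets do not sum to the total box count")
    return {
        "boxes_per_image": total / images,
        "small": small / total,
        "medium": medium / total,
        "large": large / total,
    }


if __name__ == "__main__":
    for name, counts in DATASETS.items():
        s = summarize(*counts)
        print(f"{name}: {s['boxes_per_image']:.2f} boxes/image, "
              f"small {s['small']:.1%}, medium {s['medium']:.1%}, "
              f"large {s['large']:.1%}")
```

The consistency check also confirms that, for every dataset, the small, medium, and large counts add up exactly to the total number of boxes.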

| Step | Result |
|---|---|
| Image captioning result | A man sitting at a desk with a desktop. |
| Object recognition results | Person: blue; Chair: blue; Desk: white; TV: black |
| Sentence segmentation | a, man, sitting, at, a, desk, with, a, desktop. |
| Match text and insert color | a, man, sitting, at, a, white, desk, with, a, desktop. |
| Integration result | a man in blue top sitting at a white desk with a desktop. |
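The steps in this table (segment the caption, match words against recognized objects, insert colors) can be sketched as follows. This is a minimal sketch assuming the recognition output has already been reduced to one color per class, as in the object recognition row. The set of person words, the "in <color> top" phrasing rule for people, and the function name `integrate_colors` are assumptions inferred from the example output, not the authors' code.

```python
from __future__ import annotations

import re

# Hypothetical caption words treated as the detector's "person" class.
PERSON_WORDS = {"man", "woman", "person", "boy", "girl"}


def integrate_colors(caption: str, object_colors: dict[str, str]) -> str:
    """Insert recognized object colors into a caption (illustrative sketch)."""
    # Sentence segmentation: lowercase the caption and split it into words.
    tokens = re.findall(r"[a-z]+", caption.lower())
    colors = {label.lower(): color for label, color in object_colors.items()}
    out: list[str] = []
    for tok in tokens:
        if tok in PERSON_WORDS and "person" in colors:
            # People are described by clothing color: "man in blue top".
            out.extend([tok, "in", colors["person"], "top"])
        elif tok in colors:
            # Other matched objects get the color as an adjective: "white desk".
            out.extend([colors[tok], tok])
        else:
            # Unmatched words (e.g. "desktop", which is not the label "tv") stay as-is.
            out.append(tok)
    return " ".join(out) + "."


print(integrate_colors(
    "A man sitting at a desk with a desktop.",
    {"Person": "blue", "Chair": "blue", "Desk": "white", "TV": "black"},
))
```

Run on the example above, the sketch reproduces the integration result row. Because it keeps only one color per class, it cannot handle the two-person example in the comparison of traditional and proposed methods, where one man wears a blue top and the other a green one; that case would require linking each mention in the caption to a specific bounding box.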

| Method | Single object (sub-images 2, 3, 7, 8, and 11 in Figure 20) | Multiple objects (sub-images 1, 4, 5, 6, 9, 10, and 12 in Figure 20) |
|---|---|---|
| Traditional method [4,10,20] (CNN-LSTM-Attention) | Caption for sub-image 2, e.g., "a cow standing in a field" | Caption for sub-image 5, e.g., "a couple of men sitting on a bench" |
| Proposed method (VGG16-LSTM-Attention, color analysis, enhanced image captioning) | "a black cow standing in a field" | "a couple of men sitting on a bench with a man in blue top sitting on a bench and a man in green top reading a book" |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chang, Y.-H.; Chen, Y.-J.; Huang, R.-H.; Yu, Y.-T. Enhanced Image Captioning with Color Recognition Using Deep Learning Methods. Appl. Sci. 2022, 12, 209. https://doi.org/10.3390/app12010209
