Automated Prediction of Extraction Difficulty and Inferior Alveolar Nerve Injury for Mandibular Third Molar Using a Deep Neural Network

Abstract

1. Introduction

- For the first time, we propose a method that can predict both the extraction difficulty of mandibular third molars and the likelihood of IAN injury following extraction.

- The proposed deep neural network performs consistently because it uses what is, to our knowledge, the largest dental panoramic radiographic image dataset.

- The classification models achieved an accuracy of 83.5% and an AUROC of 92.79% for extraction difficulty, and an accuracy of 81.1% and an AUROC of 90.02% for the likelihood of IAN injury.

2. Materials and Methods

2.1. Dataset

2.2. Ground Truth

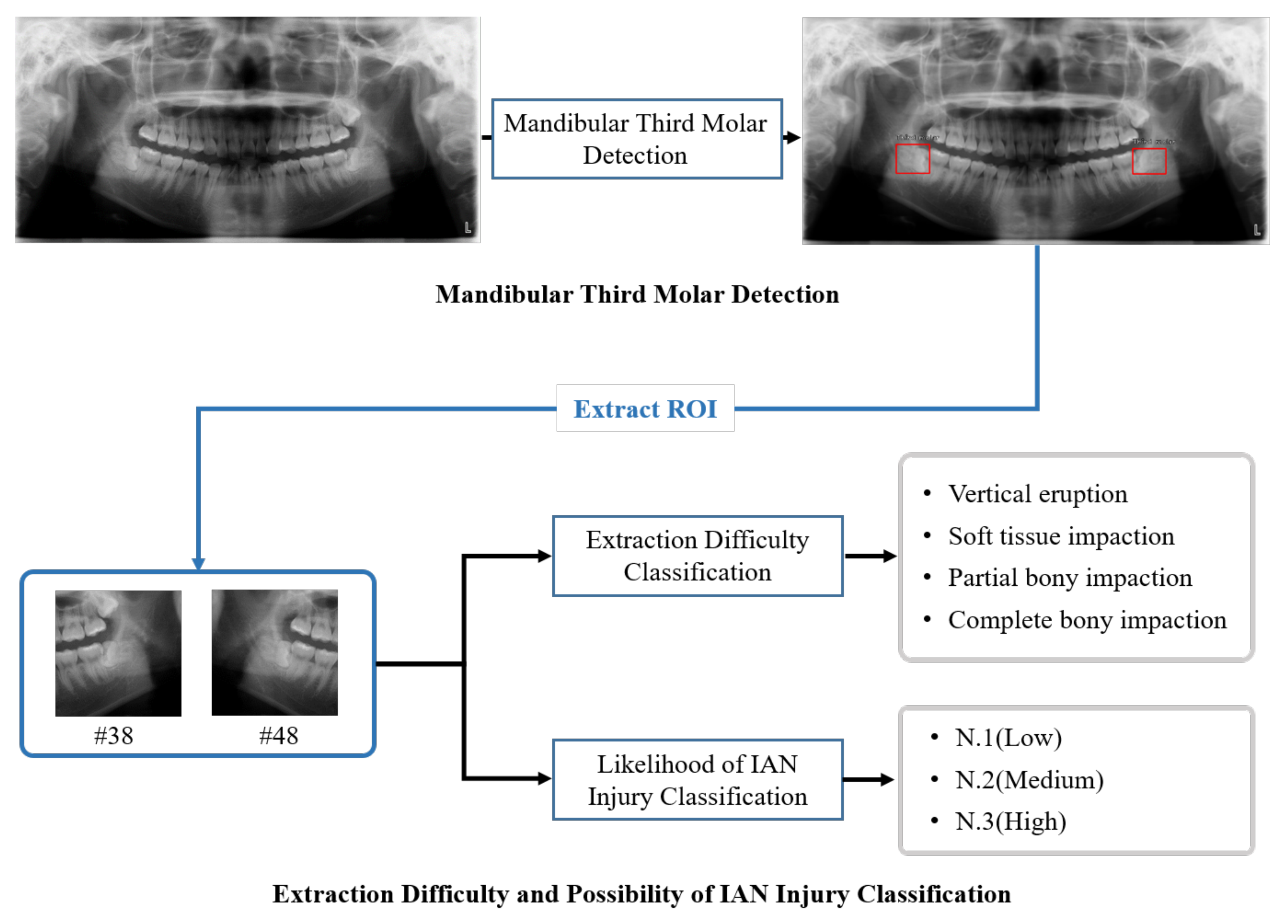

2.2.1. Mandibular Third Molar Detection

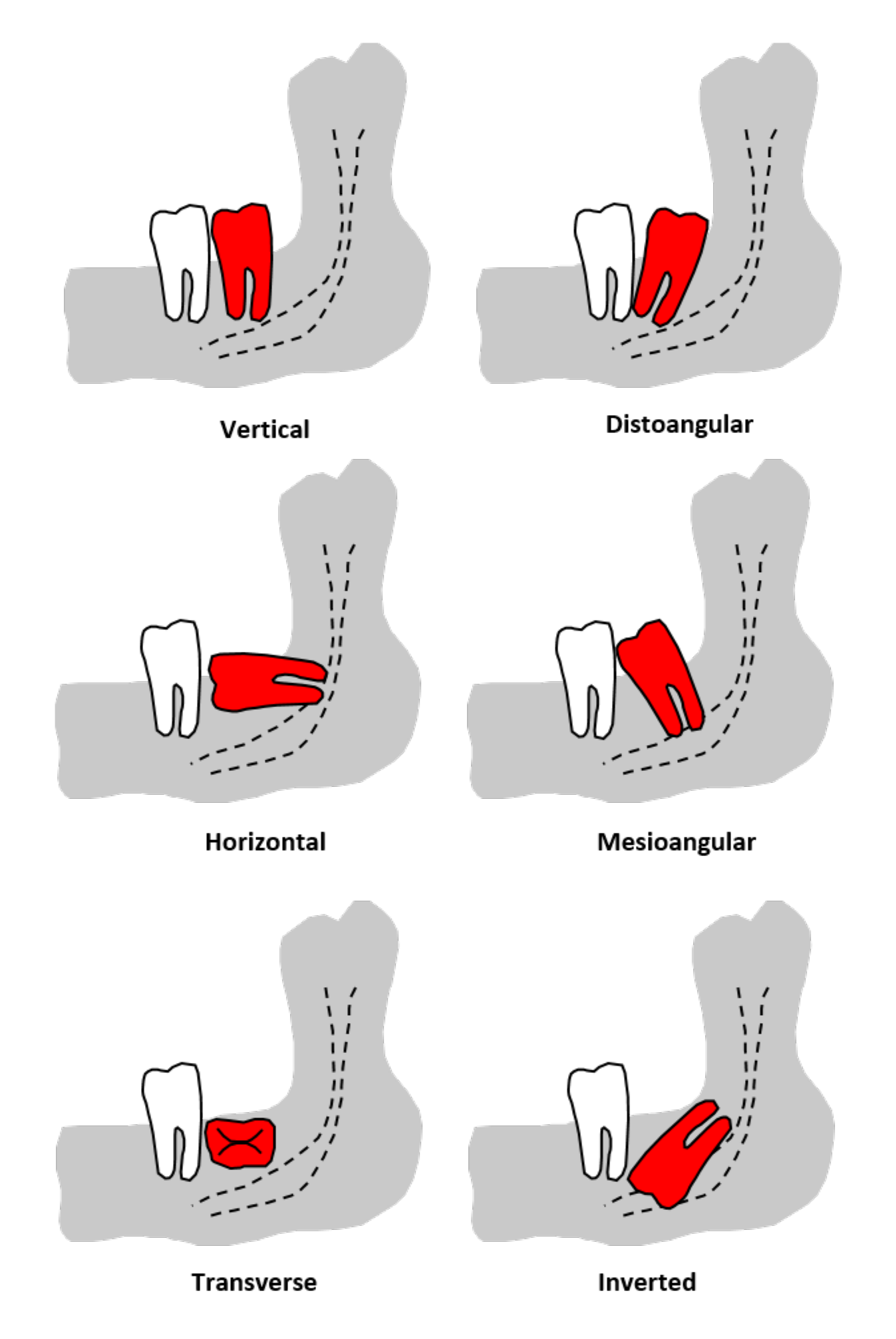

2.2.2. Extraction Difficulty Classification

- Vertical eruption: Simple extraction without gum incision or bone fracture;

- Soft tissue impaction: Extraction after a gum incision;

- Partial bony impaction: Tooth segmentation is required for extraction;

- Complete bony impaction: More than two-thirds of the crown is impacted; extraction requires tooth segmentation and bone fracture.

- Class A: The highest point of the impacted mandibular third molar's occlusal surface is at the same height as the occlusal surface of the adjacent tooth.

- Class B: The highest point of the impacted mandibular third molar's occlusal surface is between the occlusal surface and the cervical line of the adjacent tooth.

- Class C: The highest point of the impacted mandibular third molar is below the cervical line of the adjacent tooth.

- Class I: The distance between the distal surface of the mandibular second molar and the anterior edge of the mandible is wider than the width of the impacted mandibular third molar's occlusal surface.

- Class II: The distance between the distal surface of the mandibular second molar and the anterior edge of the mandible is narrower than the width of the impacted mandibular third molar's occlusal surface but wider than half of that width.

- Class III: The distance between the distal surface of the mandibular second molar and the anterior edge of the mandible is narrower than half the width of the impacted mandibular third molar's occlusal surface.
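The depth classes (A to C) and space classes (I to III) listed above are threshold rules, so they can be sketched as two small functions. This is only an illustration of the labelling criteria, not the paper's model, which predicts difficulty from the radiograph itself. The `MolarMeasurements` fields, the millimetre units, the `tol` tolerance for "same height", and the handling of exact ties are all assumptions introduced here. Class III is read as "narrower than half the crown width", which is the reading that keeps the three space classes disjoint.

```python
from dataclasses import dataclass


@dataclass
class MolarMeasurements:
    """Hypothetical measurements in millimetres; heights are measured upward from a common baseline."""
    third_molar_top: float    # highest point of the third molar's occlusal surface
    adjacent_occlusal: float  # occlusal surface of the adjacent tooth
    adjacent_cervical: float  # cervical line of the adjacent tooth
    available_space: float    # distal surface of second molar to anterior edge of the mandible
    crown_width: float        # width of the third molar's occlusal surface


def depth_class(m: MolarMeasurements, tol: float = 0.5) -> str:
    """Return 'A', 'B' or 'C'. `tol` is an assumed tolerance for 'same height'."""
    if m.third_molar_top >= m.adjacent_occlusal - tol:
        return "A"  # level with the adjacent occlusal surface
    if m.third_molar_top >= m.adjacent_cervical:
        return "B"  # between the occlusal surface and the cervical line
    return "C"      # below the cervical line


def space_class(m: MolarMeasurements) -> str:
    """Return 'I', 'II' or 'III'. Exact ties go to the less severe class (an assumption)."""
    if m.available_space >= m.crown_width:
        return "I"
    if m.available_space >= m.crown_width / 2:
        return "II"
    return "III"


example = MolarMeasurements(
    third_molar_top=8.0,
    adjacent_occlusal=10.0,
    adjacent_cervical=6.0,
    available_space=7.0,
    crown_width=10.0,
)
print(depth_class(example), space_class(example))
```

With the example values, the molar's highest point lies between the adjacent tooth's occlusal surface and cervical line, and the available space is between half and the full crown width.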

2.2.3. Classification of Likelihood of IAN Injury

- N.1 (low): The mandibular third molar does not reach the IAN canal in the panoramic radiographic image.

- N.2 (medium): The mandibular third molar interrupts one line of the IAN canal in the panoramic radiographic image.

- N.3 (high): The mandibular third molar interrupts two lines of the IAN canal in the panoramic radiographic image.
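The three grades above depend only on how many lines of the IAN canal the third molar interrupts, so the labelling rule can be written as a lookup. The function name and the integer input are hypothetical, chosen for illustration. The sketch encodes the annotation criteria only and says nothing about how the network infers the grade from an image.

```python
def ian_injury_grade(interrupted_canal_lines: int) -> str:
    """Map the number of interrupted IAN canal lines (0, 1 or 2) to a grade label."""
    grades = {
        0: "N.1 (low)",     # molar does not reach the canal
        1: "N.2 (medium)",  # one canal line interrupted
        2: "N.3 (high)",    # two canal lines interrupted
    }
    if interrupted_canal_lines not in grades:
        raise ValueError("expected 0, 1 or 2 interrupted canal lines")
    return grades[interrupted_canal_lines]


for lines in (0, 1, 2):
    print(lines, ian_injury_grade(lines))
```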

2.3. Mandibular Third Molar Detection Model

2.4. Extraction Difficulty and Likelihood of IAN Injury Classification Model

2.5. Metrics

3. Results

3.1. Mandibular Third Molar Detection

3.2. Extraction Difficulty Classification

3.3. Classification of Likelihood of IAN Injury

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Mandibular third molar detection results:

| Model | AP[0.5] | AP[0.75] | AP[0.5:0.95] |
|---|---|---|---|
| RetinaNet-152 | 99.0% | 97.7% | 85.3% |
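The AP columns in the detection table are indexed by an intersection-over-union (IoU) threshold: a predicted box counts as a correct detection only if its IoU with a ground-truth box reaches that threshold. The sketch below shows the IoU computation and the threshold test. The `(x1, y1, x2, y2)` box format and the function names are assumptions made here. Reading AP[0.5:0.95] as the mean AP over thresholds from 0.5 to 0.95 in steps of 0.05 follows the common COCO convention and is likewise an assumption about the notation.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # assumed format: (x1, y1, x2, y2)


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    # Corners of the overlapping rectangle (empty if the boxes do not overlap).
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred: Box, truth: Box, threshold: float = 0.5) -> bool:
    """A prediction matches the ground truth when IoU reaches the threshold."""
    return box_iou(pred, truth) >= threshold


truth = (0.0, 0.0, 10.0, 10.0)
pred = (2.0, 0.0, 12.0, 10.0)  # shifted 2 units to the right
print(box_iou(pred, truth))
print(is_true_positive(pred, truth, 0.5), is_true_positive(pred, truth, 0.75))
```

In this example the same prediction is accepted at the 0.5 threshold and rejected at 0.75, which is why AP typically falls as the threshold tightens.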

Extraction difficulty classification results:

| Model | Accuracy (%) | F1-Score (%) | AUROC (%) |
|---|---|---|---|
| ResNet-34 | 80.07 | 63.28 | 91.43 |
| ResNet-152 | 82.18 | 63.23 | 91.45 |
| R50+ViT-L/32 | 83.5 | 66.35 | 92.79 |

Likelihood of IAN injury classification results:

| Model | Accuracy (%) | F1-Score (%) | AUROC (%) |
|---|---|---|---|
| ResNet-34 | 77.27 | 70.99 | 86.02 |
| ResNet-152 | 80.07 | 72.62 | 88.19 |
| R50+ViT-L/32 | 81.1 | 75.55 | 90.02 |
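The accuracy, F1-score and AUROC columns reported for the classification models can be illustrated with a dependency-free sketch. The averaging scheme is not stated in the text available here, so macro-averaged F1 and a binary AUROC (which would be applied one-vs-rest per class) are assumptions, as are the function names and the toy labels.

```python
from typing import Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of samples whose predicted class equals the true class."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Unweighted mean of per-class F1 scores (macro averaging is an assumption)."""
    classes = sorted(set(y_true) | set(y_pred))
    scores = []
    for c in classes:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


def binary_auroc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Probability that a random positive outscores a random negative (ties count half)."""
    pos = [s for s, l in zip(scores, labels) if l == 1]
    neg = [s for s, l in zip(scores, labels) if l == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Toy labels for three classes, for illustration only.
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
print(accuracy(y_true, y_pred), macro_f1(y_true, y_pred))
print(binary_auroc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))
```

Accuracy alone can hide weak performance on rare classes, which is one reason to read it alongside F1 and AUROC.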


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lee, J.; Park, J.; Moon, S.Y.; Lee, K. Automated Prediction of Extraction Difficulty and Inferior Alveolar Nerve Injury for Mandibular Third Molar Using a Deep Neural Network. Appl. Sci. 2022, 12, 475. https://doi.org/10.3390/app12010475
