Multi-Sensor Fusion for Lateral Vehicle Localization in Tunnels

Abstract

1. Introduction

2. Methods

2.1. Attitude Update Algorithm for Strapdown Inertial Navigation

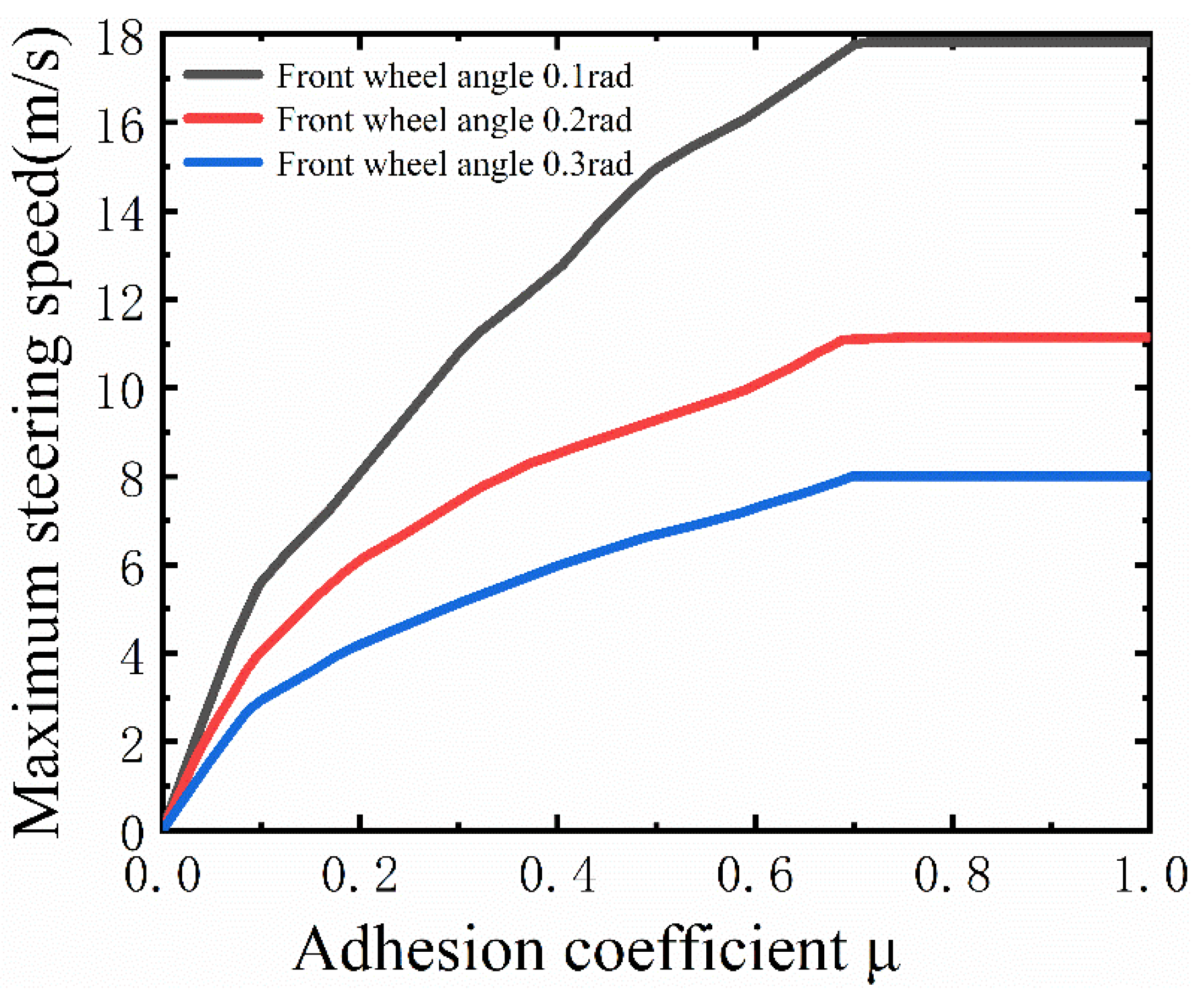
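A common way to realize a strapdown attitude update is to propagate a unit quaternion with the incremental rotation vector measured by the gyroscope over each sample interval. The sketch below is a generic single-step rotation-vector update, not necessarily the formulation used in this work. It assumes a constant body angular rate over each interval and neglects Earth rotation and transport rate. The function names `attitude_update` and `yaw_from_quat` are hypothetical.

```python
import math

def quat_mul(p, q):
    """Hamilton product of quaternions stored as (w, x, y, z)."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return (
        pw * qw - px * qx - py * qy - pz * qz,
        pw * qx + px * qw + py * qz - pz * qy,
        pw * qy - px * qz + py * qw + pz * qx,
        pw * qz + px * qy - py * qx + pz * qw,
    )

def attitude_update(q, gyro, dt):
    """Propagate the body-to-navigation quaternion q by one gyro sample.

    gyro: body angular rate (rad/s) as (wx, wy, wz), assumed constant over dt.
    Earth rotation and navigation-frame rate are ignored (assumption).
    """
    rx, ry, rz = (rate * dt for rate in gyro)  # rotation vector over the step
    angle = math.sqrt(rx * rx + ry * ry + rz * rz)
    if angle < 1e-12:
        dq = (1.0, 0.0, 0.0, 0.0)
    else:
        s = math.sin(angle / 2) / angle
        dq = (math.cos(angle / 2), rx * s, ry * s, rz * s)
    w, x, y, z = quat_mul(q, dq)  # body-frame increment: right-multiply
    n = math.sqrt(w * w + x * x + y * y + z * z)  # renormalize to limit drift
    return (w / n, x / n, y / n, z / n)

def yaw_from_quat(q):
    """Heading (rad) about the vertical axis from a unit quaternion."""
    w, x, y, z = q
    return math.atan2(2 * (w * z + x * y), 1 - 2 * (y * y + z * z))

# Usage: rotate about z at 90 deg/s for 1 s in 100 steps; yaw ends near pi/2.
q = (1.0, 0.0, 0.0, 0.0)
for _ in range(100):
    q = attitude_update(q, (0.0, 0.0, math.radians(90)), 0.01)
```

Renormalizing after every step is a simple guard against the quaternion norm drifting away from one through rounding.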

2.2. Vehicle Lateral Positioning Algorithm

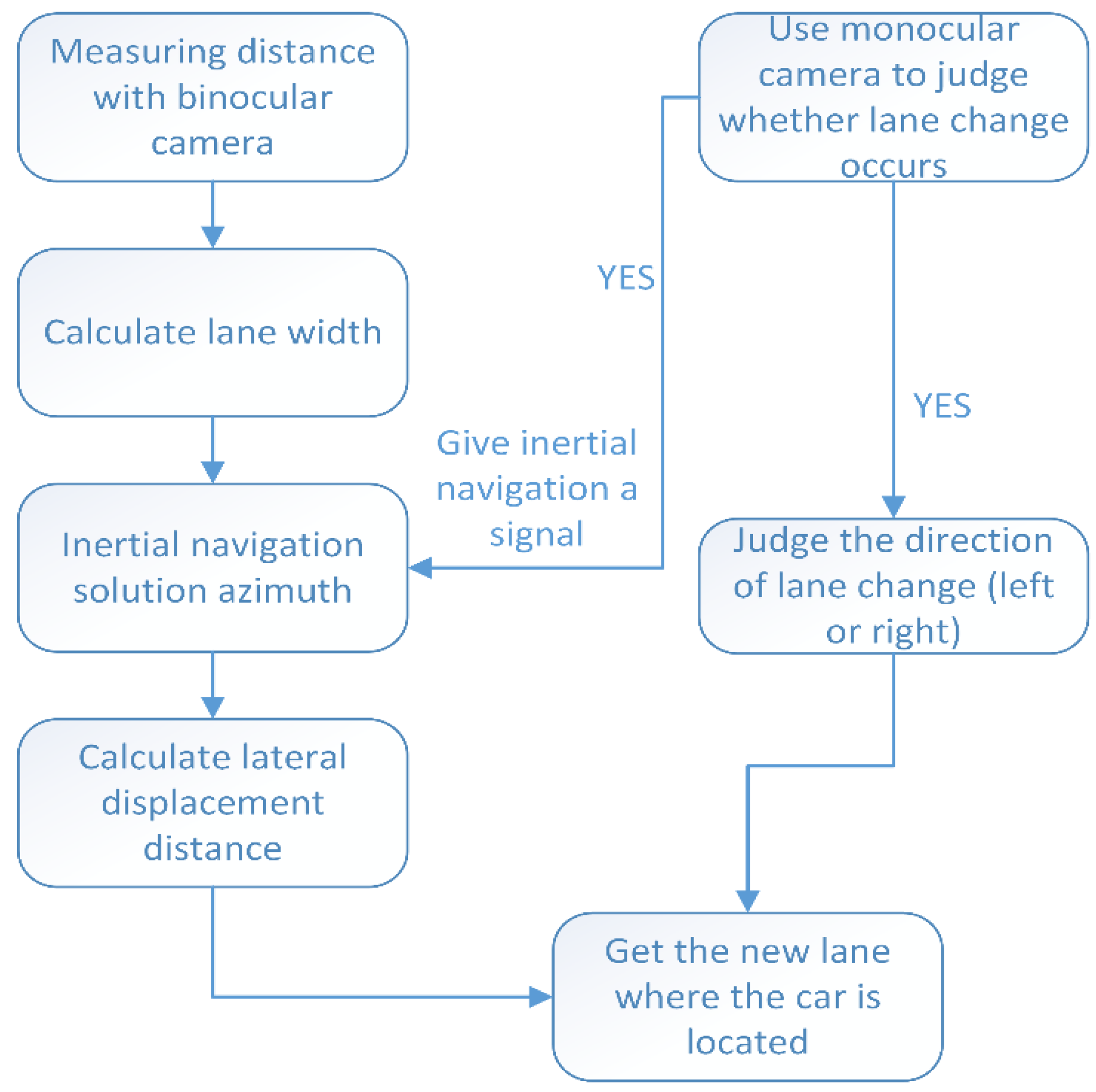

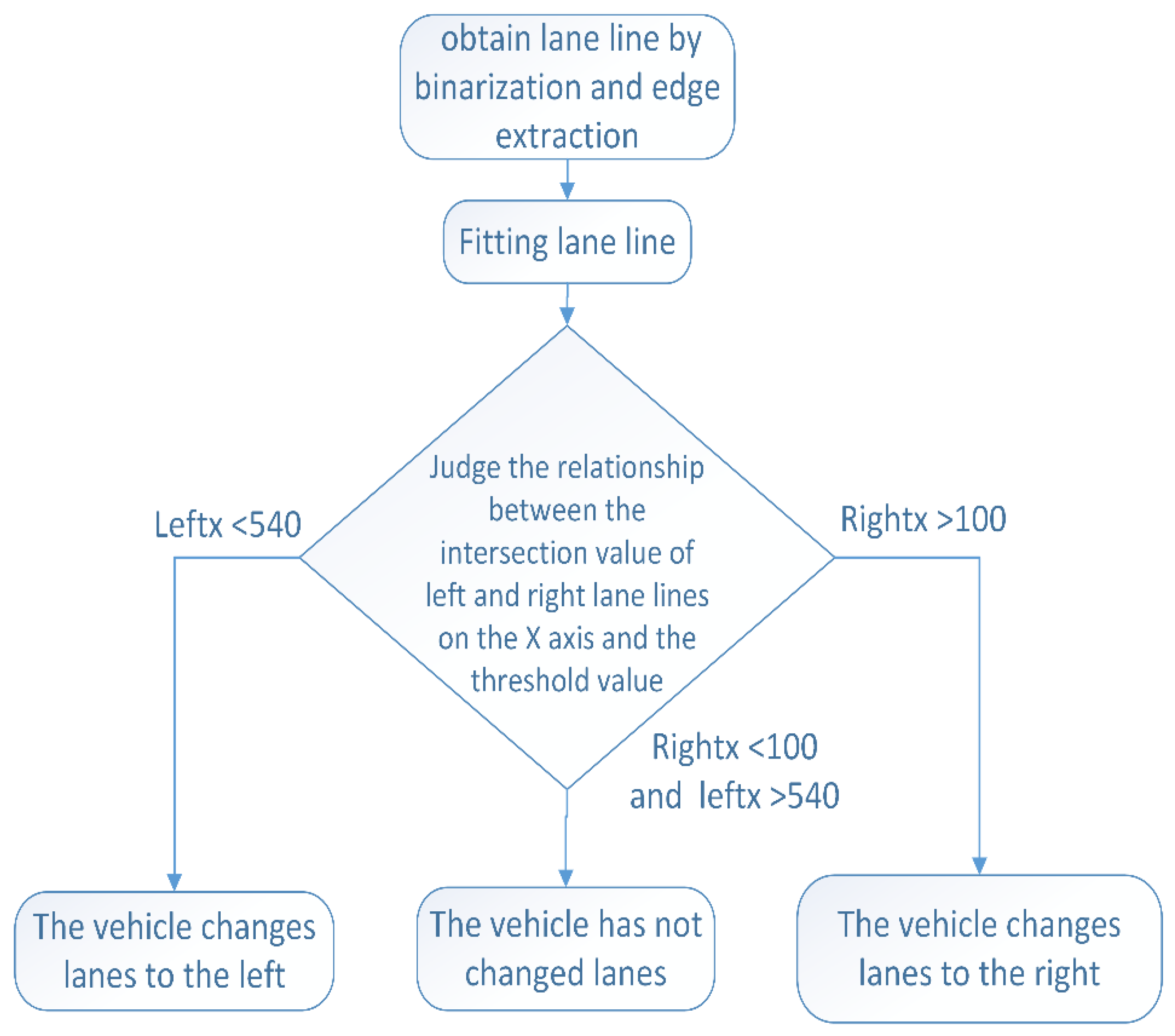

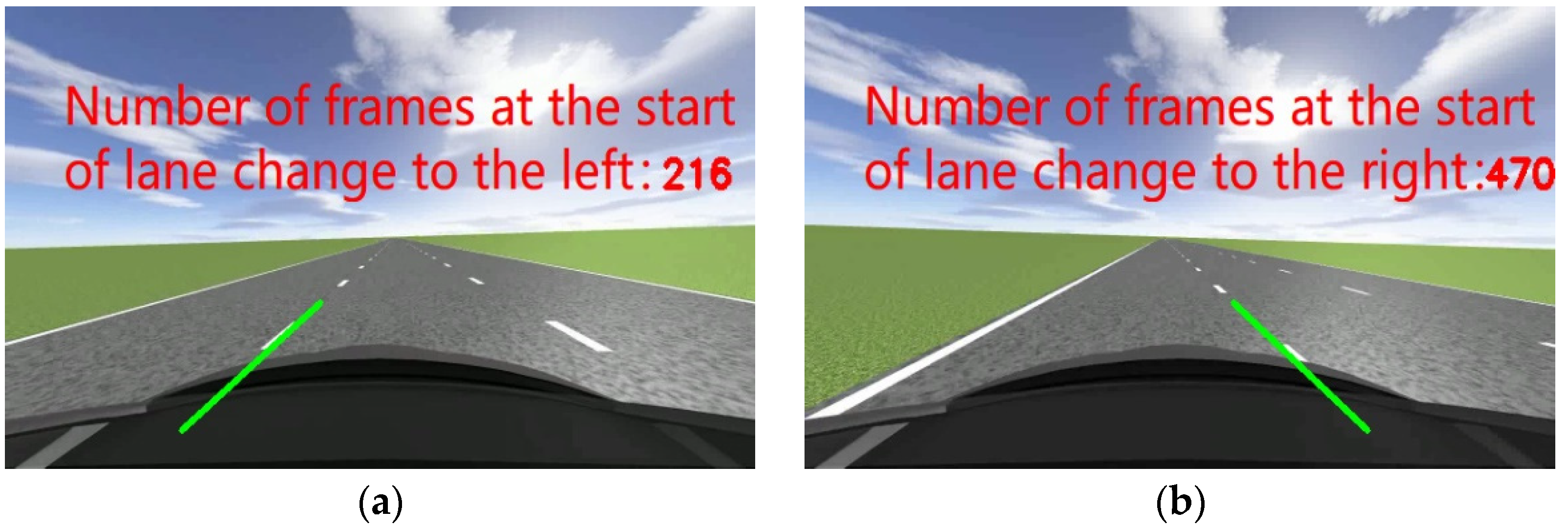
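As a rough illustration of how an inertial heading can be turned into a lateral position, the sketch below dead-reckons the vehicle's offset from the tunnel axis by integrating the lateral velocity component v·sin(ψ). This is a generic kinematic sketch, not necessarily the algorithm used here. It assumes a straight tunnel whose axis defines zero heading, no side slip, and a left-positive lateral axis. `lateral_offset` and `lane_index` are hypothetical helpers.

```python
import math

def lateral_offset(speeds, headings, dt, y0=0.0):
    """Dead-reckon lateral offset (m) from the tunnel axis, left positive.

    speeds: forward speed samples (m/s).
    headings: heading relative to the tunnel axis (rad), e.g. yaw from an
    attitude update minus the tunnel azimuth (assumption).
    Assumes a straight tunnel and no side slip.
    """
    y = y0
    track = []
    for v, psi in zip(speeds, headings):
        y += v * math.sin(psi) * dt  # lateral component of forward velocity
        track.append(y)
    return track

def lane_index(y, lane_width, start_lane=0):
    """Hypothetical helper: lanes counted leftward (+y) from the start lane centre."""
    return start_lane + math.floor(y / lane_width + 0.5)

# Usage: 10 m/s with a constant 0.1 rad heading for 1 s drifts about 1 m left.
track = lateral_offset([10.0] * 100, [0.1] * 100, 0.01)
```

Because any heading bias integrates directly into lateral error, an inertial-only estimate like this drifts over time, which is one motivation for cross-checking lane changes with a camera.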

2.3. Monocular Camera to Assist in Determining Lane Changes

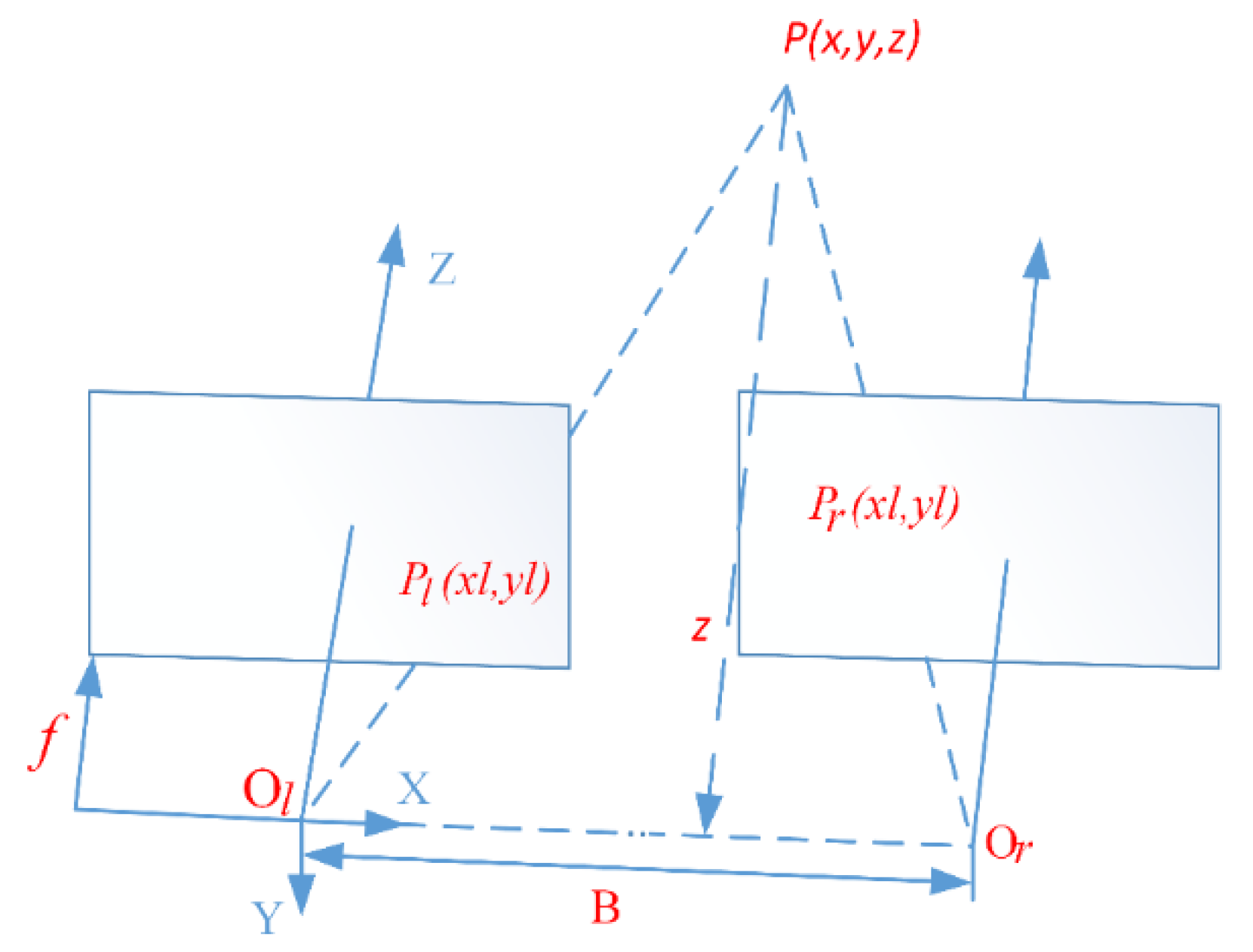

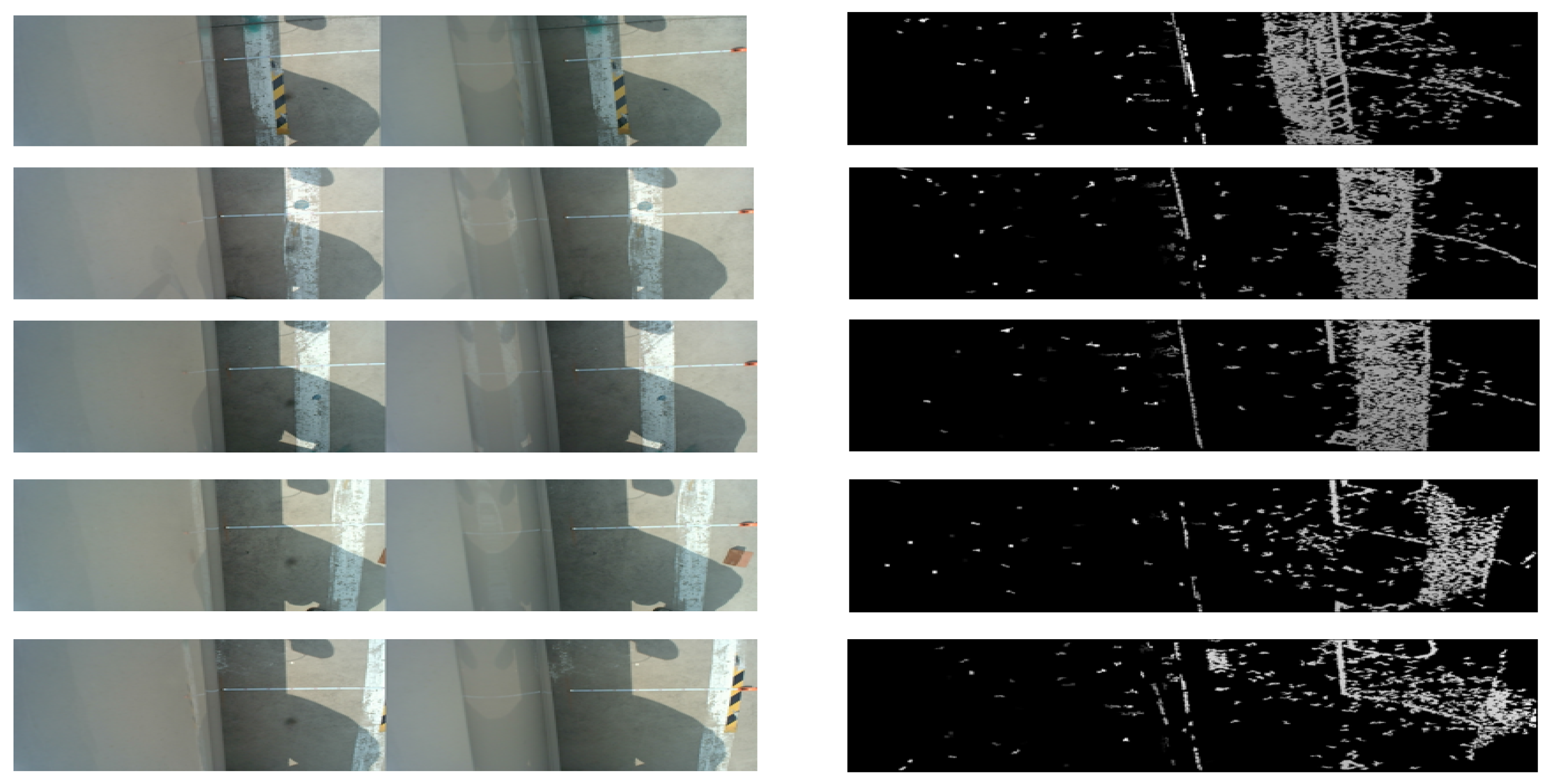

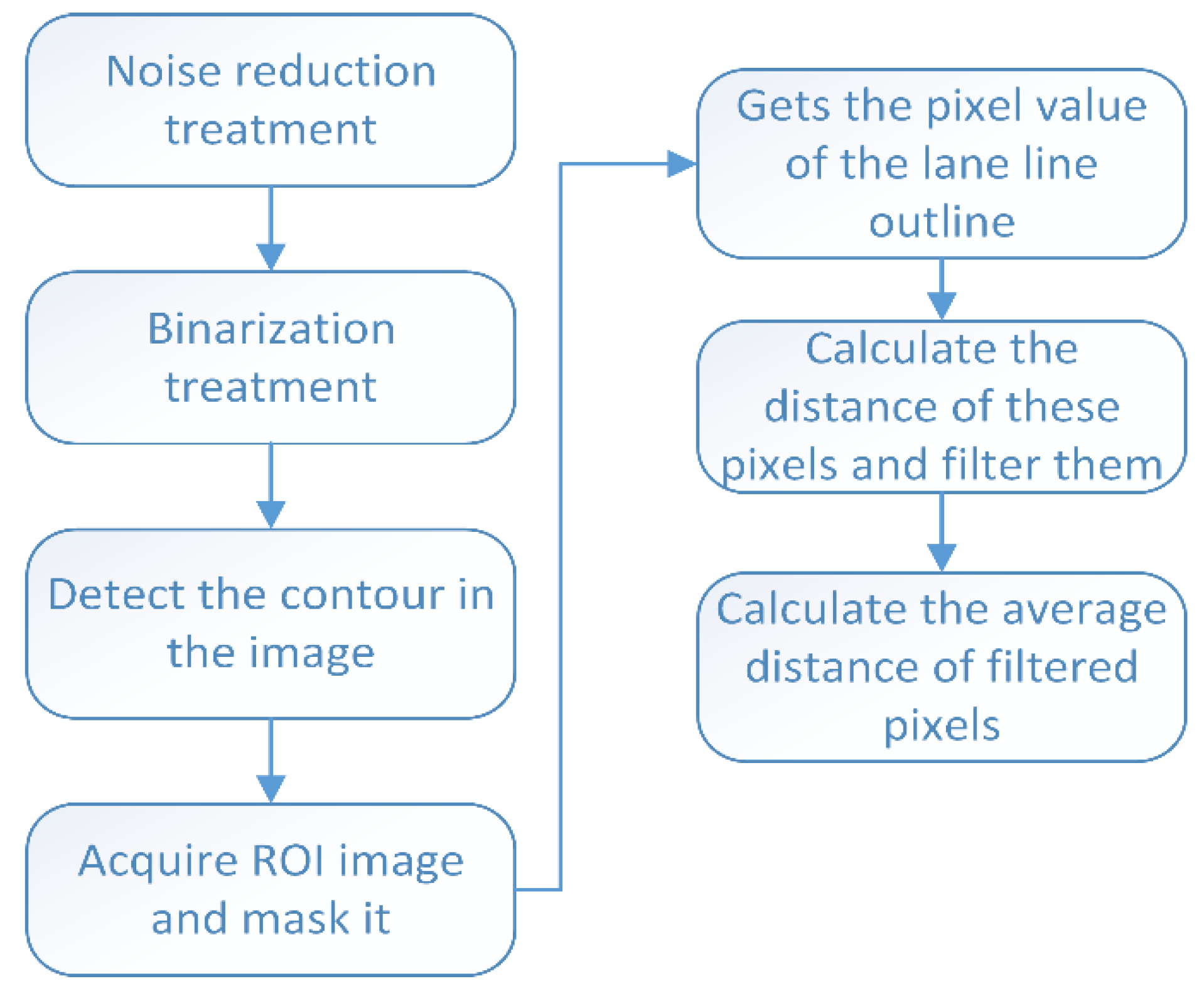

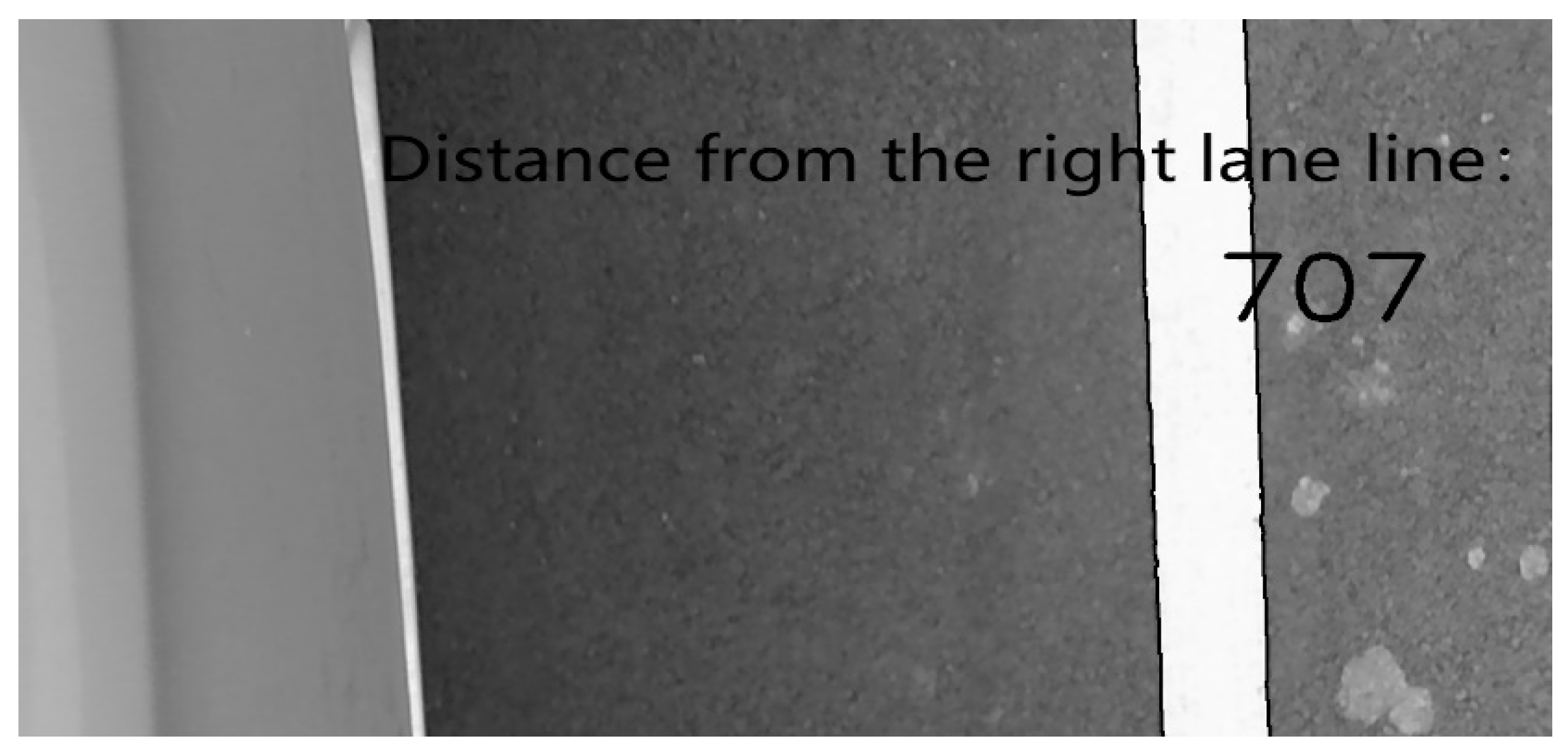

2.4. Binocular Camera Distance Measurement
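For a calibrated and rectified stereo pair (calibration can be done, for example, with Zhang's checkerboard method), the standard triangulation relation gives depth as Z = f·B/d, where f is the focal length in pixels, B the baseline, and d the horizontal disparity. The sketch below applies that textbook relation. It assumes rectified images, equal focal lengths in both cameras, and a shared principal point (cx, cy). The function name `triangulate` and all numeric values are hypothetical.

```python
import math

def triangulate(f_px, baseline_m, cx, cy, xl, yl, xr):
    """3-D point (m) in the left-camera frame from a rectified stereo match.

    (xl, yl): pixel in the left image; xr: matching column in the right image.
    Assumes rectified images with equal focal length f_px (pixels).
    """
    d = xl - xr  # disparity in pixels
    if d <= 0:
        raise ValueError("disparity must be positive for a point in front of the rig")
    z = f_px * baseline_m / d  # depth: Z = f * B / d
    x = (xl - cx) * z / f_px
    y = (yl - cy) * z / f_px
    return (x, y, z)

# Usage with illustrative values: f = 700 px, B = 0.12 m, disparity 42 px -> Z = 2 m.
p = triangulate(700.0, 0.12, 320.0, 240.0, 362.0, 240.0, 320.0)
length = math.dist((0.0, 0.0, 0.0), p)  # straight-line distance from the left camera
```

Depth resolution degrades with distance because a one-pixel disparity error corresponds to a larger change in Z when d is small.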

3. Results

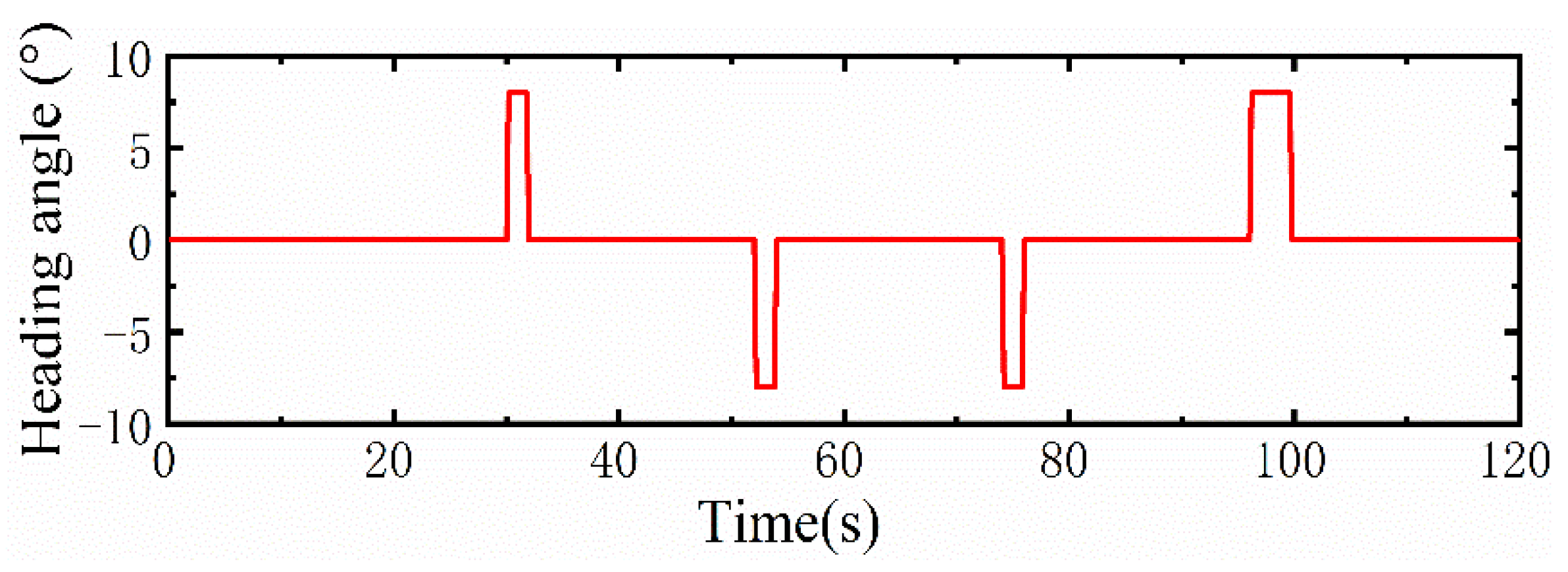

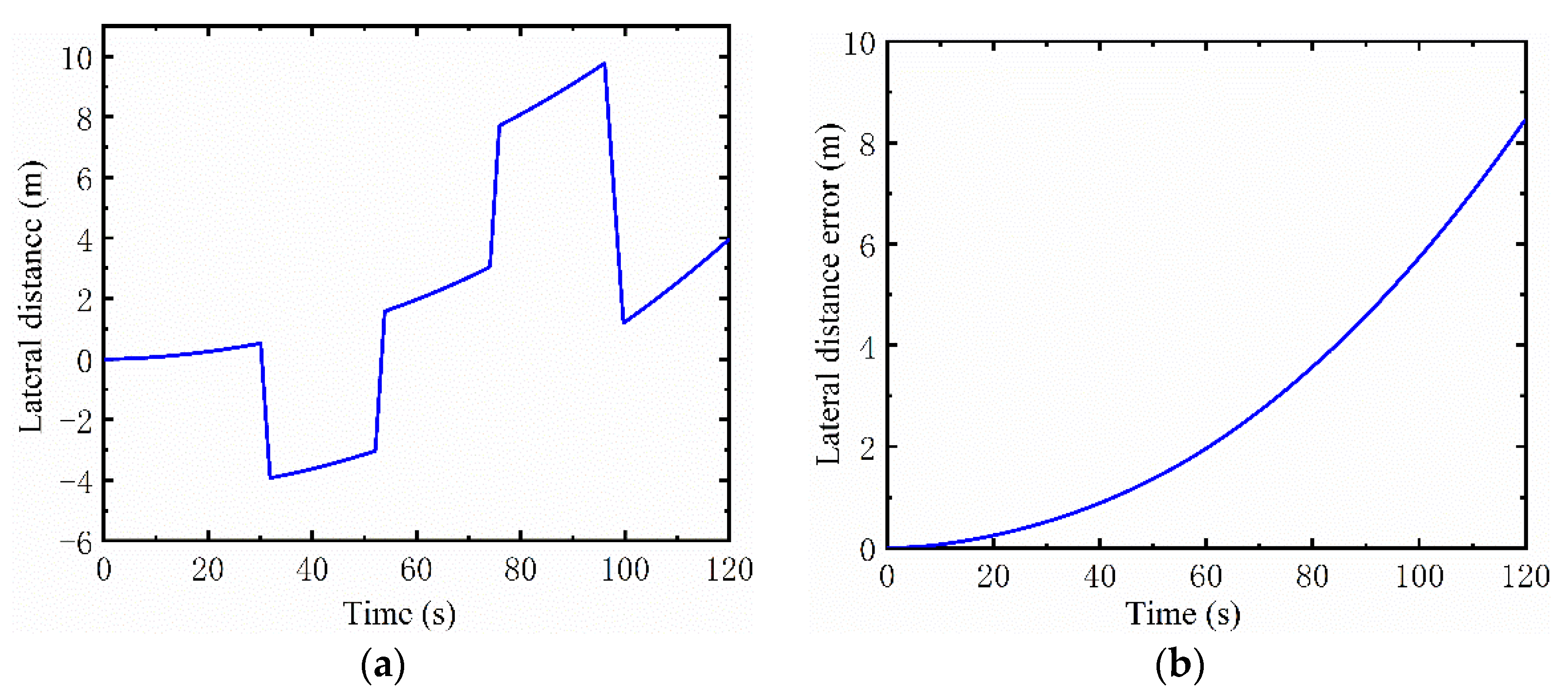

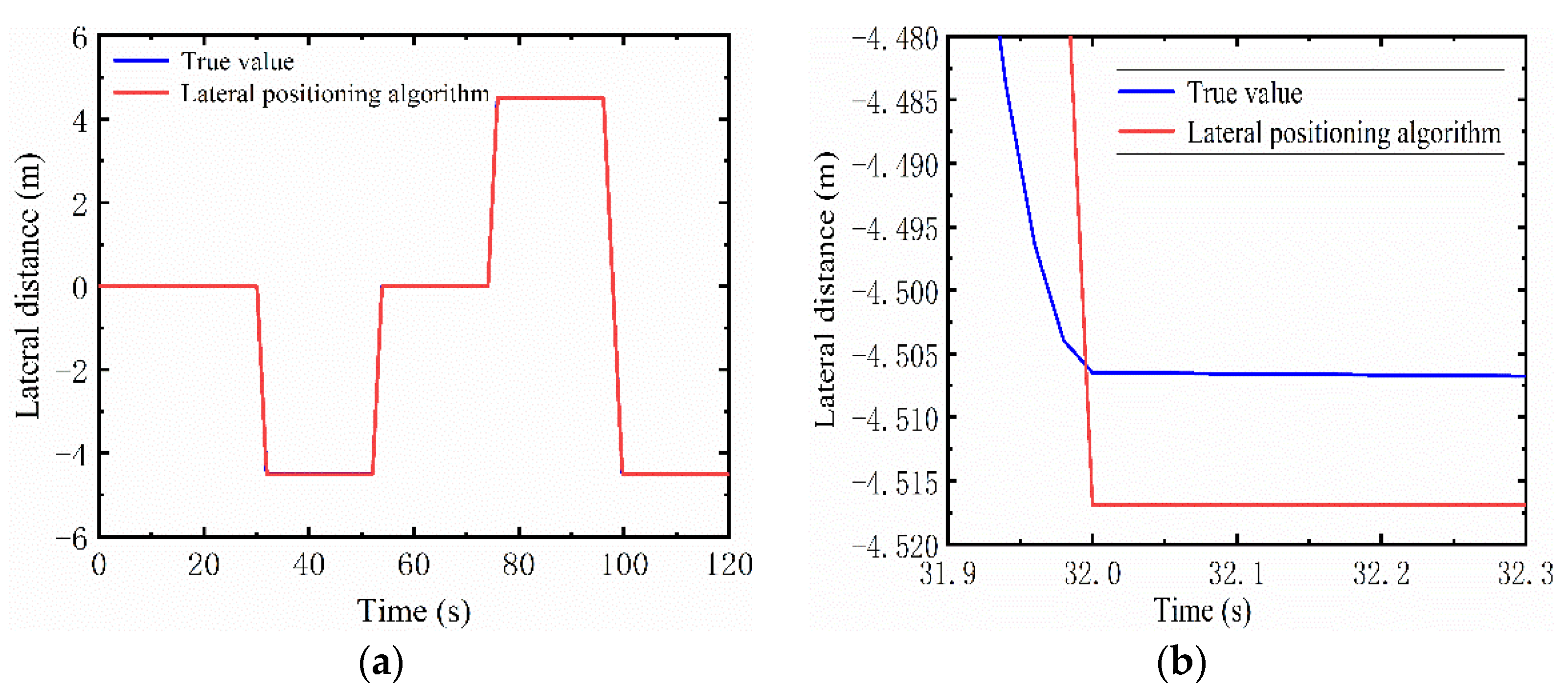

3.1. Simulation Analysis of Lateral Positioning Algorithm

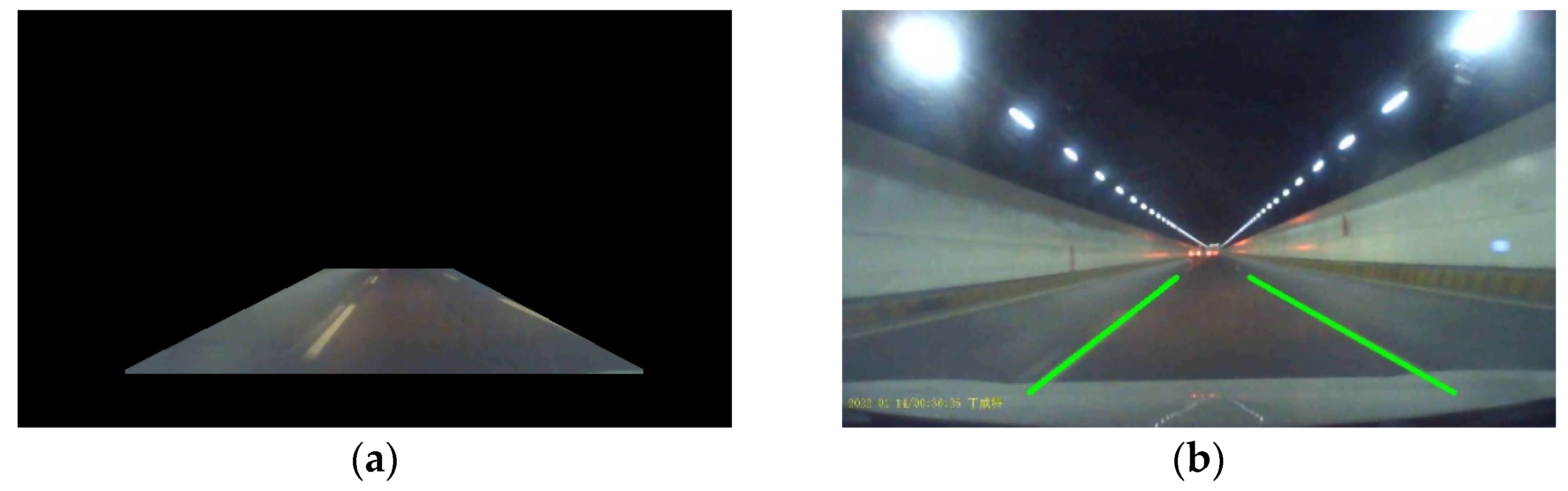

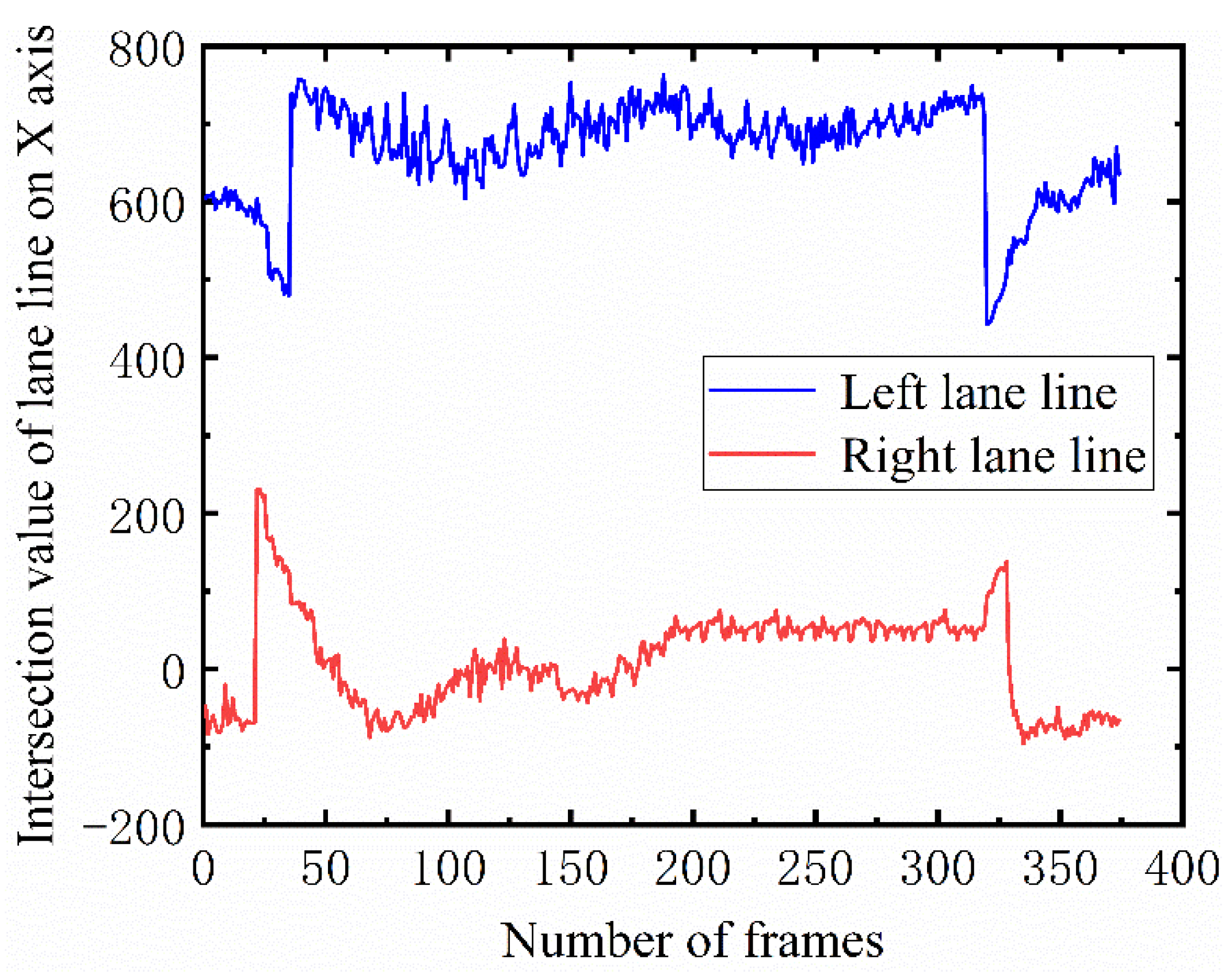

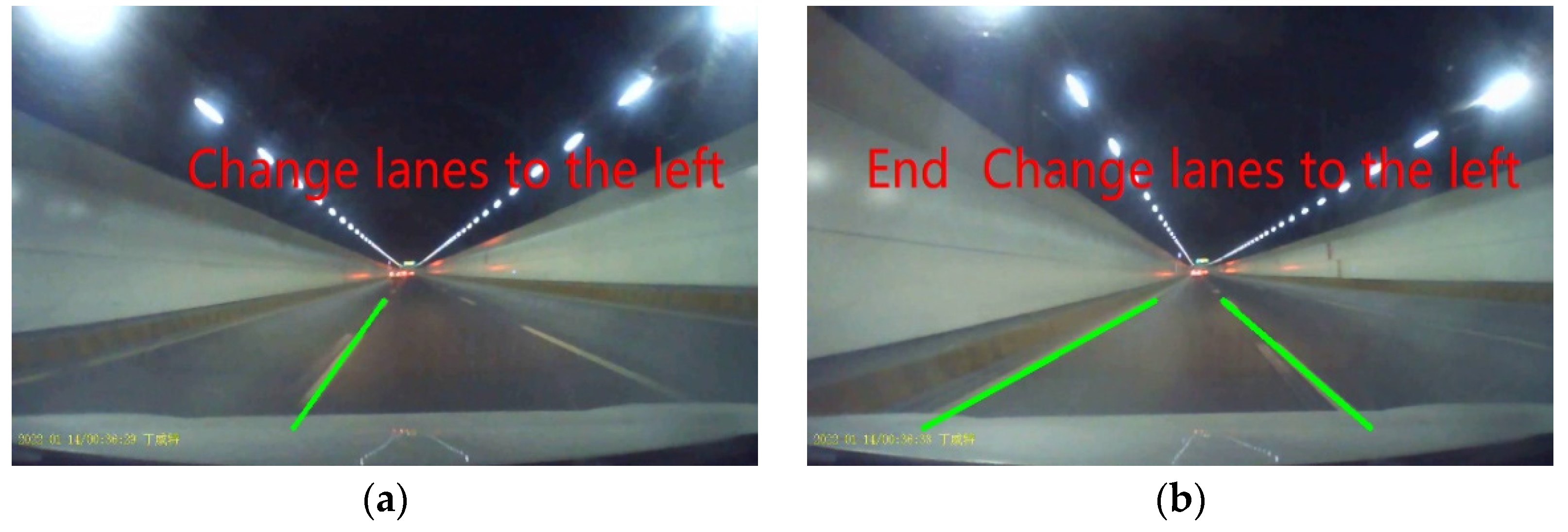

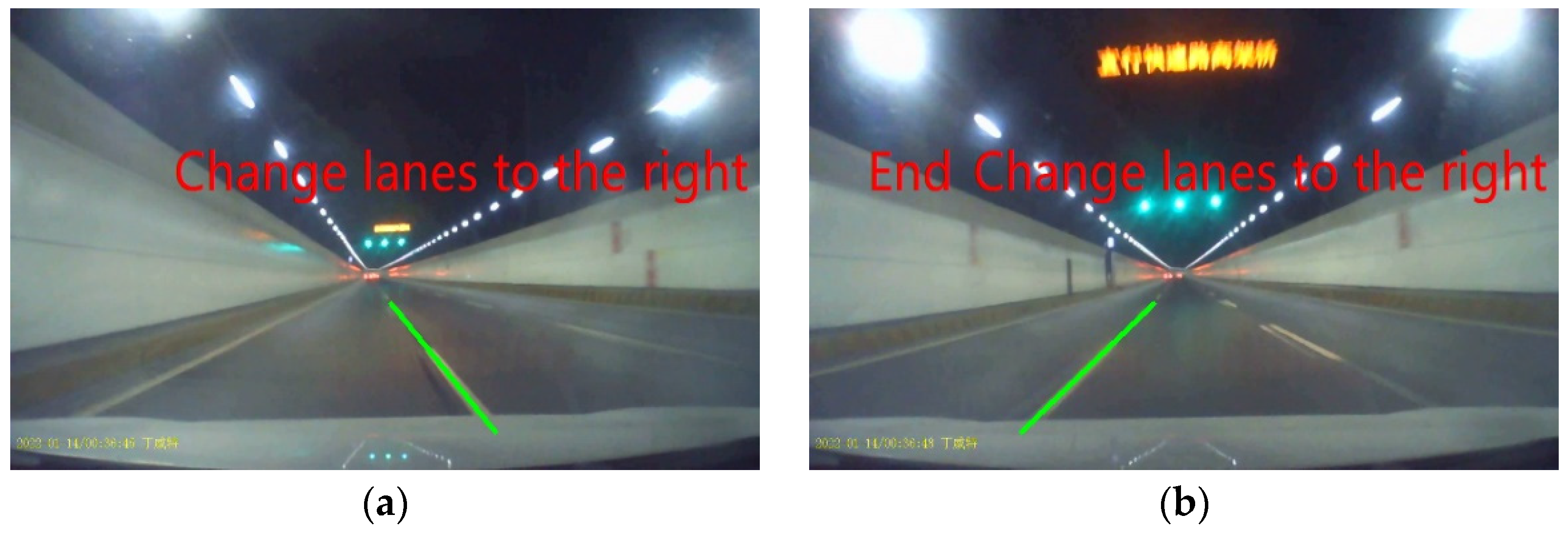

3.2. Simulation of Monocular Camera Lane Change Judgment

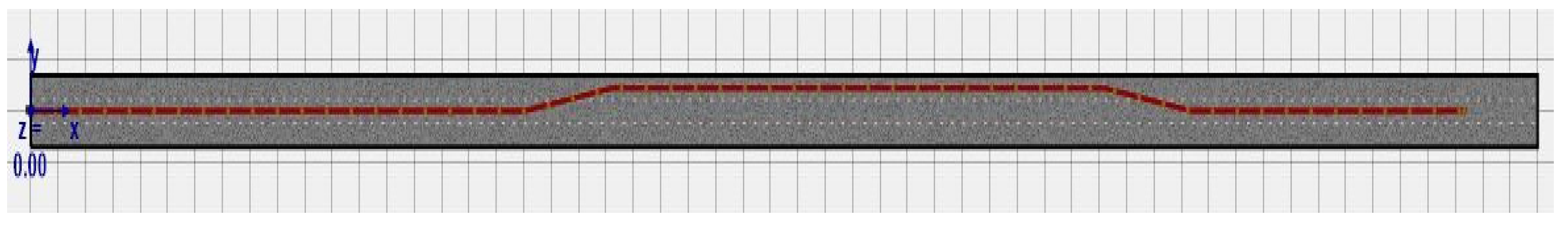

3.3. Simulation and Experiment of Binocular Camera Range Measurement

4. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Vehicle Speed | Front Wheel Steering Angle | Angular Speed of Steering | Angular Threshold per Unit of Time |
|---|---|---|---|
| 0.175 | 0.875 | 0.0175 | |
| 0.122 | 0.611 | 0.0122 | |
| 0.087 | 0.436 | 0.0087 | |

| Lane Change | Real Time (s) | Time of Monocular Camera Judgment (Frame) | Time Difference (s) |
|---|---|---|---|
| First lane change | 10 | 216 | 0.8 |
| Second lane change | 21.8 | 470 | 1.7 |

| Lane Width (m) | Threshold Value of Intersection Point of Left Lane Line on x-axis during Left Lane Change | Threshold Value of Intersection Point of Right Lane Line on x-axis during Right Lane Change |
|---|---|---|
| 3 | 480 | 150 |
| 3.5 | 500 | 130 |
| 4 | 520 | 115 |
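The thresholds in the table above can be applied per frame as a simple lookup and comparison. The sketch below assumes the values are image x-coordinates in pixels, and assumes the comparison direction: during a left lane change the left lane line's intersection moves right until it reaches its threshold, and during a right lane change the right line's intersection moves left until it falls to its threshold. `THRESHOLDS` and `judge_lane_change` are hypothetical names, and lane-line detection itself is not shown.

```python
# Thresholds from the table above, keyed by lane width (m):
# (left-line threshold, right-line threshold), assumed to be pixel x-coordinates.
THRESHOLDS = {
    3.0: (480, 150),
    3.5: (500, 130),
    4.0: (520, 115),
}

def judge_lane_change(lane_width, left_x, right_x):
    """Return 'left', 'right' or None for a single frame.

    left_x / right_x: x-axis intersection of the detected left / right lane
    line, or None if that line was not detected in this frame.
    Comparison directions are an assumption (see lead-in).
    """
    left_thr, right_thr = THRESHOLDS[lane_width]
    if left_x is not None and left_x >= left_thr:
        return "left"
    if right_x is not None and right_x <= right_thr:
        return "right"
    return None

# Usage: on a 3.5 m lane, a left-line intersection at x = 510 signals a left change.
decision = judge_lane_change(3.5, 510, 300)
```

Because the thresholds depend on lane width, the lookup has to be keyed by the lane width of the road being driven.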

| Lane Width (m) | The Real Time of the First Lane Change (s) | The First Lane Change Time Judged by Monocular Camera (Frame) | The Real Time of the Second Lane Change (s) | The Second Lane Change Time Judged by Monocular Camera (Frame) | First Lag Time (s) | Second Lag Time (s) |
|---|---|---|---|---|---|---|
| 3 | 10 | 216 | 22.4 | 455 | 0.8 | 0.35 |
| 3.5 | 10 | 221 | 22.7 | 455 | 1.05 | 0.05 |
| 4 | 10 | 221 | 23.2 | 464 | 1.05 | 0 |

| Serial Number | Actual Distance (mm) | Measuring Distance of Binocular Camera (mm) | Error (mm) |
|---|---|---|---|
| 1 | 159 | 163 | 4 |
| 2 | 339 | 330 | 9 |
| 3 | 342 | 345 | 3 |
| 4 | 522 | 529 | 7 |
| 5 | 670 | 651 | 19 |
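The error column in the table above is the absolute difference between the actual and the measured distance. Summarizing the five measurements gives a mean absolute error of 8.4 mm. The largest relative error is about 2.8%, for the 670 mm case. The short script below reproduces these figures from the tabulated values only.

```python
actual = [159, 339, 342, 522, 670]    # mm, actual distance
measured = [163, 330, 345, 529, 651]  # mm, binocular camera measurement

errors = [abs(m - a) for a, m in zip(actual, measured)]         # absolute error (mm)
relative = [100.0 * e / a for e, a in zip(errors, actual)]      # relative error (%)
mae = sum(errors) / len(errors)                                 # mean absolute error (mm)
worst = max(range(len(relative)), key=relative.__getitem__)     # index of largest relative error

print(errors, mae, round(relative[worst], 2), actual[worst])
```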

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jiang, X.; Liu, Z.; Liu, B.; Liu, J. Multi-Sensor Fusion for Lateral Vehicle Localization in Tunnels. Appl. Sci. 2022, 12, 6634. https://doi.org/10.3390/app12136634
